The Construction and Approximation of ReLU Neural Network Operators

Abstract

In the present paper, we construct a new type of two-hidden-layer feedforward neural network operators with ReLU activation function. We estimate the rate of approximation by the new operators by using the modulus of continuity of the target function. Furthermore, we analyze features such as parameter sharing and local connectivity in this kind of network structure.

1. Introduction

Artificial neural networks are a fundamental method in machine learning and have been applied in many fields, such as pattern recognition, automatic control, signal processing, auxiliary decision-making, and artificial intelligence. In particular, the successful applications of deep (multihidden-layer) neural networks in image recognition, natural language processing, computer vision, etc. in recent years have drawn great attention to neural networks. In fact, the XOR function was implemented by adding one layer to the simplest perceptron, which led to the single-hidden-layer feedforward neural network.
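As a small illustration of the remark about the XOR gate, the following sketch writes XOR on {0, 1}² as a single-hidden-layer network with two ReLU units. The particular weights and thresholds are one standard choice made here for illustration and are not taken from this paper; the names `relu` and `xor_net` are hypothetical.

```python
def relu(t):
    """ReLU activation: max(t, 0)."""
    return max(t, 0.0)


def xor_net(a, b):
    """Single-hidden-layer ReLU network that reproduces XOR on {0, 1}^2.

    Assumed (illustrative) parameters: both hidden units use input
    weights (1, 1); their thresholds are 0 and 1; output weights are (1, -2).
    """
    h1 = relu(a + b)        # hidden unit 1
    h2 = relu(a + b - 1.0)  # hidden unit 2, active only when a = b = 1
    return h1 - 2.0 * h2    # linear output layer


# Evaluate the network on all four binary inputs.
truth_table = {(a, b): xor_net(a, b) for a in (0, 1) for b in (0, 1)}
```

A perceptron without the hidden layer cannot represent this map, since the points (0, 1) and (1, 0) cannot be separated from (0, 0) and (1, 1) by a single line.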

We call w = max{n1, n2} the width of the network, and its depth is naturally 2.
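To make the notions of width and depth concrete, here is a minimal sketch of a generic two-hidden-layer ReLU network with n1 units in the first hidden layer and n2 units in the second. The convention that thresholds are added to the weighted sums, the names `theta1`, `theta2`, and `c`, and the numerical values are assumptions chosen for illustration; they are not the operators constructed in this paper.

```python
def relu(t):
    """ReLU activation: max(t, 0)."""
    return max(t, 0.0)


def affine(W, theta, v):
    """Return W v + theta, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(W, theta)]


def two_hidden_layer_net(x, A1, theta1, A2, theta2, c):
    """Forward pass: input -> n1 ReLU units -> n2 ReLU units -> linear output."""
    h1 = [relu(t) for t in affine(A1, theta1, x)]   # first hidden layer
    h2 = [relu(t) for t in affine(A2, theta2, h1)]  # second hidden layer
    return sum(ci * hi for ci, hi in zip(c, h2))    # output weights c


def width(A1, A2):
    """Width w = max{n1, n2}, where n1 and n2 are the numbers of hidden units."""
    return max(len(A1), len(A2))


# Arbitrary illustrative parameters with n1 = 3 and n2 = 2.
A1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
theta1 = [0.0, 0.0, -1.0]
A2 = [[1.0, 1.0, -2.0], [1.0, -1.0, 0.0]]
theta2 = [0.0, 0.0]
c = [1.0, 0.5]

y = two_hidden_layer_net([0.5, 0.25], A1, theta1, A2, theta2, c)
w = width(A1, A2)
```

With these values the network has width 3 and depth 2, since it has exactly two hidden layers.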

The theory and applications of the single-hidden-layer neural network model were greatly developed in the 1980s and 1990s; there were also some results on neural networks with multiple hidden layers at that time. In [1], Pinkus pointed out that “Nonetheless there seems to be reasonable to conjecture that the two-hidden-layer model may be significantly more promising than the single layer model, at least from a purely approximation-theoretical point of view. This problem certainly warrants further study.” However, whether a neural network has a single hidden layer or multiple hidden layers, three fundamental issues are always involved: density, complexity, and algorithms.

The so-called density, or universal approximation property, of a neural network structure means that for any prescribed accuracy and any target function in a function space with a given metric, there is a specific neural network model (in which all parameters other than the input x are determined) such that the error between the output and the target is less than the prescribed accuracy. In the 1980s and 1990s, research on the density of feedforward neural networks achieved many satisfactory results [2–9]. Since the single-hidden-layer neural network is an extreme case of multilayer neural networks, the current focus of neural network research is still on complexity and algorithms. The complexity of a neural network refers to the number of structural parameters that a model requires to guarantee a prescribed degree of approximation, including the number of layers (or depth), the number of neurons in each layer (sometimes expressed as the width), the number of link weights, and the number of thresholds. In particular, it is of interest to have many equal weights and thresholds, which is called parameter sharing, as this reduces computational complexity. The representation ability of deep neural networks, which has attracted much attention, is in fact a complexity problem, and it needs to be investigated extensively and urgently.

The constructive method is an important approach to the study of complexity, and it applies to both single- and multiple-hidden-layer neural networks. In fact, there are two cases: in the first, the depth, width, and approximation degree are given, while the weights and thresholds are undetermined; in the second, all of these are given, that is, the neural network model is completely determined. To determine the weights and thresholds in the first kind of neural network, we use samples to learn or train them. Theoretically, the second kind of neural network can be applied directly, but its parameters are often fine-tuned with a small number of samples before use. There have been many results on the construction of network operators [10–26], and these results play an important guiding role in the construction and design of neural networks. Therefore, the purpose of this paper is to construct a kind of two-hidden-layer feedforward neural network operators with the ReLU activation function and to give an upper bound estimate of the approximation (or regression) ability of this network for continuous functions of two variables defined on [−1, 1]².

The rest of the paper is organized as follows: in Section 2, we introduce new two-hidden-layer neural network operators with the ReLU activation function and give the rate of approximation by the new operators. In Section 3, we give the proof of the main result. Finally, in Section 4, we give some numerical experiments and discussions.

2. Construction of ReLU Neural Network Operators and Their Approximation Properties

From the above representation, we see that σ(x1, x2) can be interpreted as the output of a two-hidden-layer feedforward neural network. It is obvious that σ possesses the following important properties:

(A1) σ(−x1, x2) = σ(x1, x2), σ(x1, −x2) = σ(x1, x2)

(A2) For any given x1 ∈ ℝ, σ(x1, x2) is nondecreasing in x2 for x2 ≤ 0 and nonincreasing in x2 for x2 ≥ 0. Similarly, for any given x2 ∈ ℝ, σ(x1, x2) is nondecreasing in x1 for x1 ≤ 0 and nonincreasing in x1 for x1 ≥ 0

(A3) 0 ≤ σ(x1, x2) ≤ 3/4

(A4)

We prove that the rate of approximation by the new operators can be estimated by using the modulus of continuity of the target function. In fact, we have

Theorem 1. Let f(x1, x2) be a continuous function defined on [−1, 1]². Then,

where and are the modulus of continuity of f defined by

Remark 2. For 0 < α < 1, we define the following neural network operators:

Using an argument similar to the proof of Theorem 1, we obtain

Remark 3. Let β (0 < β ≤ 1) be a fixed number. If there is a constant L > 0 such that

Remark 4. Now, we describe the structure of the new operators by using the form (3).

The input matrix of the first hidden layer is

and its size is . The bias vector of the first hidden layer is

and the dimension is . The input matrix of the second hidden layer is

and its size is . Θ2 is a constant 1 vector with dimension . The output weight vector is

We can see that each of the weight matrices A1 and A2 contains only two different numbers. That is, the neural network operators have a strong weight-sharing feature. There are some results on the construction of neural networks of this kind [14, 27–29]. Moreover, the form of A2 shows that this neural network is locally connected. Finally, the simplicity of the bias vector Θ2 also greatly reduces the complexity of the neural network.
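The two structural features discussed in this remark can be checked mechanically for any given weight matrix. The sketch below counts the distinct entries of a matrix (a measure of weight sharing) and the number of nonzero connections per unit (a measure of local connectivity). The banded matrix used here is a hypothetical stand-in chosen only for illustration; it is not the matrix A1 or A2 of this paper, and the helper names are assumptions.

```python
def distinct_values(W):
    """Sorted list of the distinct entries of W (few values = strong sharing)."""
    return sorted({w for row in W for w in row})


def connections_per_unit(W):
    """Number of nonzero incoming weights of each unit (one row per unit)."""
    return [sum(1 for w in row if w != 0) for row in W]


def banded_matrix(rows, cols, value=1.0, half_band=1):
    """Hypothetical locally connected matrix: entry (i, j) is `value` when
    |i - j| <= half_band and 0 otherwise."""
    return [
        [value if abs(i - j) <= half_band else 0.0 for j in range(cols)]
        for i in range(rows)
    ]


A = banded_matrix(4, 6)
shared = distinct_values(A)      # only two different numbers appear
fan_in = connections_per_unit(A)  # each unit sees at most three inputs
```

In this toy matrix every unit is connected to at most three of the six inputs, and only two different numbers occur, which mirrors the kind of structure described above on a much smaller scale.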

3. Proof of the Main Result

To prove Theorem 1, we need the following auxiliary lemma.

Lemma 5. For the function σ(x1, x2), we have

Proof. We only prove (1) and (2); (3) and (4) can be proved similarly.

- (1)

When ki − 1 < ki < ki + 1 ≤ [nxi] − 1(i = 1, 2), we have

Considering the monotonicity of σ(x1, x2), we have

Combining (19) and (20) leads to

Similarly, we have

By (21), (22), and summation from to [nxi] − 1(i = 1, 2), we obtain (1) of Lemma 5.

- (2)

When k2 + 1 > k2 > k2 − 1 ≥ ⌈nx2⌉ + 1, we have

From (18) and (23), in a way similar to the proof of (1), we get

By summation for and , we obtain (2) of Lemma 5.

Proof of Theorem 1. Let

Then,

We further estimate by estimating I1 and I2, respectively.

Set

Since in ∑2 + ∑3 + ∑4, at least one of inequalities and holds; so, either

For ∑1, by the facts that , for (x1, x2) ∈ [−1, 1]2, we obtain that

Hence,

where we have used the inequality 0 ≤ σ ≤ 3/4, and the fact that the number of the terms in ∑1 is no more than . From (27)-(33), it follows that

Set

Then,

Firstly, we have

Noting that , we get Δ1 = 0 by arguments similar to those used for estimating ∑2 + ∑3 + ∑4 in (27). Therefore,

Consequently,

Similarly, we have

Thus, we already have

Now, let us estimate I21. By

Similarly, by

By (1) of Lemma 5, (43), and (45), we have

By (2)-(4) of Lemma 5, and the arguments similar to (43) and (45), we obtain that

By (46)-(49) and the identity , we have

It follows from (26), (34)-(41), and (50) that

which completes the proof of Theorem 1.

4. Numerical Experiments and Some Discussions

In this section, we give some numerical experiments to illustrate the theoretical results. We take as the target function.

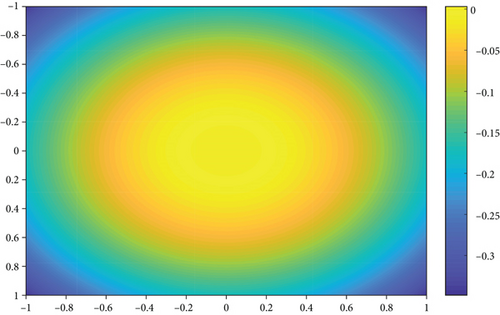

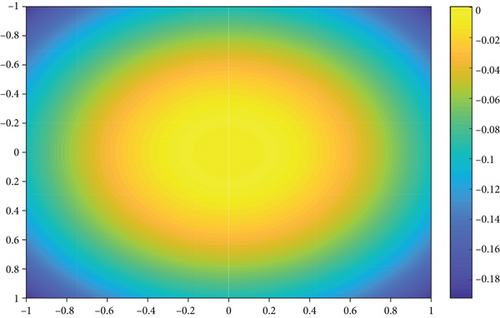

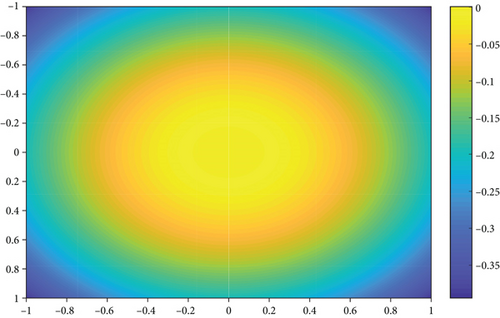

Figures 1–3 show the results of e100(x1, x2), e1000(x1, x2), and e10000(x1, x2), respectively. When n equals 10⁶, the amount of calculation is large. Therefore, we choose six specific points and list the corresponding values of en(x1, x2) in Table 1.

| (x1, x2) | (0, −1) | (−1, 1) | (0.5, 0.5) | (0, 0) | (0.5, −0.6) | (0.25, 0.8) |
|---|---|---|---|---|---|---|
| en(x1, x2) | 0.0020 | 0.0040 | -9.982e-04 | 3.992e-07 | 0.0012 | -0.0014 |

From the experimental results, we see that as the parameter n of the neural network operators increases, the approximation improves; we only need to notice , and after a simple calculation, we can verify the validity of the obtained result.

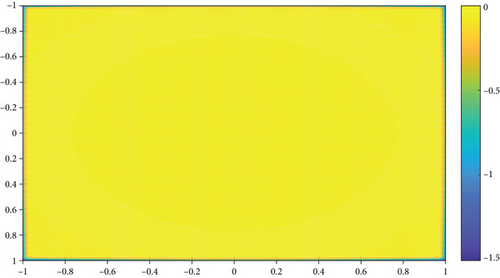

Figure 4 shows the with n = 1000. We can see that near the boundary of [−1, 1]² the approximation of f is not satisfactory. In particular, this phenomenon can be seen in Table 2 below. So, we modified f((k1/n), (k2/n)) to construct the operators (6). Then, we obtained the error estimate for the approximation by the operators (6) and carried out the numerical experiments.

| (x1, x2) | (0, −1) | (−1, 1) | (0.5, 0.5) | (0, 0) | (0.5, −0.6) | (0.25, 0.8) |
|---|---|---|---|---|---|---|
| | -0.5000 | -1.4862 | 2.749e-05 | 3.999e-05 | 2.47e-05 | 2.24e-05 |
| en(x1, x2) | -0.0197 | -0.0394 | -0.0098 | 3.921e-05 | -0.0120 | -0.0138 |

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This research was supported by the National Natural Science Foundation of China under Grant No. 12171434 and Zhejiang Provincial Natural Science Foundation of China under Grant No. LZ19A010002.

Open Research

Data Availability

Data are available on request from the authors.