Wood Species Recognition Based on Visible and Near-Infrared Spectral Analysis Using Fuzzy Reasoning and Decision-Level Fusion

Abstract

A novel wood species spectral classification scheme based on a fuzzy rule classifier is proposed. The visible/near-infrared (VIS/NIR) spectral reflectance curves of wood sample cross sections were captured using a USB2000-VIS-NIR spectrometer and a FLAME-NIR spectrometer. First, the wood spectral curves, in the 376.64–779.84 nm and 950–1650 nm bands, were processed using the principal component analysis (PCA) dimension reduction algorithm. The wood spectral data were divided into a training set and a testing set. The training set was used to generate the membership functions and the initial fuzzy rule set, and the fuzzy rules were then adjusted to supplement and refine the classification rules into a complete fuzzy rule set. Second, separate fuzzy classifiers were applied to the VIS and NIR bands, and an improved decision-level fusion scheme based on the Dempster–Shafer (D-S) evidential theory was proposed to further improve the accuracy of wood species recognition. On the testing set, the overall recognition accuracy (ORA) of our scheme reached 94.76% for 50 wood species, which is higher than that of conventional classification algorithms and recent state-of-the-art wood species classification schemes. Owing to its low computational time and space complexity, the method rapidly achieves good recognition results, especially with small datasets.

1. Introduction

Wood species classification has been investigated for several years, as different wood species have diverse physical and chemical properties. Many schemes have been proposed for automatic classification using sensors and computers, with spectra-based [1–3], chemometric-based [4], and image-based [5–9] schemes being the most commonly investigated. Spectral analysis technology is also widely used to predict wood properties [10–12], such as wood density [13], moisture content [14, 15], and others [16, 17], and the NIR band is often used in these applications. A wood spectral analysis scheme usually processes one-dimensional (1D) spectral reflectance curves, which have low computational complexity and thus allow high processing speeds. A wood image processing scheme usually relies on texture features for species classification, as wood texture is more stable than wood color [18–20].

Fuzzy logic can handle output classes that are not crisply separated and works well with small training datasets. In the past, fuzzy-rule-based schemes were successfully used in control systems and pattern recognition applications [21, 22]. In the wood industry, fuzzy rule classification has been successfully applied to wood color classification and wood defect recognition [23–25]. For example, to account for the uncertainty of wood color and the need for processing speed, Bombardier et al. proposed a fuzzy rule classifier for wood color recognition [24, 25].

Fuzzy rule classification is rarely used in wood species recognition. Nevertheless, Ibrahim et al. proposed a fuzzy preclassifier that sorts 48 tropical wood species into four broad categories, followed by a support vector machine (SVM) classifier within each category to determine the species of a sample [19]. One advantage of their approach is that when a new wood species is added to the system, only the SVM classifier of one broad category needs retraining instead of the entire system. Similarly, Yusof et al. proposed a fuzzy-logic-based preclassifier that sorts tropical wood species into four broad categories, followed by species recognition with dedicated classifiers, to increase recognition accuracy and reduce processing time [7].

In this study, we propose a fuzzy rule classification scheme for recognizing 50 wood species from 1D spectral reflectance data. The spectral range covers both the visible (VIS) band (376.64–779.84 nm) and the near-infrared (NIR) band (950–1650 nm). In the wood spectral curves, the VIS band may vary because the color of a wood sample can change with environmental conditions, whereas the NIR band remains relatively stable. Consequently, the two bands were processed using different fuzzy classifiers, and the two classification results were fused using an improved decision-level fusion method based on the D-S evidential theory.

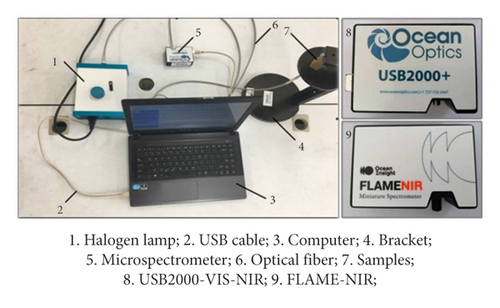

Our scheme has the following two advantages. First, it rapidly achieves good recognition accuracy with a small spectral dataset: there are only 50 samples for each wood species, and each sample consists of a VIS spectral curve and an NIR spectral curve. Second, the experimental equipment is inexpensive: the Ocean Optics USB2000-VIS-NIR spectrometer used costs approximately $3,000, and the Ocean Optics FLAME-NIR spectrometer costs $8,000. Comparisons with several classical and state-of-the-art classifiers for wood species recognition also verified the merits of our scheme.

2. Materials and Methods

2.1. Wood Samples and Spectral Data Acquisition

| Class label | Latin name |
|---|---|
| 1 | Pterocarpus soyauxii |
| 2 | Ulmus glabra |
| 3 | Calophyllum brasiliense |
| 4 | Intsia bijuga |
| 5 | Tectona grandis |
| 6 | Pouteria speciosa |
| 7 | Quercus mongolica |
| 8 | Magnolia fordiana |
| 9 | Guibourtia ehie |
| 10 | Terminalia catappa |
| 11 | Cinnamomum camphora |
| 12 | Guibourtia demeusei |
| 13 | Swintonia floribunda |
| 14 | Fraxinus mandshurica |
| 15 | Pometia pinnata |
| 16 | Dipterocarpus alatus |
| 17 | Vernicia fordii |
| 18 | Sophora japonica |
| 19 | Robinia pseudoacacia |
| 20 | Quercus acutissima |
| 21 | Populus alba |
| 22 | Fraxinus chinensis |
| 23 | Pinus sylvestris |
| 24 | Populus tomentosa |
| 25 | Betula alnoides |
| 26 | Betula platyphylla |
| 27 | Platanus orientalis |
| 28 | Picea asperata |
| 29 | Larix gmelinii |
| 30 | Salix matsudana |
| 31 | Amygdalus davidiana |
| 32 | Juglans mandshurica |
| 33 | Pinus koraiensis |
| 34 | Populus cathayana |
| 35 | Toona ciliata |
| 36 | Prunus avium |
| 37 | Pinus massoniana |
| 38 | Aucoumea klaineana |
| 39 | Shorea contorta |
| 40 | Entandrophragma candollei |
| 41 | Millettia laurentii |
| 42 | Swietenia mahagoni |
| 43 | Rhodamnia dumetorum |
| 44 | Cyclobalanopsis glauca |
| 45 | Shorea laevis |
| 46 | Chamaecyparis nootkatensis |
| 47 | Juglans nigra |
| 48 | Tilia mandshurica |
| 49 | Pseudotsuga menziesii |
| 50 | Pinus radiata |

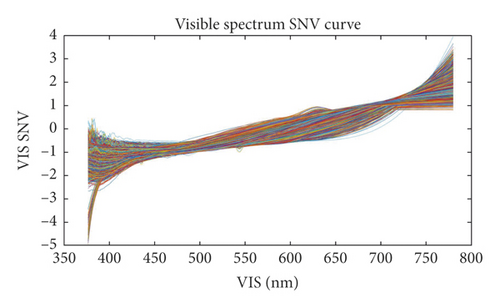

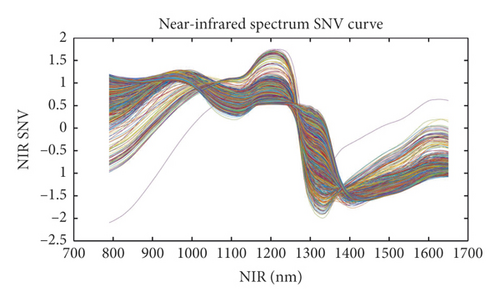

The spectral range of the USB2000-VIS-NIR spectrometer is 340–1027 nm. Because both ends of this range are noisy, only the 376.64–779.84 nm range was used. The spectral data in the VIS band may vary because the color of a wood sample can change with environmental conditions; to minimize this effect, we kept the environment stable during both spectral acquisition and sample storage. The NIR band of 950–1650 nm is relatively stable and can, to some extent, characterize different wood properties. Therefore, the two bands were processed using different fuzzy classifiers (i.e., with different membership functions and fuzzy rules), and their two classification results were fused using an improved decision-level fusion method based on the D-S evidential theory.

2.2. Spectral Dimension Reduction

For each spectral curve, the VIS/NIR band contains thousands of spectral data points, so the spectral dimension is very high. Each spectral curve therefore contains redundant information, which reduces both the accuracy and the speed of wood species classification. We used the principal component analysis (PCA) and T-distributed stochastic neighbor embedding (T-SNE) algorithms to reduce the spectral dimension and compared the results. PCA is a linear transformation from multivariate statistics that maximizes the variance of the mapped low-dimensional vectors. The procedure is as follows. First, the initial dataset matrix is defined as $A_{m \times n} = [a_1, \ldots, a_n]$, where m and n are the dimension and the number of feature vectors, respectively, and the mean vector is computed as $u = \frac{1}{n}\sum_{i=1}^{n} a_i$. Second, we compute $x_i = a_i - u$ to form $X_{m \times n} = [x_1, \ldots, x_n]$. Third, the k largest eigenvalues of $XX^{T}$ are selected, and their k corresponding eigenvectors $\varepsilon_1, \ldots, \varepsilon_k$ form the matrix $D = [\varepsilon_1, \ldots, \varepsilon_k]$. Finally, the dimension-reduced dataset matrix is computed as $P_{k \times n} = D^{T} X$ (k < m).
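The four PCA steps above can be sketched in NumPy as follows. This is a minimal illustration, assuming the spectra are stored column-wise as in the text (column a_i is one spectrum with m bands); the function name `pca_reduce` and the random test matrix are hypothetical and are not the authors' implementation.

```python
import numpy as np

def pca_reduce(A: np.ndarray, k: int) -> np.ndarray:
    """Reduce an m x n matrix of column feature vectors to k x n using PCA."""
    m, n = A.shape
    if not 0 < k < m:
        raise ValueError("k must satisfy 0 < k < m")
    # Step 1: mean vector u = (1/n) * sum_i a_i (one value per spectral band).
    u = A.mean(axis=1, keepdims=True)
    # Step 2: centre every spectrum, x_i = a_i - u.
    X = A - u
    # Step 3: eigen-decompose the symmetric matrix X X^T and keep the
    # eigenvectors of the k largest eigenvalues (eigh returns them ascending).
    eigvals, eigvecs = np.linalg.eigh(X @ X.T)
    order = np.argsort(eigvals)[::-1][:k]
    D = eigvecs[:, order]  # m x k matrix [eps_1, ..., eps_k]
    # Step 4: project, P = D^T X (k x n).
    return D.T @ X

rng = np.random.default_rng(0)
A = rng.normal(size=(200, 50))  # hypothetical: 200 bands, 50 spectra
P = pca_reduce(A, k=4)
print(P.shape)  # (4, 50)
```

For spectra with thousands of bands, the left singular vectors returned by `np.linalg.svd(X, full_matrices=False)` give the same directions without forming the m × m matrix XX^T explicitly.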

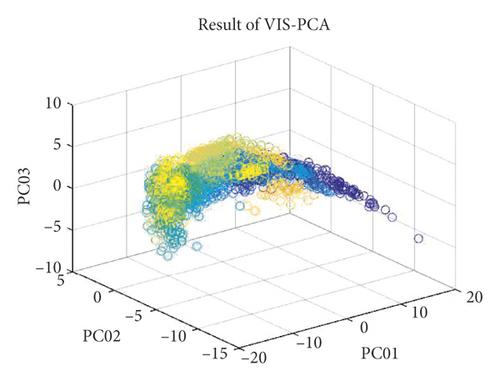

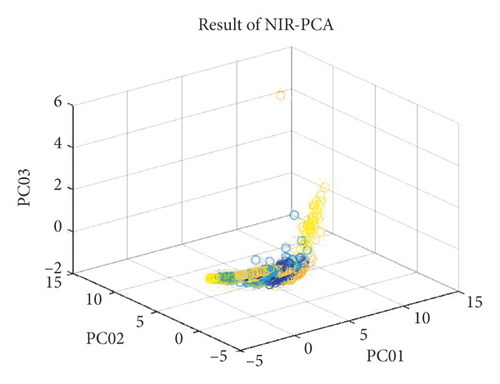

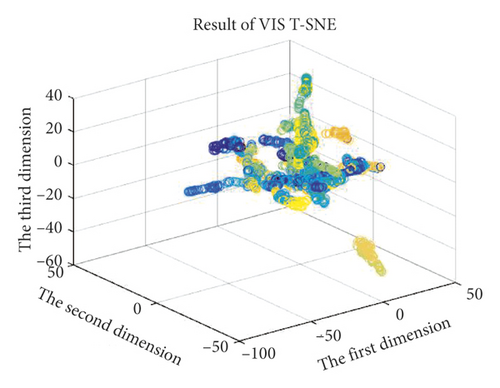

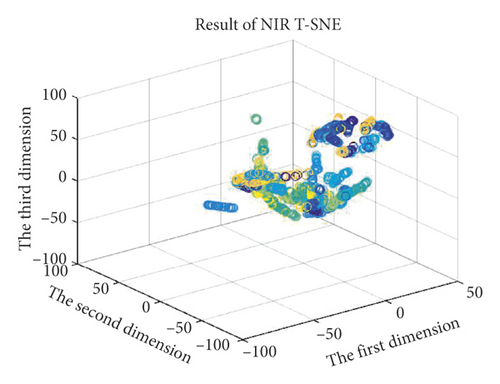

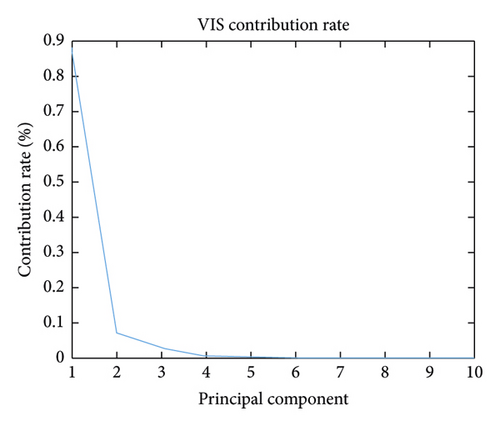

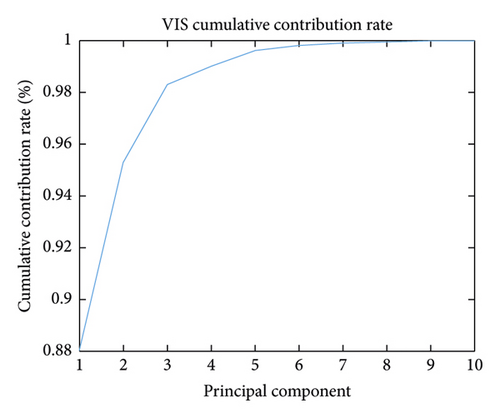

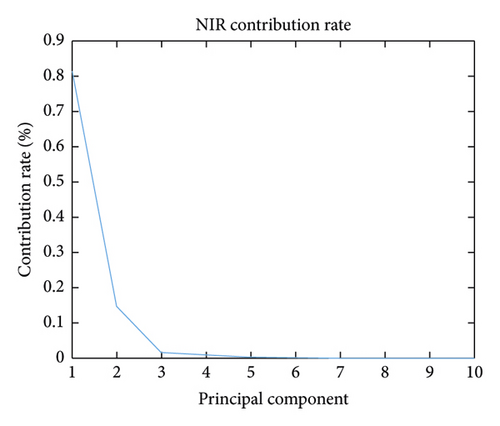

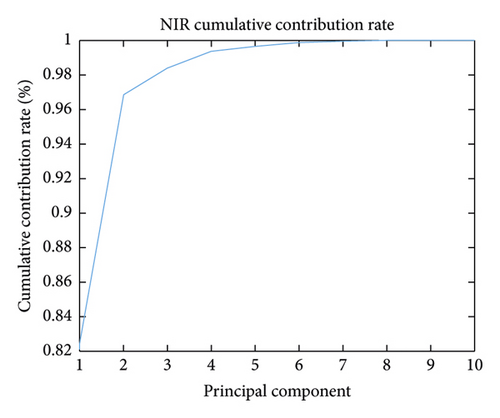

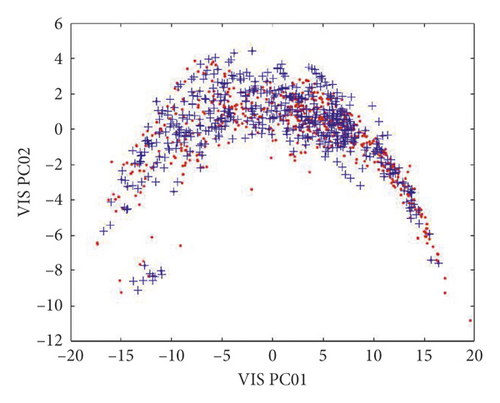

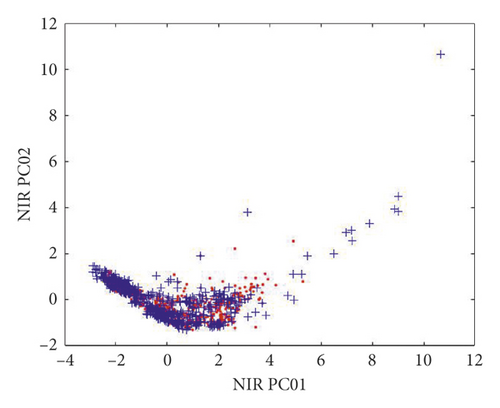

In addition, the T-SNE algorithm was used for spectral dimension reduction. T-SNE is a nonlinear dimension reduction algorithm that improves on the original stochastic neighbor embedding (SNE) algorithm [27]. It seeks a low-dimensional mapping that preserves, as closely as possible, the probability distribution of pairwise neighbor similarities in the original space. The “T” in T-SNE refers to the Student t-distribution used in the low-dimensional space, which had one degree of freedom in this study. The three-dimensional (3D) spectral vectors of the VIS and NIR bands obtained with the PCA and T-SNE algorithms are plotted in Figure 5, with 50 different colors for the 50 wood species. The contribution rate (CR) and cumulative contribution rate (CCR) of the first ten principal components for the VIS and NIR bands are shown in Figure 6 and Table 2. In Figure 5, the best case is panel (b), in which the wood samples are the most dispersed.

| | PC01 | PC02 | PC03 | PC04 | PC05 | PC06 | PC07 | PC08 | PC09 | PC10 |
|---|---|---|---|---|---|---|---|---|---|---|
| VIS CR | 0.8804 | 0.0726 | 0.0301 | 0.0070 | 0.0061 | 0.0018 | 0.00074982 | 0.00055908 | 0.00047882 | 0.00027888 |
| VIS CCR | 0.8804 | 0.9529 | 0.9830 | 0.9900 | 0.9961 | 0.9979 | 0.9986 | 0.9992 | 0.9997 | 0.9999 |
| NIR CR | 0.8215 | 0.1468 | 0.0155 | 0.0097 | 0.0029 | 0.0021 | 0.00082330 | 0.00023093 | 0.00017311 | 0.00010637 |
| NIR CCR | 0.8215 | 0.9683 | 0.9838 | 0.9935 | 0.9965 | 0.9987 | 0.9994 | 0.9997 | 0.9998 | 0.9999 |
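To make the role of the one-degree-of-freedom t-distribution concrete, the sketch below computes only the low-dimensional similarity matrix Q of t-SNE, where q_ij is proportional to (1 + ||y_i − y_j||²)⁻¹. It is not a full t-SNE optimizer (the high-dimensional affinities and the gradient descent are omitted), and the function name `student_t_affinities` is hypothetical.

```python
def student_t_affinities(Y):
    """Return t-SNE's low-dimensional joint probabilities q_ij for the points Y.

    Uses a Student t kernel with one degree of freedom (a Cauchy kernel):
    q_ij = (1 + ||y_i - y_j||^2)^-1 / sum_{k != l} (1 + ||y_k - y_l||^2)^-1.
    """
    n = len(Y)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:  # q_ii is defined as 0
                d2 = sum((a - b) ** 2 for a, b in zip(Y[i], Y[j]))
                W[i][j] = 1.0 / (1.0 + d2)
    total = sum(map(sum, W))
    return [[w / total for w in row] for row in W]

# Three hypothetical 3D embedded points at distances 1, 4, and 5 from each other.
Q = student_t_affinities([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)])
```

Because this kernel decays only polynomially, its heavy tails allow moderately dissimilar points to be placed far apart in the embedding, which alleviates the crowding problem of the original SNE.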

2.3. Fuzzy Rule Classifier

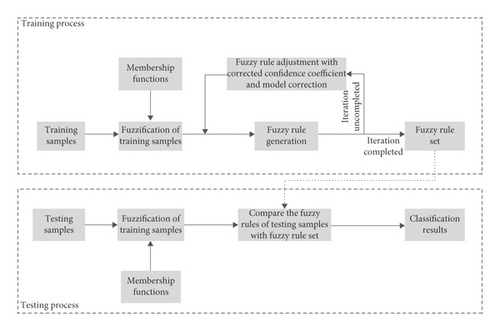

- Step 1. Membership functions are set to fuzzify the training samples' features and generate their fuzzy rules.
- Step 2. The fuzzy rules are adjusted using a corrected confidence coefficient.
- Step 3. The fuzzy rule set is generated.
- Step 4. The testing samples' features are fuzzified, and each resulting rule is compared with the fuzzy rule set for wood species classification.

The training process consisted of three steps. First, membership functions were used to fuzzify the training dataset; triangular, trapezoid, or Gaussian functions can serve as membership functions. Second, fuzzy rules of the form “IF … AND …, THEN …” were generated from the training samples and their known wood species, with the antecedents combined by conjunction or disjunction operations. Third, the fuzzy rules were adjusted using a confidence coefficient to form a complete fuzzy rule set.

In the testing process, the testing dataset was fed into the trained fuzzy rule classifier and fuzzified with the same membership functions. This yields a fuzzy rule for each testing sample, which was compared with the complete fuzzy rule set to obtain the predicted wood species. The predicted species was then compared with the true label of the testing sample to evaluate the performance of the fuzzy rule classifier. If the fuzzy rule of a testing sample was not found in the complete fuzzy rule set, the sample was classified as an unknown species.
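The fuzzify-then-look-up flow described above can be sketched in plain Python as below. It assumes that each PC is fuzzified to the single fuzzy set with the highest membership degree and that the rule set is a dictionary from antecedent tuples to (species, CC) pairs; the names `fuzzify`, `classify`, `tri`, the three-set partition, and the toy rule set are hypothetical, and the paper's classifier may combine membership degrees differently.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

Membership = Callable[[float], float]
Rule = Tuple[int, ...]  # index of the winning fuzzy set for each PC

def fuzzify(features: Sequence[float], term_sets: Sequence[Sequence[Membership]]) -> Rule:
    """Map each feature to the 1-based index (A1, A2, ...) of its best-matching fuzzy set."""
    antecedent = []
    for x, terms in zip(features, term_sets):
        degrees = [mu(x) for mu in terms]
        antecedent.append(max(range(len(terms)), key=degrees.__getitem__) + 1)
    return tuple(antecedent)

def classify(features, term_sets, rule_set: Dict[Rule, Tuple[str, float]]) -> Optional[str]:
    """Return the species of the matching rule, or None for an unknown species."""
    match = rule_set.get(fuzzify(features, term_sets))
    return match[0] if match else None

def tri(a, b, c):
    """Triangular membership function with feet a, c and peak b."""
    return lambda x: max(0.0, min((x - a) / (b - a), (c - x) / (c - b)))

# Hypothetical 2-PC example: three triangular sets per PC on [0, 1].
terms = [tri(-0.5, 0.0, 0.5), tri(0.0, 0.5, 1.0), tri(0.5, 1.0, 1.5)]
term_sets = [terms, terms]
rules = {(1, 3): ("Pinus radiata", 0.99), (2, 2): ("Tilia mandshurica", 0.98)}
print(classify([0.1, 0.9], term_sets, rules))  # Pinus radiata
print(classify([0.9, 0.1], term_sets, rules))  # None, i.e., unknown species
```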

2.3.1. Fuzzification of the Feature Vector

Here, the feature vector is the spectral vector after dimension reduction. Fuzzification transforms the spectral feature vectors into linguistic variables. A linguistic variable is defined by a triple (V, X, TV), where V is a variable (e.g., PC1) defined on a reference set X, X is the universe of discourse (the range of variation of V), and TV is the vocabulary chosen to describe the values of V symbolically. The set TV = {A1, A2, …} contains normalized fuzzy subsets of X, and each fuzzy subset Ai is defined by its membership function $\mu_{A_i}: X \to [0, 1]$, which gives the membership degree $\mu_{A_i}(x)$ of each $x \in X$.

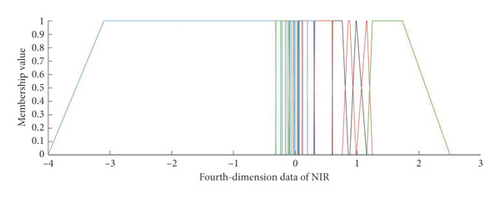

For example, when the wood spectral feature vectors are obtained using the PCA algorithm, Table 3 lists the minimum, mean, median, and maximum values of the fourth principal component (PC4) in the NIR band for the first ten wood species. Based on these values, we designed membership functions that divide NIR PC4 (i.e., the fourth dimension) into twenty-six intervals TV = {A1, A2, …, A26}, as shown in Figure 8.

| Wood species | Minimum value | Mean value | Median value | Maximum value |
|---|---|---|---|---|
| Pterocarpus soyauxii | −0.292834701 | −0.16041152 | −0.169974712 | 0.007724295 |
| Ulmus glabra | 0.025315788 | 0.195750566 | 0.210818609 | 0.363952969 |
| Calophyllum brasiliense | −0.192778352 | −0.117200877 | −0.117229875 | −0.034786326 |
| Intsia bijuga | −0.389917083 | −0.139350711 | −0.137772612 | 0.114471138 |
| Tectona grandis | −0.057557826 | 0.148158636 | 0.153314903 | 0.280022623 |
| Pouteria speciosa | −0.095030985 | 0.053811088 | 0.050028547 | 0.191835329 |
| Quercus mongolica | −0.062964338 | 0.056146512 | 0.046516552 | 0.338022639 |
| Magnolia fordiana | −0.154656742 | −0.037434328 | −0.048184982 | 0.126992175 |
| Guibourtia ehie | −0.338868726 | −0.178740936 | −0.201291866 | 0.085960311 |
| Terminalia catappa | −0.187178353 | 0.060716356 | 0.053703539 | 0.267102296 |
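One simple way to build such a partition is sketched below: k trapezoidal fuzzy sets whose cores tile [min, max] evenly and whose neighbouring shoulders overlap so that membership degrees sum to 1. This uniform layout is an assumption for illustration; the paper places its 26 intervals using the per-species minimum, mean, median, and maximum values of Table 3, which this sketch does not reproduce. The function names `trapezoid`, `uniform_trapezoid_partition`, and `fuzzify_value` are hypothetical.

```python
import math

def trapezoid(a, b, c, d):
    """Trapezoidal membership function: 0 outside (a, d), 1 on [b, c], linear in between."""
    def mu(x):
        if x <= a or x >= d:
            return 0.0
        if b <= x <= c:
            return 1.0
        return (x - a) / (b - a) if x < b else (d - x) / (d - c)
    return mu

def uniform_trapezoid_partition(lo, hi, k):
    """Split [lo, hi] into k overlapping trapezoidal sets A1..Ak whose degrees sum to 1."""
    w = (hi - lo) / k
    q = w / 4  # half-width of each sloped shoulder
    sets = []
    for i in range(k):
        left, right = lo + i * w, lo + (i + 1) * w
        # The outermost sets are open-ended so that values beyond the range still match.
        a, b = (-math.inf, -math.inf) if i == 0 else (left - q, left + q)
        c, d = (math.inf, math.inf) if i == k - 1 else (right - q, right + q)
        sets.append(trapezoid(a, b, c, d))
    return sets

def fuzzify_value(x, sets):
    """Return (1-based index of the best-matching set, its membership degree)."""
    degrees = [mu(x) for mu in sets]
    best = max(range(len(sets)), key=degrees.__getitem__)
    return best + 1, degrees[best]

# Range of NIR PC4 over the ten species in Table 3; Card(T4) = 26 for the NIR band.
lo, hi = -0.389917083, 0.363952969
nir_pc4_sets = uniform_trapezoid_partition(lo, hi, 26)
print(fuzzify_value(0.0, nir_pc4_sets))  # (14, 1.0)
```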

When the PCA algorithm is used for wood spectral dimension reduction, the number N of retained PCs should be chosen so that the CCR exceeds 99%. As Table 2 shows, the CCR of the first four PCs reaches 99.00% and 99.35% for the VIS and NIR bands, respectively, and increases only marginally for N > 4; therefore, N ≥ 4 should be used.
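The selection rule above can be checked directly against Table 2. The sketch accumulates the listed contribution rates (each CR is a principal component's share of the total eigenvalue sum) and returns the smallest N whose CCR reaches 99%; the function name `smallest_n` is hypothetical.

```python
from itertools import accumulate

# Contribution rates (CR) of PC01-PC10 copied from Table 2.
VIS_CR = [0.8804, 0.0726, 0.0301, 0.0070, 0.0061, 0.0018,
          0.00074982, 0.00055908, 0.00047882, 0.00027888]
NIR_CR = [0.8215, 0.1468, 0.0155, 0.0097, 0.0029, 0.0021,
          0.00082330, 0.00023093, 0.00017311, 0.00010637]

def smallest_n(cr, threshold=0.99):
    """Return the smallest number of PCs whose cumulative contribution rate reaches threshold."""
    for n, ccr in enumerate(accumulate(cr), start=1):
        if ccr >= threshold:
            return n
    raise ValueError("threshold not reached")

print(smallest_n(VIS_CR), smallest_n(NIR_CR))  # 4 4
```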

After several tests with the PCA algorithm, N and Card(TV) were set as follows: for the VIS band, Card(T1) = 37, Card(T2) = 35, Card(T3) = 25, Card(T4) = 21, Card(T5) = 15, and Card(T6) = 2; for the NIR band, Card(T1) = 47, Card(T2) = 40, Card(T3) = 28, Card(T4) = 26, Card(T5) = 20, and Card(T6) = 2. Fuzzy classifiers based on triangular, trapezoid, and triangular-trapezoid membership functions were compared over 50 runs in terms of the ORA and the mean training time requirement (TR) of the fuzzy rule set (with 2000 wood samples in the training set), as listed in Tables 4 and 5 for the VIS and NIR bands, respectively. The maximum and practical numbers of fuzzy rules (Nrules) for each N and Card(TV) are listed in Table 6. The training time of the fuzzy rule set was practically the same for all three membership functions; instead, it depended only on the dimension N of the spectral feature vectors. In fact, both the training time and Nrules increased rapidly with N. Moreover, the ORA of the triangular-function-based fuzzy classifier increased with N, whereas that of the trapezoid- or triangular-trapezoid-function-based classifiers remained practically unchanged for N ≥ 4 (VIS) and N ≥ 6 (NIR). Finally, the optimal trapezoid function parameters of our fuzzy classifier were N = 4, Card(T1) = 37, Card(T2) = 35, Card(T3) = 25, and Card(T4) = 21 for the VIS band and N = 4, Card(T1) = 47, Card(T2) = 40, Card(T3) = 28, and Card(T4) = 26 for the NIR band.

| Dimension N | Triangular ORA (%) | Triangular TR (s) | Trapezoid ORA (%) | Trapezoid TR (s) | Triangular-trapezoid ORA (%) | Triangular-trapezoid TR (s) |
|---|---|---|---|---|---|---|
| 3 | 75.65 | 1051.27 | 76.85 | 1154.12 | 77.80 | 1219.26 |
| 4 | 89.05 | 24393.24 | 89.60 | 25128.75 | 90.20 | 24983.20 |
| 5 | 89.60 | 312764.52 | 90.05 | 299023.20 | 90.25 | 327279.92 |
| 6 | 90.20 | 556719.47 | 90.40 | 469466.53 | 90.65 | 483718.36 |

| Dimension N | Triangular ORA (%) | Triangular TR (s) | Trapezoid ORA (%) | Trapezoid TR (s) | Triangular-trapezoid ORA (%) | Triangular-trapezoid TR (s) |
|---|---|---|---|---|---|---|
| 3 | 77.65 | 1822.23 | 82.80 | 1733.46 | 81.15 | 1807.12 |
| 4 | 90.25 | 45125.02 | 92.90 | 44783.24 | 93.25 | 48353.50 |
| 5 | 91.55 | 657271.15 | 93.10 | 631285.46 | 92.85 | 718375.61 |
| 6 | 92.05 | 1038488.18 | 93.05 | 940615.34 | 93.35 | 1223481.25 |

| Dimension N | 3 | 4 | 5 |
|---|---|---|---|
| (VIS) maximum fuzzy rule number | 45325 | 951825 | 14277375 |
| (VIS) practical fuzzy rule number | 987 | 1362 | 1475 |
| (NIR) maximum fuzzy rule number | 52640 | 1368640 | 27372800 |
| (NIR) practical fuzzy rule number | 1135 | 1674 | 1689 |
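The maximum rule numbers grow so quickly because every combination of fuzzy sets over the first N PCs is a possible antecedent, so the bound is the product of the cardinalities. The sketch below reproduces the NIR rows of Table 6 from the NIR cardinalities listed in Section 2.3.1; the function name `max_rules` is hypothetical. The practical counts stay far below this bound, and presumably cannot exceed the 2000 training samples if each sample yields one rule.

```python
from math import prod

# Cardinalities Card(T1)..Card(T5) of the NIR term sets, as listed in Section 2.3.1.
NIR_CARD = [47, 40, 28, 26, 20]

def max_rules(cards, n):
    """Upper bound on distinct rules: one rule per combination of fuzzy sets over the first n PCs."""
    return prod(cards[:n])

print([max_rules(NIR_CARD, n) for n in (3, 4, 5)])  # [52640, 1368640, 27372800]
```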

2.3.2. Fuzzy Rule Generation

2.3.3. Fuzzy Rule Adjustment

When the fuzzy rules are generated, several rules usually share the same judgment condition (i.e., the same “IF … AND …” part) but have different classification results (i.e., different “THEN …” parts). Fuzzy rule adjustment is therefore required, which assigns a confidence coefficient (CC) to each fuzzy rule.

If the same rule is not produced by other samples, its CC remains unchanged. Some of the final rules and their CCs are listed in Table 7. In practice, when several rules have the same judgment condition but different classification results, the final classification is determined by the rule with the largest CC.

| A | B | C | D | CC | Wood species |
|---|---|---|---|---|---|
| 2 | 24 | 8 | 6 | 0.9939 | Pinus radiata |
| 5 | 20 | 9 | 15 | 0.9876 | Tilia mandshurica |
| 28 | 29 | 20 | 15 | 0.9072 | Juglans nigra |
| … | … | … | … | … | … |
| 17 | 2 | 12 | 6 | 0.7993 | Entandrophragma candollei |
| 3 | 23 | 11 | 6 | 0.6161 | Chamaecyparis nootkatensis |
| … | … | … | … | … | … |
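The conflict-resolution step described above (keep, for each judgment condition, the consequent with the largest CC) can be sketched as follows. The CC formula itself is not reproduced: each candidate's CC is taken as given. Apart from the two Table 7 rules, the competing Larix gmelinii candidate and its CC of 0.41 are hypothetical, as is the function name `resolve_conflicts`.

```python
def resolve_conflicts(candidates):
    """Keep, for each antecedent, the consequent with the largest confidence coefficient.

    candidates: iterable of (antecedent, species, cc) triples, where cc has
    already been computed by whatever CC formula the classifier uses.
    """
    best = {}
    for antecedent, species, cc in candidates:
        if antecedent not in best or cc > best[antecedent][1]:
            best[antecedent] = (species, cc)
    return best

candidates = [
    ((2, 24, 8, 6), "Pinus radiata", 0.9939),               # Table 7
    ((2, 24, 8, 6), "Larix gmelinii", 0.41),                # hypothetical conflicting rule
    ((3, 23, 11, 6), "Chamaecyparis nootkatensis", 0.6161),  # Table 7
]
rules = resolve_conflicts(candidates)
print(rules[(2, 24, 8, 6)])  # ('Pinus radiata', 0.9939)
```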

2.4. Decision-Level Fusion

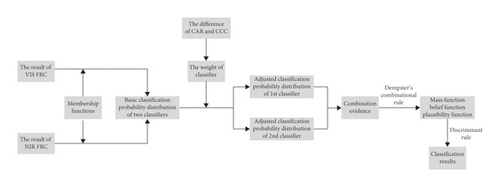

The D-S evidential theory [28] is an effective decision-level fusion scheme, but it does not fully consider each classifier's reliability when the classifier's result is converted into a classification probability distribution before fusion. To solve this problem, we propose an improved D-S decision-level fusion method that determines each classifier's weight from the differences in classification accuracy ratio and classification correlation coefficient between the classifiers.

- Step 1. The basic classification probability distributions of the two classifiers are generated from their classification results.
- Step 2. Classifier weights are determined using the classification accuracy ratio (CAR) and the classification correlation coefficient (CCC).
- Step 3. Adjusted classification probability distributions are generated using the classifier weights.
- Step 4. The final classification result is calculated using D-S decision-level fusion.
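For two classifiers whose outputs assign mass only to single species (as in Table 8), the classical Dempster rule of combination used in Step 4 reduces to a normalised element-wise product: m(A) = m1(A)m2(A)/(1 − K), with conflict K = 1 − Σ_A m1(A)m2(A). The sketch below implements only this classical rule with the Table 8 outputs; the weighting of Steps 2 and 3 is not included, and the function name `dempster_combine` is hypothetical.

```python
def dempster_combine(m1, m2):
    """Combine two mass functions defined on singleton classes with Dempster's rule."""
    agreement = {c: m1[c] * m2[c] for c in m1.keys() & m2.keys()}
    norm = sum(agreement.values())  # equals 1 - K, where K is the conflict mass
    if norm == 0:
        raise ValueError("total conflict: the sources share no class")
    return {c: v / norm for c, v in agreement.items() if v > 0}

# Non-zero entries (class label: %) of the VIS and NIR FRC outputs in Table 8.
vis = {6: 27.22, 7: 0.74, 12: 21.45, 14: 1.68, 17: 14.37, 19: 0.29, 24: 13.15,
       28: 4.70, 41: 3.65, 42: 4.32, 46: 7.92, 49: 0.51}
nir = {6: 2.75, 12: 93.17, 23: 0.07, 24: 0.17, 29: 0.62, 37: 2.12, 44: 0.35, 48: 0.75}
m_vis = {c: p / 100 for c, p in vis.items()}
m_nir = {c: p / 100 for c, p in nir.items()}
fused = dempster_combine(m_vis, m_nir)
best = max(fused, key=fused.get)
print(best, round(fused[best], 4))  # 12 0.9629
```

Only classes 6, 12, and 24 receive non-zero mass from both classifiers, so they are the only survivors of the unweighted fusion, and class 12 dominates because of the NIR classifier's strong vote for it.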

2.4.1. Conversion from the Classification Result to Classification Probability Distribution

Here, nlast is the number of elements in Tarout. Note that the classification probabilities of wood species that do not appear in Tarout are set to 0. For example, Table 8 shows the classification probability distributions of a wood sample in the VIS and NIR bands.

| Class label | VIS FRC (%) | NIR FRC (%) |
|---|---|---|
| 1 | 0.00 | 0.00 |
| 2 | 0.00 | 0.00 |
| 3 | 0.00 | 0.00 |
| 4 | 0.00 | 0.00 |
| 5 | 0.00 | 0.00 |
| 6 | 27.22 | 2.75 |
| 7 | 0.74 | 0.00 |
| 8 | 0.00 | 0.00 |
| 9 | 0.00 | 0.00 |
| 10 | 0.00 | 0.00 |
| 11 | 0.00 | 0.00 |
| 12 | 21.45 | 93.17 |
| 13 | 0.00 | 0.00 |
| 14 | 1.68 | 0.00 |
| 15 | 0.00 | 0.00 |
| 16 | 0.00 | 0.00 |
| 17 | 14.37 | 0.00 |
| 18 | 0.00 | 0.00 |
| 19 | 0.29 | 0.00 |
| 20 | 0.00 | 0.00 |
| 21 | 0.00 | 0.00 |
| 22 | 0.00 | 0.00 |
| 23 | 0.00 | 0.07 |
| 24 | 13.15 | 0.17 |
| 25 | 0.00 | 0.00 |
| 26 | 0.00 | 0.00 |
| 27 | 0.00 | 0.00 |
| 28 | 4.70 | 0.00 |
| 29 | 0.00 | 0.62 |
| 30 | 0.00 | 0.00 |
| 31 | 0.00 | 0.00 |
| 32 | 0.00 | 0.00 |
| 33 | 0.00 | 0.00 |
| 34 | 0.00 | 0.00 |
| 35 | 0.00 | 0.00 |
| 36 | 0.00 | 0.00 |
| 37 | 0.00 | 2.12 |
| 38 | 0.00 | 0.00 |
| 39 | 0.00 | 0.00 |
| 40 | 0.00 | 0.00 |
| 41 | 3.65 | 0.00 |
| 42 | 4.32 | 0.00 |
| 43 | 0.00 | 0.00 |
| 44 | 0.00 | 0.35 |
| 45 | 0.00 | 0.00 |
| 46 | 7.92 | 0.00 |
| 47 | 0.00 | 0.00 |
| 48 | 0.00 | 0.75 |
| 49 | 0.51 | 0.00 |
| 50 | 0.00 | 0.00 |

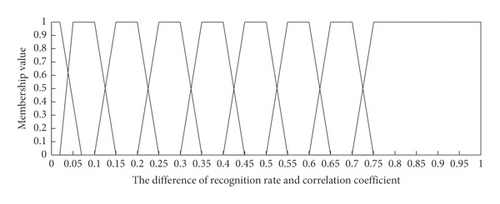

2.4.2. Weight Determination of VIS and NIR Fuzzy Rule Classifier

In this section, we propose a novel method for determining classifier weights from the differences in fuzzy classification accuracy ratio (CAR) and classification correlation coefficient (CCC) between the VIS and NIR classifiers, using the analytic hierarchy process. As shown in Section 2.3.1, the fuzzy classification accuracy on the training set after PCA processing was 90.20% for rVIS = 4 and 93.25% for rNIR = 4.

The CCC is computed as $\mathrm{CCC} = \mathrm{Cov}(x, y) / (\sigma_x \sigma_y)$, where Cov(x, y) is the covariance between the corrected and actual classification probability distributions. For instance, as listed in Table 8, the actual classification probability distributions of one wood sample for the NIR and VIS classifiers were [0, 0, 0, 0, 0, 2.75, 0, …, 0, 0] and [0, 0, 0, 0, 0, 27.22, 0.74, …, 0.51, 0], respectively, whereas the corrected distribution was [0, 0, 0, 0, 0, 1, 0, …, 0]. σx and σy are the standard deviations of the corrected and actual classification probability distribution vectors in the training set, respectively. The computed fuzzy CCC after PCA processing was 0.8073 for rVIS = 4 and 0.8862 for rNIR = 4.
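The CCC formula above is Pearson's correlation coefficient between the corrected (one-hot) and actual distribution vectors. The sketch computes it for the single sample of Table 8 with the one-hot vector given in the text (class 6); the function name `ccc` is hypothetical, and the paper's reported values are training-set figures that this one-sample example is not expected to match.

```python
from statistics import mean, pstdev

def ccc(x, y):
    """Pearson correlation Cov(x, y) / (sigma_x * sigma_y) with population statistics."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)
    return cov / (pstdev(x) * pstdev(y))

# Non-zero entries (class label: %) of the VIS and NIR outputs in Table 8.
vis = {6: 27.22, 7: 0.74, 12: 21.45, 14: 1.68, 17: 14.37, 19: 0.29, 24: 13.15,
       28: 4.70, 41: 3.65, 42: 4.32, 46: 7.92, 49: 0.51}
nir = {6: 2.75, 12: 93.17, 23: 0.07, 24: 0.17, 29: 0.62, 37: 2.12, 44: 0.35, 48: 0.75}
corrected = [1.0 if c == 6 else 0.0 for c in range(1, 51)]  # one-hot vector from the text
r_vis = ccc(corrected, [vis.get(c, 0.0) for c in range(1, 51)])
r_nir = ccc(corrected, [nir.get(c, 0.0) for c in range(1, 51)])
print(round(r_vis, 3), round(r_nir, 3))
```

For this sample, the VIS output correlates far better with the one-hot vector than the NIR output does, because the VIS classifier assigns its largest probability to class 6.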

| RR and CC difference | Credibility | Description |
|---|---|---|
| 1st interval | 1 | Equally trusted |
| 2nd interval | 2 | Between 1 and 3 |
| 3rd interval | 3 | Slightly more trusted |
| 4th interval | 4 | Between 3 and 5 |
| 5th interval | 5 | Obviously more trusted |
| 6th interval | 6 | Between 5 and 7 |
| 7th interval | 7 | Strongly more trusted |
| 8th interval | 8 | Between 7 and 9 |
| 9th interval | 9 | Extremely more trusted |
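For two classifiers, the analytic hierarchy process reduces to a 2 × 2 reciprocal comparison matrix [[1, s], [1/s, 1]], where s is the credibility score from the table above for the more trusted classifier; its principal eigenvector gives the weights s/(1 + s) and 1/(1 + s). The NIR classifier is the more trusted one here, since both its CAR (93.25% versus 90.20%) and its CCC (0.8862 versus 0.8073) are higher. How the differences map to the nine intervals is not specified in this section, so the score s = 3 below is purely illustrative, and the function name `ahp_weights` is hypothetical; power iteration is used so that the same code also works for larger matrices.

```python
def ahp_weights(M, iters=100):
    """Principal-eigenvector priorities of a reciprocal pairwise comparison matrix (power iteration)."""
    n = len(M)
    w = [1.0 / n] * n
    for _ in range(iters):
        v = [sum(M[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    return w

s = 3  # hypothetical credibility score: NIR "slightly more trusted" than VIS
w_nir, w_vis = ahp_weights([[1.0, s], [1.0 / s, 1.0]])
print(round(w_nir, 4), round(w_vis, 4))  # 0.75 0.25
```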

2.4.3. Improved D-S Decision-Level Fusion Based on the Classifier’s Weight

| Class label | VIS FRC (%) | NIR FRC (%) |
|---|---|---|
| 1 | 0.00 | 0.00 |
| 2 | 0.00 | 0.00 |
| 3 | 0.00 | 0.00 |
| 4 | 0.00 | 0.00 |
| 5 | 0.00 | 0.00 |
| 6 | 19.95 | 2.10 |
| 7 | 0.81 | 0.00 |
| 8 | 0.00 | 0.00 |
| 9 | 0.00 | 0.00 |
| 10 | 0.00 | 0.00 |
| 11 | 0.00 | 0.00 |
| 12 | 23.59 | 94.78 |
| 13 | 0.00 | 0.00 |
| 14 | 1.85 | 0.00 |
| 15 | 0.00 | 0.00 |
| 16 | 0.00 | 0.00 |
| 17 | 15.81 | 0.00 |
| 18 | 0.00 | 0.00 |
| 19 | 0.32 | 0.00 |
| 20 | 0.00 | 0.00 |
| 21 | 0.00 | 0.00 |
| 22 | 0.00 | 0.00 |
| 23 | 0.00 | 0.05 |
| 24 | 14.46 | 0.13 |
| 25 | 0.00 | 0.00 |
| 26 | 0.00 | 0.00 |
| 27 | 0.00 | 0.00 |
| 28 | 5.17 | 0.00 |
| 29 | 0.00 | 0.47 |
| 30 | 0.00 | 0.00 |
| 31 | 0.00 | 0.00 |
| 32 | 0.00 | 0.00 |
| 33 | 0.00 | 0.00 |
| 34 | 0.00 | 0.00 |
| 35 | 0.00 | 0.00 |
| 36 | 0.00 | 0.00 |
| 37 | 0.00 | 1.62 |
| 38 | 0.00 | 0.00 |
| 39 | 0.00 | 0.00 |
| 40 | 0.00 | 0.00 |
| 41 | 4.01 | 0.00 |
| 42 | 4.75 | 0.00 |
| 43 | 0.00 | 0.00 |
| 44 | 0.00 | 0.28 |
| 45 | 0.00 | 0.00 |
| 46 | 8.71 | 0.00 |
| 47 | 0.00 | 0.00 |
| 48 | 0.00 | 0.57 |
| 49 | 0.57 | 0.00 |
| 50 | 0.00 | 0.00 |

3. Results and Comparisons

In our experiments, there were 2,500 spectral curves for 50 wood species. These samples were divided into a training set and a testing set using k-fold cross validation [29] with k = 5, so that the training set consisted of 2000 samples and the testing set consisted of 500 samples. Consequently, there were 40 training samples and 10 testing samples for each wood species. The PC1 and PC2 of the training and testing datasets were distributed as shown in Figure 11, where the distributions of both the training and testing sets were dispersed and uniform, indicating that the dataset was suitable for pattern classification.
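A 5-fold split that reproduces the stated 40/10 per-species division can be sketched as follows. Stratifying by species is an assumption (the text only gives k = 5 and the resulting counts), and the function name `stratified_k_fold` is hypothetical.

```python
import random
from collections import defaultdict

def stratified_k_fold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each fold holds out 1/k of every species."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)  # deal each species' samples round-robin
    for f in range(k):
        test = sorted(folds[f])
        held_out = set(test)
        train = [i for i in range(len(labels)) if i not in held_out]
        yield train, test

labels = [species for species in range(1, 51) for _ in range(50)]  # 50 spectra x 50 species
train, test = next(stratified_k_fold(labels))
print(len(train), len(test))  # 2000 500
```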

3.1. Comparisons with Conventional Algorithms

| Model | Dataset | ORA (%) | TR (ms) |
|---|---|---|---|
| BC | VIS (PCA7dim) | 76.20 | 0.050256 |
| | NIR (PCA7dim) | 77.80 | 0.037920 |
| | VIS (TSNE7dim) | 55.00 | 0.047946 |
| | NIR (TSNE7dim) | 61.60 | 0.052136 |
| RF | VIS (PCA7dim) | 83.80 | 2.437714 |
| | NIR (PCA7dim) | 84.00 | 2.326404 |
| | VIS (TSNE7dim) | 76.40 | 2.654658 |
| | NIR (TSNE7dim) | 80.40 | 2.310316 |
| BPN | VIS (PCA7dim) | 63.84 | 0.135030 |
| | NIR (PCA7dim) | 61.48 | 0.117026 |
| | VIS (TSNE7dim) | 54.04 | 0.123924 |
| | NIR (TSNE7dim) | 60.80 | 0.140712 |
| LIBSVM | VIS (PCA7dim) | 83.60 (linear) | 0.092936 |
| | | 54.80 (RBF) | 0.123525 |
| | NIR (PCA7dim) | 84.40 (linear) | 0.081890 |
| | | 80.20 (RBF) | 0.131751 |
| | VIS (TSNE7dim) | 72.40 (linear) | 0.086184 |
| | | 23.80 (RBF) | 0.253162 |
| | NIR (TSNE7dim) | 77.60 (linear) | 0.091526 |
| | | 23.00 (RBF) | 0.211325 |
| LeNet-5 | VIS | 92.40 | 0.763721 |
| FRC | VIS (PCA4dim) | 90.20 | 0.137274 |
| | NIR (PCA4dim) | 92.92 | 0.141672 |
| | VIS (TSNE4dim) | 71.88 | 0.159326 |
| | NIR (TSNE4dim) | 81.12 | 0.173528 |
| D-S FRC | VIS + NIR (PCA4dim) | 93.84 | 3.026516 |
| FW-D-S FRC | VIS + NIR (PCA4dim) | 94.76 | 3.071578 |

- ORA: overall recognition accuracy; TR: time requirement; VIS: visible band; NIR: near-infrared band; FRC: fuzzy reasoning classifier; PCA: principal component analysis; BC: Bayes classifier; RF: random forest; BPN: BP network; CNN: convolutional neural network; LIBSVM: library for support vector machines; T-SNE: T-distributed stochastic neighbor embedding; D-S: Dempster–Shafer.

| Configuration | Model | Parameter |
|---|---|---|
| CPU | Intel Core i5-8400 | 6 cores, 6 threads, 2.8 GHz clock speed |
| RAM | Samsung | 8 GB DDR4, 2800 MHz |
| Hard disk | Samsung | 256 GB SSD; read 470 MB/s, write 473 MB/s |

In the RF classification, the time complexity was O(m · ntest · d), where m is the feature dimension, ntest is the number of testing samples, and d is the tree depth; the RF consisted of 500 trees. In the BP classification, the time complexity was $O\left(\sum_{i=1}^{d-1} n_i n_{i+1}\right)$, where ni is the number of nodes in the ith layer and d is the total number of layers, including the input and output layers. The BP network had a 7 (input layer)-31 (hidden layer)-20 (output layer) structure, and the transfer functions of the input and hidden layers were logsig and purelin, respectively. The BP network was trained for 1000 iterations with a target error of 0.01 and a learning rate of 0.01. In the LeNet-5 classification, no spectral dimension reduction was applied; instead, the 451–984 nm spectral band was reshaped into a 40 × 40 matrix. The training dataset was used to train a CNN consisting of a convolutional layer with six kernels, a pooling layer with a scale of 2, a convolutional layer with 12 kernels, and a pooling layer with a scale of 2. The convolution kernel size was 5, the learning rate was 1, and the network was trained for 1000 iterations. The time complexity was $O\left(\sum_{i=1}^{d} M_i^{2} K_i^{2} C_{i-1} C_i\right)$, where Mi is the size of the output feature map of the ith layer, Ki is the kernel size of the ith convolutional layer, Ci is the number of kernels in the ith convolutional layer (C0 being the number of input channels), and d is the number of convolutional layers. In the SVM classification, a linear kernel was used with the PCA features and a radial basis function (RBF) kernel with the T-SNE features, with a penalty factor of 1.2.

In our fuzzy reasoning classifier (FRC) experiments, the PCA algorithm performed much better than the T-SNE algorithm for spectral dimension reduction. The best ORA of 96.3636% was achieved by our FRC combined with the improved D-S decision-level fusion scheme, using four PCs for the VIS band and six PCs for the NIR band, with a TR of 2.535915 ms per testing sample.

The RF algorithm can achieve good and efficient classification results with a large training dataset. However, its classification performance was relatively poor on our small dataset (only 2000 training samples) with low-dimensional feature vectors. Similarly, the BP neural network and LeNet-5 also require a large training dataset for network training. Regarding spectral dimension reduction, the classification results of our FRC with T-SNE were relatively poor compared with those obtained with PCA, as T-SNE is a nonlinear dimension reduction algorithm.

3.2. Comparisons with State-of-the-Art Algorithms

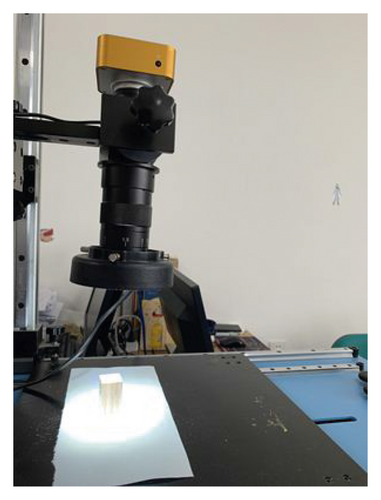

We compared the proposed scheme with other state-of-the-art wood species recognition schemes, all of which classify wood species from digital images of wood sample cross sections. We set up the image acquisition equipment shown in Figure 12, consisting of a charge-coupled device (CCD) camera, an optical microscope with a magnification of 10–100×, and a light-emitting diode (LED) light source. The color images were captured at 1600 × 1200 pixels with a magnification of 50×. We used this equipment to capture a cross-sectional color image of each wood sample, giving 50 images per species. Each color image was then converted into a 400 × 300 pixel grayscale image, except in the CNN scheme, which used the color images. The computer configuration is presented in Table 13, and the classification performance comparison is given in Table 14. Our FRC with the improved D-S decision-level fusion outperformed all the other state-of-the-art classification schemes in terms of ORA.

| Configuration | Model | Parameter |
|---|---|---|
| CPU | Intel Core i7-9700F | 8 cores, 8 threads, 3.00 GHz clock speed |
| GPU | NVIDIA GeForce RTX 2060 SUPER | 8 GB GDDR6, 1650 MHz; 2176 stream processors |
| RAM | Corsair | 16 GB DDR4, 3000 MHz |
| Hard disk | Intel | 256 GB SSD; read 1570 MB/s, write 540 MB/s |

| Reference | Model | ORA (%) |
|---|---|---|
| [35] | I-BGLAM | 72.32 |
| [36] | LBP | 68.68 |
| [19, 37] | SPPD | 7.32 |
| | SPPD + I-BGLAM | 32.68 |
| | Fuzzy + SPPD + BGLAM | 34.68 |
| [18] | GA | 25.68 |
| | GA + KDA | 29.00 |
| [38] | VGG16 | 83.36 |
| [39] | SqueezeNet | 86.28 |
| [40] | ResNet18 | 94.40 |
| [41] | GoogLeNet | 92.80 |
| [42] | CNN | 83.00 |
| Our scheme | FRC + improved D-S fusion | 94.76 |

- ORA: overall recognition accuracy; TR: time requirement; I-BGLAM: improved basic gray-level aura matrix; LBP: local binary pattern; SPPD: statistical property of pore distribution; GA: genetic algorithm; KDA: kernel discriminant analysis; CNN: convolutional neural network; FRC: fuzzy reasoning classifier; D-S: Dempster–Shafer.

Among these state-of-the-art wood species recognition schemes, Yusof et al. employed texture feature operators (e.g., the basic gray-level aura matrix (BGLAM) and the improved basic gray-level aura matrix (I-BGLAM)), pore distribution features (i.e., the statistical properties of pore distribution (SPPD)), and feature extraction algorithms such as the genetic algorithm (GA) and kernel discriminant analysis (KDA) to classify more than 50 tropical wood species [18, 19, 35, 37]. However, their schemes are suitable only for hardwood species with pores; for softwood species without pores, their classification performance is poor. Because our 50 wood species include both hardwood and softwood species, their schemes delivered poor classification results on our dataset. The local binary pattern (LBP) texture operator and several deep learning networks were also included in the comparison [36, 38–42].

4. Conclusions

In this study, a novel wood species spectral classification scheme was proposed based on a fuzzy linguistic rule classifier and an improved decision-level fusion technique based on the D-S evidential theory. The VIS/NIR spectral reflectance curves of wood sample cross sections were measured using a USB2000-VIS-NIR spectrometer and a FLAME-NIR spectrometer. Because the VIS and NIR bands of a wood sample's cross section differ in spectral stability, they were processed using two different FRCs, and their classification results were fused using an improved decision-level fusion technique based on the D-S evidential theory. The test results showed that the best ORA of our scheme reached 94.76% for 50 wood species, with little computational overhead.

More generally, our scheme has the following advantages over existing wood species recognition schemes. First, the acquired wood spectral curves are usually noisy and variable because of external factors, such as illumination intensity, and internal factors of the wood sample, such as humidity, surface roughness, and intraspecies differences. Fuzzy processing can partly accommodate the uncertainty of these spectral features. Second, our scheme rapidly achieved good recognition results, particularly with small datasets, and the VIS/NIR spectrometers used are relatively inexpensive. Because the spectral data are 1D signals, their computational overhead is lower than that of 2D image data. Therefore, our scheme could be readily applied to wood recognition and classification in industry in the near future.

However, our scheme can only classify the known wood species included in our training dataset. In practice, a wood classification system may encounter samples of unknown species. This is an unknown-species identification problem under the open-world assumption, which can be addressed within either a certainty or an uncertainty framework. For example, in an uncertainty framework, Tang et al. proposed a method for generating a generalized basic probability assignment (GBPA) based on evidence theory [43]. In this method, the maximum, minimum, and mean values are used to construct a triangular fuzzy number for each class in each attribute, and the intersection points of the test samples with these triangular fuzzy numbers are calculated. Based on this method, it is feasible to build a classifier that can effectively recognize both known and unknown classes. We therefore plan to investigate and adapt this method for wood species classification, since the number of wood species is much larger than the number of classes in [43]. In a certainty framework, we have also used a one-class classifier, support vector data description (SVDD) [44], which can preclassify wood samples into two categories (i.e., known and unknown wood species).

Conflicts of Interest

The authors declare no conflicts of interest.

Acknowledgments

This research was supported by the National Natural Science Foundation of China (Grant no. 31670717) and by the Fundamental Research Funds for the Central Universities (Grant no. 2572017EB09).

Open Research

Data Availability

Access to research data is restricted. The experimental instruments and wood species samples are owned by other academic institutions; therefore, we cannot publicly release the research spectral and image wood dataset.