Recognition of Point Sets Objects in Realistic Scenes

Abstract

With the emergence of new intelligent sensing technologies such as 3D scanners and stereo vision, high-quality point clouds have become convenient and inexpensive to acquire, and 3D object recognition based on point clouds has received widespread attention. Point clouds are an important type of geometric data structure. Because of their irregular format, many researchers convert them into regular three-dimensional voxel grids or collections of images. However, this makes the data unnecessarily voluminous and causes problems. In this paper, we consider the problem of recognizing objects in realistic scenes. We first use the Euclidean distance clustering method to segment the objects in a realistic scene. We then use a deep learning network structure to extract features directly from the point cloud data and recognize the objects. Theoretically, this network structure shows strong performance. In our experiments, the accuracy reaches 98.8% on the training set and 89.7% on the test set. The experimental results show that the network structure in this paper can accurately identify and classify point cloud objects in realistic scenes and that it is robust, maintaining a certain accuracy when the number of points is small.

1. Introduction

A point cloud is a collection of points. It contains rich information, which can include the three-dimensional coordinates X, Y, Z, color, intensity value, time, and so on. Point clouds are a representative geometric data structure. In this paper, we use a deep learning network structure to perform feature extraction and recognition for each point cloud object in a realistic scene.

In the point cloud scene model considered in this paper, most of the objects are not repeatedly occluded, so the Euclidean distance clustering segmentation method can be used to segment objects in complex scenes. The n sampled points should retain as much of the original feature information as possible, so the Monte Carlo method is used for sampling. Using the deep learning network structure proposed in this paper to recognize point cloud objects directly greatly reduces the amount of computation compared with mainstream methods that convert point clouds to regular depth maps, multiple views, or voxel grids. Point clouds do not introduce quantization artifacts, so they better maintain the natural invariance of the data. The experimental results show that the network structure in this paper can accurately identify and classify point cloud objects in realistic scenes and that it is robust, maintaining a certain accuracy when the number of points is small.

The deep learning network structure proposed in this paper to identify point cloud objects is a systematic method. The three-dimensional coordinates of the n points of a point cloud object are input to a deep learning network, and local or global features are extracted and lifted to higher dimensions to identify and classify point cloud objects in a realistic scene. Before a point cloud object is input into the deep learning network structure, each input point is preprocessed identically and independently, and each point of each point cloud object carries only its three coordinates.

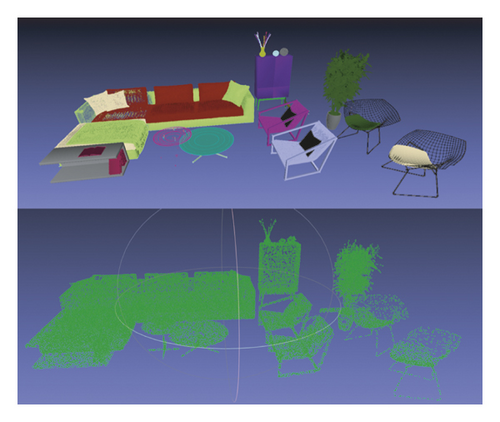

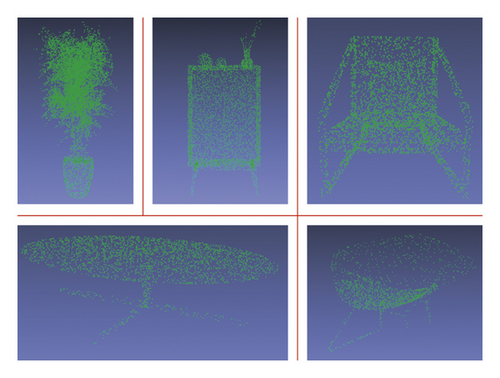

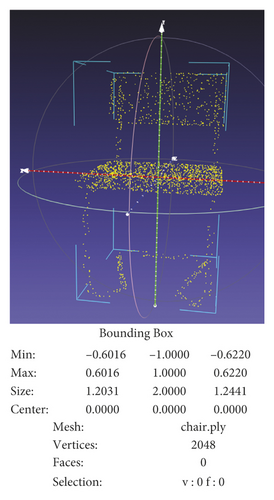

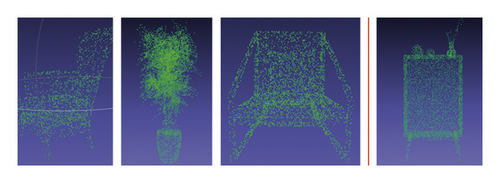

The realistic scene in this article is shown in Figure 1, and its point cloud is shown in Figure 2. Figure 3 shows a single point cloud object obtained by segmenting the realistic scene with Euclidean distance clustering segmentation. Each single point cloud object segmented in this way is input to the deep learning network trained in advance, and the global features of the object are extracted through the max-pooling layer in the network structure. Then, the multilayer perceptron connected through the fully connected layers classifies and recognizes the point cloud object from the learned features.

Rigid or affine transformations are easy to apply to the input data format, so the experimental results can be further improved. For the realistic scene and network structure adopted in this paper, we provide both a theoretical basis and a numerical evaluation. The main contributions are as follows:

- (i) Use the Euclidean distance clustering segmentation method to divide multiple objects in realistic scenes into clusters and process the data uniformly. Every object is processed identically and independently by Monte Carlo sampling, zero-mean centering, and normalization.

- (ii) Use a deep learning network architecture that directly consumes irregular point sets to complete the recognition task.

- (iii) Analyze the accuracy of object recognition in the realistic scene using the improved network method and evaluate the robustness of the network method.

The rest of this article is organized as follows: Section 2 reviews various methods proposed in the literature for different forms of 3D data; Section 3 describes the two main problems addressed in this paper; Section 4 proposes a solution to the first problem in Section 3; Section 5 proposes a solution to the second problem in Section 3; Section 6 analyzes the experimental results and the robustness of the proposed network method; and Section 7 discusses the deficiencies of the experiment and suggests future work.

2. Related Work

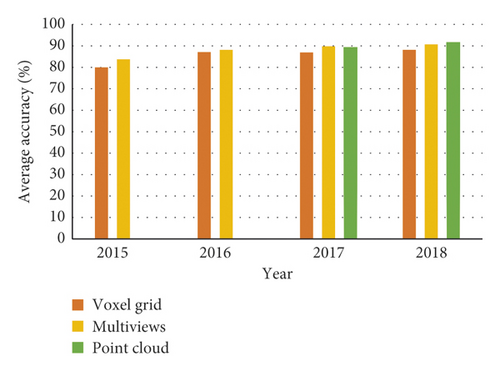

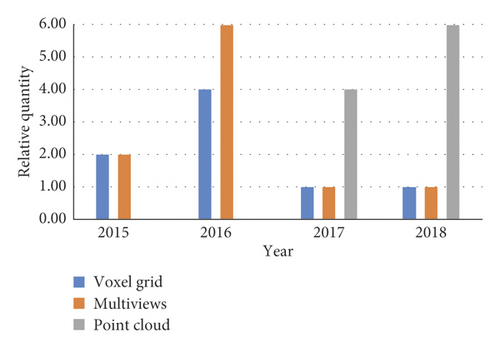

There are three main methods for 3D object recognition, based on 3D voxel grids, collections of images, and point cloud data. Point cloud learning-based approaches are becoming more accurate, as shown in Figure 4, and their number is growing dramatically, as shown in Figure 5. In addition to these methods, there are others such as spectral convolutional neural networks (CNNs) and feature-based deep neural networks (DNNs).

Methods based on collections of images are as follows. The main idea is to use geometric methods to transform a three-dimensional object into several two-dimensional multiview images while retaining as much feature information as possible. In addition, in recent years, most deep learning algorithms have been based on two-dimensional images, and many excellent research results have been obtained on them. Qi et al. and Su et al. [1, 2] convert a 3D point cloud object into multiple different 2D images and then design a new convolutional neural network structure to integrate the view information of the 2D images into a compact shape descriptor. Yi et al. [3] use multiple views to represent local information on the graph by parameterizing kernels in the spectral domain spanned by the characteristic roots of the graph. Experiments have shown state-of-the-art performance on all benchmark datasets in each task.

Methods based on 3D voxel grid data are as follows. These methods mesh or voxelize various 3D data and then design a corresponding 3D convolutional neural network for feature extraction and recognition. References [1, 4–7] describe a series of convolutional neural network algorithms whose input is a voxel grid, but these algorithms are all computationally expensive because of the sparseness of the data and the cost of convolution in 3D, and their resolution requirements are high. FPNN [8] and Vote3D [9] have proposed different solutions to the sparsity of voxel grid data. However, neither scheme is ideal and the experimental results are not very satisfactory, so processing very large point clouds remains a major challenge.

Methods based on point clouds are as follows. These methods are mainly of two types. The first converts the point cloud data into multiple views, a polygon mesh, or a voxel grid and then uses deep learning networks for feature extraction and recognition (as described above). The second processes the point cloud data directly. Its recognition accuracy is high, and it has developed quickly in the last three years. Qi et al. [10] were the first to process point cloud data directly, avoiding unnecessarily voluminous data and respecting the permutation invariance of the input points. On this basis, they proposed the neural network structures PointNet [10] and PointNet++ [11], which process point cloud data directly. Based on the principle of the kd-tree, Klokov and Lempitsky [12] propose a network algorithm, Kd-network, that differs from the current mainstream convolutional structures. It does not rely on rasterization on a uniform two-dimensional or three-dimensional grid and thereby avoids the poor scaling behavior of such grids for 3D models and point clouds. Zaheer et al. [13] propose a family of permutation-invariant functions that operate on sets and can be applied in a variety of settings. Among the various algorithms that process point cloud data, the best and most accurate is PointCNN [14], proposed by researchers at Shandong University and based on the convolution operators of convolutional neural networks. PointCNN uses the χ transform to weight the input features associated with the points, which works very well in classification and segmentation.

Spectral CNNs [15–17] are, at present, only applicable to manifold meshes such as organic objects. Feature-based DNNs (deep neural networks) [18, 19] usually convert 3D data into a vector of suitable features and then use fully connected layers to classify and recognize the data. In summary, a CNN can extract high-level semantic information from the original data through a series of operations such as convolution and pooling and finally generate a valid feature, with the aim of improving the classification accuracy of large-scale, multicategory, complex 3D models. First, the three-dimensional polygon mesh model is discretized into three-dimensional point cloud data; then the deep features of the model are extracted by the convolution and pooling layers of a deep point cloud convolutional neural network; finally, the model is classified and identified by a multilayer perceptron.

In "On the Generation of Point Cloud Data Sets: Step One in the Knowledge Discovery Process" [20], Holzinger et al. describe the case for natural point clouds and then provide some fundamentals of medical images, particularly dermoscopy, confocal laser scanning microscopy, and total-body photography. They describe the use of graph-theoretic concepts for image analysis, give some medical background on skin cancer, and concentrate on the challenges of dealing with lesion images and on the related algorithms. The point cloud data is extracted from different weakly structured sources and analyzed topologically to produce feasible results. The quality of these results depends not only on the quality of the algorithm itself but also, to a large extent, on the quality of the input it receives, so generating the point cloud is a necessary preprocessing step that affects the quality of the experimental results.

3. Problem Statement

To recognize point cloud objects in realistic scenes, two key problems need to be solved. The first key problem is how to separate the multiple point cloud objects in a realistic scene. Since the input to the deep learning network must be a single complete point cloud object, while the point cloud objects in the realistic scene are all mixed together, an algorithm is needed to segment the scene into individual point cloud objects. The segmentation relies mainly on the fact that each point cloud object has a different texture and color. Each segmented object is saved to a separate file and then input to the trained deep learning network for classification and recognition.

The second key problem is to design a new deep learning network structure that processes point cloud data directly. After solving the first problem, we obtain single point cloud objects, preprocess them, and then input the points of each object to the trained deep learning network for classification and recognition. A point cloud is a collection of x, y, and z coordinates together with color, normal vectors, and other feature channels. For convenience and clarity, this article uses only the three coordinates (x, y, z) of each point as the input of the deep learning network.

4. Euclidean Distance Clustering Segmentation and Data Preprocessing

Euclidean distance clustering segmentation and data preprocessing are divided into two parts. First, the working principle of Euclidean distance clustering segmentation algorithm is introduced (Section 4.1). The method has a better effect in a realistic scene with less overlap. Second, all point cloud objects are processed identically and independently. These processing methods mainly include Monte Carlo sampling, zero mean, and normalization (Section 4.2).

4.1. Euclidean Distance Clustering Segmentation

This paper first calculates the Euclidean distance between pairs of points in the point cloud data and uses a distance less than the specified threshold as the criterion for placing points in the same class. It then iterates until the distance between all classes is greater than the specified threshold, which completes the Euclidean clustering. The specific steps are as follows: (1) use the octree method to establish the topological organization structure of the point cloud data; (2) perform a k-nearest neighbor search on each point, calculate the Euclidean distance between the point and its k neighboring points, and assign the point to the class at the smallest distance; (3) set a threshold and repeat Step (2) until the distance between all classes is greater than the specified threshold.

For a real scene, calculate the distance $d_{ij}$ from each point $i$ in the scene to every other point $j$, and then calculate the density $\rho_i = \sum_j \beta(d_{ij} - d_c)$. The Euclidean distance of the maximum density point is $\delta_i$. Compare the $\rho_i$ and $\delta_i$ values, and take the points with the larger values as the center points of the point cloud objects. Choose an appropriate threshold $r$ according to the scene to be segmented, and then proceed as follows: (1) use the above method to find the center point $p_1$ in space, compare the distances between the other $n$ points and $p_1$, and put the points $p_2, p_3, \ldots$ whose distance is less than the threshold $r$ into class $A$; (2) choose any point $p_2$ in $A \setminus \{p_1\}$ and repeat Step (1); (3) choose a point in $A \setminus \{p_1, p_2\}$, repeat Step (1) to find $p_7, p_8, \ldots$, and put them into $A$; (4) when $A$ no longer changes, the search is complete. The segmentation result of the realistic scene is shown in Figure 3.
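The region-growing part of this procedure can be illustrated with a minimal Python sketch. It is not the implementation used in the paper: it seeds each cluster from an arbitrary unvisited point instead of a density-based center point, and it replaces the octree with a brute-force radius search. The function name `euclidean_clusters` and the toy scene are hypothetical.

```python
from collections import deque
import math


def euclidean_clusters(points, r, min_size=1):
    """Group points so that each point lies within r of another point in its cluster."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()  # assumption: arbitrary seed, not a density peak
        cluster = [seed]
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            # Brute-force radius search; an octree or k-d tree would replace this scan.
            near = [j for j in unvisited if math.dist(points[i], points[j]) < r]
            for j in near:
                unvisited.remove(j)
                cluster.append(j)
                queue.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return sorted(clusters)


# Toy scene: three points in a row and two points far away from them.
scene = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0),
         (5.0, 5.0, 5.0), (5.1, 5.0, 5.0)]
clusters = euclidean_clusters(scene, r=0.15)
```

The cluster grows until no remaining point is closer than `r` to any of its members, which corresponds to the stopping condition "when A no longer changes" in Step (4).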

4.2. Data Processing

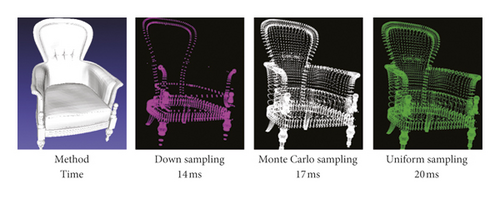

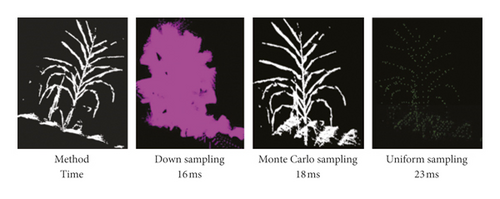

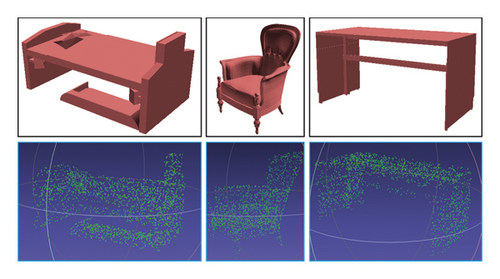

After Euclidean distance clustering and segmentation of a realistic scene, there are many different point cloud objects, each composed of a different number of points. Because the network that recognizes point cloud objects requires every object to have the same number of points, each point cloud object must be sampled to n points. The n sampled points are then zero-mean centered and normalized, so that each object is processed into a uniform format before it is input to the network. The choice of sampling method is crucial. The sampling methods considered in this paper are Monte Carlo sampling, downsampling, and uniform sampling. All three methods are used to sample the same object to 1024 points. The sampling results and the time spent sampling are shown in Figure 6. The figure clearly shows that Monte Carlo sampling and uniform sampling better preserve the contours and shapes of point cloud objects. However, when the point cloud is large and each point carries more dimensions of information, uniform sampling requires greater computational cost and time. Taking these two points into consideration, this paper uses the Monte Carlo sampling method to sample point cloud objects.
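As a rough illustration of this preprocessing, the following sketch samples an object to a fixed number of points at random, subtracts the centroid, and rescales the result. The exact normalization is not specified in the text; scaling the object into the unit sphere is an assumption here, and the names `sample_points` and `zero_mean_normalize` are hypothetical.

```python
import math
import random


def sample_points(points, n, rng):
    """Draw n points at random (with replacement only if the object has fewer than n)."""
    if len(points) >= n:
        return rng.sample(points, n)
    return [rng.choice(points) for _ in range(n)]


def zero_mean_normalize(points):
    """Center the object at the origin and scale it into the unit sphere (assumed)."""
    k = len(points)
    centroid = [sum(p[a] for p in points) / k for a in range(3)]
    centered = [tuple(p[a] - centroid[a] for a in range(3)) for p in points]
    scale = max(math.hypot(*p) for p in centered) or 1.0
    return [tuple(c / scale for c in p) for p in centered]


rng = random.Random(0)
# Synthetic object with 5000 points inside a 4 x 2 x 1 box.
obj = [(rng.uniform(0, 4), rng.uniform(0, 2), rng.uniform(0, 1)) for _ in range(5000)]
processed = zero_mean_normalize(sample_points(obj, 1024, rng))
```

After this step every object has exactly 1024 points, a centroid at the origin, and a maximum distance of 1 from the origin, regardless of its original size.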

The main idea of the Monte Carlo sampling method is to approximate a set of data as closely as possible by random sampling. That is, the point cloud is sampled so that the sampled points retain the information of the original point cloud to the greatest possible extent. The larger the number of sampling points, the more accurate the approximation and the more closely it matches the distribution of the points in the original point cloud. The theory and the algorithm of the Monte Carlo sampling used in this paper are as follows.

The cumulative distribution function $F(x)$ of a probability distribution can usually be obtained by integrating its probability density function $f(x)$. To obtain n samples, repeat the following steps n times: (1) draw a value $u$ uniformly at random from $[0, 1]$; (2) calculate $x = F^{-1}(u)$, where $x$ is then a sample drawn from $f(x)$.
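The two steps can be sketched in Python as inverse transform sampling. The exponential density $f(x) = \lambda e^{-\lambda x}$ is chosen here only because its inverse cumulative distribution function, $F^{-1}(u) = -\ln(1 - u)/\lambda$, has a closed form; it is an illustrative assumption and not the distribution used in the paper.

```python
import math
import random


def inverse_transform_samples(inv_cdf, n, rng):
    """Repeat n times: draw u uniformly from [0, 1), then return x = F^{-1}(u)."""
    return [inv_cdf(rng.random()) for _ in range(n)]


lam = 2.0  # hypothetical rate parameter of the example density


def exp_inv_cdf(u):
    """Inverse CDF of the exponential distribution with rate lam."""
    return -math.log(1.0 - u) / lam


rng = random.Random(0)
xs = inverse_transform_samples(exp_inv_cdf, 20000, rng)
sample_mean = sum(xs) / len(xs)  # should be close to the true mean 1 / lam
```

As the number of samples grows, the sample mean approaches the mean of the target distribution, which reflects the statement that more sampling points give a more accurate approximation.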

5. Deep Learning on Point Sets

This section is divided into two parts. First, Section 5.1 introduces the two main problems that arise when deep learning is applied to point sets, together with their solutions and proofs. Second, Section 5.2 introduces the improved network structure for identifying objects.

5.1. Problems of Point Sets in ℝⁿ

All data in a point cloud form a set of points in Euclidean space. Processing such point sets raises two key problems: the disorder of the point cloud and invariance to rotation and translation. This article gives corresponding solutions to these two problems.

5.1.1. Unordered

Because of differences in acquisition equipment and coordinate systems, when the same object is scanned with different equipment or from different positions, the order of the three-dimensional points varies greatly. Point cloud data is therefore very different from the pixel arrangement of a two-dimensional image or the voxel arrangement of a voxel grid: a point cloud is a set of points without a fixed order. When a deep learning network performs a task on a point cloud, it must output the same result no matter in what order the points are input. In RGB-D or grayscale images, the relative position of each pixel is fixed, so there is no problem of disorder. For a point cloud of N points, however, there are N! possible orders in which the points can be fed to the network. Therefore, the input point cloud data needs to be processed accordingly.

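Theorem 1. Suppose $f : Q \to \mathbb{R}$ is a continuous set function with respect to the Hausdorff distance $d_H(\cdot, \cdot)$. Then $\forall \varepsilon > 0$, $\exists$ a continuous function $h$ and a symmetric function $g(x_1, \ldots, x_n) = \gamma \circ \mathrm{MAX}$, such that for any $W \in Q$,

$$\left| f(W) - \gamma\left(\underset{x_i \in W}{\mathrm{MAX}} \{ h(x_i) \}\right) \right| < \varepsilon,$$

where $x_1, \ldots, x_n$ are the elements of $W$ in arbitrary order, $\gamma$ is a continuous function, and $\mathrm{MAX}$ is a vector max operator that takes $n$ vectors as input and returns the vector of their element-wise maximum.

Formally, let $Q = \{W : W \subseteq [0,1]^m \text{ and } |W| = n\}$. That $f : Q \to \mathbb{R}$ is a continuous set function on $Q$ with respect to the Hausdorff distance $d_H$ means that $\forall \varepsilon > 0$, $\exists \delta > 0$ such that, for any $W, W' \in Q$, if $d_H(W, W') < \delta$, then $|f(W) - f(W')| < \varepsilon$.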
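The role of the symmetric function can be checked with a small Python sketch: a per-point map h, followed by an element-wise maximum over all points and a function γ, gives the same output for every ordering of the input. The particular `h` and `gamma` below are hypothetical stand-ins for the learned multilayer perceptrons.

```python
import random


def h(point):
    """Hypothetical per-point feature map (stands in for the shared multilayer perceptron)."""
    x, y, z = point
    return (x + y, y * z, x - z, x * x + y * y + z * z)


def gamma(feature):
    """Hypothetical continuous function applied to the pooled feature."""
    return sum(feature)


def g(points):
    """Symmetric function g = gamma o MAX: element-wise max over per-point features."""
    pooled = tuple(max(column) for column in zip(*(h(p) for p in points)))
    return gamma(pooled)


rng = random.Random(1)
cloud = [(rng.random(), rng.random(), rng.random()) for _ in range(16)]
shuffled = cloud[:]
rng.shuffle(shuffled)  # same set of points, different input order
```

Because the maximum over a set does not depend on the order of its elements, `g(cloud)` and `g(shuffled)` are identical, which is exactly the property required to handle the N! possible input orders.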

5.1.2. Rotation and Translation Invariance

A point cloud is a geometric object. If the point cloud undergoes certain geometric transformations (such as rotation and translation), the semantic labels produced by point cloud classification and segmentation must not change. Therefore, we expect the representation learned from the point set to be invariant to these transformations.

After a regularization term is added, the solution becomes more stable and the number of parameters is also reduced to a large extent.

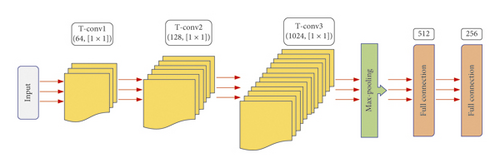

The schematic diagram of the adjustment network structure is shown in Figure 8. The adjustment network is a subnetwork used to predict a transformation matrix in the feature space. It learns from the input data a transformation matrix whose dimension is consistent with that of the feature space, and the learned transformation matrix is then multiplied with the original data. This transformation in the feature space associates each point passed to the subsequent layers with the points in the input. Through such processing, the features contained in the input point cloud data are hierarchically abstracted. The adjustment network consists of three convolutional layers, one max-pooling layer, and two fully connected layers. Convolutional layer 1 has 64 feature maps with a [1 × 1] convolution kernel; convolutional layer 2 has 128 feature maps with a [1 × 1] convolution kernel; and convolutional layer 3 has 1024 feature maps with a [1 × 1] convolution kernel. The two fully connected layers have 512 and 256 nodes, respectively.
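To give a sense of the size of this subnetwork, the following sketch counts the trainable parameters of the layers listed above. It assumes that the input has three channels (the x, y, z coordinates), that every layer has a bias term, and that batch normalization and the final layer producing the transformation matrix are left out, since the text does not specify them. The helper `dense_params` is hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a layer mapping n_in channels to n_out channels."""
    return n_in * n_out + n_out


# (name, input channels, output channels); a [1 x 1] convolution acts on each point
# independently, so it has the same parameter count as a dense layer.
layers = [
    ("conv1", 3, 64),     # assumption: raw x, y, z coordinates as input
    ("conv2", 64, 128),
    ("conv3", 128, 1024),
    ("fc1", 1024, 512),   # applied after max-pooling over the n points
    ("fc2", 512, 256),
]
counts = {name: dense_params(n_in, n_out) for name, n_in, n_out in layers}
total = sum(counts.values())
```

Under these assumptions, most of the parameters sit in the first fully connected layer, and the count does not depend on the number of points n because the convolutional weights are shared across points.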

5.2. Network Architecture

Because PointNet's [10] classification and segmentation networks have achieved good results and extract features very effectively, they motivate our approach to recognizing point cloud objects in realistic scenes. This article draws on the classification network of PointNet and adjusts its structure to further enhance its feature extraction ability. The experimental results show that the proposed deep learning network structure improves the accuracy of point cloud object classification and recognition on both the training and testing sets. The network structure is shown in Figure 9. The input data is the 3D coordinates of the point set of each point cloud object. The max-pooling layer solves the problem of the disorder of point cloud data, and the adjustment network solves the problem of rotation and translation invariance of point cloud objects. Feature extraction for point cloud objects is accomplished through a combination of multilayer perceptrons and adjustment networks. The max-pooling layer also yields the global features of each object. Through this process, the point cloud objects in the realistic scene are recognized.

The main feature extraction part in Figure 9 includes three adjustment networks, a max-pooling layer, and three multilayer perceptrons, and it takes the three-dimensional coordinates of n points as input. The features are extracted and then mapped to a column vector by the max-pooling layer, which completes the feature extraction of the point cloud data. To identify and classify point cloud data, probabilities must be computed from the features extracted by the deep network architecture. Therefore, fully connected layers are connected after the max-pooling layer to map the learned feature representation to the sample label space. In the figure, the first fully connected layer contains 512 neurons, the second contains 256 neurons, and the number of neurons in the third equals the number of categories of the classification task. The dropout layer sets each neuron node to zero with a probability of 70%, which gave the best results. Dropout alleviates complex co-adaptation between neurons, reduces the dependencies between neurons, and helps avoid overfitting during network training. In addition, it improves the generalization ability of the model and reduces its complexity.
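The dropout step and the mapping from class scores to probabilities can be sketched as follows. The rescaling of the surviving activations (inverted dropout) and the use of a softmax are assumptions made for illustration; the text states only the 70% zeroing probability and that the features are mapped to the label space.

```python
import math
import random


def dropout(values, drop_prob, rng):
    """Zero each activation with probability drop_prob; rescale the survivors (assumed)."""
    keep = 1.0 - drop_prob
    return [v / keep if rng.random() < keep else 0.0 for v in values]


def softmax(logits):
    """Map class scores to probabilities that sum to one."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


rng = random.Random(0)
activations = [1.0] * 10000
dropped = dropout(activations, drop_prob=0.7, rng=rng)
zero_fraction = dropped.count(0.0) / len(dropped)  # close to 0.7
probs = softmax([2.0, 0.5, -1.0])  # hypothetical scores for three classes
```

Dropout is applied only during training; at test time all neurons are kept, and the predicted class is the one with the highest probability.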

6. Experiment

The experiments are divided into two parts. First, Section 6.1 describes the training process in detail. Second, Section 6.2 analyzes the experimental results and tests the robustness of the network.

6.1. Training Process

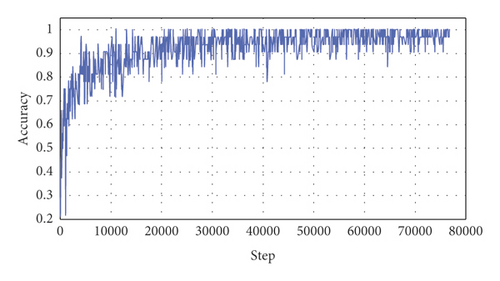

To train the deep learning network structure, we choose the ModelNet [22] data set established by Princeton University as the training set and test set for network learning. The ModelNet40 data contain 40 categories of 3D models, each with a corresponding label number, for a total of 12,311 3D objects. We divide these models into 4 files with 64 groups per file and 32 models per group. The remaining 2048 models are used as the test set and are written to a file in the same way. These files are input to the network for training. Figure 10 shows some of the model files in ModelNet40 and the point cloud files that have been processed for training. During training, the point clouds are augmented on the fly by rotating them randomly about the up axis and by jittering them with Gaussian noise.
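The two augmentations can be sketched in plain Python. Treating the y axis as the up axis and using a noise standard deviation of 0.01 are assumptions for illustration; the text does not give these values, and the function names are hypothetical.

```python
import math
import random


def rotate_about_up_axis(points, angle):
    """Rotate every point by `angle` radians about the y axis (assumed to be 'up')."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]


def jitter(points, sigma, rng):
    """Add independent zero-mean Gaussian noise to each coordinate."""
    return [tuple(v + rng.gauss(0.0, sigma) for v in p) for p in points]


rng = random.Random(0)
cloud = [(1.0, 2.0, 0.0), (0.0, -1.0, 3.0)]
rotated = rotate_about_up_axis(cloud, rng.uniform(0.0, 2.0 * math.pi))
augmented = jitter(rotated, sigma=0.01, rng=rng)  # sigma is a hypothetical value
```

The rotation leaves the height of each point and its distance from the origin unchanged, so the object keeps its shape while the network sees it from a different horizontal direction in every epoch.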

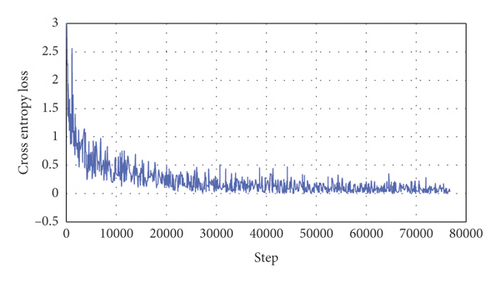

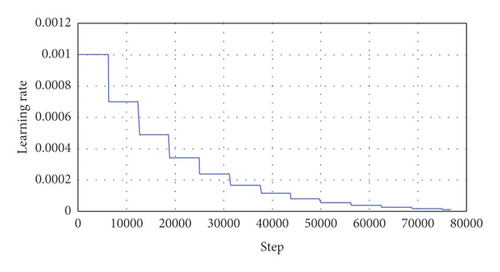

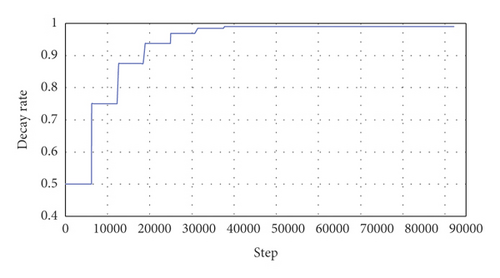

The server configuration for deep learning network training is as follows: Ubuntu 18.04, an 8-core, 16-thread Intel Core i7-5960X processor, 2 NVIDIA M4000 graphics cards, 16 GB of memory, and TensorFlow 1.7.0 [23]. The training process of the entire deep learning network is recorded in Figure 11.

Because of the nature of deep learning networks, different parameter settings for the same network lead to different results. So that the experimental results can be reproduced, the parameter settings of the deep learning network in this paper are recorded in Table 1.

| Quantity | Initial value | End value |
|---|---|---|
| Accuracy | 0 | 0.988 |
| Cross-entropy loss | 1.9408 | 0.024 |
| Learning rate | 0.001 | 0.0000133 |
| Decay rate | 0.5 | 0.982 |

6.2. Results and Analysis

After the deep learning network training is completed, the preset test set is used to test the recognition accuracy. There are three main indicators: average loss, average accuracy, and average classification accuracy. The test results are shown in Table 2.

| Indicator | Value |
|---|---|
| Average loss | 0.5014 |
| Average accuracy | 0.897 |
| Average classification accuracy | 0.873 |
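The difference between the last two indicators can be made concrete with a short sketch. It assumes that "average accuracy" is the fraction of all test objects classified correctly and that "average classification accuracy" is the mean of the per-class accuracies; this reading is an assumption, and the labels below are toy data, not results from the paper.

```python
from collections import defaultdict


def overall_accuracy(labels, preds):
    """Fraction of all test objects that are classified correctly."""
    return sum(l == p for l, p in zip(labels, preds)) / len(labels)


def mean_class_accuracy(labels, preds):
    """Average of the per-class accuracies, so every class counts equally."""
    correct, seen = defaultdict(int), defaultdict(int)
    for l, p in zip(labels, preds):
        seen[l] += 1
        correct[l] += (l == p)
    return sum(correct[c] / seen[c] for c in seen) / len(seen)


# Toy example: a large, easy class and a small, hard class.
labels = ["chair"] * 8 + ["wardrobe"] * 2
preds = ["chair"] * 8 + ["wardrobe", "chair"]
```

In this toy example the overall accuracy is 9/10, while the mean class accuracy is (1.0 + 0.5)/2, so a small class that is recognized poorly lowers the second indicator more than the first.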

The experimental results in this paper are compared with those of the better-performing previous methods. The comparison results are shown in Table 3.

The task of this paper is to identify point cloud objects in realistic scenes. Table 4 below lists the recognition accuracy for objects that may appear in realistic scenes.

| Object | Accuracy | Object | Accuracy | Object | Accuracy |
|---|---|---|---|---|---|
| Bed | 0.99 | Bookshelf | 0.91 | Chair | 0.98 |
| Guitar | 0.94 | Person | 0.95 | Laptop | 0.99 |
| Cup | 0.70 | Door | 0.85 | Lamp | 0.95 |
| Bottle | 0.70 | Keyboard | 0.85 | Sofa | 0.97 |
| Bowl | 0.99 | Plant | 0.80 | Desk | 0.99 |
| Curtain | 0.90 | Piano | 0.87 | Wardrobe | 0.55 |

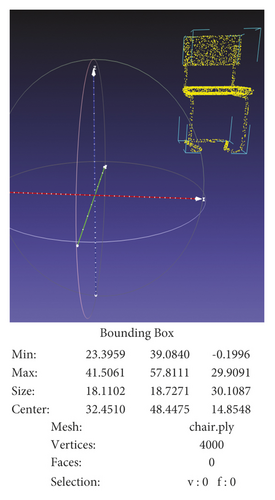

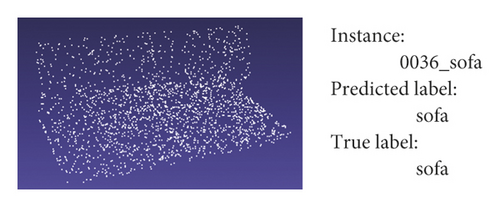

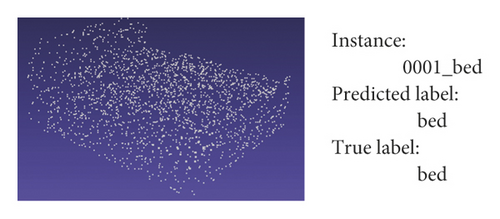

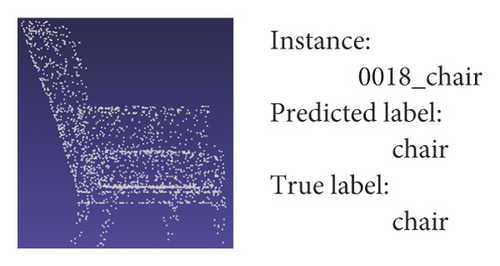

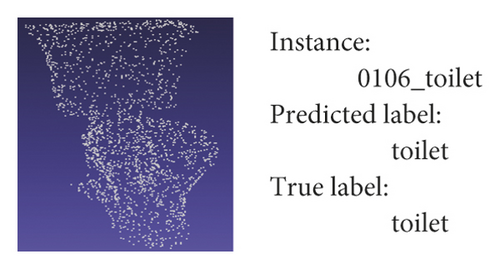

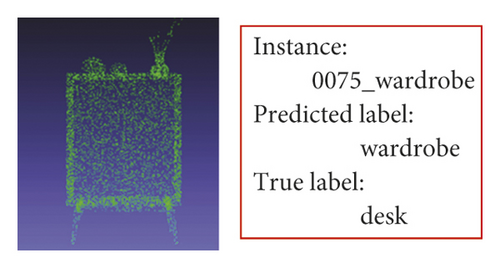

Table 4 shows the recognition accuracy of the method in this paper for some point cloud objects in the realistic scene. The accuracy is high for objects with distinctive features. In short, the method in this paper can accurately identify objects in realistic scenes. Figure 12 shows an example of point cloud object recognition in the real scene by the algorithm in this paper. The main information and results of recognizing the objects in the realistic scene are shown in Figure 13.

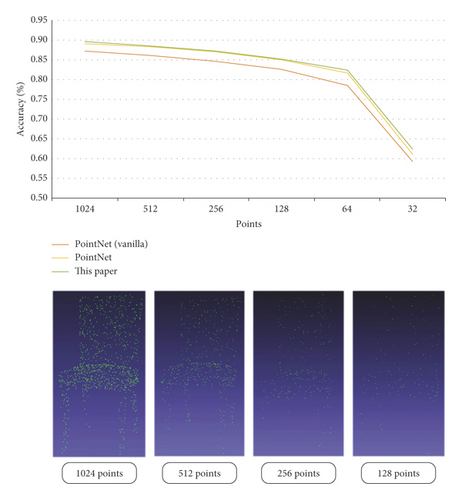

To test the robustness of the network in this paper, we measure the recognition accuracy while gradually reducing the number of points in each point cloud object. Ideally, the accuracy is maintained as much as possible as the number of points decreases. The experiments show that the recognition accuracy gradually decreases as the number of points is reduced but remains at a high level. Even with only 64 points, the accuracy remains above 60%. The robustness test of the deep learning network in this paper is shown in Figure 14.
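The protocol of this robustness test can be sketched as a small harness that subsamples every object to k points and records the accuracy for each k. The classifier below is a hypothetical stand-in that thresholds the height range of an object; it is not the trained network, and the synthetic objects are for illustration only.

```python
import random


def accuracy_vs_point_count(objects, labels, classify, counts, rng):
    """Subsample every object to k points and record the accuracy for each k."""
    results = {}
    for k in counts:
        hits = 0
        for points, label in zip(objects, labels):
            subset = rng.sample(points, k)
            hits += classify(subset) == label
        results[k] = hits / len(objects)
    return results


def toy_classifier(points):
    """Hypothetical stand-in for the trained network: thresholds the height range."""
    zs = [p[2] for p in points]
    return "tall" if max(zs) - min(zs) > 0.5 else "flat"


rng = random.Random(0)


def make_object(height):
    """Synthetic object with 256 points and the given extent along z."""
    return [(rng.random(), rng.random(), rng.uniform(0.0, height)) for _ in range(256)]


objects = [make_object(1.0) for _ in range(20)] + [make_object(0.1) for _ in range(20)]
labels = ["tall"] * 20 + ["flat"] * 20
curve = accuracy_vs_point_count(objects, labels, toy_classifier, [256, 64, 8], rng)
```

Replacing `toy_classifier` with the trained network and the synthetic objects with the test set would give an accuracy curve over point counts of the kind plotted in the robustness figure.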

7. Conclusion

This paper proposes a new approach to recognizing point set objects in realistic scenes, in which the point cloud data of a realistic scene is clustered and segmented using the Euclidean distance clustering segmentation algorithm. This effectively solves the problem of clustering and segmenting multiple objects in complex scenes. Using a deep learning network that processes point clouds directly greatly reduces the amount of computation. Point clouds do not introduce quantization artifacts, so they better maintain the natural invariance of the data. The experimental results show that the network structure in this paper can accurately identify and classify point cloud objects in realistic scenes and that it is robust, maintaining a certain accuracy when the number of points is small. Every point cloud object has both global and local features, but the algorithm proposed in this paper mainly extracts global features and does not make use of local features. In future work, we want to further change the network structure to extract local features and further improve the accuracy of point cloud object recognition in realistic scenes.

Conflicts of Interest

There are no conflicts of interest regarding the publication of this manuscript.

Acknowledgments

This research was supported by Natural Science Foundation of Hebei Province of China (No. F2017402182) and Science and Technology Research Projects of Colleges and Universities in Hebei, China (No. ZD2018207).

Open Research

Data Availability

The data used to support the findings of this study can be found in the online versions at http://openaccess.thecvf.com/content_cvpr_2017/papers/Qi_PointNet_Deep_Learning_CVPR_2017_paper.pdf