WiFi Offloading Algorithm Based on Q-Learning and MADM in Heterogeneous Networks

Abstract

This paper proposes a WiFi offloading algorithm based on Q-learning and MADM (multiattribute decision making) for mobile users in heterogeneous networks where cellular and WiFi networks coexist. A Markov model is used to describe changes in the network environment. Four attributes, namely user throughput, terminal power consumption, user cost, and communication delay, are considered to define a user satisfaction function reflecting QoS (Quality of Service), which is optimized by Q-learning. Through AHP (Analytic Hierarchy Process) and TOPSIS (Technique for Order Preference by Similarity to an Ideal Solution) in MADM, the intrinsic connection between each attribute and the reward function is obtained. The user applies Q-learning to make offloading decisions based on current network conditions and its own offloading history, ultimately maximizing its satisfaction. Simulation results show that the proposed algorithm achieves higher user satisfaction than traditional WiFi offloading algorithms.

1. Introduction

With the popularity of smart devices, cellular data traffic is growing at an unprecedented rate. The Cisco Visual Networking Index [1] predicts that global mobile data traffic will reach 49 exabytes per month in 2021, about six times the 2016 level. To cope with this traffic explosion, operators can add cellular BSs (base stations) or upgrade the cellular network to LTE (Long-Term Evolution), LTE-A (LTE-Advanced), or WiMAX Release 2 (IEEE 802.16m), but this is usually uneconomical because it requires high CAPEX (capital expenditure) and OPEX (operating expense) [2]. In addition, the limited licensed spectrum is another bottleneck to improving network capacity [3]. As a result, mobile data offloading [4] has become a mainstream technology in 5G, and WiFi offloading is one of the most effective offloading solutions.

WiFi offloading transfers part of the cellular network load to the WiFi network through WiFi APs (access points), which relieves congestion in the licensed band, balances load, and makes full use of unlicensed spectrum. Owing to its effectiveness, WiFi offloading has been widely studied. Li et al. [5] considered the coexistence of WiFi and LTE-U in unlicensed bands and offloaded LTE-U services to WiFi networks, formulating a multiobjective problem that maximizes LTE-U user throughput while optimizing WiFi user throughput; they solved it with Pareto optimization. In [6], a satisfaction function reflecting the user communication rate is defined for overlapping WiFi and cellular networks, a resource block allocation matrix is constructed, and, based on exact potential game theory, a best response algorithm is used to optimize the total system satisfaction. Cai et al. [7] proposed an incentive mechanism that compensates cellular users willing to delay their traffic for WiFi offloading. The authors calculated the optimal compensation from the available parameters of the scenario and modeled the problem as a two-stage Stackelberg game. In the first stage, the operator announces a uniform compensation for users who delay their cellular services. In the second stage, each user decides whether to join delayed offloading based on the compensation, network congestion, and the estimated cost of waiting for a WiFi connection. From the operator's perspective, Kang et al. [8] formulated mobile data offloading as a utility maximization problem, cast it as an integer program, and obtained an offloading scheme by solving its relaxation.
The authors further proved that the proposed centralized offloading scheme is near optimal when the number of users is large. Jung et al. [9] proposed a user-centric, network-assisted WiFi offloading model in which the heterogeneous network collects network information and users make offloading decisions based on this information to maximize their throughput. For a heterogeneous network composed of LTE and WiFi, [10] aimed to maximize the minimum energy efficiency of users and derived a closed-form expression for the number of users to be offloaded; the users with the smallest SINR (signal-to-interference-plus-noise ratio) are offloaded to the WiFi network. As these works show, the most challenging problem in WiFi offloading is how to make the offloading decision, that is, how to choose the most suitable WiFi AP for communication. Fakhfakh and Hamouda [11] aimed to minimize the residence time in the cellular network and optimized it by Q-learning, with a reward function that considers SINR, handover delay, and AP load. By offloading cellular services to the best nearby WiFi AP, operators can greatly increase their network capacity while also improving users' QoS. However, these works make an immediate offloading decision based only on current network conditions, without considering the user's previous access history. In addition, most of them optimize a single attribute, such as throughput or energy efficiency, rather than considering multiple network attributes for comprehensive decision making.

In this paper, for the mobile user scenario where the cellular base station and WiFi APs coexist, a Q-learning scheme is used to make the offloading decision based on both current network conditions and access history. By considering their own access history, users accumulate offloading experience, which not only helps them avoid offloading to poor networks accessed previously but also lets them actively select the best WiFi AP according to the maximum discounted cumulative reward, which in turn improves the user's QoS. Four attributes, namely user throughput, terminal power consumption, user cost, and communication delay, are considered, and the reward function in Q-learning is defined by TOPSIS. Because the importance of each network attribute differs across service types, we use AHP to define the weight of each network attribute according to the specific service type. The mobile terminal collects various attributes of the heterogeneous network, and the user continuously updates its discounted cumulative reward by combining the instant reward and the experience reward until convergence. After convergence, the user can make the best offloading decision in each state.

The rest of this paper is organized as follows. Section 2 presents the system model of WiFi offloading in heterogeneous networks. Section 3 builds the Q-learning model, defines the reward function based on AHP and TOPSIS, and gives the specific steps of the WiFi offloading algorithm. Section 4 presents and analyses the simulation results. Finally, Section 5 concludes the paper.

2. System Model

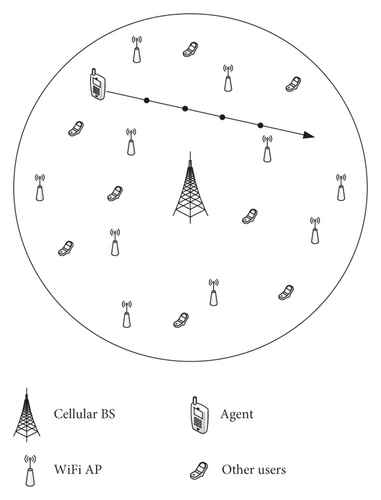

The system model is shown in Figure 1. A cellular base station is located at the center of a cell of radius $r_{cell}$. There are $N_{AP}$ WiFi APs in the cell, denoted $AP_k$, $k \in \{1, 2, \dots, N_{AP}\}$, so the cell is covered by overlapping cellular and WiFi networks. These networks are divided into valid and invalid networks: if the user's throughput when accessing a network exceeds a threshold, the network is regarded as valid; otherwise, it is invalid. The mobile multimode terminal is the agent of Q-learning and can transmit data through both the cellular network and the WiFi networks. The agent moves in a straight line inside the cell, and the positions it passes are denoted $\text{Pos}_i$, $i \in \{1, 2, \dots, N_p\}$, where $N_p$ is the total number of positions passed. As the agent moves, the network environment, such as channel quality and available bandwidth, changes constantly, and so do the network attributes experienced by the user. This paper regards the four network attributes of the agent at different locations (throughput, power consumption, cost, and delay) as the state in Q-learning. In addition, the offloading decision is regarded as the action in Q-learning: mobile data are offloaded if the agent chooses a WiFi network.

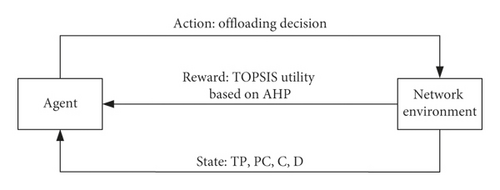

Figure 2 shows the structure of the Q-learning-based algorithm. The agent first collects network environment information, filters out invalid networks, and calculates four attributes of each valid network: user throughput (TP), terminal power consumption (PC), user cost (C), and communication delay (D). AHP is used to calculate the weights of the four attributes for different services, and TOPSIS is used to calculate the instant reward obtained by selecting each network in the current state. Combining the instant reward and the experience reward, the agent performs Q-learning iterations and updates the Q-table. Finally, the offloading decision is made based on the discounted cumulative rewards stored in the Q-table.
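As a minimal sketch of the filtering step, assuming each candidate's throughput has already been computed, the function below keeps only valid networks. The function name and the network identifiers (`"BS"`, `"AP1"`, ...) are hypothetical; the two thresholds are the cellular and WiFi user throughput thresholds listed in Table 3.

```python
# Hypothetical per-network-type thresholds in kb/s (cellular and WiFi columns of Table 3).
CELLULAR_THRESHOLD_KBPS = 10.0
WIFI_THRESHOLD_KBPS = 12.0


def filter_valid_networks(throughput_kbps):
    """Return only the valid candidate networks.

    throughput_kbps maps a network identifier to the user's throughput on it.
    "BS" denotes the cellular base station; any other key ("AP1", "AP2", ...)
    is treated as a WiFi AP. A network is valid when its throughput is
    strictly greater than the threshold for its network type.
    """
    valid = {}
    for net, tp in throughput_kbps.items():
        threshold = CELLULAR_THRESHOLD_KBPS if net == "BS" else WIFI_THRESHOLD_KBPS
        if tp > threshold:
            valid[net] = tp
    return valid


# AP2 falls below the WiFi threshold, so it is removed before Q-learning.
print(filter_valid_networks({"BS": 850.0, "AP1": 2400.0, "AP2": 9.5}))
# → {'BS': 850.0, 'AP1': 2400.0}
```

Removing invalid networks up front shrinks the action set that Q-learning has to explore.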

The operator charges the agent whether it accesses the cellular BS or a WiFi AP. In this paper, the unit price charged per second after the agent accesses a network at the i-th location is defined as the user cost (C), which represents the relative price of the two networks. Offloading to WiFi is usually cheaper.

Communication delay is another important indicator that users apply to evaluate a network. In this paper, the communication delay (D) is defined as the transmission delay after the agent accesses a network at the i-th location. Because WiFi uses CSMA/CA (Carrier Sense Multiple Access with Collision Avoidance), the delay is longer when the user accesses WiFi, making it larger than the delay of accessing the BS.

This paper considers the above four network attributes to calculate the satisfaction of the agent in the whole mobile scenario.

First, we calculate the average of each of the four network attributes over the $N_p$ locations.
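Written out, this averaging step is the arithmetic mean over the visited positions. The per-location symbols $TP_i$, $PC_i$, $C_i$, and $D_i$ below are our own labels for the attribute values at position $\text{Pos}_i$, since the paper's original symbols are not recoverable from this section:

$$\overline{TP} = \frac{1}{N_p}\sum_{i=1}^{N_p} TP_i, \quad \overline{PC} = \frac{1}{N_p}\sum_{i=1}^{N_p} PC_i, \quad \overline{C} = \frac{1}{N_p}\sum_{i=1}^{N_p} C_i, \quad \overline{D} = \frac{1}{N_p}\sum_{i=1}^{N_p} D_i$$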

3. WiFi Offloading Algorithm Based on Q-Learning and MADM

For the mobile user scenario where the cellular BS and WiFi APs coexist, we propose a WiFi offloading algorithm based on Q-learning and MADM. Considering both current network conditions and access history, Q-learning is used to make the offloading decision, which not only avoids offloading to poor networks accessed previously but also actively selects the best WiFi AP according to the maximum discounted cumulative reward. MADM is an effective decision-making method when many factors must be considered. According to [16], attribute weights and network utility values are of great importance in MADM. We use two MADM algorithms in this paper, namely AHP and TOPSIS. AHP defines the weight of each network attribute according to the specific service type, and TOPSIS gives the instant reward of Q-learning based on network utility. The agent collects various attributes of the heterogeneous network and continuously updates its discounted cumulative reward by combining the instant reward and the experience reward. After convergence, the user can make the best offloading decision in each state.

3.1. Q-Learning

Q-learning is a widely used reinforcement learning algorithm that treats learning as a process of trial, evaluation, and feedback. Q-learning consists of three elements: state, action, and reward. The state set is denoted S and the action set A, and the purpose of Q-learning is to obtain the optimal action selection strategy Π∗ that maximizes the agent's discounted cumulative reward [11]. In state s ∈ S, the agent selects an action a ∈ A to act on the environment. After accepting the action, the environment changes and feeds back an instant reward Rw(s, a) to the agent. The agent then selects the next action a′ ∈ A based on the reward and its own experience, which in turn affects the discounted cumulative reward Rc(s) and the next state s′. It has been proved that for any finite Markov decision process, Q-learning converges to an optimal action selection strategy Π∗ that maximizes the discounted cumulative reward in every state s, provided that every state-action pair is visited infinitely often and the learning rate decays appropriately [17].

- (1) State set S: the locations the agent passes and the network environment around each location, that is, $S = \{s_i = (\text{Pos}_i, \text{Env}_i) \mid i \in \{1, 2, \dots, N_p\}\}$, where $\text{Pos}_i$ is the location of the agent and $\text{Env}_i$ represents the network attributes at location i, including throughput, power consumption, cost, and delay
- (2) Action set A: selecting an action is regarded as an offloading decision, that is, $A = \{a_k \mid k \in \{0, 1, 2, \dots, N_{AP}\}\}$, where $a_0$ indicates that the terminal accesses the cellular BS and $a_k$, $k \in \{1, 2, \dots, N_{AP}\}$, indicates that the terminal is offloaded to the WiFi AP with index k
- (3) Reward function Rw(s, a): the utility value of the TOPSIS algorithm represents the instant reward that the user obtains after attempting to access a certain network
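The Q-table update itself is referenced later as the paper's equation (24), which is not reproduced in this section. The sketch below assumes the standard one-step Q-learning update, with the learning rate μ (`mu`) and the experience-reward discount δ (`delta`) defaulting to the values used in Section 4; the function name `q_update` and the dictionary-based Q-table are illustrative choices, not the authors' code.

```python
from collections import defaultdict


def q_update(q, s, a, reward, s_next, actions, mu=0.8, delta=0.1):
    """Apply one standard Q-learning update to the table q in place.

    Q(s, a) <- (1 - mu) * Q(s, a) + mu * (reward + delta * max_a' Q(s', a'))
    where reward is the instant reward Rw(s, a), mu is the learning rate, and
    delta discounts the experience reward max_a' Q(s', a').
    """
    best_next = max(q[(s_next, a_next)] for a_next in actions)
    q[(s, a)] = (1 - mu) * q[(s, a)] + mu * (reward + delta * best_next)
    return q[(s, a)]


# Q-table as a dict keyed by (state, action); unseen entries start at 0.
q = defaultdict(float)
actions = [0, 1, 2]          # a0 = cellular BS, a1 and a2 = WiFi APs
q[(1, 2)] = 0.5              # experience already gathered at the next state
print(q_update(q, s=0, a=1, reward=0.9, s_next=1, actions=actions))
```

Here the new value is 0.8 × (0.9 + 0.1 × 0.5), roughly 0.76: the instant reward dominates because δ is small.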

3.2. AHP Algorithm

Step 1: construct the paired comparison matrix according to the user service type j and the attributes to be analysed. Since this paper considers four attributes (throughput, power consumption, cost, and delay), the paired comparison matrix B can be expressed as

$$B = \begin{bmatrix} 1 & b_{12} & b_{13} & b_{14} \\ b_{21} & 1 & b_{23} & b_{24} \\ b_{31} & b_{32} & 1 & b_{34} \\ b_{41} & b_{42} & b_{43} & 1 \end{bmatrix}$$

where $b_{mn}$ represents the relative importance of the m-th network attribute compared with the n-th. Each $b_{mn}$ takes an integer value from 1 to 9 or the reciprocal of such an integer. Furthermore, $b_{mn} = 1/b_{nm}$, so every diagonal entry equals 1.
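The reciprocity rule means that B is fully determined by its upper triangle. The helper below is hypothetical (not from the paper) and illustrates this; exact fractions keep $b_{mn} \cdot b_{nm} = 1$ exact. The example judgments are the stream-service entries of Table 2, in the attribute order TP, PC, C, D.

```python
from fractions import Fraction

# Saaty's 1-9 scale: every judgment must be one of these values or a reciprocal.
SCALE = {Fraction(k) for k in range(1, 10)}


def reciprocal_matrix(upper):
    """Build a paired comparison matrix B from its upper-triangle judgments.

    upper maps (m, n) with m < n to b_mn. The diagonal is set to 1 and the
    lower triangle is filled with b_nm = 1 / b_mn.
    """
    size = 1 + max(n for _, n in upper)
    b = [[Fraction(1)] * size for _ in range(size)]
    for (m, n), value in upper.items():
        value = Fraction(value)
        if m >= n:
            raise ValueError("only upper-triangle entries (m < n) are expected")
        if value not in SCALE and 1 / value not in SCALE:
            raise ValueError(f"b_{m}{n} = {value} is not on the 1-9 scale")
        b[m][n] = value
        b[n][m] = 1 / value
    return b


# Stream-service judgments from Table 2, attribute order TP, PC, C, D.
stream_upper = {(0, 1): 3, (0, 2): 2, (0, 3): 5, (1, 2): 1, (1, 3): 2, (2, 3): 3}
for row in reciprocal_matrix(stream_upper):
    print([str(v) for v in row])
```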

Step 2: calculate the weight of each network attribute for the given service type. According to [19], B is a positive reciprocal matrix with multiple eigenvalue and eigenvector pairs (λ, V):

$$BV = \lambda V$$

where λ is an eigenvalue of B and V is an eigenvector corresponding to λ. The eigenvector corresponding to the largest eigenvalue λ∗ is selected and normalized so that its entries sum to 1; the normalized vector gives the AHP weights of the four attributes.
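Because B is positive, its largest eigenvalue λ∗ has an eigenvector with positive entries, and power iteration is one simple way to approximate it. The sketch below assumes power iteration (the paper does not say which eigen-solver it uses); `ahp_weights` is a hypothetical name, and the example matrix is the stream-service matrix of Table 2.

```python
def ahp_weights(b, iterations=100, tol=1e-12):
    """Approximate the principal eigenpair of a positive matrix by power iteration.

    Returns (weights, lambda_max): the principal eigenvector normalized so its
    entries sum to 1 (the AHP weights) and the largest eigenvalue lambda*.
    """
    n = len(b)
    w = [1.0 / n] * n
    lam = float(n)
    for _ in range(iterations):
        bw = [sum(float(b[i][j]) * w[j] for j in range(n)) for i in range(n)]
        # Because w sums to 1, sum(B w) converges to lambda* as w converges.
        lam = sum(bw)
        new_w = [x / lam for x in bw]
        converged = max(abs(x - y) for x, y in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    return w, lam


# Stream-service matrix from Table 2 (order TP, PC, C, D).
stream = [[1, 3, 2, 5], [1/3, 1, 1, 2], [1/2, 1, 1, 3], [1/5, 1/2, 1/3, 1]]
weights, lam = ahp_weights(stream)
print([round(x, 3) for x in weights], round(lam, 3))
```

For this matrix, throughput receives the largest weight and delay the smallest, and λ∗ is only slightly above the matrix order 4, which indicates a nearly consistent matrix.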

Step 3: check the consistency of the paired comparison matrix. The weights calculated in Step 2 are reliable only if B is sufficiently consistent. A perfectly consistent matrix satisfies $b_{mn} = b_{mk} b_{kn}$ for all m, k, and n, but subjective judgments generally violate this, so accurate AHP weights usually cannot be obtained in a single pass. It is therefore necessary to check the consistency of the comparison matrix to ensure that the subjective weights are reasonable [15]. This paper uses the consistency ratio CR to measure the rationality of B:

$$CI = \frac{\lambda^* - N}{N - 1}, \qquad CR = \frac{CI}{RI}$$

where N is the order of B (here N = 4) and RI is the random index listed in Table 1. According to AHP theory, if CR > 0.1, B is unacceptable, and it is necessary to return to Step 1 and adjust B until CR ≤ 0.1. The accurate AHP weights of the four network attributes are then obtained.

Table 1: Random index RI for paired comparison matrices of order 1 to 9.

| Matrix order | RI |
|---|---|
| 1 | 0.00 |
| 2 | 0.00 |
| 3 | 0.58 |
| 4 | 0.90 |
| 5 | 1.12 |
| 6 | 1.24 |
| 7 | 1.32 |
| 8 | 1.41 |
| 9 | 1.45 |
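A sketch of the consistency check in Step 3, assuming the weights w come from Step 2. Here λ∗ is estimated as the mean of $(Bw)_n / w_n$, which equals λ∗ exactly when w is the principal eigenvector. The RI values are those of Table 1; the function names are hypothetical.

```python
# Random index RI from Table 1, keyed by matrix order.
RANDOM_INDEX = {1: 0.00, 2: 0.00, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def consistency_ratio(b, w):
    """Return CR = CI / RI for paired comparison matrix b with AHP weights w."""
    n = len(b)
    if RANDOM_INDEX[n] == 0.0:
        return 0.0  # matrices of order 1 or 2 are always consistent
    bw = [sum(b[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam = sum(bw[i] / w[i] for i in range(n)) / n   # estimate of lambda*
    ci = (lam - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


def is_acceptable(b, w, threshold=0.1):
    """B is accepted when CR <= 0.1; otherwise it must be revised in Step 1."""
    return consistency_ratio(b, w) <= threshold


# A perfectly consistent matrix built from known weights has CR = 0.
w = [0.4, 0.3, 0.2, 0.1]
consistent = [[wi / wj for wj in w] for wi in w]
print(consistency_ratio(consistent, w))
```

A cyclic judgment such as "A beats B 9:1, B beats C 9:1, C beats A 9:1" yields a CR far above 0.1 and would be rejected.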

3.3. TOPSIS Algorithm

Step 1: establish a standardized decision matrix H. A candidate network attribute matrix $X = [x_{ln}]$ is constructed from the network attribute values calculated in Section 2, where l indexes the candidate networks and n indexes the network attributes. Each column of X is normalized to obtain the standardized decision matrix $H = [h_{ln}]$, where $h_{ln}$ is the normalized value of $x_{ln}$.

Step 2: establish a weighted decision matrix Y. Each column of H is multiplied by the corresponding AHP weight obtained in Section 3.2, giving the weighted decision matrix $Y = [y_{ln}]$.

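Step 3: calculate the distance between each candidate solution and the two extreme solutions. First, determine the ideal solution and the least ideal solution. Since throughput is a positive attribute and power consumption, cost, and delay are negative attributes, the ideal solution Solution+ takes the largest weighted throughput and the smallest weighted power consumption, cost, and delay among the candidates (attribute order n = 1, 2, 3, 4 for throughput, power consumption, cost, and delay):

$$\text{Solution}^+ = \left(\max_l y_{l1},\ \min_l y_{l2},\ \min_l y_{l3},\ \min_l y_{l4}\right)$$

Conversely, the least ideal solution is

$$\text{Solution}^- = \left(\min_l y_{l1},\ \max_l y_{l2},\ \max_l y_{l3},\ \max_l y_{l4}\right)$$

The Euclidean distances between the l-th candidate network and Solution+ and Solution− are then calculated.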

Step 4: calculate the instant reward after the user selects a candidate network. In this paper, $Rw_l$ is the relative closeness of the candidate network to the ideal solution, that is, its distance to Solution− divided by the sum of its distances to Solution+ and Solution−.

The larger the distance to Solution− and the smaller the distance to Solution+, the closer $Rw_l$ is to 1, indicating that the candidate is closer to the ideal solution and the reward is larger. Conversely, a small distance to Solution− and a large distance to Solution+ indicate that the network accessed by the agent is poor, and $Rw_l$ approaches 0.
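The four TOPSIS steps can be sketched as follows. The paper's normalization formula is not shown in this section, so the sketch assumes the common vector normalization $h_{ln} = x_{ln} / \sqrt{\sum_l x_{ln}^2}$. The attribute order (TP, PC, C, D) matches Table 2. The function name, the attribute values, and the two weight vectors in the example are made up for illustration; they are not the paper's data.

```python
import math


def topsis_rewards(x, weights, benefit):
    """Instant reward Rw_l of each candidate network via TOPSIS.

    x[l][n]: value of attribute n for candidate network l.
    weights[n]: AHP weight of attribute n.
    benefit[n]: True for a positive attribute (throughput), False for a
    negative one (power consumption, cost, delay).
    Assumes vector normalization of each column (Step 1).
    """
    rows, cols = len(x), len(x[0])
    # "or 1.0" avoids division by zero for an all-zero column.
    norms = [math.sqrt(sum(x[l][n] ** 2 for l in range(rows))) or 1.0
             for n in range(cols)]
    # Steps 1-2: normalized matrix H, then weighted matrix Y.
    y = [[weights[n] * x[l][n] / norms[n] for n in range(cols)] for l in range(rows)]
    # Step 3: ideal (Solution+) and least ideal (Solution-) solutions.
    columns = list(zip(*y))
    ideal = [max(c) if benefit[n] else min(c) for n, c in enumerate(columns)]
    anti_ideal = [min(c) if benefit[n] else max(c) for n, c in enumerate(columns)]
    rewards = []
    for row in y:
        d_plus = math.dist(row, ideal)
        d_minus = math.dist(row, anti_ideal)
        # Step 4: relative closeness to the ideal solution, in [0, 1].
        total = d_plus + d_minus
        rewards.append(d_minus / total if total > 0 else 1.0)
    return rewards


# Hypothetical candidates: row 0 = cellular BS, row 1 = one WiFi AP.
# Columns: throughput (kb/s), power (mW), cost (per s), delay (ms).
x = [[800, 300, 0.8, 30], [2400, 250, 0.1, 120]]
benefit = [True, False, False, False]
print(topsis_rewards(x, [0.49, 0.19, 0.23, 0.09], benefit))  # throughput-heavy weights
print(topsis_rewards(x, [0.10, 0.05, 0.11, 0.74], benefit))  # delay-heavy weights
```

With these illustrative numbers, the WiFi AP earns the higher reward under the throughput-heavy weights, and the cellular BS earns the higher reward under the delay-heavy weights.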

3.4. Algorithm Steps

In addition, this paper adopts the ε-greedy strategy: in each action selection of Q-learning, the agent explores with a small probability ε, that is, it offloads to a randomly selected network. Without ε-greedy, the cumulative reward of a suboptimal action may keep growing, so the user keeps choosing that action and reinforcing it instead of finding a better one. In other words, the core of ε-greedy is exploration: continued exploration keeps a nonzero probability of finding the optimal action. Exploration may reduce user satisfaction in the short term, but it enables better action choices later and ultimately yields the highest user satisfaction. Based on the above analysis, Algorithm 1 gives the WiFi offloading algorithm based on Q-learning and MADM.
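A minimal sketch of ε-greedy selection over one row of the Q-table, with actions indexed 0 for the cellular BS and k ≥ 1 for WiFi AP k. The function name and the optional `rng` argument (for reproducible draws) are our own choices.

```python
import random


def epsilon_greedy(q_row, epsilon, rng=random):
    """Choose an action index for the current state.

    With probability epsilon the agent explores by picking a random network;
    otherwise it exploits the network with the largest Q-value.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    return max(range(len(q_row)), key=lambda a: q_row[a])


# With epsilon = 0 the choice is purely greedy: AP1 (index 1) here.
print(epsilon_greedy([0.1, 0.7, 0.3], 0.0))
```

With ε = 0.01 as in Section 4, roughly one decision in a hundred is exploratory.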

Algorithm 1: WiFi offloading algorithm based on Q-learning and MADM in heterogeneous networks.

Input: state set S, action set A, paired comparison matrix B, candidate network attribute matrix X, and iteration limit Z

Output: trained Q-table, best action selection strategy Π∗, and user satisfaction

- (1) Calculate attribute weights based on B
- (2) For s ∈ S, a ∈ A
- (3) Q(s, a) = 0
- (4) End For
- (5) Randomly choose $s_{ini}$ ∈ S as the initialization state
- (6) While iteration < Z
- (7) For each state
- (8) If rand < ε
- (9) Randomly choose an action
- (10) Else
- (11) Select the action corresponding to the maximum Q value in this state
- (12) End If
- (13) Perform a
- (14) Calculate $Rw_t(s, a)$ according to equation (23)
- (15) Observe the next state s′
- (16) Update the Q-table according to equation (24)
- (17) End For
- (18) End While
- (19) Record the action corresponding to the maximum Q value in each state into Π∗
- (20) Calculate user satisfaction $\Phi_{sat}$
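The loop of Algorithm 1 can be sketched on a precomputed reward table. This is a toy sketch under stated assumptions: the instant rewards Rw(s, a) are given as input (in the paper they come from TOPSIS); the route is treated as a closed loop so that every position has a successor; the next state does not depend on the action; and the update is the standard Q-learning rule. Step (20) is omitted because the satisfaction function is not given in this section. All names and reward values are hypothetical.

```python
import random


def train_offloading_policy(rewards, z=2000, mu=0.8, delta=0.1, epsilon=0.01, seed=0):
    """Toy version of Algorithm 1 on a fixed reward table.

    rewards[s][a] is the instant reward Rw(s, a) at position s for action a
    (a = 0: cellular BS, a = k >= 1: WiFi AP k). Assumptions: the route is a
    closed loop, so the state after position s is (s + 1) mod Np, and the
    next state does not depend on the action.
    Returns the Q-table and the greedy policy Pi*.
    """
    rng = random.Random(seed)
    n_states, n_actions = len(rewards), len(rewards[0])
    q = [[0.0] * n_actions for _ in range(n_states)]       # steps (2)-(4)
    s_ini = rng.randrange(n_states)                        # step (5)
    for _ in range(z):                                     # step (6)
        for offset in range(n_states):                     # step (7)
            s = (s_ini + offset) % n_states
            if rng.random() < epsilon:                     # steps (8)-(12)
                a = rng.randrange(n_actions)
            else:
                a = max(range(n_actions), key=lambda k: q[s][k])
            r = rewards[s][a]                              # steps (13)-(14)
            s_next = (s + 1) % n_states                    # step (15)
            target = r + delta * max(q[s_next])
            q[s][a] = (1 - mu) * q[s][a] + mu * target     # step (16)
    policy = [max(range(n_actions), key=lambda k: q[s][k])  # step (19)
              for s in range(n_states)]
    return q, policy


# Hypothetical rewards for 4 positions and 3 actions (BS, AP1, AP2).
R = [[0.2, 0.9, 0.4], [0.8, 0.3, 0.1], [0.3, 0.2, 0.95], [0.5, 0.1, 0.2]]
q_table, policy = train_offloading_policy(R, epsilon=0.1, seed=1)
print(policy)
```

Because the next state here does not depend on the action, the learned policy simply picks the highest-reward network at each position. In the paper's setting the state also carries the network environment, so accumulated experience plays a larger role.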

4. Numerical and Simulation Results

As shown in Figure 1, the simulation scenario is a circular cell with radius $r_{cell}$ = 500 m. The cellular BS is located at the cell center, and $N_{AP}$ WiFi APs are randomly distributed inside the cell. The power spectral density $N_0$ of the additive white Gaussian noise is −174 dBm/Hz, and the reference distance $d_0$ is 1 m. For $F_{Rayleigh}(\theta, \beta)$, the mean θ = 0 and the variance β = 5 dB. The learning rate μ of Q-learning is set to 0.8, the discount factor δ of the experience reward is set to 0.1, and ε in ε-greedy is set to 0.01. In AHP, the number of network attributes is N = 4, so the random index RI = 0.90 [15] (Table 1). The paired comparison matrices B of the different services are shown in Table 2; they reflect the general requirements of each service and are based on expert opinion. The remaining parameters are shown in Table 3.

Table 2: Paired comparison matrices B for stream and conversation services.

| Network attribute | Stream: TP | Stream: PC | Stream: C | Stream: D | Conversation: TP | Conversation: PC | Conversation: C | Conversation: D |
|---|---|---|---|---|---|---|---|---|
| TP | 1 | 3 | 2 | 5 | 1 | 2 | 1 | 1/9 |
| PC | 1/3 | 1 | 1 | 2 | 1/2 | 1 | 1/3 | 1/9 |
| C | 1/2 | 1 | 1 | 3 | 1 | 3 | 1 | 1/9 |
| D | 1/5 | 1/2 | 1/3 | 1 | 9 | 9 | 9 | 1 |

Table 3: Simulation parameters.

| Simulation parameter | Cellular network | WiFi network |
|---|---|---|
| User cost (relative price per second) | 0.8 | 0.1 |
| Communication delay (ms) | 25 to 50 | 100 to 150 |
| Bandwidth W (MHz) | 4 to 6 | 10 to 12 |
| Path loss $L_0$ at $d_0$ (dB) | 5.27 | 8 |
| Terminal fixed power consumption $P_0$ (mW) | 10 | 10 |
| Minimum received power (dBm) | −110 | −100 |
| User throughput threshold (kb/s) | 10 | 12 |
| Path loss exponent α | 3.76 | 4 |

First, we analyse the performance of the proposed algorithm under stream service. Applying AHP to the stream matrix in Table 2 gives throughput the largest weight and delay the smallest. For a streaming media service such as watching a video, throughput matters most and delay least. Because a video is usually large (500 MB, 1 GB, or more), throughput must be high enough to support caching the video. The user equipment then only needs to read the precached data to play it, so the service is not real-time and does not require low delay.

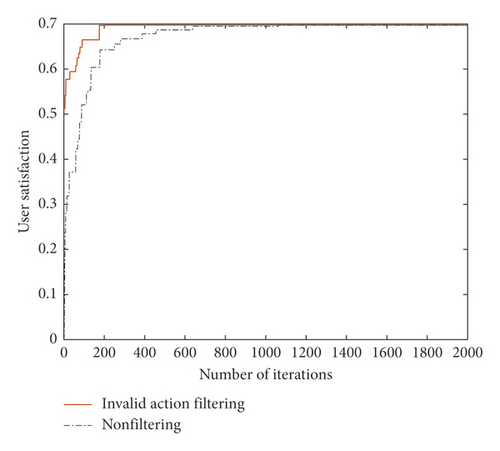

Figure 3 compares the convergence of the WiFi offloading algorithm under stream service with and without filtering of invalid actions. Advance filtering means that invalid networks, whose actual throughput is below the throughput threshold, are removed before Q-learning. Assume $N_{AP}$ = 30 and that the user passes $N_p$ = 10 positions. Both cases are trained by Q-learning in the same experimental scenario, and their convergence is observed. Because action selection in Q-learning is discrete, user satisfaction jumps whenever the action selection strategy changes. As Figure 3 shows, filtering out invalid networks in advance greatly accelerates the convergence of Q-learning.

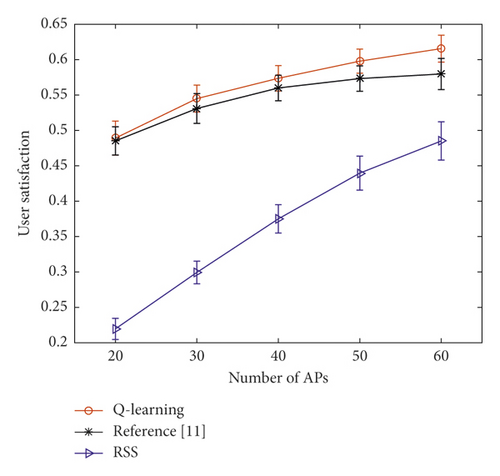

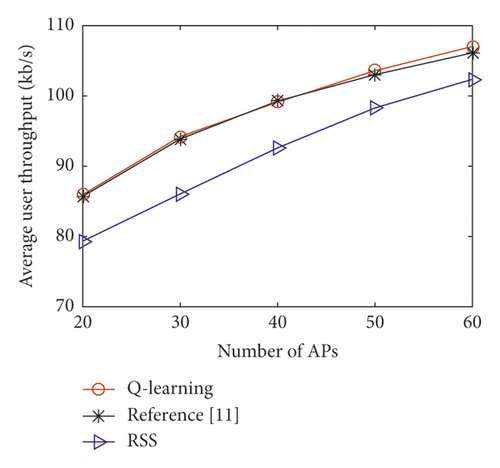

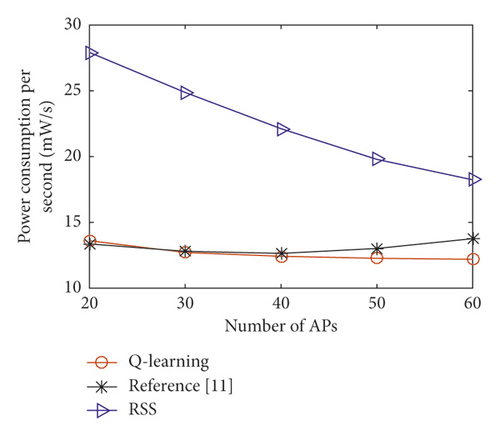

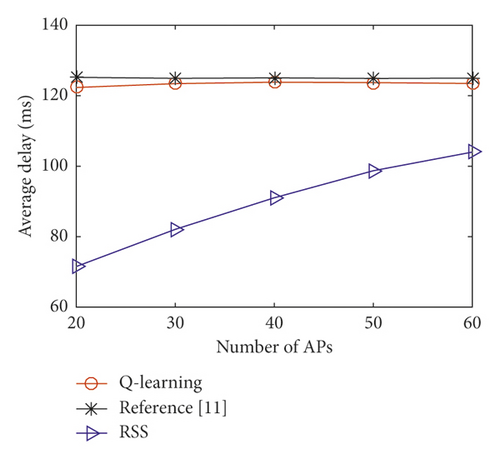

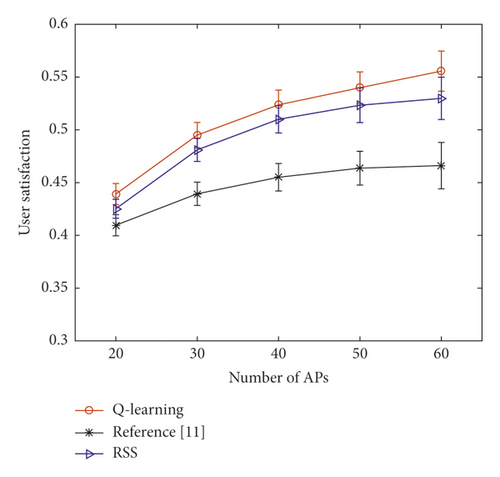

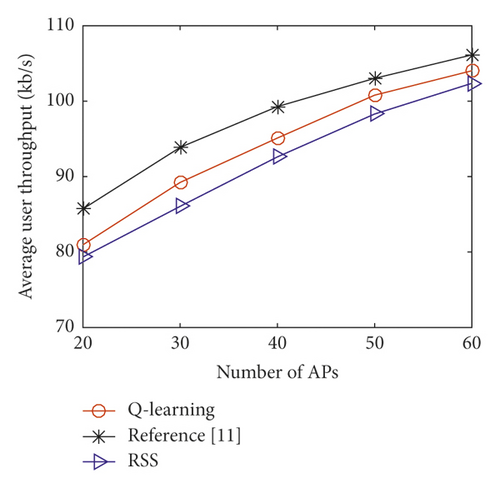

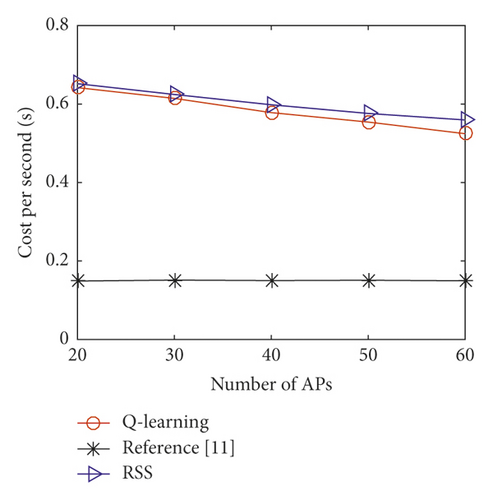

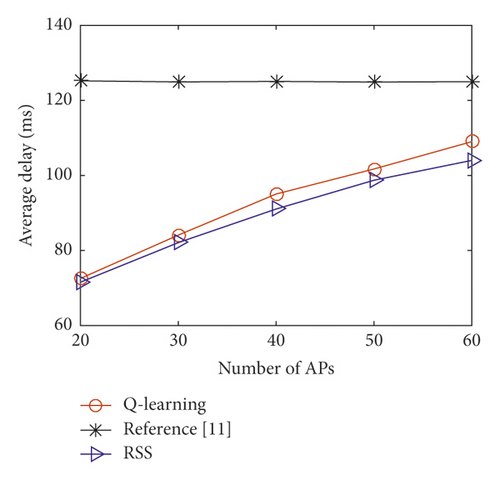

Figures 4 and 5 compare the proposed algorithm, Fakhfakh and Hamouda's algorithm [11], and the RSS (received signal strength) algorithm in terms of user satisfaction, throughput, power consumption, cost, and delay under stream service. The AP deployment is randomly regenerated 1000 times and the results are averaged to eliminate randomness. The number of positions passed by the user is $N_p$ = 10, and the number of WiFi APs varies from 20 to 60. As Figure 4 shows, the proposed WiFi offloading algorithm achieves higher user satisfaction than the other two algorithms. The main difference between the proposed algorithm and [11] is the reward function of Q-learning. Fakhfakh and Hamouda's algorithm [11] aims to minimize the residence time in the cellular network and optimizes it by Q-learning, but its reward function considers only SINR, handover delay, and AP load, ignoring attributes directly related to user QoS such as terminal power consumption, user cost, and communication delay. The RSS algorithm considers only the received signal strength, and the terminal automatically accesses the network with the largest RSS, so its user satisfaction is lower. The proposed Q-learning algorithm not only considers the attributes directly related to user QoS but also uses two MADM algorithms to capture the intrinsic relationship among these attributes, which yields a more reasonable reward function and the highest user satisfaction. As Figure 5 shows, the proposed algorithm performs similarly to [11] in user throughput. This is because Fakhfakh and Hamouda's algorithm [11] treats SINR, which directly affects throughput, as the most important term of its reward function, while under stream service throughput accounts for almost half of the total attribute weight; hence the two algorithms perform similarly in throughput.
Since the other two algorithms do not consider power consumption and cost, the proposed algorithm performs better on these two attributes. The RSS algorithm accesses the network with the highest received power. In this scenario, as long as the terminal is not too far from the cellular BS, the RSS of the cellular network is the largest, so fewer users are offloaded to WiFi. Because the WiFi network uses the unlicensed band, the bandwidth available to a user is usually larger than that of the cellular network (Table 3), so the throughput of the RSS algorithm is lower. Because the delay of the cellular network is usually lower than that of the WiFi network, the RSS algorithm performs best on delay. However, the weight of delay under stream service is very low, since the user does not notice the delay of precached data when watching videos or listening to music. As a result, although the proposed algorithm is worse than the RSS algorithm in delay, its user satisfaction is much higher.

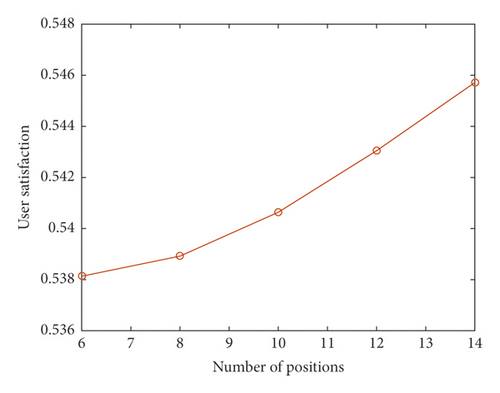

Figure 6 shows user satisfaction versus the number of positions passed by the agent, averaged over 1000 random AP deployments to eliminate randomness. The number of WiFi APs is $N_{AP}$ = 30, and the terminal passes through 6, 8, 10, 12, and 14 positions. User satisfaction increases with the number of positions: more positions mean more Q-learning states and more opportunities for the agent to actively select the optimal network for offloading.

Figures 7 and 8 compare the proposed algorithm, Fakhfakh and Hamouda's algorithm [11], and the RSS algorithm in terms of user satisfaction, throughput, power consumption, cost, and delay under conversation service. The number of positions passed by the user is $N_p$ = 10, and the number of WiFi APs varies from 20 to 60. Applying AHP to the conversation matrix in Table 2 assigns by far the largest weight to communication delay and much smaller weights to the other three attributes: for a conversation service such as a voice call, long waiting times drastically degrade QoS. As Figure 7 shows, the proposed WiFi offloading algorithm achieves higher user satisfaction than the other two algorithms. Fakhfakh and Hamouda's algorithm [11] does not consider communication delay, so its satisfaction is the lowest. As mentioned above, the RSS algorithm usually makes the terminal access the cellular BS, which has a higher transmit power and a lower delay, so its satisfaction is higher than that of [11]. As Figure 8 shows, the proposed algorithm outperforms the RSS algorithm in throughput, power consumption, and cost, while its delay is close to that of the RSS algorithm. Because delay is the most important attribute under conversation service, the delay of the proposed algorithm approaches that of the RSS algorithm; because the other attributes are also considered, a few users are offloaded to WiFi, so its delay is slightly higher than that of the RSS algorithm.

5. Conclusion

For the heterogeneous network scenario where cellular and WiFi networks overlap, this paper establishes a WiFi offloading model for a mobile terminal and uses a Markov model to describe changes in the available bandwidth. Four network attributes, namely user throughput, terminal power consumption, user cost, and communication delay, are considered to define a user satisfaction function. AHP is used to calculate the attribute weights, and TOPSIS is used to obtain the instant rewards when the user accesses the cellular network or offloads to a WiFi network. By using Q-learning to update the discounted cumulative rewards from instant and experience rewards, the user can make the optimal offloading decision and obtain the maximum satisfaction at each position it passes. Simulation results show that the proposed algorithm converges within a limited number of iterations and substantially improves user satisfaction compared with the baseline algorithms.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (61971239 and 61631020).

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.