Automatic Evaluation of Internal Combustion Engine Noise Based on an Auditory Model

Abstract

To improve the accuracy and efficiency of the objective evaluation of noise quality from internal combustion engines, an automatic noise quality classification model was constructed by combining an auditory model-based acoustic spectrum analysis method with a convolutional neural network (CNN). A band-pass filter was also designed in the model to automatically extract the features of the noise samples, which were then used as input data. The adaptive moment estimation (Adam) algorithm was used to optimize the weights of each layer in the network, and the trained model was used to evaluate sound quality. To assess the predictive performance of the CNN model with auditory input, a back propagation (BP) sound quality evaluation model based on psychoacoustic parameters was constructed and used as a control. When processing the label values of the samples, the correlation between the objective psychoacoustic parameters and the subjective evaluation scores was analyzed, and the four psychoacoustic parameters most strongly correlated with the subjective evaluation results were selected as the inputs of the BP model. The results showed that the CNN-based sound quality evaluation model predicted the sound quality of internal combustion engines more accurately than the BP model, and that, within the CNN classification model, the auditory spectrum input gave more accurate evaluation scores than the time-domain short-time average energy input.

1. Introduction

The loudness and sound quality of internal combustion engines directly affect the operator’s experience. Therefore, noise control and evaluation of internal combustion engines is a popular topic in the field of engineering. The objective evaluation methods of noise quality include linear and nonlinear evaluation and predictive models. In previous studies [1, 2], multiple linear regression theory was used to establish a sound quality classification model, the results of which agreed closely with the measured values of subjective evaluation. Huang et al. [3] proposed the use of psychoacoustic parameters as inputs to a genetic algorithm (GA)-wavelet neural network and back propagation (BP) neural network to predict sound quality, which was proven to be somewhat effective. In a study by Xu et al. [2], a nonlinear evaluation model based on an adaptive boosting (AdaBoost) algorithm was proposed. The predictive results of the model were compared with those of the GA-BP, GA-extreme learning machine (ELM), and GA-support vector machine (SVM) models, which showed that the proposed model improved the accuracy and precision. In the above models, the sound qualities were predicted using the objective psychoacoustic parameters of sound quality as inputs. The accuracy and precision of the predictions were the main focus of the evaluation model research. Auditory models are widely used in target recognition, fault diagnosis, and speech recognition. An underwater target echo recognition method based on auditory spectrum features was proposed [4]. The underwater target single-frequency echo recognition experiment showed better robustness. Under the same test conditions, the recognition rate was about 3% higher than that of a perceptual linear prediction (PLP) model. In a study by Wu et al. [5], the auditory spectrum feature extraction was applied to the fault diagnosis of broken teeth. 
A gammatone (GT) band-pass filter and phase adjustment were applied to signals to calculate the probability density of the amplitude at each extreme point. The results showed that the proposed method could accurately characterize and extract the fault features of broken teeth, and the extraction accuracy was high. Liang [6] proposed a binaural auditory model and applied it to the analysis and control of a car’s interior noise quality. The results showed that the interior noise quality of the car was greatly improved. At present, there have been no studies on the application of auditory models in the automatic evaluation of noise quality of internal combustion engines.

In this study, the noise samples of certain types of diesel engines were processed using a gammatone filter to establish an auditory model similar to human ears, and an automatic classification model of noise quality was constructed based on a convolutional neural network (CNN). We aimed to study the following: (1) time domain signal processing of noise samples, (2) auditory spectrum transformation of noise samples, and (3) applications of the auditory spectrum-based CNN in the classification of noise quality. The auditory model of sound samples was taken as the input, and the subjective evaluation score was taken as the output label for model training and optimization. Compared to the model using objective sound quality psychoacoustic parameters as the input, the proposed model exhibited higher classification accuracy.

2. Auditory Model

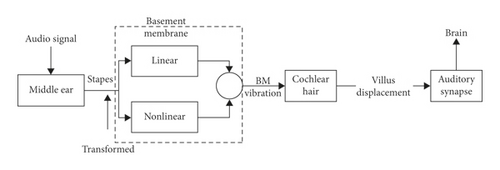

The human auditory system consists of several parts of the ear and brain. The auditory models widely used in signal analysis simulate the functions of the ear. Different locations on the basilar membrane inside the cochlea produce different traveling-wave deflections when stimulated by their corresponding frequencies, so the membrane behaves like a bank of band-pass filters; the nerve fibers that transmit the responses are called channels. Each channel corresponds to a specific point on the basilar membrane. In the human auditory system, each channel has an optimal frequency (center frequency), which defines the frequency of maximum excitation [7], as shown in Figure 1.

The value of b1 was set to 1.019 so that the physiological parameters of the basilar membrane could be simulated more closely.
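As an illustration of how b1 enters a gammatone channel, the following minimal sketch assumes the commonly used impulse response g(t) = t^(n−1) · exp(−2π · b1 · ERB(fc) · t) · cos(2π · fc · t), with the Glasberg and Moore equivalent rectangular bandwidth ERB(fc) = 24.7 (4.37 fc / 1000 + 1). The filter order n = 4, the zero phase, the peak normalization, and all function names are assumptions made for this sketch rather than details taken from the text.

```python
import math


def erb(fc_hz):
    """Equivalent rectangular bandwidth (Glasberg and Moore formula), in Hz."""
    return 24.7 * (4.37 * fc_hz / 1000.0 + 1.0)


def gammatone_impulse_response(fc_hz, fs_hz, duration_s=0.05, order=4, b1=1.019):
    """Peak-normalized gammatone impulse response for one channel.

    order=4 and zero phase are assumed; b1=1.019 is the value given in the text.
    """
    n_samples = int(round(duration_s * fs_hz))
    decay = 2.0 * math.pi * b1 * erb(fc_hz)  # envelope decay rate in 1/s
    response = []
    for k in range(n_samples):
        t = k / fs_hz
        envelope = t ** (order - 1) * math.exp(-decay * t)
        response.append(envelope * math.cos(2.0 * math.pi * fc_hz * t))
    peak = max(abs(v) for v in response)
    return [v / peak for v in response]


# Example: a 1 kHz channel sampled at 44 kHz (the sampling rate is illustrative).
h = gammatone_impulse_response(fc_hz=1000.0, fs_hz=44000.0)
```

A larger b1 widens the channel bandwidth and shortens the impulse response; a filter bank is obtained by repeating this for a set of center frequencies.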

3. Automatic Evaluation Method

The idea of unsupervised learning in machine learning was adopted for automatic evaluation. A CNN model was chosen to automatically extract the features of the input noise samples, and the parameters learned through training were applied to the online automatic evaluation system to continuously optimize the model and improve the classification accuracy.

3.1. Evaluation Based on CNN

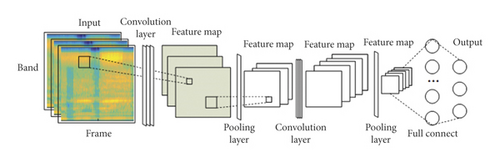

A CNN [9] is a deep feed-forward artificial neural network that contains convolution layers and pooling layers. It can extract the features of training samples under unsupervised conditions and realize a sparse representation of sample features, working on a principle similar to the way neurons in the animal visual cortex detect optical signals.

CNNs have been highly successful in image and speech recognition. The convolution layers of a CNN involve sparse interactions and parameter sharing, so that each convolution kernel can extract feature information along the time-frequency axes of the sample sound, which is directly related to sound quality perception.

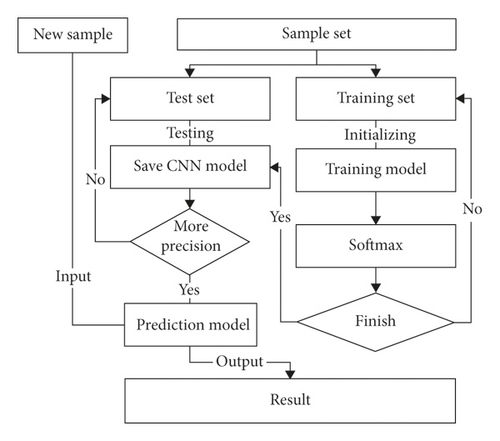

The preprocessed sound samples and subjective evaluation results were divided into two parts: a training set and a test set. The training set data were taken as the original input to train the CNN model. A Softmax classifier was used to obtain the probability that the current sample belonged to each class. The test set was used to validate the iteratively trained model, and the optimal model was selected and saved. The saved CNN classification model could then be used to predict the attributes of new samples. The process is shown in Figure 3.
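The two generic steps in this workflow, splitting the samples and turning class scores into probabilities, can be sketched as follows. The 80/20 split ratio, the random seed, and the function names are assumptions for illustration; the text does not state how the 90 samples were divided.

```python
import math
import random


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def split_samples(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples and split them into a training and a test set."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


# Example: 90 sample indices and a three-class score vector.
train_ids, test_ids = split_samples(range(90))
probabilities = softmax([1.0, 2.0, 3.0])
```

The class with the highest probability is taken as the predicted grade of the sample.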

3.2. Definition of CNN Evaluation Model

The convolution layer and the pooling layer appeared in pairs and could be stacked many times to obtain more abstract features. The final fully connected layers were used to combine the features of different frequency bands. The model is shown in Figure 4.

3.3. Training of CNN Evaluation Model

The training method used in the CNN model was basically the same as the back propagation training method used in the BP neural network [12]. The error calculation of each layer was updated layer by layer from back to front according to the chain rule. The upper layer of convolution layer l was pooling layer l + 1, and its error was calculated using the following equations.

4. Sound Quality Evaluation Based on Psychoacoustic Parameters

To test the predictive accuracy of the CNN evaluation method based on the auditory model, the widely used BP evaluation model with psychoacoustic parameter inputs was selected as the control model.

4.1. Sample Collection and Preprocessing

A HeadRec ASMR binaural head-recording microphone was used as the front-end equipment for audio acquisition, and 90 sound samples were collected as the test sample database. The database contained 30 groups of steady-state sound signals collected from three types of internal combustion engines (a Mitsubishi 4G6 MIVEC gasoline engine, a Toyota HR16DE gasoline engine, and a Hyundai D4BH diesel engine) at speeds ranging from 800 to 4500 rpm. The audio sampling frequency was 44 kHz, the frequency resolution was 1 Hz, and the recording length of each sample was 15 s. The sound quality values in the sample database were based on subjective evaluations by an assessment group. In the experiment, 25 students with normal hearing and 5 teachers of related majors were selected to form the sound quality assessment group, and the sound samples were rated on nine grades, where Grade 1 was the best and Grade 9 was the worst. The manual evaluation module of the automatic evaluation system developed in this study was used for the evaluation of the internal combustion engine noise quality.

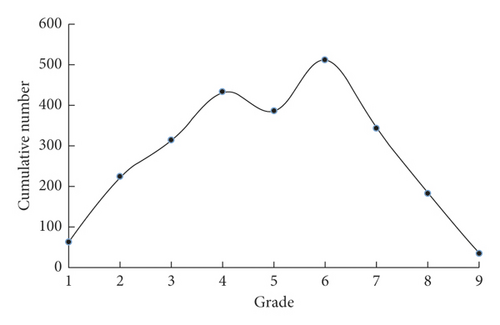

After the evaluation, Spearman correlation analysis of the evaluators’ rating results was carried out, and the 8% of the data found to be unreliable were removed. The distribution of evaluation results is shown in Figure 5. Most of the sample scores were within the range of 4–7 points, i.e., the grades ranged from “satisfactory” to “poor” in the subjective evaluation of sound quality.
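One way such a reliability screen could be carried out is sketched below: each evaluator's grades are compared, by Spearman rank correlation, with the mean grades of all other evaluators, and evaluators below a cutoff are flagged. The cutoff of 0.5, the comparison against the mean of the others, and the toy ratings are assumptions for illustration; the text does not specify the criterion that was used.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rho: linear correlation of the rank vectors (inputs must vary)."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def flag_unreliable(ratings, threshold=0.5):
    """Names of evaluators whose grades disagree with the consensus of the others."""
    flagged = []
    for name, scores in ratings.items():
        others = [s for other, s in ratings.items() if other != name]
        consensus = [sum(col) / len(col) for col in zip(*others)]
        if spearman(scores, consensus) < threshold:
            flagged.append(name)
    return flagged


# Toy data: grades (1-9) given by four hypothetical evaluators to five samples.
ratings = {
    "e1": [1, 3, 5, 7, 9],
    "e2": [2, 3, 5, 6, 8],
    "e3": [1, 4, 4, 7, 9],
    "e4": [9, 7, 5, 3, 1],  # ranks the samples in the opposite order
}
unreliable = flag_unreliable(ratings)
```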

4.2. Psychoacoustic Parameter Processing of Noise Samples

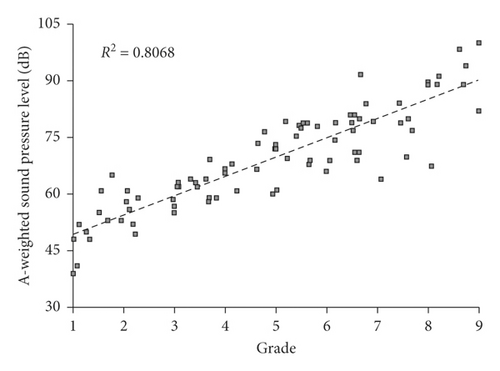

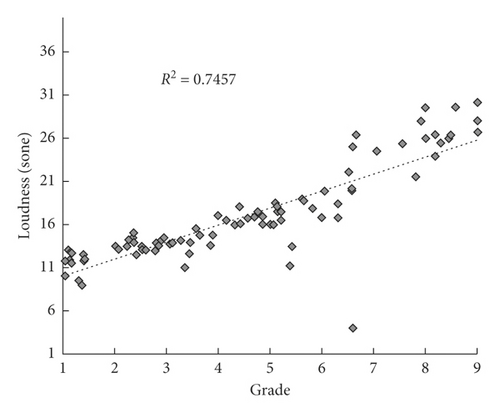

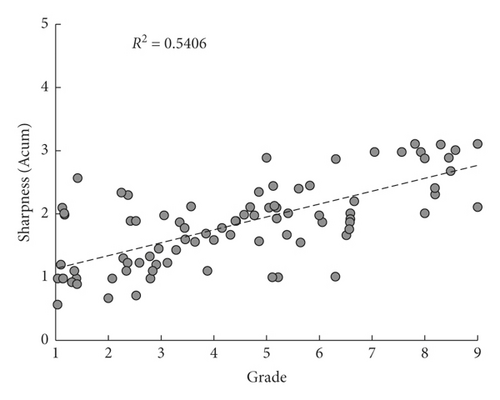

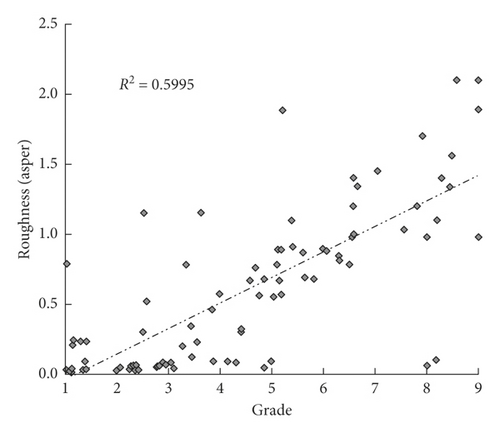

The psychoacoustic parameters [14] of the noise samples comprised eight parameters: the A-weighted sound pressure level (hereinafter referred to as A sound level), loudness, impulsiveness, sharpness, tonality, roughness, fluctuation strength, and articulation index (AI). To reduce the complexity and training time of the BP neural network, Pearson correlation analysis was used to obtain the correlation between each psychoacoustic parameter and the evaluation score, as shown in Figures 6–9.

The correlation fitting curves show that the correlation coefficients of four parameters, i.e., A sound level, loudness, sharpness, and roughness, with the evaluation score were all greater than 0.70. Table 1 shows that the correlation coefficients of the fluctuation strength and impulsiveness with the evaluation score were 0.563 and 0.346, respectively. The significance level (P ≤ 0.05) indicates that both the fluctuation strength and impulsiveness were linearly correlated with the evaluation score, but the correlation was weak. The correlations of the tonality and AI with the evaluation score were less than 0.31, which indicated that these two parameters were essentially uncorrelated with the evaluation score. The main reason was that the vibration noise of the vehicle’s internal combustion engine was insensitive to the sound quality, and the pitch values of internal combustion engines did not differ markedly across rotational speeds. Considering the complexity of the BP model, the training time, and the correlations between the objective parameters and the evaluation score, four parameters (A sound level, loudness, sharpness, and roughness) were selected as the input variables of the BP neural network.

| Parameters | Correlation coefficient |
|---|---|
| A sound level | 0.898 ∗∗ |
| Loudness | 0.863 ∗∗ |
| Sharpness | 0.735 ∗∗ |
| AI | 0.392 ∗ |
| Tonality | 0.282 |
| Fluctuation strength | 0.563 ∗ |
| Impulsiveness | 0.386 ∗ |
| Roughness | 0.774 ∗∗ |

- ∗∗P < 0.01; ∗P ≤ 0.05, two-tailed.
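The selection rule described above, keeping the parameters whose correlation with the evaluation score exceeds 0.70, can be sketched with the coefficients from Table 1. The `pearson` helper shows how such a coefficient is computed from paired data; the function and variable names are illustrative assumptions.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Correlation coefficients with the evaluation score, copied from Table 1.
TABLE_1 = {
    "A sound level": 0.898,
    "Loudness": 0.863,
    "Sharpness": 0.735,
    "AI": 0.392,
    "Tonality": 0.282,
    "Fluctuation strength": 0.563,
    "Impulsiveness": 0.386,
    "Roughness": 0.774,
}


def select_inputs(correlations, threshold=0.70):
    """Parameters whose |r| exceeds the threshold, strongest first."""
    chosen = [name for name, r in correlations.items() if abs(r) > threshold]
    return sorted(chosen, key=lambda name: -abs(correlations[name]))


bp_inputs = select_inputs(TABLE_1)
```

With the 0.70 threshold, the rule returns the same four parameters that were used as BP inputs.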

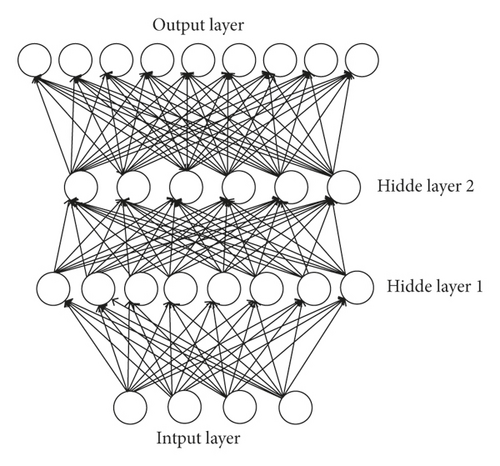

4.3. BP Neural Network Structure

The topological structure of the BP neural network in this experiment consisted of four layers: an input layer, hidden layer 1, hidden layer 2, and output layer, as shown in Figure 10.

4.4. BP Neural Network Training

5. Sound Quality Evaluation Based on the Auditory Model

The sound samples collected in Section 4.1 were used in the auditory model test. The input sound signals were represented in two ways, as time-domain signals and as auditory spectra [8], and the CNN model was used to compare the two inputs.

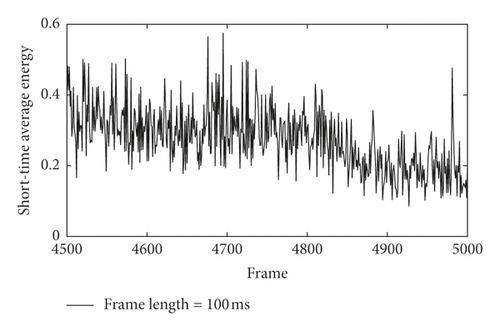

5.1. Time-Domain Signal Processing of Noise Samples

5.2. Auditory Spectrum Transformation of Noise Samples

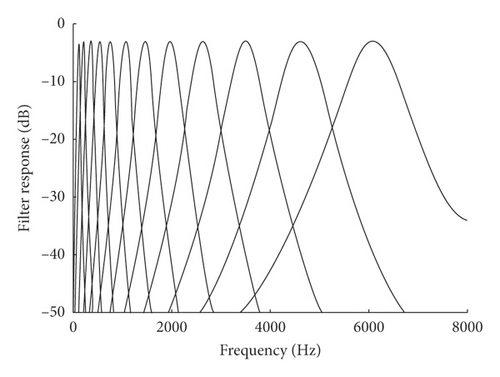

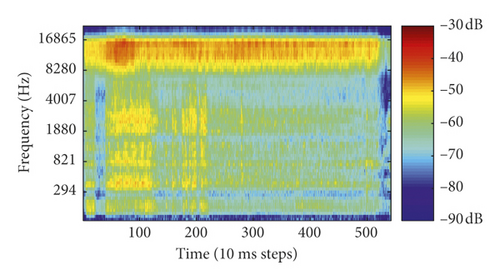

The proposed auditory model based on gammatone filter banks was used for the auditory spectrum transformation, and the overlapping segmentation method was used for signal processing. During downsampling, the filter response y(t, s) of each frequency channel was windowed with a frame size of 100 ms and an offset of 10 ms. A 64 × 100 matrix representing the input signal in the time-frequency domain was thereby obtained. Its spectrum is shown in Figure 12.
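The overlapping segmentation can be illustrated with a short sketch that frames one channel output using a 100 ms window and a 10 ms offset at the 44 kHz sampling rate from Section 4.1. Reducing each frame to its mean energy, and the segment length of about 1.09 s that yields exactly 100 frames, are assumptions for this sketch; the text does not state either detail.

```python
def frame_count(n_samples, frame_len, hop_len):
    """Number of full frames obtained with overlapping segmentation."""
    if n_samples < frame_len:
        return 0
    return 1 + (n_samples - frame_len) // hop_len


def frame_energies(channel_output, frame_len, hop_len):
    """Mean energy of each overlapping frame of one filter-channel output."""
    n_frames = frame_count(len(channel_output), frame_len, hop_len)
    energies = []
    for i in range(n_frames):
        frame = channel_output[i * hop_len : i * hop_len + frame_len]
        energies.append(sum(v * v for v in frame) / frame_len)
    return energies


FS_HZ = 44_000            # sampling frequency reported in Section 4.1
FRAME_LEN = FS_HZ // 10   # 100 ms -> 4400 samples
HOP_LEN = FS_HZ // 100    # 10 ms  -> 440 samples

# Samples needed for exactly 100 frames (about 1.09 s). This segment length is
# an assumption; it is not stated in the text.
SEGMENT_LEN = FRAME_LEN + 99 * HOP_LEN
```

Applying `frame_energies` to each of the 64 channel outputs of such a segment would give one row of 100 values per channel, i.e., a 64 × 100 matrix.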

The output label value of the CNN model is the grade vector representation of the subjective evaluation results on a scale of 1 to 9.
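A grade vector of this kind is commonly realized as a one-hot encoding, which is what the sketch below assumes; the function names are illustrative.

```python
N_GRADES = 9  # subjective grades run from 1 (best) to 9 (worst)


def grade_to_vector(grade):
    """One-hot label vector for a grade in 1..9 (encoding assumed, not stated)."""
    if not 1 <= grade <= N_GRADES:
        raise ValueError("grade must be between 1 and 9")
    vector = [0] * N_GRADES
    vector[grade - 1] = 1
    return vector


def vector_to_grade(probabilities):
    """Grade with the highest predicted probability."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return best + 1
```

For example, `grade_to_vector(4)` sets only the fourth entry, and `vector_to_grade` maps a Softmax output back to a grade.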

5.3. Structure of Convolutional Neural Network

In the CNN model, the first layer was the input layer, followed by three convolution layers alternating with three pooling layers. Next came the fully connected layer, followed by the Softmax classifier [16]. The detailed structure is shown in Table 2.

| Layer | Type | Structure (quantity × row × column) | Description |
|---|---|---|---|
| Conv1-64 | Convolution layer | 64 × 7 × 7 | 64 convolution kernels of size 7 × 7 |
| MaxPooling | Pooling layer | 3 × 3 | Pool size 3 × 3 |
| Conv2-128 | Convolution layer | 128 × 5 × 5 | 128 convolution kernels of size 5 × 5 |
| MaxPooling | Pooling layer | 3 × 3 | Pool size 3 × 3 |
| Conv3-256 | Convolution layer | 256 × 3 × 3 | 256 convolution kernels of size 3 × 3 |
| MaxPooling | Pooling layer | 3 × 3 | Pool size 3 × 3 |
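To give a sense of what parameter sharing means for the layers in Table 2, the sketch below counts the kernel weights of each convolution layer. It assumes a single-channel input, that each layer's input depth equals the number of kernels in the preceding convolution layer, and that biases are ignored; none of these details is stated in the table.

```python
def conv_weights(n_kernels, in_channels, kernel_size):
    """Number of shared kernel weights in one convolution layer (biases ignored)."""
    return n_kernels * in_channels * kernel_size * kernel_size


# Input depths are assumptions: 1 for the auditory spectrum, then the kernel
# count of the preceding convolution layer.
conv1 = conv_weights(64, 1, 7)
conv2 = conv_weights(128, 64, 5)
conv3 = conv_weights(256, 128, 3)
total = conv1 + conv2 + conv3
```

These counts do not depend on the size of the input matrix, because every kernel is reused at each position of its input.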

5.4. Training and Optimization

To ensure the comparability of test results, in the evaluation based on the CNN model, Conv1-64 in Table 2 was applied to the input signal as the first convolution layer (pad = 1, stride = 1), and a 3 × 3 max-pooling layer (pad = 1, 0, stride = 2) was then used to obtain a 64 × 30 × 48 output feature map. In the second convolution layer, Conv2-128 (5 × 5, pad = 1, stride = 1) and the max-pooling window were used to generate a 128 × 14 × 23 feature map. In the third convolution layer, Conv3-256 (3 × 3, pad = 1, stride = 2) and the same pooling window were used to complete the convolution operation and output a 256 × 3 × 6 feature map. In the fourth layer, i.e., the fully connected layer, there were 4608 explicit nodes (256 × 3 × 6) and 300 hidden nodes. Finally, the predictive probabilities for the nine noise quality grades were output by connecting the Softmax classifier.
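The feature-map sizes quoted above can be checked with the usual output-size formula, floor((size + 2 · pad − kernel) / stride) + 1, applied to a 64 × 100 input. The text gives the pooling padding only as "pad = 1, 0", so the per-axis padding below is an assumption: symmetric padding of 1 in the first two pooling layers and (0, 1) in the third is one assignment that reproduces the reported sizes.

```python
def out_size(size, kernel, pad, stride):
    """Output length along one axis of a convolution or pooling window."""
    return (size + 2 * pad - kernel) // stride + 1


def layer_shape(shape, kernel, pad, stride):
    """Output (rows, cols) for a square kernel with per-axis padding."""
    rows, cols = shape
    pad_rows, pad_cols = pad
    return (
        out_size(rows, kernel, pad_rows, stride),
        out_size(cols, kernel, pad_cols, stride),
    )


spectrum = (64, 100)                            # auditory spectrum input
conv1 = layer_shape(spectrum, 7, (1, 1), 1)     # Conv1-64
pool1 = layer_shape(conv1, 3, (1, 1), 2)        # assumed pooling padding
conv2 = layer_shape(pool1, 5, (1, 1), 1)        # Conv2-128
pool2 = layer_shape(conv2, 3, (1, 1), 2)        # assumed pooling padding
conv3 = layer_shape(pool2, 3, (1, 1), 2)        # Conv3-256
pool3 = layer_shape(conv3, 3, (0, 1), 2)        # assumed pooling padding
flattened = 256 * pool3[0] * pool3[1]           # input nodes of the dense layer
```

Under these assumptions the pooled shapes are 30 × 48, 14 × 23, and 3 × 6, and the flattened size is 4608, matching the number of explicit nodes in the fully connected layer.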

The random initialization algorithm described in Section 4.4 was also used to initialize the weights in the CNN. In the training experiment, 10 samples were selected for each batch to estimate the gradient, and 60 iterations were performed for each batch. To accelerate convergence, batch normalization was applied to the output of each layer. The adaptive moment estimation (Adam) algorithm [17] was used to optimize the gradient descent training. Adam computes an adaptive learning rate for each parameter: it keeps an exponentially decaying average of past squared gradients as well as an exponentially decaying average of past gradients M(t). Thus, it can deal quickly with the sparse gradient problem of convex functions. The learning rate was set to 0.01. To prevent overfitting, dropout was applied in the fourth layer: in each training batch, either 50% of the nodes or 30% of the hidden layer nodes were randomly removed.
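The Adam update can be sketched in a few lines. The learning rate of 0.01 is the value given above; the decay rates β1 = 0.9 and β2 = 0.999, ε = 1e-8, the toy objective, and the function names are assumptions (the first three are the defaults commonly used with Adam), since the text does not list them.

```python
import math


def adam_minimize(grad_fn, params, lr=0.01, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=1000):
    """Minimize a function with Adam, given a function returning its gradient."""
    params = list(params)
    m = [0.0] * len(params)  # decaying average of past gradients
    v = [0.0] * len(params)  # decaying average of past squared gradients
    for t in range(1, steps + 1):
        grads = grad_fn(params)
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1.0 - beta1) * g
            v[i] = beta2 * v[i] + (1.0 - beta2) * g * g
            m_hat = m[i] / (1.0 - beta1 ** t)  # bias correction
            v_hat = v[i] / (1.0 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params


def toy_gradient(p):
    """Gradient of the toy objective (x - 3)^2 + (y + 1)^2."""
    return [2.0 * (p[0] - 3.0), 2.0 * (p[1] + 1.0)]


solution = adam_minimize(toy_gradient, [0.0, 0.0], steps=3000)
```

Dividing by the square root of the second-moment estimate gives each parameter its own effective step size, which is what makes the learning rate adaptive.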

6. Results and Analysis

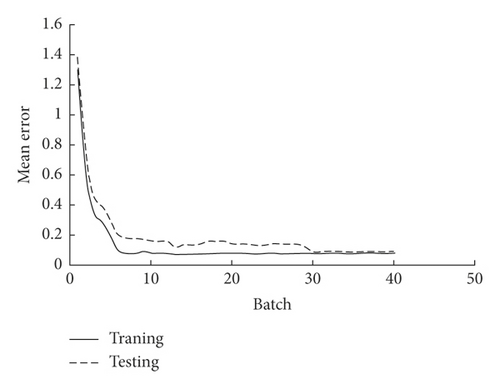

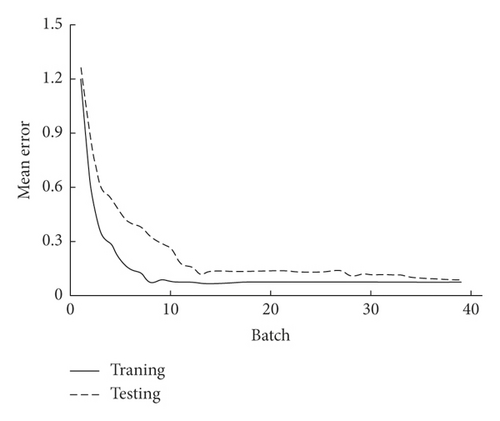

Figure 13 shows the trends of the training and test losses for the auditory spectrum input in the CNN evaluation test. The loss stabilized after 5300 iterations, indicating that the model converged and no overfitting occurred. Noise was added randomly to the samples and dropout was used to prevent overfitting, which increased the robustness of the classification model. Figure 14 shows the trends of the training and test losses in the BP evaluation test. After 4500 training iterations, the model converged without underfitting or overfitting.

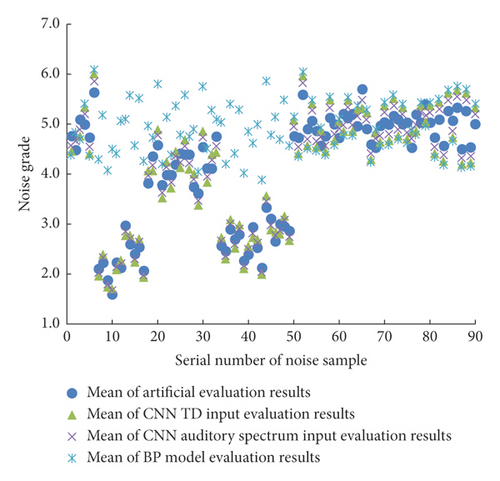

In Figure 15, the x-axis represents the serial number of the noise sample and the y-axis represents the noise grade. The different types of points in the graph show the mean of multiple evaluation results for the different models. The evaluation average based on the CNN auditory spectrum input agreed most closely with the manual evaluation average, and the evaluation averages based on the CNN time- and frequency-domain inputs agreed with the manual evaluation average more closely than that of the BP model.

The overall accuracy and error are shown in Tables 3 and 4. Variable A represents the A-weighted sound pressure level, variable L represents the loudness, and variables R and S represent the roughness and sharpness, respectively. With the auditory spectrum input, the training accuracy of the CNN model was 97.31% and the test accuracy was 95.28%. Compared to the control model, i.e., the BP neural network evaluation model, the test accuracy of the CNN model was up to 5.95 percentage points higher. In the CNN evaluation model, the test accuracy of the auditory model input was 3.53 percentage points higher than that of the time domain input. The results obtained in the CNN model with loudness, roughness, or sharpness as a single input showed that these inputs offered no obvious advantage in the classification accuracy of the sound quality. The results also showed that the convolutional neural network possessed strong automatic feature extraction and expression abilities. It could accurately express the features of the samples when they were input with complete time-frequency information, and it achieved good classification. Furthermore, because the convolution operation represents the sample signal sparsely and shares parameters across positions, the complexity of the training was effectively reduced.

| Model | Input | Training accuracy (%) | Test accuracy (%) |
|---|---|---|---|
| CNN | Auditory spectrum | 97.31 | 95.28 |
| CNN | L | 90.25 | 89.13 |
| CNN | R | 88.58 | 87.47 |
| CNN | S | 88.22 | 87.13 |
| CNN | A | 90.25 | 89.56 |
| BP | L, R, S, A | 90.11 | 89.33 |

| Model | Input | Training accuracy (%) | Test accuracy (%) |
|---|---|---|---|
| CNN | Auditory spectrum | 97.31 | 95.28 |
| CNN | Time domain | 92.79 | 91.75 |

7. Conclusions

- (1) In this study, an auditory model-based automatic evaluation method was proposed to evaluate the sound quality of internal combustion engines. The hierarchical structure of a CNN and the size and thickness of the convolution kernel were designed. The sound samples collected in the evaluation were passed through gammatone filter banks to obtain a time-frequency auditory spectrum simulating the auditory characteristics of the basilar membrane of the human ear. This input was used to train the CNN model, which could accurately express the characteristics of the samples and achieve good evaluation and classification.

- (2) The training accuracy of the auditory spectrum input in the CNN model was much higher than that of the BP neural network evaluation model, and the time domain input test results of the CNN evaluation model also indicated better performance than that of the BP neural network evaluation model. Therefore, the CNN evaluation model was a better evaluation model for the classification of internal combustion engine sound quality.

- (3) Experiments on 20 groups of newly collected sound samples from different types of internal combustion engines showed that the proposed model had good generalization ability. It was compared with CNN experiments using frequency domain and time domain inputs. The results showed that the sound quality classification method based on an auditory model was effective.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.