Convergent Power Series of sech(x) and Solutions to Nonlinear Differential Equations

Abstract

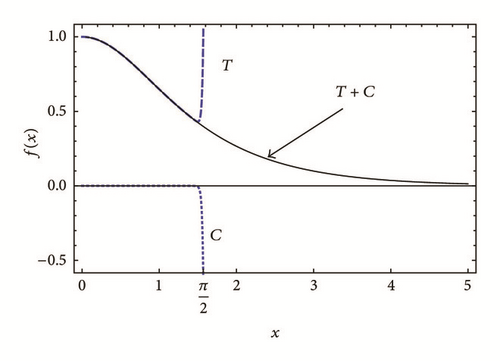

It is known that the power series expansions of certain functions, such as sech(x), diverge beyond a finite radius of convergence. We present here an iterative power series (IPS) expansion that yields a power series representation of sech(x) that is convergent for all x. The convergent series is the sum of the Taylor series of sech(x) and a complementary series that cancels the divergence of the Taylor series for x ≥ π/2. The method is general and can be applied to other functions known to have a finite radius of convergence, such as 1/(1 + x²). A straightforward application of this method is the analytic solution of nonlinear differential equations, which we also illustrate here. The method also provides a robust and very efficient numerical algorithm for solving nonlinear differential equations. A detailed comparison with the fourth-order Runge-Kutta method and an extensive analysis of the behavior of the error and CPU time are performed.

1. Introduction

It is well known that the Taylor series of some functions diverge beyond a finite radius of convergence [1]. For instance, the Taylor series of sech(x) and 1/(1 + x²) about x = 0 diverge for x ≥ π/2 and x ≥ 1, respectively, because the nearest singularities of these functions in the complex plane lie at x = ±iπ/2 and x = ±i. Increasing the number of terms in the power series does not increase the radius of convergence; it only makes the divergence sharper. The radius of convergence can be increased only slightly via some functional transforms [2]. Among the many methods of solving nonlinear differential equations [3–9], the power series method is the most straightforward and efficient [10]. It has been used as a powerful numerical scheme for many problems [11–19], including chaotic systems [20–23], and many numerical algorithms and codes have been developed based on it [10–12, 20–24]. However, the finite radius of convergence mentioned above is a serious problem that hinders the use of this method for a wide class of differential equations, in particular nonlinear ones. For instance, the nonlinear Schrödinger equation (NLSE) with cubic nonlinearity has sech(x) as a solution. Using the power series method to solve this equation produces the power series of sech(x), which is valid only for x < π/2.
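The statement that extra terms only sharpen the divergence is easy to check numerically. The Python sketch below (function names are illustrative, not taken from this paper) builds the exact Taylor coefficients E_2n/(2n)! of sech(x) from the Euler numbers, using the identity sech(x)·cosh(x) = 1, which gives Σ_k C(2n, 2k) E_2k = 0 for n ≥ 1, and compares partial sums with 1/cosh(x) at x = 1 (inside the radius π/2) and at x = 2 (outside it).

```python
import math
from fractions import Fraction


def sech_taylor_coeffs(n_terms):
    """Exact coefficients E_{2n}/(2n)! of sech(x) = sum_n E_{2n} x^(2n)/(2n)!.

    The even Euler numbers follow from sech(x)*cosh(x) = 1, which gives
    sum_{k=0}^{n} C(2n, 2k) E_{2k} = 0 for n >= 1, with E_0 = 1.
    """
    euler = [1]
    for n in range(1, n_terms):
        euler.append(-sum(math.comb(2 * n, 2 * k) * euler[k] for k in range(n)))
    return [Fraction(e, math.factorial(2 * n)) for n, e in enumerate(euler)]


def sech_taylor(x, n_terms):
    """Partial sum of the Taylor series of sech(x) about 0 with n_terms even powers."""
    coeffs = sech_taylor_coeffs(n_terms)
    return sum(float(c) * x ** (2 * n) for n, c in enumerate(coeffs))


if __name__ == "__main__":
    for x in (1.0, 2.0):
        exact = 1.0 / math.cosh(x)
        for n_terms in (5, 10, 20):
            err = abs(sech_taylor(x, n_terms) - exact)
            print(f"x = {x}, terms = {n_terms:2d}, |error| = {err:.3e}")
```

At x = 1 the error shrinks as terms are added; at x = 2 it grows, by roughly a factor (2x/π)² ≈ 1.6 per added term.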

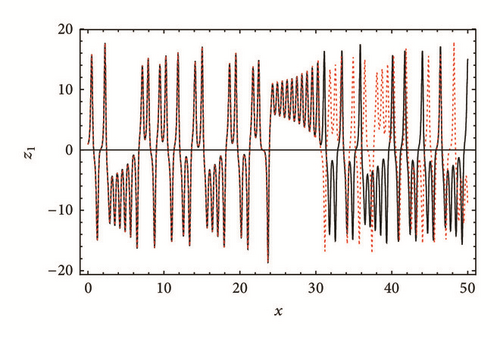

A review of the literature reveals that the power series expansion has been exploited by several researchers [10–12, 20–24] to develop powerful numerical methods for solving nonlinear differential equations. This paper is motivated by a desire to extend these attempts and develop a numerical scheme with systematic control of accuracy and error. Specifically, two main advances are presented: (1) a method of constructing a convergent power series representation of a given function with an arbitrarily large radius of convergence and (2) a method of obtaining an analytic power series solution of a given nonlinear differential equation that is free from the finite radius of convergence. Throughout this paper, we demonstrate the robustness and efficiency of the method via a number of examples, including the chaotic Lorenz system [25] and the NLSE. Solving the problem of the finite radius of convergence thus opens the door to applying the power series method to a much larger class of differential equations, particularly nonlinear ones.

It is worth mentioning that the literature includes several semianalytical methods for solving nonlinear differential equations, such as the homotopy analysis method (HAM), the homotopy perturbation method (HPM), and the Adomian decomposition method (ADM); for more details see [26–29] and the references therein. Essentially, these methods iteratively generate a series solution of the nonlinear system, solving a linear differential equation at each iteration. Although these methods prove effective in solving most nonlinear differential equations and in obtaining a convergent series solution, they have a few disadvantages, such as the large number of terms in the solution as the number of iterations increases. One of the most important advantages of the present technique is the simplicity with which it transforms the nonlinear differential equation into a set of simple algebraic difference equations that can be easily solved.

The paper is thus divided into two seemingly separate but actually connected main parts. In the first (Section 2), we show, for a given function, how a convergent power series is constructed out of the nonconverging one. In the second part (Section 3.1), we use this idea to solve nonlinear differential equations. In Section 3.2, we investigate the robustness and efficiency of the method by studying the behavior of its error and CPU time versus the parameters of the method. We summarise our results in Section 4.

2. Iterative Power Series Method

This section describes how to obtain a convergent power series for a given function whose Taylor series does not converge for all x. In brief, the method is as follows. We expand the function f(x) in a power series as usual, say around x = 0. Then we re-express the derivatives f⁽ⁿ⁾(x), which determine the coefficients, in terms of f(x) and f'(x). This establishes a recursion relation between the higher-order coefficients, f⁽ⁿ⁾(0), and the lowest order ones, f⁽⁰⁾(0) and f⁽¹⁾(0), so that the power series is written in terms of only these two coefficients. The series and its derivative are then evaluated at x = Δ, where Δ is much less than the radius of convergence of the power series. A new power series expansion of f(x) is then performed around x = Δ. Again, the higher-order coefficients are re-expressed in terms of the lowest order coefficients f⁽⁰⁾(Δ) and f⁽¹⁾(Δ), and the values of the previous series and its derivative at x = Δ are assigned to f⁽⁰⁾(Δ) and f⁽¹⁾(Δ), respectively. A new expansion around 2Δ is then performed with the lowest order coefficients taken from the previous series, and so on. This iterative process is repeated N times. The final series corresponds to a convergent series at x = NΔ.
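A minimal numerical sketch of this iteration for sech(x) follows. It assumes that sech(x) is generated as the solution of f'' = f − 2f³ with f(0) = 1 and f'(0) = 0 (sech(x) does satisfy this equation; the form of the NLSE used later may differ by scaling). Rather than writing each f⁽ⁿ⁾ symbolically in terms of f and f', the sketch computes the local coefficients a_n from the equivalent recurrence (n + 1)(n + 2) a_{n+2} = a_n − 2c_n, where c_n is the coefficient of hⁿ in f³. The names ips_sech, local_coeffs, n_steps and nmax are hypothetical.

```python
import math


def local_coeffs(f0, f1, nmax):
    """Taylor coefficients a_0..a_nmax about the current point for the assumed
    equation f'' = f - 2 f^3, given f = f0 and f' = f1 there."""
    a = [f0, f1]
    for n in range(nmax - 1):
        # c_n: coefficient of h^n in f^3, from the Cauchy product of three series
        c = sum(a[i] * a[j] * a[n - i - j]
                for i in range(n + 1) for j in range(n + 1 - i))
        a.append((a[n] - 2.0 * c) / ((n + 1) * (n + 2)))
    return a


def ips_sech(x, n_steps, nmax):
    """Iterated power series: expand, step by delta = x / n_steps, re-expand."""
    delta = x / n_steps
    f0, f1 = 1.0, 0.0  # sech(0) = 1, sech'(0) = 0
    for _ in range(n_steps):
        a = local_coeffs(f0, f1, nmax)
        # The value and derivative of the local series at h = delta become the
        # lowest-order coefficients of the next expansion.
        f0 = sum(an * delta ** n for n, an in enumerate(a))
        f1 = sum(n * an * delta ** (n - 1) for n, an in enumerate(a) if n > 0)
    return f0


if __name__ == "__main__":
    for x in (1.0, 3.0, 6.0):
        approx = ips_sech(x, n_steps=100, nmax=12)
        print(f"x = {x}: IPS12 = {approx:.15f}, sech = {1.0 / math.cosh(x):.15f}")
```

Because each local expansion only has to be valid over a step of length Δ, the iterated result keeps converging at x = 3 and x = 6, well beyond the Taylor radius π/2.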

We now present a proof that the convergent power series produced by the recursive procedure always reproduces the Taylor series plus a complementary series.
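Before the formal statement, the claim can be checked with exact rational arithmetic for sech(x) with the truncation used in the proof (nmax = 4, N = 2). The sketch assumes that sech(x) is generated from f'' = f − 2f³ with f(0) = 1 and f'(0) = 0, treats the step Δ as a symbolic variable (polynomials in Δ are stored as lists of Fraction coefficients), performs the iterations, and rewrites the result in terms of x = NΔ. If the proposition holds, the coefficients of x⁰ to x⁴ reproduce the Taylor series 1 − x²/2 + 5x⁴/24, and all remaining terms, which form the complementary series C, carry powers higher than 4. The helper names are hypothetical.

```python
from fractions import Fraction

# Polynomials in the symbolic step Delta: lists of Fractions, lowest power first.


def padd(p, q):
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)]


def pmul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def pscale(p, s):
    return [s * c for c in p]


def local_coeffs(f0, f1, nmax):
    """Local coefficients a_0..a_nmax (polynomials in Delta) for f'' = f - 2 f^3,
    given f = f0 and f' = f1 at the expansion point."""
    a = [f0, f1]
    for n in range(nmax - 1):
        c = [Fraction(0)]  # coefficient of h^n in f^3
        for i in range(n + 1):
            for j in range(n + 1 - i):
                c = padd(c, pmul(pmul(a[i], a[j]), a[n - i - j]))
        a.append(pscale(padd(a[n], pscale(c, -2)), Fraction(1, (n + 1) * (n + 2))))
    return a


def value_and_derivative(a):
    """Evaluate sum_n a_n h^n and its h-derivative at h = Delta."""
    val, der = [Fraction(0)], [Fraction(0)]
    for n, an in enumerate(a):
        val = padd(val, [Fraction(0)] * n + an)  # a_n * Delta^n
        if n > 0:
            der = padd(der, [Fraction(0)] * (n - 1) + pscale(an, n))
    return val, der


def ips_series_in_x(n_iter, nmax):
    """Run n_iter iterations symbolically; return coefficients in x = n_iter * Delta."""
    f0, f1 = [Fraction(1)], [Fraction(0)]  # sech(0) = 1, sech'(0) = 0
    for _ in range(n_iter):
        f0, f1 = value_and_derivative(local_coeffs(f0, f1, nmax))
    return [c / Fraction(n_iter) ** k for k, c in enumerate(f0)]


if __name__ == "__main__":
    coeffs = ips_series_in_x(n_iter=2, nmax=4)
    print("orders 0-4 (T):", [str(c) for c in coeffs[:5]])
    print("orders 5-8 (start of C):", [str(c) for c in coeffs[5:9]])
```

The same check can be repeated for other settings, e.g. ips_series_in_x(3, 4) or ips_series_in_x(2, 6), whose leading coefficients should again match the Taylor series up to order nmax.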

Proposition 1. If we expand f(x) in a Taylor series, T, around x = 0 truncated at nmax and use the recursive procedure described above, the resulting convergent power series always takes the form T + C, where C is a power series containing only powers higher than nmax. This holds for any number of iterations, N, any maximum power of the Taylor series, nmax, and all functions that satisfy the general differential equation

Proof. The result is straightforward to verify for a specific function, such as sech(x). For the general case, we prove it only for nmax = 4 and N = 2. The Taylor series expansion of sech(x) around x = 0, truncated at nmax = 4, is T = 1 − x²/2 + 5x⁴/24.

Now, we present the proof for the more general case, namely, when f(x) is unspecified but is a solution to (19). We start with the following Taylor series expansion of f(x) and its derivative

3. Application to Nonlinear Differential Equations

The method described in the previous section can be used as a powerful solver and integrator of nonlinear differential equations, both analytically and numerically. In Section 3.1, we apply the method to a number of well-known problems. In Section 3.2, we demonstrate its performance through a detailed analysis of the error and CPU time.

3.1. Examples

3.2. Numerical Method

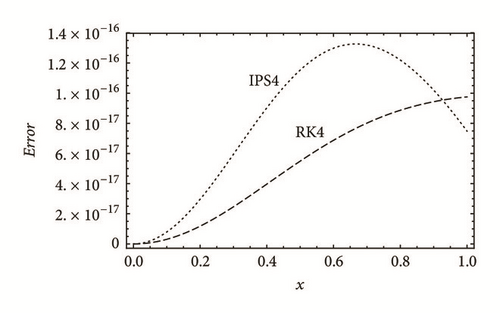

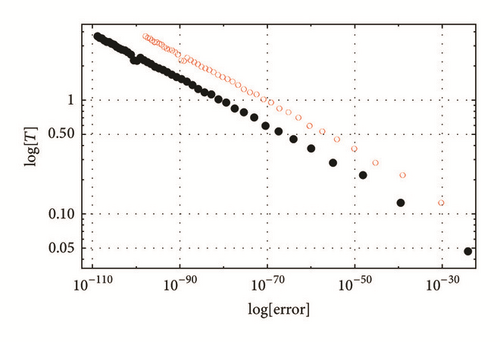

As a numerical method, the power series is very powerful and efficient [10]. The power series method with Nmax = 4, denoted by IPS4, is used to solve the NLSE, (29), and the error is calculated as the difference between the numerical solution and the exact solution, sech(x). The equation is then solved again using the fourth-order Runge-Kutta (RK4) method. In Figure 2, we plot the error of both methods, which turn out to be of the same order. Using the iterative power series method with Nmax = 12 (IPS12), the error drops to extremely small values. Neither the CPU time nor the memory requirements for IPS12 are much larger than those for IPS4; upgrading to higher orders is straightforward and leads to ultrahigh efficiency. This is verified by Tables 1–4, where we compute sech(1) using both RK4 and the iterative power series method and report the corresponding CPU time. For the same N, Tables 1 and 2 show that RK4 and IPS4 both reproduce the first 16 digits of the exact value (given in the last row of each table) and consume almost the same CPU time. Table 3 shows that, for the same N, IPS12 reproduces the first 49 digits of the exact value. The CPU time needed for such ultrahigh accuracy is only about 3 times that of RK4 and IPS4. Of course, the accuracy can be increased arbitrarily by increasing N or, more efficiently, Nmax. For IPS12 to produce only the first 16 digits, as RK4 and IPS4 do, a very small number of iterations suffices, as shown in Table 4. The CPU time in this case is about 100 times less than that of RK4 and IPS4, highlighting the high efficiency of the power series method.

Table 1: sech(1) computed with RK4 for N steps, with the corresponding CPU time.

| N | sech(1) | CPU time |
|---|---|---|
| 1000 | 0.6480542736639463753683598987682775440961395993015 | 0.087257 |
| 2000 | 0.6480542736638892102461002212645001242748400651983 | 0.166534 |
| 3000 | 0.6480542736638861522791729310382098086291071707752 | 0.229016 |
| 4000 | 0.6480542736638856377319630763886301512099763161750 | 0.314480 |
| 5000 | 0.6480542736638854971232600221160524143929321411426 | 0.427610 |
| Exact | 0.6480542736638853995749773532261503231084893120719 | |

Table 2: sech(1) computed with IPS4 for N iterations, with the corresponding CPU time.

| N | sech(1) | CPU time |
|---|---|---|
| 1000 | 0.6480542736639323955233350367786700400715255548373 | 0.056793 |
| 2000 | 0.6480542736638883277492809223389308596155602276884 | 0.137941 |
| 3000 | 0.6480542736638859773823119964459740185046024606302 | 0.215395 |
| 4000 | 0.6480542736638855823023023050565759829475356280281 | 0.304501 |
| 5000 | 0.6480542736638854743968579026604641270130595226186 | 0.372284 |
| Exact | 0.6480542736638853995749773532261503231084893120719 | |

Table 3: sech(1) computed with IPS12 for N iterations, with the corresponding CPU time.

| N | sech(1) | CPU time |
|---|---|---|
| 1000 | 0.6480542736638853995749773532261503231079594354079 | 0.280617 |
| 2000 | 0.6480542736638853995749773532261503231084891816361 | 0.595639 |
| 3000 | 0.6480542736638853995749773532261503231084893110634 | 0.891370 |
| 4000 | 0.6480542736638853995749773532261503231084893120400 | 1.080357 |
| 5000 | 0.6480542736638853995749773532261503231084893120697 | 1.366386 |
| Exact | 0.6480542736638853995749773532261503231084893120719 | |

Table 4: sech(1) computed with IPS12 for a small number of iterations N, with the corresponding CPU time.

| N | sech(1) | CPU time |
|---|---|---|
| 3 | 0.6480542794079665629469114154348980055814088430953 | 0.000782 |
| 6 | 0.6480542736643770346283969779587807646256058100135 | 0.001429 |
| 9 | 0.6480542736638872007452856567074697922787489590238 | 0.002123 |
| 12 | 0.6480542736638854259793573015747954030451932565027 | 0.002768 |
| 15 | 0.6480542736638854001495023257702479909741369016589 | 0.003594 |
| Exact | 0.6480542736638853995749773532261503231084893120719 | |
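The tabulated digits also reveal the convergence order of each scheme. The short check below (an illustration added here, not part of the method) copies the N = 1000 and N = 2000 values of sech(1) from the RK4, IPS4 and IPS12 tables, computes the errors with Python's decimal module, and reports the error ratio when N is doubled. If the global error scales as N^(−Nmax), as expected for a power series integrator truncated at order Nmax (and as N^(−4) for RK4), the ratio should be close to 2^Nmax.

```python
from decimal import Decimal, getcontext

getcontext().prec = 60  # enough for the 49 printed digits

EXACT = Decimal("0.6480542736638853995749773532261503231084893120719")

# sech(1) values copied from the tables for N = 1000 and N = 2000.
TABLE = {
    "RK4": ("0.6480542736639463753683598987682775440961395993015",
            "0.6480542736638892102461002212645001242748400651983"),
    "IPS4": ("0.6480542736639323955233350367786700400715255548373",
             "0.6480542736638883277492809223389308596155602276884"),
    "IPS12": ("0.6480542736638853995749773532261503231079594354079",
              "0.6480542736638853995749773532261503231084891816361"),
}


def error_ratio(v1000, v2000):
    """Ratio of absolute errors when N is doubled from 1000 to 2000."""
    e1 = abs(Decimal(v1000) - EXACT)
    e2 = abs(Decimal(v2000) - EXACT)
    return e1 / e2


if __name__ == "__main__":
    for method, (v1, v2) in TABLE.items():
        ratio = error_ratio(v1, v2)
        order = ratio.ln() / Decimal(2).ln()  # observed order = log2(ratio)
        print(f"{method:6s} error ratio = {ratio:.1f}, observed order = {order:.2f}")
```

With the printed values, the ratios come out at about 16.0 for RK4 and IPS4 and about 4.06 × 10³ for IPS12, that is, observed orders of roughly 4, 4 and 12.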

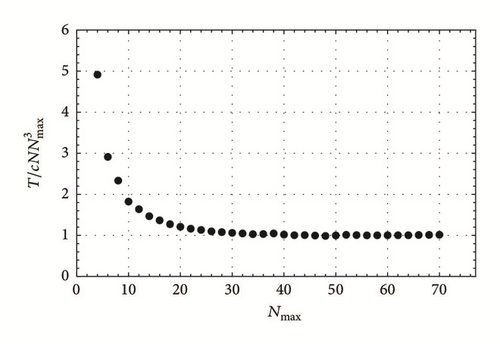

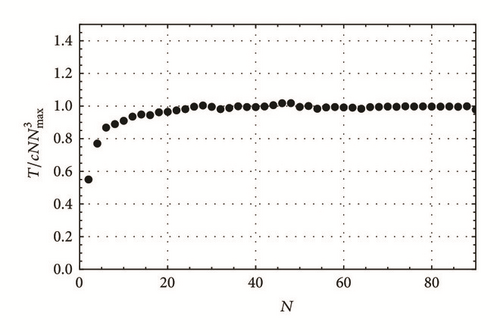

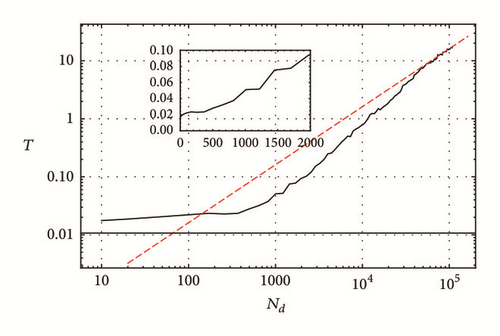

Achieving higher accuracy requires more CPU time. It is therefore important to investigate how the CPU time, denoted here by T, depends on the main parameters of the method, namely N and Nmax. A typical plot is shown in Figure 4, where we plot the CPU time versus the error on a log-log scale. The linear relationship indicates that T ∝ error^p, where p is the slope of the line joining the points in Figure 4. The error can be calculated in two ways: (i) as the difference between the numerical and exact solutions and (ii) from the theoretical estimate (15). Both are shown in the figure, and they have the same slope. However, as expected, the error given by (15), which is actually an upper bound on the error, is always larger than the first one. To find how the CPU time depends explicitly on N and Nmax, we argue that the dependence should be of the form T = c N Nmax³. This is justified by the fact that the CPU time should be linearly proportional to the number of terms computed. The number of terms computed increases linearly with the number of iterations N, and the number of terms in the power series is linearly proportional to Nmax. When the series is substituted in the NLSE with cubic nonlinearity, the resulting number of terms, and thus T, will be proportional to N Nmax³. Figure 5 shows that the ratio T/(N Nmax³) indeed saturates to a constant for large N and Nmax, where these scaling behaviors apply. The proportionality constant, c, is very small and corresponds to the CPU time of calculating one term. It depends on the machine, the programming optimization [10], and the number of digits used, Nd. The CPU time increases with the number of digits, as shown in Figure 6, although it remains almost constant for Nd < 500.
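The term-counting argument can be illustrated by counting products instead of measuring time. The sketch below assumes, hypothetically, that each iteration forms the cubic term by expanding the product of three series truncated at Nmax directly, keeping every product a_i·a_j·a_k with i + j + k ≤ Nmax. The count per iteration is then C(Nmax + 3, 3) = (Nmax + 1)(Nmax + 2)(Nmax + 3)/6, so the ratio count/(N·Nmax³) saturates at 1/6 for large Nmax, mirroring the saturation of the CPU time divided by N·Nmax³.

```python
from math import comb


def triple_products_per_step(nmax):
    """Count products a_i * a_j * a_k with i + j + k <= nmax (direct expansion)."""
    return sum(1 for i in range(nmax + 1)
               for j in range(nmax + 1 - i)
               for _ in range(nmax + 1 - i - j))


def closed_form(nmax):
    """The same count in closed form: C(nmax + 3, 3)."""
    return comb(nmax + 3, 3)


def total_products(n_iter, nmax):
    """Hypothetical total work for n_iter iterations: linear in N, cubic in Nmax."""
    return n_iter * triple_products_per_step(nmax)


if __name__ == "__main__":
    n_iter = 100
    for nmax in (4, 8, 16, 32, 64):
        ratio = total_products(n_iter, nmax) / (n_iter * nmax ** 3)
        print(f"Nmax = {nmax:2d}: count / (N * Nmax^3) = {ratio:.4f}")
```

The cubic count is specific to direct expansion of the triple product: forming f² first and then f²·f as two Cauchy products needs only O(Nmax²) products per iteration.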

4. Conclusions

We have presented an iterative power series method that solves the problem of the finite radius of convergence. We have proved that the iterative power series is always the sum of the Taylor series of the function and a complementary series that cancels the divergence. The method comprises two schemes: in the first, we find a convergent power series for a given function, and in the second, we solve a given nonlinear differential equation. The iterative power series expansion of sech(x) converges for an arbitrarily large radius and to arbitrary accuracy, as shown by Figures 1 and 2 and Tables 1–4. Extremely high accuracy can be obtained with relatively low CPU time by using a higher-order iterative power series, that is, by increasing Nmax. The robustness and efficiency of the method have been demonstrated by solving the chaotic Lorenz system and the NLSE, and an extensive analysis of the error and CPU time characterising the method has been performed. Although we have focused on the localised sech(x) solution of the NLSE, all other solitary wave solutions (cnoidal waves) can be obtained using the present method simply by choosing the appropriate initial conditions.

The method can be generalised to partial and fractional differential equations, making its domain of applicability even wider.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.