Identifying Initial Condition in Degenerate Parabolic Equation with Singular Potential

Abstract

A hybrid algorithm and regularization method are proposed, for the first time, to solve the one-dimensional degenerate inverse heat conduction problem to estimate the initial temperature distribution from point measurements. The evolution of the heat is given by a degenerate parabolic equation with singular potential. This problem can be formulated in a least-squares framework, an iterative procedure which minimizes the difference between the given measurements and the value at sensor locations of a reconstructed field. The mathematical model leads to a nonconvex minimization problem. To solve it, we prove the existence of at least one solution of problem and we propose two approaches: the first is based on a Tikhonov regularization, while the second approach is based on a hybrid genetic algorithm (married genetic with descent method type gradient). Some numerical experiments are given.

1. Introduction

The inverse problem is expressed when the PDE solution is measured or specified, and we are interested in determining some properties: coefficients, forcing term, boundary, or initial condition from the partial knowledge of the system in a limited time interval (see [1, 2]).

In the last recent years, an increasing interest has been devoted to degenerate parabolic equations. Indeed, many problems coming from physics (boundary layer models in [3], models of Kolmogorov type in [4], etc.), biology (Wright-Fisher models in [5] and Fleming-Viot models in [6]), and economics (Black-Merton-Scholes equations in [7]) are described by degenerate parabolic equations [8].

The identification of the initial state of nondegenerate parabolic problems is well studied in the literature (see [9–11]). However, as far as we know, the degenerate case has not been analysed in the literature.

Problem (3) is ill-posed in the sense of Hadamard. To solve this problem, we propose two approaches.

Here, the last term in (5) stands for the so-called Tikhonov-type regularization ([12, 13]), ε being a small regularizing coefficient that provides extra convexity to the functional JT and ψb a priori (background) knowledge of the true state (the state to estimate). We consider that the values of ψb are given in each point of analysis grid-points.

The second approach is applied when there is a partial knowledge of values of ψb (example 20%); the regularization parameter is very difficult to determine. To overcome this problem, we propose a new approach, based on a hybrid genetic algorithm (married genetic with descent method gradient type). Finally, we make a comparison between the two mentioned approaches (with 20% of ψb).

First of all, we prove that problem (3) has at least one solution. The gradient of the functional J is calculated with the adjoint method. Numerical experiments are presented to show the performance of our approaches.

2. Problem Statement and Main Result

Firstly, we prove that problem (6) is well-posed, the functional J is continuous, and J is G-derivable in Aad.

We recall the following theorem.

Theorem 1 (see [14], [17], [18].)For all f ∈ L2(Ω × ]0, T[) and ψ0 ∈ L2(0,1), there exists a unique weak solution which solves problem (6) such that

Theorem 2. Let ψ be the weak solution of (6) with initial state ψ0. In noncoercive case, the function

Theorem 3. Let ψ be the weak solution of (6) with initial state ψ0. The function

Since a(x) = 0 at x = 0 and limx→0(λ/xβ) = +∞, the bilinear form B is noncoercive and is noncontinuous at x = 0.

We recall the following theorem.

Theorem 4 (see [15], [19].)If f = 0 then, for all ψ0 ∈ L2(Ω), problem (6) has a unique weak solution

Theorem 5. Let ψ be the weak solution of (6) with initial state ψ0. In subcritical potential case, the function

Theorem 6. Let ψ be the weak solution of (6) with initial state ψ0. The function

3. Proof

Proof of Theorem 2. Let be a small variation such that ψ0 + δψ0 ∈ Aad.

Consider δψ = ψδ − ψ, with ψ being the weak solution of (6) with initial state ψ0 and ψδ is the weak solution of (6) with initial state

Consequently, δψ is the solution of the variational problem:

Hence, δψ is the weak solution of (6) with f = 0. We apply the estimate in Theorem 1 with f = 0. This gives the following.

There is a constant CT such that

In addition, from (32) we have

We have , where α∈]0,1[, which gives Since the set Aad is bounded in , then Aad is a compact in L2(Ω). Therefore, J has at least one minimum in Aad.

Proof of Theorem 3. Let ψ0 ∈ Aad and δψ0 such that ψ0 + δψ0 ∈ Aad; we define the function

Hence, the function φ : ψ0 → ψ is G-derivable in Aad and we deduce the existence of the gradient of the functional J.

Proof of Theorem 5. Let δψ0 ∈ L2(Ω) be a small variation such that ψ0 + δψ0 ∈ Aad.

Consider δψ = ψδ − ψ, with ψ being the weak solution of (6) with initial state ψ0, and ψδ is the weak solution of (6) with initial state .

Consequently, δψ is the solution of variational problem

Take v = δψ; this gives

Proof of Theorem 6. Let ψ0 ∈ Aad and δψ0 such that ψ0 + δψ0 ∈ Aad; we define the function

Now, we are going to compute the gradient of J with the adjoint state method.

4. Gradient of J

Let us take P(x = 0) = P(x = 1) = 0; then we may write .

To calculate a gradient of J, we solve two problems: (6) and (76). The result solution of (6) is used in the second member of problem (76).

5. Discretization of Problem

Step 1 (full discretization). To resolve problem (6) and (76), we use the method θ-schema in time. This method is unconditionally stable for 1 > θ ≥ 1/2.

Let h be the steps in space and Δt the steps in time.

Let

Letting , finally we get

Step 2 (discretization of the functional one has).

We recall that the method of Thomas Simpson to calculate an integral is

Let the functions

Therefore,

6. Numerical Experiments and Results

In this section, we discuss two cases:

In case we have a priori knowledge ψb of in each point of analysis grid-points, we apply the Tikhonov approach to solve the minimization problem (8). The data ψb is assumed to be corrupted by measurement errors, which we will refer to as noise. In particular, we suppose that . Here, we study the impact of err (err = ‖e‖2) on the construction of the solution.

In case we have a partial knowledge of values of ψb (example 20%): firstly, we apply the hybrid approach to rebuild the initial state. Secondly, we make a comparison between both hybrid and Tikhonov approaches.

The tests have been performed in Matlab 2012A, on a Windows 7 platform.

6.1. Regularization Approach

-

let αi, β ∈ [0,1[ and α > 0

-

if

-

tk = αi and stop

-

if not

-

αi = ααi.

We do all the tests on Pc with the following configurations: Intel Core i3 CPU 2.27 GHz; RAM = 4 GB (2.93 usable).

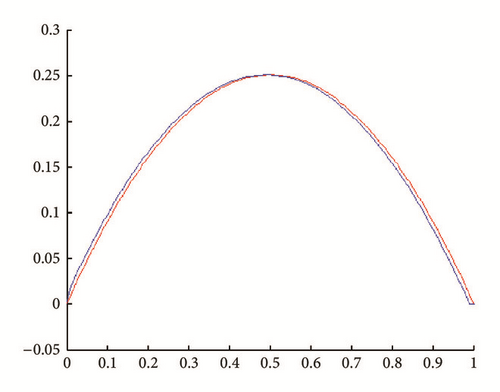

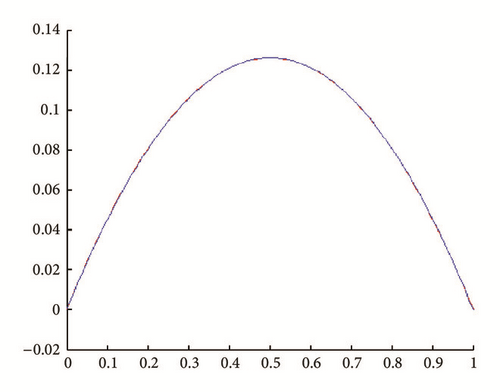

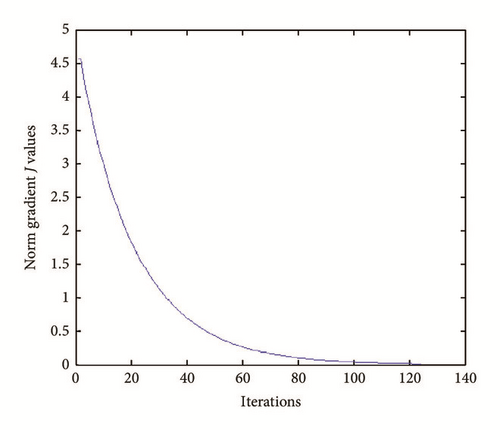

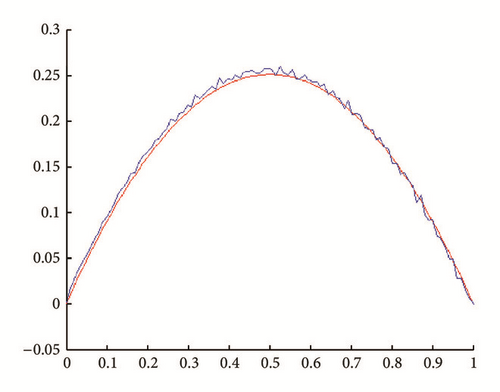

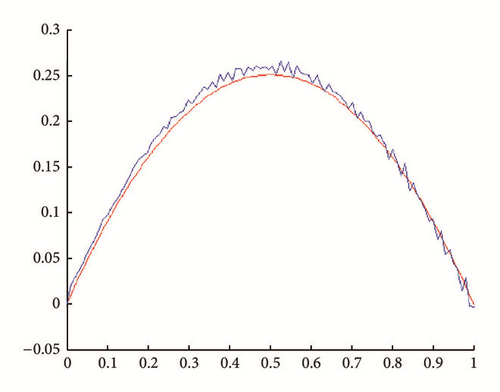

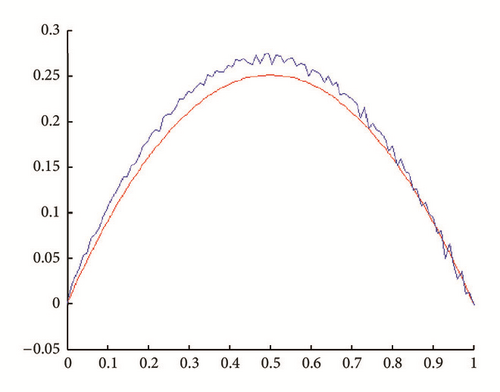

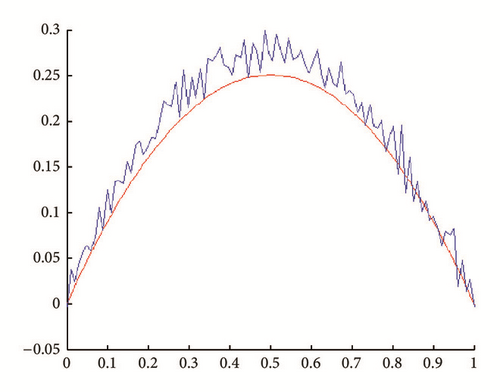

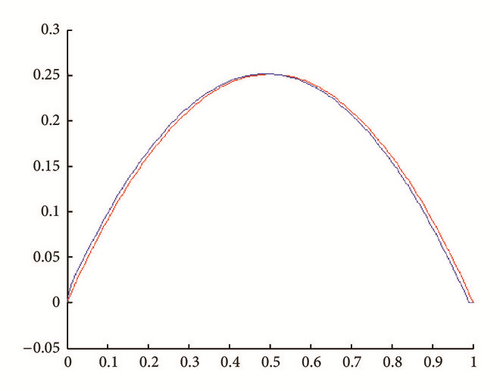

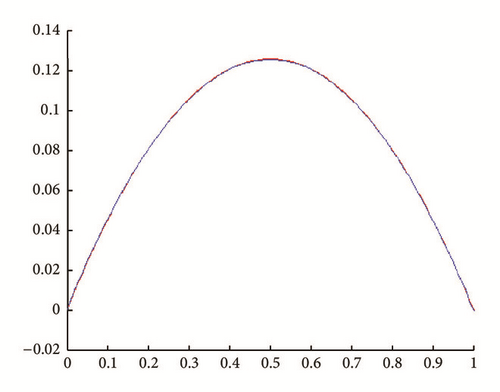

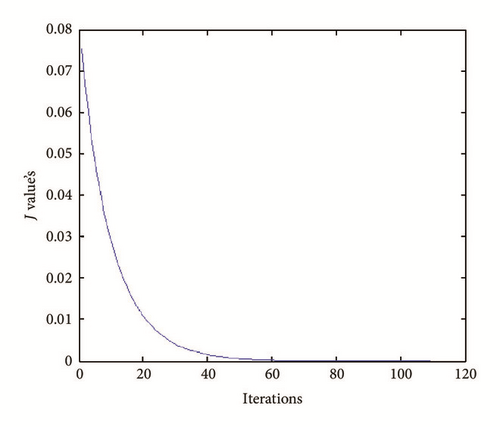

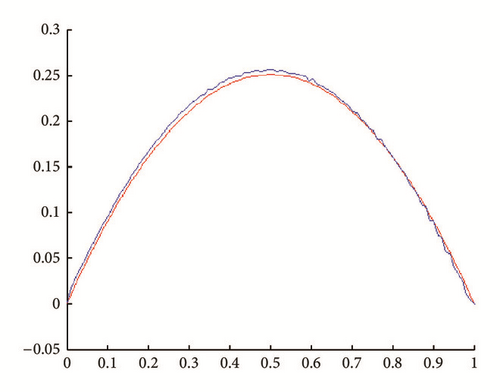

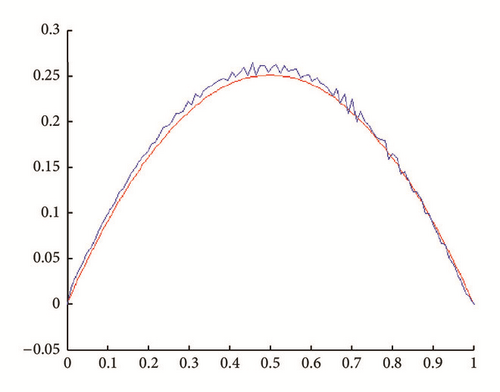

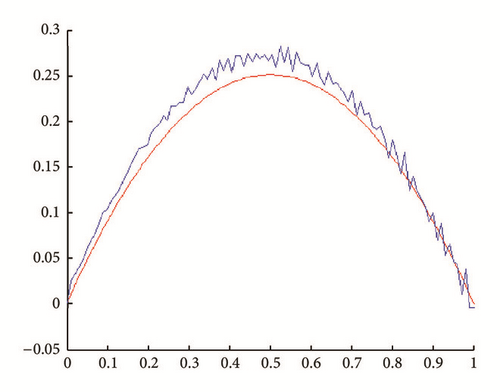

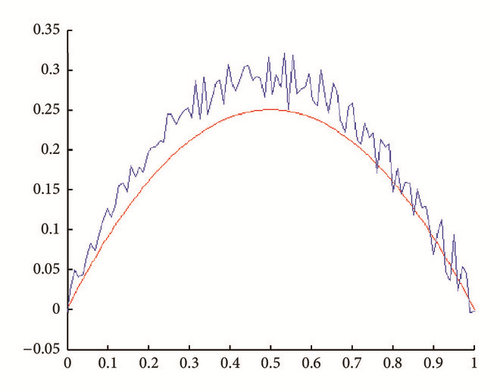

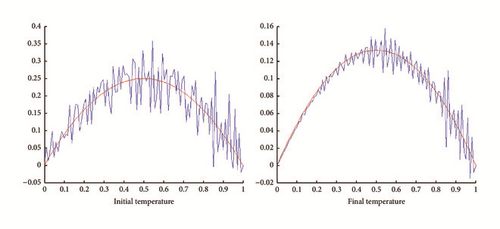

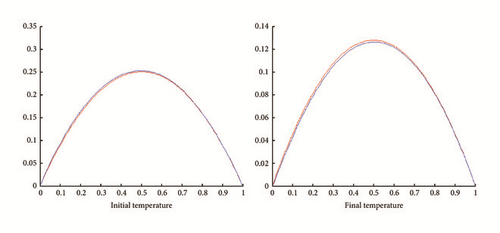

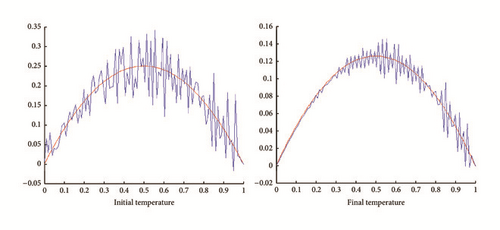

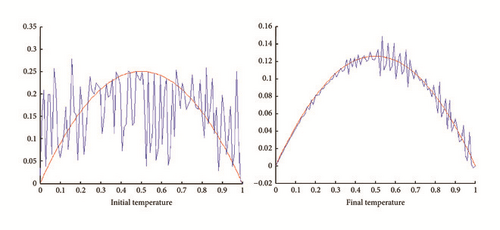

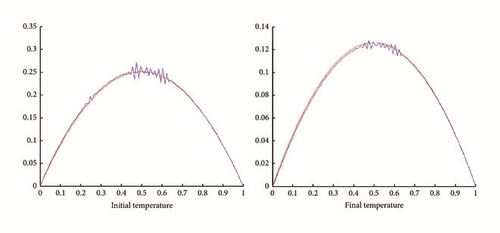

In all figures, the observed function is drawn in red and built function in blue.

Let N be number of points in space and M number of points in time.

6.1.1. The Noncoercive Case

Let α = 1/2, λ = 0 and parameters N = 100, M = 100.

(i) Tests with err = 0. See Figures 1, 2, 3, and 4.

(ii) Tests with err ≠ 0. In Figures 5, 6, 7, and 8, is drawn in red and ψ0 (rebuilt initial condition) in blue.

6.1.2. Sub Critical Potential Case

Let α = 1/2, λ = −(1 − α)2/4, β = 3/4 and the parameters N = 100, M = 100.

(i) Tests with err = 0. See Figures 9, 10, 11, and 12.

(ii) Tests with err ≠ 0. See Figures 13, 14, 15, and 16.

6.2. Hybrid Algorithm

-

(1) Initialize a population of individuals;

-

(2) Evaluate each individual in the population;

-

(3) While the stop criterion is not reached do

-

{

-

(4) Select individuals for the next population;

-

(5) Apply genetic operators (crossover, mutation) to produce new individuals;

-

(6) Evaluate the new individuals;

-

}

-

(7) return the best individual.

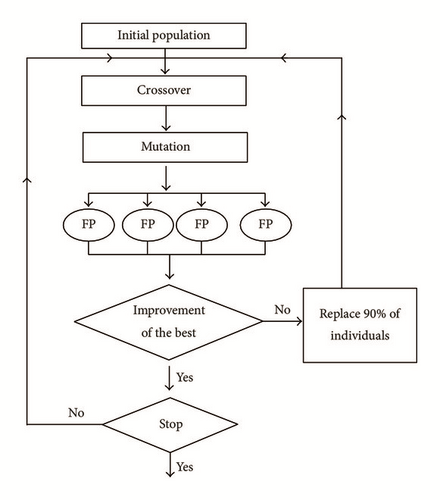

The hybrid methods combine principles from genetic algorithms and other optimization methods. In this approach, we will combine the genetic algorithm with method descent (steepest descent algorithm (FP)).

We assume that we have a partial knowledge of background state ψb at certain points (xi) i∈I, I ⊂ {1,2, …, N + 1}.

We assume the individual is a vector ψ0; the population is a set of individuals.

-

let αi, β ∈ [0,1[ and α > 0.

-

if

-

tk = αi and stop

-

if not

-

αi = ααi.

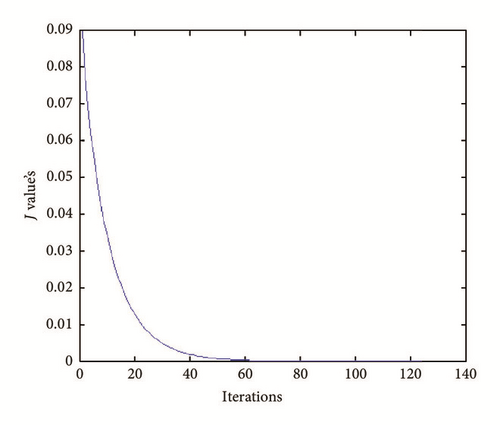

Consider we know 20% of values of background state (ψb), in this test we try to build the solution with the hybrid method.

In the figures below, the observed function is drawn in red and built function in blue.

Let N be number of points in space and M number of points in time.

6.2.1. The Noncoercive Case

Let α = 1/2, λ = 0 and parameters N = 100, M = 100, number of individuals = 30, and number of generations = 2000.

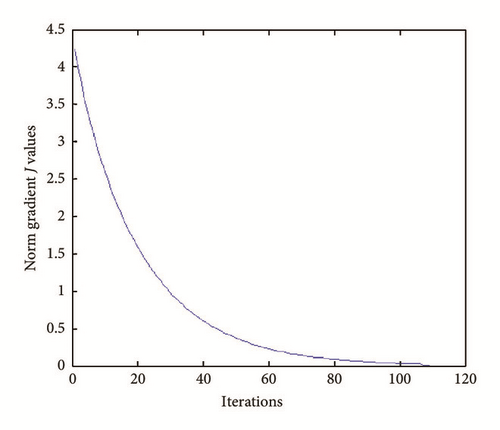

The test with simple descent gives Figure 18.

The test with genetic algorithm gives Figure 19.

Now we turn the hybrid algorithm. This gives Figure 20.

6.2.2. Subcritical Potential Case

Let α = 1/2, λ = −(1 − α)2/4, β = 3/4 and parameters N = 100, M = 100, number of individuals = 30, and number of generations = 2000.

The test with simple descent gives Figure 21.

The test with genetic algorithm gives Figure 22.

Now we turn the hybrid algorithm. This gives Figure 23.

6.3. Comparison between Hybrid Approach and Tikhonov Approach

Here, we assume that we know 20% of values of background state (ψb).

(i) Noncoercive Case. see Tables 1 and 2.

| ε | Minimum value of J | Elapsed time (seconds) |

|---|---|---|

| 10−08 | 1.106317 · 10−02 | 8.38 |

| 10−07 | 1.092014 · 10−02 | 24.47 |

| 10−06 | 6.630517 · 10−03 | 23.11 |

| 10−05 | 7.752620 · 10−03 | 21.50 |

| 10−04 | 7.857129 · 10−03 | 22.64 |

| 10−03 | 8.510799 · 10−03 | 18.95 |

| 10−02 | 8.733989 · 10−03 | 15.01 |

| 10−01 | 1.018406 · 10−02 | 17.33 |

| 1 | 1.552344 · 10−02 | 6.04 |

| Minimum value of J | Elapsed time |

|---|---|

| 6.581908 · 10−03 | 1 min |

| 5.850810 · 10−03 | 2 min |

| 3.362100 · 10−04 | 7 min |

| 1.071378 · 10−04 | 11 min |

| 8.739839 · 10−05 | 23 min |

| 5.958016 · 10−05 | 6 h 43 min |

| 6.175260 · 10−06 | 11 h 20 min |

The minimum value of J reached by the Tikhonov algorithm was 6.630517 · 10−03, whereas with the hybrid algorithm it was possible to reach the value 6.175260 · 10−06 in 11 h and 20 min with knowledge of 20% of ψb; if we take more than 20%, we got less than elapsed time.

(ii) Subcritical Potential Case. see Tables 3 and 4.

| ε | Minimum value of J | Elapsed time (seconds) |

|---|---|---|

| 10−08 | 1.113538 · 10−02 | 10.91 |

| 10−07 | 1.188092 · 10−02 | 8.68 |

| 10−06 | 1.099187 · 10−02 | 8.75 |

| 10−05 | 1.267204 · 10−02 | 7.91 |

| 10−04 | 9.648060 · 10−03 | 11.17 |

| 10−03 | 7.320995 · 10−03 | 11.61 |

| 10−02 | 9.188603 · 10−03 | 10.20 |

| 10−01 | 9.799159 · 10−03 | 9.57 |

| 1 | 1.042463 · 10−02 | 9.66 |

| Minimum value of J | Elapsed time |

|---|---|

| 7.605018 · 10−03 | 1 min |

| 7.505279 · 10−03 | 2 min |

| 1.762564 · 10−03 | 4 min |

| 9.407809 · 10−04 | 43 min |

| 2.981666 · 10−05 | 1 h 56 min |

| 1.378356 · 10−05 | 6 h 43 min |

| 8.203546 · 10−06 | 13 h 40 min |

The minimum value of J reached by the Tikhonov algorithm was 7.320995 · 10−03, whereas with the hybrid algorithm it was possible to reach the value 8.203546 · 10−06 in 13 h and 40 min with knowledge of 20% of ψb; if we take more than 20%, we got less than elapsed time.

7. Conclusion

In this paper, we have presented the regularization method and the hybrid method which are applied to determine an initial state from the point of measurements of parabolic degenerate/singular problem. These methods have proven efficiency to rebuild the solution. The proposed reconstruction algorithms are easily implanted.

The elapsed time of the hybrid method is long enough. To reduce it, in our coming work we will use the multiprogramming to run two approaches of parallelism.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.