Adaptive Algorithm for Multichannel Autoregressive Estimation in Spatially Correlated Noise

Abstract

This paper addresses the problem of multichannel autoregressive (MAR) parameter estimation in the presence of spatially correlated noise using a steepest descent (SD) method that combines low-order and high-order Yule-Walker (YW) equations. In addition, to obtain an unbiased estimate of the MAR model parameters, we apply inverse filtering to estimate the noise covariance matrix. In a simulation study, the performance of the proposed unbiased estimation algorithm is evaluated and compared with that of existing parameter estimation methods.

1. Introduction

Noisy MAR modeling has many applications, such as high-resolution multichannel spectral estimation [1], parametric multichannel speech enhancement [2], MIMO-AR time-varying fading channel estimation [3], and adaptive signal detection [4].

When noise-free observations are available, the Nuttall-Strand method [5], the maximum likelihood (ML) estimator [6], and multichannel extensions of some standard scalar schemes can be used to estimate the MAR model parameters. The relevance of the Nuttall-Strand method is explained in [7] through a comparative study of these methods. However, noise-free MAR estimation methods are sensitive to additive noise in the MAR process, which limits their utility [1].

The modified Yule-Walker (MYW) method is a conventional method for noisy MAR parameter estimation. It uses correlation estimates at lags beyond the AR order [1]. The MYW method is perhaps the simplest one from the computational point of view [8], but it exhibits poor estimation accuracy and relatively low efficiency because it relies on large-lag autocovariance estimates [8]. Moreover, numerical instability may occur when it is used for online parameter estimation [9].

The least-squares (LS) method is another approach to noisy MAR parameter estimation. However, additive noise causes the LS estimates of the MAR parameters to be biased. In [10], an improved LS (ILS) based method was developed for the estimation of noisy MAR signals. In this method, bias correction is performed using an estimate of the observation noise covariance.

The method proposed in [10], denoted the vector ILS-based (ILSV) method, is an extension of Zheng’s method [11] to the multichannel case. In the ILSV method, the channel noises can be correlated, and no constraint is imposed on the covariance matrix of the channel noises. Nevertheless, this method converges poorly when the SNR is low.

In [12], the ILSV algorithm is modified using the symmetry property of the observation covariance matrix; the resulting algorithm is named the advanced least square vector (ALSV) algorithm. A step called symmetrisation is added to the ILSV algorithm to estimate the observation noise covariance matrix.

In [13, 14], methods based on errors-in-variables and on cross-coupled Kalman and H∞ filters are suggested, respectively. These methods are also extensions of the scalar methods presented in [15] and [16, 17], respectively. They assume that the observation noise covariance matrix is diagonal, which means that the channel noises are uncorrelated. They are therefore not suitable for the spatially correlated noise case.

Note that the cross-coupled filter idea consists of using two mutually interactive filters. Each time a new observation is available, the signal is estimated using the latest estimate of the parameters, and conversely the parameters are estimated using the latest a posteriori signal estimate.

In this paper, we combine low-order and high-order YW equations in a new scheme and use an adaptive steepest descent (SD) algorithm to estimate the parameters of the MAR process. We also apply the inverse filtering idea to estimate the observation noise covariance matrix. In addition, we analyze the convergence of the proposed method theoretically. Using computer simulations, the performance of the proposed algorithm is evaluated and compared with that of the LS, MYW, ILSV, and ALSV methods in the spatially correlated noise case. We do not compare with [13, 14] because those methods assume that the channel noises are uncorrelated.

The proposed estimation algorithm provides more accurate parameter estimates, and its convergence behaviour is better than that of the ILSV and the ALSV methods.

2. Data Model

(i) The AR order p and the number of channels m are known.

(ii) The MAR parameter matrices Ai are constrained such that the roots of

()

(iii) The observation noise w(n) is uncorrelated with the input noise e(n); that is, E{w(n)e(k)^T} = 0 for all n and k.

The main objective in this paper is to estimate A, Σw, and Σe using the given samples {y(1), y(2), …, y(N)}.
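As an illustration of the estimation setting, the following minimal sketch generates samples y(n) = x(n) + w(n) from a noisy MAR process with a nondiagonal Σw. The recursion x(n) = Σ Ai x(n − i) + e(n) (in particular its sign convention), the Gaussian noise, the function name `simulate_noisy_mar`, the `burn_in` parameter, and all numerical values are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def simulate_noisy_mar(A, sigma_e, sigma_w, N, burn_in=500, seed=0):
    """Generate N samples of y(n) = x(n) + w(n) for a noisy MAR(p) process.

    Assumed convention (hypothetical, not confirmed by the source):
        x(n) = sum_{i=1..p} A[i-1] @ x(n-i) + e(n)
    with zero-mean Gaussian e(n) and w(n) that are mutually uncorrelated.
    """
    rng = np.random.default_rng(seed)
    p = len(A)
    m = A[0].shape[0]
    total = N + burn_in
    # Input noise e(n) and observation noise w(n) are drawn independently
    e = rng.multivariate_normal(np.zeros(m), sigma_e, size=total)
    w = rng.multivariate_normal(np.zeros(m), sigma_w, size=total)
    x = np.zeros((total, m))
    for n in range(total):
        for i in range(1, p + 1):
            if n - i >= 0:
                x[n] += A[i - 1] @ x[n - i]
        x[n] += e[n]
    y = x + w
    # Discard the initial transient so the returned samples are near-stationary
    return y[burn_in:], x[burn_in:]

# Hypothetical example values (p = 2, m = 2), chosen only to give a stable model
A = [np.array([[0.5, 0.1], [0.0, 0.4]]),
     np.array([[-0.2, 0.0], [0.1, -0.1]])]
sigma_e = np.eye(2)
sigma_w = np.array([[1.0, 0.5], [0.5, 1.0]])  # nondiagonal: spatially correlated
y, x = simulate_noisy_mar(A, sigma_e, sigma_w, N=1000)
```

Only `y` would be available to an estimator; `x` is returned here so that the simulated observation noise y − x can be inspected.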

3. Steepest Descent Algorithm for Noisy MAR Models

The proposed estimation algorithm uses the steepest descent method. It iterates between the following two steps until convergence: (I) given the noise covariance matrix, estimate the MAR matrices using the steepest descent method; (II) given the MAR matrices, estimate the noise covariance matrix based on the inverse filtering idea.

3.1. MAR Parameter Estimation Given Observation Noise Covariance Matrix

3.2. Application of Inverse Filtering for Estimation of Observation Noise Covariance Matrix Given MAR Matrices

Now, we assume that Σw and A are known and derive an estimate for Σe.

3.3. The Algorithm

The results derived in the previous subsections are summarized in a recursive algorithm for estimating Σw and the Ai’s (i = 1, 2, …, p). The improved least-squares algorithm for multichannel processes based on inverse filtering (IFILSM) is as follows.

Step 1. Compute autocovariance estimates by means of given samples {y(1), y(2), …, y(N)}:

Step 2. Initialize i = 0, where i denotes the iteration number. Then, compute the estimate

Step 3. Set i = i + 1 and calculate the estimate of the observation noise covariance matrix:

Step 4. Perform the bias correction

Step 5. If

Step 6. Estimate Σe via (33):
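The autocovariance computation in Step 1 can be sketched as follows. This is a minimal illustration that assumes zero-mean data, the lag convention R(k) ≈ E{y(n)y(n − k)^T}, and the biased 1/N normalization; the function name `sample_autocovariances` and the parameter `max_lag` are hypothetical, and the lags actually required by the algorithm are those appearing in its low-order and high-order YW equations.

```python
import numpy as np

def sample_autocovariances(y, max_lag):
    """Return R with R[k] approximating E{y(n) y(n-k)^T} for k = 0..max_lag.

    y is an (N, m) array whose rows are the samples y(1), ..., y(N).
    Assumes zero-mean data and the biased 1/N normalization.
    """
    y = np.asarray(y, dtype=float)
    N, m = y.shape
    R = np.empty((max_lag + 1, m, m))
    for k in range(max_lag + 1):
        # Sum of outer products y(n) y(n-k)^T over the N - k available pairs
        R[k] = y[k:].T @ y[:N - k] / N
    return R

# Small usage example with three two-channel samples
y_demo = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
R_demo = sample_autocovariances(y_demo, max_lag=1)
```

The 1/N normalization is one common choice; dividing by N − k instead gives an unbiased estimate at each lag but does not guarantee a positive semidefinite covariance sequence.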

4. Simulation Results

In the simulation study, we compare the IFILSM method with the other existing methods. Two examples are considered: the first is frequency estimation, and the second is synthetic noisy MAR model estimation. In all simulations, we set μ = 1 for the proposed IFILSM algorithm.

4.1. Estimating the Frequency of Multiple Sinusoids Embedded in Spatially Colored Noise

Example 1. We consider N = 500 data samples of a signal comprised of two sinusoids corrupted with spatially colored noise. Consider y(n) as follows:

| Method | MSE1 (dB), SNR = 5 dB | MSE2 (dB), SNR = 5 dB | MSE1 (dB), SNR = 10 dB | MSE2 (dB), SNR = 10 dB |
| --- | --- | --- | --- | --- |
| IFILSM | −42.18 | −47.04 | −51.11 | −60.06 |
| ILSV | −32.64 | −41.91 | −42.22 | −50.51 |
| ALSV | −32.35 | −42.76 | −42.33 | −51.36 |
| LS | −5.75 | −23.02 | −32 | −31.27 |
| MYW | −37.58 | −45.05 | −46.82 | −51.94 |

4.2. Synthetic MAR Processes

Example 2. Consider a noisy MAR model with p = 2, m = 2, and coefficient matrices

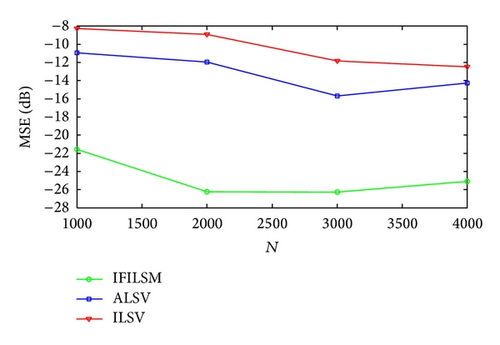

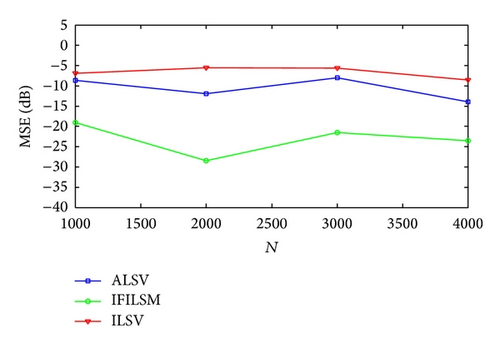

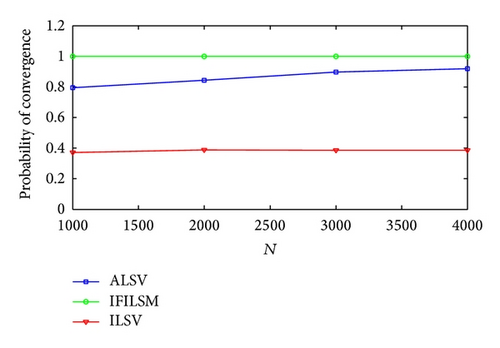

In Case 1 and Case 2, the SNR for each channel is set to 15 and 10 dB, respectively. In this example, the number of data samples N is varied from 1000 to 4000 in steps of 1000. The MSE and P values are plotted in Figures 1, 2, and 3 versus N, respectively. The probability of convergence is one for all algorithms in Case 1, so that figure is not plotted here. From the figures, we can see that the accuracy and the convergence of the IFILSM method are better than those of the ILSV and ALSV methods. Note that the ILSV and ALSV methods have poor convergence when the SNR is lower than or equal to 10 dB. It can also be seen from the figures that the performance of all algorithms is constant over the sample size, because the autocovariance estimates of the observations do not change in this range of data sample sizes.

5. Conclusion

A steepest descent (SD) algorithm is used for estimating the parameters of noisy multichannel AR models. The observation noise covariance matrix is nondiagonal; that is, the channel noises are assumed to be spatially correlated. The inverse filtering idea is used to estimate the observation noise covariance matrix. Computer simulations showed that the proposed method has better accuracy and convergence than the ILSV and the ALSV methods.

Conflict of Interests

The author declares that there is no conflict of interests regarding the publication of this paper.

Appendix

The following result gives the convergence condition of the proposed algorithm.

Theorem A.1. A necessary and sufficient condition for the convergence of the proposed algorithm is that the step size parameter μ satisfies the following condition:

Proof. Defining the estimation error matrix x(i) = A(i) − A and substituting A − AH − RH1RwA = 0 into (24), we obtain

If −1 < 1 − μ(1 − λi) < 1 for every eigenvalue λi, then lim_{i→∞} x(i) = 0. Each eigenvalue therefore gives the condition 0 < μ < 2/(1 − λi), and the intersection of these intervals over all eigenvalues is 0 < μ < 2/λmax(I − RH1Rw) = 2/(1 − λmin).
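The step size bound can be checked numerically with the following minimal sketch. It assumes an error recursion of the form x(i + 1) = (I − μ(I − M))x(i), where M is a hypothetical stand-in for the matrix whose eigenvalues are the λi in the proof, and it assumes that these eigenvalues are real and smaller than one. The function names and the example matrix are illustrative only.

```python
import numpy as np

def step_size_upper_bound(M):
    """Return 2 / (1 - lambda_min), with lambda_min the smallest eigenvalue of M.

    Assumes the eigenvalues of M are real and smaller than one.
    """
    lam = np.linalg.eigvals(M)
    lam_min = np.min(lam.real)
    return 2.0 / (1.0 - lam_min)

def error_norm_after(M, mu, iters=200):
    """Iterate x(i+1) = (I - mu (I - M)) x(i) from x(0) = ones; return ||x(iters)||."""
    n = M.shape[0]
    G = np.eye(n) - mu * (np.eye(n) - M)
    x = np.ones((n, 1))
    for _ in range(iters):
        x = G @ x
    return float(np.linalg.norm(x))

# Hypothetical symmetric example matrix with eigenvalues in (0, 1)
M = np.array([[0.3, 0.1], [0.1, 0.2]])
mu_max = step_size_upper_bound(M)

inside = error_norm_after(M, mu=1.0)             # step size within the bound
outside = error_norm_after(M, mu=1.05 * mu_max)  # step size just beyond the bound
```

In this example the error norm decays toward zero for μ = 1 and grows when μ slightly exceeds the bound, which matches the condition derived above.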