A Simple Gaussian Measurement Bound for Exact Recovery of Block-Sparse Signals

Abstract

We present a probabilistic analysis on conditions of the exact recovery of block-sparse signals whose nonzero elements appear in fixed blocks. We mainly derive a simple lower bound on the necessary number of Gaussian measurements for exact recovery of such block-sparse signals via the mixed l2/lq (0 < q ≤ 1) norm minimization method. In addition, we present numerical examples to partially support the correctness of the theoretical results. The obtained results extend those known for the standard lq minimization and the mixed l2/l1 minimization methods to the mixed l2/lq (0 < q ≤ 1) minimization method in the context of block-sparse signal recovery.

1. Introduction and Main Results

for block-sparse signal recovery, as a generalization of the standard lq minimization, where . Similar to the standard lq minimization problem, (6) is also a nonconvex problem for any 0 < q < 1, and finding its global minimizer is in general computationally impossible. However, it is well known that there are several efficient heuristic methods to compute local minimizers of the standard lq (0 < q ≤ 1) minimization problem; say, for example, [18, 22]. One can generalize those approaches to solve the mixed l2/lq minimization problem (6). In particular, we will adopt the iteratively reweighted least squares techniques in this paper.

In [17], Chartrand and Staneva conducted a detailed analysis of the lq (0 < q ≤ 1) minimization approach for the nonblock sparse recovery problem. They derived a lower bound of Gaussian measurement for exact recovery of a nonblock sparse signal. Furthermore, Eldar and Mishali [8] also provided a lower bound on block-sparse signal recovery in the Gaussian measurement ensemble for the mixed l2/l1 minimization. Along this line, we will provide in this paper a lower bound of Gaussian measurements for exact recovery of block-sparse signal through the mixed l2/lq (0 < q ≤ 1) norm minimization. The obtained results will complement the results of [8, 17] and demonstrate particularly that the block version of lq (0 < q ≤ 1) minimization can reduce the number of Gaussian measurements necessary for the exact recovery as q decreases.

To introduce our results, we first state the definition of block-RIP [8] as follows.

Definition 1 (see [8].)Let be an M × N measurement matrix. One says that Φ has the block-RIP over (then N = md) with constant if for every block k-sparse signal over such that

This new definition of block-RIP is crucial for our analysis of the mixed l2/lq (0 < q ≤ 1) minimization method. For convenience, we still use δk instead of , henceforth to represent the block-RIP constant of order k. With this notion, we can prove the following sufficient condition for exact recovery of a block k-sparse signal.

Theorem 2. Let be an arbitrary block k-sparse signal. For any given q ∈ (0,1], if the measurement matrix has the block-RIP (7) with constant

then the mixed l2/lq minimization problem (6) has a unique solution, given by the original signal x.

Condition (8) generalizes the condition of the mixed l2/l1 case in [8] to the mixed l2/lq (0 < q ≤ 1) case. Note that the right-hand side of (8) is monotonically decreasing with q, which shows that decreasing q can get weaker recovery condition. This shows further that fewer measurements might be needed to recover a block k-sparse signal whenever the mixed l2/lq (0 < q < 1) minimization methods are used instead of the mixed l2/l1 minimization method. For clarifying this issue more precisely, we can adopt a similar probabilistic method of [17] to derive a simple lower bound on how many random Gaussian measurements are sufficient for (8) to hold with high probability. The result to be verified is in Section 3.

Theorem 3. Let Φ be a M × N matrix with i.i.d. zero-mean unit variance Gaussian entries and N = md for some integer m. For any given q ∈ (0,1], if

then the subsequent conclusion is true with probability exceeding : Φ satisfies (8); therefore, any block k-sparse signal can be recovered exactly by the solution of the mixed l2/lq minimization problem (6).

More detailed remarks on this bound will be presented in Section 3.

The rest of the paper is organized as follows. In Section 2, we present several key lemmas needed for the proofs of our main results. All these lemmas can be regarded as generalizations of the standard non-block-sparse case to the block-sparse case. The proofs of Theorems 2 and 3 are given in Section 3. Numerical experiments are provided in Section 4 to demonstrate the correctness of the theoretical results. We conclude the paper then in Section 5 with some useful remarks.

2. Fundamental Lemmas

In this section, we establish several lemmas necessary for the proofs of the main results.

Lemma 4 (see [8].)Consider

for all x, x′ supported on disjoint subsets T, T′⊆{1,2, …, N} with |T | < k, |T′ | < k′.

In the next lemma, we show that the probability that block restricted isometry constant δk exceeds a certain scope decays exponentially in certain length of x.

Lemma 5 (see [8].)Suppose Φ is an M × N matrix from the Gaussian ensemble; namely, Φij ~ N(0, 1/M). Let be the smallest value satisfying the block-RIP of Φ over I = {d1 = d, d2 = d, …, dm = d} and N = md for some integer m. Then, for every ϵ > 0, the block restricted isometry constant satisfies the following inequality:

which holds with high probability 2e−mH(kd/N)ϵ, where H(p) = −plog(p)−(1 − p)log(1 − p) (0 < p < 1).

Lemma 6. Suppose Φ is an M × N matrix from the Gaussian ensemble; namely, Φij ~ N(0, 1/M). For any 0 < δ < 1, the following estimation

holds uniformly for with probability exceeding 1 − 2(12/δ) Le−M(1 + δ/2)mH(k/m)/k.

Proof. Note that [23] has verified the subsequent conclusion: if Φ is a random matrix of size M × N drawn according to a distribution that satisfies the concentration inequality , where c0(β) is a constant dependent only on β such that c0(β) > 0 for 0 < β < 1, then, for any set T with |T | = k < n and any 0 < δ < 1, there holds the estimation

with probability exceeding . By Lemma 5, the concentration inequality is clearly true for Gaussian measurement matrix. Thus Lemma 6 follows.

We also need the following inequality.

Lemma 7 (see [24].)For any fixed q ∈ (0,1) and ,

3. Proofs of Theorems

With the preparations made in the last section, we now prove the main results of the paper. Henceforth, we let Φ denote an M × N matrix whose elements are i.i.d. random variables; specifically, Φij ~ N(0, 1/M). We prove Theorem 2 by using the block-RIP and Lemmas 4 and 7.

Proof of Theorem 2. First notice that assumption (8) implies δ2k < 1 and further implies the uniqueness of x satisfying the observation y = Φx. Let x* = x + h be a solution of (6). Our goal is then to show that h = 0. For this purpose, we let xT be the signal that is identical to x on the index set T and to zero elsewhere. Let T0 be the block index set over the k nonzero blocks of x, and we decompose h into a series of vectors such that

Here is the restriction of h onto the set Ti and each Ti consists of k blocks (except possibly TJ). Rearrange the block indices such that , for any j ≥ 1.

From [9], x* is the unique sparse solution of (6) being equal to x if and only if

for all nonzero signal h in the null space of Φ. This is the so-called the null space property (NSP). In order to characterize the NSP more precisely, we consider the following equivalent form: there exists a constant γ(h, q) satisfying 0 < γ(h, q) < 1 such that

We proceed by showing that γ(h, q) < 1 under the assumption of Theorem 2.

In effect, we observe that

For any j ≥ 2, if we denote , , , then we have

By Lemma 7, we thus have

that is,

On the other hand, let

It is easy to see that

Since Φx = Φx*, we have Φh = 0, which means . By the definition of block-RIP, Lemma 4, (22), and (24), it then follows that

By the definition of block-RIP δ2k and by using Hölder’s equality, we then get

This together with (24) and (25) implies

Through a straightforward calculation, one can check that the maximum of f(t) occurs at t0 = (1 − q/2)/(2 − δ2k) and

If f(t0) < 1, then we clearly have γ(h, q) < 1 and finish the proof. However, f(t0) < 1 gives that

or, equivalently,

Note that (30) implies 0 < δ2k < 1/2 and

By simple calculations, we can check that, for any given q ∈ (0,1], inequality (30) is true as long as

that is, condition (8) is satisfied. With this, the proof of Theorem 2 is completed.

Now we adopt the probabilistic methods to analyze how condition (8) can be satisfied; particularly, how many measurements are needed for exact recovery of a block k-sparse signal.

Proof of Theorem 3. From Theorem 2, we only need to show that, under condition (9), (8) holds with high probability. In particular, we will proceed to determine how random Gaussian measurements are sufficient for exact recovery of block k-sparse signals with a failure probability at most . To this end, we let L = 2kd. Then by Lemma 6, an upper bound for the probability that any M × L submatrix of Φ fails to satisfy the block-RIP (30) is

By inequality , it suffices to show that we can have the following estimation:

So by a simple calculation,

Remark 8. From the right-hand side of (35), we can further see that, except the first term related to m/k, other terms in the numerator are only related to k and d, that is, to the numbers of nonzero blocks and the block size. Obviously, those terms have a smaller contribution to the number of measurements. Basically, Theorem 3 shows that the signal x with block-sparsity ‖x‖2,0 ≤ k can be recovered exactly by the solution of the mixed l2/lq minimization (6) with high probability provided roughly

Obviously, this result generalizes the well-known result in [8] on the Gaussian ensemble for the mixed l2/l1 minimization. We can further see from Theorem 3 that, when specified to q = 1, the bound (36) has the same order as Eldar’s result (O(klog(m/k)), see [8]). It is also obvious that C(q) is monotonically increasing with respect to q ∈ (0,1], which then implies that decreasing q allows fewer necessary measurements for exact recovery of block-sparse signals by the mixed l2/lq minimization method. Since, when block size d = 1, the mixed l2/lq minimization method degenerates to the standard lq minimization method, the presented result then provides a theoretical support to such experimental observation reported in the following section.

4. Numerical Experiments

With this, the IRLS algorithm we used can be summarized as follows (more details can be found in [11]).

Step 1. Set the iteration count t to zero and ϵ0 = 1. Initialize .

Step 2. Let

and update

Step 3. Terminate the iteration on convergence or when t attains a specified maximum number tmax.

Otherwise, set t = t + 1 and go to Step 2.

The above algorithm can be seen as a natural generalization of general sparse signal recovery algorithm to the block-sparse setting, which alternates between estimating x and redefining the weighting matrix W. Though this algorithm is for the unconstrained penalized problem (37), it can still give a good estimation on the minimum of the constrained problem (6) when we choose a sufficient small τ (such as τ = 10−6). In the following experiments, we set τ = 10−6 and terminate the algorithm if ϵ < 10−7 or .

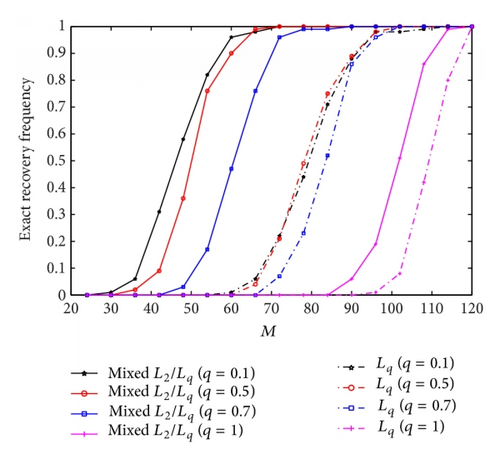

In our first experiment, we took the signal length N = 128, the block size d = 4, and the block-sparsity k = 6. For independent 100 trails, we first randomly generated the block-sparse signal x with values from a Gaussian distribution of mean 0 and standard deviation 1, and then we randomly drew a measurement matrix Φ from Gaussian ensemble. We also took the number of measurements varying from 24 to 124. The purpose of the experiment was then to check the correctness of Theorem 3. We considered four different values of q = 0.1,0.5,0.7,1 for both the mixed l2/lq minimization method and the standard lq minimization method.

The experiment result is shown in Figure 1. It is seen from Figure 1 that, for all the experiment runs, the smaller q requires the smaller number of measurements for exact recovery of block sparse signals. This observation is consistent with Theorem 3. On the other hand, for a fixed q, the mixed l2/lq method is clearly superior to the standard lq method in this block-sparse setting. For instance, the mixed l2/lq method with q = 0.1 only uses 50 Gaussian measurements for the exact recovery, while the number of measurements needed for lq method is around 90. This observation also partially supports the theoretical assertion of Theorem 3, namely, that incorporating the block structure into recovery method requires fewer measurements for exact recovery.

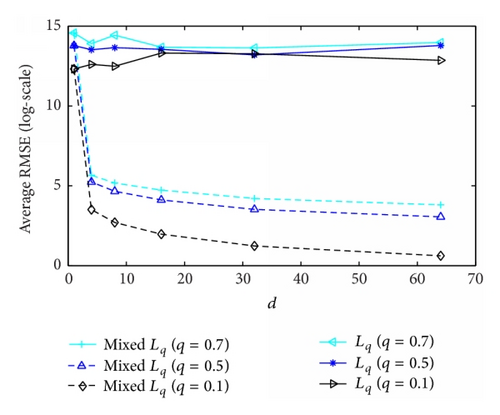

In our second experiment, we further studied the effect of block size d for block-sparse signal recovery. We set N = 256 and drew a measurement matrix of size 128 × 256 from Gaussian ensemble. In this experiment, the block size d was changed while keeping the total sparsity kd = 64 fixed. Figure 2 shows the average root mean squares error (RMSE). Here RMSR is defined as in the logarithmic scale over 100 independent random runs. One can easily see from Figure 2 that the recovery performance for the standard lq method is independent of the active block number k, while the recovery errors for the mixed l2/lq method are significantly better than the standard lq method when the active block number k is far smaller than the total signal sparsity kd. Since the total sparsity kd is fixed and larger d leads to smaller k, as predicted by Theorem 3, the necessary measurement number needed for exact recovery of a block k-sparse signal by the mixed method is also reduced. This also demonstrates the advantage of the mixed l2/lq method over the standard lq method.

5. Conclusion

In this paper, the number of Gaussian measurements necessary for the exact recovery of a block-sparse signal by the mixed l2/lq (0 < q ≤ 1) norm minimization has been studied. The main contribution is the derivation of a lower bound on the necessary number of Gaussian measurements for the exact recovery based on a probabilistic analysis with block-RIP. The obtained results are helpful for understanding the recovery capability and algorithm development of the mixed l2/lq norm minimization approach for sparse recovery and, particularly, facilitate the applications in the development of block-sparse information processing.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Acknowledgment

This work has been supported by the Natural Science Foundation of China under Grant nos. 61303168, 61273020, and 11171272.