Vision Target Tracker Based on Incremental Dictionary Learning and Global and Local Classification

Abstract

Based on sparse representation, a robust global and local classification algorithm for visual target tracking in uncertain environment was proposed in this paper. The global region of target and the position of target would be found, respectively by the proposed algorithm. Besides, overcompleted dictionary was obtained and updated by biased discriminate analysis with the divergence of positive and negative samples at current frame. And this over-completed dictionary not only discriminates the positive samples accurately but also rejects the negative samples effectively. Experiments on challenging sequences with evaluation of the state-of-the-art methods show that the proposed algorithm has better robustness to illumination changes, perspective changes, and targets rotation itself.

1. Introduction

Visual target tracking in uncertain environment is an important component in the field of computer vision [1]. In uncertain scene, the negative impact on quality of target is mainly caused by occlusion, pose changes, significant illumination variations, and so on. Therefore, discrimination method with a strong robustness against the target and environment changes is required for accurate tracking. Visual target tracking can be treated as a binary classification problem between targets and backgrounds, target candidate set of which is established by affine transformation, and classifier is then used to discriminate the target from candidate set [2]. Therefore, classifier should be not only well discriminated to targets but also capable of rejecting the discrimination of background feature and even has better robustness to occlusions, pose changes, and illumination variations.

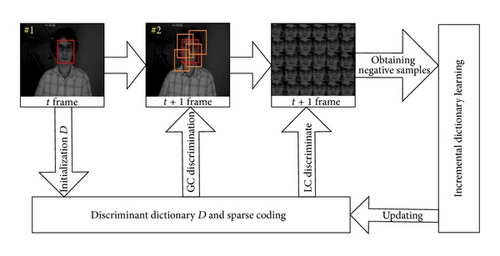

In this paper, an incremental tracking algorithm was proposed for resolving the target appearance variations and occlusions problems. The system chart as Figure 1. Object is represented with global and local sparse representation, and tracking task is formulated as sparse representation binary classification problem with dictionary incremental learning. For object representation, targets are treated as positive samples, whereas backgrounds are treated as negative samples. Then positive and negative samples are used to establish the discriminatory dictionary, where target appearance model is treated as linear combination with discriminatory dictionary and sparse coding. In the first frame, targets are affine-transformed to affine transformation subspace, where the target is found with the minimum reconstruction error. Global classifier with sparse representation is established to determine the global region of target from center-point collection, while sparse representation local classifier is used to set up discrimination to find the target location from global region. As we know, the appearance of the target itself and the external scenes vary in real time, so dictionary needs to be updated with features of the next frame by incremental learning to ensure tracking result accurately.

The rest of the paper is organized as follows. Section 2 reviews the related works. Section 3 proposes target motion model and sparse representation globe classifier and local classifier algorithm. Furthermore, dictionary learning and incremental updating are introduced in Section 4. Section 5 reports the experimental results. Finally, the conclusions are summarized in Section 6.

2. Related Works

Currently, according to the target appearance model, target tracking methods can be divided into two categories: generated and discriminative model methods. The generated model method use appearance model to replace the target observed template, tracking result get from the highest similarity search with the appearance model of the area. For example, the mean-shift [3] and the incremental tracking [4]. In [4], in order to make the algorithm adapt to the real-time changes of target appearance effectively, the target appearance models are incremental learned by a group of low-dimensional subspaces. Discriminative model method: cast the tracking as a binary classification problem. Tracking is formulated as finding the target location that can accurately separate the target from the background. In [5], online multiple instance learning methods improve the robustness of the target tracking system to the influence of occlusion. In [6], visual object tracking is construed as a numerical optimization problem and applies cluster analysis to the sampled parameter space to redetect the object and renew the local tracker. In [7], an ensemble of weak classifiers is trained online to distinguish between the object and the background, and the weak classifiers are combined into a strong classifier using AdaBoost; then, the strong classifier is used to label pixels in the next frame as either belonging to the object or the background.

Some scholars have access to a stable target tracking system, which takes advantage of sparse representation classification model for target tracking. In [8], the set of trivial template are constructed with occlusion and corruption, target candidate is sparsely represented by target and trivial templates at new frames, then, the smallest projection error is taken to find target during tracking. In [9], sample-based adaptive sparse representation is proposed to address partial occlusion or deterioration; object representations are described as sample basis with exploiting L1-norm minimization. In [10], the paper proposed a dynamic group sparsity and two-stage sparse optimization to jointly minimize the target reconstruction error and maximize the discriminative power. In [11], tracking is achieved by minimum error bound and occlusion detection, the minimum error bound is calculated for guiding particle resampling, and occlusion detection is performed by investigating the trivial coefficients in the L1-norm minimization.

In addition, to resolve the problems that the overcompleted dictionary cannot discriminate sufficiently, Xuemei utilized target template to structure dictionary [T, I, −I] [9, 11]; I and −I are not related unit matrix, and T is a small piece of target templates. In [12], the use of the learning obtained in the sparse representation dictionary is more effective than a preset dictionary. In [13] proposed a dictionary learning function, the dictionary was obtained by K-SVD algorithm and linear classifier. In [14], dictionary learning problem is deemed as optimization of a smooth nonconvex over convex sets and proposed an iterative online algorithm that solves this problem by efficiently minimizing at each step a quadratic surrogate function of the empirical cost over the set of constraints. On this basis, paper [15] proposes a new discriminative DL framework by employing the Fisher discrimination and criterion to learn a structured dictionary.

3. Sparse Representation Global and Local Classifier

3.1. Motion Model of Targets

3.2. Sparse Representation Classifier

3.3. Sparse Representation Global and Local Classifier

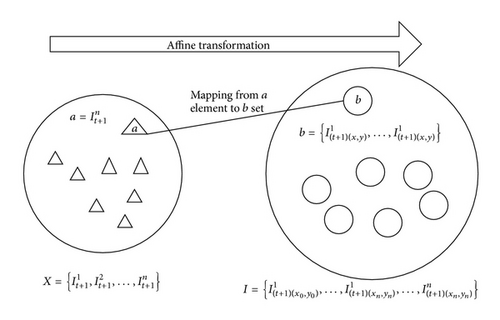

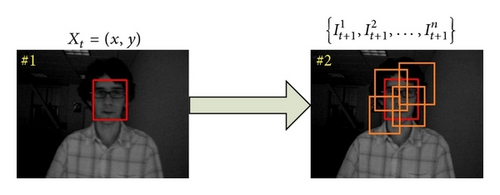

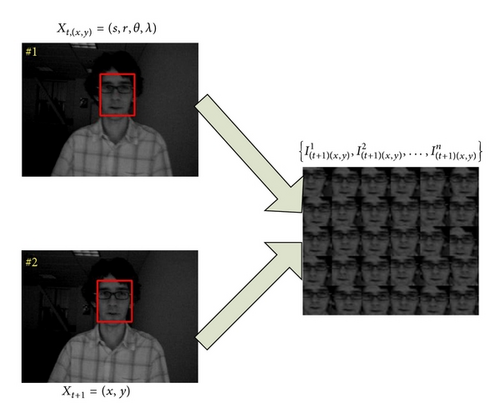

We divided the set of target states Xt = (x, y, s, r, θ, λ) into two parts: Xt = (x, y) and Xt,(x,y) = (s, r, θ, λ), Xt = (x, y) is center coordinates and Xt,(x,y) = (s, r, θ, λ) is local state in time t, is obtained by affine transform from Xt = (x, y), is obtained by affine transform from Xt,(x,y) = (s, r, θ, λ), and the relationship between two sets is as shown in Figure 2.

Each element of the set I can be obtained from the affine transformation of Xt = (x, y, s, r, θ, λ); usually, the element numbers in set I are very large, and computational cost for discriminating the set immediately is the key issue. All of the subsets b in set I can be obtained by the affine transformation of element a in set X illustrated in Figure 3. In order to reduce the computational cost, search element a from set X first, and, then, search element b from the subset that is mapping of element a. However, that implies the need for training the two classifiers to role set X and a collection of I, and the computational cost for classifiers is raised once again. In sparse representation classifier models, the method updating completed dictionary can achieve the purpose of training multiple classification and then reduce the computation cost for the classifiers trained.

The sparse representation local classifier is made by (3). The proposed algorithm is summarized in Algorithm 1.

-

Algorithm 1: Algorithm of Fisher discrimination dictionary learning.

-

Input: It is the tracking result of prior frame, is set of

-

candidate samples credible positions of the center-point coordinates in next

-

frame. D is Over-complete dictionary, T is frame numbers.

-

(1) for t = 1 : T

-

(2) SRGC

-

calculate =

-

(3) calculate identity(y) = arg mini {ei}; where ,

-

(4) obtaining the center point (x, y) from

-

(5) obtaining the by (x, y) and Affine transformation of It

-

(6) SRLC to ;

-

calculate ;

-

(7) calculate identity(y) = arg mini {ei}, where

-

Output:

4. Learning and Incremental Updating for Dictionary

According to the aforementioned code and discriminant function mentioned in last section, the coefficient of αGC and αLC could not have prominent sparsity if overcompleted dictionary has bad discriminant results as well; all of the samples could probably be chosen as the sparsest code, which is bad for the classification performance of reconstruction error function.

4.1. Dictionary Learning Using Biased Discriminant Analysis (BDA)

Give a dictionary D = [d1, d2, …, dn], where di is an n-dimensional vector and is the jth element of ith vector which is called atom of dictionary. A = [A+, A−] is the training sample set, where A+ and A− are the characterized and noncharacterized samples for objective, which are also called plus and minus samples.

Similar to [15], (D, X) is nonconvex for function J which is the convex function on set X when D is already known and also the convex function on set D when X is already known. So, J is in fact a biconvex function on sets D and X.

4.2. Dictionary Incremental Updating

is the old dispersity expression of plus sample set.

In first frames we need initialization; the target is framed manually, the is set of initial moment positive samples, is the set of initial moment negative sample, is the mean value of initial moment positive sample, compute S+ and S− by (6). We initialize all atoms p of dictionary D as random vector with l2-norm, solve X by solving (15), and then fix X and solve D by solving (16).

5. Experiments

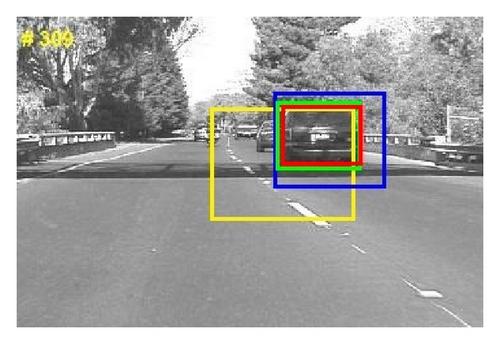

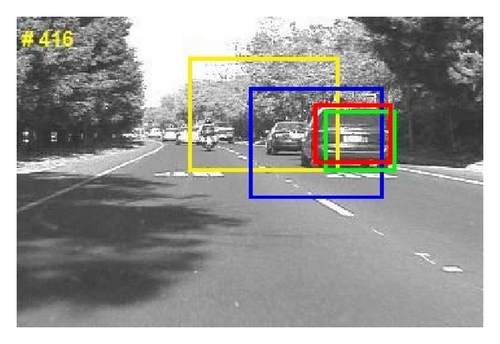

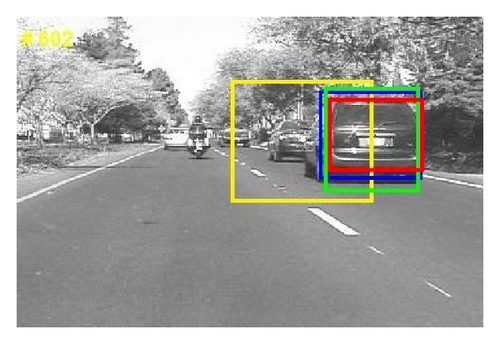

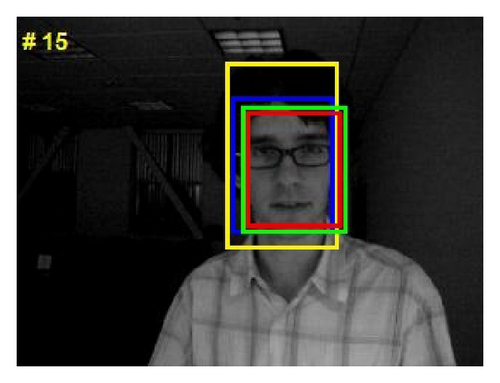

Experiments were performed with four video sequences, which included occlusion, illumination variation, appearance variation and other corruption factors. In the experiment, target location of the first frame is framed manually, and initial dictionaries were randomly generated, and track results were to be released as rectangular boxes. Figures 5, 6, 7 and 8 show a representative sample of tracking results. finally, target tracking method of this paper contrasts with incremental visual tracking (IVT) [4], multiple instance Learning (MIL) [5] and L1 tracker (L1) [8]. To further evaluate the proposed method, each method applies to four video sequences and then compares the track results, that need to be evaluated qualitatively and quantitatively. The paper uses gray histogram as a way of presentation characteristics of each method, and this is easy to test and judge the sensitivity of corruption. Besides, each run of the experiment used the same starting position, initial frame, frame number of video sequences, and software environment.

5.1. Qualitative Comparison

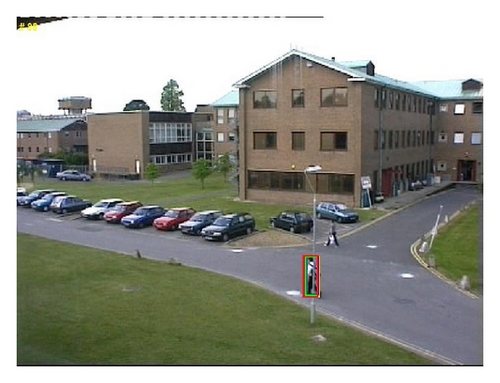

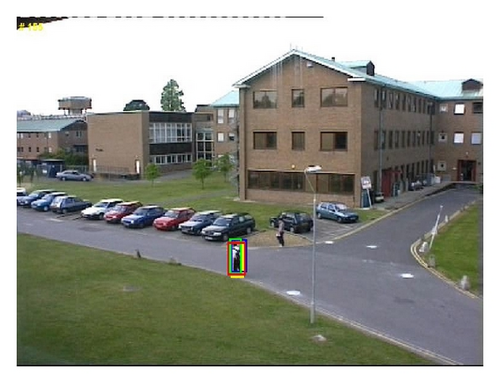

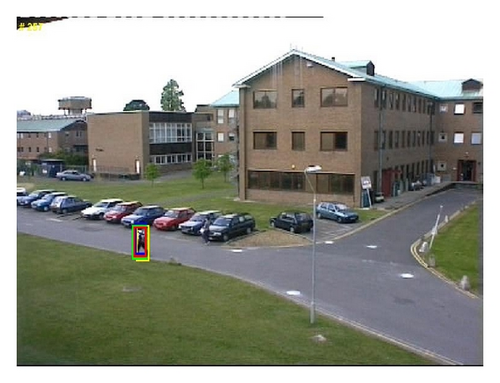

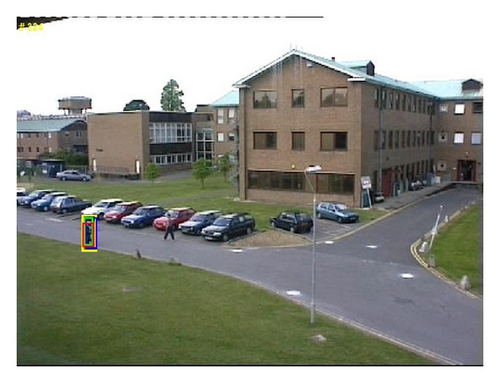

The test sequences, PETS01D1Human1, show that a person went to the extreme left side from the lower right corner of the screen, which telephone poles will cause short-term shelter to it. Tracking results are shown in Figure 5; the image frames are # 49, # 88, # 155, # 257, # 324, and # 405. All methods can effectively track the target, and the tests show that in the circumstances of the same light intensity, the same camera angle, and slight shelter, all methods can effectively track the target. It also indicates that the proposed method in the paper and the contrastive method are effective target tracking algorithms.

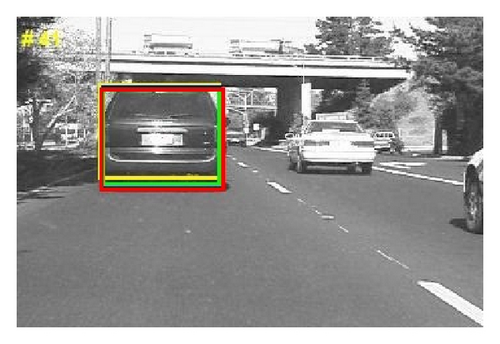

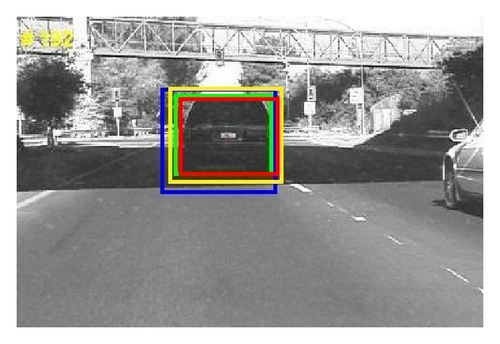

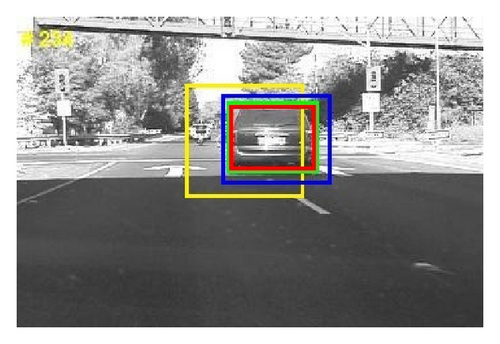

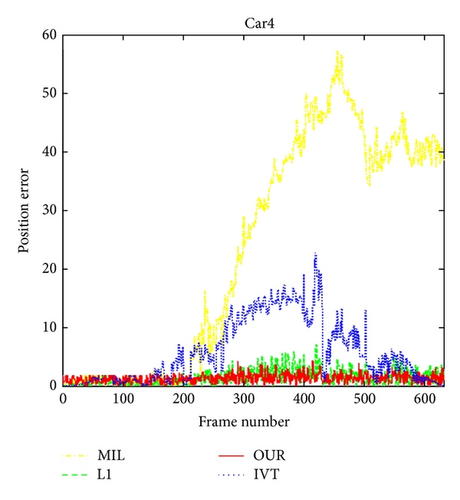

In the Car4 sequence, when the cars pass through the bridge and the shade, intensity of illumination altered obviously. Tracking results are shown in Figure 6; and the image frames are #41, #792, #234, #309, #416, and #602. When the cars go through the bridge, MIL will be ineffective significantly, but will not lose target; IVT will also be ineffective, but it can snap back. The method in this paper and L, compared with MIL and IVT, can locate the target accurately.

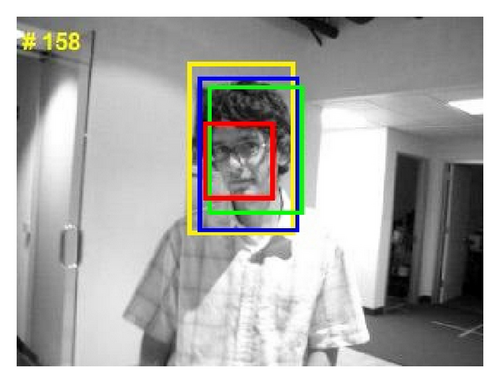

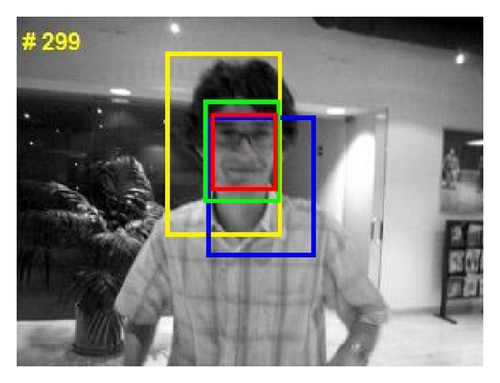

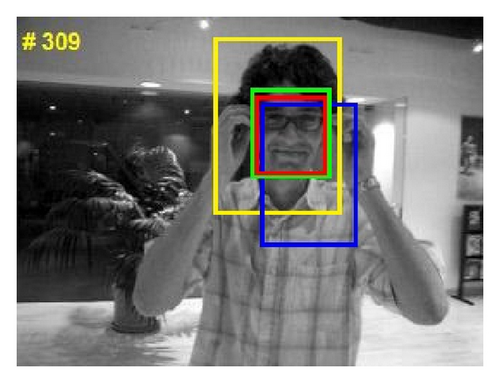

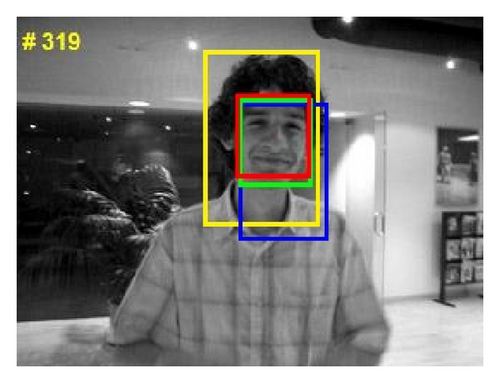

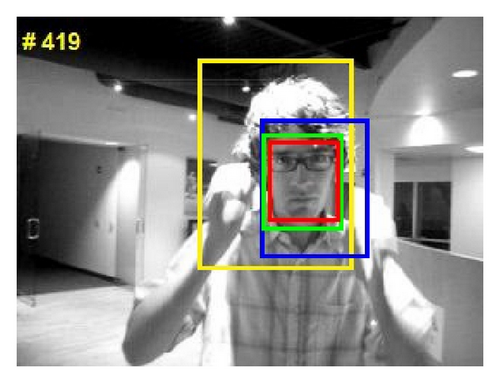

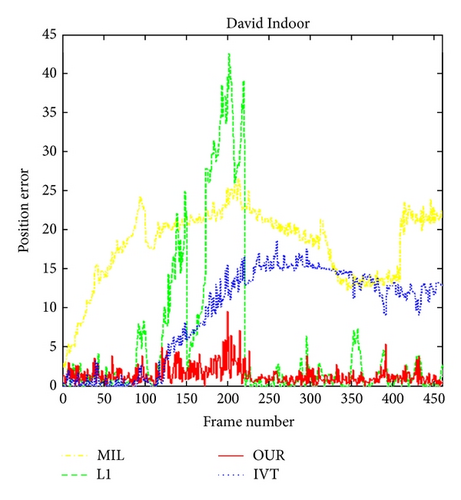

In the David Indoor sequence, the degeneration is include twice illumination change, expression change, and partial occlusion. The track result was shown in Figure 7, and the image frames are #15, #158, #299, #309, #319, and #419. The method in this paper can locate the target accurately; contrastively, the result of L1 is ineffective. The reason is that the target gray histogram was changed by light intensity, that affects the feature of image gray histogram; the methods of MIL and IVT may be more sensitive to the effects.

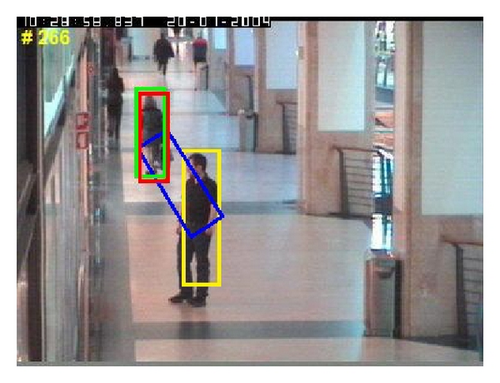

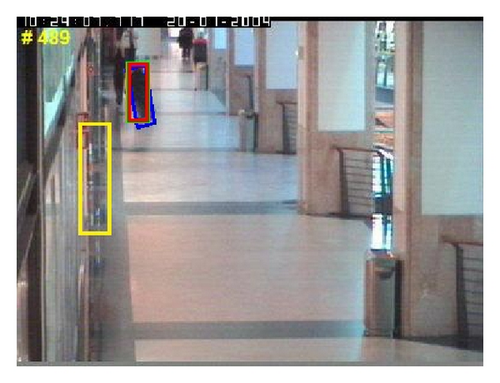

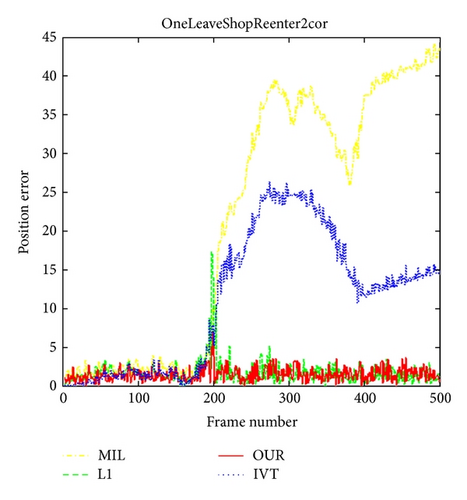

The OneLeaveShopReenter2cor sequence shows a woman walking through a corridor, when a man walks by, which lead to large occlusions Clothes with similar color are the occluder. The track result was shown in Figure 8; the image frames are #47, #198, #266, #374, #380, and #489. The method in this paper and L1 can locate the target accurately. When occlusion happened, MIL put the occluder as target and missed the target; The target is similar with the occluded,and then the IVT is difficult to discriminate object and occluded.

In conclusion, both the method in this paper and L1 can locate the target accurately. And they have strong robustness for occlusions, pose changes, significant illumination variations, and so forth.

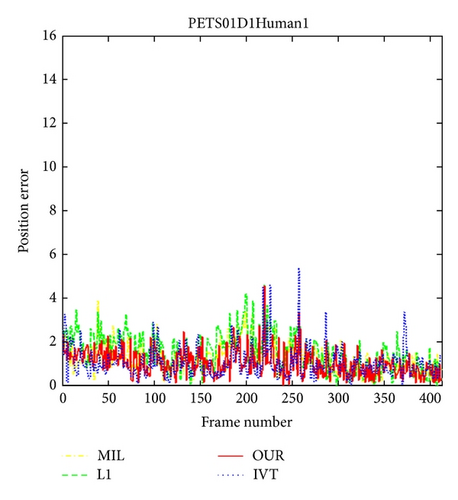

5.2. Quantitative Comparison

We evaluate the tracking performance by position error. The position Error is approximated by the distance between the central position of the tracking result and the manually labeled ground truth. Table 1 shows the statistical data of position error which includes maximum, mean and standard deviation. Figure 9 shows the errors of all four trackers.

| IVT | L1 | MIT | Proposed | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Max | Mean | Std | Max | Mean | Std | Max | Mean | Std | Max | Mean | Std | |

| OneLeaveShopReenter2cor | 26.32 | 11.26 | 8.82 | 7.33 | 2.46 | 1.72 | 44.21 | 21.67 | 16.68 | 6.65 | 2.02 | 1.86 |

| David Indoor | 18.76 | 9.43 | 5.98 | 42.59 | 57.64 | 9.93 | 18.76 | 20.51 | 4.67 | 6.71 | 1.45 | 1.21 |

| Car4 | 22.71 | 5.89 | 2.22 | 8.71 | 2.89 | 1.44 | 59.54 | 23.07 | 6.37 | 7.41 | 1.36 | 1.45 |

| PETS01D1Human1 | 5.98 | 0.93 | 0.87 | 5.09 | 1.68 | 0.97 | 6.12 | 1.16 | 1.14 | 5.95 | 1.39 | 0.91 |

From previous comparison results, we can see that proposed method can track the target more accurately in video sequence OneLeaveShopReenter2cor, David Indoor, and Car4 than other methods. The max, mean, and standard deviation of position errors are smaller than IVT and MIT. Therefore, in complex environment, our method has a better robustness. Comparing with L1, the result of tracking to sequence OneLeaveShopReenter2cor and Car4 shows that L1 has higher stability in the scene where illumination did not change significantly. However, the standard deviation of position error of L1 tracker in those sequences is smaller than proposed method, that L1 update capability is less than proposed method, when grayscale histogram distribution changed greatly. The dictionary in L1 is constructed by target template, so robustness of learned dictionary is better than it.

6. Conclusion

In this paper, a tracking algorithm was proposed based on sparse representation and dictionary learning. Based on biased discriminant analysis, we proposed an effective Incremental learning algorithm to construct overcompleted dictionary. Positive and negative samples are obtained during tracking process and are used for updating discriminant dictionary by biased discriminant analysis. Then we proposed sparse representation global and local classification for set of central points and set of local states. Compared to the state-of-the-art tracking methods, the proposed algorithm improves the discriminating performance of completed dictionary and the adaptive ability of appearance model. It has a strong robustness to illumination changes, perspective changes, and targets rotation itself.