Improving the Solution of Least Squares Support Vector Machines with Application to a Blast Furnace System

Abstract

The solution of least squares support vector machines (LS-SVMs) is characterized by a specific linear system, that is, a saddle point system. Approaches for its numerical solutions such as conjugate methods Sykens and Vandewalle (1999) and null space methods Chu et al. (2005) have been proposed. To speed up the solution of LS-SVM, this paper employs the minimal residual (MINRES) method to solve the above saddle point system directly. Theoretical analysis indicates that the MINRES method is more efficient than the conjugate gradient method and the null space method for solving the saddle point system. Experiments on benchmark data sets show that compared with mainstream algorithms for LS-SVM, the proposed approach significantly reduces the training time and keeps comparable accuracy. To heel, the LS-SVM based on MINRES method is used to track a practical problem originated from blast furnace iron-making process: changing trend prediction of silicon content in hot metal. The MINRES method-based LS-SVM can effectively perform feature reduction and model selection simultaneously, so it is a practical tool for the silicon trend prediction task.

1. Introduction

2. Formulation of LS-SVM

3. Solution of LS-SVM

In this section, we give a brief review and some analysis of the three mentioned numerical algorithms for solution of LS-SVM.

3.1. Conjugate Gradient Methods

Suykens et al. suggested the use of the CG method for the solution of (3.3) and proposed to solve two n order positive definite systems. More exactly, their algorithm can be described as follows.

Step 1. Employ the CG algorithm to solve the linear equations Hη = 1n and get the intermediate variable η.

Step 2. Solve the intermediate variable μ from Hμ = y by the CG method.

Step 3. Obtain Lagrange dual variables α = μ − bη and bias term .

The output of any new data x can subsequently be deduced by computing the decision function .

3.2. Null Space Methods

3.3. Minimal Residual Methods

It has been shown that rounding errors are propagated to the approximate solution with a factor proportional to the square of the condition number of coefficient matrix [12]; one should be careful with the MINRES method for ill-conditioned systems.

3.4. Some Analysis on These Three Numerical Algorithms

The properties of short recurrences and optimization [12] make the CG method the first choice for the solution of a symmetric positive definite system. Suykens et al. transformed the n + 1 order saddle point system (2.4) into two n order positive definite systems which are solved by CG methods. However, it is time consuming to solve two n order positive definite systems with large scales. To overcome this shortcoming, Chu et al. [8] transformed equivalently the original n + 1 order system into an n order symmetric positive definite system, and then the CG method can be used. This method can be seen as a null space method. Unfortunately, the transformation may destroy heavily the sparse structure and increase greatly the condition number of the original system. This can hugely slow down the convergence rate of the CG algorithm. Theoretical analysis about the influence of the transformation on the condition number is indispensable, but it is rather difficult. We leave it as an open problem. In this paper, the MINRES method is directly applied to solve the original saddle point problem of n + 1 order. Similar to the CG method, the MINRES method also has properties of short recurrences and optimization.

In light of the analysis mentioned above, the MINRES method should be the first choice for the solution of LS-SVM model, since it avoids solving two linear systems and destroying the sparse structure of the original saddle point system simultaneously.

4. Numerical Implementations

4.1. Experiments on Benchmark Data Sets

In this section we give the experimental test results on the accuracy and efficiency of our method. For comparison purpose, we implement the CG method proposed by Suykens and Vandewalle [6] and the null space method suggested by Chu et al. [8]. All experiments are implemented with MATLAB version 7.8 programming environment running on an IBM compatible PC under Window XP operating system, which is configured with Intel Core 2.1 Ghz CPU and 2 G RAM. The generalized used Gaussian RBF kernel k(x, z) = exp (−∥x − z∥2/σ2) is selected as the kernel function. We use the default setting for kernel width σ2, that is, set kernel width as the dimension of inputs.

We first compare three algorithms on three benchmark data sets: Boston, Concrete, and Abalone, which are download from UCI [13]. Each data set is randomly partitioned into 70% training and 30% test sets. We also list the condition numbers of coefficients matrices solved by three methods for the analysis of the computing efficiencies. As shown in Tables 1–3 the condition number for the CG method is the least one and the condition number for the null space method significantly increases.

| Boston data set, 506 samples, 13-d inputs, σ2 equals 13 | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| log2C | Conjugate gradient method | Null space method | MINRES method | ||||||

| Cond† | CPU‡ | MSE* | Cond | CPU | MSE | Cond | CPU | MSE | |

| −5 | 4 | 0.3281 | 49.1027 | 366.9451 | 0.8438 | 49.1027 | 45.6283 | 0.2500 | 49.1027 |

| −4 | 8 | 0.4688 | 39.4132 | 369.0926 | 0.6250 | 39.4132 | 31.6150 | 0.3438 | 39.4132 |

| −3 | 15 | 0.3438 | 29.7686 | 388.1770 | 0.7656 | 29.7686 | 26.3388 | 0.3125 | 29.7686 |

| −2 | 28 | 0.4531 | 24.2532 | 460.1920 | 0.7656 | 24.2532 | 29.2813 | 0.3281 | 24.2532 |

| −1 | 60 | 0.2500 | 21.0322 | 474.6254 | 1.0625 | 21.0322 | 61.3493 | 0.4219 | 21.0322 |

| 0 | 116 | 0.3438 | 15.5875 | 566.2504 | 1.2500 | 15.5875 | 119.071 | 0.1875 | 15.5875 |

| 1 | 234 | 0.7188 | 13.6449 | 946.4564 | 1.1250 | 13.6449 | 239.374 | 0.4375 | 13.6449 |

| 2 | 472 | 0.9531 | 13.0252 | 1945.300 | 1.0625 | 13.0252 | 482.447 | 0.6875 | 13.0252 |

| 3 | 924 | 0.9375 | 10.9810 | 2244.342 | 1.4063 | 10.9810 | 944.042 | 0.6406 | 10.9810 |

| 4 | 1734 | 1.3594 | 10.3168 | 5229.460 | 1.2500 | 10.3168 | 1776.31 | 0.8906 | 10.3168 |

| 5 | 3801 | 1.5469 | 10.2063 | 10785.92 | 1.4844 | 10.2063 | 3876.97 | 1.1406 | 10.2063 |

| 6 | 7530 | 2.0469 | 11.3937 | 24998.71 | 1.9063 | 11.3937 | 7682.07 | 1.2969 | 11.3937 |

| 7 | 14618 | 2.4531 | 11.7750 | 47781.41 | 2.2188 | 11.7750 | 14932.2 | 1.6875 | 11.7750 |

| 8 | 29769 | 3.0625 | 12.9925 | 61351.85 | 2.9844 | 12.9925 | 30382.8 | 2.3750 | 12.9925 |

| 9 | 58387 | 3.4063 | 14.0194 | 101181.8 | 3.5938 | 14.0194 | 59619.0 | 2.6875 | 14.0194 |

| 10 | 119285 | 4.0313 | 17.2330 | 285440.0 | 4.8281 | 17.2330 | 121708 | 3.5313 | 17.2330 |

- Cond† denotes the condition number, CPU‡ stands for running time, MSE* is mean square error.

| Concrete data set, 1030 samples, 8-d inputs, σ2 equals 8 | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| log2C | Conjugate gradient method | Null space method | MINRES method | ||||||

| Cond | CPU | MSE | Cond | CPU | MSE | Cond | CPU | MSE | |

| −5 | 7 | 2.0781 | 140.8498 | 738.137223 | 3.6719 | 140.8498 | 51.5204280 | 1.6406 | 140.8498 |

| −4 | 13 | 2.3594 | 111.2384 | 745.054714 | 3.6563 | 111.2384 | 39.7005872 | 1.8906 | 111.2383 |

| −3 | 25 | 2.8125 | 89.3458 | 796.627246 | 3.8281 | 89.3458 | 31.7895802 | 2.0938 | 89.3459 |

| −2 | 50 | 2.4844 | 74.4146 | 850.938881 | 3.9688 | 74.4146 | 51.4318149 | 2.0469 | 74.4146 |

| −1 | 102 | 3.0000 | 60.2984 | 954.604170 | 4.3906 | 60.2984 | 104.293122 | 2.2969 | 60.2984 |

| 0 | 199 | 3.4219 | 50.4491 | 1397.13474 | 4.8438 | 50.4491 | 202.202543 | 2.8281 | 50.4490 |

| 1 | 399 | 4.0625 | 43.5416 | 1737.97400 | 5.7188 | 43.5416 | 406.110983 | 3.2500 | 43.5416 |

| 2 | 787 | 4.8750 | 41.5463 | 2369.65769 | 6.4219 | 41.5463 | 799.643656 | 3.8594 | 41.5463 |

| 3 | 1561 | 6.3125 | 36.5797 | 5375.87469 | 7.3750 | 36.5797 | 1586.70628 | 4.2500 | 36.5797 |

| 4 | 3197 | 8.0000 | 33.4861 | 7342.75323 | 8.7188 | 33.4861 | 3247.47638 | 5.0156 | 33.4861 |

| 5 | 6411 | 10.3281 | 33.1452 | 18274.6591 | 10.8438 | 33.1452 | 6510.73913 | 6.2188 | 33.1452 |

| 6 | 12530 | 13.8750 | 33.4936 | 37192.6189 | 12.9063 | 33.4936 | 12732.7611 | 8.2813 | 33.4936 |

| 7 | 25614 | 18.5781 | 33.8690 | 73008.8645 | 15.8594 | 33.8690 | 26010.1838 | 11.0156 | 33.8690 |

| 8 | 51260 | 25.1250 | 32.6925 | 126475.189 | 19.5938 | 32.6925 | 52056.7280 | 14.8906 | 32.6925 |

| 9 | 101053 | 33.9531 | 35.1044 | 249234.605 | 25.2969 | 35.1044 | 102657.615 | 19.9219 | 35.1043 |

| 10 | 199734 | 46.3125 | 40.4777 | 557864.123 | 32.9219 | 40.4777 | 202961.396 | 27.0625 | 40.4777 |

| Abalone data set, 4177 samples, 7-d inputs, σ2 equals 7 | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| log2C | Conjugate gradient method | Null space method | MINRES method | ||||||

| Cond | CPU | MSE | Cond | CPU | MSE | Cond | CPU | MSE | |

| −5 | 42.341 | 20.343 | 5.3623 | 2955.9655 | 39.7344 | 5.3623 | 369.9433 | 12.9531 | 5.3623 |

| −4 | 84.059 | 22.984 | 5.1143 | 3028.4495 | 41.1406 | 5.1143 | 332.0862 | 14.2344 | 5.1143 |

| −3 | 167.846 | 26.343 | 4.7978 | 3043.4615 | 42.6719 | 4.7978 | 306.5691 | 16.0156 | 4.7978 |

| −2 | 337.691 | 33.281 | 4.6923 | 3567.1431 | 46.8125 | 4.6923 | 338.1679 | 18.4688 | 4.6923 |

| −1 | 666.823 | 39.315 | 4.4360 | 4888.3227 | 50.8438 | 4.4360 | 667.7842 | 22.3125 | 4.4360 |

| 0 | 1327.351 | 47.531 | 4.4744 | 5355.5805 | 54.6563 | 4.4744 | 1329.291 | 26.2656 | 4.4744 |

| 1 | 2700.547 | 58.015 | 4.4217 | 9894.2450 | 59.8438 | 4.4217 | 2704.345 | 34.1719 | 4.4217 |

| 2 | 5275.703 | 74.859 | 4.3948 | 8239.2388 | 69.2031 | 4.3948 | 5283.506 | 42.5469 | 4.3948 |

| 3 | 10709.216 | 94.765 | 4.4169 | 18279.897 | 80.4219 | 4.4169 | 10724.46 | 54.7813 | 4.4169 |

| 4 | 21357.750 | 124.359 | 4.5053 | 24472.420 | 97.7500 | 4.5053 | 21388.43 | 71.3906 | 4.5053 |

| 5 | 42427.822 | 177.171 | 4.6144 | 105161.60 | 133.2656 | 4.6144 | 42489.70 | 103.6406 | 4.6144 |

| 6 | 85153.757 | 221.468 | 4.6857 | 185913.18 | 155.9219 | 4.6857 | 85276.97 | 129.3750 | 4.6857 |

| 7 | 171369.064 | 312.078 | 4.7145 | 212162.90 | 212.4531 | 4.7145 | 171614.1 | 181.8750 | 4.7145 |

| 8 | 344731.082 | 430.640 | 4.8621 | 705659.56 | 289.4531 | 4.8621 | 345216.0 | 260.5469 | 4.8621 |

| 9 | 681509.920 | 602.765 | 5.2294 | 1162595.7 | 395.6250 | 5.2294 | 682494.5 | 360.5625 | 5.2294 |

| 10 | 1363883.053 | 840.625 | 5.6517 | 3106655.0 | 549.4844 | 5.6517 | 1365853 | 488.6250 | 5.6517 |

The columns of Cond in Tables 1, 2, and 3 show that compared with the CG method the condition number for the MINRES method increases a bit, but much less than the condition number of the null space method. The orders of linear equations solved by the CG method, the null space method, and the MINRES method are n, n − 1, and n + 1, respectively. The condition numbers for the CG method and the MINRES method are very close, but we have to solve two systems of n − 1 order using CG methods. Hence, the running time of the MINRES method should be less than that of the CG method. CPU column in Tables 1–3 shows that the MINRES method-based LS-SVM model costs much less running time than the CG method and the null space method-based LS-SVM model in all cases of setting C. So the MINRES method-based LS-SVM model is a preferable algorithm for solving LS-SVM model. In the next subsection, we will employ the MINRES method-based LS-SVM model to solve a practical problem.

4.2. Application on Blast Furnace System

Blast furnace, one kind of metallurgical reactor used for producing pig iron, is often called hot metal. The chemical reactions and heat transport phenomena take place throughout the furnace as the solid materials move downwards and hot combustion gases flow upwards. The main principle involved in the BF iron-making process is the thermochemical reduction of iron oxide ore by carbon monoxide. During the iron-making period, a great deal of heat energy is produced which can heat up the BF temperature approaching 2000°C. The end products consisting of slag and hot metal sink to the bottom and are tapped periodically for the subsequent refining. It will take about 6–8 h for a cycle of iron-making [11]. BF iron-making process is a highly complex nonlinear process with the characteristics of high temperature, high pressure, concurrence of transport phenomena, and chemical reactions. The complexity of the BF and the occurrence of a variety of process disturbances have been obstacles for the adoption of modeling and control in the process. Generally speaking, to control a BF system often means to control the hot metal temperature and components, such as silicon content, sulfur content in hot metal, and carbon content in hot metal within acceptable bounds. Among these indicators, the silicon content often acts as a chief indicator to represent the thermal state of the BF, an increasing silicon content meaning a heating of the BF while a decreasing silicon content indicating a cooling of the BF [11, 14]. Thus, the silicon content is a reliable measure of the thermal state of the BF, and it becomes a key stage to predict the silicon content for regulating the thermal state of the BF. Therefore, it has been the active research issue to build silicon prediction model in the recent decades, including numerical prediction models [15] and trend prediction models [11].

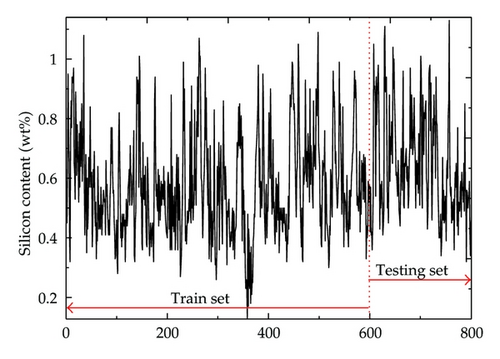

In this subsection, the tendency prediction of silicon content in hot metal is transformed as a binary classification problem. Samples with increasing silicon content are denoted by +1 whereas a decreasing silicon content is denoted by −1. In the present work, the experimental data is collected from a medium-sized BF with the inner volume of about 2500 m3. The variables closely related to the silicon content are measured as the candidate inputs for modeling. Table 4 presents the variables information from the studied BF. There are totally 801 data points collected with the first 601 points as train set and the residual 200 points as testing set. The sampling interval is about 1.5 h for the current BF. Figure 1 illustrates the evolution of the silicon content in hot metal.

| Variable name [unit] | Abbreviation | Range | F-score | Mean accuracy |

|---|---|---|---|---|

| Latest silicon content (wt%) | Si | 0.13–1.13 | 0.1269 | 81.786% |

| Sulfur content (wt%) | S | 0.012–0.077 | 0.0570 | 82.857% |

| Basicity of ingredients (wt%) | BI | 0.665–1.609 | 0.0229 | 81.786% |

| Feed speed (mm/h) | FS | 16.725–297.510 | 0.0132 | 83.214% |

| Blast volume (m3/min) | BV | 1454.30–5580.200 | 0.0054 | 83.747% |

| CO2 percentage in top gas (wt%) | CO2 | 7.921–22.892 | 0.0048 | 83.750% |

| Pulverized coal injection (ton) | PCI | 0.230–98.533 | 0.0037 | 83.214% |

| CO percentage in top gas (wt%) | CO | 9.267–27.374 | 0.0036 | 82.500% |

| Blast temperature (°C) | BT | 1086.100–1239.700 | 0.0031 | 83.571% |

| Oxygen enrichment percentage (wt%) | OEP | −0.001–14.688 | 0.0019 | 83.393% |

| H2 percentage in top gas (wt%) | H2 | 2.564–4.065 | 0.0005 | 83.214% |

| Coke load of ingredients (wt%) | CLI | 2.032–5.071 | 0.0004 | 82.857% |

| Furnace top temperature (°C) | TP | 62.703–264.130 | 0.0002 | 82.679% |

| Blast pressure (kPa) | BP | 59.585–367.780 | 0.0001 | 83.214% |

| Furnace top pressure (kPa) | TP | 8.585–199.790 | 0.0001 | 82.679% |

There are in total 15 candidate variables listed in Table 4 from which to select model inputs. Generally, too many input parameters will increase the complexity of model while too little inputs will reduce the accuracy of model. A tradeoff has to be taken between the model complexity and accuracy when selecting the inputs. Therefore, it is necessary to screen out less important variables as inputs from these 15 candidate variables. Here, the inputs are screened out by an integrative way that combines F-score method [16] for variables ranking and cross-validation method for variables and model parameters selection.

Mean accuracy in Table 4 stands for the average accuracy under ten-fold cross-validation experiments of LS-SVM model on some grid points with the best performance. In the current work, we first select the variable with highest F-score as model input and then add variables one by one according to their F-scores. Mean accuracy under all kinds of input variables can be achieved and the results are shown in Table 4. The following are shown by the mean accuracy column: (1) at the beginning, the mean accuracy increases gradually as more candidate variables are taken as model inputs; (2) the largest mean accuracy appears when CO2 is included within the input set; (3) when the mean accuracy is beyond the maximum, it will fluctuate as the residual variables are added by turns into the input set. These results indicate that, as the studied BF is concerned, the optimal input set is [Si, S, BI, FS, BV, CO2] with the model parameters setting (σ2, C) = (29, 28). Table 5 lists the LS-SVM model accuracy including with/without feature and model selection versions on testing set. In the case of without feature and model selection version, all candidate variables are selected as inputs, and we use the default setting for LS-SVM model; that is, set kernel width σ2 equal to the dimension of input variable and set regularized parameter C as 1. The information in the second row of this table, such as 34/42, denotes that there are 42 times predicted results that are ascending trend, and 34 times predictions are successful. The confidence level of the LS-SVM model without model and feature selection fluctuates severely between the ascending and descending prediction from 80.95% to 58.86%. The difference of confidence levels of LS-SVM model with model and feature selection between ascending and descending prediction is reduced to 2.19% indicating that model and feature selection procedure enhances the stability of the LS-SVM model obviously. As the last column of Table 5 shows, TSA of LS-SVM model with feature and model selection procedure is significantly improved compared with LS-SVM model without feature and model selection, so the selection procedure is indispensable for the current practical application. Table 6 lists the running time of three mentioned numerical algorithms when performing feature and model selection procedure. The cost time of the MINRES method is reduced significantly compared with the other algorithms. In a word, the feature and model selection procedure can be effectively performed for the MINRES method-based LS-SVM, and it is meaningful for practical using.

| Inputs | (σ2,C) | Ascend (99*) | Descend (101) | TSA† |

|---|---|---|---|---|

| 15 | (15, 1) | 34/42 = 80.95% | 93/158 = 58.86% | 127/200 = 63.5% |

| 6 | (29, 28) | 73/94 = 77.66% | 80/106 = 75.47% | 153/200 = 76.5% |

- 99* means 99 observations are ascending trend; TSA† stands for testing set accuracy.

| Algorithm | Conjugate gradient method | Null space method | MINRES method |

|---|---|---|---|

| CPU | 1948 | 2800 | 1488 |

5. Conclusions and Points of Possible Future Research

In this paper, we have proposed an alternative, that is, the MINRES method, to the solution of LS-SVM model which is formulated as a saddle point system. Numerical experiments on UCI benchmark data sets show that the proposed numerical solution method of LS-SVM model is more efficient than the algorithms proposed by Suykens and Vandewalle [6] and Chu et al. [8]. To heel, the MINRES method-based LS-SVM model including feature selection from extensive candidate and model parameter selection is proposed and employed for the silicon content trend prediction task. The practical application to a typical real BF indicates that the proposed MINRES method-based LS-SVM model is a good candidate to predict the trend of silicon content in BF hot metal with low running time.

However, it should be pointed out that despite the MINRES method-based LS-SVM model displaying low running time, lack of metallurgical information may be the root to the limited accuracy of the current prediction model. So there is much work worth investigating in the future to further improve the model accuracy and increase the model transparency, such as constructing predictive model by integrating domain knowledge and extracting rules. The extracted rules can account for the output results with detailed and definite inputs information, which may further serve for the control purpose by linking the output results with controlled variables. These investigations are deemed to be helpful to further improve the efficiency of predictive model.

Acknowledgment

This work was partially supported by National Natural Science Foundation of China under Grant no. 11126084, Natural Science Foundation of Shandong Province under Grant no. ZR2011AQ003, Fundamental Research Funds for the Central Universities under Grant no. 12CX04082A, and Public Benefit Technologies R&D Program of Science and Technology Department of Zhejiang Province under Grant No. 2011C31G2010136.