Computing the Square Roots of a Class of Circulant Matrices

Abstract

We first investigate the structures of the square roots of a class of circulant matrices and give classifications of the square roots of these circulant matrices. Then, we develop several algorithms for computing their square roots. We show that our algorithms are faster than the standard algorithm which is based on the Schur decomposition.

1. Introduction

Circulant matrices and their generalizations arise in many areas of physics, electromagnetics, signal processing, statistics, and applied mathematics for the investigation of problems with periodicity properties [1–3]. Also, numerical solutions of certain types of elliptic and parabolic partial differential equations with periodic boundary conditions often involve linear systems Ax = b with A a circulant matrix. For recent years, the properties and applications of them have been extensively investigated [4–9].

A matrix X is said to be a square root of A if X2 = A. The number of square roots varies from two (for a nonsingular Jordan block) to infinity (any involuntary matrix is a square root of the identity matrix). The key roles that the square root plays in, for example, the matrix sign function, the definite generalized eigenvalue problem, the polar decomposition, and the geometric mean, make it a useful theoretical and computational tool. The rich variety of methods for computing the matrix square root, with their widely differing numerical stability properties, is an interesting subject of study in their own right [10]. For these reasons, many authors became interested in the matrix square roots [11–14]. Although the theory of matrix square roots is rather complicated, simplifications occur for certain classes of matrices. Consider, for example, Hamiltonian matrices [15], semidefinite matrices [16], and so forth.

This paper is organized as follows. In Section 2, we review some basic properties of a class of circulant matrices. In Section 3, we investigate the structures of the square roots, then give the classifications of all the square roots of these circulant matrices. In Section 4, we develop some algorithms to compute the primary square roots of them. Finally, we present several numerical experiments in Section 5, exhibiting the efficiency of the proposed algorithms in terms of CPU time.

2. Preliminaries

Throughout this paper we denote the set of all n × n complex matrices by ℂn×n and the set of all real matrices by ℝn×n.

Definition 2.1 (see [4].)Let a = (a0, a1, …, an−1) T ∈ ℂn and k ∈ ℂ. In a k-circulant matrix

Another equivalent definition of a k-circulant matrix is as follows: A ∈ ℂn×n is a k-circulant matrix if and only if A = G−1AG, where G = Circk([0,1, 0, …, 0]).

Let n be an even number, A ∈ ℂn×n is a skew k-circulant matrix if A = −G−1AG and a Hermitian k-circulant matrix if , where denotes the elementwise conjugate of the matrix A.

If the circulant matrix A is similar to a block diagonal matrix (even a diagonal matrix), that is, if there exists an invertible matrix P such that P−1AP is a block diagonal matrix, the problem of computing the square roots of a circulant matrix can be reduced to that of computing the square roots of some small size matrices. It will help to reduce the costs of computation.

Lemma 2.2. If k ∈ ℝ, then all the matrices in Circk(a) are simultaneously diagonalizable, with the eigenvectors determined completely by k and a primitive nth root of unity.

By applying (2.2), (2.4), and (2.6), we have the following.

Lemma 2.3. If A is an n × n k-circulant matrix, then

By applying (2.2)–(2.6), we have the following.

Lemma 2.4. Let A be a skew k-circulant matrix. If n/2 is odd, then

By applying (2.2), (2.4), (2.6), (2.9), (2.10), and (2.11), we have the following.

Lemma 2.5. Let A be an n × n Hermitian k-circulant matrix and Q be defined by the relation (2.10). If n/2 is odd, then

Definition 2.6. Given a square matrix A, a function f : Ω ∈ ℂ → ℂ is said to be defined on the spectrum σ(A) of A if f and its derivatives of order l − 1 are defined at each element of σ(A). Here, l is the size of the largest Jordan block of A. If p(x) is the interpolating polynomial of all of these values, the function f of A is defined to be f(A): = p(A).

For instance, the square roots of a matrix A whose eigenvalues belong to exactly one Jordan block are functions of A. These are the primary square roots. On the other hand, choices of different branches of the square root for each Jordan block lead to the so-called nonprimary matrix square root functions of A.

In most applications, it is primary square roots that are of interest and simplicity, virtually all the existing theory and available methods are for such square roots [10]; thus, we will concentrate on primary roots in this paper.

Lemma 2.7 (see [10].)Let the nonsingular matrix A ∈ ℂn×n have the Jordan canonical form Z−1AZ = J = diag (J1, J2, …, Jp), and let s ≤ p be the number of distinct eigenvalues of A. Let

If s < p, A has nonprimary square roots. They form parametrized families

3. Square Roots

In this section we present some new results which characterize the square roots of the circulant matrices.

3.1. Square Roots of k-Circulant Matrices

It is known that the product of two k-circulant matrices is k-circulant, however, whether a k-circulant matrix has square roots which are also k-circulant or not? We have some answers to this question.

Theorem 3.1. Let A ∈ ℂn×n be a nonsingular k-circulant matrix and let X2 = A, where X are the primary functions of A. Then all square roots X are k-circulant matrices.

Proof. By assumption, X2 = A and X = f(A), here f(x) = x1/2, which is clearly defined on the spectrum of A, including the case that the eigenvalues of the matrix A are complex numbers. From Definition 2.6, we can construct a polynomial p such that p(A) = f(A). Using the fact that the sum and product of two k-circulant matrices are also k-circulant, the polynomial X = p(A) is a k-circulant matrix.

By Lemma 2.7 and Theorem 3.1, we get that any nonsingular k-circulant matrix always has a k-circulant square root.

In fact, if G−1BG = −B, then A = B2 implies G−1AG = A. That means k-circulant matrices may have square roots which are skew k-circulant. Although it is unknown whether it is true, if it has a skew k-circulant matrix, we have the following.

Theorem 3.2. Let A ∈ ℂn×n be a nonsingular k-circulant matrix. Assume that A has a skew k-circulant square root. (i) If n/2 is even, then each M and N in (2.7) admits a square root, respectively. (ii) If n/2 is odd, then the matrix M and N in (2.7) are similar.

Proof. let Y be a skew k-circulant matrix and Y2 = A.

(i) If n/2 is even, by Lemma 2.4, we have . That implies hold simultaneously, that is Y1 and Y2 are square roots of M and N, respectively.

(ii) If n/2 is odd, by Lemma 2.4 again, we have .

Note that Y2 = A means that Y1Y2 = M and Y2Y1 = N. Therefore, Y1 and Y2 are both nonsingular (due to the nonsingularity of M and N) and . That is to say, M and N in (2.7) are similar.

In general, a nonsingular k-circulant matrix A, besides the k-circulant square roots, possibly has other kinds of square roots (e.g., skew k-circulant square roots), which are nonprimary functions of A. The existence and the families of square roots depend on the spectrums of M and N. The following theorem gives a classification of all the square roots of a nonsingular k-circulant matrix.

Theorem 3.3. Let the nonsingular k-circulant matrix A ∈ ℂn×n has s distinct eigenvalues λi (i = 1 : s), then A has 2s k-circulant square roots that are the primary functions of A, given by

If s < n, A has nonprimary square roots. They form parametrized families

Proof. According to the hypothesis, A has s distinct eigenvalues. Then by Lemma 2.7, A has 2s square roots which are primary functions of A and take the form (3.1). By Theorem 3.1, the square roots are k-circulant matrices. By Lemma 2.7 again, we get the form (3.2).

Theorem 3.3 shows that the square roots of a nonsingular k-circulant matrix consist of two classes. The first class comprises finitely many primary square roots which are “isolated,” and they are k-circulant matrices. The second class, which may be empty, comprises a finite number of parametrized families of matrices, each family containing infinitely many square roots sharing the same spectrum, and the square roots in this class maybe k-circulant matrices or not.

3.2. Square Roots of Skew k-Circulant Matrices

Assume that J has s distinct eigenvalues. We have the following result.

Theorem 3.4. Let the nonsingular skew k-circulant matrix A ∈ ℂn×n have the Jordan decomposition (3.3), and let s ≤ l be the number of distinct eigenvalues of J. Then A has 4s square roots, which are primary functions of A, taking the following form:

If s < l, then A has nonprimary square roots which are of 4l − 4s parameterized families in the following form:

Proof. The proof consists in using Lemma 2.7 again and the fact that A has 2s distinct eigenvalues and 2l Jordan blocks.

Let us consider how to compute the primary square roots of a nonsingular skew k-circulant matrix. If n/2 is even, from Lemma 2.4, we can see that skew k-circulant matrices have the same deduced form (2.7). We can use Algorithm 4.2 (in Section 4) in this case. If n/2 is odd, we have that P−1AP takes the form (2.8). Exploiting this form, we have the following theorem.

Theorem 3.5. Let A ∈ ℂn×n be a nonsingular skew k-circulant matrix and let n/2 be odd. Assume that Z ∈ ℂn×n and are partitioned as follows:

- (A)

Z2Z3 is a square root of −(1/4)MN;

- (B)

Z1 is a fourth root of −(1/4)MN;

- (C)

Z4 is a fourth root of −(1/4)NM;

- (D)

Z2 is a solution of Z1Z2 + Z2Z4 = M.

- (E)

Z3 = M−1Z2N.

hold simultaneously, where P and M, N are defined by the relation (2.4) and (2.8), respectively.

Proof. The proof is similar to that of Theorem 3.5; see [14].

3.3. Square Roots of Hermitian k-Circulant Matrices

In fact, if , then A = B2 implies . That means Hermitian k-circulant matrices may have square roots which are still Hermitian k-circulant. Although it is unknown whether it is true, if it has a Hermitian k-circulant square root, we have the following.

Theorem 3.6. Let A be a nonsingular Hermitian k-circulant matrix, assume that A has a Hermitian k-circulant square matrix. (i) If n/2 is even, then each M and N in (2.7) admits a square root, respectively. (ii) If n/2 is odd, then A’s reduced form RA ∈ ℝn×n in (2.12) has a real square root.

Proof. (i) If n/2 is even, the case is similar to Theorem 3.2.

(ii) If n/2 is odd, let Y be a Hermitian k-circulant matrix and Y2 = A. Then, by Lemma 2.5, we have that RY = Q−1YQ and RA = Q−1AQ are real and , where Q is defined in (2.10). This means that RA has a real square root.

Theorem 3.7. Let the nonsingular Hermitian k-circulant matrix A ∈ ℂn×n have the Jordan decomposition (3.9). Assume that s ≤ l be the number of distinct real eigenvalues of JR, and t ≤ r be the number of distinct complex conjugate eigenvalue pairs.

If s ≤ l or t ≤ r, then A has 2s+2t square roots which are primary functions of A.

If s + t < l + r, then A has square roots which are nonprimary functions of A; they form 2l+2r − 2s+2t parameterized families.

Proof. The proof is similar to that of Theorem 3.4.

It is showed in Theorem 3.1 that all the primary square roots of a nonsingular k-circulant matrix A are k-circulant. But for nonsingular Hermitian k-circulant matrices, this conclusion does not hold anymore in general. However, if a square root of a nonsingular Hermitian k-circulant matrix A is a real coefficient polynomial in A, then this conclusion holds.

Theorem 3.8. Let A be a nonsingular Hermitian k-circulant matrix, then all square roots of A which are polynomials in A with real coefficients (if exist) are Hermitian k-circulant matrices.

Proof. Using the fact that the sum and product of two Hermitian k-circulant matrices are also Hermitian k-circulant, we complete the proof.

4. Algorithms

In this section we will propose algorithms for computing the primary square roots of the circulant matrix A in Section 3, which are primary functions of A.

Algorithm 4.1. Computes a primary square root of a nonsingular k-circulant matrix A ∈ ℂn×n.

Step 1. Compute the eigenvalues of A.

Step 2. Compute the square roots .

Step 3. Compute , .

Then, we obtain .

The cost of Step 1 is about O(nlog 2n) flops by discrete Fourier transform. The cost of Step 2 is O(n). The cost of Step 3 is about O(nlog 2n) flops by inverse discrete Fourier transform. So, it needs about O(nlog 2n) flops in total if we use the fast Fourier transform to compute a primary square root of a k-circulant matrix A (see Table 1).

| Step | Flops |

|---|---|

| 1 | O(nlog2n) |

| 2 | O(n) |

| 3 | O(nlog2n) |

| Sum | O(nlog2n) |

Algorithm 4.2. Computes a primary square root of a nonsingular skew k-circulant matrix A ∈ ℂn×n (n/2 is even).

Step 1. Compute the reduced form P−1AP = diag (M, N) in (2.7).

Step 2. Compute the Schur decompositions and , respectively, where TM and TN are two upper triangular matrices.

Step 3. Compute the upper triangular square roots SM = f(TM) and SN = f(TN), where TM has n/2 distinct eigenvalues and so does TN, here f = λ1/2 is defined on λ ∈ σ(diag (TM, TN)).

Step 4. Compute and .

Step 5. Obtain X = Pdiag (XM, XN)P−1.

The costs of Steps 1 and 5 in Algorithm 4.2 are about O(n2) flops. The main costs arise in the implementation of Steps 2–4. Those are about 7(1/12)n3 flops (see Table 2). It needs about 28(1/3)n3 flops in total if we use the Schur method (Algorithm 6.3 in [10]) to compute a primary square root of A directly, that means Algorithm 4.2 is about four times cheaper than the standard Schur method.

| Step | Flops |

|---|---|

| 1 | O(n2) |

| 2 | (25/4)n3 + O(n2) |

| 3 | (1/12)n3 + O(n2) |

| 4 | (3/4)n3 + O(n2) |

| 5 | O(n2) |

| Sum | 7(1/12)n3 + O(n2) |

Algorithm 4.3. Computes a primary square root of a nonsingular skew k-circulant matrix A ∈ ℂn×n (n/2 is odd).

Step 1. Compute the reduced form in (2.8).

Step 2. Compute the Schur decomposition T = UH(−(1/4)MN)U, where T is upper triangular.

Step 3. Compute the upper triangular fourth root S = f(T), where f = λ1/4 is defined on λ(T), then compute Z1 = USUH.

Step 4. Solve the Sylvester equation .

Step 5. Compute .

Step 6. Compute and .

Step 7. Compute Z4 = M−1Z1M.

Step 8. Form Z according to (3.8).

Step 9. Obtain X = PZP−1.

The costs of Steps 1 and 9 in Algorithm 4.3 are about O(n2) flops. The main costs are to implement Steps 2–8. In Step 2, it takes about (1/4)n3 flops for computing matrix-matrix multiplication and 3(1/8)n3 for computing the Schur decomposition of −(1/4)MN. In Step 3, it takes about (1/12)n3 flops to compute the upper triangular fourth root S = f(T) (use Step 3 in Algorithm 4.2 twice) and (3/8)n3 to form Z1. The cost of Step 4 amounts to about (1/4)n3 flops, see Bartels-Stewart Algorithm in [17]. In Steps 5 and 6, it needs to compute 4 matrix-matrix multiplications, which requires about n3 flops. Step 7 involves a matrix-matrix multiplication and a solution of a linear system of equations with multiple right-hand sides, which needs about (7/12)n3 flops. Thus, the whole sum is about 5(2/3)n3 flops (see Table 3), which means Algorithm 4.3 is about 5 times cheaper than the standard Schur method.

| Step | Flops |

|---|---|

| 1 | O(n2) |

| 2 | 3(3/8)n3 + O(n2) |

| 3 | (11/24)n3 + O(n2) |

| 4 | (1/4)n3 + O(n2) |

| 5 | (1/2)n3 + O(n2) |

| 6 | (1/2)n3 + O(n2) |

| 7 | (7/12)n3 + O(n2) |

| 8 | |

| 9 | O(n2) |

| Sum | 5(2/3)n3 + O(n2) |

Let n/2 be odd (when n/2 is even, we can use Algorithm 4.2 to compute a primary square root of a nonsingular Hermitian k-circulant matrix).

Algorithm 4.4. Computes a primary square root of a nonsingular Hermitian k-circulant matrix A ∈ ℂn×n.

Step 1. Compute the reduced form RA = Q−1AQ in (2.12).

Step 2. Compute the real Schur decomposition T = VTRAV, where T is a upper quasitriangular matrix.

Step 3. Compute S = f(T) (see [8] for more details), where T is upper quasitriangular with distinct eigenvalues and f = λ1/2 is defined on λ(T).

Step 4. Compute .

Step 5. Compute .

The costs of Steps 1 and 5 in Algorithm 4.4 are about O(n2) flops. The main costs are to implement Steps 2–4. In Step 2, it takes about 25n3 real flops for computing the real Schur decomposition of RA. In Step 3, it takes about (1/3)n3 real flops for S. The cost of Step 4 amounts to about 3n3 to form . Thus, the whole sum is about 28(1/3)n3 real flops (see Table 4). Note the fact that a complex addition is equivalent to two real additions and a complex multiplication is equivalent to four real multiplications and plus two real additions. So Algorithm 4.4 is approximately eight times cheaper than the standard Schur method.

| Step | Flops |

|---|---|

| 1 | O(n2) |

| 2 | 25n3 + O(n2) |

| 3 | (1/3)n3 + O(n2) |

| 4 | 3n3 + O(n2) |

| 5 | O(n2) |

| Sum | 28(1/3)n3 + O(n2) |

5. Numerical Experiments

We present numerical experiments for the comparison of the algorithms presented in this paper and the standard Schur method with respect to execution time.

All of our computations have been done using MATLAB 7.6.0(R2008a) with unit roundoff u = 2−53 ≈ 1.1 × 10−16 and executed in an Intel Pentium M Processor 740, 1.73 GHz with 1 GB of RAM.

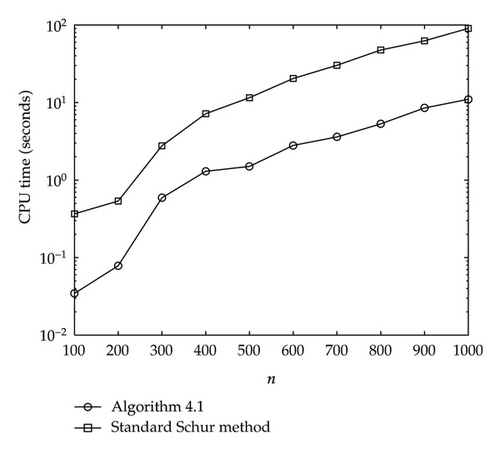

The execution (CPU) time for square roots with respect to n (order of matrix) for Algorithm 4.1 and the standard Schur method is shown in Figure 1.

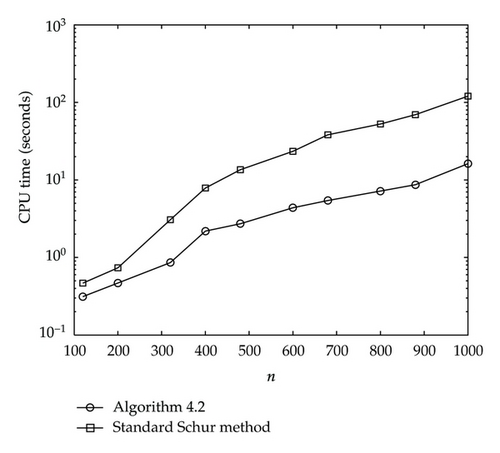

The execution (CPU) time for square roots with respect to n for Algorithm 4.2 and the standard Schur method is shown in Figure 2.

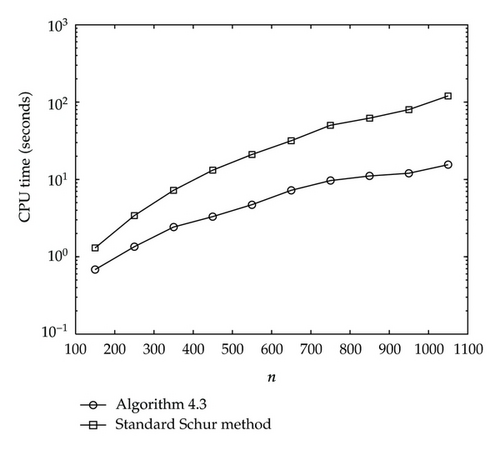

The execution (CPU) time for square roots with respect to n for Algorithm 4.3 and the standard Schur method is shown in Figure 3.

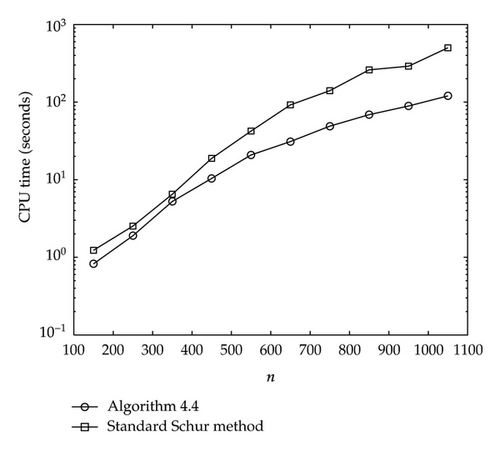

The execution (CPU) time for square roots with respect to n for Algorithm 4.4 and the standard Schur method is shown in Figure 4.

It is evident by the statements of Figures 1–4, the algorithms are clearly faster than the standard Schur methods for computing the circulant matrices in this paper.

Acknowledgments

The author would like to thank the editor and the referees for their helpful comments and valuable suggestions for improving this paper. A Project Supported by Scientific Research Fund of Zhejiang Provincial Education Department (No. Y201223607).