Iterative Algorithms for Solving the System of Mixed Equilibrium Problems, Fixed-Point Problems, and Variational Inclusions with Application to Minimization Problem

Abstract

We introduce a new iterative algorithm for finding a common element of the set of fixed points of an infinite family of nonexpansive mappings, the set of solutions of a system of mixed equilibrium problems, and the set of solutions of the variational inclusion for a β-inverse-strongly monotone mapping in a real Hilbert space. We prove that the sequence generated by the algorithm converges strongly to a common element of these three sets under some mild conditions. Finally, we give a numerical example that supports the main theorem.

1. Introduction

A set-valued mapping $M : H \to 2^H$ is called monotone if, for all $x, y \in H$, $f \in M(x)$ and $g \in M(y)$ imply $\langle x - y, f - g \rangle \ge 0$. A monotone mapping $M$ is maximal if its graph $G(M) := \{(x, f) \in H \times H : f \in M(x)\}$ is not properly contained in the graph of any other monotone mapping. It is known that a monotone mapping $M$ is maximal if and only if, for $(x, f) \in H \times H$, the condition $\langle x - y, f - g \rangle \ge 0$ for all $(y, g) \in G(M)$ implies $f \in M(x)$.

2. Preliminaries

Lemma 2.1. A point $u \in C$ is a solution of the variational inequality $\langle Bu, v - u \rangle \ge 0$ for all $v \in C$ if and only if $u$ satisfies the relation $u = P_C(u - \lambda Bu)$ for all $\lambda > 0$.

Lemma 2.2. For given $z \in H$ and $u \in C$, $u = P_C z \iff \langle u - z, v - u \rangle \ge 0$ for all $v \in C$.
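A minimal sketch of Lemma 2.2 in the simplest setting, assuming $H = \mathbb{R}$ and $C = [-1, 1]$ (the set later used in Example 5.1), where $P_C$ is clipping. The helper names are ours, and the check samples $v \in C$ on a grid, so it is a numerical spot-check rather than a proof.

```python
def project_interval(z: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Metric projection P_C onto the closed interval C = [lo, hi] in H = R."""
    return min(max(z, lo), hi)


def satisfies_projection_inequality(u: float, z: float, lo: float = -1.0,
                                    hi: float = 1.0, samples: int = 201) -> bool:
    """Check <u - z, v - u> >= 0 for v on a grid in C (right-hand side of Lemma 2.2)."""
    step = (hi - lo) / (samples - 1)
    return all((u - z) * ((lo + i * step) - u) >= -1e-12 for i in range(samples))


for z in (-3.0, -0.4, 0.0, 0.7, 2.5):
    u = project_interval(z)
    print(z, u, satisfies_projection_inequality(u, z))
```

A point of $C$ other than $P_C z$ fails the test; for example, $u = 0$ with $z = 2.5$ violates the inequality at $v = 1$.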

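It is well known that $P_C$ is a firmly nonexpansive mapping of $H$ onto $C$, that is, it satisfies $\|P_C x - P_C y\|^2 \le \langle P_C x - P_C y, x - y \rangle$ for all $x, y \in H$.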

Lemma 2.3 (see [40]). Let $M : H \to 2^H$ be a maximal monotone mapping, and let $B : H \to H$ be a monotone and Lipschitz continuous mapping. Then the mapping $L = M + B : H \to 2^H$ is a maximal monotone mapping.

Lemma 2.4 (see [41]). Each Hilbert space $H$ satisfies Opial's condition; that is, for any sequence $\{x_n\} \subset H$ with $x_n \rightharpoonup x$, the inequality $\liminf_{n\to\infty}\|x_n - x\| < \liminf_{n\to\infty}\|x_n - y\|$ holds for each $y \in H$ with $y \ne x$.

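Lemma 2.5 (see [42]). Assume $\{a_n\}$ is a sequence of nonnegative real numbers such that $a_{n+1} \le (1 - \gamma_n)a_n + \delta_n$ for $n \ge 0$, where $\{\gamma_n\}$ is a sequence in $(0, 1)$ and $\{\delta_n\}$ is a sequence in $\mathbb{R}$ such that

- (i)

$\sum_{n=0}^{\infty} \gamma_n = \infty$;

- (ii)

$\limsup_{n\to\infty} \delta_n/\gamma_n \le 0$ or $\sum_{n=0}^{\infty} |\delta_n| < \infty$.

Then $\lim_{n\to\infty} a_n = 0$.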
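The sketch below runs the equality case $a_{n+1} = (1 - \gamma_n)a_n + \delta_n$ of the standard hypothesis of Lemma 2.5 with the illustrative (hypothetical) choices $\gamma_n = 1/(n+2)$, so $\sum \gamma_n = \infty$, and $\delta_n = \gamma_n/(n+1)$, so $\delta_n/\gamma_n \to 0$. For these sequences one checks by induction that $(n+1)a_n = a_0 + \sum_{k=1}^{n} 1/k$, so $a_n \to 0$, but only at the slow rate $\log n / n$.

```python
def run_recursion(a0: float, steps: int) -> list[float]:
    """Return a_0, ..., a_steps for a_{n+1} = (1 - g_n) a_n + d_n, where
    g_n = 1/(n + 2) and d_n = g_n/(n + 1) are illustrative choices."""
    a = [a0]
    for n in range(steps):
        g_n = 1.0 / (n + 2)   # in (0, 1), and sum g_n diverges: condition (i)
        d_n = g_n / (n + 1)   # d_n / g_n = 1/(n + 1) -> 0: condition (ii)
        a.append((1.0 - g_n) * a[-1] + d_n)
    return a


a = run_recursion(1.0, 10_000)
for n in (0, 10, 100, 1_000, 10_000):
    print(n, a[n])
```

The closed form makes the role of condition (i) visible: the decay comes entirely from the divergence of $\sum \gamma_n$, which is why the lemma gives convergence but no rate.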

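Lemma 2.6 (see [43]). Let $C$ be a closed convex subset of a real Hilbert space $H$, and let $T : C \to C$ be a nonexpansive mapping. Then $I - T$ is demiclosed at zero; that is, if $\{x_n\} \subset C$ converges weakly to $x$ and $x_n - Tx_n \to 0$, then $x = Tx$.

For the mixed equilibrium problems, we use the following conditions on a bifunction $F : C \times C \to \mathbb{R}$ and a function $\varphi : C \to \mathbb{R}$: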

- (A1)

F(x, x) = 0 for all x ∈ C;

- (A2)

F is monotone, that is, F(x, y) + F(y, x) ≤ 0 for any x, y ∈ C;

- (A3)

for each fixed y ∈ C, x ↦ F(x, y) is weakly upper semicontinuous;

- (A4)

for each fixed x ∈ C, y ↦ F(x, y) is convex and lower semicontinuous;

- (B1)

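for each $x \in C$ and $r > 0$, there exist a bounded subset $D_x \subseteq C$ and $y_x \in C$ such that, for any $z \in C \setminus D_x$, $F(z, y_x) + \varphi(y_x) - \varphi(z) + \frac{1}{r}\langle y_x - z, z - x \rangle < 0$;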

- (B2)

C is a bounded set.

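Lemma 2.7 (see [44]). Let $C$ be a nonempty closed and convex subset of a real Hilbert space $H$. Let $F : C \times C \to \mathbb{R}$ be a bifunction satisfying (A1)–(A4), and let $\varphi : C \to \mathbb{R}$ be a convex and lower semicontinuous function such that $C \cap \operatorname{dom}\varphi \ne \emptyset$. Assume that either (B1) or (B2) holds. For $r > 0$ and $x \in H$, there exists $u \in C$ such that $F(u, y) + \varphi(y) - \varphi(u) + \frac{1}{r}\langle y - u, u - x \rangle \ge 0$ for all $y \in C$. Define a mapping $K_r : H \to C$ by $K_r(x) = \{u \in C : F(u, y) + \varphi(y) - \varphi(u) + \frac{1}{r}\langle y - u, u - x \rangle \ge 0 \text{ for all } y \in C\}$. Then the following hold: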

- (i)

Kr is single-valued;

- (ii)

$K_r$ is firmly nonexpansive, that is, for any $x, y \in H$, $\|K_r x - K_r y\|^2 \le \langle K_r x - K_r y, x - y \rangle$;

- (iii)

F(Kr) = MEP (F);

- (iv)

MEP (F) is closed and convex.
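In the simplest case $F \equiv 0$, the inequality $F(u, y) + \varphi(y) - \varphi(u) + \frac{1}{r}\langle y - u, u - x \rangle \ge 0$ for all $y \in C$ (the standard definition of $K_r(x)$, which we assume is the one intended in Lemma 2.7) is exactly the optimality condition for minimizing $\varphi(u) + \frac{1}{2r}\|u - x\|^2$ over $C$, so $K_r$ is a proximal map. The sketch takes $H = \mathbb{R}$, $C = [-1, 1]$ and the hypothetical choice $\varphi(u) = c|u|$; in one dimension the constrained minimizer is the soft-thresholded point clipped to $C$. It spot-checks the defining inequality and property (ii). With $c = 0$ it reduces to $P_C$, as in Step 1 of Example 5.1.

```python
import random


def soft_threshold(x: float, t: float) -> float:
    """Proximal map of t*|.| on R (t >= 0)."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def mixed_resolvent(x: float, r: float, c: float,
                    lo: float = -1.0, hi: float = 1.0) -> float:
    """K_r(x) for F = 0 and phi(u) = c*|u| on C = [lo, hi] in H = R.
    It minimizes c*|u| + (u - x)^2/(2r) over C; in one dimension the
    constrained minimizer is the unconstrained one clipped to C."""
    return min(max(soft_threshold(x, r * c), lo), hi)


def satisfies_defining_inequality(u: float, x: float, r: float, c: float,
                                  lo: float = -1.0, hi: float = 1.0,
                                  samples: int = 401) -> bool:
    """Check phi(y) - phi(u) + (1/r)(y - u)(u - x) >= 0 (F = 0) on a grid of y in C."""
    step = (hi - lo) / (samples - 1)
    for i in range(samples):
        y = lo + i * step
        if c * abs(y) - c * abs(u) + (y - u) * (u - x) / r < -1e-12:
            return False
    return True


def firmly_nonexpansive_on_samples(r: float, c: float, trials: int = 2000,
                                   seed: int = 0) -> bool:
    """Spot-check |K_r x - K_r y|^2 <= (K_r x - K_r y)(x - y), property (ii)."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0)
        kx, ky = mixed_resolvent(x, r, c), mixed_resolvent(y, r, c)
        if (kx - ky) ** 2 > (kx - ky) * (x - y) + 1e-12:
            return False
    return True


print(mixed_resolvent(3.0, 1.0, 0.5), mixed_resolvent(0.8, 1.0, 0.5))
```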

Lemma 2.8 (see [29]). Assume $A$ is a strongly positive linear bounded operator on a Hilbert space $H$ with coefficient $\bar\gamma > 0$ and $0 < \rho \le \|A\|^{-1}$. Then $\|I - \rho A\| \le 1 - \rho\bar\gamma$.
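A small numerical check of the bound $\|I - \rho A\| \le 1 - \rho\bar\gamma$ (the standard form of this lemma), assuming $H = \mathbb{R}^2$ and a hypothetical symmetric positive definite matrix $A$. For such $A$, the largest admissible coefficient is $\bar\gamma = \lambda_{\min}(A)$ and $\|A\| = \lambda_{\max}(A)$; eigenvalues of a symmetric $2 \times 2$ matrix are computed in closed form so that no external library is needed.

```python
import math


def sym2_eigenvalues(a: float, b: float, d: float) -> tuple[float, float]:
    """Eigenvalues (ascending) of the symmetric matrix [[a, b], [b, d]]."""
    mid = (a + d) / 2.0
    rad = math.sqrt(((a - d) / 2.0) ** 2 + b * b)
    return mid - rad, mid + rad


def check_lemma_2_8(a: float, b: float, d: float, num_rhos: int = 50) -> bool:
    """For A = [[a, b], [b, d]] symmetric positive definite, take gamma_bar =
    lambda_min(A), so <Ax, x> >= gamma_bar*|x|^2, and test
    ||I - rho*A|| <= 1 - rho*gamma_bar for rho on a grid in (0, 1/||A||]."""
    lam_min, lam_max = sym2_eigenvalues(a, b, d)
    assert lam_min > 0, "A must be strongly positive"
    gamma_bar, norm_a = lam_min, lam_max
    for k in range(1, num_rhos + 1):
        rho = k / (num_rhos * norm_a)
        # I - rho*A is symmetric, so its spectral norm is the largest |eigenvalue|.
        mu1, mu2 = sym2_eigenvalues(1 - rho * a, -rho * b, 1 - rho * d)
        if max(abs(mu1), abs(mu2)) > 1 - rho * gamma_bar + 1e-12:
            return False
    return True


print(check_lemma_2_8(3.0, 1.0, 2.0))
```

In this symmetric case the bound is attained: the eigenvalues of $I - \rho A$ are $1 - \rho\lambda_i \ge 0$, and the largest is $1 - \rho\lambda_{\min}$.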

Lemma 2.9 (see [38]). Let $C$ be a nonempty closed and convex subset of a strictly convex Banach space. Let $\{T_i\}_{i=1}^{\infty}$ be an infinite family of nonexpansive mappings of $C$ into itself such that $\bigcap_{i\in\mathbb{N}} F(T_i) \ne \emptyset$, and let $\{\lambda_i\}$ be a real sequence such that $0 \le \lambda_i \le b < 1$ for every $i \in \mathbb{N}$. Then $F(W) = \bigcap_{i\in\mathbb{N}} F(T_i) \ne \emptyset$.

Lemma 2.10 (see [38]). Let $C$ be a nonempty closed and convex subset of a strictly convex Banach space. Let $\{T_i\}$ be an infinite family of nonexpansive mappings of $C$ into itself, and let $\{\lambda_i\}$ be a real sequence such that $0 \le \lambda_i \le b < 1$ for every $i \in \mathbb{N}$. Then, for every $x \in C$ and $k \in \mathbb{N}$, the limit $\lim_{n\to\infty} U_{n,k}x$ exists.

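In view of the previous lemma, we define the mapping $W : C \to C$ by $Wx := \lim_{n\to\infty} W_n x = \lim_{n\to\infty} U_{n,1}x$ for every $x \in C$.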
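Lemmas 2.9 and 2.10 concern the W-mapping generated by $\{T_i\}$ and $\{\lambda_i\}$, whose defining formula is not reproduced in this section. The sketch below assumes the standard Shimoji–Takahashi construction, $U_{n,n+1} = I$, $U_{n,k} = \lambda_k T_k U_{n,k+1} + (1 - \lambda_k)I$ for $k = n, \dots, 1$, and $W_n = U_{n,1}$. The maps $T_i$ (projections onto hypothetical intervals with common fixed-point set $[-1, 1]$) and the weights are illustrative; $Wx$ is approximated by $W_n x$ for large $n$, in the spirit of Lemma 2.10.

```python
from typing import Callable, List, Sequence

Map = Callable[[float], float]


def w_mapping(x: float, maps: Sequence[Map], lambdas: Sequence[float]) -> float:
    """Evaluate W_n x = U_{n,1} x, n = len(maps), via the (assumed) recursion
    U_{n,n+1} = I, U_{n,k} = lambda_k T_k U_{n,k+1} + (1 - lambda_k) I, k = n, ..., 1."""
    if len(maps) != len(lambdas):
        raise ValueError("need one weight lambda_k for each mapping T_k")
    value = x  # U_{n,n+1} x = x
    for t_k, lam_k in zip(reversed(maps), reversed(lambdas)):
        value = lam_k * t_k(value) + (1.0 - lam_k) * x  # now value = U_{n,k} x
    return value


def make_clip_maps(count: int) -> List[Map]:
    """Hypothetical nonexpansive maps T_i = projection onto [-1, 1 + 1/i];
    their common fixed-point set is [-1, 1]."""
    return [lambda x, i=i: min(max(x, -1.0), 1.0 + 1.0 / i) for i in range(1, count + 1)]


maps = make_clip_maps(60)
lambdas = [0.5] * 60  # 0 <= lambda_i <= b = 0.5 < 1
for n in (5, 20, 60):
    print(n, w_mapping(3.0, maps[:n], lambdas[:n]))
```

Common fixed points of the $T_i$ are fixed by every $W_n$, consistent with Lemma 2.9, and the printed values of $W_n(3)$ stabilize quickly because consecutive $W_n$ differ by a term of order $\prod_{i \le n} \lambda_i$.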

3. Strong Convergence Theorems

In this section, we prove a strong convergence theorem for finding a common element of the set of common fixed points of an infinite family of nonexpansive mappings, the set of common solutions of a system of mixed equilibrium problems, and the set of solutions of a variational inclusion for an inverse-strongly monotone mapping in a Hilbert space.

Theorem 3.1. Let $H$ be a real Hilbert space and $C$ a nonempty closed and convex subset of $H$, and let $B$ be a $\beta$-inverse-strongly monotone mapping. Let $\varphi : C \to \mathbb{R}$ be a convex and lower semicontinuous function, $f : C \to C$ a contraction mapping with coefficient $\alpha$ ($0 < \alpha < 1$), and $M : H \to 2^H$ a maximal monotone mapping. Let $A$ be a strongly positive linear bounded operator of $H$ into itself with coefficient $\bar\gamma > 0$. Assume that $0 < \gamma < \bar\gamma/\alpha$ and $\lambda \in (0, 2\beta)$. Let $\{T_n\}$ be a family of nonexpansive mappings of $H$ into itself such that

- (C1):

;

- (C2):

{rn} ⊂ [c, d] with c, d ∈ (0,2σ) and .

Then the sequence $\{x_n\}$ converges strongly to $q \in \theta$, where $q = P_\theta(\gamma f + I - A)(q)$, which solves the following variational inequality: $\langle (\gamma f - A)q, w - q \rangle \le 0$ for all $w \in \theta$.
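The limit $q$ is characterized by $q = P_\theta(\gamma f + I - A)(q)$, which by Lemma 2.2 is equivalent to $\langle (\gamma f - A)q, w - q \rangle \le 0$ for all $w \in \theta$. A one-dimensional sketch, assuming a hypothetical interval $\theta = [-1, 1]$ in place of the solution set, $A = aI$ with $0 < a \le 1$ (so $\bar\gamma = a$), and an affine contraction $f$: when $\gamma\alpha < a$, the map $x \mapsto P_\theta(\gamma f(x) + (1 - a)x)$ is a contraction with factor $1 - (a - \gamma\alpha)$, so Picard iteration finds $q$, and the variational inequality can then be spot-checked.

```python
def project_interval(x: float, lo: float, hi: float) -> float:
    """Metric projection P_theta onto theta = [lo, hi] in H = R."""
    return min(max(x, lo), hi)


def viscosity_fixed_point(f, a, gamma, lo, hi, x0=0.0, tol=1e-13, max_iter=10_000):
    """Picard iteration for q = P_theta(gamma*f(q) + (1 - a)*q), where A = a*I.
    With 0 < a <= 1 and f an alpha-contraction, the map is a contraction with
    factor 1 - (a - gamma*alpha) whenever gamma*alpha < a."""
    x = x0
    for _ in range(max_iter):
        x_next = project_interval(gamma * f(x) + (1.0 - a) * x, lo, hi)
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("no convergence; check gamma*alpha < a <= 1")


def vi_holds(q, f, a, gamma, lo, hi, samples=201):
    """Check <(gamma*f - A)q, w - q> <= 0 for w on a grid in theta."""
    residual = gamma * f(q) - a * q
    step = (hi - lo) / (samples - 1)
    return all(residual * ((lo + i * step) - q) <= 1e-12 for i in range(samples))


def f_affine(x: float) -> float:
    """Hypothetical contraction with coefficient alpha = 1/2."""
    return 0.5 * x + 3.0


q = viscosity_fixed_point(f_affine, a=0.8, gamma=1.0, lo=-1.0, hi=1.0)
print(q, vi_holds(q, f_affine, 0.8, 1.0, -1.0, 1.0))
```

Here $\gamma = 1 < \bar\gamma/\alpha = 1.6$, the contraction factor is $0.7$, and the solution $q = 1$ sits on the boundary of $\theta$, where the inequality is active.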

Proof. By condition (C1), we may assume without loss of generality that $\epsilon_n \in (0, \|A\|^{-1})$ for all $n$. By Lemma 2.8, we then have $\|I - \epsilon_n A\| \le 1 - \epsilon_n\bar\gamma$. Next, we will assume that .

Next, we will divide the proof into six steps.

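Step 1. First, we show that $\{x_n\}$ and $\{u_n\}$ are bounded. Since $B$ is a $\beta$-inverse-strongly monotone mapping, we have, for all $x, y \in H$, $\|(I - \lambda B)x - (I - \lambda B)y\|^2 \le \|x - y\|^2 + \lambda(\lambda - 2\beta)\|Bx - By\|^2$; in particular, $I - \lambda B$ is nonexpansive because $\lambda \in (0, 2\beta)$.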
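For a $\beta$-inverse-strongly monotone $B$ one has $\|(I - \lambda B)x - (I - \lambda B)y\|^2 \le \|x - y\|^2 + \lambda(\lambda - 2\beta)\|Bx - By\|^2$, which is what makes $I - \lambda B$ nonexpansive for $\lambda \in (0, 2\beta)$. As a hypothetical test case, $B = I - P_C$ with $C = [-1, 1]$ in $H = \mathbb{R}$ is 1-inverse-strongly monotone (it is firmly nonexpansive, as $P_C$ is). The sketch spot-checks the inequality at random pairs and shows that it fails if $\beta$ is overstated.

```python
import random


def clip(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Projection P_C onto C = [lo, hi]."""
    return min(max(x, lo), hi)


def b_operator(x: float) -> float:
    """Hypothetical test operator B = I - P_C on H = R; it is 1-inverse-strongly monotone."""
    return x - clip(x)


def ism_inequality_holds(lam: float, beta: float = 1.0,
                         trials: int = 10_000, seed: int = 0) -> bool:
    """Spot-check |(I - lam B)x - (I - lam B)y|^2 <= |x - y|^2 + lam(lam - 2 beta)|Bx - By|^2."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)
        bx, by = b_operator(x), b_operator(y)
        lhs = ((x - lam * bx) - (y - lam * by)) ** 2
        rhs = (x - y) ** 2 + lam * (lam - 2.0 * beta) * (bx - by) ** 2
        if lhs > rhs + 1e-9:
            return False
    return True


print(ism_inequality_holds(0.5), ism_inequality_holds(1.9), ism_inequality_holds(0.5, beta=2.0))
```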

Put $y_n := J_{M,\lambda}(u_n - \lambda Bu_n)$, $n \ge 0$. Since $J_{M,\lambda}$ and $I - \lambda B$ are nonexpansive mappings, it follows that
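To make the step $y_n = J_{M,\lambda}(u_n - \lambda Bu_n)$ concrete, the sketch assumes that $J_{M,\lambda}$ is the usual resolvent $(I + \lambda M)^{-1}$ (its definition is not reproduced in this section) and uses the hypothetical data $H = \mathbb{R}$, $M = \partial|\cdot|$, whose resolvent is soft-thresholding at level $\lambda$, and $B(x) = x - 2$, which is 1-inverse-strongly monotone. The composition $J_{M,\lambda}(I - \lambda B)$ is then nonexpansive for $\lambda \in (0, 2)$, which the code spot-checks.

```python
import random


def resolvent_abs(x: float, lam: float) -> float:
    """J_{M,lam} = (I + lam*M)^{-1} for M = subdifferential of |.|: soft-thresholding."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def b_operator(x: float) -> float:
    """Hypothetical B(x) = x - 2 (gradient of (x - 2)^2 / 2); it is 1-inverse-strongly monotone."""
    return x - 2.0


def forward_backward_step(u: float, lam: float) -> float:
    """y = J_{M,lam}(u - lam*B(u)): explicit step on B, then resolvent step on M."""
    return resolvent_abs(u - lam * b_operator(u), lam)


def is_nonexpansive_on_samples(lam: float, trials: int = 10_000, seed: int = 1) -> bool:
    """Spot-check |T x - T y| <= |x - y| for T = J_{M,lam}(I - lam*B)."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(-10.0, 10.0), rng.uniform(-10.0, 10.0)
        if abs(forward_backward_step(x, lam) - forward_backward_step(y, lam)) > abs(x - y) + 1e-12:
            return False
    return True


print(forward_backward_step(0.0, 0.5), is_nonexpansive_on_samples(0.5))
```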

Step 2. We claim that $\lim_{n\to\infty}\|x_{n+1} - x_n\| = 0$ and $\lim_{n\to\infty}\|y_{n+1} - y_n\| = 0$. From (3.2), we have

Step 3. Next, we show that $\lim_{n\to\infty}\|Bu_n - Bq\| = 0$.

Since $q \in \theta$, we have $q = J_{M,\lambda}(q - \lambda Bq)$. By (3.6) and (3.9), we get
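The identity $q = J_{M,\lambda}(q - \lambda Bq)$ is the fixed-point form of the inclusion $0 \in Bq + Mq$, and it holds for every $\lambda > 0$. With the hypothetical one-dimensional data $M = \partial|\cdot|$ and $B(x) = x - 2$ (and assuming $J_{M,\lambda} = (I + \lambda M)^{-1}$), the inclusion $0 \in q - 2 + \partial|q|$ has the unique solution $q = 1$. The sketch iterates the forward-backward map and confirms that the same point is recovered for several values of $\lambda$.

```python
def resolvent_abs(x: float, lam: float) -> float:
    """J_{M,lam} = (I + lam*M)^{-1} for M = subdifferential of |.| (soft-thresholding)."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def b_operator(x: float) -> float:
    """Hypothetical 1-inverse-strongly monotone B(x) = x - 2 on H = R."""
    return x - 2.0


def solve_inclusion(lam: float, x0: float = 0.0, tol: float = 1e-13,
                    max_iter: int = 100_000) -> float:
    """Iterate x <- J_{M,lam}(x - lam*B(x)). For this B the map contracts by
    |1 - lam|, so it converges for every lam in (0, 2) = (0, 2*beta)."""
    x = x0
    for _ in range(max_iter):
        x_next = resolvent_abs(x - lam * b_operator(x), lam)
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("forward-backward iteration did not converge")


for lam in (0.25, 0.5, 1.0, 1.5):
    q = solve_inclusion(lam)
    residual = resolvent_abs(q - lam * b_operator(q), lam) - q
    print(lam, q, residual)
```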

Step 4. We show the following:

- (i)

$\lim_{n\to\infty}\|x_n - u_n\| = 0$;

- (ii)

$\lim_{n\to\infty}\|u_n - y_n\| = 0$;

- (iii)

$\lim_{n\to\infty}\|y_n - W_n y_n\| = 0$.

Since $J_{M,\lambda}$ is 1-inverse-strongly monotone (that is, firmly nonexpansive), by (2.3) we compute

So we have $\|x_n - u_n\| \to 0$ and $\|u_n - y_n\| \to 0$ as $n \to \infty$. It follows that

Hence, we have

Moreover, we also have

Step 5. We show that and $\limsup_{n\to\infty}\langle(\gamma f - A)q, W_n y_n - q\rangle \le 0$. It is easy to see that $P_\theta(\gamma f + (I - A))$ is a contraction of $H$ into itself.

Indeed, since , we have

Next, we show that $\limsup_{n\to\infty}\langle(\gamma f - A)q, W_n y_n - q\rangle \le 0$, where $q = P_\theta(\gamma f + I - A)(q)$ is the unique solution of the variational inequality $\langle(\gamma f - A)q, w - q\rangle \le 0$ for all $w \in \theta$. We can choose a subsequence of $\{y_n\}$ such that

Next, we claim that $w \in \theta$. Since $\|y_n - W_n y_n\| \to 0$, $\|x_n - W_n x_n\| \to 0$, and $\|x_n - y_n\| \to 0$, by Lemma 2.6 we have .

Next, we show that . Since , for k = 1,2, 3, …, N, we know that

Since , from (A4) and the weak lower semicontinuity of $\varphi$, and . From (A1) and (A4), we have

Lastly, we show that $w \in I(B, M)$. Since $B$ is $\beta$-inverse-strongly monotone, $B$ is monotone and $(1/\beta)$-Lipschitz continuous. It follows from Lemma 2.3 that $M + B$ is maximal monotone. Let $(v, g) \in G(M + B)$; then $g - Bv \in M(v)$. Again since , we have , that is, . By virtue of the maximal monotonicity of $M + B$, we have

Step 6. Finally, we prove that $x_n \to q$. By (3.2) and the Cauchy–Schwarz inequality, we have

Since {xn} is bounded, where for all n ≥ 0. It follows that

Corollary 3.2. Let H be a real Hilbert space and C a nonempty closed and convex subset of H. Let B be β-inverse-strongly monotone and φ : C → ℛ a convex and lower semicontinuous function. Let f : C → C be a contraction with coefficient α (0 < α < 1), M : H → 2H a maximal monotone mapping, and {Tn} a family of nonexpansive mappings of H into itself such that

Then the sequence $\{x_n\}$ converges strongly to $q \in \theta$, where $q = P_\theta f(q)$, which solves the following variational inequality: $\langle (f - I)q, w - q \rangle \le 0$ for all $w \in \theta$.

Proof. Putting A ≡ I and γ ≡ 1 in Theorem 3.1, we can obtain the desired conclusion immediately.

Corollary 3.3. Let H be a real Hilbert space and C a nonempty closed and convex subset of H. Let B be β-inverse-strongly monotone, φ : C → ℛ a convex and lower semicontinuous function, and M : H → 2H a maximal monotone mapping. Let {Tn} be a family of nonexpansive mappings of H into itself such that

Then the sequence $\{x_n\}$ converges strongly to $q \in \theta$, where $q = P_\theta u$, which solves the following variational inequality: $\langle u - q, w - q \rangle \le 0$ for all $w \in \theta$.

Proof. Putting $f(x) \equiv u$ for all $x \in C$, for a fixed $u \in C$, in Corollary 3.2, we obtain the desired conclusion immediately.

Corollary 3.4. Let $H$ be a real Hilbert space and $C$ a nonempty closed and convex subset of $H$, let $B$ be a $\beta$-inverse-strongly monotone mapping, and let $A$ be a strongly positive linear bounded operator of $H$ into itself with coefficient $\bar\gamma > 0$. Assume that $0 < \gamma < \bar\gamma/\alpha$. Let $f : C \to C$ be a contraction with coefficient $\alpha$ ($0 < \alpha < 1$) and $\{T_n\}$ a family of nonexpansive mappings of $H$ into itself such that

Then the sequence $\{x_n\}$ converges strongly to $q \in \theta$, where $q = P_\theta(\gamma f + I - A)(q)$, which solves the following variational inequality: $\langle (\gamma f - A)q, w - q \rangle \le 0$ for all $w \in \theta$.

Proof. Taking F ≡ 0, φ ≡ 0, un = xn, and JM,λ = PC in Theorem 3.1, we can obtain the desired conclusion immediately.

4. Applications

In this section, we apply the iterative scheme (1.25) to find a common fixed point of a nonexpansive mapping and a strict pseudocontraction.

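Definition 4.1. A mapping $S : C \to C$ is called a strict pseudocontraction if there exists a constant $0 \le \kappa < 1$ such that $\|Sx - Sy\|^2 \le \|x - y\|^2 + \kappa\|(I - S)x - (I - S)y\|^2$ for all $x, y \in C$; in this case, $S$ is called a $\kappa$-strict pseudocontraction.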

Using Theorem 3.1, we first prove a strong convergence theorem for finding a common fixed point of a nonexpansive mapping and a strict pseudocontraction.

Theorem 4.2. Let $H$ be a real Hilbert space and $C$ a nonempty closed and convex subset of $H$; let $B$ be a $\beta$-inverse-strongly monotone mapping, $\varphi : C \to \mathbb{R}$ a convex and lower semicontinuous function, and $f : C \to C$ a contraction with coefficient $\alpha$ ($0 < \alpha < 1$); and let $A$ be a strongly positive linear bounded operator of $H$ into itself with coefficient $\bar\gamma > 0$. Assume that $0 < \gamma < \bar\gamma/\alpha$. Let $\{T_n\}$ be a family of nonexpansive mappings of $H$ into itself, and let $S$ be a $\kappa$-strict pseudocontraction of $C$ into itself such that

Then the sequence $\{x_n\}$ converges strongly to $q \in \theta$, where $q = P_\theta(\gamma f + I - A)(q)$, which solves the following variational inequality: $\langle (\gamma f - A)q, w - q \rangle \le 0$ for all $w \in \theta$.

Proof. Put $B \equiv I - S$. Then $B$ is $(1 - \kappa)/2$-inverse-strongly monotone, $F(S) = I(B, M)$, and $J_{M,\lambda}(x_n - \lambda Bx_n) = (1 - \lambda)x_n + \lambda Sx_n$. Hence, by Theorem 3.1, we obtain the desired result.
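A one-dimensional illustration of the reduction used in this proof, with a hypothetical mapping: $S(x) = -2x$ on $\mathbb{R}$ is not nonexpansive, but it is a $\kappa$-strict pseudocontraction with $\kappa = 1/3$ (since $4 \le 1 + 9\kappa$). Then $B = I - S$, that is, $B(x) = 3x$, should be $(1 - \kappa)/2 = 1/3$-inverse-strongly monotone, and the relaxed step $(1 - \lambda)x + \lambda Sx = x - \lambda Bx$ with $\lambda \in (0, 2/3)$ should drive iterates to $F(S) = \{0\}$. The sketch spot-checks all three claims.

```python
import random


def s_map(x: float) -> float:
    """Hypothetical S(x) = -2x on H = R; its fixed-point set is {0}."""
    return -2.0 * x


def is_strict_pseudocontraction(kappa: float, trials: int = 1000, seed: int = 2) -> bool:
    """Spot-check |Sx - Sy|^2 <= |x - y|^2 + kappa*|(I - S)x - (I - S)y|^2."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)
        lhs = (s_map(x) - s_map(y)) ** 2
        rhs = (x - y) ** 2 + kappa * ((x - s_map(x)) - (y - s_map(y))) ** 2
        if lhs > rhs + 1e-9:
            return False
    return True


def is_inverse_strongly_monotone(beta: float, trials: int = 1000, seed: int = 3) -> bool:
    """Spot-check <x - y, Bx - By> >= beta*|Bx - By|^2 for B = I - S."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0)
        bx, by = x - s_map(x), y - s_map(y)
        if (x - y) * (bx - by) < beta * (bx - by) ** 2 - 1e-9:
            return False
    return True


def relaxed_iteration(x0: float, lam: float, steps: int) -> float:
    """Iterate x <- (1 - lam) x + lam S x, i.e. x <- x - lam B x with B = I - S."""
    x = x0
    for _ in range(steps):
        x = (1.0 - lam) * x + lam * s_map(x)
    return x


kappa = 1.0 / 3.0
print(is_strict_pseudocontraction(kappa), is_inverse_strongly_monotone((1.0 - kappa) / 2.0))
print(relaxed_iteration(1.0, 0.5, 60))
```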

Corollary 4.3. Let H be a real Hilbert space and C a closed convex subset of H, and let B be β-inverse-strongly monotone and φ : C → ℛ a convex and lower semicontinuous function. Let f : C → C be a contraction with coefficient α (0 < α < 1) and Tn a nonexpansive mapping of H into itself, and let S be a κ-strictly pseudocontraction of C into itself such that

Then the sequence $\{x_n\}$ converges strongly to $q \in \theta$, where $q = P_\theta f(q)$, which solves the following variational inequality: $\langle (f - I)q, w - q \rangle \le 0$ for all $w \in \theta$.

Proof. Putting $A \equiv I$ and $\gamma \equiv 1$ in Theorem 4.2, we obtain the desired result.

5. Numerical Example

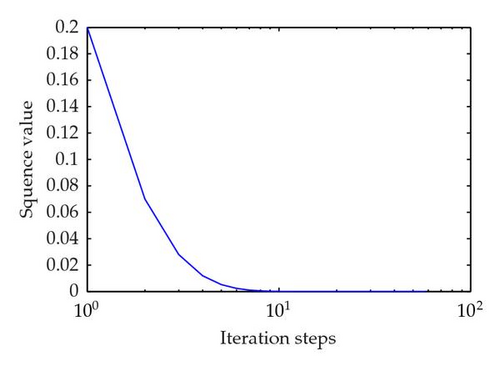

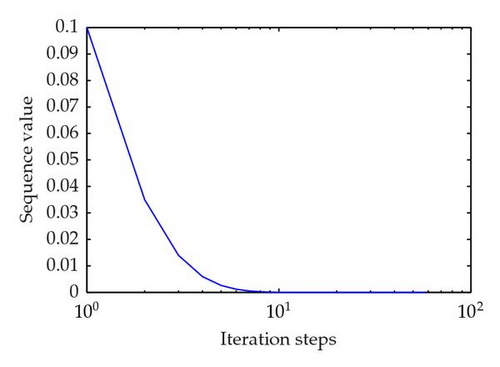

We now give a numerical example in which all the conditions of Theorem 3.1 are satisfied, together with numerical results that illustrate Theorem 3.1.

Example 5.1. Let $H = \mathbb{R}$, $C = [-1, 1]$, $T_n = I$ and $\lambda_n = \beta \in (0, 1)$ for $n \in \mathbb{N}$, $F_k(x, y) = 0$ for all $x, y \in C$, $r_{k,n} = 1$ for $k \in \{1, 2, 3, \dots, N\}$, $\varphi(x) = 0$ for all $x \in C$, $B = A = I$, $f(x) = \frac{1}{5}x$ for all $x \in H$ (a contraction with coefficient $\alpha = 1/5$), $\lambda = 1/2$, $\epsilon_n = 1/n$ for every $n \in \mathbb{N}$, and $\gamma = 1$. Then $\{x_n\}$ is the sequence generated by

Proof. We verify Example 5.1 in Steps 1, 2, and 3. In Step 4, we report two numerical experiments which show directly that $\{x_n\}$ converges strongly to 0.

Step 1. We show

Indeed, since $F_k(x, y) = 0$ for all $x, y \in C$ and $k \in \{1, 2, 3, \dots, N\}$, by the definition of $K_r(x)$ in Lemma 2.7 we have, for all $x \in H$, $\langle y - K_r(x), K_r(x) - x \rangle \ge 0$ for all $y \in C$.

Also, by the equivalent property (2.2) of the metric projection $P_C : H \to C$, this yields $K_r(x) = P_C(x)$; in particular, $K_r(x) = x$ when $x \in C$. By (iii) in Lemma 2.7, we have

Step 2. We show that

Indeed, by (1.23), we have

Computing in this way by (1.23), we obtain

Since $T_n = I$ and $\lambda_n = \beta$ for all $n \in \mathbb{N}$, we have

Step 3. We show that

Indeed, $A = I$ is a strongly positive bounded linear operator with coefficient $\bar\gamma = 1$, and $\gamma$ is a real number such that $0 < \gamma < \bar\gamma/\alpha$, so we can take $\gamma = 1$. Due to (5.1), (5.4), and (5.7), we obtain the following special case of the sequence $\{x_n\}$ in (3.2) of Theorem 3.1:

By Lemma 2.5, it is clear that $x_n \to 0$, and 0 is the unique solution of the minimization problem

Step 4. We report numerical results obtained with MATLAB 7.0 in Tables 1 and 2. They show that $\{x_n\}$ is monotonically decreasing and converges to 0, and that the convergence slows down as the number of iterations grows.

Table 1. Iterates $x_n$ for the initial value $x_1 = 1$.

| n | $x_n$ | n | $x_n$ |
|---|---|---|---|
| 1 | 1.000000000000000 | 31 | 0.000000000054337 |
| 2 | 0.200000000000000 | 32 | 0.000000000026643 |
| 3 | 0.070000000000000 | 33 | 0.000000000013072 |
| 4 | 0.028000000000000 | 34 | 0.000000000006417 |
| ⋮ | ⋮ | ⋮ | ⋮ |
| 19 | 0.000000301580666 | 39 | 0.000000000000184 |
| 20 | 0.000000146028533 | 40 | 0.000000000000091 |
| 21 | 0.000000070823839 | 41 | 0.000000000000045 |
| ⋮ | ⋮ | ⋮ | ⋮ |
| 29 | 0.000000000226469 | 47 | 0.000000000000001 |
| 30 | 0.000000000110892 | 48 | 0.000000000000000 |

Table 2. Iterates $x_n$ for the initial value $x_1 = 1/2$.

| n | $x_n$ | n | $x_n$ |
|---|---|---|---|
| 1 | 0.500000000000000 | 31 | 0.000000000027168 |
| 2 | 0.100000000000000 | 32 | 0.000000000013321 |
| 3 | 0.035000000000000 | 33 | 0.000000000006536 |
| 4 | 0.014000000000000 | 34 | 0.000000000003208 |
| ⋮ | ⋮ | ⋮ | ⋮ |
| 19 | 0.000000150790333 | 39 | 0.000000000000092 |
| 20 | 0.000000073014267 | 40 | 0.000000000000045 |
| 21 | 0.000000035411919 | 41 | 0.000000000000022 |
| ⋮ | ⋮ | ⋮ | ⋮ |
| 29 | 0.000000000113235 | 46 | 0.000000000000001 |
| 30 | 0.000000000055446 | 47 | 0.000000000000000 |

We now implement (3.2) to approximate a fixed point of $T$. We take the initial values $x_1 = 1$ and $x_1 = 1/2$, respectively. All the numerical results are given in Tables 1 and 2, and the corresponding graphs appear in Figures 1(a) and 1(b).
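The tabulated values can be recomputed with a short script. This is a sketch under an assumption: with $T_n = I$ (so $W_n = I$), $K_r = P_C$, $B = A = I$, $\lambda = 1/2$, $f(x) = x/5$, $\gamma = 1$, and $\epsilon_n = 1/n$, and assuming that (3.2) has the viscosity form $x_{n+1} = \epsilon_n\gamma f(W_n x_n) + (I - \epsilon_n A)W_n y_n$ with $J_{M,\lambda}$ leaving $y_n = x_n/2$ unchanged, the scheme collapses to $x_{n+1} = (1/2 - 3/(10n))x_n$. This reduced recursion is our reconstruction rather than a formula quoted from the paper, and the code is Python rather than the authors' MATLAB; it agrees with the entries of Tables 1 and 2.

```python
def example_5_1(x1: float, n_max: int) -> list[float]:
    """Iterates x_1, ..., x_{n_max} of the assumed reduced recursion
    x_{n+1} = eps_n*gamma*f(x_n) + (1 - eps_n)*y_n, with y_n = x_n/2,
    eps_n = 1/n, gamma = 1, f(x) = x/5; equivalently x_{n+1} = (1/2 - 3/(10n)) x_n."""
    xs = [x1]
    for n in range(1, n_max):
        eps = 1.0 / n
        x = xs[-1]
        xs.append(eps * (x / 5.0) + (1.0 - eps) * (x / 2.0))
    return xs


for x1, n_max in ((1.0, 48), (0.5, 47)):
    xs = example_5_1(x1, n_max)
    for n in (1, 2, 3, 4, 19, 20, 21, 29, 30, 31):
        print(f"{n:2d}  {xs[n - 1]:.15f}")
    print()
```

The ratio $x_{n+1}/x_n = 1/2 - 3/(10n)$ increases toward $1/2$, which explains why the tables show fast initial decay followed by a slower, essentially geometric rate.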

The numerical results, computed and plotted with MATLAB 7.11.0, support our main theorem.

Acknowledgments

The authors would like to thank the Higher Education Research Promotion and National Research University Project of Thailand, Office of the Higher Education Commission (under the project NRU-CSEC no. 54000267) for financial support. Furthermore, the second author was supported by the Commission on Higher Education, the Thailand Research Fund, and the King Mongkut's University of Technology Thonburi (KMUTT) (Grant no. MRG5360044). Finally, the authors would like to thank the referees for reading this paper carefully, providing valuable suggestions and comments, and pointing out major errors in the original version of this paper.