Construction of Optimal Derivative-Free Techniques without Memory

Abstract

Construction of iterative processes without memory, which are both optimal according to the Kung-Traub hypothesis and derivative-free, is considered in this paper. For this reason, techniques with four and five function evaluations per iteration, which reach to the optimal orders eight and sixteen, respectively, are discussed theoretically. These schemes can be viewed as the generalizations of the recent optimal derivative-free family of Zheng et al. in (2011). This procedure also provides an n-step family using n + 1 function evaluations per full cycle to possess the optimal order 2n. The analytical proofs of the main contributions are given and numerical examples are included to confirm the outstanding convergence speed of the presented iterative methods using only few function evaluations. The second aim of this work will be furnished when a hybrid algorithm for capturing all the zeros in an interval has been proposed. The novel algorithm could deal with nonlinear functions having finitely many zeros in an interval.

1. Introduction

The paper unfolds the contents as follows. A collection of pointers to known literature on derivative-free techniques will be presented in Section 2. This is followed by Section 3, whereas the central results are given and also by Section 4, where an optimal sixteenth-order derivative-free technique is constructed. Results and discussion on the comparisons with other famous derivative-free methods are presented in Section 5, entitled by Computational Tests. The only difficulty of iterative methods of this type is in the choice of the initial guess. Using the programming package MATHEMATICA 8, we will provide an algorithm to capture all the real solutions of a nonlinear equation in a short piece of time by presenting a hybrid algorithm in Section 6. The conclusions have finally been drawn in Section 7.

2. Selections from the Literature

The literature related to the present paper is substantial, and we do not present a comprehensive survey. The references of the papers we cite should be consulted for further reading. Consider iterative techniques for finding a simple root of the nonlinear equation f(x) = 0, where f : D⊆ℝ → ℝ for an open interval D = (a, b) is a scalar function and it is sufficiently smooth in a neighborhood of α ∈ D. The design of formulas for solving such equations is an absorbing task in numerical analysis [4]. The most frequent approach to solve the nonlinear equations consists of the implementation of rapidly convergent iterative methods starting from a reasonably good initial guess to the sought zero of f(x).

Many iterative methods have been improved by using various techniques such as quadrature formulas and weight function; see, for example, [5, 6]. All these developments are aimed at increasing the local order of convergence with a special view of increasing their efficiency indices. The derivative-involved methods are well discussed in the literature; see, for example, [7–9] and the references therein. But another thing that should be mentioned is that for many particular choices of the function f, specially in hard problems, the calculations of the derivatives are not possible or it takes a deal of time.

Thus, in some situations the considered function f(x) has an improper behavior or its derivative is close to 0, which causes that the applied iterative processes fail. That is why higher-order derivative-free methods are better root solvers and are in focus recently. The most important merit of Steffensen’s method is that it has quadratic convergence like Newton’s method. That is, both techniques estimate roots of the equation f(x) = 0 just as quickly. In this case, quickly means that the number of correct digits in the new obtained value doubles with each iteration for both. But the formula for Newton’s method requires a separate function for the derivative; Steffensen’s method does not. Now let us review some derivative-free techniques.

To see a recent paper including derivative-free methods with memory, refer to [13]. For further reading on this topic, refer to [14–19].

3. Construction of a New Eighth-Order Derivative-Free Class

Theorem 3.1. Assume f(x) to be a sufficiently continuous real function in the domain D. Then the sequence generated by (3.4) converges to the simple root α ∈ D with fourth-order convergence and it satisfies the follow-up error equation

Proof. See [20].

The given approximation (3.3) of the first derivative of the function in the second step of our cycle can be applied on any optimal second-order derivative-free method to provide a new optimal fourth-order method. For example, if one chooses a two-step cycle in which the first derivative of the function in the first step estimated by backward finite difference, and after that applies the presented approximation in the second step, then another novel optimal fourth-order method could be obtained. This will be discussed more in the rest of the work.

Remark 3.2. The simplified form of the approximation for the derivative in the quotient of Newton’s iteration at the second step of our cycle is as follows:

Inspired by the above approach, now we are about to construct new high-order derivative-free methods, which are optimal as well and can be considered as the generalizations of the methods in [2, 20, 21]. To construct such techniques, first we should consider an optimal fourth-order method in the first and second steps of a multistep cycle. Toward this end, we take into consideration the following three-step cycle in which Newton’s method is applied in the third step:

It is crystal clear that scheme (3.7) is an eighth-order method with five evaluations per iteration. The main question is that Is there any way to keep the order on eight but reduce the number of evaluation while the method be free from derivative? Fortunately, by using all four past known data, a very powerful approximation of f′(zn) will be obtained. Therefore, we approximate f(t) in the domain D, by a new polynomial approximation of degree four with a free parameter b4, as follows:

Hopefully, we have four known values f(xn), f(kn), f(yn), and f(zn). Thus, by substituting these values in (3.8), we obtain the following linear system of four equations with four unknowns:

Now, an efficient, accurate, and optimal eighth-order family, which is free from any derivative, can be obtained in the following form:

Remark 3.3. The simplified form of the approximation for the derivative in the quotient of Newton’s iteration at the third step of our cycle is as comes next:

Using Remark 3.3, we can propose the following optimal eighth-order derivative-free iteration in this paper with some free parameters:

Theorem 3.4. Assume that the function f : D⊆ℝ → ℝ has a single root α ∈ D, where D is an open interval. Then the convergence order of the derivative-free iterative method defined by (3.14) is eight and it satisfies the following the error equation:

Proof. To find the asymptotic error constant of (3.14), wherein cj = f(j)(α)/j!, j ≥ 1, and en = xn − α, we expand any terms of (3.14) around the simple root α in the nth iterate. Thus, we write . Accordingly, we attain

Noting that (3.14) is an extension of the Steffensen and the Ren et al. methods, we should here pull the attention toward this fact that (3.14) is also a generalization of the family given by Zheng et al. in [2]. As a matter of fact, our scheme (3.14) in this paper includes two free parameters (more than that of Zheng et al.), which shows the generality of our technique. Clearly, choosing a3 = b4 = 0 will result in the Zheng et al. method (2.4). Thus, it is only an special element from the family (3.14).

Remark 3.5. The introduced approximation for the first derivative of the function in the third step of (3.7) can be implemented on any optimal derivative-free fourth-order method without memory for presenting new optimal eighth-order techniques free from derivative. Namely, using (2.1) and (3.13) results in the following optimal eighth-order derivative-free method:

Remark 3.6. Using backward finite difference approximation for the first derivative of the function in the first step of our cycles will end in other new methods as comes next:

4. Higher-Order Optimal Schemes

Note that for the case of multipoint methods, it is more desirable to apply the symbolic calculations using one of the modern software in mathematics. Using Mathematica 8 gives us the unknown parameters by the command LinearSolve as in Algorithm 1.

-

Algorithm 1:

-

LinearSolve[{{k-x,((k-x) ∧2), ((k-x) ∧3),((k-x) ∧4) } ,

-

{ y-x, ((y-x) ∧2),((y-x) ∧3), ((y-x) ∧4) } , { z-x, ((z-x) ∧2),

-

((z-x) ∧3),((z-x) ∧4) } , { w-x, ((w-x) ∧2), ((w-x) ∧3),((w-x) ∧4)}},

-

{ f[k]-f[x]-r5∗(k-x) ∧4,f[y]-f[x]-r5∗(y-x) ∧4, f[z]-f[x]

-

-r5∗(z-x) ∧4,f[w]-f[x]-r5∗(w-x) ∧4}]//FullSimplify

Here to cut the long story short, we just provide the following theorem.

Theorem 4.1. The four-step method

Proof. The proof of this theorem is similar to the proofs of Theorems 3.1 and 3.4. Hence, it is omitted.

Remark 4.2. The simplified form of the approximation for the derivative in the quotient of Newton’s iteration at the fourth step of our cycle (without the index n) is as comes next:

Remark 4.3. Note that if we choose another optimal derivative-free eighth-order method in the first three steps of (4.4) and then implement the introduced approximation in the fourth-step, we will obtain a new technique of optimal sixteenth convergence rate to solve nonlinear scalar equations. In what follows, we give some of such optimal 16th-order schemes:

Remark 4.4. If one considers an n-step derivative-free construction in which the first n − 1 steps are any of the existing optimal derivative-free methods of order 2n−1, then by considering a polynomial approximation of degree n + 1 (with one free parameter, as in the idea above), as discussed above, an optimal derivative-free method of order 2n with the optimal efficiency index 2 will be attained.

Now, let us list the efficiency indices of some of the well-known methods with the contributed schemes in this study. The results of comparisons are made in Table 1. As it shows, our schemes are totally better than the existing methods in the literature in terms of efficiency index.

5. Computational Tests

The practical utilities of the main contributions in this paper are given by solving a couple of numerical examples and comparison with other well-known methods of different orders in this section. The main purpose of demonstrating the new derivative-free methods for nonlinear equations is purely to illustrate the accuracy of the approximate solution, the stability of the convergence, and the consistency of the results and to determine the efficiency of the new iterative techniques.

| Test functions | Simple zeros | Initial guesses |

|---|---|---|

| f1(x) = 3x + sin(x) − ex | α1 ≈ 0.360421702960324 | x0 = 0.2 |

| f2(x) = sin(x) − 0.5 | α2 ≈ 0.523598775598299 | x0 = 0.3 |

| f3(x) = x2 − ex − 3x + 2 | α3 ≈ 0.257530285439861 | x0 = 0.4 |

| f4(x) = x3 + 4x2 − 10 | α4 ≈ 1.365230013414097 | x0 = 1.37 |

| f5(x) = xe−x − 0.1 | α5 ≈ 0.111832559158963 | x0 = 0.2 |

| f6(x) = x3 − 10 | α6 ≈ 2.15443490031884 | x0 = 2.16 |

| α7 ≈ 1.679630610428450 | x0 = 1.4 | |

| f8(x) = cos (x) − x | α8 ≈ 0.739085133215161 | x0 = 0.3 |

The results are summarized in Tables 3 and 4, in terms of the required number of iterations to obtain an approximation of the root. In our numerical comparisons, we have used 2000-digit floating point in our calculations.

| Test functions | RM4 | (2.3) | (2.4) | (5.1) | (3.14) | (3.22) | (3.30) |

|---|---|---|---|---|---|---|---|

| |f1(x2)| | 0.4e − 13 | 0.1e − 57 | 0.4e − 45 | 0.2e − 52 | 0.1e − 44 | 0.2e − 57 | 0.2e − 65 |

| |f2(x2)| | 0.1e − 14 | 0.4e − 64 | 0.7e − 51 | 0.2e − 57 | 0.7e − 41 | 0.1e − 63 | 0.1e − 96 |

| |f3(x2)| | 0.4e − 20 | 0.1e − 83 | 0.2e − 82 | 0.7e − 82 | 0.2e − 61 | 0.5e − 83 | 0.5e − 79 |

| |f4(x2)| | 0.1e − 28 | 0.1e − 124 | 0.1e − 94 | 0.4e − 115 | 0.1e − 118 | 0.6e − 124 | 0.2e − 126 |

| |f5(x2)| | 0.6e − 15 | 0.1e − 59 | 0.1e − 34 | 0.6e − 49 | 0.1e − 53 | 0.1e − 53 | 0.1e − 73 |

| |f6(x2)| | 0.7e − 29 | 0.4e − 125 | 0.1e − 95 | 0.2e − 115 | 0.2e − 117 | 0.2e − 124 | 0.1e − 127 |

| |f7(x2)| | 0.1e − 4 | 0.2e − 24 | 0.1e − 14 | 0.5e − 9 | 0.3e − 26 | 0.1e − 33 | 0.1e − 16 |

| |f8(x2)| | 0.1e − 15 | 0.2e − 71 | 0.1e − 62 | 0.1e − 58 | 0.2e − 44 | 0.3e − 78 | 0.1e − 34 |

| Test functions | RM4 | (2.3) | (2.4) | (5.1) | (3.14) | (3.22) | (3.30) |

|---|---|---|---|---|---|---|---|

| |f1(x3)| | 0.3e − 54 | 0.1e − 466 | 0.1e − 363 | 0.1e − 422 | 0.3e − 361 | 0.5e − 463 | 0.6e − 529 |

| |f2(x3)| | 0.1e − 59 | 0.5e − 516 | 0.1e − 408 | 0.9e − 462 | 0.7e − 328 | 0.1e − 510 | 0.4e − 780 |

| |f3(x3)| | 0.2e − 84 | 0.1e − 676 | 0.3e − 668 | 0.1e − 663 | 0.1e − 497 | 0.1e − 673 | 0.2e − 640 |

| |f4(x3)| | 0.2e − 117 | 0.9e − 1004 | 0.2e − 761 | 0.3e − 927 | 0.1e − 955 | 0.2e − 999 | 0.6e − 1019 |

| |f5(x3)| | 0.6e − 60 | 0.5e − 478 | 0.2e − 275 | 0.7e − 391 | 0.1e − 427 | 0.2e − 429 | 0.7e − 591 |

| |f6(x3)| | 0.1e − 118 | 0.1e − 1008 | 0.3e − 768 | 0.7e − 930 | 0.2e − 946 | 0.1e − 1002 | 0.4e − 1030 |

| |f7(x3)| | 0.9e − 20 | 0.4e − 199 | 0.3e − 119 | 0.1e − 75 | 0.2e − 214 | 0.2e − 273 | 0.1e − 136 |

| |f8(x3)| | 0.3e − 66 | 0.1e − 578 | 0.3e − 507 | 0.1e − 476 | 0.3e − 360 | 0.1e − 634 | 0.4e − 283 |

As Tables 3 and 4 manifest, our contributed methods are accurate and efficient in contrast by the other high-order schemes in terms of required number of iterations. It is well known that convergence of iteration formula is guaranteed only when the initial approximation is sufficiently near to the root. This issue will be the main body of the next section. However, our class of derivative-free method is much better and has better convergence rate in contrast to the existing high-order derivative-free methods in the literature.

6. A Hybrid Algorithm to Capture All the Solutions

An important aspect in implementing the iterative methods for the solution of nonlinear equations and systems relies on the choice of the initial approximation. There are a few known ways in the literature [23] to extract a starting point for the solutions of nonlinear functions. In practice, users need to find out robust approximations for all the zeros in an interval. Thus, to remedy this and to respond on this need, we provide a way to extract all the real zeros of nonlinear function in the interval D = [a, b]. We use the command Reduce[] in MATHEMATICA 8 [24, 25]. Hence, we give a hybrid algorithm including two main steps, a predictor and a corrector. In the predictor step, we extract initial approximations for all the zeros in an interval up to 8 decimal places. Then in the corrector step, an eighth-order method, for example, (3.28), will be used to boost up the accuracy of the starting points up to any tolerance. We also give some significant cautions for applying on different test functions.

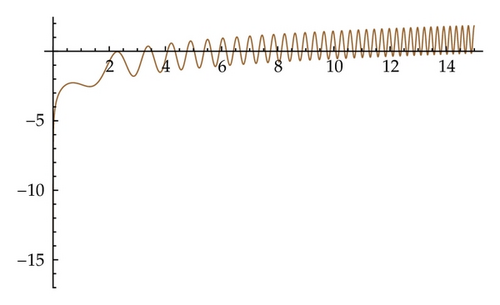

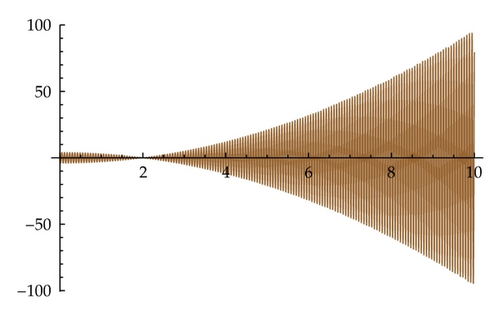

In what follows, we keep going by choosing an oscillatory function f(x) = −cos (2 − x2) + log (x/7) + (1/10) in the domain D = [0,15].

Let us define the function and the domain for imposing the Reduce[] command as in Algorithm 2.

-

Algorithm 2:

-

ClearAll[f, x, rts, initialValues, tol, a, b, j,

-

NumberOfGuesses, FD, nMax, beta, gamma, y, z, k,

-

fx, fk, fy, fz]

-

f[x_]:= Log[x/7] - Cos[x ∧2 - 2] + 1/10

-

a = 0; b = 15;

-

Plot[f[x], { x, a, b } , Background -> LightBlue,

-

PlotStyle -> {Brown, Thick}, PlotRange -> All,

-

PerformanceGoal -> "Quality"]

-

rts = Reduce[f[x] == 0 && a < x < b, x];

One may note that Reduce[] works with function of exact arithmetic. Hence, if a nonlinear function is the floating point arithmetic, that is, has inexact coefficients, thus we should write it in the exact arithmetic when we enter it into the above piece of code.

Now we store the list of initial approximations in initialValues, by the following piece of code, which also sort the initial points. The tol will specify that the accuracy of each member of the provided sequence to be correct up to utmost tol, decimal places (Algorithm 3).

-

Algorithm 3:

-

tol = 8;

-

initialValues = Sort[N[x /. { ToRules[rts]}, tol]]

-

Length[initialValues]

-

Accuracy[initialValues]

It is obvious that f is so oscillatory, and by the above predictor piece of Mathematica code, we attain that it has 69 real solutions. Note that the graph of the function f has been drawn in Figure 1. Now it is time to use one of the derivative-free methods given in Section 2 or 3 for correcting the elements of the obtained list. We use the method (3.28) in Algorithm 4.

-

Algorithm 4:

-

nMax = 2; beta = SetAccuracy[1, 2000];

-

gamma = SetAccuracy[0, 2000];

-

For[j = 1, j <= NumberOfGuesses, j++,

-

{x = SetAccuracy[initialValues[[j]], 2000],

-

Do[

-

fx = SetAccuracy[f[x], 2000];

-

k = SetAccuracy[x + beta fx, 2000];

-

fk = SetAccuracy[f[k], 2000];

-

FD = SetAccuracy[(fk - fx)/(beta fx), 2000];

-

y = SetAccuracy[x - fx/FD, 2000];

-

fy = SetAccuracy[f[y], 2000];

-

z = SetAccuracy[y - (fy/FD)*(1 + ((2 + beta FD)/(1

-

+ beta FD)) (fy/fx)), 2000];

-

fz = SetAccuracy[f[z], 2000];

-

FDxz = SetAccuracy[(fz - fx)/(z - x), 2000];

-

FDxy = SetAccuracy[(fx - fy)/(x - y), 2000];

-

FDxk = SetAccuracy[(fk - fx)/(k - x), 2000];

-

x = SetAccuracy[z - fz/(FDxz + ((FDxk - FDxy)/(k - y)

-

- (FDxk - FDxz)/(k - z)

-

- (FDxy - FDxz)/(y - z))*(x - z)

-

+ gamma (z - x)*(z - k)*(z - y)), 2000];

-

, {n, nMax}];

-

Print[Column[{

-

N[x, 128],

-

N[f[x]]}

-

, Background -> {{LightGreen, LightGray}},

-

Frame -> True]];

-

}

-

];

In Algorithm 4, we have the ability to choose the nonzero free parameter β, as well as the parameter gamma. Note that for optimal eighth-order methods and with such an accurate list of initial points, we believe that only two full iterations are required. Hence, we have selected nMax = 2. Note that if a user needs much more accuracy, thus higher number of steps should be taken. It should be remarked that in order to work with such a high accuracy, we must then choose more than 2000 decimal places arithmetic in our calculations.

Due to page limitations, we are not able to list the results for all 69 zeros. However, running the above algorithm could capture all the real zeros of the nonlinear functions. We also remark three points. One is that for many oscillatory function or for nonsmooth functions, the best way is to first divide the whole interval into some subintervals and then find all the zeros of the function on the subintervals. And second, in case of having a root cluster, that is, when the zeros are concentrated on a very small area, then it would be better to increase the first tolerance of our algorithm in the predictor step, to find reliable starting points and then start the process. And last, if the nonlinear function has an exact solution, that is to say, an integer be the solution of a nonlinear function, then the first step of our algorithm finds this exact solution, and an error-like message would be generated by applying our second step.

Now we are able to solve nonlinear equations with finitely many roots in an interval and find all the real zeros in a short piece of time. Finding robust ways, to capture the complex solutions along working with complex nonlinear functions, can be taken into account as future works.

7. Concluding Comments

In a word, a general way for producing optimal methods based upon the still unproved hypothesis of Kung-Traub [3] has been discussed fully. In these methods, it is necessary to begin with one initial approximation x0. The convergence results of the iterative sequences generated by new algorithms were provided. Our contributed techniques without memory reach the optimal efficiency indices 81/4 ≈ 1.682 and 161/5 ≈ 1.741.

In the limitation case, based on a polynomial of degree n + 1 for a method with n steps, we can obtain an iterative scheme with efficiency index 2, which is the highest possible efficiency in this field of study for methods without memory. By numerical experiments above, we verify that new methods are effective and accurate. We conclude that the new methods presented in this paper are competitive with other recognized efficient equation solvers.

Note that construction of some ways, which launch n-step Steffensen-type methods with memory with the order up to 2n + 2n−1 (50% of an improvement) requiring the same computational cost and applying elegant and quite natural accelerating procedure, could be considered as future works.

Note that the second aim of this paper was given by presenting a robust algorithm for solving nonlinear equations with finitely many roots in an interval. In fact, a way for extracting very robust initial approximations as the seeds to start the iterative Steffensen-type methods was presented. Now we are able to capture all the solutions with any desire number of decimal places.

Acknowledgment

The second author is an IEEE member.