New Nonsmooth Equations-Based Algorithms for ℓ1-Norm Minimization and Applications

Abstract

Recently, Xiao et al. proposed a nonsmooth equations-based method to solve the ℓ1-norm minimization problem (2011). The advantage of this method is its simplicity and lower storage. In this paper, based on new nonsmooth equations reformulation, we investigate new nonsmooth equations-based algorithms for solving ℓ1-norm minimization problems. Under mild conditions, we show that the proposed algorithms are globally convergent. The preliminary numerical results demonstrate the effectiveness of the proposed algorithms.

1. Introduction

The convex optimization problem (1.1) can be cast as a second-order cone programming problem and thus could be solved via interior point methods. However, in many applications, the problem is not only large scale but also involves dense matrix data, which often precludes the use and potential advantage of sophisticated interior point methods. This motivated the search of simpler first-order algorithms for solving (1.1), where the dominant computational effort is a relatively cheap matrix-vector multiplication involving A and AT. In the past few years, several first-order algorithms have been proposed. One of the most popular algorithms falls into the iterative shrinkage/thresholding (IST) class [6, 7]. It was first designed for wavelet-based image deconvolution problems [8] and analyzed subsequently by many authors, see, for example, [9–11]. Figueiredo et al. [12] studied the gradient projection and Barzilai-Borwein method [13] (denoted by GPSR-BB) for solving (1.1). They reformulated problem (1.1) as a box-constrained quadratic program and solved it by a gradient projection and Barzilai-Borwein method. Wright et al. [14] presented sparse reconstruction algorithm (denoted by SPARSA) to solve (1.1). Yun and Toh [15] proposed a block coordinate gradient descent algorithm for solving (1.1). Yang and Zhang [16] investigated alternating direction algorithms for solving (1.1).

Quite recently, Xiao et al. [17] developed a nonsmooth equations-based algorithm (called SGCS) for solving ℓ1-norm minimization problems in CS. They reformulated the box-constrained quadratic program obtained by Figueiredo et al. [12] into a system of nonsmooth equations and then applied the spectral gradient projection method [18] to solving the nonsmooth equation. The main advantage of the SGCS is its simplicity and lower storage. The difference between the above algorithms and SGCS is that SGCS did not use line search to decrease the value of objective function at each iteration and instead used a projection step to accelerate the iterative process. However, each projection step in SGCS requires two matrix-vector multiplication involving A or AT, which means that each iteration requires matrix-vector multiplication involving A or AT four times, while each iteration in GPSR-BB and IST is only two times. This may bring in more computational complexity. In addition, the dimension of the system of nonsmooth equations is 2n, which is twice of the original problems. These drawbacks motivate us to study new nonsmooth equations-based algorithms for the ℓ1-norm minimization problem.

In this paper, we first reformulate problem (1.1) into a system of nonsmooth equations. This system is Lipschitz continuous and monotone and many effective algorithms (see, e.g., [18–22]) can be used to solve it. We then apply spectral gradient projection (denoted by SGP) method [18] to solve the resulting system. Similar to SGCS, each iteration in SGP requires matrix-vector multiplication involving A or AT four times. In order to reduce the computational complexity, we also propose a modified SGP (denoted by MSGP) method to solve the resulting system. Under mild conditions, the global convergence of the proposed algorithms will be ensured.

The remainder of the paper is organized as follows. In Section 2, we first review some existing results of nonsmooth analysis and then derive an equivalent system of nonsmooth equations to problem (1.1). We verify some nice properties of the resulting system in this section. In Section 3, we propose the algorithms and establish their global convergence. In Section 4, we apply the proposed algorithms to some practical problems arising from compressed sensing and image restoration and compare their performance with that of SGCS, SPARSA, and GPSR-BB.

Throughout the paper, we use 〈· , ·〉 to denote the inner product of two vectors in Rn.

2. Preliminaries

Proposition 2.1. Let τ > 0 be any given constant. A point x* ∈ ℝn is a solution of problem (1.1) if and only if it satisfies

The above proposition has reformulated problem (1.1) as a system of nonsmooth equations. Compared with the nonsmooth equation reformulation in [17], the dimension of (2.6) is only half of the dimension of the equation in [17].

Proposition 2.2. For each τ > 0, there exists a positive constant L(τ) such that

Proof. By (2.10) and (2.12), we have

The following proposition shows another good property of the system of nonsmooth equations (2.6).

Proposition 2.3. There exists a constant τ* > 0 such that for any 0 < τ ≤ τ*, the mapping Hτ : ℝn → ℝn is monotone, that is

Proof. Let Dii be the ith diagonal element of ATA. It is clear that Dii > 0, i = 1, …, n. Set τ*≜mini{1/Dii}. Note that ATA is symmetric and positive semidefinite. Consequently, for any τ ∈ (0, τ*], matrix T(I − S) + τ(I − T + TS)ATA is also positive semidefinite. Therefore, it follows from (2.12) that

3. Algorithms and Their Convergence

In this section, we describe the proposed algorithms in detail and establish their convergence. Let τ > 0 be given. For simplicity, we omit τ and abbreviate Hτ(·) as H(·).

Algorithm 3.1 . (spectral gradient projection method (abbreviated as SGP)). Given initial point x0 ∈ ℝn and constants θ0 = 1, r > 0, ν ≥ 0, σ > 0, γ ∈ (0,1). Set k : = 0.

Step 1. Compute dk by

Step 2. Determine steplength with mk being the smallest nonnegative integer m such that

Remark 3.2. (i) The idea of the above algorithm comes from [18]. The major difference between Algorithm 3.1 and the method in [18] lies in the definition of yk−1. The choice of yk−1 in Step 1 follows from the modified BFGS method [26]. The purpose of the term is to make yk−1 be closer to H(xk) − H(xk−1) as xk tends to a solution of (2.6).

(ii) Step 3 is called the projection step. It is originated in [20]. The advantage of the projection step is to make xk+1 closer to the solution set of (2.6) than xk. We refer to [20] for details.

(iii) Since −〈H(xk), dk〉 = ∥H(xk)∥∥dk∥, by the continuity of H, it is easy to see that inequality (3.3) holds for all m sufficiently large. Therefore Step 2 is well defined and so is Algorithm 3.1.

The following lemma comes from [20].

Lemma 3.3. Let H : ℝn → ℝn be monotone and x, y ∈ ℝn satisfy 〈H(y), x − y〉>0. Let

The following theorem establishes the global convergence for Algorithm 3.1.

Theorem 3.4. Let {xk} be generated by Algorithm 3.1 and x* a solution of (2.6). Then one has

Proof. The proof is similar to that in [18]. We omit it here.

Remark 3.5. The computational complexity of each of SGP’s steps is clear. In large-scale problems, most of the work is matrix-vector multiplication involving A and AT. Steps 1 and 2 of SGP require matrix-vector multiplication involving A or AT two times each, while each iteration in GPSR-BB involves matrix-vector multiplication only two times. This may bring in more computational complexity. Therefore, we give a modification of SGP. The modified algorithm, which will be called MSGP in the rest of the paper, coincides with SGP except at Step 3, whose description is given below.

Algorithm 3.6 (modified spectral gradient projection Method (abbreviated as MSGP)). Given initial point x0 ∈ ℝn and constants θ0 = 1, r > 0, σ > 0, γ ∈ (0,1) a positive integer M. Set k : = 0.

Step 3. Let m = k/M. If m is a positive integer, compute

Lemma 3.7. Assume that {xk} is a sequence generated by Algorithm 3.6 and x* ∈ ℝn satisfies H(x*) = 0. Let λmax(ATA) be the maximum eigenvalue of ATA and τ ∈ (0,1/λmax(ATA)]. Then it holds that

Proof. Let xk+1 be generated by (3.8). It follows from Lemma 3.3 that (3.9) holds. In the following, we assume that xk+1 = zk. Then, we obtain

Now we establish a global convergence theorem for Algorithm 3.6.

Theorem 3.8. Let λmax(ATA) be the maximum eigenvalue of ATA and τ ∈ (0,1/λmax(ATA)]. Assume that {xk} is generated by Algorithm 3.6 and x* is a solution of (2.6). Then one has

Proof. We first note that if the algorithm terminates at some iteration k, then we have dk = 0 or ∥H(zk)∥ = 0. By the definition of θk, we have H(xk) = 0 if dk = 0. This shows that either xk or zk is a solution of (2.6).

Suppose that dk ≠ 0 and ∥H(zk)∥ ≠ 0 for all k. Then an infinite sequence {xk} is generated. It follows from (3.3) that

- (i)

liminfm→∞∥H(xmM)∥ = 0;

- (ii)

liminfm→∞∥H(xmM)∥ = ϵ > 0.

If (i) holds, then by the continuity of H and the boundedness of {xmM}, it is clear that the sequence {xmM} has some accumulation point x* such that H(x*) = 0. Since the sequence {∥xk − x*∥} converges, it must hold that {xk} converges to x*.

If (ii) holds, then by the boundedness of {xmM} and the continuity of H, there exist a positive constant C and a positive integer m0 such that

4. Applications to Compressed Sensing and Image Restoration

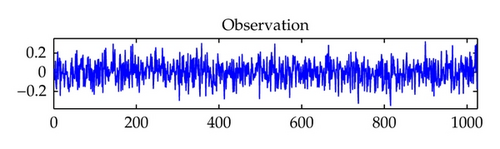

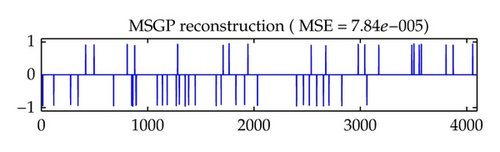

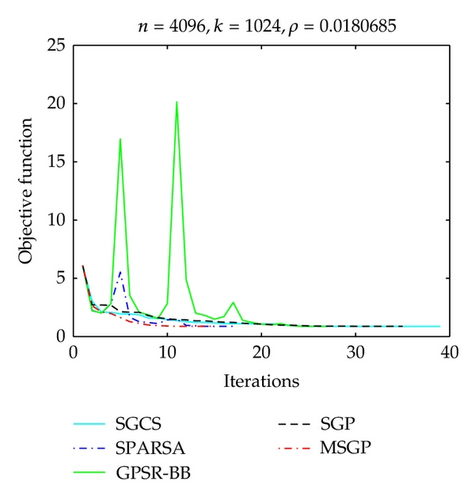

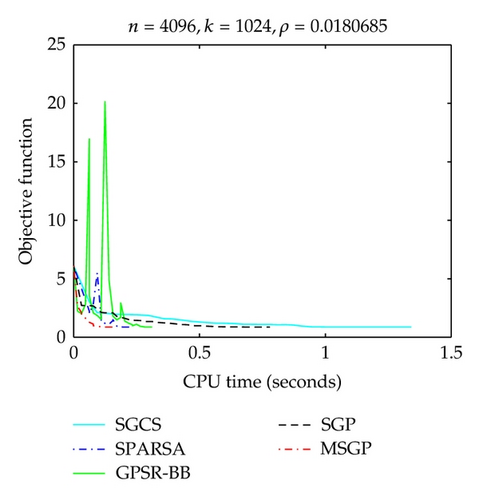

The original signal and the estimation obtained by solving (1.1) using the MSGP method are shown in Figure 1. We can see from Figure 1 that MSGP does an excellent job at locating the spikes with respect to the original signal. In Figure 2, we plot the evolution of the objective function versus iteration number and CPU time, for these algorithms. It is readily to see that MSGP worked faster than other algorithms.

| Ima | SGCS | SPARSA | GPSR-BB | SGP | MSGP | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Iter | Time | SNR | Iter | Time | SNR | Iter | Time | SNR | Iter | Time | SNR | Iter | Time | SNR | |

| House | 53 | 12.15 | 30.68 | 19 | 0.85 | 30.35 | 25 | 1.28 | 30.44 | 38 | 5.27 | 30.61 | 16 | 0.78 | 30.82 |

| Cameraman | 59 | 9.55 | 22.42 | 19 | 0.87 | 23.64 | 23 | 1.06 | 22.67 | 48 | 4.81 | 22.50 | 17 | 0.79 | 23.36 |

| Barbara | 150 | 234.05 | 22.95 | 29 | 7.08 | 23.72 | 41 | 12.78 | 22.93 | 62 | 42.12 | 23.06 | 26 | 6.92 | 23.09 |

It is easy to see from Table 1 that the MSGP is competitive with the well-known algorithms: SPARSA and GPSR-BB, in computing time and number of iterations and improves the SGCS greatly. Therefore we conclude that the MSGP provides a valid approach for solving ℓ1-norm minimization problems arising from image restoration problems.

Preliminary numerical experiments show that SGP and MSGP algorithms have improved SGCS algorithm greatly. This may be because the system of nonsmooth equations solved here has lower dimension than that in [17] and the modification to projection steps that we made reduces the computational complexity.

Acknowledgments

The authors would like to thank the anonymous referee for the valuable suggestions and comments. L. Wu was supported by the NNSF of China under Grant 11071087; Z. Sun was supported by the NNSF of China under Grants 11126147 and 11201197.