Sensitivity Analysis to Select the Most Influential Risk Factors in a Logistic Regression Model

Abstract

Traditional variable selection methods for survival data rely on iterative procedures, and controlling these procedures requires tuning parameters that are problematic and time consuming to choose, especially when the models are complex and contain a large number of risk factors. In this paper, we propose a new method based on global sensitivity analysis (GSA) to select the most influential risk factors. The method simplifies the logistic regression model by excluding irrelevant risk factors, which removes the need to fit and evaluate a large number of models. Data from medical trials are used to test the efficiency and capability of the method and to simplify the model, which leads to the construction of an appropriate model. The proposed method also ranks the risk factors according to their importance.

1. Introduction

Sensitivity analysis (SA) plays a central role in a variety of statistical methodologies, including classification and discrimination, calibration, comparison, and model selection [1]. SA can also be used to determine which subset of input factors (if any) accounts for most of the output variance, and in what proportion; factors that account for only a small percentage can be fixed at any value within their range [2]. In this usage, the focus is on identifying the important variables in order to simplify the model, and the original motivation for our research was the search for the best way to make this identification. SA has been widely used in normal regression models to extract the important input variables from a complex model and arrive at a reduced model with equivalent predictive power. However, it has seen limited use for selecting risk factors in survival regression models, even though such models often contain a large number of risk factors. This gap motivates the development of a new SA-based variable selection method that avoids the drawbacks of traditional methods and simplifies survival regression models by choosing appropriate subsets of risk factors.

A considerable number of variable selection methods have been proposed in the literature. The fundamental developments are squarely in the context of normal regression models, particularly multivariate linear regression models [3]. A comprehensive review of many variable selection methods is given in [4]. Methods such as forward, backward, and stepwise selection, and subset selection based on the Akaike information criterion (AIC) and the Bayesian information criterion (BIC), are available; however, none of these methods can be recommended for use in a logistic regression model or in other survival regression models. They give incorrect estimates of the standard errors and P-values, and they can delete variables whose inclusion is critical [3]. In addition, these methods treat all the risk factors as equal and identify the candidate subset of variables sequentially; furthermore, most of them focus on main effects and ignore higher-order effects (interactions between variables).

Newer methods of variable selection, such as the least absolute shrinkage and selection operator (LASSO) [5] and the smoothly clipped absolute deviation (SCAD) method [6], have recently attracted considerable attention in the field of survival regression models. These methods use penalized likelihood estimation and shrinkage regression. They differ from traditional methods in that they delete nonsignificant covariates from the model by estimating their effects as 0. A useful feature of these methods is that they perform estimation and variable selection simultaneously; nevertheless, they suffer from some computational problems and limitations in their statistical properties, which are discussed in more detail in [7, 8].

This study uses SA to develop an effective, efficient, and time-saving variable selection method for survival regression models, in which the best subsets are identified according to specified criteria without fitting all possible subset regression models. The remainder of this paper is organized as follows: Section 2 gives the background for building a logistic regression model, and Section 3 presents the proposed method. The results of applying this method and the logistic regression model are the subject of Section 4, and Section 5 contains the discussion and conclusions.

2. Background of Constructing a Logistic Regression Model

Often the response variable in clinical data is not numerical but binary (e.g., alive or dead, diseased or not diseased). In that case, a binary logistic regression model is an appropriate way to represent the relationship between the disease outcome and its risk factors. It is a form of regression used when the response variable (the disease measurement) is dichotomous and the risk factors may be of any type [9]. A logistic regression model does not assume a linear relationship between the risk factors and the response variable, nor does it require normally distributed variables. It also does not assume homoscedasticity and, in general, has less stringent requirements than linear regression models. It does, however, require that the observations be independent and that the risk factors be linearly related to the logit of the response variable [10]. Models of the association between risk factors and binary response variables arise in many disciplines, such as medicine, engineering, and the natural sciences. How should the relationship between risk factors and a binary response variable be modeled? The answer to this question is the subject of the following subsections.

2.1. Constructing a Logistic Regression Model

The first step in modeling binomial data is, instead of fitting a linear model for the probability of success as a function of the risk factors, to transform the probability scale from the range (0, 1) to (−∞, +∞). The logistic transformation, or logit, of the probability of success π is log{π/(1 − π)}; it is written logit(π) and is the log odds of success. Any value of π in (0, 1) corresponds to a value of logit(π) in (−∞, +∞). For binary data, the relationship between π(x) and x is usually nonlinear: a fixed change in x has less impact when π(x) is near 0 or 1 than when π(x) is near 0.5. This is illustrated in Figure 1 (see [11]).
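As a small illustration of the logit transformation and of the nonlinearity just described, the Python sketch below implements logit(π), its inverse, and the slope dπ/dx = β1 π(1 − π) for a single risk factor with logit(π(x)) = β0 + β1x. The coefficient values in the usage lines are hypothetical; the point is only that the same unit change in x moves π far less when π is near 1 than when it is near 0.5.

```python
import math

def logit(p: float) -> float:
    """Log odds of success: maps p in (0, 1) onto (-inf, +inf)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))

def inv_logit(eta: float) -> float:
    """Inverse logit: maps a linear predictor eta back to a probability in (0, 1)."""
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)  # avoids overflow of exp(-eta) for large negative eta
    return e / (1.0 + e)

def slope(beta0: float, beta1: float, x: float) -> float:
    """d pi(x) / dx when logit(pi(x)) = beta0 + beta1 * x; equals beta1 * pi * (1 - pi)."""
    p = inv_logit(beta0 + beta1 * x)
    return beta1 * p * (1.0 - p)

# Hypothetical coefficients beta0 = 0, beta1 = 1:
print(slope(0.0, 1.0, 0.0))  # pi = 0.5, slope 0.25
print(slope(0.0, 1.0, 3.0))  # pi ~ 0.95, slope ~ 0.045
```

The slope is largest at π = 0.5 and shrinks toward 0 as π approaches 0 or 1, which is the S-shaped behavior shown in Figure 1.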

2.2. Fitting Logistic Regression Models

2.3. Evaluating the Fitted Model

A simple model that fits adequately has the advantage of parsimony. If a model has relatively little bias and describes reality well, it tends to provide more accurate estimates of the quantities of interest. Agresti [9] stated that “we are mistaken if we think that we have found the true model, because any model is a simplification of reality.” In light of this, what is the logic of testing the fit of a model when we know that it does not truly hold? The answer lies in evaluating specific properties of the model with criteria such as the deviance, R², the Wald and score tests, the Pearson chi-square test, and the Hosmer-Lemeshow chi-square test; for more details, see [9, 10]. The first stage of building a model usually involves a large number of risk factors, and including all of them may lead to a model that is unattractive from a statistical viewpoint. Thus, as an important step towards an acceptable model, a decision should be made early about the methodology for selecting the appropriate and important risk factors. Because traditional variable selection methods have many limitations when applied to survival regression models, a new variable selection method is developed here that uses GSA to select the most influential factors in the model. This is the subject of the following section.
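Several of the fit criteria named above can be computed directly from the observed responses and the fitted probabilities. The sketch below is a minimal NumPy version, assuming ungrouped 0/1 data with both outcomes present, at least `groups` observations, and the usual Hosmer-Lemeshow grouping of sorted fitted probabilities into (by default ten) groups; it returns the Hosmer-Lemeshow statistic with its g − 2 degrees of freedom rather than a p-value. The function name `fit_criteria` is our own and not part of any package.

```python
import numpy as np

def fit_criteria(y, p, groups=10):
    """Goodness-of-fit summaries for a fitted binary logistic regression model.

    y: observed 0/1 responses; p: fitted probabilities. Assumes both outcomes
    occur and there are at least `groups` observations.
    """
    y = np.asarray(y, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), 1e-12, 1 - 1e-12)
    n = y.size
    ll = np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))            # log L, fitted model
    ybar = y.mean()
    ll0 = n * (ybar * np.log(ybar) + (1 - ybar) * np.log(1 - ybar))  # log L, intercept only
    pearson = np.sum((y - p) ** 2 / (p * (1 - p)))
    # Hosmer-Lemeshow: sort by fitted probability, split into groups,
    # and compare observed with expected numbers of events in each group.
    hl = 0.0
    for idx in np.array_split(np.argsort(p, kind="stable"), groups):
        n_g, observed, expected = idx.size, y[idx].sum(), p[idx].sum()
        pbar = expected / n_g
        hl += (observed - expected) ** 2 / (n_g * pbar * (1 - pbar))
    cox_snell = 1 - np.exp(2 * (ll0 - ll) / n)
    nagelkerke = cox_snell / (1 - np.exp(2 * ll0 / n))               # rescaled to max 1
    return {"deviance": -2 * ll, "pearson_chi2": pearson,
            "hosmer_lemeshow": hl, "hl_df": groups - 2, "nagelkerke_r2": nagelkerke}

# Toy check with hypothetical fitted probabilities for four subjects.
print(fit_criteria([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9], groups=2))
```

For ungrouped binary data, the deviance returned here equals −2 log L, the quantity reported as "−2 Log L" in Table 3, and the Nagelkerke R² rescales the Cox-Snell R² so that its maximum is 1.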

3. Sensitivity Analysis to Select the Most Influential Risk Factors

A variable selection procedure faces two key problems: (i) how to select an appropriate number of risk factors from the full set of risk factors, and (ii) how to improve the performance of the final model on the given data. Our proposed method addresses these questions by applying GSA to select the influential risk factors in the logistic regression model.

3.1. General Concept of GSA

3.2. GSA in a Logistic Regression Model

(1) The first step is to identify the probability distribution f(x) of each covariate in the model. Sensitivity analysis usually starts from probability distribution functions (pdfs) provided by experts, which makes use of the best available information on the statistical properties of the input factors. One way to obtain the pdfs is to visualize the observed data in a histogram and check whether its shape is compatible with that of a known distribution, as illustrated in Figure 2.

A visual approach is not always easy, accurate, or valid, especially when the sample size is small, so a more formal procedure for deciding which distribution is “best” is preferable. A number of significance tests are available for this purpose, such as the Kolmogorov-Smirnov and chi-square goodness-of-fit tests. For more details, see [21].
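As one hedged illustration of such a formal check, the sketch below fits several candidate distributions to a covariate with `scipy.stats` and ranks them by the Kolmogorov-Smirnov statistic D. The candidate list and the simulated ages are assumptions made for the example. Because the parameters are estimated from the same data, the reported KS p-values are only approximate (they tend to be too large), so the ranking by D is the more meaningful output.

```python
import numpy as np
from scipy import stats

def rank_candidate_distributions(data, candidates=("norm", "expon", "uniform")):
    """Fit each candidate by maximum likelihood and compute the KS statistic
    of the data against the fitted distribution.

    Returns (name, D, p-value) tuples sorted from best (smallest D) to worst.
    """
    results = []
    for name in candidates:
        params = getattr(stats, name).fit(data)           # ML estimates of loc/scale
        res = stats.kstest(data, name, args=params)
        results.append((name, res.statistic, res.pvalue))
    return sorted(results, key=lambda r: r[1])

# Hypothetical covariate: 500 ages simulated from a normal distribution.
rng = np.random.default_rng(1)
ages = rng.normal(loc=50.0, scale=10.0, size=500)
for name, d, p in rank_candidate_distributions(ages):
    print(f"{name:8s} D = {d:.3f}  p = {p:.3f}")
```

The selected distribution, with its fitted parameters, is then what the Monte Carlo step samples from.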

(2) In the second step, the logistic regression model in (1) and the covariate information obtained in step one are used to build a Monte Carlo simulation. The simulation generates the sample used in the variance decomposition and is used to estimate the unconditional variance of the response probability and the conditional variances for the covariates, as in (23) to (26).
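Equations (23) to (26) are not reproduced here, so the following is a generic sketch rather than the authors' procedure (the paper computed its indices with SimLab). It estimates the first-order index S_i with the Saltelli (2010) estimator and the total index ST_i with the Jansen estimator, taking the response probability π(x) of a logistic model as the output. The coefficients, the standard-normal input distributions, and the helper names are hypothetical, and the covariates are assumed to be independent.

```python
import numpy as np

def logistic_prob(X, beta0, beta):
    """Response probability pi(x) = 1 / (1 + exp(-(beta0 + x'beta))) for each row of X."""
    return 1.0 / (1.0 + np.exp(-(beta0 + X @ beta)))

def sobol_indices(model, sample_inputs, n, seed=0):
    """Monte Carlo estimates of first-order (S_i) and total (ST_i) Sobol indices.

    S_i uses the Saltelli (2010) estimator and ST_i the Jansen estimator.
    The covariates are assumed to be mutually independent.
    """
    rng = np.random.default_rng(seed)
    A = sample_inputs(n, rng)               # two independent base samples
    B = sample_inputs(n, rng)
    fA, fB = model(A), model(B)
    var = np.var(np.concatenate([fA, fB]))  # unconditional variance of pi
    k = A.shape[1]
    S, ST = np.zeros(k), np.zeros(k)
    for i in range(k):
        ABi = A.copy()
        ABi[:, i] = B[:, i]                 # resample covariate i only
        fABi = model(ABi)
        S[i] = np.mean(fB * (fABi - fA)) / var
        ST[i] = 0.5 * np.mean((fA - fABi) ** 2) / var
    return S, ST

# Hypothetical model: three standard-normal covariates, the third with no effect.
BETA0, BETA = -1.0, np.array([2.0, 1.0, 0.0])

def model(X):
    return logistic_prob(X, BETA0, BETA)

def sampler(n, rng):
    return rng.normal(size=(n, 3))

S, ST = sobol_indices(model, sampler, n=20_000)
print("S_i :", np.round(S, 3))
print("ST_i:", np.round(ST, 3))
print("ranking by ST_i:", np.argsort(-ST))  # expected order: covariate 0, 1, 2
```

A covariate whose S_i and ST_i are both close to 0 (here the third one) is a candidate for removal, and ST_i − S_i measures how much of its influence comes through interactions.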

4. Numerical Comparisons

The purpose of this section is to compare the performance of the proposed method with that of existing methods. We also use a real-data example to illustrate our SA approach as a variable selection method. In the first example, we use the dataset and the penalized likelihood results for best subset (AIC), best subset (BIC), SCAD, and LASSO computed by [7] to compare the performance of the proposed method with these methods.

4.1. The First Example

In this example, Fan and Li [7] applied their penalized likelihood methodology to burn data collected by the General Hospital Burn Center at the University of Southern California. The dataset consists of 981 observations. The binary response variable Y is 1 for victims who survived their burns and 0 otherwise. The risk factors considered are X1 = age, X2 = sex, X3 = log(burn area + 1), and the binary variable X4 = oxygen (0 normal, 1 abnormal). The quadratic terms of X1 and X3 and all interaction terms were included, an intercept term was added, and the logistic regression model was fitted. Best subset variable selection with the AIC and the BIC was applied to this dataset. The tuning parameter λ was chosen by generalized cross-validation: it is 0.6932 and 0.0015 for the penalized likelihood estimates with the SCAD and LASSO, respectively. The constant a in the SCAD was taken as 3.7. With the selected λ, the penalized likelihood estimator was obtained at the sixth, 28th, and fifth step iterations for the penalized likelihood with the SCAD and LASSO. Table 1 contains the estimated coefficients and standard errors for the transformed data based on the penalized likelihood estimators, together with the sensitivity indices obtained with SimLab software, so that the performance of GSA as a variable selection method can be compared with that of the other methods. The first five method columns were calculated by [7].
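The tuning parameters quoted above enter through the penalty functions. The sketch below writes out the LASSO (L1) penalty and the SCAD penalty in its standard form, using a = 3.7 and the SCAD value λ = 0.6932 from the text; the function names are our own. It shows why the two methods treat large coefficients differently: the L1 penalty keeps growing with |θ|, whereas the SCAD penalty becomes constant beyond aλ.

```python
def lasso_penalty(theta: float, lam: float) -> float:
    """L1 (LASSO) penalty: grows linearly in |theta|, so large coefficients keep being shrunk."""
    return lam * abs(theta)

def scad_penalty(theta: float, lam: float, a: float = 3.7) -> float:
    """SCAD penalty: L1-like for |theta| <= lam, a quadratic spline on (lam, a*lam],
    and constant beyond a*lam, so large coefficients are not shrunk further."""
    t = abs(theta)
    if t <= lam:
        return lam * t
    if t <= a * lam:
        return -(t * t - 2 * a * lam * t + lam * lam) / (2 * (a - 1))
    return (a + 1) * lam * lam / 2

lam = 0.6932  # SCAD tuning parameter reported in the text
for theta in (0.5, 1.0, 3.0, 10.0):
    print(theta, round(lasso_penalty(theta, lam), 3), round(scad_penalty(theta, lam), 3))
```

The three SCAD pieces join continuously at λ and aλ; the flat tail is what lets SCAD keep large coefficients such as X1 and X1X3 nearly unshrunk, in contrast to the LASSO column of Table 1.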

| Factor | MLE | Best subset (AIC) | Best subset (BIC) | SCAD | LASSO | SA Si (STi) |
|---|---|---|---|---|---|---|
| Intercept | 5.51 (0.75) | 4.81 (0.45) | 6.12 (0.57) | 6.09 (0.29) | 3.70 (0.25) | Constant |
| X1 | −8.8 (2.97) | −6.49 (1.75) | −12.15 (1.81) | −12.2 (0.08) | 0 (—) | 0.487 (0.536) |
| X2 | 2.30 (2.00) | 0 (—) | 0 (—) | 0 (—) | 0 (—) | 0.014 (0.125) |
| X3 | −2.77 (3.43) | 0 (—) | −6.93 (0.79) | −7.0 (0.21) | 0 (—) | 0.143 (0.218) |
| X4 | −1.74 (1.41) | 0.30 (0.11) | −0.29 (0.11) | 0 (—) | −0.28 (0.09) | 0.003 (0.034) |
| X1² | −0.75 (0.61) | −1.04 (0.54) | 0 (—) | 0 (—) | −1.71 (0.24) | 0.013 (0.057) |
| X3² | −2.7 (2.45) | −4.55 (0.55) | 0 (—) | 0 (—) | −2.67 (0.22) | 0.032 (0.091) |
| X1X2 | 0.03 (0.34) | 0 (—) | 0 (—) | 0 (—) | 0 (—) | 0.014 (0.237) |
| X1X3 | 7.46 (2.34) | 5.69 (1.29) | 9.83 (1.63) | 9.84 (0.14) | 0.36 (0.22) | 0.362 (0.502) |
| X1X4 | 0.24 (0.32) | 0 (—) | 0 (—) | 0 (—) | 0 (—) | 0.001 (0.042) |
| X2X3 | −2.15 (1.61) | 0 (—) | 0 (—) | 0 (—) | −0.10 (0.10) | 0.016 (0.075) |
| X2X4 | −0.12 (0.16) | 0 (—) | 0 (—) | 0 (—) | 0 (—) | 0.003 (0.047) |
| X3X4 | 1.23 (1.21) | 0 (—) | 0 (—) | 0 (—) | 0 (—) | 0.019 (0.307) |

In addition to the GSA indices, Table 1 contains the results of two traditional variable selection methods (best subset with the AIC and the BIC) and two newer methods (LASSO and SCAD). The best subset procedure that minimizes the BIC score chooses five of the 13 terms (including the intercept), whereas the SCAD chooses four; the difference between them is that the best subset (BIC) keeps X4. Neither the SCAD nor the best subset (BIC) includes X1² and X3² in the selected subset, but both the LASSO and the best subset (AIC) include them. The LASSO chooses the quadratic terms of X1 and X3 rather than their linear terms. It also selects the interaction term X2X3, which may not be statistically significant, and it noticeably shrinks the large coefficients. The last column of Table 1 shows that GSA selects X1, X3, and X1X3 in addition to the intercept, which matches the SCAD selection and differs from the other methods. According to the last column of Table 1, the risk factors can be ranked by their sensitivity indices Si and STi. Age (X1) is the first and most influential risk factor, with a first-order index of 0.487 (about 48.7% of the output variance); the second is the interaction between X1 and X3, with an index of 0.362; and the third is log(burn area + 1) (X3), with an index of 0.143. Consequently, the proposed GSA variable selection method resembles the SCAD in choosing the same risk factors.
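The selection step itself reduces to sorting the factors by their indices and applying a cutoff. In the sketch below, the (Si, STi) pairs are copied from the last column of Table 1, and the labels X1^2 and X3^2 stand for the quadratic terms. The cutoff Si ≥ 0.1 is a hypothetical choice of ours, since no threshold is stated in the text; with it, the retained set is the one reported above.

```python
# (Si, STi) pairs from the last column of Table 1.
sa_indices = {
    "X1": (0.487, 0.536), "X2": (0.014, 0.125), "X3": (0.143, 0.218),
    "X4": (0.003, 0.034), "X1^2": (0.013, 0.057), "X3^2": (0.032, 0.091),
    "X1X2": (0.014, 0.237), "X1X3": (0.362, 0.502), "X1X4": (0.001, 0.042),
    "X2X3": (0.016, 0.075), "X2X4": (0.003, 0.047), "X3X4": (0.019, 0.307),
}

def select_by_first_order(indices, threshold=0.1):
    """Rank factors by Si (largest first) and keep those with Si >= threshold.

    The threshold value is a hypothetical choice, not taken from the paper.
    """
    ranked = sorted(indices.items(), key=lambda kv: kv[1][0], reverse=True)
    return [name for name, (si, _sti) in ranked if si >= threshold]

print(select_by_first_order(sa_indices))  # ['X1', 'X1X3', 'X3']
```

Screening on Si follows the ranking described in the text; the order of the retained factors is also their order of importance.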

4.2. The Second Example

To compare SA with a traditional variable selection method (backward elimination), a new dataset was derived from the original dataset prepared in [22]. That study was undertaken to determine the prevalence of CHD risk factors in a population-based sample of 403 rural African-Americans in Virginia. The community-based screening evaluations covered exercise and smoking habits, blood pressure, height, weight, total and high-density lipoprotein (HDL) cholesterol, glycosylated hemoglobin, and other factors. The results were presented as prevalence percentages for most factors, such as diabetes (13.6% of men, 15.6% of women), hypertension (30.9% of men, 43.1% of women), and obesity (38.7% of men, 64.7% of women), without building any model of the relationship between CHD and its risk factors. For more details, see [8]. The new dataset was generated from the original one as follows, in order to calculate the SA indices, extract the important CHD risk factors among these variables, and then fit a logistic regression model to test the performance of the proposed method.

(1) CHD (Y): the 10-year CHD risk percentage is computed from the Framingham Point Scores, and Y is coded 1 if this risk is ≥20% and 0 otherwise [23].

(2) Diabetes (Diab, X1): according to the criteria published by the American College of Endocrinology (ACE) and the American Association of Clinical Endocrinologists (AACE) [24], a participant is coded 1 (diabetes) if stabilized glucose is >140 mg/dL, glycosylated hemoglobin is >7%, or both, and 0 (no diabetes) otherwise.

(3) Total cholesterol (Chol, X2): a participant with total cholesterol >200 mg/dL is coded 1, and 0 otherwise [25].

(4) High-density lipoprotein (HDL, X3): a participant with HDL <40 mg/dL is coded 1, and 0 otherwise [25].

(5) Age (X4): standardized values (X − μ)/σ are used.

(6) Gender (Gen, X5): coded 1 for male and 2 for female.

(7) Body mass index (BMI, X6): computed as BMI = weight/(height)² in kg/m²; a participant is coded 1 if BMI is >30 and 0 otherwise [25].

(8) Blood pressure (hypertension, Hypt, X7): a participant is coded 1 if systolic blood pressure is >140 mmHg, diastolic blood pressure is >90 mmHg, or both, and 0 otherwise [25].

(9) Waist/hip ratio (W/H, X8): in addition to BMI, this is a second factor in determining obesity.
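The recoding rules listed above are simple thresholds, so they can be written down directly. The sketch below encodes one participant; the field names of the raw record and the example values are hypothetical, the Framingham risk percentage is assumed to have been computed beforehand, and the age mean and standard deviation would in practice be the sample values.

```python
def encode_participant(raw, age_mean, age_sd):
    """Recode one participant's raw measurements into the risk factors X1..X8.

    `raw` is a dict with hypothetical field names; units follow the thresholds
    in the text (mg/dL, %, mmHg), and BMI is computed from kg and m.
    """
    bmi = raw["weight_kg"] / raw["height_m"] ** 2
    return {
        "Diab": int(raw["stab_glucose"] > 140 or raw["glyhb"] > 7),  # X1
        "Chol": int(raw["chol"] > 200),                              # X2
        "HDL": int(raw["hdl"] < 40),                                 # X3
        "Age": (raw["age"] - age_mean) / age_sd,                     # X4, standardized
        "Gen": 1 if raw["gender"] == "male" else 2,                  # X5
        "BMI": int(bmi > 30),                                        # X6
        "Hypt": int(raw["sbp"] > 140 or raw["dbp"] > 90),            # X7
        "W/H": raw["waist"] / raw["hip"],                            # X8
    }

def chd_response(framingham_risk_pct):
    """Y = 1 if the 10-year Framingham CHD risk is at least 20%, else 0."""
    return int(framingham_risk_pct >= 20)

# Hypothetical participant record.
example = {"stab_glucose": 120, "glyhb": 7.5, "chol": 210, "hdl": 45, "age": 55,
           "gender": "female", "weight_kg": 95.0, "height_m": 1.70,
           "sbp": 130, "dbp": 95, "waist": 40.0, "hip": 44.0}
print(encode_participant(example, age_mean=45.0, age_sd=10.0))
print(chd_response(22.0))
```

Note that the BMI rule divides weight by the square of height; with these values BMI is about 32.9, so the participant is coded 1 for X6.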

4.2.1. The Important Risk Factors

Implementation of the GSA method for this dataset gave the results in Table 2, which shows the ranking of the risk factors in order of importance and the contribution of each one to explaining the total variance of the CHD response variable.

| Factor | Si | STi | ICi | Si (%) | Rank |
|---|---|---|---|---|---|
| Diab | 0.2018 | 0.22657 | 0.008 | 12 | 6 |
| Chol | 0.2434 | 0.258 | 0.015 | 14 | 4 |
| HDL | 0.2243 | 0.25424 | 0.03 | 13 | 5 |
| Age | 0.2636 | 0.28507 | 0.022 | 15 | 3 |
| Gen | 0.0256 | 0.03844 | 0.013 | 1 | 7 |
| BMI | 0.5161 | 0.56173 | 0.046 | 30 | 1 |
| Hypt | 0.003 | 0.04207 | 0.039 | 0 | 8 |
| W/H | 0.2706 | 0.29714 | 0.027 | 15 | 2 |
| Sum | 1.7484 | 1.96326 | 0.2 | | |

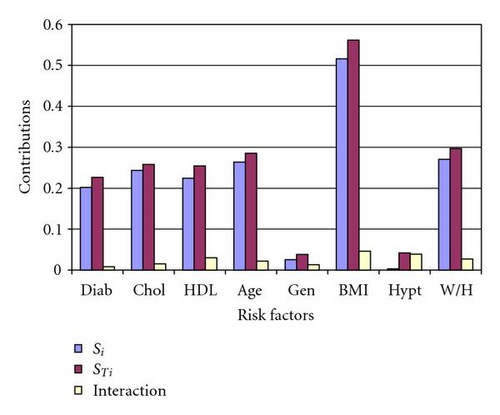

According to the first-order sensitivity indices Si, BMI is the first and most influential factor, and the waist/hip ratio ranks second; both are components of the obesity factor. Age is the third most influential factor, and the remaining factors follow as listed in Table 2. The total sensitivity index of a risk factor measures its overall contribution to the output variance, taking into account all possible interactions with the other risk factors. The difference between the total and first-order sensitivity indices of a risk factor measures the contribution to the output variance of the interactions involving that factor; see (12) and (13). The STi column in Table 2 ranks the risk factors in the same order as Si, except that Hypt and Gen swap the last two places, and the small values in the ICi column indicate only weak interactions among these risk factors. Figure 3 compares the first-order indices Si, the total indices STi, and the interactions between the risk factors.
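The Si (%) and Rank columns of Table 2 follow from the Si column by simple arithmetic, which the sketch below reproduces: each Si is divided by the column sum (1.7484), and the factors are sorted by Si. It also prints STi − Si for each factor, the quantity the text identifies as the contribution of interactions, and the combined share of BMI, W/H, Age, and Chol. The values are copied from Table 2; the function name is our own.

```python
# (Si, STi) for each factor, copied from Table 2.
table2 = {
    "Diab": (0.2018, 0.22657), "Chol": (0.2434, 0.258), "HDL": (0.2243, 0.25424),
    "Age": (0.2636, 0.28507), "Gen": (0.0256, 0.03844), "BMI": (0.5161, 0.56173),
    "Hypt": (0.003, 0.04207), "W/H": (0.2706, 0.29714),
}

def summarize(indices):
    """Rank factors by Si and express each Si as a percentage of the sum of all Si."""
    total = sum(si for si, _ in indices.values())
    ranked = sorted(indices, key=lambda f: indices[f][0], reverse=True)
    return {
        f: {"rank": r,
            "si_pct": round(100 * indices[f][0] / total),
            "sti_minus_si": indices[f][1] - indices[f][0]}  # interaction contribution
        for r, f in enumerate(ranked, start=1)
    }

summary = summarize(table2)
for f, row in summary.items():
    print(f"{row['rank']}  {f:5s} {row['si_pct']:3d}%  STi - Si = {row['sti_minus_si']:.3f}")
top4 = ["BMI", "W/H", "Age", "Chol"]
print(sum(summary[f]["si_pct"] for f in top4))  # 74
```

The last line reproduces the "approximately 74%" quoted in the conclusions as the combined share of the four leading factors in the summed first-order indices.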

4.2.2. Implementing the Logistic Regression Model

The efficiency of the proposed variable selection method (GSA) can be assessed by comparing its results, as in (34), with those obtained by fitting the logistic regression model with the backward elimination method (BEM). These results are shown in Tables 3 and 4.

| Step | −2 Log L | Model χ² | df | Sig. | Hosmer-Lemeshow χ² | df | Sig. | Nagelkerke R² |
|---|---|---|---|---|---|---|---|---|
| 6 | 357.813 | 7.268 | 3 | 0.064 | 8.465 | 8 | 0.389 | 0.30 |
| 7 | 359.021 | 6.061 | 2 | 0.048 | 0.055 | 2 | 0.973 | 0.25 |
| 8 | 360.189 | 4.892 | 1 | 0.027 | — | — | — | 0.20 |

| Step | Risk factor | Coefficient (B) | Sig. (P) |
|---|---|---|---|
| Step 6 | CHOL | 0.538 | 0.061 |
| | SAGE | 0.151 | 0.271 |
| | BMI | −0.325 | 0.241 |
| | Constant | −1.711 | 0.000 |
| Step 7 | CHOL | 0.610 | 0.029 |
| | BMI | −0.300 | 0.276 |
| | Constant | −1.758 | 0.000 |
| Step 8 | CHOL | 0.605 | 0.030 |
| | Constant | −1.946 | 0.000 |

Table 3 shows the overall goodness-of-fit criteria for the last three steps of the logistic regression model fitted by the BEM.

Table 4 shows the coefficients and significance levels of the risk factors retained in the last three iterations. These results reflect the sequential elimination of the factors: the BEM needs eight steps to order the risk factors in this way, whereas the proposed method ranks them by importance without any such iterations.
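For comparison, backward elimination can be sketched in a few lines. The version below is an illustration under stated assumptions rather than the software used for Tables 3 and 4: it fits each model by Newton-Raphson (iteratively reweighted least squares) and, at each step, removes the covariate with the largest Wald p-value above a hypothetical removal threshold p_out = 0.10. It does not handle complete separation. The simulated data are hypothetical, and only the first covariate affects the response.

```python
import math
import numpy as np

def fit_logit(X, y, max_iter=50, tol=1e-10):
    """Maximum-likelihood logistic regression by Newton-Raphson (IRLS).

    X must already include an intercept column. Returns (beta, se, -2 log L).
    """
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        info = X.T @ (X * (p * (1.0 - p))[:, None])   # Fisher information
        step = np.linalg.solve(info, X.T @ (y - p))    # Newton step
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    info = X.T @ (X * (p * (1.0 - p))[:, None])
    se = np.sqrt(np.diag(np.linalg.inv(info)))
    p = np.clip(p, 1e-12, 1.0 - 1e-12)
    minus2ll = -2.0 * np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    return beta, se, minus2ll

def backward_elimination(X, y, names, p_out=0.10):
    """Repeatedly drop the covariate with the largest Wald p-value above p_out."""
    keep = list(range(X.shape[1]))
    while keep:
        design = np.column_stack([np.ones(len(y)), X[:, keep]])
        beta, se, _ = fit_logit(design, y)
        z = beta[1:] / se[1:]                                      # skip the intercept
        pvals = [math.erfc(abs(zi) / math.sqrt(2.0)) for zi in z]  # two-sided p-values
        worst = int(np.argmax(pvals))
        if pvals[worst] <= p_out:
            break
        print(f"removing {names[keep[worst]]} (p = {pvals[worst]:.3f})")
        keep.pop(worst)
    return [names[j] for j in keep]

rng = np.random.default_rng(7)
n = 1000
X = rng.normal(size=(n, 4))
eta = -0.5 + 1.5 * X[:, 0]             # hypothetical: only x1 affects the response
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-eta))).astype(float)
kept = backward_elimination(X, y, ["x1", "x2", "x3", "x4"])
print("retained:", kept)
```

Each pass refits the whole model, which is the iteration cost that the proposed method avoids.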

5. Conclusions

The results in Tables 1 to 4 and (31) to (36) for the two examples confirm that the proposed method is capable of distinguishing between important and unimportant risk factors. The proposed method ranked the risk factors in decreasing order of importance, as shown in Tables 1 and 2. In the example in which we compared the proposed method with commonly used methods, its performance closely resembled that of the SCAD method, and the same risk factors were selected. In the first example, the important risk factors are age, the burn area, and the interaction between them. In the second example, the obesity factors (BMI and W/H) are the most influential risk factors for the incidence of CHD, the second is age, and the third is total cholesterol. Together these factors play the major role, accounting for approximately 74% of the summed first-order sensitivity indices; they are therefore considered the most important risk factors according to their individual percentage contributions, as shown in Table 2. Comparing the fit of the full logistic regression model in (31) with that of the chosen models in (34) and (36) confirms the efficiency of the proposed method in selecting the most important risk factors. Equation (34) represents the best model according to the model evaluation criteria, because it consists of the most influential risk factors. Therefore, medical care plans and interventions should follow this ordering of the factors. To further check these results, a traditional variable selection method (backward elimination) was also applied; it yields different results after eight steps, whereas the proposed method orders the risk factors without iteration and without fitting multiple regression models. Together, these results confirm the value of GSA as a variable selection method.

Acknowledgment

This work was supported by a USM fellowship.