Computer-automated bird detection and counts in high-resolution aerial images: a review

Abstract

Bird surveys conducted using aerial images can be more accurate than those using airborne observers, but can also be more time-consuming if images must be analyzed manually. Recent advances in digital cameras and image-analysis software offer unprecedented potential for computer-automated bird detection and counts in high-resolution aerial images. We review the literature on this subject and provide an overview of the main image-analysis techniques. Birds that contrast sharply with image backgrounds (e.g., bright birds on dark ground) are generally the most amenable to automated detection, in some cases requiring only basic image-analysis software. However, the sophisticated analysis capabilities of modern object-based image analysis software provide ways to detect birds in more challenging situations based on a variety of attributes including color, size, shape, texture, and spatial context. Some techniques developed to detect mammals may also be applicable to birds, although the prevalent use of aerial thermal-infrared images for detecting large mammals is of limited applicability to birds because of the low pixel resolution of thermal cameras and the smaller size of birds. However, the increasingly high resolution of true-color cameras and availability of small unmanned aircraft systems (drones) that can fly at very low altitude now make it feasible to detect even small shorebirds in aerial images. Continued advances in camera and drone technology, in combination with increasingly sophisticated image analysis software, now make it possible for investigators involved in monitoring bird populations to save time and resources by increasing their use of automated bird detection and counts in aerial images. We recommend close collaboration between wildlife-monitoring practitioners and experts in the fields of remote sensing and computer science to help generate relevant, accessible, and readily applicable computer-automated aerial photographic census techniques.

Detección automática computacional de aves y conteos en imágenes aéreas de alta resolución: una revisión

Los conteos de aves realizados utilizando imágenes aéreas pueden ser más precisos que los que utilizan observadores desde el aire, pero pueden consumir más tiempo si las imágenes tienen que ser analizadas manualmente. Avances recientes en cámaras digitales y software de análisis de imágenes ofrecen un potencial sin precedentes para la detección computacional automática de aves y conteos en imágenes aéreas de alta resolución. Revisamos la literatura en este tema y ofrecemos una visión general de las principales técnicas de análisis en imágenes. Las aves que tienen un fuerte contraste con los fondos de las imágenes (e.g., aves brillantes en fondos oscuros) son en general las más sensibles a las detecciones automáticas, en algunos casos solo requieren un software básico de analizador de imágenes. Sin embargo, las sofisticadas capacidades de los software de análisis modernos en imágenes basadas en objetos proveen formas de detectar aves en situaciones más desafiantes basadas en una variedad de atributos incluyendo el color, tamaño, forma, textura y contexto espacial. Algunas técnicas desarrolladas para detectar mamíferos pueden ser aplicables en aves, aunque el uso predominante de imágenes aéreas de infrarrojo térmico para detectar grandes mamíferos tiene aplicabilidad limitada para las aves, debido a la baja resolución en los píxeles de las cámaras térmicas y el tamaño pequeño de las aves. Sin embargo, el incremento en la alta resolución de las cámaras de color y la disponibilidad de pequeños sistemas de aeronaves no tripuladas (drones) que pueden volar a bajas elevaciones, ahora hacen que sea posible detectar incluso pequeñas aves playeras en imágenes aéreas. Los continuos avances en la tecnología de la cámara y aeronaves no tripuladas, en combinación con software de análisis de imágenes cada vez más sofisticados, ahora hacen posible ahorrar tiempo y recursos a los investigadores involucrados en el monitoreo de las poblaciones de aves, mediante el aumento del uso de la detección y conteos de aves automatizado en imágenes aéreas. Recomendamos una estrecha colaboración entre los profesionales de monitoreo de fauna silvestre y expertos en el campo de la teledetección y la informática para ayudar a generar técnicas de censo relevantes, accesibles y de fácil aplicación automatizada computacionalmente utilizando fotografías aéreas.

Introduction

Monitoring bird populations is important for conserving and managing species, as well as for gauging broader ecosystem health using bird population trends as indicators (Kushlan 1993, Diamond and Devlin 2003, Frederick et al. 2009). Populations are often monitored using techniques such as point counts or line transects (Bibby et al. 1992), but other population surveys, particularly for large conspicuous species, involve aerial counts. Aerial surveys are particularly useful for rapid coverage of large areas, counting birds that are difficult to see from the ground, and accessing remote or otherwise challenging habitats such as roadless areas or wetland and marine environments (Kadlec and Drury 1968, Leonard and Fish 1974, Certain and Bretagnolle 2008, Kingsford and Porter 2009). Waterbirds, including waterfowl, seabirds, shorebirds, and wading birds, are the most frequent subjects of aerial surveys (Chabot and Bird 2015), although upland game birds (Rusk et al. 2007, Butler et al. 2008) and raptors (Good et al. 2007, Henny et al. 2008) have also been surveyed from the air.

Aerial counts and estimates of birds are obtained either by trained observers in aircraft or by collection and subsequent analysis of aerial images captured by on-board cameras. An advantage of the former approach is that it can yield faster results, particularly for large survey areas and/or large numbers of birds, whereas the latter approach can require labor-intensive manual review of images and counts of birds (Woodworth et al. 1997, Béchet et al. 2004, Kerbes et al. 2014). Although widely used, live observer estimates may be more variable among observers than image counts, and may also be less accurate, often by underestimating the number of birds in large concentrations (Erwin 1982, Boyd 2000, Frederick et al. 2003, Buckland et al. 2012, Hodgson et al. 2016). Count precision and consistency among observers are important for tracking long-term population trends and detecting fine-scale fluctuations. Additional advantages of aerial-image surveys are that they create a permanent record for future reference or reanalysis, and provide unique opportunities to investigate the spatial ecology of birds, e.g., density patterns (Henriksen et al. 2015) and flight heights (Johnston and Cook 2016). In addition, use of high-resolution aerial cameras allows survey aircraft to fly at higher altitudes than required for live observers to be able to identify birds, consequently reducing aircraft disturbance to the birds (e.g., flushing and diving) and associated sources of count bias (Goodship et al. 2015).

The need for efficient methods for counting birds in images is gaining renewed attention with the increased use of small unmanned aircraft systems (UAS), or drones, for bird monitoring (Chabot and Bird 2012, Sarda-Palomera et al. 2012, Linchant et al. 2015). UAS can provide precise, low-disturbance surveys of birds (Chabot et al. 2015, Ratcliffe et al. 2015, Vas et al. 2015, Hodgson et al. 2016). Unlike manned aircraft, however, UAS are obligate photographic survey platforms that usually collect large numbers of images (Drever et al. 2015, Dulava et al. 2015) due to their low flight altitude and small area footprint of individual images. The time required to manually review and analyze images may then negate any efficiency gained during data collection.

With digital cameras now ubiquitous in aerial surveys (Buckland et al. 2012) and continuously improving in performance and resolution (Bako et al. 2014), image-analysis software becoming ever more sophisticated, and computer processing speeds ever increasing, circumstances seem favorable for a breakthrough in the use of computer-automated bird censuses using aerial images. Efforts to automate bird detection and counts in aerial images using computer software go back nearly three decades (Gilmer et al. 1988, Bajzak and Piatt 1990) and continue to the present (Laliberte and Ripple 2003, Descamps et al. 2011, Groom et al. 2013). Morgan et al. (2010) presented a general overview of aerial photography theory and automated image analysis approaches in the context of ecological research and management, with a focus on landscape and habitat applications. However, few investigators have used these methods with birds, and aerial images taken to survey bird populations continue to be analyzed manually (e.g., Buckland et al. 2012, Kerbes et al. 2014, Goodship et al. 2015).

With the aims of better informing those involved in aerial surveys of birds about available analysis options and stimulating greater use of these methods, we reviewed the literature on automated approaches for counting birds in aerial images. Our objectives were to: (1) summarize available literature about using these methods for bird surveys, (2) provide an overview of the major image-analysis techniques, and (3) make recommendations to encourage development and dissemination of computer-automated aerial photographic bird survey techniques. In addition, we reviewed the literature involving automated detection of mammals in aerial images because the techniques used could potentially also be applied to birds.

Literature Search

We used a systematic approach to find literature on computer-automated bird and mammal detection and counting in high-resolution aerial images collected using manned and unmanned aircraft. Beginning with relevant papers already known to us, we searched their lists of references as well as their citing articles using Google Scholar's “Cited by” function to find additional relevant papers. We repeated these searches with each additional paper collected in this manner until no further relevant papers could be found. We considered English-language scientific journal and conference proceedings papers, scholarly book chapters, and technical reports by government agencies and environmental consulting companies. We also reviewed literature that we found on automated detection of birds in satellite images, although not in an exhaustive manner because our focus was on higher-resolution images acquired by aircraft.

Search Results

We found 35 papers involving computer-automated detection/counting of animals in aerial images collected by manned or unmanned aircraft, with 19 involving birds and 20 involving mammals. Four papers were published prior to 2000 and five from 2000 to 2009, with all focused on birds except one that involved both birds and mammals, and with one paper involving images collected by an unmanned aircraft. The remaining 26 papers have been published since 2010, with a marked increase in the proportion of papers involving mammals (73%). Since 2010, the proportion of papers involving images collected by unmanned aircraft has also increased, representing 42% of the papers involving birds and 54% of those involving mammals.

Of the papers involving birds (Table 1), 13 were in journals, five in conference proceedings, and one in a book, collectively spanning bird-specific publications (3), broader wildlife publications (6), still broader ecology or environmental science publications (3), remote sensing publications (6), and an oceanography publication. All but one paper involved waterbirds as subject species (Table 1), with four involving breeding colonies (penguins, flamingos, and gulls), nine involving inland or nearshore non-breeding birds (various waterfowl and wading birds), and four involving offshore birds (including sea ducks, cormorants, terns, loons, and shearwaters). The only nonwaterbird paper involved Domestic Chickens (Gallus gallus domesticus) imaged from a cherry picker intended to simulate a low-altitude UAS (Christiansen et al. 2014). The emphasis on waterbirds reflects the fact that many of these species are amenable to automated detection in aerial images due to their tendency to: (1) concentrate in relatively small areas (i.e., flocks and colonies) that are more manageable from an image-analysis perspective, (2) occur in habitats devoid of overhead concealment where they are readily visible in aerial images, and (3) appear in color aerial images as clear and consistent spectral contrasts of relatively consistent shapes and/or sizes that can be readily exploited in image analysis.

| References | Subject species and context | Aircraft type (altitude) | Image type (ground resolution) | Image analysis technique(s) | Image analysis software | Automated count error<sup>a</sup> |
|---|---|---|---|---|---|---|
| Gilmer et al. (1988) | Wintering Snow and Ross's geese on land | Manned (900 m) | Film photo (7.3 cm/pixel) | Spectral thresholding | n/a | Sample mean: 6.6%; Sample total: 2.1% |
| Bajzak and Piatt (1990) | Staging Snow Geese in fields | Manned (1400 m) | Film photo (n/a) | Spectral thresholding + size filtering | FORTRAN | Total count: 2.3% |
| Strong et al. (1991) | Wintering Snow Geese on water | Manned (1220 m) | 10-band multispectral (3.05 m/pixel) | Spectral analysis | n/a | Total count: 11.8% |
| Cunningham et al. (1996) | Staging Black Brant, Emperor Geese, and Snow Geese on water | Manned (n/a) | Film photo (n/a) | Spectral thresholding + size/shape/orientation filtering | DUCK HUNT | Sample mean: 4.2% |
| Laliberte and Ripple (2003) | Wintering Snow Geese (SG) on water and Canada Geese (CG) on land | Manned (n/a, 500 m) | Film photo (n/a) | Image smoothing or sharpening + spectral thresholding + size filtering | ImageTool | SG sample mean: 2.8%; SG sample total: 1.6%; CG sample mean: 4.4%; CG sample total: 4.1% |
| Trathan (2004) | Macaroni Penguin breeding colonies | Manned (500 m) | Film photo (5 cm/pixel) | Spectral thresholding + image smoothing + size filtering | Matlab Image Processing Toolbox | Colony 1: 3.3%; Colony 2: 6.0%; Colony 3: 0% |
| Abd-Elrahman et al. (2005) | Unspecified white wading birds foraging in fields and canals | Unmanned (n/a) | Digital video (n/a) | Template matching based on spectral/size properties | Intel Open Source Computer Vision Library | Sample mean: 10.5%; Sample total: 6.3% |
| Milton et al. (2006) | Molting Common Eider flocks at sea | Manned (91–122 m) | Digital photo (2.8–3.8 cm/pixel) | Image segmentation + object classification based on spectral properties | eCognition | Sample photos: 0–15% |
| Groom et al. (2007) | Common Scoters (CS) and Common Eiders (CE) at sea | Manned (600 m) | Digital photo (10 cm/pixel) | Image segmentation + object classification based on spectral/size/shape properties and training samples | eCognition | CS total count: 6.8%; CE total count: 0.9% |
| Descamps et al. (2011) | Greater Flamingo breeding colonies | Manned (~300 m) | Film + digital photo (4–12.5 cm/pixel) | Stochastic ellipse shape fitting to locally bright or dark image elements | FLAMINGO | Sample mean: 3.5%; Sample total: 1.7%; Whole colonies: 0.1–3.7% |
| Groom et al. (2011) | Nonbreeding Lesser Flamingos on water and land | Manned (455 m) | Film photo (8–10 cm/pixel) | Multiple segmentations + object classification based on iterative spectral thresholding and size/adjacency properties | Definiens Developer (eCognition) | Water samples: 0.3–5.7%; Land samples: 1.0–21.8% |
| McNeill et al. (2011) | Adélie Penguin breeding colonies | Manned (762 m) | Digital photo (≤50 cm/pixel) | Colony delineation based on fecal stain spectral properties + penguin classification based on spectral/shape properties | Semiautomated Counting of Penguins | n/a |
| Chabot and Bird (2012) | Staging Snow Geese in fields | Unmanned (183 m) | Digital photo (4.5 cm/pixel) | Spectral thresholding | Photoshop | Flock 1: 0.9%; Flock 2: 0.8% |
| Sirmacek et al. (2012) | Unspecified birds | Manned (n/a) | Unspecified true-color images (n/a) | Multiple segmentations based on spectral/spatial properties | n/a | Sample total: 28.5% |
| Grenzdorffer (2013) | Common Gull breeding colony | Unmanned (50–55 m) | Digital photo (~1.6 cm/pixel) | Supervised pixel-based spectral classification + size/adjacency filtering | ArcGIS | Year 1: 4.8%; Year 2: 2.4% |
| Groom et al. (2013) | Multiple species at sea: scoters, loons, cormorants, terns, shearwaters, and so on | Manned (475 m) | Digital photo (3–4 cm/pixel) | Automated cropping + multiple segmentations + object classification based on spectral/texture/context properties | eCognition | Sample mean: 3.5%; Sample total: 6.8% |
| Christiansen et al. (2014) | Domestic Chickens in fields | Unmanned (3–35 m) | Thermal video (n/a) | Template matching based on contour temperature profile and temporal video analysis | n/a | 3–10 m: 0.1–6.0%; 10–20 m: 25.1–29.0% |
| Liu et al. (2015) | Wintering Black-faced Spoonbills on water and land | Unmanned (50–300 m) | Digital photo (1.3–9.5 cm/pixel) | Unsupervised spectral classification + spectral/size/shape filtering | ENVI | 50 m photo: 0%; 200 m photo: 0.7%; 300 m photo: 0.7% |
| Maussang et al. (2015) | Unspecified seabirds | Manned (305 m) | Digital photo (~3 cm/pixel) | Image segmentation + object classification based on training data | n/a | n/a |

<sup>a</sup> Automated count errors are expressed as the percent difference in relation to manual counts of the aerial images. Because the manner of expressing quantitative results differed among references, we presented common metrics to facilitate comparisons, which, in some cases, required calculating these metrics based on data provided in the references. Sample mean = average count error in multiple image samples used for validation. Sample total = overall error of total count summed from all samples.
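The two error metrics defined in the table footnote can be illustrated with a short calculation. This is a minimal sketch, assuming paired automated and manual counts for each validation image; the function names and the example counts are hypothetical and are not data from any of the cited studies. Taking the absolute value of each difference is also an assumption, because the references did not all report errors in the same way.

```python
def percent_error(automated, manual):
    """Percent difference of an automated count relative to the manual count."""
    return abs(automated - manual) / manual * 100.0


def sample_mean_error(automated_counts, manual_counts):
    """Average of the per-sample percent errors."""
    errors = [percent_error(a, m) for a, m in zip(automated_counts, manual_counts)]
    return sum(errors) / len(errors)


def sample_total_error(automated_counts, manual_counts):
    """Percent error of the total count summed over all samples."""
    return percent_error(sum(automated_counts), sum(manual_counts))


# Hypothetical counts for three validation images.
automated = [95, 210, 52]
manual = [100, 200, 50]
mean_err = sample_mean_error(automated, manual)
total_err = sample_total_error(automated, manual)
```

In this made-up example the sample total error is smaller than the sample mean error because over-counts and under-counts in individual images partly cancel when counts are summed.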

Most studies of birds involved the use of still images with varying resolution (Table 1), but a multispectral scanner (Strong et al. 1991), camcorder (Abd-Elrahman et al. 2005), and thermal-infrared camera (Christiansen et al. 2014) were each used in one study. The transition from traditional film cameras to digital cameras began in 2005, coinciding with the advent of professional-grade digital aerial cameras and consumer-grade digital cameras on UAS (Table 1). With the exception of Strong et al. (1991) who used a water-goose spectral “mixture model” to estimate the number of Snow Geese (Chen caerulescens) in 3-m-diameter pixels generated by their multispectral scanner, investigators in all other studies used images with a Ground Sampling Distance (GSD) ≤ 50 cm, and most GSDs were 10 cm or less (Table 1).

Of 20 papers involving mammals, only four were in wildlife, ecology, or environmental science publications, with the rest in remote sensing, computer science, robotics, oceanography, and multidisciplinary publications. Eleven papers focused on terrestrial species, and eight on marine species. Six papers involving mammals featured the use of thermal-infrared cameras.

Overview of Image-Analysis Techniques

Automated techniques for detecting and counting birds

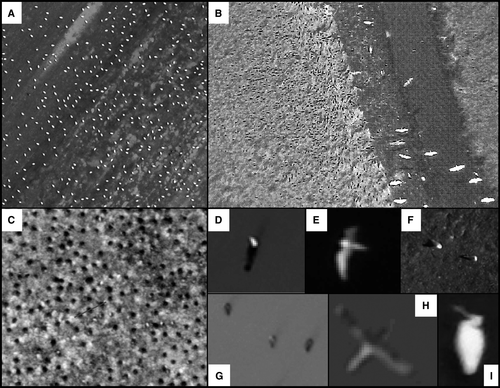

Examples of aerial images collected using manned and unmanned aircraft containing birds detected using computer-automated approaches are presented in Figure 1. The most straightforward way to analyze digital images is by the spectral values of their individual pixels. For automated bird detection and counting, this works best if the birds contrast strongly with the image background. If so, they can be readily isolated in the image using spectral thresholding, i.e., creating a binary image of pixels above and below a specified spectral threshold. Image-analysis software can then provide an instant tally of discrete segments (single isolated pixels or clusters of pixels) above or below the threshold. The first demonstration of this technique was with images of white Snow and Ross's (Chen rossii) geese in their southern wintering grounds (Gilmer et al. 1988, Table 1), although it can also be applied to dark birds on pale backgrounds. A variation of thresholding, sometimes called level slicing, involves setting an interval of spectral values within which pixels are isolated that can be manipulated in software using a sliding bar that allows users to set minimum and maximum values (Cunningham et al. 1996, Laliberte and Ripple 2003, Table 1). Thresholding can be performed on a grayscale version of an image, on any of the bands of a color image (e.g., red, blue, or green for standard true-color images), or on the combined color bands (based on hue, saturation, and brightness). Bird counts using spectral thresholding alone can be remarkably accurate in situations with high bird contrast where few to no other image elements share the same spectral range as the birds, and the birds are spatially separated (Chabot and Bird 2012) (Table 1, Fig. 1A). 
Standard image processing software such as Photoshop (Adobe Systems, San Jose, CA) or PaintShop Pro (Corel Corporation, Ottawa, Canada) is sufficient for this type of analysis, although more specialized software may offer greater flexibility (see below).
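Spectral thresholding followed by a tally of discrete segments can be sketched in a few lines. The example below is an illustrative sketch only, not the procedure of any cited study: it assumes an 8-bit grayscale image stored as nested lists, treats diagonal neighbors as connected, and uses hypothetical function names and pixel values.

```python
def threshold(image, lo, hi=255):
    """Binary mask: True where lo <= pixel <= hi (level slicing)."""
    return [[lo <= v <= hi for v in row] for row in image]


def count_segments(mask):
    """Count discrete segments of True pixels (8-connected) by flood fill."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                count += 1  # found a new, unvisited segment
                stack = [(r, c)]
                seen[r][c] = True
                while stack:
                    y, x = stack.pop()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = y + dy, x + dx
                            if (0 <= ny < rows and 0 <= nx < cols
                                    and mask[ny][nx] and not seen[ny][nx]):
                                seen[ny][nx] = True
                                stack.append((ny, nx))
    return count


# Hypothetical image: three bright "birds" on dark ground.
image = [
    [20, 22, 240, 245, 21, 19],
    [23, 21, 238, 20, 22, 250],
    [19, 20, 22, 21, 20, 24],
    [230, 18, 21, 23, 19, 22],
]
bird_count = count_segments(threshold(image, lo=200))
```

For dark birds on a pale background, the same functions apply with a low interval, e.g. `threshold(image, lo=0, hi=50)`.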

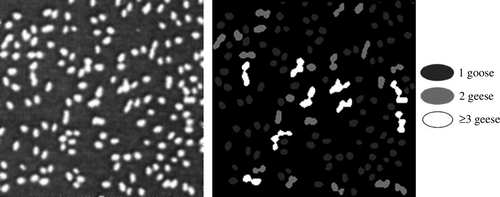

A common challenge arises, however, when other elements are in the same spectral range as birds. In the case of bright birds, for example, these might be pale rocks on the ground, or froth, wave crests, or glint on water. Short of manually cropping these elements out of the images, which may in some cases be accomplished reasonably fast if concentrations of birds can readily be carved out with a polygonal selection tool, a notion of “objects” beyond clusters of individual pixels must be introduced into the analysis to reduce errors. The most basic object-oriented attribute to characterize a cluster of pixels is its size in number of pixels. Bajzak and Piatt (1990) were the first to supplement spectral level slicing with a size-range filtering operation to better isolate clusters of bright pixels representing Snow Geese staging in farm fields (Table 1). As with spectral thresholding operations, automated size filtering and sorting subsequently became standard tools in basic image-analysis software applications used to count birds (Cunningham et al. 1996, Laliberte and Ripple 2003), including the ability to appropriately tally large pixel clusters composed of multiple birds bunched together (Fig. 2). In some cases, applying a smoothing (low-pass) or sharpening (high-pass) filter to the images prior to size filtering may help accentuate, or normalize, the size and/or coherence of pixel clusters representing birds that either contrast more weakly with the background or display internal spectral variation (Laliberte and Ripple 2003, Trathan 2004, Table 1).
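Size filtering and the tallying of bunched birds can be sketched as follows. This is a simplified illustration under stated assumptions: segment sizes (in pixels) are taken as already measured, a large cluster is assumed to contain a number of birds proportional to its area, and all parameter names and values are hypothetical rather than taken from the cited software.

```python
def tally_birds(segment_sizes, min_size, max_single, max_cluster, typical_size):
    """Estimate a bird count from pixel-cluster sizes.

    Clusters below min_size (noise) or above max_cluster (e.g., large pale
    rocks) are discarded. Clusters up to max_single pixels count as one bird.
    Larger clusters are assumed to be several birds bunched together and are
    divided by the typical size of a single bird.
    """
    total = 0
    for size in segment_sizes:
        if size < min_size or size > max_cluster:
            continue
        if size <= max_single:
            total += 1
        else:
            total += round(size / typical_size)
    return total


# Hypothetical cluster sizes in pixels.
sizes = [2, 14, 16, 15, 47, 1, 30, 400]
estimate = tally_birds(sizes, min_size=8, max_single=20,
                       max_cluster=150, typical_size=15)
```

Here the clusters of 47 and 30 pixels are tallied as three and two birds, respectively, and the 400-pixel cluster is rejected as too large to be a group of birds.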

Other basic object attributes can help to identify birds, including the shape of pixel clusters, particularly their roundness/compactness, which is a function of the ratio of perimeter to area. For example, filtering pixel clusters according to roundness has been used to isolate waterfowl on water surfaces (Cunningham et al. 1996) and Adélie Penguins (Pygoscelis adeliae) in breeding colonies (McNeill et al. 2011) from more elongate image elements of similar color and size (Table 1). For basic spectral thresholding, object size and shape filtering, automatic object tallying, and other common image processing operations such as smoothing and sharpening, one current software solution is ImageJ (National Institutes of Health, Bethesda, MD; https://imagej.nih.gov/ij/). This multiplatform freeware application was originally developed for medical purposes (e.g., microscope and radiological images), but has spawned a large community of users spanning a variety of fields, as well as numerous third-party plugins for expanded analysis options.
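A roundness filter can be illustrated with one common compactness measure, the isoperimetric quotient 4πA/P². This particular formula is an assumption made for illustration; the cited studies may have used other perimeter-to-area measures. The object list and threshold below are hypothetical.

```python
import math


def roundness(area, perimeter):
    """Isoperimetric quotient 4*pi*A / P**2: 1.0 for a circle, lower if elongate."""
    return 4.0 * math.pi * area / perimeter ** 2


def filter_round(objects, min_roundness):
    """Keep objects whose roundness is at least min_roundness."""
    return [o for o in objects
            if roundness(o["area"], o["perimeter"]) >= min_roundness]


# Hypothetical objects: a circle of radius 3, a 4 x 4 square, a 16 x 1 strip.
objects = [
    {"name": "circle", "area": math.pi * 9, "perimeter": 2 * math.pi * 3},
    {"name": "square", "area": 16, "perimeter": 16},
    {"name": "strip", "area": 16, "perimeter": 34},
]
kept = [o["name"] for o in filter_round(objects, min_roundness=0.7)]
```

The square and the strip have the same area, so a size filter alone could not separate them; the roundness threshold removes only the elongate strip.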

Filtering and sorting of pixel clusters based on object-oriented characteristics requires images of sufficiently fine resolution, with each bird composed of multiple pixels, to be able to distinguish these characteristics. This is why automated analyses have typically been carried out with very high resolution images of ~10 cm/pixel or finer, depending on the size of the birds being analyzed (Table 1). It is also generally desirable for the angle of aerial images to be as vertical as possible so that subject size and shape remain consistent. The continuously increasing resolution of digital cameras and the ability to perform very low altitude surveys using small UAS are enabling detection of ever-smaller species, such as Dunlins (Calidris alpina) (Drever et al. 2015).
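The link between flight altitude, camera properties, and the number of pixels per bird can be made concrete with the standard relation for vertical images, GSD = altitude × pixel pitch / focal length. The sketch below is a worked illustration under that assumption; the camera values and bird length are hypothetical and do not describe any camera used in the cited studies.

```python
def ground_sampling_distance_cm(altitude_m, focal_length_mm, pixel_pitch_um):
    """GSD (cm/pixel) of a vertical image: altitude * pixel pitch / focal length."""
    pixel_pitch_mm = pixel_pitch_um / 1000.0
    return altitude_m * 100.0 * pixel_pitch_mm / focal_length_mm


def pixels_across(subject_length_cm, gsd_cm):
    """Approximate number of pixels spanning a subject of the given length."""
    return subject_length_cm / gsd_cm


# Hypothetical camera: 50 mm lens, 5 micrometre pixels, flown at 100 m.
gsd = ground_sampling_distance_cm(100, 50, 5)
span = pixels_across(20, gsd)  # a 20 cm long bird
```

With these hypothetical values the GSD is about 1 cm/pixel, so a 20-cm bird spans roughly 20 pixels; doubling the altitude would halve that span.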

A variety of alternative computing approaches have also been developed for analyzing image spectral and object-oriented characteristics to automatically detect birds. Abd-Elrahman et al. (2005) developed a template-matching approach (Table 1) where customized algorithms were used to calculate a correlation coefficient (i.e., the degree of similarity based on overall color and size) between image elements and sample images of white wading birds (Fig. 1B), classifying all elements above a certain correlation threshold as birds. Grenzdorffer (2013) employed a traditional supervised pixel-based image classification routine (Table 1) in ArcGIS (ESRI, Redlands, CA), commonly used for land use/cover classification in remotely sensed images, to automatically detect and count Common Gulls (Larus canus) in a breeding colony in images acquired with a UAS (Fig. 1F). “Spectral signatures” of seven image classes including “bird” were first established by manually tracing representative “training samples” in the images, then all image pixels were automatically assigned to the class to which they were spectrally most similar. This approach typically involves some spectral overlap among the signatures of different classes and tends to produce results that are not as clear-cut as straightforward spectral thresholding, especially in very high resolution images (in this case, ~1.6 cm/pixel) characterized by a high degree of fine-scale spectral heterogeneity. Therefore, a series of post-classification operations was needed to refine results, including filtering out bird-classified objects that were too small or large to be part of gulls, then using a distance rule to merge close-together bird objects representing single birds that were split into multiple objects, and again filtering out remaining objects that were too small to be gulls.
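The idea of assigning each pixel to the spectrally most similar class can be sketched with a minimum-distance-to-mean rule. This rule is swapped in for simplicity and is an assumption: the text does not state which classifier was used in ArcGIS, and the class names, training pixels, and function names below are hypothetical.

```python
def mean_signature(samples):
    """Mean (R, G, B) of a list of training pixels."""
    n = len(samples)
    return tuple(sum(p[i] for p in samples) / n for i in range(3))


def classify_pixel(pixel, signatures):
    """Assign the pixel to the class with the nearest mean signature."""
    def sq_dist(sig):
        return sum((a - b) ** 2 for a, b in zip(pixel, sig))
    return min(signatures, key=lambda name: sq_dist(signatures[name]))


# Hypothetical training samples for three image classes.
training = {
    "bird": [(240, 240, 235), (250, 248, 245)],
    "grass": [(60, 120, 50), (70, 130, 55)],
    "sand": [(200, 180, 140), (210, 190, 150)],
}
signatures = {name: mean_signature(px) for name, px in training.items()}
pixels = [(245, 243, 240), (65, 118, 60), (215, 200, 160)]
labels = [classify_pixel(p, signatures) for p in pixels]
```

Because pale sand and white birds are relatively close in color space, a real image would likely yield some misclassified pixels, which is why post-classification size and distance rules of the kind described above are needed.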

Descamps et al. (2011) developed an entirely different “unsupervised” method to automatically count aggregations of birds based on their shape and spectral discreteness, using dense breeding colonies of Greater Flamingos (Phoenicopterus roseus) as test subjects (Table 1). Based on the underlying assumption that birds are ellipse-shaped in the images, they developed an algorithm that attempts to “fit” multitudes of ellipses onto the inputted image in a stochastic, iterative manner to achieve the arrangement that minimizes total “data energy.” Energy is reduced when spectral variation within individual ellipses is reduced, which occurs when they are correctly fitted onto actual birds represented by objects of local brightness maximum or minimum. The algorithm is therefore flexible to the exact size and spectral range of birds as long as they are ellipse-shaped and have sufficient contrast with the background. However, Descamps et al. (2011) suggested that this method was mainly effective for dense, localized aggregations of birds that can be manually cropped to the aggregation area prior to running the algorithm. Including areas outside an aggregation may result in erroneously counting other ellipse-shaped and spectrally discrete image elements that are not birds. The software application developed based on this algorithm, FLAMINGO, is available online for free (http://www.flamingoatlas.org/dwld_flamingo.php).

Most of the methods described above will not work well in more complex situations involving sparsely distributed single birds, isolated flocks throughout large numbers of images, or multispecies detection. Large image sets tend to collectively contain a greater variety of background elements that could be mistaken for birds when using relatively simple detection attributes. In addition, variation in camera exposure and lighting conditions creates spectral inconsistency among photos in both birds and background, even within a single aerial-survey flight. This is especially true with consumer-grade cameras, such as those usually used in UAS, that tend to have less consistent performance and spectral response than professional-grade aerial photogrammetric cameras. The methods described in many of the above-cited studies involve adjusting the spectral threshold value(s) on an image-by-image basis to account for this sort of inconsistency. Determining the appropriate settings can take a few minutes per photo, based on visual assessment of a live-updating preview image while adjusting the threshold range (Cunningham et al. 1996, Laliberte and Ripple 2003). Image-by-image threshold adjustments and other manual preprocessing operations such as cropping therefore become increasingly inefficient as image sets grow larger.

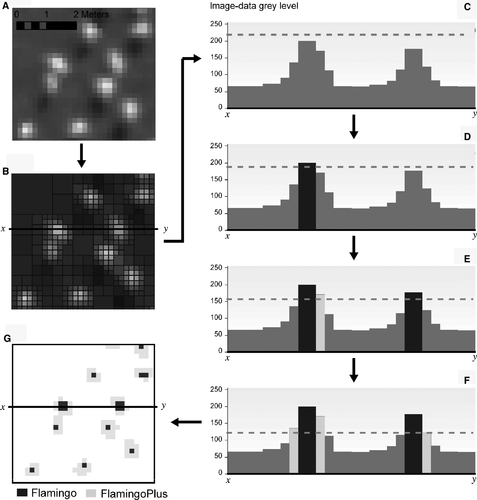

A valuable approach for addressing the aforementioned challenges presented by large image sets is true “object-based image analysis” (OBIA). True OBIA consists of first “segmenting” images into a mosaic of adjoining objects, then classifying the objects based on sometimes elaborate rule sets. Segmentation clusters spectrally similar adjacent pixels into discrete image objects using algorithms that detect boundaries between image features and analyze the extended neighborhood of each pixel rather than just the pixel itself or its immediate surroundings. The sensitivity of the segmentation process can be controlled by adjusting the scale and merge parameters, with smaller values resulting in more total objects created; this may result in millions of objects in a single large image. Various geometric rules can be applied to segmentation as well. For example, the loosest form of segmentation, “multiresolution”, generates objects of irregular sizes and shapes, whereas “quadtree” segmentation only creates square objects of 1, 4, 16, 64, 256 (and so on) pixels. Importantly, segmentation has a recognized propensity for delineating “real” objects of interest in images, including individual birds (Fig. 3).
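The quadtree idea can be sketched as a recursive split of a square image into quadrants until each square is spectrally homogeneous. This is a toy illustration, not the algorithm of any commercial package: the homogeneity test (value range within a tolerance), the requirement that the image side be a power of two, and all names and values are assumptions.

```python
def quadtree_segments(image, tolerance):
    """Split a 2^n x 2^n grayscale image into homogeneous square objects.

    Returns a list of (row, col, side) squares. A square is split into four
    quadrants whenever its pixel values span more than `tolerance`.
    """
    def split(r, c, side):
        values = [image[y][x]
                  for y in range(r, r + side)
                  for x in range(c, c + side)]
        if side == 1 or max(values) - min(values) <= tolerance:
            return [(r, c, side)]
        half = side // 2
        out = []
        for dr in (0, half):
            for dc in (0, half):
                out.extend(split(r + dr, c + dc, half))
        return out

    return split(0, 0, len(image))


# Hypothetical 4 x 4 image: uniform dark water with one bright pixel.
image = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 200],
    [10, 10, 10, 10],
]
segments = quadtree_segments(image, tolerance=5)
sizes = sorted(side * side for _, _, side in segments)
```

The uniform quadrants remain as 4-pixel squares, while the quadrant containing the bright pixel is split down to single pixels, isolating the bright element as its own object. A smaller tolerance produces more, smaller objects, analogous to lowering the scale parameter.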

OBIA has been used, for example, to analyze images of birds at sea (Milton et al. 2006, Groom et al. 2007, 2013, Table 1), which typically involves large photo sets where birds are sparsely distributed over the spectrally variable water surface. After using segmentation to separate birds from swaths of water and other conspicuous surface features, rule sets based on similar attributes to those covered above, including spectral range, size, shape, and additionally spectral “texture” and object neighborhood, can be used to automatically classify birds. Rule sets can be developed manually by selecting classification attributes and directly setting their values, via machine learning by inputting training samples, or through a combination of both (Groom et al. 2007).
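A manually developed rule set of this kind can be sketched as a handful of attribute thresholds applied to each segmented object. The attribute names, threshold values, and example objects below are all hypothetical and serve only to show the structure of such a rule set, not the rules used in the cited studies.

```python
# Hypothetical rule set for bright birds on water.
RULES = {
    "min_brightness": 180,  # spectral range
    "min_area": 8,          # size in pixels
    "max_area": 60,
    "min_roundness": 0.5,   # shape
    "max_texture": 25.0,    # within-object spectral standard deviation
}


def is_bird(obj, rules=RULES):
    """Return True if the object satisfies every rule in the rule set."""
    return (obj["brightness"] >= rules["min_brightness"]
            and rules["min_area"] <= obj["area"] <= rules["max_area"]
            and obj["roundness"] >= rules["min_roundness"]
            and obj["texture"] <= rules["max_texture"])


# Hypothetical segmented objects with precomputed attributes.
objects = [
    {"name": "bird", "brightness": 230, "area": 20, "roundness": 0.8, "texture": 10.0},
    {"name": "wave crest", "brightness": 220, "area": 150, "roundness": 0.2, "texture": 40.0},
    {"name": "glint", "brightness": 250, "area": 2, "roundness": 0.9, "texture": 5.0},
]
results = [is_bird(o) for o in objects]
```

All three objects pass the brightness rule, so thresholding alone would count each of them; the size, shape, and texture rules reject the wave crest and the glint. In a machine-learning variant, the threshold values would be derived from training samples rather than set by hand.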

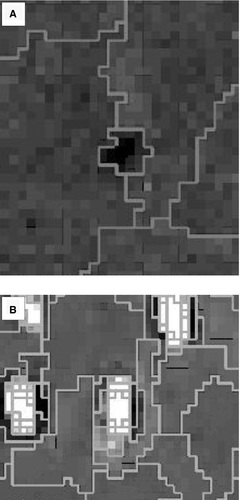

Another valuable aspect of OBIA is its high degree of programmability and scriptability, which has led to the development of creative and versatile image-processing workflows. For example, Groom et al. (2011) developed a routine that involves multiple “nested” segmentations followed by an iterative spectral thresholding and object classification loop (Fig. 4) to ultimately count 81,664 non-breeding Lesser Flamingos (Phoeniconaias minor) spread across water and land in a 5.25-km² area captured in 31 aerial photos (Table 1). The precise mapping of each bird's location enabled subsequent analyses to characterize flock distribution patterns in relation to ecological variables such as food abundance and predation risk (Henriksen et al. 2015). Groom et al. (2013) developed a multispecies detection routine (Table 1) that can batch-process up to thousands of overlapping images acquired at sea, first performing a series of systematic cropping operations, then segmentations and classifications aimed at extracting objects of “local brightness [or darkness] contrast” that represent birds (either flying or on the water surface; e.g., Fig. 1D, E, G, and H), filtering out numerous confounding water surface features in the process. Liu et al. (2015) used OBIA successfully with very high-resolution (down to 1.3 cm/pixel) UAS images of wintering Black-faced Spoonbills (Platalea minor) (Fig. 1I).

The original and still widely used commercial OBIA software package is eCognition (Trimble Navigation, Sunnyvale, CA; formerly Definiens eCognition), but other commercial packages such as ENVI (Exelis Visual Information Solutions, Boulder, CO) and freeware applications such as InterImage (Laboratório de Visão Computacional, Rio de Janeiro, Brazil) have also emerged. Even traditional image-analysis platforms such as ArcGIS now possess tools to perform segmentation, and host third-party object-based classification extensions such as Feature Analyst (Textron Systems, Providence, RI).

Automated techniques for detecting and counting mammals

Automated analysis of images to detect mammals has involved a number of additional approaches, in part because the spectral and size/shape characteristics of mammals are not always as distinctive as those of birds. For example, the automated detection and counting approach used by Laliberte and Ripple (2003) performed less well on images of caribou (Rangifer tarandus) than on images of Snow Geese and Canada Geese (Branta canadensis), a difference attributed to the more variable size of caribou and their inconsistent contrast with the background.

Terletzky and Ramsey (2014) tested a “change detection” approach (commonly used to detect landscape-scale changes in GIS applications) to inventory cattle and horses by comparing aerial images of the same pastures taken a day apart, during which time the animals had changed locations. Their technique correctly identified 82% of the animals, but was marked by a high commission error rate of 53%. Although we are not aware of this technique having been used for aerial photographic surveys of birds, Merkel et al. (2016) used an automated change-detection approach to estimate breeding success of cliff-nesting Thick-billed Murres (Uria lomvia) based on time-lapse photography captured by a stationary camera on the ground.
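The general idea of change detection, and the way detection and commission rates are tallied, can be sketched as follows. This is a deliberately simplified illustration rather than the procedure of Terletzky and Ramsey (2014): it assumes two perfectly co-registered single-band images, animals darker than the pasture, and hypothetical pixel values and threshold (`min_drop`). Commission error is taken here as the proportion of detections that are false, and omission error as the proportion of animals missed. These are common remote-sensing definitions, but they are an assumption and may differ from those used in the cited study.

```python
def arrivals(before, after, min_drop):
    """Flag pixels that became darker by at least `min_drop` between dates."""
    return {
        (r, c)
        for r, (row_b, row_a) in enumerate(zip(before, after))
        for c, (b, a) in enumerate(zip(row_b, row_a))
        if b - a >= min_drop
    }

def error_rates(detected, truth):
    """Summarize detections against known animal positions."""
    true_pos = len(detected & truth)
    false_pos = len(detected - truth)
    false_neg = len(truth - detected)
    return {
        "detected_fraction": true_pos / len(truth),
        "commission": false_pos / len(detected) if detected else 0.0,
        "omission": false_neg / len(truth),
    }

# Toy pasture (brightness 120) with dark animals (30) on two dates.
before = [
    [30, 120, 120, 120],
    [120, 120, 120, 120],
    [120, 120, 120, 30],
]
after = [
    [120, 120, 120, 60],   # new shadow at (0, 3): not an animal
    [120, 30, 120, 120],   # animal moved from (0, 0) to (1, 1)
    [120, 120, 120, 30],   # animal at (2, 3) did not move
]
truth = {(1, 1), (2, 3)}   # true animal positions on the second date

detected = arrivals(before, after, min_drop=50)
rates = error_rates(detected, truth)
print(sorted(detected), rates)
```

The toy case shows two weaknesses inherent to differencing: an animal that stays in place between dates produces no change and is missed, whereas any unrelated change, such as a shadow, is counted as a detection.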

Other efforts have focused on marine mammals that, like offshore birds, tend to be sparsely distributed over large expanses that render manual image review very labor-intensive. Podobna et al. (2010), Schoonmaker et al. (2010, 2011) and Selby et al. (2011) developed techniques to detect whales at or near the water surface based on spectral characteristics, and Maire et al. (2013, 2015) and Mejias et al. (2013) developed OBIA approaches similar to those described above for birds to detect dugongs (Dugong dugon) in high-resolution UAS images. Maussang et al. (2015) proposed general-purpose algorithms for detecting miscellaneous marine animals in ocean-surface images. Additional aerial image analysis techniques used to detect mammals have been reported by Sirmacek et al. (2012), van Gemert et al. (2015), Terletzky and Ramsey (2016), and Torney et al. (2016).

Aerial thermal-infrared images have been used for automated detection of mammals, including rabbits (Christiansen et al. 2014), seals (Conn et al. 2014), hippopotamuses (Hippopotamus amphibius) (Lhoest et al. 2015), koalas (Phascolarctos cinereus) (Gonzalez et al. 2016), and captive bison (Bison bison), elk (Cervus canadensis), fallow deer (Dama dama), white-tailed deer (Odocoileus virginianus), and gray wolves (Canis lupus) (Chrétien et al. 2015, 2016). However, a major obstacle to using aerial thermal images to detect birds is the low resolution of the cameras that, at best, record 640 × 480-pixel frames, combined with the generally smaller size of birds compared to mammals. For example, the resolution of aerial thermal images was found to be inadequate for detecting roosting Rio Grande Wild Turkeys (Meleagris gallopavo intermedia) (Locke et al. 2006), although other birds such as Malleefowl (Leipoa ocellata), Sandhill Cranes (Grus canadensis), and grouse (Centrocercus and Tympanuchus spp.) have been detected (Gillette et al. 2013, 2015, McCafferty 2013). The automated-detection method used by Christiansen et al. (2014) was only effective for chickens in thermal images acquired from ≤20 m in altitude. Very low altitude aerial surveys will generally be hindered by a correspondingly narrow field of view on the ground, a resulting increased probability of missing or double-counting subjects that have moved short distances between transects, and a greater chance of disturbing birds.
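The trade-off between altitude, ground coverage, and the number of pixels falling on a bird can be made concrete with simple geometry. The sketch below assumes a nadir-pointing camera over flat ground. Only the 640-pixel frame width comes from the text above; the 45° horizontal field of view and the 40-cm bird length are hypothetical values chosen for illustration.

```python
import math

def swath_width_m(altitude_m, fov_deg):
    """Ground width imaged by a nadir-pointing camera over flat ground."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)

def ground_sample_distance_cm(altitude_m, pixels_across, fov_deg):
    """Ground width covered by a single pixel, in centimeters."""
    return 100.0 * swath_width_m(altitude_m, fov_deg) / pixels_across

def pixels_on_target(target_cm, altitude_m, pixels_across, fov_deg):
    """Approximate number of pixels spanning a target of a given length."""
    return target_cm / ground_sample_distance_cm(altitude_m, pixels_across, fov_deg)

FRAME_WIDTH_PX = 640           # from the 640 x 480 frames cited in the text
ASSUMED_FOV_DEG = 45.0         # hypothetical lens
ASSUMED_BIRD_LENGTH_CM = 40.0  # hypothetical subject size

for altitude in (20, 50, 100):
    swath = swath_width_m(altitude, ASSUMED_FOV_DEG)
    gsd = ground_sample_distance_cm(altitude, FRAME_WIDTH_PX, ASSUMED_FOV_DEG)
    span = pixels_on_target(ASSUMED_BIRD_LENGTH_CM, altitude,
                            FRAME_WIDTH_PX, ASSUMED_FOV_DEG)
    print(f"{altitude:>4} m: swath {swath:6.1f} m, "
          f"{gsd:5.2f} cm/pixel, bird spans {span:4.1f} pixels")
```

Under these assumptions, flying low enough for a bird to span more than a few thermal pixels reduces the imaged strip to a few tens of meters, which is the narrow field of view referred to above.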

Automated analysis of satellite images to survey birds

In contrast to aerial images acquired using manned or unmanned aircraft, satellite images generally have a much lower ground resolution. Until recently, the finest resolution available was 60 cm/pixel (e.g., QuickBird images), but satellite image resolutions down to 25 cm/pixel are now available (e.g., WorldView-3), and this may further improve in the future. Following the demonstration of an image-analysis method to estimate the size of Emperor Penguin (Aptenodytes forsteri) colonies from QuickBird images by Barber-Meyer et al. (2007), several investigators have examined approaches for surveying penguins and other waterbirds using satellite images. Although individuals of some large species such as Emperor Penguins can be detected in higher resolution satellite images (Barber-Meyer et al. 2007, Fretwell et al. 2012), the main focus with smaller species and coarser images has been on detecting and estimating the size of colonies based on the extent of fecal staining (i.e., guano), which has a distinct spectral signature (Fretwell and Trathan 2009, LaRue et al. 2014). McNeill et al. (2011) also incorporated automated colony delineation based on fecal staining into their analysis routine for higher-resolution images acquired by aircraft (Table 1). Computer-automated detection of guano in satellite images has also been used to detect colonies of seabirds other than penguins (Fretwell et al. 2015). The overall coarser resolution of satellite images compared to images from aircraft lends itself better to traditional pixel-based spectral analysis, which these studies have employed almost exclusively, in most cases using the supervised Maximum Likelihood Classification approach with ArcGIS's Spatial Analyst extension. Other species that have been manually detected in satellite images include flamingos (Sasamal et al. 2008) and nests of Masked Boobies (Sula dactylatra) (Hughes et al. 2011).
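For readers unfamiliar with supervised maximum likelihood classification, the principle can be sketched in a few lines. This is not the ArcGIS implementation. It is a simplified version that treats spectral bands as independent (a full implementation would also use the covariance between bands) and that assumes equal prior probabilities for all classes. The class names and training pixel values are hypothetical.

```python
import math

def fit_class(samples):
    """Estimate the per-band mean and variance of a class from training pixels."""
    n = len(samples)
    bands = len(samples[0])
    means = [sum(s[b] for s in samples) / n for b in range(bands)]
    variances = [
        sum((s[b] - means[b]) ** 2 for s in samples) / (n - 1)
        for b in range(bands)
    ]
    return means, variances

def log_likelihood(pixel, model):
    """Gaussian log-likelihood of a pixel, assuming independent bands."""
    means, variances = model
    return sum(
        -0.5 * math.log(2.0 * math.pi * v) - (x - m) ** 2 / (2.0 * v)
        for x, m, v in zip(pixel, means, variances)
    )

def classify(pixel, models):
    """Assign the pixel to the class under which it is most likely."""
    return max(models, key=lambda name: log_likelihood(pixel, models[name]))

# Hypothetical training pixels as (red, blue) digital numbers.
training = {
    "guano": [(180, 120), (190, 130), (170, 110), (185, 125)],
    "rock": [(90, 95), (100, 105), (80, 85), (95, 100)],
    "snow": [(240, 245), (250, 250), (235, 240), (245, 248)],
}
models = {name: fit_class(samples) for name, samples in training.items()}

labels = [classify(p, models) for p in [(182, 118), (92, 97), (242, 246)]]
print(labels)
```

The extent of a colony would then be estimated from the number of pixels assigned to the guano class multiplied by the ground area of one pixel.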

Time and efficiency considerations

All of the computer-automated image-analysis techniques described above require a certain amount of time to set up (e.g., perform any cropping, determine the appropriate spectral threshold value(s) for each image, or develop segmentation and classification workflows) and execute (i.e., computer-processing time). An automated approach may not be worth using if it will require more total time (particularly in setup) than manual analysis of the images, although use of automated analysis may help avert repetitive motion syndrome resulting from tedious manual counts (Milton et al. 2006), and may reduce variation due to observer fatigue. Gilmer et al. (1988) estimated that their rudimentary spectral-thresholding technique using now long-outdated computer hardware and software was only worthwhile if individual images contained >2000 geese. The more streamlined method described by Laliberte and Ripple (2003) was faster than manual counts if >230 subjects were present, and Trathan (2004) reported that a computer-automated approach took half as much time as manual analysis to count a colony of ~11,000 penguins. Milton et al. (2006) similarly found that total analysis time for a sample of 35 photos (some containing several thousand birds) was reduced by half by using an automated approach to count molting Common Eiders (Somateria mollissima) at sea, although they only factored in setup time, arguing that computer processing time did not consume “person time.” The highly autonomous method introduced by Descamps et al. (2011), requiring virtually no setup beyond quick image cropping, took a total of 1:38 h (processing time) to count just under 40,000 flamingos in five colonies, compared to 24:15 h for manual counts. Elaborate OBIA workflows such as those described by Groom et al. (2011, 2013) may be time-consuming to develop, but, if sufficiently versatile, can subsequently be applied to countless batches of images, year after year, thus making the initial time investment worthwhile. This may be especially useful for maintaining consistency in long-term monitoring that would alternatively involve a turnover of airborne observers.
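The time comparisons above amount to simple break-even arithmetic, sketched below. The two durations for Descamps et al. (2011) are taken from the text. The setup time and per-image times in the break-even example are hypothetical. Following the argument of Milton et al. (2006), unattended processing time is not counted as person time in that example.

```python
import math

def to_minutes(hours_minutes):
    """Convert an 'h:mm' duration such as '24:15' to minutes."""
    hours, minutes = hours_minutes.split(":")
    return int(hours) * 60 + int(minutes)

def break_even_images(setup_min, manual_min_per_image, review_min_per_image=0.0):
    """Smallest number of images for which automation takes less person time.

    Automated person time = setup + n * review; manual person time = n * manual.
    Returns None if automation never saves time per image.
    """
    saving_per_image = manual_min_per_image - review_min_per_image
    if saving_per_image <= 0:
        return None
    return math.floor(setup_min / saving_per_image) + 1

# Reported by Descamps et al. (2011): 1:38 h automated vs. 24:15 h manual.
speedup = to_minutes("24:15") / to_minutes("1:38")
print(f"Automated count was about {speedup:.1f} times faster")

# Hypothetical workflow: 120 min of setup, 10 min per image by hand,
# and 2 min per image to check the automated output.
n_images = break_even_images(setup_min=120, manual_min_per_image=10,
                             review_min_per_image=2)
print(f"Automation pays off from {n_images} images onward")
```

The same calculation shows why a reusable workflow becomes more attractive over time: setup is incurred once, whereas the per-image saving accrues with every subsequent batch of images.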

Conclusion and Recommendations

There have been major advances over the past three decades in automated bird detection and counts in aerial images, from performing rudimentary spectral analysis of scanned film photographs with pre-desktop computers to estimate numbers of white birds to developing elaborate algorithms capable of detecting multiple species in thousands of digital images with complex backgrounds. The current convergence of technologies that foster potential for effective and efficient automated bird detection in aerial images—namely, high-performance digital cameras, sophisticated image analysis software, continuous advances in computer processing, and small autonomous UAS capable of collecting aerial images at very fine scales—is creating fertile conditions for significant expansion in the use of such techniques. These could collectively save incalculable time and resources among bird population monitoring practitioners, and allow new or expanded aerial photographic monitoring campaigns where they were previously deemed prohibitively inefficient.

One area where there is still room for advancements is in multispecies recognition because aerial surveys often detect more than one species. For example, images collected by Drever et al. (2015) were used to survey large numbers of multiple wintering species of waterbirds concentrated in the same area, including Dunlins, Black-bellied Plovers (Pluvialis squatarola), and several duck species where even males and females of the same species differ in size and appearance. The OBIA workflow developed by Groom et al. (2013) can detect multiple species, but is unable to identify birds to species level. The workflow outputs GIS layers containing polygons outlining birds of various species that were detected throughout the images, but these must ultimately be overlaid on the images and examined by experts to perform species labeling. For large volumes of images, this is still more efficient than also having to manually scrutinize the images to detect birds in the first place, but can nevertheless be very labor-intensive if there are large numbers of birds. In the only published example of true automated multispecies recognition that we found, Chrétien et al. (2015) developed an OBIA rule set capable of differentiating bison, elk, fallow deer, and gray wolves in UAS images acquired over a nature park.

Based on the literature we reviewed, a major shift to computer-automated aerial photographic bird censusing is not yet underway despite an increase in the number of relevant papers being published. Several factors may be preventing wider adoption of these approaches both for birds and mammals. Perhaps most notably, there seems to be a divide between papers published in wildlife/ecology-focused publications and those published in remote sensing- and computer science-focused publications. Although bird-related papers are more evenly distributed among publication disciplines, only four of the 20 mammal-related papers were published in wildlife, ecology, or environmental science publications. The results of research published in the remote-sensing and computer-science literature may not be reaching those involved in wildlife research and management. We therefore encourage investigators to look beyond publications in their own discipline when searching for potential approaches to automate animal detection and enumeration in aerial images.

Another potential barrier is the need for specialized skill sets to use many of the more sophisticated analysis methods. Efficient design of aerial surveys requires wildlife biologists to work with biostatisticians to help develop appropriate sampling frames, as well as with remote sensing and computer science experts to process the images. Similarly, remote sensing and computer science experts could facilitate developments in this field by collaborating with wildlife biologists to focus on subjects of current importance to wildlife management, and by ensuring that their manuscripts are written using minimal discipline-specific jargon to reach a broader audience. Overall, we believe that heightened interdisciplinary efforts in this area will help precipitate the shift toward increased use of computer-automated aerial photographic bird census techniques.

Acknowledgments

The production of this review was funded by the Canadian Wildlife Service, Environment and Climate Change Canada. Reproduction of figure materials from previously published works (Figs. 1-4) has been carried out with the consent of the corresponding authors of the original works and, where applicable, permission from their respective publishers and rightsholders: Elsevier Ltd. (Groom et al. 2013, Liu et al. 2015), John Wiley & Sons, Inc. (Laliberte and Ripple 2003, Trathan 2004), Taylor & Francis Group (Groom et al. 2011), the Remote Sensing & Photogrammetry Society (Groom et al. 2007), and the American Association for Geodetic Surveying and the Geographic and Land Information Society (Abd-Elrahman et al. 2005). Further reproduction of these materials is subject to the terms and conditions of their respective publishers and rightsholders.