Automated, machine learning–based alerts increase epilepsy surgery referrals: A randomized controlled trial

Abstract

Objective

To determine whether automated, electronic alerts increased referrals for epilepsy surgery.

Methods

We conducted a prospective, randomized controlled trial of a natural language processing–based clinical decision support system embedded in the electronic health record (EHR) at 14 pediatric neurology outpatient clinic sites. Children with epilepsy and at least two prior neurology visits were screened by the system prior to their scheduled visit. Patients classified as potential surgical candidates were randomized 2:1 for their provider to receive an alert or standard of care (no alert). The primary outcome was referral for a neurosurgical evaluation. The likelihood of referral was estimated using a Cox proportional hazards regression model.

Results

Between April 2017 and April 2019, a total of 4858 children were screened by the system, and 284 (5.8%) were identified as potential surgical candidates. Two hundred four patients received an alert, and 96 patients received standard care. Median follow-up time was 24 months (range: 12–36 months). Compared to the control group, patients whose provider received an alert were more likely to be referred for a presurgical evaluation (9.8% vs 3.1%; adjusted hazard ratio [HR] = 3.21, 95% confidence interval [CI]: 0.95–10.8; one-sided p = .03). Nine patients (4.4%) in the alert group underwent epilepsy surgery, compared to none (0%) in the control group (one-sided p = .03).

Significance

Machine learning–based automated alerts may improve the utilization of referrals for epilepsy surgery evaluations.

Key points

- Providers were more likely to refer patients with epilepsy for a presurgical evaluation after receiving an automated alert.

- Natural language processing–based clinical decision support systems can be used to identify potential candidates.

- Alerts impacted referral patterns at a specialized epilepsy center but may have a bigger impact in community practices.

1 INTRODUCTION

Epilepsy affects more than 430 000 children in the United States.1 Up to one-third do not adequately respond to anti-seizure medications (ASMs).2, 3 Patients with drug-resistant epilepsy have an increased risk of premature death,4, 5 decreased quality of life,6 and impaired social development.7 Eliminating seizures minimizes these risks, but the chance of seizure freedom using pharmacotherapy alone is less than 10%.8 Surgical treatment increases the chances of seizure freedom to more than 60%,9 and should be considered in a timely fashion.10-12 However, the average time to surgery is 7 years in pediatrics and 20 years in adults.13

The electronic health record (EHR) contains information about a patient's medical history, social history, and clinical course; up to 50% of these data are only available in unstructured free text.14 Free text contains information that can improve the quality of clinical care and research.15, 16 Natural language processing (NLP) and machine learning can be used for analyzing free text.17 NLP is used for surveillance, adverse event detection,18 identifying patient medications,19 and extracting data from radiology reports.20 NLP can be applied to evaluate clinical notes and provide recommendations,21 but NLP models are frequently experimental and not integrated into practice.22 Only limited studies illustrate the direct application of NLP to clinical practice or clinical decision support systems.22-26 In the case of drug-resistant epilepsy, NLP can identify eligible patients and suggest neurosurgical consults earlier in the disease process.27, 28

Our group developed an NLP model to analyze free-text neurology office visit notes to identify potential candidates for resective epilepsy surgery.27 During development, the algorithm scored patients equitably29 and performed as well as epileptologists in a blinded, head-to-head comparison.30 Prior to implementation, it was validated prospectively in a clinical setting.31 We integrated this model with the EHR as a decision support tool for neurologists. The system screened patients with epilepsy before they were scheduled to visit a neurologist, and sent automated alerts to their providers if they were predicted to be a surgical candidate. The purpose of this study was to assess whether automated alerts improved the timeliness of referrals for a presurgical evaluation.

2 METHODS

This study was approved by the Cincinnati Children's Hospital Medical Center Institutional Review Board under approval number 2016-2932. The approval included a waiver of informed consent for patients and an assent document for providers.

2.1 Study design and participants

Providers were recruited from a large pediatric epilepsy center in Cincinnati, OH, USA (Cincinnati Children's Hospital Medical Center; CCHMC). Attending neurologists and nurse practitioners were asked to participate, and all 25 consented. Children with epilepsy who were scheduled to visit an outpatient neurology clinic between April 16, 2017 and April 15, 2019, were prospectively enrolled. Inclusion required at least two prior neurology visits at the time of enrollment with an International Classification of Diseases (ICD) code for epilepsy. Patients who had undergone a neurosurgical procedure in the previous year were excluded.

2.2 Randomization

Patients identified as potential surgical candidates by the NLP were randomized 2:1 for their provider to receive an alert or no reminder prior to the patient's visit. Half of the alerts were emails, and the other half were in-basket messages that appeared in the EHR. Randomization was performed at the visit level. Patients who were not referred for a presurgical evaluation within 6 months were eligible to be re-randomized at subsequent visits. We used a computer-based random number generator for group allocation.
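The allocation scheme described above can be illustrated with a minimal sketch. It assumes simple (unblocked) randomization with equal probability for three arms, which gives a 2:1 alert-to-control ratio with alerts split evenly between email and EHR messages. The trial's actual generator, seed, and any blocking are not described in the text; the arm labels, seed, and function name below are hypothetical.

```python
import random

# Hypothetical arm labels; two of the three arms are alerts, giving 2:1 overall.
ARMS = ("control", "email_alert", "ehr_alert")


def allocate(rng: random.Random) -> str:
    """Assign one visit to an arm with equal probability (assumed 1:1:1)."""
    return rng.choice(ARMS)


# A fixed seed is used here only so that the sketch is reproducible.
rng = random.Random(2017)
assignments = [allocate(rng) for _ in range(9000)]

# Share of simulated visits allocated to either alert arm (expected near 2/3).
alert_share = sum(arm != "control" for arm in assignments) / len(assignments)
print(f"alert share: {alert_share:.3f}")
```

With simple randomization the realized group sizes vary around the target ratio, which is one reason a trial might instead use blocked allocation.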

Study personnel completing the chart review and statistical analyses (B.D.W. and N.Z.) were not blinded to group allocation. To confirm the results of the chart review, an epileptologist (H.M.G.) systematically reviewed charts for all patients who were referred for a presurgical evaluation.

2.3 Natural language processing model

The NLP model was trained to identify potential surgical candidates using n-grams extracted from neurology office visit notes.27, 32 Patients who underwent a resective epilepsy surgery (e.g., lobectomy, hemispherectomy, corticectomy) after a minimum of two neurology office visits were included in the training cohort. Non-surgical patients who were seizure-free at the most recent follow-up visit were included as controls in the training cohort. A support vector machine with a radial basis function kernel was used to predict patients' surgical candidacy (surgical vs non-surgical) in a supervised learning paradigm. Unigram, bi-gram, and tri-gram features were extracted from their notes. Feature selection was performed using a correlation-based filter, and the number of n-grams included in the model was tuned as a hyperparameter using nested cross-validation. The model was updated each week as more patients became eligible for inclusion in the training cohort. Retrospective and prospective performance of the NLP model was published previously.31 Area under the receiver-operating characteristic (ROC) curve (AUC) was 0.79 (95% confidence interval [CI] = 0.62–0.96), sensitivity was 0.80 (95% CI = 0.29–0.99), specificity was 0.77 (95% CI = 0.64–0.88), positive predictive value was 0.25 (95% CI = 0.07–0.52), and negative predictive value was 0.98 (95% CI = 0.87–1.00).
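The performance measures reported above all derive from a 2 × 2 confusion matrix. The sketch below shows the calculation. The counts are hypothetical: they were chosen only because they reproduce the rounded point estimates in the text, and they are not taken from the validation study.

```python
def diagnostic_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Compute standard diagnostic accuracy measures from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # true positives among actual candidates
        "specificity": tn / (tn + fp),  # true negatives among non-candidates
        "ppv": tp / (tp + fp),          # actual candidates among flagged patients
        "npv": tn / (tn + fn),          # non-candidates among unflagged patients
    }


# Hypothetical counts (assumption), selected to match the rounded values above.
metrics = diagnostic_metrics(tp=4, fn=1, fp=12, tn=40)
rounded = {name: round(value, 2) for name, value in metrics.items()}
print(rounded)
```

With counts of this size, a low positive predictive value can coexist with a high negative predictive value because potential surgical candidates are a small fraction of screened patients.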

2.4 Implementation of the natural language processing–based clinical decision support system

Patients were screened by the NLP model on Sunday evenings and alerts were subsequently sent to providers. All alerts were automated and integrated with the Epic EHR (Verona, WI). The email alert was sent to providers' institutional email address and contained the text, “This patient has been identified as a possible candidate for epilepsy surgery. Please create an Orders Only encounter for this patient and use the embedded Epilepsy Surg Consult flowsheet to indicate whether you would like an Epilepsy Surgical Consult; if yes, please also place the consult order (NEU139).” EHR alerts were sent to providers as an in-basket message, contained the same text as the email, and provided buttons to open an encounter.

One year prior to the study initiation, the alerting system was tested with six neurology providers and reminders were sent for 71 patients. Of the 71 patients who had a reminder sent during the test phase, 15 patients (21%) were included in the study, 5 (33%) of whom were randomized to the control group.

2.5 Outcomes

The primary outcome was the association between alerts and presurgical evaluations for drug-resistant epilepsy. To meet this endpoint, providers must have placed the order for a referral. Simply documenting that the patient was agreeable to surgical treatment was not sufficient. Pre-specified secondary outcomes included the proportion of patients who completed a presurgical evaluation and the proportion of patients who underwent epilepsy surgery. All providers who received an alert were followed up for additional patient exclusion reasons. Epilepsy surgery was defined as a lobectomy, amygdalohippocampectomy, hemispherectomy, posterior quadrant disconnection, vagus nerve stimulation (VNS), laser ablation, corpus callosotomy, or hemispherotomy undertaken for the treatment of epileptic seizures. Tumor resections and ventriculoperitoneal shunt placements and corrections were not included. Stereo electroencephalograms and craniotomies with electrocorticography or grid placement were considered to be part of the presurgical evaluation, and not epilepsy surgeries, if they were not accompanied by subsequent resection or stimulation. VNS revisions and battery replacements were not included because they were considered standard follow-up care that was unlikely to be influenced by an alert.

During the first year of the study, providers who received an alert were contacted after their visit with the patient. If the patient was not referred during the visit, the provider was asked to specify the reason. Categories included: (1) the patient was referred previously to the epilepsy surgery program or already had surgery, (2) the patient's epilepsy did not fulfill International League Against Epilepsy (ILAE) criteria of intractability, (3) the patient was not an epilepsy surgery candidate, (4) a referral was considered but deferred at that time, and (5) epilepsy surgery was discussed but the patient or their family declined the procedure.

2.6 Statistical analysis

All randomized patients were included in this intent-to-treat analysis.33 Baseline characteristics were compared among the three treatment groups using an analysis of variance (ANOVA) for continuous variables and Fisher's exact test for categorical variables. Variables that were associated with referrals for surgical evaluation were included as covariates in the final model. For the primary outcome, we used a Cox proportional hazards model to estimate the hazard ratio (HR) of referrals for a presurgical evaluation after receiving an alert, and Wald's test to estimate the corresponding p-value for group comparisons. First, emails were compared to EHR alerts. Because the two alert types had similar effects, they were combined into one group for comparison with the control group. We verified that the proportional hazards assumption was satisfied (survival package in R).34 There were no violations. We used the survminer package to produce forest plots of the HRs and model fit after adjusting for age, the number of neurology visits, and the number of ASMs prescribed.35 A Kaplan–Meier curve was used to display the cumulative event rate.36 We defined the censoring date as the date of referral for a presurgical evaluation, patient death,1 or the end of the study period, whichever came first. If a patient in the control group crossed over to the alert group at a subsequent visit, we censored time at the date of the alert. This crossover design added power to the study and was valid because there was no carry-over effect when a patient crossed over from the control group to the alert group.37
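The cumulative event rate shown by a Kaplan–Meier curve is one minus the product-limit survival estimate. The following is a minimal sketch of that estimator, not the analysis code used in the study (which relied on the survival package in R). The follow-up times and event indicators are invented for illustration; an indicator of 0 stands for any censored observation, such as reaching the end of follow-up or crossing over to the alert group.

```python
from collections import Counter


def kaplan_meier(times, events):
    """Return [(time, survival)] at each distinct event time.

    times:  follow-up in months for each patient
    events: 1 if the patient was referred at that time, 0 if censored
    """
    event_counts = Counter(t for t, e in zip(times, events) if e)
    exit_counts = Counter(times)  # patients leaving the risk set at each time
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(exit_counts):
        d = event_counts.get(t, 0)
        if d:
            # Patients censored at time t still count as at risk at t.
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= exit_counts[t]
    return curve


# Hypothetical data (assumption): 10 patients, 3 referrals, 7 censored.
times = [2, 5, 5, 8, 12, 12, 18, 24, 24, 24]
events = [1, 0, 1, 0, 1, 0, 0, 0, 0, 0]
curve = kaplan_meier(times, events)
cumulative_event_rate = [(t, 1 - s) for t, s in curve]
print(cumulative_event_rate)
```

Censored patients contribute to the risk set up to their censoring time and then drop out of the denominator, which is how follow-up of unequal length is accommodated.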

Power was calculated using the chi-square test for differences between two proportions. We assumed that the odds ratio (OR) that corresponded to the greatest difference between interventions would be 2.7. It was estimated that 80% of identified patients would be eligible for surgical consult. To adjust for the three comparisons (control vs email, control vs EHR alert, and email vs EHR alert), power estimates were based on an alpha level of 0.017. We aimed to enroll 136 patients, which would have given sufficient power (86.5%) to detect the OR corresponding to a medium effect size (0.30). We analyzed the accuracy of prospective identification in a previous validation study31 and found that the positive predictive value (PPV) was lower than expected (25%). Therefore, we decided to increase the enrollment period from 1 to 2 years, followed by a 1-year observation window.
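The alpha level of 0.017 is consistent with dividing a family-wise level of 0.05 equally across the three pairwise comparisons. The text does not name the correction, so the Bonferroni-style division and the 0.05 starting level in this short sketch are assumptions.

```python
from itertools import combinations

arms = ["control", "email", "EHR alert"]

# All pairwise comparisons among the three arms.
comparisons = list(combinations(arms, 2))

family_alpha = 0.05  # assumed family-wise significance level
adjusted_alpha = family_alpha / len(comparisons)

print(comparisons)
print(round(adjusted_alpha, 3))
```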

The protocol pre-specified that generalized estimating equations would be used to estimate the treatment effect of alerts after stratifying patients by the provider who received the alert. After the trial ended, we modified the statistical analysis plan because most patients were not referred at the visit that the alert was sent, as was originally expected. Most patients agreed to being evaluated at a subsequent visit, which lessened the validity of stratifying by provider. Therefore, we used the time until referral for presurgical evaluation as the primary outcome after randomization instead of whether the patient was referred for presurgical evaluation at the initial visit.

The study team manually reviewed the charts of all randomized patients to confirm dates of referrals, presurgical evaluations, and epilepsy surgeries. Manual reviews were compared to lists of referrals and surgeries used by the CCHMC Epilepsy Surgery Program to ensure that no events were missed. Demographic, visit, and medication data were extracted from the EHR.

Data were reported as mean ± standard deviation (SD) or frequency (%) as appropriate. We hypothesized that alerts would increase the likelihood of referral. There was no concern that alerts would decrease the likelihood of referral. Therefore, we considered one-sided p values less than .05 to be statistically significant. R statistical software version 3.6.1 was used for all analyses.38

3 RESULTS

3.1 Patients

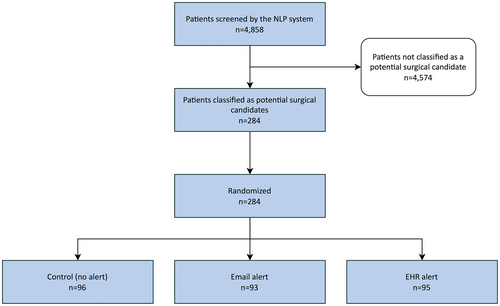

During the 2-year study period, 4858 patients were screened by the NLP system. Of those, 284 (5.8%) were classified as potential surgical candidates, randomized, and included in the intent-to-treat analysis. Ninety-six patients (33.8%) were assigned to the control group, 93 (32.7%) to the group whose treating provider received an email, and 95 (33.5%) to the group whose treating provider received an EHR alert (Figure 1). Sixteen patients (16.7%) in the control group were randomized to receive an alert at a later visit, bringing the total number of patients who received an alert to 204. There were no differences in demographic or baseline clinical characteristics between the three groups (Table 1). There were 158 male patients (55.6%), 44 patients (15.5%) from racial minority groups, and 11 patients (3.9%) who were Hispanic. Patients were 13.8 ± 8.4 years of age and were followed for a total of 542 person-years (median = 24.0 months).

| Characteristic | Control (n = 96) | Email alert (n = 93) | EHR alert (n = 95) | p Value | No referral (n = 261) | Referred for surgery (n = 23) | p Value |
|---|---|---|---|---|---|---|---|
| Age (y) | 13.8 ± 8.1 | 13.6 ± 7.8 | 13.9 ± 9.4 | .98 | 14.1 ± 8.5 | 10.0 ± 6.3 | .03 |
| Male gender | 54 (56.2%) | 57 (61.3%) | 47 (49.5%) | .27 | 142 (54.4%) | 16 (69.6%) | .19 |
| Race | | | | | | | |
| White | 79 (82.3%) | 81 (87.1%) | 79 (83.2%) | .12 | 221 (84.7%) | 18 (78.3%) | .47 |
| Black | 7 (7.3%) | 10 (10.8%) | 12 (12.6%) | | 26 (10.0%) | 3 (13.0%) | |
| Other | 10 (10.4%) | 2 (2.15%) | 4 (4.21%) | | 14 (5.36%) | 2 (8.70%) | |
| Hispanic ethnicity | 5 (5.21%) | 3 (3.23%) | 3 (3.16%) | .80 | 10 (3.83%) | 1 (4.35%) | >.99 |
| Insurance | | | | | | | |
| Private | 49 (51%) | 47 (50.5%) | 46 (48.4%) | .86 | 131 (50.2%) | 11 (47.8%) | .77 |
| Public | 42 (43.8%) | 39 (41.9%) | 45 (47.4%) | | 116 (44.4%) | 10 (43.5%) | |
| Self-pay | 5 (5.21%) | 7 (7.53%) | 4 (4.21%) | | 14 (5.36%) | 2 (8.70%) | |
| Neurology visits | 13.1 ± 8.8 | 13.5 ± 8.5 | 11.5 ± 9.0 | .25 | 13 ± 8.9 | 9.0 ± 6.4 | .04 |
| Anti-seizure medications | 5.0 ± 2.3 | 5.2 ± 3.0 | 4.7 ± 2.8 | .42 | 4.8 ± 2.6 | 6.6 ± 3.4 | .002 |

- Note: Data are presented as mean ± standard deviation or number (%).

- Abbreviation: EHR, electronic health record.

Compared to patients not referred for a presurgical evaluation, patients who were referred for surgery were younger (10.0 ± 6.32 vs 14.1 ± 8.51 years old, respectively; p = .03), had fewer neurology visits (9 ± 6.4 vs 13 ± 8.9 neurology office visits, respectively; p = .04), and were prescribed more ASMs (6.6 ± 3.39 vs 4.8 ± 2.58 unique ASMs per patient; p = .002). No statistically significant associations were found between referrals for presurgical evaluations and patient gender, race, or insurance status (all p's > .05; Table 1).

3.2 Primary outcome

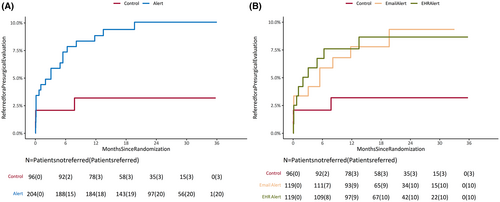

Patients were more likely to be referred for a presurgical evaluation if their provider received an alert (9.8% vs 3.1% in the control group; HR = 3.13, 95% CI: 0.93–10.5; one-sided p = .03) (Figure 2). This effect persisted after controlling for patient age, the number of neurology visits, and the number of ASMs prescribed as linear covariates (HR = 3.21, 95% CI: 0.95–10.82; one-sided p = .03; Figure 3).
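The relationship between a hazard ratio, its confidence interval, and the Wald p-value can be checked with a short calculation. This sketch assumes the reported interval is a standard Wald interval that is symmetric on the log scale, and it works from the rounded published values for the adjusted estimate, so the result is approximate. The function names are hypothetical.

```python
from math import erf, log, sqrt

Z_975 = 1.959964  # standard normal quantile for a two-sided 95% interval


def normal_cdf(z: float) -> float:
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def wald_p_from_ci(hr: float, lower: float, upper: float):
    """Recover the Wald z statistic and p-values from an HR and its 95% CI."""
    se = (log(upper) - log(lower)) / (2 * Z_975)  # standard error of log(HR)
    z = log(hr) / se
    p_one_sided = 1.0 - normal_cdf(z)             # alternative: HR > 1
    p_two_sided = 2.0 * (1.0 - normal_cdf(abs(z)))
    return z, p_one_sided, p_two_sided


# Adjusted estimate reported above: HR = 3.21, 95% CI 0.95 to 10.82.
z, p_one, p_two = wald_p_from_ci(3.21, 0.95, 10.82)
print(f"z = {z:.2f}, one-sided p = {p_one:.3f}, two-sided p = {p_two:.3f}")
```

Because the lower confidence bound falls just below 1, the two-sided p-value exceeds .05 while the one-sided p-value does not, which is the pattern reported for the primary outcome.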

Email and EHR alerts were equally effective (10 patients [8.4%] were referred following an email alert vs 10 [8.4%] following an EHR alert; HR = 1.00, 95% CI: 0.42–2.40; two-sided p > .99). Compared to no alert, emails were not significantly associated with a higher likelihood of surgical referral (HR = 2.75, 95% CI: 0.76–10.0; one-sided p = .06). A similar trend was observed for EHR alerts (EHR alert vs no alert: HR = 2.75, 95% CI: 0.76–10.0; one-sided p = .06).

3.3 Secondary outcomes

Of the 204 children whose provider received an alert, 20 (9.8%) were referred for a presurgical evaluation, 15 (7.4%) completed the evaluation, and 9 (4.4%) received epilepsy surgery (Table 2). Of the 96 children in the control group whose provider did not receive an alert, three (3.1%) were referred for a presurgical evaluation, two (2.1%) completed the evaluation, and none (0%) received epilepsy surgery (p = .05 and .03 for presurgical evaluations and surgeries, respectively).

| Outcome | Control (n = 96) | Email alert (n = 119) | EHR alert (n = 119) | Any alert (n = 204) | OR (95% CI) | p Value^a |
|---|---|---|---|---|---|---|
| Referred for surgery | 3 (3.13%) | 10 (10.8%) | 10 (10.5%) | 20 (10.6%) | 3.36 (0.96–18.1) | .03 |
| Underwent evaluation | 2 (2.10%) | 7 (7.53%) | 8 (8.42%) | 15 (7.98%) | 3.72 (0.84–34.2) | .05 |
| Underwent surgery | 0 (0%) | 4 (4.30%) | 5 (5.26%) | 9 (4.79%) | – | .03 |
| Surgery type | | | | | | |
| Resective | 0 (0%) | 1 (1.08%) | 3 (3.16%) | 4 (2.13%) | – | – |
| Non-resective | 0 (0%) | 3 (3.23%) | 2 (2.11%) | 5 (2.66%) | – | – |

- Note: Sample sizes for the alert groups included 16 patients who crossed over from the control group. The odds ratio (OR) for patients who underwent epilepsy surgery after receiving an alert could not be calculated because the upper bound of the 95% confidence interval (CI) included infinity.

- a One-sided p values were reported for the comparison of the control and “any alert” groups. Calculated using Fisher's exact test.
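A one-sided Fisher's exact test for the comparison of the control and any-alert groups can be computed directly from the hypergeometric distribution. The sketch below is a minimal illustration rather than the study's analysis code (the analyses were run in R). It uses the group sizes and event counts stated in the text (96 control, 204 alert); the function name is hypothetical.

```python
from math import comb


def fisher_one_sided(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """One-sided Fisher's exact p-value.

    Probability, with all margins fixed, that group A has events_a or fewer
    events. Tests the alternative that the event rate is lower in group A.
    """
    total_events = events_a + events_b
    denominator = comb(n_a + n_b, total_events)
    smallest = max(0, total_events - n_b)
    numerator = sum(
        comb(n_a, k) * comb(n_b, total_events - k)
        for k in range(smallest, events_a + 1)
    )
    return numerator / denominator


# Referrals: 3 of 96 control patients vs 20 of 204 alert patients.
p_referral = fisher_one_sided(3, 96, 20, 204)

# Surgeries: 0 of 96 control patients vs 9 of 204 alert patients.
p_surgery = fisher_one_sided(0, 96, 9, 204)

print(f"referral p = {p_referral:.3f}, surgery p = {p_surgery:.3f}")
```

For the surgery comparison the observed control count is zero, so the p-value reduces to the probability that all nine surgeries fall in the alert group by chance.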

3.4 Provider feedback

During the first year of the study, providers were alerted by the system but declined to refer 109 patients. Of the 109 patients, 4 (3.7%) were referred or had surgery in the past, 49 (45%) did not fulfill ILAE criteria for intractability, 6 (5.5%) had drug-resistant epilepsy but were not a candidate for surgery, 40 (37%) were considered for surgery but providers deferred the discussion until a later date, and 8 (7.3%) discussed surgery with their provider but declined the procedure. No response was given for two patients (1.8%).

4 DISCUSSION

Neurology providers were three times more likely to refer potential surgical candidates for a presurgical evaluation after receiving an automated alert from the machine learning–based clinical decision support system. After referral and evaluation, nine children whose provider received an alert continued on to have surgery. Four children received resective surgery, which eliminates seizures over 60% of the time.9, 13 In comparison, none of the patients in the control group received surgery.

This is the first randomized trial of an NLP-based intervention integrated directly into patient care, and the first artificial intelligence (AI)–based randomized trial in neurology. A recent systematic review of deep learning algorithms for medical imaging, the most developed subfield of medical AI, revealed a lack of randomized trials.39 At the time of the review publication in 2020, only six trials had been published.32, 40-44 Demonstration of clinical benefit from AI-based interventions is needed.45

These alerts were generated by an NLP algorithm that identified candidates for surgery based on free-text neurology notes.31 We previously evaluated this system in a prospective clinical setting. Sensitivity and specificity of the NLP were 80% and 77%, respectively, and the positive predictive value was 25%. Because providers are unlikely to refer patients who do not meet clinical criteria for surgical candidacy, we considered the upper bound for referrals in this trial to be 25%. However, even after receiving an alert, only 10% of patients were referred. Based on the responses from providers, 8% of patients declined the offer to be referred. Some patients with epilepsy, or their families, strongly oppose neurosurgical treatment, even if it is likely to eliminate their seizures.46 In addition, providers chose to defer referrals for 37% of patients. This group is an important future target for earlier intervention.

4.1 Limitations

First, patients were blinded as to whether their providers received an alert, but it was not possible to blind providers to the intervention. Providers who received an alert for one patient may have been more likely to refer their other patients who did not receive an alert. This would have biased the results toward the null hypothesis. Over time, alerts may influence providers to refer more children for a presurgical evaluation earlier in the disease course, so there may come a time when the alerts are no longer needed. It was not possible to assess how the effect of alerts may have changed over this 2-year period because of the limited sample size. Second, we were not able to track when providers viewed the alerts. Alerts were sent on Sunday evenings, as requested by the providers, to allow for adequate time to consider the suggestion prior to a patient's visit. Third, the prospective accuracy of the NLP system was lower than expected.31 This limited the precision of our estimates of effect. There were cases when the NLP model failed to recognize patients who were eligible for surgery (sensitivity was 0.80).31 These patients could still be considered for referral for a presurgical evaluation through standard clinical care pathways. Fourth, only one pediatric hospital was included, and this may limit generalizability of the results, although epilepsy progress notes can be classified across hospitals.47 Finally, we modified the statistical analyses reported here after completion of the trial in two ways: (1) we included referrals that happened any time after the alert was fired, instead of only observing referrals that came at the same visit the alert was sent, and (2) we reported one-sided p values instead of two-sided p values. The study was designed to detect whether alerts increased the rate of referrals, and there is no basis for suspecting that the opposite effect would occur (i.e., alerts should not decrease the rate of referrals). Although one-sided p values were less than .05, two-sided p values would have been greater than .05. To convert our one-sided p values to two-sided p values, readers may multiply the one-sided p values by two.

5 CONCLUSIONS

Machine learning–based automated alerts increased the likelihood that neurology providers referred potential candidates for a presurgical evaluation. As a result, children with drug-resistant epilepsy increased their chances of undergoing surgery and eliminating their seizures. The impact of this intervention could be further improved by enhancing the accuracy of the NLP algorithm. Future studies should include additional epilepsy centers and investigate the impact of pairing provider alerts with patient education materials to further enhance the likelihood that patients agree to pursue their neurologist's recommendation for surgical evaluation.

AUTHOR CONTRIBUTIONS

JWD and JPP: Research project: Conception, Organization, Execution; Statistical Analysis: Review and Critique; Manuscript Preparation: Review and Critique. BDW: Research project: Organization; Statistical Analysis: Design, Execution, Review and Critique; Manuscript Preparation: Writing of the first draft. NZ and RDS: Statistical Analysis: Design, Execution, Review and Critique; Manuscript Preparation: Review and Critique. HMG, KHB, RF, FTM, and TAG: Research project: Execution; Manuscript Preparation: Review and Critique.

ACKNOWLEDGMENTS

This study was funded by a grant from the National Institutes of Health (NIH; AHRQ R21 HS024977-01) and National Institute of Neurological Disorders and Stroke (F31 NS115447). Support for RDS was provided by the National Heart, Lung, and Blood Institute (R01 HL141286). JWD and BDW had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

CONFLICT OF INTEREST STATEMENT

None of the authors have any conflicts of interest to disclose. Drs. Greiner, Glauser, and Pestian report a patent for the identification of surgery candidates using natural language processing (application num. 16/396,835), licensed to Cincinnati Children's Hospital Medical Center.