Attrition in Long-Term Nutrition Research Studies

A Commentary by the European Society for Pediatric Gastroenterology, Hepatology, and Nutrition Early Nutrition Research Working Group

T.S. is an invited guest member of the ESPGHAN Early Nutrition Research Working Group.

The authors report no conflicts of interest.

ABSTRACT

Long-term follow-up of randomised trials and observational studies provides the best evidence presently available to assess long-term effects of nutrition, and such studies are an important component in determining optimal infant feeding practices. Attrition is, however, an almost inevitable occurrence with increasing age at follow-up. There is a common assumption that studies with <80% follow-up rates are invalid or flawed, and this criticism seems to be more frequently applied to follow-up studies involving randomised trials than observational studies. In this article, we explore the basis and evidence for this “80% rule” and discuss the need for greater consensus and clear guidelines for analysing and reporting results in this specific situation.

BACKGROUND

The concept that nutrition during the first 1000 days of life is an important determinant of later health and developmental outcomes is widely accepted, and the potential public health and economic importance of interventions during this period is increasingly highlighted (1). Evidence for later health effects of nutrition in humans can be obtained from mechanistic, observational, and controlled intervention studies. Ultimately, the most persuasive evidence may come from a combination of approaches yielding consistent results. Observational studies have the major strength of generating data relatively rapidly from large cohorts, particularly when outcome data are available from cohorts with data collected early in life. It is, however, recognised that these studies can only show “associations” between early life events and later outcomes and cannot be used to establish causal relations that may underpin interventions. Indeed, historically, the use of epidemiological studies in nutritional research has sometimes led to claims and recommendations which, when tested in randomised trials, could not be confirmed or were even shown to be wrong (2-4).

Randomised clinical trials (RCTs) of nutritional interventions are regarded as the criterion standard for establishing causality and underpinning practice, but such studies are more time consuming and expensive to conduct. Moreover, randomised interventions changing one or more factors in the diet can often lead to unintended or necessary changes in other dietary factors. Therefore, even in RCTs, possible outcome effects may not easily be causally related to the randomised factor(s) in the intervention. Furthermore, although RCTs with long-term follow-up provide the strongest available evidence in practice for effects of early interventions on later outcomes, such later outcomes are rarely the primary outcome measure, and the results could be regarded as hypothesis-generating rather than as establishing causality.

Investigating later effects of nutrition, whether in RCTs or prospective observational studies, necessitates long-term follow-up. Measuring a range of outcomes to identify differential effects relevant in defining risks and benefits of the intervention in different populations may be desirable. This must, however, be weighed against the risk of introducing error through multiple testing of outcomes with selective reporting of significant findings, because this may increase the prevalence of false-positive results in the literature.

It is increasingly recognised that performing long-term follow-up studies is challenging and that, for a number of reasons, cohort attrition (or “loss to follow-up”) is a universal problem. In pharmaceutical or therapeutic trials, participants usually have a medical condition and are highly motivated to remain under medical supervision and perhaps achieve a therapeutic advantage. In contrast, for healthy infants and children whose families volunteer to participate in a nutrition RCT, there is less personal motivation to remain in the study, and the payment of significant incentives is not ethically acceptable for these groups. Thus, the likelihood of loss to follow-up tends to be higher than for drug trials and increases with the duration of follow-up. In 2008, we discussed the issue of attrition in long-term nutrition studies, highlighting the fairly predictable attrition rate with increasing duration and its dependence on factors including the invasiveness and inconvenience of the protocol (5). We considered statistical issues related to attrition and proposed transparent reporting criteria with explicit acknowledgment of attrition and the potential effect on results and conclusions. Our experience, however, is that it remains difficult to publish data from long-term follow-up studies and, in particular, that there is a common assumption that studies with <80% follow-up rates are invalid or flawed; this criticism seems to be more frequently applied to follow-up studies involving randomised trials than observational studies. Here, we explore the basis and evidence for this “80% rule” (or, more broadly, whether cutoffs for what constitutes an acceptable follow-up rate are desirable) and consider further how data from follow-up studies should be analysed, reported, and interpreted.

WHAT CONSTITUTES ATTRITION?

Although, strictly speaking, the term “attrition” covers any study subject not seen for follow-up, there are some important caveats. In RCTs, subjects are sometimes rendered “lost to follow-up” by being excluded if they fail to follow the protocol or stop the study intervention. This is a serious design error and should be avoided to allow a proper intention-to-treat analysis. It is also important to distinguish between true loss to follow-up and administrative censoring, in which incomplete follow-up arises because some subjects have not yet attained a given age or endpoint. Administrative censoring, no matter how large, does not introduce bias and should be excluded from consideration of what constitutes an acceptable follow-up rate. Many statistical methods adequately deal with this type of incompleteness of follow-up (6).
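The distinction between true loss and administrative censoring can be illustrated with a minimal sketch. We assume here, purely for illustration, a Kaplan-Meier type estimate of retention in which true loss to follow-up is the event and administrative censoring is treated as censoring; the function name `kaplan_meier_retention` and the records are hypothetical and do not come from any study.

```python
def kaplan_meier_retention(records):
    """Estimate the proportion retained over time.

    records: list of (time, lost) pairs. lost=True marks true loss to
    follow-up at `time`; lost=False marks a subject administratively
    censored at `time` (for example, not yet old enough for the next visit).
    """
    at_risk = len(records)
    retention = 1.0
    curve = []
    for t in sorted({time for time, _ in records}):
        losses = sum(1 for time, lost in records if time == t and lost)
        leaving = sum(1 for time, _ in records if time == t)
        if losses:
            # Censored subjects at time t still count as at risk at t.
            retention *= 1 - losses / at_risk
            curve.append((t, retention))
        at_risk -= leaving
    return curve


# Hypothetical cohort of 8 subjects; times are years since enrolment.
records = [
    (1, True), (2, False), (2, True), (3, False),
    (4, True), (5, False), (5, False), (5, False),
]
curve = kaplan_meier_retention(records)
for year, retained in curve:
    print(f"year {year}: estimated retention {retained:.3f}")
```

In this hypothetical cohort, simply counting the subjects seen at 5 years would treat the administratively censored subjects as if they had dropped out, whereas the estimate above only counts true losses as attrition.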

CONSEQUENCES OF ATTRITION

The main statistical concern when attrition is present is the possible introduction of bias, because individuals who are followed up are rarely representative of the original cohort. In infant-feeding studies, follow-up rates may be higher for breast-fed than for formula-fed infants (eg, 7); parental education and socioeconomic status are also often different. As previously discussed (5), bias is arguably more problematic when there is attrition in an observational study than in an RCT. For RCTs, the main issue is whether there is “differential” bias in randomised groups, and this can to some extent be examined; for example, by comparing characteristics of subjects retained or lost to follow-up in the different trial arms. Furthermore, although selective attrition can distort the balance of unmeasured confounders, this problem will tend to be smaller in RCTs in which groups are comparable at baseline. In contrast, bias in observational studies is less easily addressed; it is possible to analyse whether measured variables differ in subjects followed versus not followed, but impossible to exclude bias by unmeasured factors.
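A comparison of retained and lost subjects within each trial arm can be sketched as follows. This is only one possible approach, assumed here for illustration: it summarises each arm by its follow-up rate and by a standardised mean difference in a baseline characteristic. The data (years of maternal education) and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def standardised_difference(retained, lost):
    """Standardised mean difference between retained and lost subjects."""
    pooled_sd = sqrt((stdev(retained) ** 2 + stdev(lost) ** 2) / 2)
    return (mean(retained) - mean(lost)) / pooled_sd


def attrition_summary(data):
    """Follow-up rate and retained-versus-lost difference for each arm."""
    summary = {}
    for arm, groups in data.items():
        n_retained = len(groups["retained"])
        n_lost = len(groups["lost"])
        summary[arm] = {
            "follow_up_rate": n_retained / (n_retained + n_lost),
            "smd": standardised_difference(groups["retained"], groups["lost"]),
        }
    return summary


# Hypothetical baseline maternal education (years), by arm and follow-up status.
baseline = {
    "intervention": {"retained": [14, 16, 15, 13, 17, 15], "lost": [12, 11, 13, 12]},
    "control": {"retained": [14, 15, 16, 14, 15, 16], "lost": [12, 13, 11, 12]},
}

summary = attrition_summary(baseline)
for arm, row in summary.items():
    print(f"{arm}: follow-up {row['follow_up_rate']:.0%}, SMD {row['smd']:.2f}")
```

Similar follow-up rates and similar retained-versus-lost differences in both arms would be consistent with non-differential attrition for that measured characteristic; such a check cannot, of course, address unmeasured factors.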

Attrition reduces the power of a study to detect an expected difference in an outcome variable. This is likely to be more problematic for RCTs because they will generally have a smaller sample size than observational studies. Finally, attrition may affect the generalisability of the findings if the cohort followed up is not representative of the baseline population.
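The loss of power can be approximated with a short calculation. The sketch below assumes a two-group comparison of means with equal group sizes and uses a normal approximation to the two-sided test, ignoring the negligible opposite tail; the function names, the effect size, and the follow-up rates are hypothetical choices for illustration.

```python
from math import sqrt
from statistics import NormalDist


def power_two_group(n_per_group, effect_size, alpha=0.05):
    """Approximate power to detect a standardised difference between two means."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    noncentrality = effect_size * sqrt(n_per_group / 2)
    return z.cdf(noncentrality - z_crit)


def power_after_attrition(n_enrolled_per_group, follow_up_rate, effect_size, alpha=0.05):
    """Power when only a fraction of each enrolled group is seen at follow-up."""
    n_followed = int(n_enrolled_per_group * follow_up_rate)
    return power_two_group(n_followed, effect_size, alpha)


# Hypothetical trial: 100 enrolled per group, standardised effect size 0.4.
for rate in (1.0, 0.8, 0.5, 0.3):
    power = power_after_attrition(100, rate, 0.4)
    print(f"follow-up {rate:.0%}: approximate power {power:.2f}")
```

The calculation addresses power only; it says nothing about whether the subjects who remain are representative of those who were lost.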

The “80% Rule”

The concept that a minimum 80% follow-up rate must be achieved to ensure statistical validity seems to have originated from Evidence-Based Medicine: How to Practice and Teach EBM, in which it is often cited but not mathematically justified. It appears to be based on the premise that <5% attrition is unlikely to introduce bias, but >20% is likely to have such an effect, although such a simplistic approach is unlikely to be valid, and not all loss to follow-up is equal (8-10). For example, 5% attrition could have a significant impact on the results if all subjects lost to follow-up share characteristics that differ from those remaining in the study, whereas 20% attrition may not introduce bias if the subjects who remain are representative of those lost; 5% attrition in 1 group in an RCT would be problematic, whereas 20% attrition may not be so problematic if equally spread between groups. Many scientific journals require authors to describe loss to follow-up as a time-dependent phenomenon with a figure based on the Consolidated Standards of Reporting Trials recommendations (11), but it could also be described by showing Kaplan-Meier cumulative incidence curves for the event dropout in different groups.
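The arithmetic behind this argument can be sketched in a few lines. The sketch is ours for illustration and is not taken from the cited references: it assumes the outcome is a mean, and that the mean in the full cohort is the attrition-weighted average of the means in retained and lost subjects. The function names and all numbers are hypothetical.

```python
def complete_case_bias(attrition, mean_retained, mean_lost):
    """Bias of the mean seen in retained subjects relative to the full cohort."""
    full_cohort_mean = (1 - attrition) * mean_retained + attrition * mean_lost
    return mean_retained - full_cohort_mean


def bias_in_group_difference(arm_a, arm_b):
    """Bias in the observed difference between two trial arms (A minus B)."""
    return complete_case_bias(**arm_a) - complete_case_bias(**arm_b)


# Scenario 1 (hypothetical): 5% attrition in one arm only, and the lost
# subjects differ markedly from those retained.
selective_5 = bias_in_group_difference(
    {"attrition": 0.05, "mean_retained": 100, "mean_lost": 80},
    {"attrition": 0.00, "mean_retained": 100, "mean_lost": 100},
)

# Scenario 2 (hypothetical): 20% attrition in both arms, with lost subjects
# differing from retained subjects in the same way in each arm.
balanced_20 = bias_in_group_difference(
    {"attrition": 0.20, "mean_retained": 100, "mean_lost": 90},
    {"attrition": 0.20, "mean_retained": 100, "mean_lost": 90},
)

print(f"bias with selective 5% attrition: {selective_5:.2f}")
print(f"bias with balanced 20% attrition: {balanced_20:.2f}")
```

Under these assumptions the bias in each arm is the attrition rate multiplied by the difference between retained and lost subjects, so the amount of attrition alone does not determine the bias in the comparison between groups.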

More important, most references to the need for 80% minimum follow-up are in the context of a relatively short period of follow-up (eg, weeks or months) and generally refer to the primary endpoint of an intervention trial. Many such studies are drug trials, where it is feasible and advantageous to achieve at least 80% follow-up for the primary outcome to accurately assess therapeutic response and health economic impact, as well as detecting side effects. If the outcome studied is, however, the physiological response to diet or effects on child growth, it may be less important to achieve 80% follow-up as long as attrition does not induce bias. We have found no discussion in the literature of longer-term follow-up studies, which would be more relevant to our field. A report on attrition and missing data, written by a group of eminent clinical trial statisticians (12), makes no reference to any particular cutoff for attrition; instead the emphasis is first on preventing attrition by careful study design, and then on investigating the impact of attrition on a study-by-study basis, and considering the statistical handling of missing data and methods for imputation.

Attrition in RCTs and Observational Studies

There appears to be a perception that attrition is more problematic for RCTs than for observational studies, which is surprising to us. For example, in the 10-year follow-up of a randomised trial in which 30% of the original cohort were studied, the manuscript was not accepted when analysed by original randomised group with an explanation of the likely impact of attrition on power and effect size, but it was accepted with little comment when presented as an observational analysis with a “post-hoc” comparison of diet groups (13). This seems illogical because it could be shown that there was no evidence of differential bias in the randomised groups at follow-up. For a given attrition rate, the evidence from a smaller randomised trial is frequently misinterpreted as weaker than the evidence from a larger observational study; however, there is no mathematical model to support this perception.

ANALYSING DATA FROM STUDIES WITH ATTRITION

Subject characteristics collected at baseline or during follow-up may be simultaneously associated with loss to follow-up and study outcome measures; these are potential confounders and can cause bias in the observed effects. A number of statistical methods can be used to correct for such variables. For instance, for repeatedly measured endpoints, (generalised) linear mixed models or weighted generalised estimating equations could be used. Alternatively, multiple imputation could be used, possibly in conjunction with the other methods. These methods can also deal with the situation wherein previous outcomes may be associated with later dropout. If the endpoint is the occurrence of an event, survival analysis could be used with inverse probability of censoring weighting to deal with informative censoring. There may, however, be confounders that were not measured, and various sensitivity analyses (12) can be performed to assess the potential impact of such factors, or how strong an unmeasured confounder would have to have been to remove or reverse the observed effect (eg, 14). In its simplest terms, this could estimate how different the result would have to be in subjects not seen at follow-up to negate the difference between the groups observed in those who were studied.
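The simplest form of such a sensitivity analysis can be sketched as a "tipping point" calculation. The sketch below is a deliberately simplified illustration and not one of the methods described in the cited references: it assumes the outcome is a mean and that the follow-up rate is the same in both groups, so that the difference in the full cohort is the weighted average of the differences in seen and unseen subjects. The function names and numbers are hypothetical.

```python
def full_cohort_difference(observed_difference, unseen_difference, follow_up_rate):
    """Group difference in the full cohort, given the differences among
    subjects seen and not seen at follow-up (equal follow-up rate per group)."""
    return (follow_up_rate * observed_difference
            + (1 - follow_up_rate) * unseen_difference)


def tipping_point_difference(observed_difference, follow_up_rate):
    """Difference among unseen subjects that would exactly cancel the
    difference observed in those who were studied."""
    if not 0 < follow_up_rate < 1:
        raise ValueError("follow_up_rate must lie strictly between 0 and 1")
    return -follow_up_rate * observed_difference / (1 - follow_up_rate)


# Hypothetical observed difference of 5 units between groups.
for rate in (0.8, 0.5, 0.3):
    tip = tipping_point_difference(5.0, rate)
    print(f"follow-up {rate:.0%}: unseen difference needed to negate = {tip:.2f}")
```

Whether a difference of that size and direction among unseen subjects is plausible is a matter of judgement for the specific study, and can be discussed alongside the results.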

PROPOSAL FOR REPORTING AND DISCUSSING STUDIES AFFECTED BY ATTRITION

Despite best efforts to avoid and minimise attrition, present experience suggests that it is to some extent inevitable in long-term follow-up studies. Nevertheless, these studies provide the best evidence we can generate to assess the long-term effects of nutrition and are an important component in determining optimal infant-feeding practices. We suggest that greater consensus and clear guidelines are required for reporting results, covering the main statistical issues.

Present checklists for reporting observational and RCT data include some reference to loss to follow-up, although specific guidance is not given. Thus, the STROBE statement (Strengthening the Reporting of Observational studies in Epidemiology) states “if applicable, explain how loss to follow-up was addressed” (11), but without specific guidance on how this should be done. The Consolidated Standards of Reporting Trials checklist for reporting RCTs requires a clear description of the number of subjects with outcomes measured at each point and reasons for dropouts but does not provide specific guidance on reporting potential effects of attrition on reported outcomes (15, 16). The Cochrane Collaboration's tool for assessing risk of bias in systematic reviews (17) includes criteria for judging whether the risk of bias from the amount, nature or handling of incomplete data in a trial is “low,” “high,” or “unclear” (section 8.5d); interestingly, the criteria focus on the potential for the introduction of bias rather than on the amount of attrition per se.

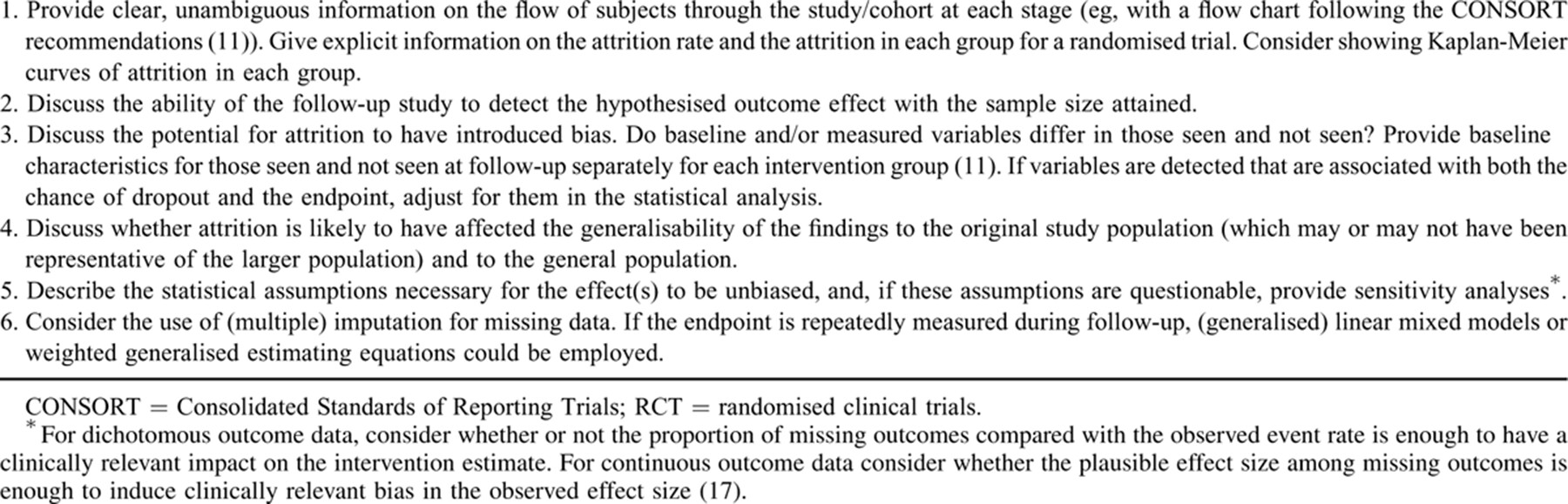

We suggest that it would be beneficial to provide more explicit guidance for reporting the effects of attrition in long-term RCTs and cohort studies to provide greater consistency and transparency, and to facilitate the assessment of studies considered for inclusion in systematic reviews or meta-analyses (Table 1) (11, 17).