Formulating causal questions and principled statistical answers

Funding information: Fonds de recherche du Québec, Santé, Chercheur-boursier senior career award; Lorentz Center Leiden, Natural Sciences and Engineering Research Council (NSERC) of Canada, Discovery Grant #RGPIN-2014-05776; UK Medical Research Council Grant, MR/R025215/1; Vetenskapsrådet, 2016-00703

Abstract

Although review papers on causal inference methods are now available, there is a lack of introductory overviews on what they can render and on the guiding criteria for choosing one particular method. This tutorial gives an overview in situations where an exposure of interest is set at a chosen baseline (“point exposure”) and the target outcome arises at a later time point. We first phrase relevant causal questions and make a case for being specific about the possible exposure levels involved and the populations for which the question is relevant. Using the potential outcomes framework, we describe principled definitions of causal effects and of estimation approaches classified according to whether they invoke the no unmeasured confounding assumption (including outcome regression and propensity score-based methods) or an instrumental variable with added assumptions. We mainly focus on continuous outcomes and causal average treatment effects. We discuss interpretation, challenges, and potential pitfalls and illustrate application using a “simulation learner,” that mimics the effect of various breastfeeding interventions on a child's later development. This involves a typical simulation component with generated exposure, covariate, and outcome data inspired by a randomized intervention study. The simulation learner further generates various (linked) exposure types with a set of possible values per observation unit, from which observed as well as potential outcome data are generated. It thus provides true values of several causal effects. R code for data generation and analysis is available on www.ofcaus.org, where SAS and Stata code for analysis is also provided.

1 INTRODUCTION

The literature on causal inference methods and their applications is expanding at an extraordinary rate. In the field of health research, this is fuelled by opportunities found in the rise of electronic health records and the revived aims of evidence-based precision medicine. One wishes to learn from rich data sources how different exposure (or treatment) levels causally affect expected outcomes in specific population strata so as to inform treatment decisions. Neither the mere abundance of data nor the use of a more flexible model paves the road from association to causation.

Experimental studies have the great advantage that treatment assignment is randomized. A simple comparison of outcomes on different randomized arms then yields an intention-to-treat effect as a robust causal effect measure. However, nonexperimental or observational data remain necessary for several reasons. (1) Randomized controlled trials (RCTs) with experimental treatments tend to be conducted in rather selected populations, where the targeted effect is expected to be larger, while groups vulnerable to side effects, such as children or older patients with comorbidities, are often excluded. Informed consent procedures may also lead to restricted trial populations. (2) We may seek to learn about the effect of treatments actually received in these trials, beyond the pragmatic effect of treatment assigned. This calls for an exploration of compliance with the assignment and hence for follow-up exposure data, that is, nonrandomized components of treatment received. (3) In many situations (treatment) decisions need to be taken in the absence of RCT evidence. (4) A wealth of patient data is being gathered in disease registries and other electronic patient records; these often contain more variables, larger sample sizes, and greater population coverage than an RCT. These needs and opportunities push scientists to seek causal answers in observational settings with larger and less selective populations, with longer follow-up, and with a wider range of exposures and outcome types (including quality of life and adverse events).

Statistical causal inference has made great progress over the last quarter century, deriving new estimators for well-defined estimands using new tools such as directed acyclic graphs (DAGs) and structural models for potential outcomes.1-3 However, research papers—both theoretical and applied—tend to select an analysis method without formalizing a clear causal question first, and often describe published conclusions in vague causal terms missing a clear specification of the target of estimation. Typically, when this is specified, that is, there is a well-defined estimand, a range of techniques can yield (asymptotically) unbiased answers under a specific set of assumptions. Several overview papers and tutorials have been published in this field. They are mostly focused, however, on the properties of one particular technique without addressing the topic in its generality. Yet in our experience, much confusion still exists about what exactly is being estimated, for what purpose, by which technique, and under what plausible assumptions. Here, we aim to start from the beginning, considering the most commonly defined causal estimands, the assumptions needed to interpret them meaningfully for various specifications of the exposure variable, and the levels at which we might intervene to achieve different outcomes. In this way, we offer guidance on understanding what questions can be answered using various principled estimation approaches while invoking sensibly structured assumptions.

We illustrate concepts and techniques referring to a case study exemplified by simulated data, inspired by the Promotion of Breastfeeding Intervention Trial (PROBIT),4 a large RCT in which mother-infants pairs across 31 Belarusian maternity hospitals were randomized to receive either standard care or an offer to follow a breastfeeding encouragement program. Aims of the study were to investigate the effect of the program and breastfeeding on a child's later development. We generated simulated data to examine weight achieved at age 3 months as the outcome of interest in relation to a set of exposures defined starting from the intervention and several of its downstream factors. Although our motivating data stem from an RCT, the study also exemplifies questions faced in observational studies when considering downstream exposures, such as adherence to the program or starting breastfeeding. This happens because their relationship with the outcome is confounded by other variables. Our simulation goes beyond mimicking the “observed world” by also simulating for every study participant how different exposures strategies would lead to different potential responses. We call this the simulation learner PROBITsim and refer to the setting as the breastfeeding encouragement program (BEP) example.

Our aim here is to give practical statisticians a compact but principled and rigorous operational basis for applied causal inference for the effect of point (ie, baseline) exposures in a prospective study. We build up concepts, terminology, and notation to express the question of interest and define the targeted causal parameter. We will primarily focus on continuous outcomes where average treatment effects are of interest, although many of the concepts we discuss are valid in general. In Section 2, we lay out the steps to take when conducting this inference, referring to key elements of the data structure and various levels of possible exposure to treatment. Sections 2 also presents the potential outcomes framework with underlying assumptions and formalizes causal effects of interest. In Section 3, we describe PROBITsim, our simulation learner. We then outline various estimation approaches under the no unmeasured confounding assumption and under the instrumental variable assumption in Section 4. We explain how the approaches can be implemented for different types of exposures, and apply the methods in the simulation learner in Section 5. We end with an overview that highlights overlap and specificity of the methods as well as their performance in the context of PROBITsim, and more generally. R code for data generation, R, SAS, and STATA code for analysis, and slides that accompany this material and apply the methods to a second case study are available on www.ofcaus.org and the linked GitHub depository https://github.com/IngWae/Formulating-causal-questions.5

2 FROM SCIENTIFIC QUESTIONS TO CAUSAL PARAMETERS

Causal questions ask what would happen to outcome Y, had exposure A been different from what is observed. To formalize this, we will use the concept of potential outcomes6, 7 that captures the thought process of setting the treatment to values a set of possible treatment values, without changing any preexisting covariates or characteristics of the individual. Let be the potential outcome that would occur if the exposure were set to take the value a, with notation indicating the action of setting A to a. This definition is equivalent to Pearl's do operator, whereby the distribution f of Y when A is set to is denoted by .1 In what follows we will refer to A as either an “exposure” or a “treatment” interchangeably. Since individual-level causal effects can never be observed, we focus on expected causal contrasts in certain populations. In the BEP example there are several linked definitions of treatment; these include “offering a BEP,” “following a BEP,” starting breastfeeding, or “following breastfeeding for 3 full months.” Each of them may require a decision of switching the treatment on or off. Ideally this decision is informed by what outcome to expect following either choice.

It is important that causal contrasts should reflect the research context. Hence in this example one could be interested in evaluating the effectiveness of the program for the total population or in certain subpopulations. However, for some subpopulations the intervention may not be suitable and thus assessing causal effects in such subpopulations would not be useful.

- 1. Define the treatment and its relevant levels/values corresponding to the scientific question of the study.

- 2. Define the outcome that corresponds to the scientific question(s) under study.

- 3. Define the population(s) of interest.

- 4. Formalize the potential outcomes, one for each level of the treatment that the study population could have possibly experienced.

- 5. Specify the target causal effect in terms of a parameter, that is, the estimand, as a (summary) contrast between the potential outcome distributions.

- 6. State the assumptions validating the causal effect estimation from the available data.

- 7. Estimate the target causal effect.

- 8. Evaluate the validity of the assumptions and perform sensitivity analyses as needed.

Explicitly formulating the decision problem one aims to solve or the hypothetical target trial one would ideally like to conduct8 may guide the steps outlined above. In the following we expand on steps 1-5 before introducing the simulation learner in Section 3 and discussing steps 6-8 in Section 4.

2.1 Treatments

Opinions in the causal inference literature differ on how broad the definition of “treatment” may be. Some say that the treatment should be manipulable, like giving a drug or providing a breastfeeding encouragement program.9 Here, we take a more liberal position which would also include for example genetic factors or even (biological) sex as treatments. Whichever the philosophy, considered levels of the treatments to be compared need a clear definition, as discussed below.10

Treatment definitions are by necessity driven by the context in which the study is conducted and the available data. The causal target may thus differ for a policy implementation or a new drug registration, for instance, or whether the data are from an RCT or administrative data. In the BEP example we may wish to define the causal effect of a breastfeeding intervention on the babies' weight at 3 months.

- A1: (randomized) treatment prescription, for example, an encouragement program was offered to pregnant women.

- A2: uptake of the intervention, for example, the woman participated in the program (when offered), which may include talking to a lactation consultant, reading brochures on breastfeeding.

- A3: uptake of the target of intervention, for example, the mother started breastfeeding.

- A4: completion of the target of intervention, for example,the mother started breastfeeding and continued for 3 months.

Each of these treatment definitions Ak, k = 1, … , 4, refers to a particular breastfeeding event taking place (or not). A public health authority will be more interested in A1 because it can only decide to offer the BEP or not; an individual mother's interest will be in the effect of A2, A3, and A4 because she decides whether to participate in the program, to start, and to maintain breastfeeding. For any one, several possible causal contrasts may be of interest and are estimable. See Section 2.6.

It is worth noting that these various definitions are not all clear-cut. For example, while A4 = 1 may be most specific in what it indicates, A4 = 0 represents a whole range of durations of breastfeeding: from “none” to “almost 3 months.” In the same vein, A3 = 1 represents a range of breastfeeding durations that follow initiation, against A3 = 0 which implies no breastfeeding at all. The variation in underlying levels of treatment could be seen as multiple versions of the treatment; we consider this topic further in Section 3.2.

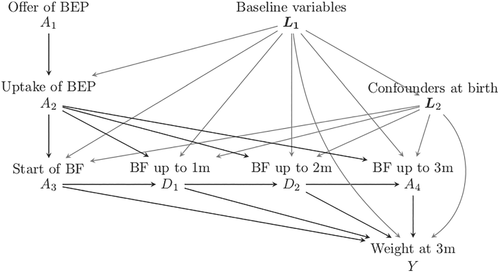

Intervening at a certain stage in the “exposure chain” likely affects downstream exposure levels, as reflected in Figure 1. This is the setup we have used to generate the simulation learner data set (see Section 3), with the BEP being only available to those randomized, and where uptake of the program increases the probability of A3 = 1 and, importantly, also increases breastfeeding duration among women who initiate breastfeeding. There are of course many further aspects of the breastfeeding process that could be considered when defining exposures that are downstream from an initial randomized intervention, for example, maternal diet, the timing and frequency of breastfeeding, exclusive vs predominant breastfeeding, and so on; however for didactic purposes, we shall omit such considerations.

2.2 Outcomes

Similar to the definition of the treatment, it is important to carefully define the outcome Y. In the BEP example, the outcome of interest could be the infant's weight at 3 months, or the increment between birth weight and weight at 3 months or whether the infant is above a certain weight at 3 months. Typically, the distribution of both the absolute weight and weight gain are of interest: a BEP may well increase mean weight at 3 months by 200 g but also increase the number of overweight infants. Clarity of which outcome definition corresponds to the question of interest is therefore crucial.

2.3 Populations and subpopulations

A causal effect will in most cases vary across subgroups due to its dependence on baseline characteristics (effect modification). One may then be interested in the causal effects in several relevant subpopulations. It is therefore important to identify and describe the (sub)population to whom a stated effect pertains. Researchers and policy-makers might want to study whether the breastfeeding intervention is substantially more effective for infants of less educated women who may be at highest risk of being born low weight. Alternatively they could be interested in the effect of treatment in the subpopulation of those who are actually exposed (the “treated,” as discussed above). The definition of these subpopulations involves conditioning on certain characteristics (respectively, education level and treatment received) and leads to focusing on conditional effects (see Section 4.1).

In the next section we will develop causal effects for the different subpopulations. In most settings we want to consider populations of individuals who have the possibility of receiving all treatment levels of interest. This restriction is referred to as the positivity assumption.11 It could be violated, for example, if the target population included women for whom breastfeeding is precluded (because of preexisting or pregnancy-related conditions). Studying the effect of breastfeeding in the subpopulation of infants whose mothers cannot breastfeed (or indeed a larger population that includes this subgroup) may be impossible due to missing information—and indeed irrelevant.

2.4 Potential outcomes

As stated above, a potential outcome is the outcome we would observe if an exposure were set at a certain level a, where indicates the action of setting A to a. This notion needs some additional considerations linking it to the treatments and outcomes definitions given above. Specifically there are two commonly invoked assumptions that help achieve this: no interference and causal consistency.

2.4.1 No interference

No interference means that the impact of treatment on the outcome of individual i is not altered by other individuals being exposed or not. At first sight this is likely justified in our setting: one baby's weight typically does not change because another baby is being breastfed. In resource poor or closely confined settings this could, however, be challenged. For instance, interference would happen when a child is affected by the consequences of a reduced immune system of other children who were not breastfed and hence becomes more susceptible to infectious diseases which may impact their weight at 3 months.

When the assumption of no interference is not met, the potential outcome definition becomes much more complex and involves the treatment assigned to other individuals.12 For example, if there were interference among infants living in the same household, the potential outcome of infant i would be defined not as but as , where infants i1 to belong to the same household as infant i and their breastfeeding status is set to take values (a∗, … , a†).

2.4.2 Causal consistency

The assumption of causal consistency relates the observed outcome to the potential outcomes. Consistency (at an individual level) means that when A = a, hence assuming consistency implies that the observed outcome in our data is the same as the potential outcome that would be realized in response to setting the treatment to the level of the exposure that was observed. This directly affects our interpretation of the estimated causal effect for the study population. It will also affect transportability to new settings in ways that may be hard to predict.

In practice this implies that the mode of receiving as opposed to choosing treatment level A = a per se has no impact on outcome. This may not be the case for many real-life settings. For example “starting breastfeeding” (A3 = 1) potentially has multiple versions as some mothers who initiate breastfeeding may continue to do so for at least 3 months, while others may discontinue sooner. Also, breastfeeding may be exclusive or supplemented, breast milk may be fed at the breast or with a bottle, and so on. Hence it is to be expected that setting A3 to be 1 may translate into different durations and types of breastfeeding, and thus may not lead to the same infant weight at 3 months as when starting breastfeeding is a choice. More generally, it is typically the case that a treatment can come in many variations at some level of resolution. To achieve consistency then a more precise definition of treatment is required, so that observing or setting it is more likely to generate comparable effects. When there are multiple versions of a treatment, one should be aware that the estimated effect averages over the mix of the different versions that occur in the data. To go beyond this and evaluate the effect of different components or different mixes thereof typically demands more assumptions and adapted data analysis. For further discussion see References 13 and 14.

These observations relate to the importance of a well-defined exposure15 and the need to be as precise as the data allow in our definition of treatment.16 Some authors have criticized the restriction imposed by this assumption (and hence by the potential outcomes approach to causal inference10). Being aware of the possibility of multiple versions of treatment should not deter us from pursuing the most relevant definition of treatment: instead it should lead us to greater precision and transparency in formulating the causal question and its transportability.

Note also that the assumption of consistency may be relaxed by rephrasing it at the distributional level (possibly conditional on baseline covariates), in the sense that consistency would concern, for example, the equality of the mean observed outcome of those with observed values A = a and the mean potential outcome had their treatment been set to a. Following this broader definition, any causal interpretation would be applicable only to settings where the distribution of the different versions of treatment equaled that in the analyzed sample.

2.5 Nested potential outcomes

The treatments considered here belong to a chain of exposures: when A1 is set, it has consequences for the “worlds” where A2, A3, and A4 act. Correspondingly, when A3 is set, A1, A2 become baseline covariates with consequences for the worlds that follow (see Figure 1). For example, in a world where a breastfeeding program is available (A1 is set to 1), starting breastfeeding (A3) may have a larger impact on weight at 3 months, because women who breastfeed having followed BEP may be more aware of the beneficial effects of breastfeeding and therefore continue breastfeeding for a longer period (see the paths from A2 to Y via D1, D2, and A4 in Figure 1). Although this article does not enter into the full framework of estimation for dynamic treatment strategies, we can benefit from additional definitions of potential outcomes that recognize the nested nature of the interventions.

Below we define worlds where setting A2 and A3 occurs under alternative scenarios that depend on how A1 was set (and, for A3, how A1 and/or A2 was set). These will be useful for the discussion in Section 2.6.

In the world where BEP is on offer to all (ie, when is set for everyone in the population), the potential outcomes of participating or not participating in the BEP are defined as and . Similarly in the world where BEP is not offered, we may consider the potential outcome of not participating in the BEP defined as . In our example we assumed that the program was only available to the intervention group (ie, is not defined), and that the intervention would only affect outcome if the program was actually followed (ie, ). (In other settings it is conceivable that the mere invitation to BEP comes with advice that may have a direct impact on outcome under ). Setting , here implies that A1 is set to 1; setting can, in the BEP example, happen independently of how A1 is set. The corresponding potential outcomes are therefore denoted by and ).

Similarly, when interest is in the causal effect of A3, the potential outcomes of starting or not starting breastfeeding in the world with BEP on offer are and , and in the world without BEP, they are and . We deliberately omitted setting/fixing the possible level here, because we let it follow the natural course after setting , meaning that women may or may not choose to follow the BEP, after receiving the offer. The effect of breastfeeding in the world where the BEP is offered, may differ from the effect when the BEP is not available, as the BEP may not only affect the probability to start breastfeeding, but also the duration of breastfeeding for those who start.

One could be tempted to evaluate in the study context, using all available data and ignoring A1 and hence effectively averaging over the observed A1, where, by experimental design, for half of the individuals treatment is available and for half it is not. Such a distribution of BEP offer is, however, not a realistic future scenario, and hence this particular average effect measure is usually of no direct relevance.

The effect of breastfeeding may be even larger in the world where all women follow the program (ie, is set, implying also as we assume BEP cannot be followed unless it is offered). Here the potential outcomes of starting breastfeeding or not are and . In the BEP example we assumed that the outcome, when not starting breastfeeding, did not depend on the offer of BEP (ie, there is no path from A1 to Y that does not involve A3). This means that = = , and we can use the simplified notation . Similarly we assumed that the outcome of completing 3 months of breastfeeding, , was independent of the values at which A1 and A2 were set (ie, there are no paths from A1 and A2 to Y that do not involve A4, hence this simplified notation, knowing that A3 is per definition 1 if A4 =1). Table 2 thus lists a selection of the potential outcomes that are relevant to the BEF example.

| Estimand | Definition |

|---|---|

| Effect of program offer () | |

| Average treatment effect | |

| Effect of program uptake () | |

| ATE2 | Average treatment effect |

| ATT2 | Average treatment effect among the treatedb |

| ATNT2 | Average treatment effect among the nontreatedb |

- aIntention-to-treat.

- bNote that the ATT and ATNT for can only be derived from the (random) subgroup A1 = 1 since the program is only available within the randomized trial and to those assigned to it being offered.

| Potential | A1 = 1 | A1 = 1 | A1 = 1 | A1 = 0 | A1 = 0 | Education | |||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| outcome | Interventions | Overall | A2 = 1 | A2 = 0 | A3 = 1 | A3 = 0 | A3 = 1 | A3 = 0 | Low | Int | High |

| BEP not offered | 6017 | 6047 | 5964 | 6149 | 5733 | 6274 | 5761 | 5914 | 6057 | 6141 | |

| BEP offered | 6115 | 6200 | 5964 | 6292 | 5733 | 6308 | 5923 | 6024 | 6155 | 6207 | |

| BEP not followed | 6017 | 6047 | 5964 | 6149 | 5733 | 6274 | 5761 | 5914 | 6057 | 6141 | |

| BEP followed | 6182 | 6200 | 6149 | 6308 | 5911 | 6329 | 6035 | 6128 | 6208 | 6226 | |

| No BF | 5827 | 5849 | 5788 | 5871 | 5733 | 5893 | 5761 | 5730 | 5854 | 5981 | |

| BEP not offered, BF started | 6214 | 6226 | 6193 | 6251 | 6133 | 6274 | 6153 | 6154 | 6248 | 6246 | |

| BEP offered, BF started | 6249 | 6282 | 6193 | 6292 | 6157 | 6308 | 6191 | 6207 | 6276 | 6262 | |

| BEP followed, BF started | 6277 | 6282 | 6270 | 6308 | 6212 | 6329 | 6225 | 6261 | 6292 | 6266 | |

| Duration BF = 3 months | 6351 | 6345 | 6362 | 6372 | 6307 | 6392 | 6311 | 6393 | 6339 | 6286 | |

- Abbreviations: BEP, breastfeeding encouragement program; BF, breastfeeding; int: intermediate.

- A2 = 1: women who followed the breastfeeding program.

- A2 = 0 and A1 = 1: women who were offered the breastfeeding program but did not follow it

- A3 = 1 and A1 = 1: women who started breastfeeding in the intervention group.

- A3 = 1 and A1 = 0: women who started breastfeeding in the control group.

- A3 = 0 and A1 = 1: women who did not start breastfeeding in the intervention group.

- A3 = 0 and A1 = 0: women who did not start breastfeeding in the control group.

- and : the potential outcome that would occur if randomization A1 were set to take the value 1, 0, respectively.

- and : the potential outcome that would occur if A2 were set to 1 (which implies that A1 is set to 1) or 0. We assumed that the effect of does not depend on whether BEP was available; A1 was set to 1 or 0.

- : the potential outcome under no breastfeeding.

- : The potential outcome under a double intervention with A1 set to 0 and A3 set to 1. Similar for .

- , the effect of completing 3 months of breastfeeding.

- Results for and are equal, because BEP only affects the outcome if the program is followed.

- Results for do not depend on whether A1 or A2 were set to 1 or 0 because BEP only affects Y via A3 and duration of breastfeeding, if started. Hence (). The effect of full 3 months of breastfeeding is not affected by BEP.

2.6 Causal parameters

The next step is to contrast potential outcomes under different settings of exposure variables. We do so by defining an estimand in a well-defined (sub)population. Individual causal effects cannot be computed since each individual can only be assigned to one treatment at a time as, via consistency, one and only one potential outcome can be observed. However, population summary measures can be estimated (under additional assumptions to be discussed below) for different groups, such as the total population or the subpopulation of treated (or untreated) individuals. Also, causal effects can be defined on different scales. In this article we focus on the mean difference as the contrast of interest.

Table 1 describes a selection of causal parameters for exposures A1 and A2. The first estimand for A1 listed in the table is the average treatment effect in the population (ATE1) and corresponds to the question “What would the average infant weight be at 3 months had all mothers been offered the BEP, vs the average infant weight had the mothers not been offered the program?” It is defined as , which is equal to the intention to treat effect (ITT) of the randomized trial.

There are several possible contrasts involving uptake of the intervention A2. We could target the causal question “What would the average infant weight be at 3 months had all mothers attended the BEP, vs the average infant weight had none of the mothers attended the program?” over the whole infant population, leading to . We might also consider this effect only within the population of women who chose to accept the offer and did attend the BEP. The latter would be the ATT. Because in our example the BEP is only available to those who are offered it, the treated population are those with A2 = 1 and A1 = 1; see Table 1. The effect in the population, ATE2 would be of overall interest to the developers of the BEP, as would the average treatment effect in the nontreated (ATNT2) because the latter would quantify the gain to be expected from a more convincing promotion campaign for the current program with larger attendance, that is, a greater P(A2 = 1|A1 = 1). By contrast, ATT2 might be of greater interests to mothers following BEP, as this would provide a measure of the expected benefit from their own uptake of the BEP offer.

Furthermore, causal effects may be heterogeneous across observable strata, for instance if the breastfeeding treatment has different causal effects depending on the education level of the mother. Thus causal effects specific to baseline subgroups would be of interest, for example, the average causal effect among those with low education could be compared with the average causal effect among those with high education. We can also define a causal effect conditional on multiple characteristics such as the expected causal effect of the program in the group of 30-year-old smoking mothers with a child born by caesarian section.

3 THE SIMULATION LEARNER

To illustrate concepts and support our learning, we generated data inspired by a real investigation but enriched by the generation of potential outcome data in addition to “observed” data. We took our inspiration from the Promotion of Breastfeeding Intervention Trial4 (PROBIT). PROBIT randomized mother-infant pairs in clusters to receive either standard care or a breastfeeding encouragement intervention. Unlike the main trial, our simulation randomized individual mother-infant pairs and focused on weight achieved at age 3 months, in a study population of babies surviving the first 3 months. Our simulation learner is therefore not a close replication of PROBIT, as we sought to highlight complexities that were not addressed in the original trial. Our aim was to discuss four (linked) definitions of treatment, for which different causal effects (ATE, ATT, etc) pertaining to corresponding treatment decisions would be of interest. This was achieved by generating realistic confounding patterns and interactions, the latter between some of the confounders and duration of breastfeeding. The confounders considered are depicted in Figure 1. In Appendix 1 (supplementary material) one can read how the mother's level of education and smoking status, just like the infant's birthweight was made to interact with breastfeeding duration to arrive at the causal effects on the expected weight at 3 months. Thus there is no direct relationship between the trial results and the causal estimates obtained from the simulated data (see Appendix 1 for more details).

3.1 Generating the variables

Figure 1 outlines the main relationships among the simulated variables. The baseline variables L1 were mother's age, location of living (urban vs rural and western versus eastern region), level of education (low, intermediate, high), maternal history of allergy, and smoking during pregnancy. The variables related to the infant's birth L2 were sex of child, birth weight, and birth by caesarian section. Thus, L1 are confounders of the relationship between A2 and Y, and (L1, L2) are confounders of the relationship between A3 and Y. The distribution of these variables was made to resemble that of the PROBIT study and the sample size n was set to 17 044, as in that study. Details of the data generation process can be found in Appendix 1 and in the material available at www.ofcaus.org; an overview is given below.

The offer of the program (A1) was assigned randomly, but the uptake of program (A2), starting breastfeeding (A3), and the duration of breastfeeding (A4) were all affected by variables at baseline (L1) or at birth (L2), with their union denoted by the vector L. We made the simplifying assumptions that L2 were unaffected by the program offer, that the program was only available to women in the intervention group, and that the intervention would only affect outcome if the program was actually followed. The odds of following the program after receiving an offer was assumed to depend on maternal age, education, and smoking during pregnancy, such that older and more highly educated women had a higher probability of following the program, while smokers were less likely to do so.

Following the program, that is, A2 = 1, was set to influence weight at 3 months in two ways: it increased the probability of starting breastfeeding, and increased the duration of breastfeeding if started. Older and more highly educated women and women who did not smoke during pregnancy were more likely to start breastfeeding, while having a child with lower birth weight or a baby girl decreased the probability of starting breastfeeding. The uptake of the program, higher age, higher education, not smoking, a higher birth weight, and maternal allergies were set to increase the total duration of breastfeeding, while delivery by caesarian or a having baby boy to lower it. The outcome (weight at 3 months) was set to be affected by the duration of breastfeeding and by the baseline and birth variables, some of which (smoking, education and birth weight) also modified the effect of breastfeeding.

For each woman in the simulated data set, we observed realized values of A1, A2, A3, and A4 and of the weight of the child after 3 months. In addition, several potential outcomes were generated representing the potential weight at 3 months of the child under different interventions on A1, A2, A3, and A4. This means that in our data set, for each woman the potential weight of her child at 3 months is known under different scenarios: if she had received the offer for the BEP, if she had not received the offer, if she had followed the program, if she had or had not started breastfeeding, and if she had continued breastfeeding for 3 months. Our simulations generated correlated potential outcomes, but the causal parameters introduced so far are not affected by this. We see this as an advantage since there is an intrinsic lack of information on the joint distribution of the potential outcomes in observed data. Table 2 gives the expected value of the different potential outcomes overall and in specific strata (subpopulations). These values were obtained from a very large simulated data set of five million observations and are here considered to represent the truth.

3.2 Different causal contrasts

From Table 2 we can derive several true causal contrasts. For example the average treatment effect (ATE) of the BEP offer is g. This effect may be of interest to policy makers as it is the overall mean change in infant weight at 3 months due to inviting expectant women to attend the BEP. Comparing the scenario where everyone actually receives the offer and follows the BEP with no program, the expected weight gain is g. Among women who actually follow the program (the treated), the effect of BEP uptake is = 153 g. The effect of participating in the BEP among women who have the opportunity to follow it but opt not to, is = 185 g. ATNT2 is larger than ATT2 because women who would benefit most from the BEP were, in our simulated data set, less inclined to follow it.

In this tutorial, we are treating A1, A2, A3, and A4 as point exposures, that is, as exposures to be examined separately, with any previous exposures in the chain treated as background variables. In other words, for each targeted treatment, we consider the time point at which it is implemented. We then ask about the impact of setting this treatment to a given value, conditional on background information. In the setting of our study: when A3, the decision to start breastfeeding is implemented, the values of A1 and A2 are already known and the baby has been born. The set of information carried by A1 and A2 could be treated as baseline information, like L, conditional on which the effect of starting breastfeeding is measured.

Alternatively, we could consider the joint impact of multiple interventions. Using the nested potential outcomes notation introduced in Section 2.5, we could address the question “What would the average infant weight at 3 months be, had all mothers started breastfeeding vs the average infant weight had they not started at all?” under different worlds where A1 and A2 are set to take different values. In the world without BEP, the answer would be = 387 g. In the world where the BEP is offered, the gain in weight at 3 months would be substantially higher: g. The weight gain in the world where everyone followed the program would be g. This is the largest effect because, in the simulation, BEP increases the mean duration of breastfeeding. In general there are greater average potential outcomes with increased intensity of the joint interventions.

The average treatment effect in the treated (with respect to A3) also differs between randomization worlds because more women among those randomized to receive the BEP will start breastfeeding than in the control group. The effect of breastfeeding in those who started breastfeeding and are in the intervention arm (ie, A1 = 1) is equal to g, and the effect of breastfeeding in those who started breastfeeding but are in the control arm (ie, A1 = 0) is g. The average effect of breastfeeding in those who did not start breastfeeding is g when the program is available and g when not.

We could also ask the question “What would the average infant weight at 3 months be, had all mothers breastfeed for 3 months vs the average infant weight had they not started at all?” As noted before, setting A4 = 0 will include a very heterogeneous set of breastfeeding behaviors, as well as not breastfeeding at all. A more refined question would restrict the comparison to a setting where there is no breastfeeding at all, that is, g.

When implementing an intervention, it is of interest to identify those subgroups for which the intervention is most beneficial. Table 2, for example, shows that the infants of mothers in the lowest stratum of education would gain more than those of mothers in the highest, both when the intervention is offering the program g and when the intervention is following the program g, as opposed to 66 and 85 g for women in the highest stratum of education.

Some of the causal effects described above are not realistic. For example, the largest causal contrast is the expected weight gain when every infant is breastfed for the full 3 months vs the expected weight gain when no one is breastfed (524 g above). However not all women can or wish to start breastfeeding (nor would all women willingly refrain from it). As alluded to in the discussion of positivity in Section 2.3, a woman who is very ill at the end of pregnancy may not have the option of breastfeeding her baby because of toxicity of prescribed medication or ill-health. It follows that considering the intervention where every woman continues breastfeeding for the full 3 months is even less realistic. It is important to define the causal question precisely in a pertinent population before turning to estimation.

4 PRINCIPLED ESTIMATION APPROACHES

The estimation approaches discussed here rely on further assumptions in addition to those outlined in Section 2.4. These can be classified according to whether or not they invoke the no unmeasured confounding (NUC) assumption which states that the received treatment is independent of the potential outcomes, given covariates L. Formally, the NUC assumption states: and , where, hereafter A denotes a binary exposure. In other words, the assumptions states that a sufficient set of variables L that confound the exposure/outcome relationship have been measured and are available to the analyst.

The estimation approaches that rely on the NUC assumption include standard outcome regression and propensity score (PS) based methods such as PS stratification, regression adjustment, matching, and inverse probability weighting. These are reviewed below. Alternatively, if an instrumental variable (IV) is available, IV methods can be used by also invoking additional assumptions in place of NUC. IV definitions an assumptions are described in Section 4.2.

4.1 Methods based on the no unmeasured confounders assumption

When a sufficient set of confounders L is measured, the causal effect of treatment can be estimated by comparing observed outcomes between the treated and untreated people with identical values for L. Such direct control for L may be done in different ways: by regression or stratification or matching. We discuss these approaches in the next subsections.

4.1.1 Initial data summary and the propensity score

Before proceeding with the analysis one should examine how treatment groups differ in their population mix—that is, examine the imbalance in covariates between treatment groups as exemplified in Appendix 2 (supplementary material). The existence of substantial residual imbalance could lead to residual confounding in the effect estimate and may call for a sensitivity analysis.

When L includes only few variables, this balance check can be achieved visually (eg, using balancing plots as in Appendix 2, Figures 2, 5, and 6) or by reporting mean or percentage differences between treatment groups for each variable, as in Appendix 2, Table 1. With high-dimensional L this information is preferably summarized through the propensity score. The propensity score (PS) is the probability of being treated conditional on the covariates, e(L) = P(A = 1|L).17 The PS is an important function of the covariates that reduces the (possibly high-dimensional) vector L into a scalar containing all measured information that is relevant for the treatment assignment in relation to the outcome. This property score enjoys the so-called balancing property, meaning that the covariate distributions of the treated and nontreated are exchangeable (the same) when conditioning on the PS. Intuitively, the role of the PS can be thought of as one of restoring balance between treated and untreated groups once conditioned upon. For example, if we were to compare all treated subjects with untreated subjects who all had the same value of the PS, the distribution of the covariates L would be the same, much like in a randomized trial. However unlike in a randomized trial, balance is not achieved between the treated and untreated groups for any covariates that were not included in the PS. The balancing property implies that all relevant confounding information in L is contained in e(L), so that if , then also . This implies that e(L) can be used instead of the full vector L.

The PS is estimated from the data, usually by fitting a parametric (eg, logistic regression) model for the probability of being treated given the confounding variables, although a variety of other approaches can be employed including tree-based classification.18 However derived, the adequacy of the estimated PS, , as a balancing summary of the confounder distributions across treatment groups must be evaluated19 by checking whether ). While balance of the joint distribution of the confounders L is required, in practice balance is often assessed for each confounder L ∈ L separately by comparing standardized mean differences, variance ratios, and other distributional statistics and plots such as empirical cumulative distribution plots, between the treated and untreated groups after weighting, stratification, or matching by the estimated PS.20 We illustrate some of these checks in Appendix 2. To date, variable selection for PS modeling is done largely on a trial and error basis, beginning with a model thought to contain all relevant confounders and adding higher order terms (polynomials, interactions) if balance appears not to have been achieved.21

The PS can also be used to examine the positivity assumption by checking for overlap of the propensity score distribution of those who are treated and those who are not. For this reason, automatic variable selection approaches (eg, stepwise) or prediction-based measures of fit (eg, C-statistic), which seek best prediction of treatment allocation when specifying the PS model, may not provide the best balance for the confounders and favor variables that are strongly predictive of the treatment, even if they are only weakly or not at all predictive of the outcome.22

4.1.2 Outcome regression

Assuming no interference, consistency, and NUC, in (2) is interpreted as the average causal effect of A, that is, ATE = A. In the presence of interactions in (1) is the causal effect of A in the reference category of L, that is, where L = 0 if f(L, A) = 0 occurs whenever L = 0.

To estimate causal parameters such as those shown in Table 1, the additional step of marginalizing ATEL over the distribution of L is needed,.

These estimands can be estimated by where n is the sample size. When there are no treatment-covariate interactions (ie, is a vector of zeroes), then the ATE equals and its standard error can be taken directly from the fitted model that does not include any interactions. Otherwise, a standard error accounting for the correlation between and as well as estimation of E[L] must be computed either analytically or via a bootstrap procedure.

Concerns about model misspecification may be reduced by using a more flexible model for the outcome. For example, we may consider transformations of L such as splines to specify f(L, A), leading to a less parametric model which, however, requires estimation of a greater number of parameters. An additional concern is the possibility that a chosen outcome model leads to extrapolations outside of the data cloud (in other words, to lack of positivity). Users should therefore be aware of this and adopt methods discussed above to assess whether lack of positivity is an issue.

4.1.3 Stratification and PS matching

- 1. Choose a matching criterion, Ci, i′ such as nearest neighbor, the Mahalanobis distance, or vector norm, and implement a matching method given the criterion. The criterion may be applied to L or to a summary such as the PS, .

- 2. Evaluate the quality of the matched sample by carrying out balancing checks described above.

- 3. If balance is not satisfactory, return to step 1.

There are several factors to consider in a matched analysis, such as the number of matches per individual, M; whether to match with or without replacement; if matching without replacement, whether to use greedy matching or the more computationally intensive optimal matching. A discussion of the relative merits and the impact of these choices on bias and variance can be found in a review by Stuart.26 If balance remains unsatisfactory or to increase robustness, outcome regression as described in Section 4.1.2 can be performed within the matched sample.

Several standard softwares include packages that implement matching and, in some cases, covariate balance checks. Note that the bootstrap should not be used to compute standard errors following matching, and that suitable standard errors depend on how the matching was carried out (eg, whether with replacement or not).27

4.1.4 Inverse probability weighting

As before, if there are many people with a given set of characteristics who are treated, but few who are not treated, then P(Ai = 0|L = li) will be “small” and its inverse “large” so that these people are upweighted. This approach is well-known in the survey sampling literature,29 where it is used to adjust for unequal sampling fractions—typically the oversampling of certain smaller but important subgroups in a population. When the weights are extreme, they may be truncated or normalized.30

- 1. Fitting the PS model, for example, logistic regression model for the probability of being treated given L.

- 2.

Calculating the weights:

- (a)

Use the fitted PS to predict the probability that a person received the treatment s/he did in fact receive.

- (b)

Set each individual's weight to one over the probability computed in (2a). “Stabilize” this weight by including the simple probability of being treated with the observed treatment in the numerator.

- (c)

Check the confounders' balance in the weighted sample. If balance is inadequate, return to step 1 and improve the PS model specification by involving the unbalanced confounders.

- (a)

- 3.

Fitting the outcome model: weighting each individual by the weights computed in (2b), fit a regression model for the outcome given the treatment. The treatment coefficient is an estimate of the ATE.

Following the estimation procedure above, standard errors must be computed analytically or via bootstrap to account for estimation of the weights. Robust or empirical standard errors provide reasonable coverage, although they do not explicitly account for the fitting of the PS model.

Care must be taken as, in practice, a small number of large weights can be highly influential, though this may be mitigated through ad hoc but effective solutions such as shrinking of the largest weights to a smaller value such as the 99th percentile of the weight distribution (often referred to as truncation or sometimes called “capping”).

4.1.5 A hybrid approach: Doubly robust estimation

Bang and Robins,31 building on Scharfstein et al,32 reformulated the augmented estimator, noting that it can be viewed as an unweighted regression that includes the inverse of the PS as a covariate. It appears that unlike for the PS model, one can use separate regularized regressions for the outcome and propensity score models to derive a doubly robust “g-estimator” with standard confidence intervals that are correct given the variable selection procedure (see, eg, References 33-36). The bias otherwise induced by shrinkage of the coefficients in penalized regression models is counteracted by propensity-based adjustments with doubly robust estimation.

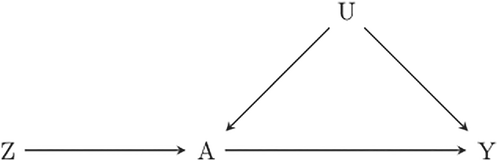

4.2 Instrumental variable based methods

All methods described so far yield valid estimates under the NUC assumption. This assumption is easily violated in observational studies, where the prognosis of patients tends to determine the choice of treatment and the reasons for a specific treatment choice are seldom completely registered or, more generally, the exposure level and outcome are influenced by unmeasured factors. One alternative approach is an instrumental variable (IV) analysis which can handle both measured and unmeasured confounding. Asymptotically unbiased estimation results once a “pseudo-random variable” or so called “instrumental variable” is identified and some additional assumptions hold. The method originates from econometrics37, 38, with extensions such as generalized difference in difference (DiD) methods and control function models39-41 These methods are also becoming increasingly popular in medical research. The literature on IV, with examples, is vast.42-46 We will discuss here the general IV assumptions, typical causal estimands, and the corresponding estimation procedures that are most commonly used. To focus on the principles here, our formalization below ignores measured baseline covariates (which we have been denoting L), although the approach extends quite naturally to conditioning on them. The unmeasured confounder(s) are denoted here by U.

An IV analysis aims to resemble that of a RCT, by using one or more variables (instruments) associated with treatment, but not in any other way related to the outcome. The instrument can be seen as a surrogate for randomization. This is depicted in Figure 2 where Z, the instrumental variable, is associated with A (the figure suggests a causal relation but that is not necessary, association is sufficient). The instrument Z is related to response Y only via the treatment A; and the instrument is independent of unmeasured confounders U.

Instrumental variable analysis can be used in trials to study the effect of noncompliance,37, 47, 48 as in our BEP example, where randomization to the offer of the breastfeeding program could be used as instrument for attending the program. Variation in preference for a certain treatment among physicians49, 50 or variation in treatment policies among medical centers51 are other examples of variables which can be considered close to pseudorandomization for treatment or policy assignment. When physicians have strong preferences for one or another treatment, identical patients may receive different treatments; a variable measuring the physician's preference, like the percentage of prescriptions A = 1 in a certain time window, could be used here as an instrument. Another popular IV approach is found in so-called “Mendelian randomization” studies where genetic variation takes the role of the instrumental variable.52, 53

4.2.1 The three core IV assumptions

- IV1

Z is associated with the treatment A of interest;

- IV2

Z is independent of any unmeasured confounders of the A → Y relationship;

- IV3

Z is independent of the outcome Y conditional on treatment A and unmeasured confounders U.

Unfortunately only assumption IV1 can be empirically checked in the data.54 Assumptions IV2 and IV3 are not verifiable in the data: only their plausibility can be examined. For example, the observation that Z is independent of all observed confounders makes assumption IV2 more plausible. Situations in which these assumptions are likely or unlikely to hold are discussed for Mendelian randomization and for physician's preference by several authors.42, 52, 53 When Z is an IV and the assumptions of no interference, consistency and positivity hold, IV-based estimation does not require the NUC assumption to lead to an estimator. However, an IV estimator on its own can only provide bounds for causal treatment effect.55, 56 These bounds are generally so wide that they are not useful. In order to obtain point estimates, additional assumptions are needed as discussed below.

4.2.2 Additional assumptions to obtain an effect estimate

In this SMM, the homogeneity assumption is less strong and only requires that does not depend on Z. For A = 0 we obtain , which is exactly the (mean) consistency assumption for . For A = 1 we obtain . Since because of the consistency assumption, the parameter in this SMM equals the ATT. Robins58 showed that in this model is exactly equal to the IV estimand (8). Baseline covariates L can be added to this model, including interactions between L and exposures A.47 Other homogeneity assumptions for IV estimation are possible, see Reference 43 for an overview.

An alternative assumption is monotonicity of the effect of Z on A. We discuss monotonicity briefly for a binary instrument Z that causally affects a binary treatment A. Defining as the value of A when Z is set to z ∈ {0, 1}, four types of individuals can be identified: (1) always takers: those with , that is, individuals who will take the treatment regardless of the value of the instrument; (2) never takers: those with ; (3) compliers: those with and ; and (4) defiers: those with and .

The above section shows that the interpretation of an IV analysis depends on the choice of the additional assumptions, under homogeneity assumptions the ATE or ATT is the estimand being targeted, while under monotonicity assumptions the CACE is the target estimand.

4.2.3 Standard IV estimation

The traditional IV estimator (10) can be equivalently obtained through a two stage linear regression (2SLS) approach. In the first stage, a linear (OLS) regression model is fitted with treatment A as dependent variable and the instrument Z as an independent variable (and optionally measured confounders L), yielding for each subject . In the second stage, a linear regression model is fitted to the outcome Y on (and possibly L65). The regression coefficient for is the IV estimator of the treatment effect.

Estimating coefficients in structural mean models can be done by defining a set of unbiased estimating equations. For the simple SMM (9) the solution is equal to the Wald estimator.47 This amounts more generally to G-estimation.23, 66

Many authors apply 2SLS methods to binary outcomes by fitting linear regression outcome models and hence yielding estimates of risk differences. This is not advisable when also including covariates L as the fitted model may predict outcome values >1 or <0. Extending the two-stage approach to a logistic regression outcome model is hampered by the nonlinearity of the logistic model. A two-stage approach with a linear model in the first stage and a logistic model in the second stage can only be used to obtain IV estimates of odds ratios if the outcome is rare. Otherwise, an alternative may be to use logistic structural mean models.59, 67, 68

4.2.4 When are IV methods useful?

We have discussed the IV assumptions needed to estimate causal treatment effects. Although many IV estimators are consistent, in finite samples instrumental variable estimators are generally biased. The bias depends on the sample size and on the strength of the instrument (ie, the correlation between Z and A).69 Furthermore, IV estimates are very sensitive to deviations from the IV assumptions. A small association between the unmeasured confounders and the instrument can lead to substantial bias especially if the instrument is weak.57, 69 Moreover, weak instruments yield very imprecise IV estimates and often (very) large sample sizes are needed to obtain informative results.70 This implies that instruments should be strongly correlated to the treatment. There is however a trade-off between the amount of unmeasured confounding and the strength of the instrument: an instrument cannot be strong if there is substantial unmeasured confounding57 and a strong instrument implies weak unmeasured confounding.

To summarize, an instrumental variable analysis may be useful in the following situations: (1) the amount of expected unmeasured confounding is substantial, (2) an instrument exists for which the core IV assumptions are plausible and additionally a fourth assumption to interpret the point estimate can be sensibly invoked, (3) the instrument is sufficiently strong, and (4) sample sizes are sufficiently large (when instruments are weak, required sizes may be in the order of several thousands of subjects). Otherwise methods assuming NUC should be considered, while also maximizing the number of measured confounders. Although approaches relying on NUC yield biased estimators if unmeasured confounding is present, the direction of the bias is often known and the size of the bias may be approximated in sensitivity analyses.

4.3 Choosing an estimation method

Table 3 reviews several points that go to the heart of which causal estimands are meaningful and relevant in the specific setting represented by our case study. An accompanying Table 4 summarizes the main assumptions that are invoked by the various methods reviewed in this section when aiming to estimate the ATE (in addition to no interference and causal consistency). The table is self-explanatory and highlights that the core difference lies in whether we are prepared or not to assume NUC, given a vector of measured confounders L. However it is worth stressing these additional points.

| Exposure | Estimand | Comments |

|---|---|---|

| A1 | ITT effect | Randomization ensures unbiased estimation using simple contrasts |

| A3 | ATE|A1 = 1, or ATE|A1 = 0 | Effect of starting breastfeeding in a world where all (or no) women are offered the program. If we do not condition on A1, then we mix the two populations (or two “worlds”), which would never coexist outside of a trial where only half of women are offered the intervention. Furthermore, A2 is an effect modifier. Thus, correct specification of the outcome model requires an A2A3 term, and the ATE must then marginalize over the distribution of A2. Note that the conditioning on A1 is not relevant for estimating the causal effect of A2, as A1 has the role of an instrument for A2, but not for A3 or indeed for A4 |

| A4 | ATE|A3 = 1 | There is no support in the data for an effect of A4 in women with A3 = 0. Note also that A4 = 0 is a mixture of durations of breastfeeding, potentially from 1 day up to just shy of 3 months. The consistency assumption implies that its estimated effect refers to settings with the same distribution of breastfeeding discontinuation times. An equivalent statement holds for the interpretation of A3 = 0 in the row above |

- Abbreviation: ITT = Intention-to-treat.

| Assumptions | |||||

|---|---|---|---|---|---|

| Correct specification of | |||||

| Method | NUC | Y model | PSa model | Core IV assumption | No Z-A interaction |

| Outcome regression | |||||

| conditional on L | |||||

| conditional on PS = e(L) | |||||

| Stratification by e(L) | |||||

| Matching by e(L) | |||||

| IPW by e(L) | |||||

| DR via L and e(L) | Either | Or | |||

| IV Z | |||||

- aEither of these if the outcome model is linear.

- Outcome regression assumes a correct specification of the outcome model.

- PS-matching and PS stratification assume that the PS balances the confounder distribution.

- IPW assumes that the PS model is correctly specified given a sufficient set of confounders.

- Linear outcome regression models that condition on the estimated PS, as opposed to the original vector of confounders L, require that either the outcome model or the PS model is correct and that the treatment effect does not vary with the PS.71

- The specification of the PS model should achieve balancing of the distribution of the measured confounders across treatment arms. Achieving this aim is substantially different from achieving treatment prediction, and hence the criteria used for the latter do not apply here.

- In general, outcome regression is more efficient than a PS-based method.

- The choice between PS-based methods (ie, stratifying, regression adjustment, matching, and IPW) depends on efficiency is an issue. Weighting may be inefficient (unless a doubly robust approach is used) if there are subjects with a very high or low PS value; matching has a trade-off between a close match (which implies loss of efficiency because not all subjects are matched) vs residual confounding. PS-regression adjustment has the advantage that it is robust against misspecification of the outcome model when the PS model is correctly specified. It can also be made more efficient with the inclusion of a selection of elements in L.23

- IV estimation replaces the NUC assumption with other rather stringent assumptions.

- IV methods yield estimates that are very inefficient when instruments are weak and suffer from small sample bias.69

With any given approach come choices in implementation that imply a trade-off between bias and variance. For example, in the context of PS matching, the use of smaller calipers to determine a match will reduce bias but may lead to a smaller matched sample and hence loss in efficiency. In PS-inverse weighting, the use of weight truncation to reduce the influence of a small number of points has the effect of decreasing the variance at the cost of introducing some bias. It is hence impossible to recommend a single “best” approach, but rather choices are specific to the context where researchers must balance bias, statistical efficiency, and in some cases computational efficiency.

5 RESULTS FROM THE SIMULATION LEARNER

We applied the methods discussed in the previous section to estimate the ATE and the ATT of A1, A2, and A3 on weight at 3 months using the data from the simulation learner PROBITsim. More details and the code used to produce the reported results are given in Appendix 2 and in the material available at www.ofcaus.org.

5.1 Effect of the randomized program offer (A1)

First we estimate the causal effect of the randomized offer of the BEP (A1) on weight at 3 months. This is simply the difference in mean weight at 3 months between those with A1 = 1 and A1 = 0 because A1 is randomized. This is also an estimate of the intention-to-treat (ITT) effect, in this case an “intention to educate,” and is most relevant for health policy makers. This estimate is 94.2 g (95% confidence interval: 76.4 to 112.0 g). It indicates that inviting all expecting mothers in the study population to attend this specific program increases their baby's weight, on average, by 94 g. The true value obtained from Table 2 was 98 g and is well within the confidence interval.

5.2 Effect of program uptake (A2)

Table 5 shows the estimated ATE for A2, which is the effect most directly relevant to women deciding whether or not to attend the program if offered. We also show the corresponding estimated ATT. In Section 3 we showed that the true ATE2 was greater than ATT2 (165.1 vs 152.8 g), whereby the treated, that is, the mothers who attended the program, were on average, more educated and their infants had higher weight at 3 months but smaller increases from attending the program. We estimated these target parameters under different assumptions and model specifications, starting from crude estimates where confounding is ignored ( g and g). We then controlled for measured confounding via outcome regression, adopting two alternative model specifications that included all the potential confounders for the A2 to weight at 3 months relationship: maternal age, education, allergy status, smoking during pregnancy, and area of residence. In the first specification we included a quadratic term for maternal age, and in the second we also included interactions between A2 and each confounder. The first led to g and the second to g, much closer to the true value of 165.1 g.

| Estimand | Estimation method | Estimate (SE) |

|---|---|---|

| ATE | ||

| True value | 165.1 | |

| Crude regression | 196.0 (9.6) | |

| Regression adjustment (without interactions) | 155.4 (9.5) | |

| Regression adjustment (with interactions) | 165.0 (9.7) | |

| PS stratificationb (six strata) | 165.0 (9.4) | |

| Regression with PSb | 156.2 (9.0) | |

| PS matching (one match)c | 155.7 (10.1) | |

| PS matching (three matches)c | 154.9 (10.1) | |

| PS IPWb | 164.7 (9.3) | |

| PS DR IPWb | 164.7 (9.7) | |

| IV | 146.2 (14.0) | |

| ATT | ||

| True value | 152.8 | |

| Regression adjustment (with interactions) | 148.7 (9.4) | |

| PS stratificationb (six strata) | 148.7 (9.6) | |

| PS matching (one match)c | 145.8 (9.8) | |

| PS matching (three matches)c | 145.4 (9.7) | |

| PS IPWb | 148.0 (9.6) |

- aThe variables controlled for in each of these analyses were: maternal age (linear and quadratic term), maternal education, maternal allergy status, smoking status in the first trimester (ie, before program allocation), and area of residence.

- bSE estimated by bootstrap with 1000 replications.

- cSE estimated according to Abadie and Imbens (2012), assuming that the conditional outcome variance is homoscedastic, that is, does not vary with the covariates or treatment. This is implemented in Stata with the option vce(iid). This assumption can be relaxed using the option vce(robust, nn(2)) for the one match analysis and vce(robust, nn(4)) for the three matches analysis.

When applying the PS-based methods, we fitted the PS model by logistic regression with the same confounders (including the quadratic term for maternal age). Stratification (over six strata) led to the same estimates as the more general outcome regression models ( = 165.0 and g), while matching, either to 1 or 3 other infants, led to slightly smaller and less precise estimates. Balance checks revealed that the PS model was well specified (see Appendix 2). Adopting inverse weighting or doubly robust estimation gave point estimates and standard errors close to those from outcome regression.

The reported IV estimate used A1 as the instrument and assumed no A1–A2 interaction to be interpreted as an ATE. This was estimated at 146.2 g and, as expected, has a very large estimated standard error.

5.3 Effect of starting breastfeeding (A3)

The estimated ATE and ATT for the effect of A3 on infant weight at 3 months are found in Table 6. As before they are obtained under different assumptions and using different methods. As their true values depend on whether the exposure is set in a world where the BEP is or not present, results are reported separately under these two scenarios.

| A1 = 0 | A1 = 1 | ||

|---|---|---|---|

| Estimand | Estimation method | Estimate (SE) | Estimate (SE) |

| ATE | |||

| True value | 386.8 | 422.3 | |

| Crude regression | 503.2 (11.6) | 582.0 (12.2) | |

| Regression adjustment (without interactions) | 384.3 (2.8) | 428.0 (3.3) | |

| Regression adjustment (with interactions) | 384.7 (3.3) | 425.3 (2.7) | |

| Regression with PSb | 384.4 (3.2) | 425.9 (3.3) | |

| PS stratificationb (6 strata) | 392.2 (4.1) | 442.0 (6.7) | |

| PS matching (one match)c | 386.5 (13.7) | 429.0 (17.4) | |

| PS matching (three matches)c | 380.7 (12.4) | 437.2 (15.2) | |

| PS IPWb | 384.7 (4.0) | 426.6 (6.9) | |

| PS DR IPWb | 384.8 (3.9) | 426.7 (7.1) | |

| ATT | |||

| True value | 380.1 | 421.4 | |

| Regression adjustment (with interactions) | 378.0 (2.9) | 421.7 (2.5) | |

| PS stratificationb (six strata) | 388.8 (5.1) | 438.3 (9.5) | |

| PS matching (one match)c | 384.3 (15.8) | 435.6 (21.2) | |

| PS matching (three matches)c | 387.9 (13.5) | 441.2 (18.0) | |

| PS IPWb | 381.9 (5.1) | 429.2 (10.1) |

- aThe variables controlled for in each of these analyses were: maternal age (linear and quadratic term), maternal education, maternal allergy status, smoking status in the first trimester (ie, before program allocation), area of residence, baby's birth weight (linear and quadratic term), whether birth was by caesarian section and, in the analyses restricted to A1 = 1, whether the mother attended the program.

- bSE estimated by bootstrap with 1000 replications.

- cSE estimated according to Abadie and Imbens (2012), assuming that the conditional outcome variance is homoscedastic, that is, does not vary with the covariates or treatment. This is implemented in Stata with the option vce(iid). This assumption can be relaxed using the option vce(robust, nn(2)) for the one match analysis and vce(robust, nn(4)) for the three matches analysis.

Note also that the true average potential outcome in the world where no program was offered but all mothers start breastfeeding was lower than in the world where BEP is offered to all mothers and they all start breastfeeding (Table 6, rows 8 and 9) because of the effect of the BEP on breastfeeding duration. This impacts on the causal effect of breastfeeding: when A1 is set at 0, that is, no BEP is available to anyone, the effect of starting breastfeeding is g and g; while when A1 set to 1, g and g.

The confounders of the A3 to weight at 3 months relationship include not only maternal age, education, allergy status, smoking during pregnancy, and area of residence (ie, those involved in the analyses of A2) but also the infant's sex, birth weight (including a quadratic term), and whether the infant was born by caesarian section. In the analyses concerning the world where A1 is set to be 1, A2 is also a confounder as it influences both A3 and infant weight.

There is little difference across the ATE estimates, obtained using either outcome regression or PS-based methods: the results are all very similar and standard errors, while variable, all still lead to the conclusion that A3 meaningfully and statistically affects the outcome. Balance checks for these two scenarios revealed that the PS model was relatively well specified in both, and there was good overlap in propensity of exposure between the groups defined by A2 and A3 (see Appendix 2).

We do not produce an equivalent IV estimate as there is no suitable IV for this effect, since A1 violates the second IV assumption: A1 influences the outcome not only via A3 but also via A2.

For the ATT estimates, regression adjustment seems to perform better than the other methods, especially in the world where A1 = 1. Of course, our simulation learner has generated just one relatively simple world model where both our outcome and propensity model are easy to specify.

6 DISCUSSION

We set out to discuss “the making of” a causal effect question involving a well-defined point exposure for which we seek to find the average treatment effect, possibly conditional on baseline characteristics. We have maintained an emphasis on the framing of the scientific causal question, and in considering many methods together, in their basic form, so as to compare and contrast the required assumptions of different principled estimation approaches for directly targeted estimands.

We applied the concept of principled estimation in turn to four different options for exposure levels which present themselves along the path from treatment prescription to completion. As we moved with the selected exposure along this path, the sufficient set of baseline confounders (and effect modifiers) became richer, and we had to account for what happened earlier in the path. In doing so, we saw that we cannot treat randomization as “once an instrument, always an instrument.” Rather, randomization (our A1) may act as an instrument for the effect of following the program (A2), but it violated the assumptions required for it to be an instrument when studying the effect of “starting breastfeeding” (A3). At every instance, thought is required to adapt to the new situation and estimate a relevant causal effect in a (sub)population of interest.

In a similar vein, confounders that act as effect modifiers could be conditioned on to estimate average causal effects within specific population strata (or by including interaction terms). Subsequently, we can average over their distribution in the population of interest. With additional averaging, we lose some ability to offer stratified evidence and provide personalized information but uphold a more global public health perspective. This pertains to both the ATE and ATT target.

For selected estimands, we showed how the various estimating approaches perform in their most basic form. We recognized that many of them operate under similar identifying assumptions. For example, the different propensity methods all assume correct specification of the PS model, and when choosing one of the methods one should consider additional issues. For the stratification, the choice of the number of strata and residual bias, for the matching the trade-off between finding matched individuals and the fineness of the matching, and for the inverse probability weighting, the size of the weights, truncation. Of course, differences remain in operating characteristics when key (untestable) assumptions are violated. The list of available approaches under the NUC assumption includes familiar standardized means derived from the classic regression of outcome on baseline covariates and the exposure. This need not perform worse, and can even be better than more novel PS-based methods that seek covariate balance after using the propensity score for regression, matching, stratification, or inverse probability weighting. Doubly robust methods may be expected to outperform others when one set of model assumptions is violated, but equally loses precision (increases error) when both the outcome regression and PS model are ill-fitting, and may be inefficient in finite samples when only one model is correctly specified.65

When we cannot find a sufficient set of confounders, instrumental variable approaches form an appealing alternative provided an instrument can be found. To interpret the resulting estimator additional assumptions are needed that are not always easy to justify; and one should consider whether those can lead to very broad confidence intervals. There are other alternative routes still, such as regression discontinuity approaches for instance,72 a variation on pseudorandomization that is found in specific designs.