A Gap in Reporting: Student Demographic Information in Academic Universal Screening Studies

ABSTRACT

Universal screening is an essential practice in any multi-tiered system of support, but without understanding the demographic makeup of students in the studies that inform current practices, it is not possible to assess which instruments and screening approaches work best for students from demographically diverse backgrounds. In this systematic review of eight major school psychology journals from 2001 to early 2024, 39 academic universal screening studies were identified. We summarized the reporting practices of the demographic makeup of samples and the types of measures used. When compared to national averages, Black students and students receiving free or reduced-price lunch were underrepresented, while White students were overrepresented. Only six studies fully disaggregated results by racial and ethnic categories. Study results underscore the need for improved data reporting practices across school psychology journals.

Practitioner Points

- Curriculum-based measurements are the most common type of universal screening measure.

- Black students and students receiving free or reduced-price lunch were underrepresented in academic universal screening research, and White students were overrepresented.

- Few studies disaggregate their results demographically so practitioners can see how different assessments classify student risk levels.

Universal screening is a core element of prevention-focused frameworks such as Response-to-Intervention (RTI) or Multi-Tiered System of Support (MTSS). When following ideal RTI design, systematic screening can identify which students are meeting academic expectations and which students need intervention before the onset of more serious concerns (Jenkins et al. 2007). Educators conduct universal screening by administering to all students a brief assessment that targets an academic domain or specific skill; students’ performance is then compared to a predefined benchmark or threshold to assess proficiency. Students found to be at risk are matched to academic interventions targeting their skill deficits. Beyond assessing individual student risk status, overall trends in proficiency rates can be evaluated to determine whether classwide intervention is warranted (VanDerHeyden 2013).

When selecting a universal screening tool, consideration should be given to a measure's criterion validity and classification accuracy, as well as the outcome the tool is intended to predict (Jenkins et al. 2007). Screeners should correlate with measures known to assess the skill they purport to measure, but beyond this, they should correctly identify both students who are at risk and students who are not (Glover and Albers 2007; Jenkins et al. 2007). Most often, priority is given to tools that have demonstrated the ability to predict risk status on measures that have high consequential validity (i.e., end-of-year state tests; Clemens et al. 2016). Consideration should also be given to the degree to which students in a given school match the normative sample from which psychometric evidence was gathered, to ensure that the tool fits the needs of the students both developmentally and contextually (Glover and Albers 2007).
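To make the notion of classification accuracy concrete, the following minimal Python sketch computes sensitivity, specificity, and predictive values for a single cut score. The scores, outcomes, and cut score are hypothetical illustration values, not data from any reviewed study, and the convention that scores below the cut score indicate risk is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ClassificationAccuracy:
    sensitivity: float  # share of truly at-risk students the screener flagged
    specificity: float  # share of not-at-risk students the screener cleared
    ppv: float          # share of flagged students who were truly at risk
    npv: float          # share of cleared students who were truly not at risk


def _ratio(numerator, denominator):
    # Return NaN rather than raising when a cell of the 2x2 table is empty.
    return numerator / denominator if denominator else float("nan")


def classification_accuracy(screener_scores, failed_outcome, cut_score):
    """Flag scores below cut_score as at risk and compare with the outcome."""
    tp = fp = tn = fn = 0
    for score, failed in zip(screener_scores, failed_outcome):
        flagged = score < cut_score
        if flagged and failed:
            tp += 1
        elif flagged:
            fp += 1
        elif failed:
            fn += 1
        else:
            tn += 1
    return ClassificationAccuracy(
        sensitivity=_ratio(tp, tp + fn),
        specificity=_ratio(tn, tn + fp),
        ppv=_ratio(tp, tp + fp),
        npv=_ratio(tn, tn + fn),
    )


# Hypothetical fall screener scores and end-of-year outcomes (True = not proficient).
scores = [12, 25, 31, 40, 44, 52, 58, 63, 70, 85]
failed = [True, True, True, False, True, False, False, False, False, False]
result = classification_accuracy(scores, failed, cut_score=45)
print(result)
```

Raising the cut score generally trades specificity for sensitivity, which is why the choice of threshold matters as much as the choice of instrument.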

Beyond the general ideal properties of universal screening tools, universal screening practices can vary widely between schools (Mellard et al. 2009; Balu et al. 2015). Many types of measures have been used within universal screening systems, including Curriculum-Based Measurements (CBM; e.g., Deno 1985), Computer Adaptive Tests (CAT; e.g., Klingbeil et al. 2019a), previous academic year standardized tests (e.g., Klingbeil et al. 2021a), and even teacher referrals (e.g., Kettler and Albers 2013; VanDerHeyden and Witt 2005). In some schools, teacher referral may serve as the only method of screening (VanDerHeyden and Witt 2005). Some researchers may recommend the use of multiple screeners in a gated structure when initial screening failure rates are high (e.g., a student who scores below a certain score on one measure is screened using a second measure; VanDerHeyden 2013). Others recommend combining data from multiple sources to create composite indexes of performance (Nelson et al. 2016) or a gated process to selectively administer additional screening instruments (Compton et al. 2010). Regardless of the instrument used or the method in which screening is carried out, accurately identifying which students need support is the first step in almost all MTSS/RTI frameworks. If students are not being screened, or conversely, if the screening system is in place but is not properly categorizing students, resources cannot be properly distributed to the students in greatest need of intervention (Jenkins and Johnson 2008).
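The gated structure described above can be sketched as follows. This is an illustrative simplification under stated assumptions: the student identifiers, scores, and cut scores are hypothetical, lower scores are assumed to indicate greater risk, and a student is treated as at risk only when falling below the cut score at both gates.

```python
def gated_screen(first_scores, second_scores, first_cut, second_cut):
    """Two-gate screening: only students below the first cut take the second screener.

    Returns (at_risk, pending), where pending lists students who did not pass
    gate 1 and still need the second screener administered.
    """
    at_risk, pending = [], []
    for student, first in first_scores.items():
        if first >= first_cut:
            continue  # passed gate 1; no further screening
        second = second_scores.get(student)
        if second is None:
            pending.append(student)  # second screener not yet administered
        elif second < second_cut:
            at_risk.append(student)  # below the cut score at both gates
    return at_risk, pending


# Hypothetical scores on two screeners.
first = {"A": 30, "B": 55, "C": 41, "D": 20}
second = {"A": 12, "C": 18}
at_risk, pending = gated_screen(first, second, first_cut=45, second_cut=15)
print(at_risk, pending)
```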

The evidence-based intervention movement in school psychology has highlighted the need to not only use high-quality, empirically validated instructional approaches to remediate academic skill deficits, but to identify which supports work for whom under what conditions (Kratochwill et al. 2012). Students from marginalized backgrounds, particularly students of color, tend to have access to lower-quality instruction and less-prepared teachers (DeMonte and Hanna 2014). A meta-analysis by Fallon and colleagues (2022) underscored these concerns, finding a general lack of academic intervention studies that included students of color as participants and even fewer that met What Works Clearinghouse quality standards. A similar evidence-based movement has been called for in the school-based assessment literature (McGill 2019). Using evidence-based interventions without incorporating culturally responsive practices for students from culturally and linguistically diverse backgrounds may lead to suboptimal or unexpected outcomes (Cartledge et al. 2016). The same can be said for universal screening (Hosp et al. 2011). Failing to account for the unique needs of culturally and linguistically diverse students may lead to over- or under-identification via universal screening, which can lead to negative consequences for students, as well as schools, when resources are misdirected (Glover and Albers 2007; Landry et al. 2022). It is important to understand effective current universal screening practices, but it is also necessary to understand the degree to which the research base that has yielded said recommendations included students from diverse demographic backgrounds.

Culturally and linguistically diverse groups have traditionally not been well-represented in educational research, particularly as it relates to evidence-based practices. As an example, Graves et al. (2021) conducted a systematic review on the effectiveness of interventions that met What Works Clearinghouse (WWC) standards with reservations and without reservations for Black students. The researchers discovered that none of the studies included in the review disaggregated their data based on student race. Additionally, none of the interventions studied had been modified to be culturally responsive and beneficial for Black students (Graves et al. 2021). A recent review of single case design studies concluded that most demographic characteristics were drastically underreported, and students from many different demographic backgrounds were underrepresented across the literature (Van Norman et al. 2023).

As highlighted in Graves et al. (2021), student demographics were often reported in aggregate, raising questions as to how appropriate measures were for culturally and linguistically diverse groups of students. With regard to universal screening, of the 26 academic screeners for elementary school-aged students listed on the National Center on Intensive Intervention's (NCII 2021) website, only 10 reported fully disaggregated outcomes. Some screeners reported almost no demographic information. In a systematic review of early reading CBMs, the authors found that many studies did not report individual-level demographic variables of interest (January and Klingbeil 2020). Yeo (2010) determined that student demographic variables can be predictors of reading performance, but Kilgus et al. (2014) were unable to conduct moderator analyses on student race or ethnicity, gender, English Language Learner (ELL)/Limited English Proficiency (LEP) status, disability status, or socioeconomic status in their meta-analyses on oral reading CBM because too few studies had reported demographic characteristics.

Universal screening decisions in and of themselves are often not considered “high” stakes when they gauge a student's relative level of academic risk, but they are the first step in a process, such as RTI, that can indeed carry heavy social consequences (Jenkins and Johnson 2008). When operated ineffectively, even well-intended RTI processes can result in an unwarranted special education placement that can lead to inequities in educational access for students of color (Sullivan et al. 2015). More broadly, extensive research (e.g., Arias-Gundín and García Llamazares 2021; Rinaldi et al. 2011) has shown that early intervention and prevention confer great benefits to students from culturally and linguistically diverse backgrounds, who are more likely to have experienced suboptimal schooling due to systematic barriers and institutional racism. More than 32 states have implemented legal mandates to conduct universal screening for reading difficulties (Dilbeck 2023), in part to help serve students from marginalized backgrounds more effectively. It is essential, therefore, to understand the types of measures being used in universal screening literature and the degree to which the evidence base surrounding their use can be generalized to students from diverse racial, ethnic, and linguistic backgrounds.

1 Purpose

The purpose of the current study was to examine the extent to which racially, culturally, and linguistically diverse students were represented in academic universal screening studies in the school psychology literature. The National Association of School Psychologists has directly placed social justice as a strategic priority of the organization (2017). In turn, it is incumbent on practitioners and scholars to be mindful of how they are contributing to undoing the harm conferred on marginalized populations and to advocate for improved outcomes for all students (Sullivan et al. 2022). It is critical to understand the degree to which students from historically marginalized backgrounds are represented in the literature that serves as the basis for universal screening practices. After gaining an understanding of the demographic makeup of students in academic universal screening studies, we hope that the results of this study can provide a benchmark to potentially improve upon the historical status of inclusive practices in the school psychology academic screening literature. A secondary purpose of the study was to identify the frequency of academic universal screeners and outcome measures used in research studies. In this study, two research questions were addressed. First, what are the most common academic universal screeners and academic outcome measures used in research studies? Second, what are the demographic makeups of samples in academic universal screening research studies?

2 Methods

2.1 Search Procedures

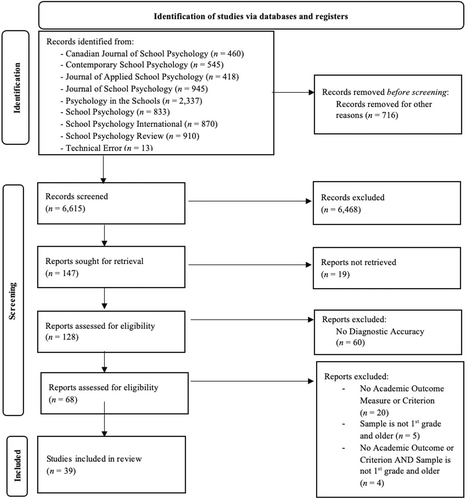

This study utilized a systematic review process of academic universal screening research published in eight major school psychology journals between January 2001 and January 2024. The results of the systematic review process are documented in a PRISMA flow diagram in Figure 1. Articles were extracted from the tables of contents of the following school psychology-focused journals: the Canadian Journal of School Psychology (n = 460), Contemporary School Psychology (n = 545), the Journal of Applied School Psychology (n = 418), the Journal of School Psychology (n = 945), Psychology in the Schools (n = 2337), School Psychology Quarterly/School Psychology (n = 833), School Psychology International (n = 870), and School Psychology Review (n = 910). Technical errors in 13 articles produced additional entries, for an initial total of 7331 entries. The table of contents for each journal labeled and organized the articles by type of article. A total of 716 articles were excluded at this stage on the basis of article type, leaving 6615 entries. The excluded article types were: About the Contributors (n = 1), Acknowledgment of Reviews (n = 3), Annotated Bibliography (n = 2), Announcement (n = 3), Annual Index (n = 3), Antiracism Statement (n = 1), Call for Papers (n = 3), Case Study (n = 2), Commentary (n = 109), Contents (n = 4), Contribution (n = 1), Discussion (n = 19), Editorial (i.e., Editorial, Editorial Board, Editorial Comment, Editorial Introduction, Editor's Note, Editorial Note, Editorial Notice, Guest Editorial, From the Editor, From the Guest Editor, Guest, Letter to the Editor; n = 189), Epilogue (n = 1), Erratum (i.e., Correction and Corrigendum; n = 37), Essay (n = 4), Ethics (n = 3), Introduction (n = 60), Issue Information (n = 89), Journal Update (n = 1), Notice (n = 1), Retraction Notice (n = 1), Review (i.e., Book Review, Test Review, Review, Reviewers List; n = 146), Short Communication (n = 1), and Tools for Practice (n = 32).

Next, a total of 6615 abstracts were screened to exclude articles that did not mention the words (a) universal screening or screening and (b) academic and/or (c) academic skills such as writing, math, reading, early literacy, oral reading fluency (ORF), or spelling. At this stage, 6468 articles were excluded. The intent was to identify universal screening studies specifically and not include studies that solely established predictive or concurrent validity. Next, 147 abstracts were further reviewed to identify articles that were empirically based (i.e., extant datasets, retrospective analyses, nonconceptual studies). At this stage, 19 articles were excluded, including meta-analyses and other systematic reviews.

A full-text review was conducted for 128 articles, first identifying whether the articles included diagnostic accuracy analysis (i.e., classification accuracy, receiver operator characteristic [ROC] curve, area under the curve [AUC], sensitivity, specificity, etc.). Articles that only used validity, reliability, or growth modeling analyses were excluded (n = 60). The remaining articles (n = 68) were further reviewed to determine whether an academic universal screener and outcome measure were utilized and whether the study sample included participants of elementary age or older. Any assessment that measured an academic construct, such as CBM, CATs, and teacher ratings, was categorized as an academic universal screener. At this stage, studies that measured academic behavior, such as engagement, were excluded, as were studies involving primarily prekindergarten and kindergarten students. Articles were excluded at this stage if they (a) did not include an academic universal screener and an academic outcome measure (n = 20), (b) did not include a sample of first grade and/or older students (n = 5), or (c) met neither of these criteria (n = 4). Longitudinal and cohort studies where the students started in kindergarten and progressed through multiple grades were included.

The final 39 articles included in the review (a) were empirically based, (b) utilized diagnostic accuracy statistics, (c) included an academic universal screener and academic outcome measure, and (d) included elementary-aged students. The articles included in the review are presented in a supplemental file along with the corresponding demographic characteristics in each article.
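As a check on the screening flow, the counts reported in this section can be tallied stage by stage. The short Python sketch below uses only numbers stated above; the variable names and stage labels are ours, and it assumes the 13 technical-error entries are added to the journal totals before any exclusions.

```python
# Entries extracted from each journal's tables of contents (counts from the text).
journal_counts = {
    "Canadian Journal of School Psychology": 460,
    "Contemporary School Psychology": 545,
    "Journal of Applied School Psychology": 418,
    "Journal of School Psychology": 945,
    "Psychology in the Schools": 2337,
    "School Psychology Quarterly/School Psychology": 833,
    "School Psychology International": 870,
    "School Psychology Review": 910,
}
technical_error_entries = 13  # assumption: added to the journal totals

# Exclusions at each stage, in the order described in the text.
exclusion_stages = [
    ("excluded article types", 716),
    ("abstract lacked screening/academic terms", 6468),
    ("not empirically based", 19),
    ("no diagnostic accuracy analysis", 60),
    ("screener, outcome, or grade criteria not met", 20 + 5 + 4),
]

remaining = sum(journal_counts.values()) + technical_error_entries
flow = [("initial entries", remaining)]
for label, excluded in exclusion_stages:
    remaining -= excluded
    flow.append((label, remaining))

for label, count in flow:
    print(f"{label}: {count} remaining")
```

Under that assumption, the tally reproduces the 6615 abstracts screened, the 128 full-text reviews, and the final 39 articles.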

2.2 Data Extraction

Each article was coded to report information about sample, participant demographic information, measures, and analysis/results. Sample information included sample size, grade(s), setting, and school type. Participant demographic information included race/ethnicity, gender, ELL/LEP status, other language information, if students qualified for Free or Reduced-Price Lunch (FRL), other socioeconomic status information, and special education status. Information about measures included academic universal screener(s) used, outcome measures used, and time between administration of the universal screener and the outcome measure. The analysis and results section included information about whether the articles disaggregated the data and if they did disaggregate, how data were reported.

3 Results

3.1 Measures

3.1.1 Academic Universal Screeners

Across the articles in our sample, 97 different academic universal screeners were studied (Table 1). CBM was the most used type of screener (n = 57; 58.76%). The categories of academic universal screeners included CATs (n = 9; 9.28%), CBM (n = 57; 58.76%), other assessments (n = 19; 19.59%), other screeners (n = 4; 4.12%), other (n = 2; 2.06%), and rating scales/questionnaires (n = 6; 6.19%). Examples of CATs included Measures of Academic Progress (MAP) and Renaissance Learning assessments (e.g., Star Reading; aReading). Types of CBM included computational fluency and concepts and application for math, ORF, letter naming fluency (LNF), nonsense word fluency (NWF), and CBM Written Expression. The category other assessments included individually and group-administered norm-referenced cognitive and achievement tests, state tests, and tests measuring academic skills (e.g., reading comprehension, mathematics, and word reading fluency). Examples of other assessments include the Comprehensive Tests of Phonological Processing (CTOPP), the Test of Silent Word Reading Fluency (TOSWRF), and the Texas Primary Reading Inventory (TPRI). The category other screeners included measures such as a direct assessment writing screener, a problem validation screening, and a number sense brief. The category other included screeners that did not fall into the broader categories: teacher referral and fall Fountas and Pinnell Benchmark Assessment System score. The rating scales/questionnaires category included commercially available scales and teacher-created scales. A complete list of the specific instruments utilized in the articles is presented in a supplemental file. The academic universal screeners covered the following domains: math (n = 20; 20.62%), reading (n = 67; 69.07%), writing (n = 3; 3.09%), and reading and math (n = 7; 7.22%). See Supporting Information S1: Table B1 in the appendices for more information on all screeners utilized in studies.

| Category | Universal Screeners (n) | Universal Screeners (%) | Outcome Measures (n) | Outcome Measures (%) |
|---|---|---|---|---|
| Modality | | | | |
| Achievement Tests | N/A | N/A | 16 | 29.09 |
| CATs | 9 | 9.28 | 5 | 9.09 |
| CBM | 57 | 58.76 | 12 | 21.82 |
| Other Assessments | 19 | 19.59 | N/A | N/A |
| Other Screeners | 4 | 4.12 | N/A | N/A |
| Other | 2 | 2.06 | N/A | N/A |
| Rating Scales/Questionnaires | 6 | 6.19 | N/A | N/A |
| State Tests | N/A | N/A | 22 | 40.00 |
| Domain | | | | |
| Math | 20 | 20.62 | 9 | 16.36 |
| Reading | 67 | 69.07 | 38 | 69.09 |
| Writing | 3 | 3.09 | 3 | 5.45 |
| Reading and Math | 7 | 7.22 | 5 | 9.09 |

- Abbreviations: CBM, curriculum-based measurement; CATs, computer adaptive tests.

3.1.2 Outcome Measures

Across the articles in our sample, 55 different outcome measures were represented (Table 1). The modality and domain of the outcome measures were recorded. State tests were represented most frequently (n = 22; 40%). Other achievement tests (n = 16; 29.09%), CATs (n = 5; 9.09%), and CBM (n = 12; 21.82%) were also represented. Achievement tests included measures such as the Stanford Achievement Test and Woodcock Johnson Tests of Achievement III. CATs included in the review were the Smarter Balanced ELA assessment, MAP, and Terra Nova 2nd Ed. Examples of CBM used were Math CBM, Reading CBM, Maze, and CBM-R. Several studies used state tests as outcome measures, including those of Michigan, Minnesota, Pennsylvania, and Florida. Across the outcome measures, 11 were used in more than one study. The outcome measures covered the following domains: math (n = 9; 16.36%), reading (n = 38; 69.09%), writing (n = 3; 5.45%), and reading and math (n = 5; 9.09%).
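The percentages reported for Table 1 follow directly from the category counts. As a brief illustration, the Python sketch below recomputes the modality shares from the counts given above; the function and variable names are ours.

```python
def category_shares(counts):
    """Convert category counts to percentages of the total, rounded to 2 decimals."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


# Counts of screeners and outcome measures by modality, as reported in the text.
screener_modality = {
    "CATs": 9,
    "CBM": 57,
    "Other Assessments": 19,
    "Other Screeners": 4,
    "Other": 2,
    "Rating Scales/Questionnaires": 6,
}
outcome_modality = {
    "Achievement Tests": 16,
    "CATs": 5,
    "CBM": 12,
    "State Tests": 22,
}

screener_shares = category_shares(screener_modality)
outcome_shares = category_shares(outcome_modality)
print(screener_shares)
print(outcome_shares)
```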

3.2 Sample Information

Before addressing student demographic characteristics, we report information regarding the participants, such as sample sizes, grade(s), geographic information, and the type of school in which studies were conducted. The median sample size was 543 students (SD = 6972.30; range = 67–34,855). Three studies had outlying sample sizes (n = 19,417; n = 21,237; n = 34,855). Overall, students in first through eighth grade were represented in the sample. The percentage of studies that included each grade was as follows: 33.33% for Grade 1, 33.33% for Grade 2, 58.97% for Grade 3, 46.15% for Grade 4, 33.33% for Grade 5, 23.08% for Grade 6, 17.95% for Grade 7, and 15.38% for Grade 8 (the percentages do not sum to 100 because many studies included multiple grades). In total, 10 studies covered one grade and 29 studies covered multiple grades. More specifically, three studies were longitudinal and followed students as they progressed through multiple grades (e.g., kindergarten to Grade 3).

Next, we identified whether the authors stated the educational level and type of school the participants attended. The participants attended the following educational levels: elementary (n = 20; 51.28%), middle (n = 3; 7.69%), and both elementary and middle (n = 4; 10.26%). A large proportion of studies did not specify the level of the school from which the students were drawn (n = 12; 30.77%). Authors mentioned that the participants attended a public school (n = 7; 17.95%), a private school (n = 1; 2.56%), both public and private schools (n = 2; 5.13%), a university-affiliated developmental research school (n = 1; 2.56%), or a bilingual education program (n = 3; 7.69%), or did not specify the type of school (n = 25; 64.10%).

Authors reported the geographic locations of the schools in 92.31% (n = 36) of the studies. The general region of the United States was stated in 15 (41.67%) studies: Midwest or upper Midwest (n = 4; 26.67%), mid-Atlantic (n = 3; 20.00%), south (n = 3; 20.00%), southwest (n = 1; 6.67%), southeast (n = 1; 6.67%), upper plains (n = 1; 6.67%), more than one region (n = 2; 13.33%). Students from individual states were sampled as well in 17 (47.22%) studies: Pennsylvania (n = 4; 23.53%), Texas (n = 5; 29.41%), Wisconsin (n = 2; 11.76%), Florida (n = 2; 11.76%), Massachusetts (n = 1; 5.88%), Utah (n = 1; 5.88%), Delaware (n = 1; 5.88%), and Michigan (n = 1; 5.88%). The samples of two studies represented multiple states; one study encompassed 24 states, including Louisiana, Minnesota, New York, and Illinois, and one study represented Tennessee and Wisconsin. Last, two studies were conducted internationally, one in Oman and another in the Canary Islands.

3.3 Demographic Information

Information regarding the following demographic characteristics of the samples was reported: race/ethnicity, gender, FRL, ELL, and special education status. Race/ethnicity was not included in four (10%) studies, gender was not included in nine (23%) studies, FRL status was not included in 15 (38%) studies, ELL status was not included in 15 (38%) studies, and special education status was not included in eight (21%) studies. See Supporting Information S1: Table A1 in the appendices for gender and other demographic characteristics reported in each study. See Supporting Information S1: Table A2 in the appendices for racial and ethnic demographics of students reported in each study.

For studies that reported information for each demographic characteristic category, results were compared to national demographic trends calculated from publicly available data from the National Center for Education Statistics (e.g., not all studies reported gender demographic details, so those studies were excluded when comparing student gender representation). All data were gathered for students in grades 1–8, except for students with disabilities, where data were only available for students aged 3–21. For the studies that included demographic information, the weighted average of each demographic characteristic category was calculated (Table 2). The weighted averages for each demographic category from available data were compared to the national averages. Overall, the student demographic characteristics were well-represented in this sample of studies. White students were represented at approximately the same percentage as the national average (45.12% vs. 49.34%). The same was true for students who qualified for free or reduced-price lunch (48.1% vs. 52.1%) and for Black (16.43% vs. 13.75%), Hispanic or Latino (26.29% vs. 25.77%), and Asian/Pacific Islander (7.82% vs. 5.73%) students. In contrast, ELL students (18.09% vs. 11.79%) were slightly overrepresented in the research literature. Students identifying as more than one race (2.71% vs. 4.6%) and students receiving special education services (10.34% vs. 12.76%) were underrepresented in the research literature.

| Demographic Categories | Weighted Average, Full Sample (%) | Weighted Average, Trimmed Analysis (%) | National Average (%) |
|---|---|---|---|
| Race/Ethnicity | | | |
| American Indian/Alaskan Native | 1.29 | 2.04 | 0.79 |
| Asian/Pacific Islander | 7.82 | 4.71 | 5.73 |
| Black/African American | 16.43 | 7.47 | 13.75 |
| Hispanic/Latino | 26.29 | 25.32 | 25.77 |
| Multiracial | 2.71 | 1.59 | 4.60 |
| Other/Not Specified/Unknown | 0.46 | 1.24 | 0.00 |
| White | 45.12 | 57.42 | 49.34 |
| Gender | | | |
| Female | 49.00 | 49.70 | 49.00 |
| Male | 51.00 | 50.30 | 51.00 |
| FRL | 48.10 | 33.65 | 52.10 |
| ELL | 18.09 | 21.84 | 11.79 |
| Special Education Status | 10.34 | 10.86 | 12.76 |

- Note: FRL = free/reduced lunch; ELL = English language learner; Trimmed analysis = samples with outliers were excluded from this analysis.

When examining the articles included in the review, three articles contained samples that were considered outliers (i.e., 19,417, 21,237, and 34,855 students). These samples may have contributed to inaccurate representations of the demographic makeup of the samples in the articles. We calculated weighted averages of the demographic characteristic categories excluding the three articles we considered outliers. These calculations are presented in Table 2 as “trimmed analysis.” Gender representation and special education status changed very little. The percentage of Hispanic/Latino students decreased slightly but remained commensurate with national demographics (25.32% vs. 25.77%). However, changes were seen among other race/ethnicity categories. After the trimmed analysis, White (57.42% trimmed vs. 49.34% nationally) and American Indian/Alaskan Native students (2.04% vs. 0.79%) became overrepresented in the sample as opposed to being at approximately the same percentage before outliers were excluded. Black students were somewhat overrepresented in the full sample, but after the trimmed analysis, Black students were markedly underrepresented (from 16.43% to 7.47% in the trimmed analysis vs. a national 13.75%). Asian students also went from over- to underrepresented (from 7.82% to 4.71% in the trimmed analysis vs. a national 5.73%). Multiracial students (from 2.71% to 1.59% in the trimmed analysis vs. a national 4.6%) started as underrepresented and became more so, whereas ELLs (from 18.09% to 21.84% in the trimmed analysis vs. a national 11.79%) started as overrepresented and became more so. The most dramatic difference was seen among students receiving FRL, whose share dropped from 48.1% to 33.65% of the sample, compared to 52.1% nationally.
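To illustrate why a few very large samples can shift weighted averages so substantially, consider the minimal Python sketch below. It assumes weights proportional to each study's sample size (the exact weighting formula is not restated here), skips studies that did not report a category, and uses entirely hypothetical study values and a hypothetical outlier threshold rather than data from the reviewed articles.

```python
def weighted_percentage(studies, category):
    """Sample-size-weighted mean of a percentage across studies that reported it."""
    reported = [s for s in studies if s.get(category) is not None]
    total_n = sum(s["n"] for s in reported)
    if total_n == 0:
        return None  # no study reported this category
    return sum(s["n"] * s[category] for s in reported) / total_n


def trim_outliers(studies, max_n):
    """Drop studies whose sample size exceeds a hypothetical outlier threshold."""
    return [s for s in studies if s["n"] <= max_n]


# Hypothetical studies: n is sample size; frl and ell are percentages (None = not reported).
studies = [
    {"n": 200, "frl": 30.0, "ell": 10.0},
    {"n": 800, "frl": 40.0, "ell": None},
    {"n": 20000, "frl": 80.0, "ell": 20.0},
]

full_frl = weighted_percentage(studies, "frl")
trimmed_frl = weighted_percentage(trim_outliers(studies, max_n=5000), "frl")
full_ell = weighted_percentage(studies, "ell")
print(full_frl, trimmed_frl, full_ell)
```

In this toy example the single large study dominates the full-sample estimate, so removing it moves the FRL figure from near that study's value to near the values of the smaller studies, mirroring the pattern seen in the trimmed analysis.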

4 Discussion

The purpose of the current study was to give an overview of the types of academic universal screening tools and academic outcome measures utilized in current research, as well as to determine the extent to which students from culturally and linguistically diverse backgrounds were represented within the literature. The latter will inform the current state of evidence-based assessment practices by clarifying the generalizability of results that inform universal screening practices. Doing so will also help universal screening researchers to critically reflect on their work to ensure they are meeting the needs of all students. We compare the demographic information gathered from the included studies to national demographics to understand the similarities between the samples featured in research and the United States’ population.

4.1 Screeners and Outcome Measures

CBMs were the most frequently used type of screener, comprising 58.76% of the measures that were used across studies. This is likely because CBMs are fast, easy to administer, and capture behaviors students are expected to demonstrate in the classroom (Kettler and Albers 2013). CATs were only represented nine times; the oldest study was published in 2012, and all other papers were published in 2015 and beyond. Technical documents from vendors were not included in this review due to the focus on independently conducted research. The disparity between the volume of technical documents and published peer-reviewed literature suggests that the number of independent evaluations of CATs is limited relative to CBM. Nevertheless, because CATs can be group-administered and have the potential to provide diagnostic information in greater detail than other types of screeners, more research is likely to be conducted with CATs in the future to coincide with their increase in popularity (Balu et al. 2015). Other categories of screeners were used across the entire range of years targeted in this review.

Reading screeners were the most popular domain (69.07% of included screening instruments); only three screeners assessed writing. Writing can be challenging to assess for several reasons, as students must have knowledge of a topic, adequate planning and revising ability, and an understanding of conventions of writing like spelling, word choice, and grammar (Wilson 2018). Several of the studies focusing on writing used automatic essay graders, which could reduce some of the subjectivity that a human grader might have when judging the overall quality of a student's written work (e.g., Wilson 2018; Wilson and Rodrigues 2020).

State tests were the most popular academic outcome measure, but no category of outcome measure was overwhelmingly represented among the articles reviewed. State tests may be selected for use in screening studies due to their alignment with the core curriculum that students are exposed to throughout the school year (e.g., Hosp et al. 2011; Klingbeil et al. 2019a, 2019b). Also, state tests are already administered to all students in a state and would not require researchers to administer an additional measure. Finally, state tests can be considered high stakes because of their role in accountability decisions for schools and their historical ties to grade retention and graduation, which some argue have perpetuated inequities in educational outcomes for students from disadvantaged backgrounds (Acosta et al. 2020). Comparisons between states should also be made with caution if the content of state tests does not align. Few studies used writing or a combination of reading and math when predicting student performance on a measure.

4.2 A Comparison of Study Student Demographics to National Demographics

The amount and detail of reported demographic information varied across studies. Most studies were not conducted with the intent to evaluate screening practices with diverse student groups, but several studies (e.g., Basaraba et al. 2022; Pearce and Gayle 2009; Stevenson et al. 2016) sampled specifically from students who identified as ELL, students identified as Native American, or students with disabilities. A few studies (e.g., Shapiro et al. 2017; Stevenson et al. 2016) reported very vague demographics or reported demographics at the district level rather than the student level. Past systematic reviews and meta-analyses (e.g., January and Klingbeil 2020; Kilgus et al. 2014) have listed the limited level of demographic information reporting as a limitation in their work.

As stated previously, three studies included very large samples of students. Petscher and Kim (2011) sampled 34,855 students from culturally and racially diverse backgrounds, among whom over 75%–80% received FRL. Klingbeil et al. (2021a) analyzed data from 21,237 students across a demographically diverse school district in Texas. Paly et al. (2022) sampled from 19,417 students in a mid-size district in Texas, of whom over 60% were non-White. Utilizing a large sample of students can be very beneficial and, in the case of these studies, allowed large numbers of students from culturally and linguistically diverse backgrounds to be included in research. Although beneficial, access to large datasets may be unrealistic for most authors. When including the three large studies, the mean sample size across the articles reviewed was 3420 students, but the median sample size was 548. The majority of researchers across the studies reviewed did not utilize samples larger than 5000, much less 20,000 or 30,000 students.

After excluding the three outlier samples in the trimmed analysis, the representation of demographic information shifted. The mean sample size dropped to 1381 students, though the median remained roughly the same at 541. Though some large samples were still included in this analysis, most researchers utilized a sample size of 1000 or fewer. Removing the outliers affected the weighted averages of each demographic category. These results from the trimmed analysis align more closely with the concerns reported by other researchers. When students from diverse demographic backgrounds are not included in research, it is not clear how accurate the measures will be as screening tools.
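The effect of a few very large samples on summary statistics can be illustrated with a minimal sketch. The study values and the 5000-student cutoff below are hypothetical and chosen only for illustration; they are not the data or the trimming rule used in this review.

```python
from statistics import mean, median

# Hypothetical studies: sample size (n) and percent of the sample in one
# demographic category (pct). These are NOT values from the reviewed studies.
studies = [
    {"n": 200, "pct": 10.0},
    {"n": 500, "pct": 20.0},
    {"n": 800, "pct": 15.0},
    {"n": 30000, "pct": 40.0},  # one very large, more diverse sample
]

def weighted_pct(rows):
    """Sample-size-weighted average of a demographic percentage."""
    total_n = sum(r["n"] for r in rows)
    return sum(r["n"] * r["pct"] for r in rows) / total_n

def summarize(rows):
    """Mean and median sample size plus the weighted percentage."""
    sizes = [r["n"] for r in rows]
    return {
        "mean_n": mean(sizes),
        "median_n": median(sizes),
        "weighted_pct": weighted_pct(rows),
    }

# Assumed cutoff for an "outlier" sample; illustrative only.
OUTLIER_CUTOFF = 5000

full = summarize(studies)
trimmed = summarize([r for r in studies if r["n"] < OUTLIER_CUTOFF])

print(full)
print(trimmed)
```

In this illustration, removing the single large sample changes the median sample size only modestly but lowers the weighted percentage considerably, which mirrors the pattern described above: weighted averages are dominated by the largest samples.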

4.3 Studies That Reported Disaggregated Results

Aside from general concerns regarding the inclusion of students from culturally and linguistically diverse backgrounds in research, another concern is the extent to which these universal screening measures accurately categorize the risk status of students who are not predominantly White, from the middle- to upper-class, nondisabled, and English-speaking. Many of the authors of the studies mentioned above that included large and diverse samples did not conduct bias analyses to evaluate the performance of the universal screening instruments specifically among student subgroups. One method to address this concern is for researchers to disaggregate results and run statistical analyses on specific subgroups within their sample. Disaggregating data is important because it allows for a more equitable and non-colorblind approach that can help educators and researchers better tailor intervention programs and their decision-making process to the specific population of students they serve (National Forum on Education Statistics 2016). Unfortunately, disaggregation in research analyses does not always occur. In the sample of studies reviewed, only six studies disaggregated their data: Hall et al. (2022); Hosp et al. (2011); Landry et al. (2022); Pearce and Gayle (2009); Stevenson et al. (2016); and VanDerHeyden and Witt (2005). These studies tended to focus on a specific subgroup of students, which likely led to the increased need for data disaggregation. Once disaggregated, the data did show differences in the effectiveness of the various measures used across groups of students in these highlighted studies. Additionally, Baker et al. (2022) and Basaraba et al. (2022) utilized samples of nearly 100% Latine students, so all results essentially reflected results for a non-White population.

The most common method for disaggregation was comparing ELL students to non-ELLs (e.g., Hall et al. 2022; Hosp et al. 2011; Landry et al. 2022). Other studies disaggregated based upon racial or ethnic characteristics (e.g., Native American vs. White, Pearce and Gayle 2009; minority vs. White, VanDerHeyden and Witt 2005), student disability status (e.g., Hosp et al. 2011; Stevenson et al. 2016), or FRL status (e.g., Hosp et al. 2011; Stevenson et al. 2016). Results from these studies were generally similar between groups, but important differences were noted. The results of the study conducted by Hosp et al. (2011) indicated that the universal screening measure produced higher accuracy when determining which ELL students were at risk, but more ELL students may be missed in screenings compared to non-ELL students. Pearce and Gayle's (2009) findings were similar to those of Hosp et al. (2011) but pertained to Native American students. VanDerHeyden and Witt (2005) also compared a teacher referral system to universal screening and determined that teacher-driven referrals tended to overidentify White students and underidentify minority students. Taken as a whole, these findings suggest that certain student demographic groups could be identified at different rates through universal screening systems, yet had these highlighted authors not disaggregated their data, these important considerations would remain unknown. It is also important to note that out of the six disaggregated studies, only two disaggregated math results, and none disaggregated writing data. Though research should move toward increased disaggregation practices regardless of subject, math screening studies should be an additional focus to ensure culturally and linguistically diverse students have access to opportunities to close proficiency gaps in the subject.

4.4 Implications for Research and Practice

4.4.1 Research

The results of this systematic review of academic universal screening studies indicated that students from certain demographic backgrounds, such as Black or Latine students or students receiving FRL, were underrepresented in academic universal screening research, yet students from other demographic backgrounds, most pressingly White students, were overrepresented. Even when students from underrepresented backgrounds were included in study samples, data were infrequently disaggregated to determine how accurately the screening measures classified student risk levels. Without an understanding of which students are included in universal screening research and how effectively measures function for different student groups, the practice of universal screening cannot be fully effective (Hosp et al. 2011). Further research is impeded by a lack of demographic reporting. Meta-analyses cannot include moderator analyses on race or other student demographics when demographic information is not reported (e.g., January and Klingbeil 2020; Kilgus et al. 2014), so research on the effectiveness of broad types of measures used in screening is limited. With limited data reporting, studying intersectional results for students belonging to marginalized groups, such as Black females, is even more challenging.

Few studies included in this systematic review had very large sample sizes gathered from across districts or states (e.g., Diggs and Christ 2019; Klingbeil et al. 2021a; Petscher and Kim 2011); the samples gathered by many researchers appeared to be from several schools across a local school district (e.g., Goffreda et al. 2009; VanDerHeyden et al. 2018), some even directly affiliated with a university (e.g., Grapin et al. 2017). Recruiting a large, diverse sample for a study can be challenging, but smaller samples result in unequal representation across demographic categories. Creating partnerships with local school districts can be challenging in many ways, as schools may not see a need for the partnership or feel that the practices are realistic for their environment (Lewison and Holliday 1997). Not every district's student body may mirror the demographics of the United States, but it is important to seek out students who are representative of a national sample to increase generalizability and ensure that the measure is working in its intended manner.

Once data have been collected, researchers should disaggregate their data beyond making grade-level comparisons. Running diagnostic accuracy analyses across demographic subgroups is a common way to disaggregate data (e.g., Hosp et al. 2011; Pearce and Gayle 2009; Stevenson et al. 2016), but less complicated methods like comparing percentages of students correctly identified as at-risk or not at-risk can be used as well (VanDerHeyden and Witt 2005). One method of data disaggregation commonly used in the medical literature (e.g., Ma et al. 2015; Walter 2005; Zhang et al. 2002) that has not become as common in the school psychology literature is the partial ROC curve. The benefit of conducting a partial ROC curve analysis as opposed to a regular ROC curve analysis is that a partial ROC curve analysis computes different AUC values for each subgroup but still accounts for the dependency between groups. Researchers can further test these differences for statistical significance when the analyses are conducted. This method is not perfect (Walter 2005), but it can be used in diagnostic decision-making (Ma et al. 2015).
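As a minimal sketch of the simpler approach described above, the following computes sensitivity and specificity separately for each subgroup from screening decisions and outcome results. The records, subgroup labels, and function name are hypothetical and serve only as illustration; this is not the analysis used in any of the cited studies, and it is not a partial ROC curve analysis.

```python
from collections import defaultdict

# Hypothetical records: (subgroup, flagged at-risk by screener,
# truly at-risk on the outcome measure). Illustrative values only.
records = [
    ("ELL", True, True),
    ("ELL", False, True),   # at-risk student missed by the screener
    ("ELL", False, False),
    ("ELL", True, False),   # not at-risk student flagged by the screener
    ("non-ELL", True, True),
    ("non-ELL", True, True),
    ("non-ELL", False, False),
    ("non-ELL", False, False),
]

def accuracy_by_group(rows):
    """Return sensitivity and specificity for each subgroup."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for group, flagged, at_risk in rows:
        if flagged and at_risk:
            key = "tp"
        elif flagged and not at_risk:
            key = "fp"
        elif not flagged and at_risk:
            key = "fn"
        else:
            key = "tn"
        counts[group][key] += 1

    results = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]  # students truly at risk
        negatives = c["tn"] + c["fp"]  # students truly not at risk
        results[group] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return results

by_group = accuracy_by_group(records)
for group, stats in by_group.items():
    print(group, stats)
```

Reporting these values side by side for each subgroup, rather than only for the full sample, makes visible whether a screener misses at-risk students in one group more often than in another.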

Researchers can also encourage the practice of using special issues of journals to highlight topics of high need or interest. Three of the included articles published in 2022 came from a special issue of School Psychology Review on “Unlocking the Promise of Multitiered Systems of Support (MTSS) for Linguistically Diverse Students.” All articles within this special issue pertained to advancing equity through MTSS and the special considerations that should be made when working with multilingual learners. Across the previous 20 years, roughly 1.7 articles a year were published that met inclusion criteria. Having three articles in a single special issue highlights the importance of the topic and suggests that these topics are growing in popularity over time. Additional special issues of journals could focus on the performance of varying demographic groups of students on popular universal screening measures, and researchers could be required to fully disaggregate their results to be considered for inclusion.

Finally, researchers should encourage organizations like the NCII to require more rigorous standards for reporting data. Not all vendors report disaggregated data, and the general demographic data reported by others is very limited (NCII 2021). Increased standards for the types of data researchers are asked to report will add to transparency in quality of universal screening measures across the field. Large test vendors are more likely to have a diverse sample of students, but including students from a variety of demographic backgrounds is the baseline. Results of the current systematic review indicated that researchers often use universal screening measures created by large test vendors in their studies, so as researchers disaggregate their data, the alignment between results from these studies and the research conducted by test vendors should be further compared.

4.4.2 Practice

Practicing school psychologists can join in efforts to encourage websites like the NCII to increase reporting rigor, but becoming familiar with the measures listed on the website regardless of what is reported will help practitioners to become more critical consumers of universal screening measures. Understanding the local sample of students will help guide screening measure selection, as not all measures are of the same quality. Though less is known about the way universal screening measures function for subgroups of students other than grade level, the brief findings of the six disaggregated studies highlighted previously indicated that some small differences may exist between the performances of students of different racial/ethnic backgrounds, socioeconomic statuses, or disability statuses on screening measures. Practitioners should interpret the results of individual studies with caution. When the students in a particular school or district are like the students sampled in a study, however, findings may generalize to the school's population. Regardless of the measure selected, VanDerHeyden and Witt's (2005) findings indicated that a teacher-driven referral system may be ineffective in some cases. Schools utilizing teacher referrals as their only screening method may wish to consider a systematic measure that represents the needs and demographics of their student body. Practitioners should also take caution when using researcher-created measures that have not been independently validated, even when study participants may be similar to students in their schools.

4.5 Limitations and Future Directions

There were several potential limitations of this study. First, since school psychologists are often key implementers of academic universal screening, school psychology journals were the focus of this systematic review. Eight of the most popular school psychology journals were selected, and a nearly 23-year period was reviewed. Though a wide selection of screening studies was identified, there are other universal screening studies that fit the inclusion criteria for the current study that were published in journals outside of school psychology, within school psychology but in other smaller journals, or outside of the selected years. Yet, a purpose of this study was to allow school psychology researchers to examine the degree to which their work is achieving newly adopted expectations of practice related to advocating for social justice (National Association of School Psychologists 2017). Scholars have also called for researchers to engage in critical self-reflection on the manner in which they conceptualize and engage in research (Sullivan et al. 2022). This study provides a snapshot of the current state of the school psychology literature's contribution to academic universal screening practices. Future studies could extend this work to screening for other areas (e.g., behavior; social emotional functioning) and, by extension, include more journal databases. Even in the current study, changing the inclusion criteria might yield a different set of articles.

This study only included students in grades 1 through 8. Students in prekindergarten or kindergarten were excluded intentionally because screening does not occur as frequently for very young students and the criteria used to assess proficiency vary considerably. High school students were unintentionally excluded. There were no studies on academic universal screening that included high school-aged students in the school psychology literature from 2001 onward. Throughout the screening process, some studies were identified that highlighted behavioral or mental health screenings for high school students (e.g., Dowdy et al. 2016; Kim et al. 2017), but academic universal screening did not have the same emphasis for this demographic. Other studies that may have included an academic screening component may not have required students to complete a separate academic universal screening measure but instead collected academic data like grades or teacher reports (e.g., Margherio et al. 2019). The research in academic universal screening for high school students is limited. On the NCII website (2021), there are only four vendors with universal screening measures validated for high school-aged students: Achieve3000's LevelSet (reading); Iowa Assessments (reading and mathematics); Lexia RAPID Assessment (reading); and STAR (reading and mathematics). This can be compared to the 26 measures available for elementary-aged students. Additional research should be conducted by independent researchers targeting academic universal screening for high school students to determine if the available measures have adequate diagnostic accuracy, include disaggregated data, and are practical in the settings for which they were developed.

Conflicts of Interest

The author declares no conflicts of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available in the Supporting material of this article.