Core Concepts in Pharmacoepidemiology: Multi-Database Distributed Data Networks

Funding: The authors received no specific funding for this work.

ABSTRACT

Multi-database distributed data networks for post-marketing surveillance of drug safety and effectiveness use two main approaches: common data models (CDMs) and common protocols. Networks such as the U.S. Sentinel System, the Observational Health Data Sciences and Informatics (OHDSI) network, and the Data Analysis and Real-World Interrogation Network in Europe (DARWIN-EU) use a CDM approach in which participating databases are translated into a standardized structure so that a single, common analytic program can be used. On the other hand, the common protocol approach involves applying a single common protocol to site-specific data maintained in their native format, with analytic programs tailored to each data source. Some networks, such as the Canadian Network for Observational Drug Effect Studies (CNODES) and the Asian Pharmacoepidemiology Network (AsPEN), use a variety of approaches for multi-database studies. Regardless of the approach, distributed networks support comprehensive pharmacoepidemiologic studies by leveraging large-scale health data. For example, utilization studies can uncover prescribing trends in different jurisdictions and the impact of policy changes on drug use, while safety and effectiveness studies benefit from large, diverse patient populations, leading to increased precision, representativeness, and potential early detection of safety threats. Challenges include varying coding practices and data heterogeneity, which complicate the standardization of evidence and the comparability and generalizability of findings. In this Core Concepts paper, we review the purpose and different types of distributed data networks in pharmacoepidemiology, discuss their advantages and disadvantages, and describe commonly faced challenges and opportunities in conducting research using multi-database networks.

Summary

- Multi-database networks facilitate the conduct of drug utilization, effectiveness, and safety studies, enhancing prescription drug monitoring, facilitating regulatory decisions, and improving health.

- Multi-database networks primarily employ two approaches: common data models (CDMs), which standardize data structure, vocabularies, and analytic code for consistent analysis; and common protocols, which apply a single protocol to site-specific datasets that differ in format and/or structure.

- CDMs streamline analysis by creating uniformity across datasets, enhancing research reproducibility and enabling timely study completion, while common protocol models may allow more flexibility by incorporating site-specific data elements.

- Regardless of the approach, multi-database networks encounter challenges that include varying times to data access and ethics approval, data heterogeneity across jurisdictions, and complexities in standardizing evidence.

- Multi-database networks offer opportunities to increase study size and representativeness, facilitate collaboration, and generate robust evidence needed to inform decision-making.

1 Background and Historical Context

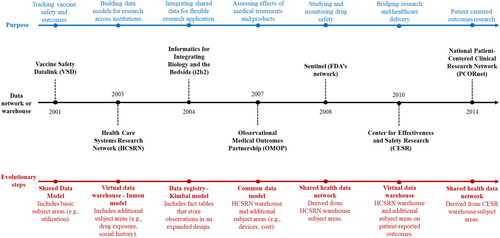

Multi-database distributed data networks play a crucial role in the post-marketing assessment of prescription drug utilization, safety, and effectiveness. Although some distributed data networks had already been established, several emerged in response to the rofecoxib (Vioxx) controversy, in which rofecoxib was voluntarily withdrawn from the market after it was found to be associated with an increased risk of myocardial infarction after 18 months of use [1]. An identified contributing cause of this controversy was a lack of continuous monitoring of accumulating evidence after the drug was brought to market [2], which led to a major reorganization of the Food and Drug Administration's (FDA) post-approval safety surveillance system and, ultimately, to the creation of the FDA's Sentinel Initiative through the FDA Amendments Act of 2007 [3, 4]. This period coincided with the creation of Canada's Drug Safety and Effectiveness Network (DSEN), which launched the Canadian Network for Observational Drug Effect Studies (CNODES) [5, 6]. Other post-marketing distributed data networks have also since been established, including the European Medicines Agency (EMA)-funded Data Analysis and Real-World Interrogation Network in Europe (DARWIN-EU) [7], the Asian Pharmacoepidemiology Network (AsPEN) [8, 9], the Observational Health Data Sciences and Informatics (OHDSI) network [10], the Vaccine monitoring Collaboration for Europe (VAC4EU) [11, 12], the SIGMA consortium [13, 14], the European Pharmacoepidemiology and Pharmacovigilance (EU PE&PV) Research Network [15, 16], the Medical Information Database Network (MID-NET) in Japan [17], and the Korea Institute of Drug Safety and Risk Management (KIDS) [18].

Some of these distributed data networks conduct post-marketing surveillance at the request of regulatory agencies and other government partners to facilitate the generation of real-world evidence for decision makers, while others support universities and research institutions to carry out drug safety and effectiveness research. All data networks facilitate comprehensive pharmacoepidemiologic studies by enabling secure, real-time analysis of diverse, large-scale health data from multiple sources without requiring centralized data aggregation and transfer of patient-level data. Specifically, federated analyses allow multiple datasets to be analyzed quickly and securely while the data remain separate (Figure 1).

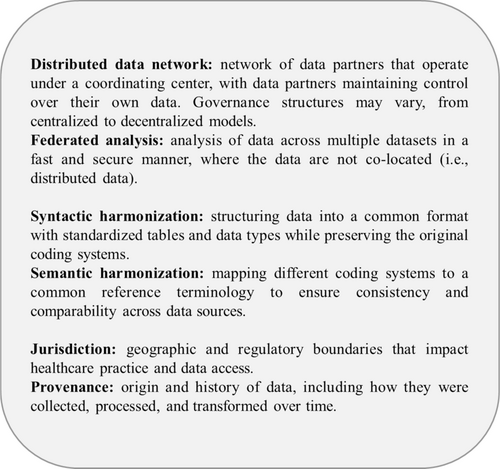
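The federated workflow described above can be illustrated with a minimal Python sketch. It assumes a hypothetical record layout (`exposed`, `event`, `person_years`) and made-up counts; the function names are illustrative and do not correspond to any network's actual tooling. Each site reduces its patient-level rows to aggregate counts, and only those aggregates reach the coordinating center.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteSummary:
    """Aggregate counts only; this is all a site returns to the coordinating center."""
    site: str
    exposed_events: int
    exposed_person_years: float
    comparator_events: int
    comparator_person_years: float


def summarize_site(site, records):
    """Run locally at a data partner. `records` holds patient-level rows
    (hypothetical keys: 'exposed', 'event', 'person_years') that never leave the site."""
    exposed = [r for r in records if r["exposed"]]
    comparator = [r for r in records if not r["exposed"]]
    return SiteSummary(
        site=site,
        exposed_events=sum(r["event"] for r in exposed),
        exposed_person_years=sum(r["person_years"] for r in exposed),
        comparator_events=sum(r["event"] for r in comparator),
        comparator_person_years=sum(r["person_years"] for r in comparator),
    )


def crude_rate_ratio(summaries):
    """Run at the coordinating center using only the returned aggregates."""
    ee = sum(s.exposed_events for s in summaries)
    ep = sum(s.exposed_person_years for s in summaries)
    ce = sum(s.comparator_events for s in summaries)
    cp = sum(s.comparator_person_years for s in summaries)
    return (ee / ep) / (ce / cp)


# Made-up patient-level data held separately at two hypothetical sites.
site_a_records = [
    {"exposed": True, "event": 1, "person_years": 2.0},
    {"exposed": True, "event": 0, "person_years": 2.0},
    {"exposed": False, "event": 1, "person_years": 4.0},
    {"exposed": False, "event": 0, "person_years": 4.0},
]
site_b_records = [
    {"exposed": True, "event": 1, "person_years": 4.0},
    {"exposed": False, "event": 1, "person_years": 8.0},
]

summaries = [
    summarize_site("site_a", site_a_records),
    summarize_site("site_b", site_b_records),
]
rate_ratio = crude_rate_ratio(summaries)
```

Summing crude counts across sites ignores between-site confounding and is used here only to keep the sketch short; in practice, sites more often return adjusted estimates that are then combined by meta-analysis.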

Although these networks share a common purpose, they use different approaches. Some networks share raw data with one central partner, where the data are pooled and analyzed [19-22]; some have implemented a distributed data framework with a common protocol and a common data model (CDM) (e.g., Sentinel, OHDSI, DARWIN-EU), also referred to as “general CDM” [19]; and others use a common protocol framework with site-specific data models (e.g., CNODES) (Table 1). Distributed data network approaches are more common than pooling patient-level data due to legal confidentiality restrictions, particularly when data are required to cross jurisdictional borders. In a CDM-based distributed data framework, participating data partners curate their data into a standard format, allowing for the application of reusable programs developed and provided by a coordinating center. The use of CDMs started in 2001 with the development of the Vaccine Safety Datalink in the United States to investigate the safety of vaccines and subsequently expanded to build data models for research across institutions in 2003 [23]. One such data model is the Health Care Systems Research Network Virtual Data Warehouse (HCSRN-VDW), which incorporated more extensive subject areas including drug exposure, social history, demographics, tumor/specimen, laboratory, death, and vital signs [24]. In 2007, the Observational Medical Outcomes Partnership (OMOP) CDM was developed; it shares subject areas with the HCSRN-VDW but was developed to be more accessible for non-technical users and has additional subject areas [24]. Concurrently, Sentinel's CDM was planned in 2008 using the HCSRN-VDW as a starting point for the purpose of drug safety surveillance [24].

More recent developments include the Center of Effectiveness and Safety Research (CESR) VDW and the National Patient-Centered Clinical Research Network (PCORnet) CDM, which expanded the HCSRN-VDW to include additional subject areas, notably problem lists, patient-reported outcomes, pregnancy records, and personal health records [24] (Figure 2). Some studies use multiple databases by loading only study-specific variables into a CDM for analysis, an approach that may be called ‘CDM with study-specific data’ [19].

| Network | Type | Countries of data partners | Data sources | Funding source |
|---|---|---|---|---|
| AsPEN | Common protocol (supplemented with OMOP CDM for some studies) | Australia, Canada, China, Hong Kong, Japan, Korea, Singapore, Sweden, Taiwan, Thailand, the US | Electronic health records, claims databases | Government funding |
| CNODES | Common protocol (supplemented with Sentinel CDM in some sites) | Canada (7 provinces), the UK (CPRD), the US (MarketScan) | Electronic health records, claims databases | Government funding (DSEN, CADTH) |
| DARWIN-EU | CDM (OMOP) | Belgium, Estonia, Finland, France, Germany, Spain, The Netherlands, UK | Electronic health records, claims databases, national registries | European Union grants, academic collaborations |
| OHDSI | CDM (OMOP) | Network of collaborators across 80 countries in 6 continents | Electronic health records, claims databases, national registries | Government grants, industry collaborations, academic partnerships |
| Sentinel | CDM (Sentinel) | The US | Electronic health records, claims databases, national registries | Government funding (FDA) |

Abbreviations: CADTH, Canadian Agency for Drugs and Technologies in Health; CDM, Common Data Model; DSEN, Drug Safety and Effectiveness Network; OMOP, Observational Medical Outcomes Partnership.

As with CDMs, common protocol frameworks involve the development of protocols by a study team at the coordinating center. However, in the common protocol framework, these protocols are implemented at study sites, which apply site-specific programs to data maintained in their native format. Although the literature commonly refers to this approach as a “common protocol” approach, both this approach and the CDM approach use protocols tailored to the study and may use standardized data analytics. Thus, this approach could be referred to as “site-specific data models” or “local analyses” [19]. One example of a network that uses common protocols is CNODES, established in 2011 by Canada's DSEN [5, 6].

In this Core Concepts paper, we review the purpose and different types of distributed data networks in pharmacoepidemiology, discuss their advantages and disadvantages, and describe commonly faced challenges and opportunities in conducting research using multi-database networks.

2 Purpose of Multi-Database Networks

Multi-database networks present an opportunity to manage and utilize data effectively and efficiently in diverse, complex, and large-scale environments. They provide a flexible, scalable, and resilient approach to managing data in a way that leverages the strengths of various database technologies while mitigating their individual limitations. One of the advantages of distributed data networks is the ability to access large real-world data sources that increase the study size when investigating the utilization, safety, and effectiveness of prescription drugs (Table 2). Although this Core Concepts paper focuses on studies of prescription drugs, multi-database networks also represent powerful tools to study surgical procedures and medical devices [37-39].

| Type of query | Strengths | Limitations | Examples |
|---|---|---|---|
| Utilization: real-world prescribing patterns | | | |
| Utilization: off-label drug use | | | |
| Utilization: impact of policy changes | | | |
| Utilization: health surveillance | | | |
| Safety: signal generation (detection) | | | |
| Safety: signal evaluation (assessment) | | | |
| Effectiveness | | | |

2.1 Trends in Drug and Device Utilization

Data from large datasets and networks are often used for drug utilization studies to assess real-world prescribing practices across several jurisdictions, defined by geographic and regulatory boundaries that impact healthcare practice and data access (also sometimes referred to as provenance) (Figure 1). One study using the FDA's Sentinel System found low utilization of spironolactone in patients with heart failure, despite trial evidence of reduced heart failure hospitalization [25]. Observing these trends in the post-market setting provides the opportunity to identify potential treatment gaps that could be explored further and provide the basis for interventions or policies to improve the use of medicines in the community. Another study using the OHDSI network described patterns of use of second-line antihyperglycemic therapies across eight countries [26]. This study found a higher uptake of such therapies among patients without cardiovascular disease than among those with cardiovascular disease, despite international guidelines acknowledging their cardioprotective effect, underscoring the need to align drug use with guideline recommendations [26]. Another study assessed the use of treatments for attention deficit hyperactivity disorder (ADHD) using population databases from 13 countries and 5 regions and found an increased prevalence of ADHD treatment use with large regional variability, highlighting the need to implement evidence-based guidelines in practice across jurisdictions [40].

Utilization studies from multi-database networks are especially important to detect off-label and inappropriate drug use or treatment patterns in populations that are commonly excluded from large clinical trials. They confer a benefit in assessing such trends across different ethnicities and healthcare jurisdictions. For instance, the CNODES network examined the off-label use of domperidone, a prokinetic drug, among postpartum women with low milk supply [27]. Off-label use of domperidone to promote lactation increased consistently in this population until 2011, after which use declined following advisories issued by Health Canada [27]. Additionally, the Sentinel System is currently investigating the impact of label changes on the utilization of diuretics containing hydrochlorothiazide [41]. These studies thus also allow the examination of the impact of policy changes on drug utilization.

Utilization studies are also an essential component of public health surveillance. The DARWIN-EU system was recently queried to explore treatment patterns in patients with systemic lupus erythematosus, with results indicating common use of hydroxychloroquine and glucocorticoids as first-line therapies and of mycophenolate mofetil and methotrexate as second-line treatments [28].

In addition, multi-database studies are particularly useful for examining utilization trends in different policy environments. One study compared the utilization of Alzheimer's medications in South Korea and Australia, finding important differences due to healthcare policies, with the cost of treatment increasing in Korea and decreasing in Australia every year [42]. In South Korea, strict reimbursement criteria and patent protections limited access to newer drugs, while Australia's universal health coverage ensured broader access through more generous reimbursement policies. These studies highlight the importance of multi-database studies in revealing how different policy environments impact medication use and inform more effective healthcare planning and policy development.

2.2 Drug Safety

Several surveillance systems worldwide use existing electronic health record datasets and administrative claims data to detect adverse drug events, with several systems linking different sources of data [43]. Distributed data networks can enhance drug safety studies by enabling access to larger datasets, leading to greater statistical precision (especially for rare outcomes) and better geographic coverage and representativeness [44]. As with single database studies, however, it is essential that the data, regardless of their provenance, are fit for purpose and complete with respect to all necessary variables. Moreover, multi-database networks allow newly marketed drugs to be studied earlier by accruing enough information on a range of patients faster than single database studies.

Multi-database networks can be deployed for two distinct purposes with respect to drug safety: signal generation (or detection) [29] and signal evaluation (or assessment) [30, 31, 45]. The former typically involves untargeted hypothesis generation and the analysis of a large number of potential outcomes to determine if any of them suggest a concern. Signal generation involving the use of a distributed data approach allows for rapid assessment, and larger study sizes allow for a bigger pool of potential adverse events in a more representative sample [46]. Once a signal has been detected, a robust pharmacoepidemiologic assessment is often performed. Here, a common protocol approach with or without a CDM may be employed. These approaches can be tailored to the research question, population, and setting and can help understand the risk-to-benefit profile and differences in subgroups of the population (Figure 3).

2.3 Effectiveness

Comparative effectiveness studies conducted within multi-database networks serve a crucial purpose in evaluating the relative benefits and risks of different healthcare interventions across diverse patient populations and healthcare settings. In effectiveness studies that use active comparators with an expected small treatment effect, leveraging data from multiple sources, such as electronic health records, claims databases, and registries, can achieve a large study size, enabling the detection of clinically meaningful differences in outcomes. In addition, despite the availability of already marketed treatment options, most prescription drugs are approved on the basis of placebo-controlled trials. While these trials offer important information about the efficacy of these investigational products, they may be less relevant to patients, clinicians, and other knowledge users such as reimbursement agencies who are particularly interested in the comparative effects of different treatment options.

In one study using the OHDSI network, investigators studied the comparative effectiveness of five first-line anti-hypertensive drugs in reducing cardiovascular outcomes as such evidence is lacking from clinical trials [35]. The study found comparable effectiveness for most drugs, with thiazide-like diuretics demonstrating superiority over angiotensin-converting enzyme inhibitors and non-dihydropyridine calcium channel blockers associated with less favorable outcomes [35]. Such a study allows for a global assessment of the effectiveness of approved drugs and evaluation of the applicability of evidence-based guidelines in clinical practice. Another study assessed the effectiveness of COVID-19 vaccines in preventing long COVID symptoms using the OMOP CDM across 4 European countries, with findings suggesting a reduced risk among vaccinated individuals [36]. This international assessment highlights the importance of multi-database networks in addressing urgent research questions, such as in a global pandemic, in a timely manner.

3 Types of Networks

3.1 Distributed Data Networks With Common Data Models

In distributed data networks that use general CDMs, data partners transform their native data into a standardized format, structure, and terminology while allowing for the flexibility of adding data that may only be available to some partners [48]. A detailed common protocol and a single analytic program are developed by the coordinating center and are subsequently implemented by each of the data partners, with summary datasets or model outputs returned to the coordinating center for synthesis and meta-analysis. Various CDMs are used in different research networks. For example, the CDM used by Sentinel is an organizing CDM that employs syntactic harmonization: it organizes the raw data into standardized data structures consisting of separate tables, each holding a specific type of data such as demographics or diagnoses, without any data transformation [49]. Partners can query these tables to generate files providing comprehensive medical histories [43]. The CDM underlying the FDA's Sentinel System is also used for NIH studies [50]. The Sentinel CDM has since been adapted to Canadian data by CNODES, allowing for international collaborations between the two networks.

Other networks use CDMs that employ both syntactic and semantic harmonization. The OMOP CDM, used by OHDSI and DARWIN-EU, standardizes data into a common structure in addition to translating native medical terminologies into a single standard terminology (semantic harmonization). The standardized data sets are designed to promote interoperability across various healthcare systems globally [49]. The transformation process to the OMOP CDM, which preserves both the source codes and the standardized ones, consists of six steps, each of which may take 4 to 115 days to implement, with the greatest time spent on mapping vocabulary terms and performing quality assessment [51]. CDMs such as the Sentinel and OMOP CDMs emphasize global interoperability, allowing for cross-country research collaborations while ensuring data privacy through the sharing of aggregated results only [52, 53]. Other CDMs have a more targeted clinical focus, such as the NorPreSS initiative, which standardizes Nordic health data for pregnancy drug safety studies [54], and the ConcePTION Network [55], which was initially developed for medication safety during pregnancy and breastfeeding in the European Union, although it has been used in non-pregnancy settings as well. AsPEN is another researcher-driven network that has used various approaches, including the OMOP CDM, the common protocol approach, and a hybrid modified standardized analytic program approach. Hunt et al. compared two CDMs, OMOP (a mapping CDM) and ConcePTION (an organizing CDM), and found differences in the estimated risk of cardiovascular outcomes in patients taking direct oral anticoagulants or vitamin K antagonists [56]. Such varying results could lead to potentially different regulatory decisions, underscoring the need for further research comparing approaches to multi-database studies through common protocols with or without CDMs and different CDMs.
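The difference between syntactic and semantic harmonization described above can be made concrete with a short Python sketch. The field names, the lookup table, and the placeholder `STD:acute_mi` are assumptions made for illustration; they are not actual Sentinel or OMOP table definitions or concept identifiers. An organizing step renames native fields into a shared structure and leaves codes untouched, whereas a mapping step additionally attaches a standard concept while preserving the source code.

```python
# Hypothetical lookup; "STD:acute_mi" is a placeholder, not a real OMOP concept identifier.
SOURCE_TO_STANDARD = {
    ("ICD9CM", "410"): "STD:acute_mi",  # ICD-9-CM 410: acute myocardial infarction
    ("ICD10", "I21"): "STD:acute_mi",   # ICD-10 I21: acute myocardial infarction
}


def organize(row, field_map):
    """Syntactic harmonization: rename native fields to the common structure.
    The codes themselves are not transformed."""
    return {common: row[native] for common, native in field_map.items()}


def map_to_standard(row):
    """Semantic harmonization: add a standard concept and keep the source code."""
    key = (row["code_system"], row["source_code"])
    return {**row, "standard_concept": SOURCE_TO_STANDARD.get(key)}


# Two hypothetical sites with different native layouts and coding systems.
native_a = {"pid": 1, "dx": "410", "dx_sys": "ICD9CM"}
native_b = {"patient": 7, "diag_code": "I21", "coding": "ICD10"}

FIELDS_A = {"person_id": "pid", "source_code": "dx", "code_system": "dx_sys"}
FIELDS_B = {"person_id": "patient", "source_code": "diag_code", "code_system": "coding"}

row_a = map_to_standard(organize(native_a, FIELDS_A))
row_b = map_to_standard(organize(native_b, FIELDS_B))
```

After the organizing step alone, an analytic program would still need to know both coding systems; after the mapping step, a single concept definition selects the event at both sites, at the cost of depending on the quality of the vocabulary mapping.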

CDMs require substantial investment in the upfront data infrastructure and regular performance checks but allow for minimal site-specific programming, faster analyses, and greater reproducibility across sites. To adopt a CDM, it is necessary for data custodians to either transform their data into the CDM or provide access to investigators to do the transformation prior to the start of conducting studies. This has not been feasible at all sites given the different data custodian and research ethics requirements across jurisdictions. Balancing the benefits of standardization with the need for tailored analyses is crucial to ensure that research using CDMs effectively captures the complexity of real-world data.

3.2 Distributed Data Networks With Common Protocols and Site-Specific Data Models

In the common protocol approach with site-specific data models, participating centers conduct the data management and analyses in their native database formats while following a common protocol rather than applying reusable programs to standardized data. As with studies using CDMs and standardized analytics, site-specific results are often collated and meta-analyzed. However, when standardization is not used, harmonization occurs through the implementation of the common protocol via multiple analysts. Although the use of a common protocol greatly reduces heterogeneity in design and analysis compared to independently conducted observational studies, some adaptation of the protocol is often required by participating sites to account for differences in data capture, structure, and coding systems used.
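As a minimal sketch of how site-specific results might be collated and meta-analyzed, the following Python example applies inverse-variance fixed-effect pooling to log hazard ratios. The site estimates and standard errors are made up, and fixed-effect pooling is only one of several possible choices; networks may instead pre-specify random-effects models.

```python
import math


def pool_fixed_effect(log_estimates, standard_errors):
    """Inverse-variance fixed-effect pooling of site-level log estimates."""
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical hazard ratios and standard errors of the log hazard ratio from three sites.
site_hrs = [1.20, 1.35, 1.10]
site_ses = [0.10, 0.20, 0.10]

pooled_log, pooled_se = pool_fixed_effect([math.log(hr) for hr in site_hrs], site_ses)
pooled_hr = math.exp(pooled_log)
ci_95 = (
    math.exp(pooled_log - 1.96 * pooled_se),
    math.exp(pooled_log + 1.96 * pooled_se),
)
```

Because each site is weighted by the inverse of its variance, sites with more precise estimates (here, the two with a standard error of 0.10) contribute more to the pooled result than the less precise site.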

CNODES is an extensive network with data from Canada, the United Kingdom, and the United States [57] that uses a common protocol approach. After receiving a query from a government partner, the CNODES Coordinating Center assigns a team who develops a protocol that is implemented by investigators and analysts at collaborating sites, with analyses returned to the coordinating center.

Common protocol networks have their strengths and limitations. They require minimal upfront investment in data infrastructure over what is already in place and allow for tailored, database-specific studies. However, the site-specific programming requires substantial effort and raises potential concerns regarding reproducibility across sites. One challenge in utilizing common protocols with site-specific data is the variability in datasets stemming from differing coding systems, data curation practices, and the execution of site-specific analyses [58]. In some cases, the efficiency of a common protocol can be increased by using standardized analytics, which may involve data standardized into a CDM [48]. When AsPEN was under development in 2013, the investigators conducted a feasibility study that examined the association between antipsychotic use and the onset of diabetes with the intent of using a common protocol model, which required participating countries to write and execute code on their own data sets [9]. This approach proved to be cumbersome and demonstrated high variability in coding and programming ability across sites, leading the investigators to shift to a CDM approach in which analytic code was distributed to data partners [9].

The optimal choice of CDM or common protocol with study-specific data models may come down to the research question being posed, the proposed study design, and the available infrastructure. For example, many drug utilization studies and safety and effectiveness questions that utilize traditional designs such as the active comparator new user design can easily be addressed using CDMs, allowing for more rapid evidence generation through the application of reusable programs or existing tools. One study replicated two CNODES protocol studies using Sentinel's CDM reusable programs and showed that results from preprogrammed analytic tools are comparable to those from tailored protocols and can robustly assess post-market drug safety [59]. However, using a common protocol implemented on different databases, or an organizing CDM that does not mandate direct mapping of data into standardized terminologies, gives rise to concerns regarding differences in the specificity, sensitivity, and positive and negative predictive values of codes. These differences can be exacerbated when employing data from different sources, such as general practitioners, hospitals, claims, or pharmacists.
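To illustrate how the validity of a code-based outcome definition can differ between data sources, the following Python sketch computes sensitivity, specificity, and predictive values from a 2x2 validation table. The counts and the two data source labels are hypothetical and chosen only to show the calculation.

```python
def code_validity(tp, fp, fn, tn):
    """Validity metrics for a code-based definition against a reference standard.
    tp/fp/fn/tn are true-positive, false-positive, false-negative, true-negative counts."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Hypothetical validation results for the same outcome code in two data sources.
hospital = code_validity(tp=90, fp=10, fn=10, tn=890)
general_practice = code_validity(tp=60, fp=40, fn=40, tn=860)
```

In this made-up example, the same code identifies 90% of true cases in the hospital data but only 60% in the general practice data, so an identical protocol definition would capture different proportions of true events at each site.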

4 Challenges in Interpreting Evidence From Multi-Database Networks

Interpreting evidence from multi-database networks, regardless of the approach used, presents numerous challenges, particularly in understanding and handling outlier results in jurisdictions, characterizing heterogeneity, and effectively communicating results. Outliers in the data can obscure true findings, requiring careful analysis to determine if they are data artefacts or genuine clinical differences. Identifying outliers involves statistical techniques and contextual knowledge to discern errors from actual variations. Researchers must then decide whether to exclude, adjust, or further investigate these outliers to avoid biased conclusions. Where possible, such decisions (or the processes used to make such decisions) should be pre-specified.
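One statistical technique that could support such decisions is a leave-one-out sensitivity analysis, sketched below in Python. The site labels and log relative risks are hypothetical, and the inverse-variance pooling used here is an assumption for illustration rather than a method prescribed by any particular network.

```python
def pool(log_estimates, standard_errors):
    """Inverse-variance weighted mean of site-level log estimates."""
    weights = [1.0 / se ** 2 for se in standard_errors]
    return sum(w * y for w, y in zip(weights, log_estimates)) / sum(weights)


def leave_one_out(sites, log_estimates, standard_errors):
    """Return the full pooled estimate and, for each site, the change in the
    pooled estimate when that site is omitted."""
    full = pool(log_estimates, standard_errors)
    shifts = {}
    for i, site in enumerate(sites):
        rest_y = log_estimates[:i] + log_estimates[i + 1:]
        rest_se = standard_errors[:i] + standard_errors[i + 1:]
        shifts[site] = pool(rest_y, rest_se) - full
    return full, shifts


# Hypothetical log relative risks from four sites; site D differs from the others.
sites = ["A", "B", "C", "D"]
log_rrs = [0.10, 0.12, 0.08, 0.60]
ses = [0.10, 0.10, 0.10, 0.10]

full_estimate, shifts = leave_one_out(sites, log_rrs, ses)
most_influential = max(shifts, key=lambda s: abs(shifts[s]))
```

A large shift flags a site for further investigation; it does not by itself show whether the difference is a data artefact or a genuine clinical difference.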

Characterizing heterogeneity is another substantial challenge, as variations can arise from differences in healthcare practices across jurisdictions. For instance, in one AsPEN study, the Korean dataset showed a unique negative association for olanzapine and hyperglycemia, potentially influenced by stigma around mental health issues leading to delayed treatment seeking [60]. One CNODES study found a higher relative risk of hospitalization for community-acquired pneumonia from proton-pump inhibitors in Nova Scotia compared to other sites, likely due to different formulary restrictions [61]. Heterogeneity can also stem from methodological differences in how analyses are conducted at various sites, specifically with the common protocol approach, where it is possible that different analysts may interpret the common protocol in different ways. This source of diversity should be accounted for during protocol development, ensuring that analytical approaches are clearly articulated. It is therefore important to acknowledge the expected sources of heterogeneity from different databases in one network prior to analyzing data and when comparing results, essentially adopting a “triangulation” mentality that enables us to interpret and reconcile varying results more effectively [62]. Gini et al. have proposed identifying nine dimensions of each database: organization accessing the data source, data originator, prompt, inclusion of population, content, data dictionary, time span, healthcare system and culture, and data quality [63].
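Between-site heterogeneity is commonly quantified with Cochran's Q and the I² statistic. The Python sketch below computes both from site-level log estimates and their standard errors; the input values are hypothetical, and these statistics describe how much the estimates vary without identifying the reasons for the variation.

```python
def cochran_q_i2(log_estimates, standard_errors):
    """Cochran's Q and I^2 for site-level log estimates.
    I^2 = max(0, (Q - df) / Q), the share of variation beyond chance."""
    weights = [1.0 / se ** 2 for se in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, log_estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_estimates))
    df = len(log_estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2


# Hypothetical example: two concordant sites and one discordant site.
q_mixed, i2_mixed = cochran_q_i2([0.0, 0.0, 1.0], [0.1, 0.1, 0.1])

# Hypothetical example: three sites reporting the same estimate.
q_same, i2_same = cochran_q_i2([0.2, 0.2, 0.2], [0.1, 0.2, 0.3])
```

With few sites, Q has low power and I² is imprecise, so these statistics are best read alongside the contextual knowledge about each database discussed above.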

Additionally, time to data access and research ethics approval represents a key challenge for both CDM and common protocol studies when conducting multi-jurisdictional studies. Because data from individual sites may become available on different timetables, networks may need to pre-specify whether or not they will include a given site's data, based on the informational needs of the government requester for regulatory or other decision-making [58].

5 Opportunities to Improve Research With Multi-Database Networks

Multi-database studies offer numerous advantages and opportunities for improved post-marketing surveillance, particularly in fostering efficient coordination and communication among investigators, analysts, and decision-makers. This communication includes direct discussions between researchers and policy-makers to ensure that the proposed question is answerable using pharmacoepidemiologic methods and that the proposed approach will produce policy-relevant results. There is also a move towards ‘networks of networks’, where international collaborations between networks would allow for the early assessment of marketed drugs (including those used to treat rare diseases) and the examination of rare but serious side effects. For instance, one study conducted a pooled analysis of results from networks and databases in Canada, Denmark, Germany, Spain, and the United Kingdom to assess the risk of bleeding in patients with atrial fibrillation taking direct oral anticoagulants compared with vitamin K antagonists [30]. This pooling resulted in a large study size and enough precision to assess this important clinical question across different countries and specific anticoagulants [30].

6 Conclusions

Multi-database distributed data networks are essential for the post-marketing surveillance of drug utilization, safety, and effectiveness. These networks utilize diverse methodologic frameworks, including CDMs and protocol-based approaches applied to site-specific data models, to enable comprehensive and timely analysis of health data from multiple sources. Despite challenges in standardization and heterogeneity, these networks offer substantial opportunities for improving drug safety monitoring, utilization studies, and comparative effectiveness research. By enhancing data sharing, methodological rigor, and cross-country collaborations, multi-database networks play a pivotal role in generating robust real-world evidence for informed healthcare decision-making.

6.1 Plain Language Summary

Researchers use networks of large healthcare databases from different regions or healthcare systems to study how safe and effective medications are in real-world settings. These networks generally rely on one of two main approaches. The first, used by networks such as the U.S. Sentinel System, the Observational Health Data Sciences and Informatics (OHDSI) network, and the Data Analysis and Real-World Interrogation Network in Europe (DARWIN-EU), translates all participating databases into a shared structure so that a common analytical program can be used across them. The second approach keeps the data in their original format but applies the same research plan to each database, using tailored analysis programs for each one. Some networks, such as the Canadian Network for Observational Drug Effect Studies (CNODES) and the Asian Pharmacoepidemiology Network (AsPEN), use a mix of these approaches. These distributed data networks enable the study of medication use, safety, and effectiveness in large and diverse populations, uncover prescribing trends, and assess the impact of policy changes. They help researchers detect potential safety concerns earlier and better understand how treatments perform in real-world settings. However, differences in how data are recorded and structured can make it challenging to compare results across databases. This paper reviews how distributed data networks operate, outlines their benefits and limitations, and highlights key challenges and opportunities in conducting research across multiple data sources.

Author Contributions

R.H. wrote and revised the manuscript. K.B.F., M.W.C., N.P., N.B., X.L., J.M., R.W.P., and D.P.A. reviewed the manuscript and provided important intellectual content. All authors were involved in outlining the contents of the manuscript. K.B.F. is the guarantor.

Acknowledgments

R.H. was supported in part by the doctoral award from the Fonds de recherche du Quebec–santé (FRQS; Quebec Foundation for Research—Health) and the Eileen and Louis Dubrovsky Graduate Fellowship Award of the Lady Davis Institute of the Jewish General Hospital. K.B.F. is supported by a William Dawson Scholar award from McGill University. R.W.P. holds the Albert Boehringer I Chair in Pharmacoepidemiology at McGill University.

Disclosure

D.P.A.'s department has received grants from Amgen, Chiesi-Taylor, Gilead, Lilly, Janssen, Novartis, and UCB Biopharma. His research group has received consultancy fees from UCB Biopharma paid to the University. Additionally, Janssen has funded or supported training programmes organised by D.P.A.'s department. D.P.A. also serves on the Board of the EHDEN Foundation. R.H. is employed as a consultant in health economics and outcomes research at STATLOG Inc., unrelated to this work. R.W.P. has received consulting fees from Biogen, Merck, and Pfizer, unrelated to this work. X.L. received educational and investigator-initiated research funds from Janssen and Pfizer; internal funding from the University of Hong Kong; and consultancy fees from Pfizer, Merck Sharp & Dohme, Open Health, and The Office of Health Economics; she is also the former non-executive director of ADAMS Limited Hong Kong; all outside the submitted work. All other authors have no relationships to disclose.