Envisioning the Future of AI in Urodynamics: Exploratory Interviews With Experts

ABSTRACT

Background

Urodynamics monitors various parameters while the urinary bladder fills and empties, to diagnose functional or anatomic disorders of the lower urinary tract. It is an invasive and complex test with technical challenges, and it needs rigorous quality assessment and training of clinicians to avoid misdiagnosis. Applying AI to urodynamic pattern recognition and noisy data signals seems promising.

Aim

To understand desirable and appropriate applications, this study aims to explore the envisioned future of AI in urodynamics according to experts in AI and/or urodynamics.

Method

Ten semi-structured interviews were conducted to explore expectations, trust and possibilities of AI in urodynamics. Content analysis with an inductive approach was performed on all data.

Results

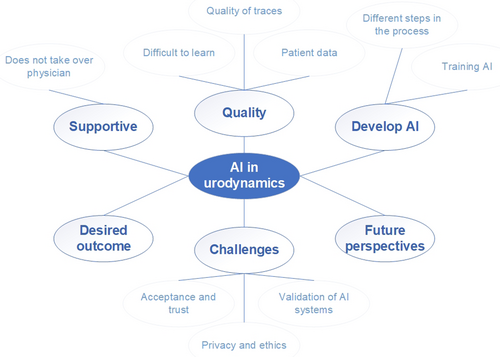

The analysis resulted in seven overarching themes: difficulties with urodynamics, quality of urodynamics, AI will be supportive, development and training of AI systems, desirable outcomes, challenges, and envisioning the future of AI in urodynamics.

Discussion and Conclusion

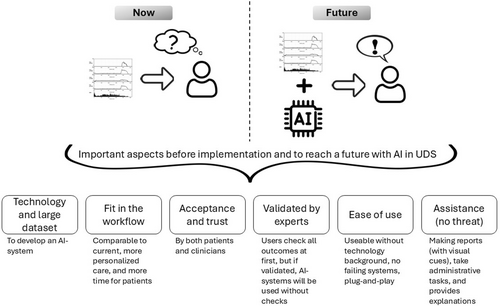

In the vision of experts, urodynamics practices will change with the introduction of AI. In the beginning, clinicians will probably want to check AI-made outcomes of UDS tests to gain trust in the system. After experiencing the value of these systems, clinicians might let the system independently provide suggested UDS analyses, and they will be able to spend more of their time on their patients.

1 Introduction

Urodynamics (UDS) uses a collection of measurements to test the functionality of the bladder, urethral, and pelvic floor muscles based on fluid mechanics, electrophysiology, and sensors [1]. To diagnose functional or anatomic disorders of the lower urinary tract, UDS monitors various parameters while the bladder fills and empties [2]. UDS is a complex test with technical challenges, and it needs rigorous quality assessment to avoid misdiagnosis [3]. Clinicians who interpret UDS require sufficient training and expertise, and interobserver agreement in UDS varies [4]. Automated approaches may help in UDS analysis [5]. Artificial intelligence (AI) is used increasingly in healthcare at large [6, 7] and has been increasingly applied in urology [8-11]. According to Chen et al. (2019) [8], the application of AI in urology can achieve high accuracy, provide thorough and reliable predictions of disease diagnosis and outcomes, and automate manually intensive tasks. Disadvantages include limited external validation and generalizability, and obstacles to implementation in clinical settings [8].

The application of AI algorithms to UDS is also increasingly being researched [1]. Because UDS relies on pattern recognition and noisy data signals, applying AI seems promising [5]. As Gammie et al. (2023) [5] argue, advantages of AI in UDS include good performance in noisy environments, pattern recognition in unstructured environments, extraction of influential variables, and potential reduction of variability between clinicians. AI-assisted UDS analysis has the potential to improve the detection of patterns that are hard to recognize visually, and to increase consistency and objectivity. Objectivity and consistency in particular are difficult to achieve with conventional UDS because of variation in the skills of operators and interpreters [5].

A review by Liu et al. (2024) [1] summarized and selected the most appropriate algorithms for different tasks in UDS. They showed that some algorithms can improve the interpretation of changes in urination sounds, and that algorithms are mostly applied to diagnosing urinary tract dysfunction, where they sometimes achieve accuracy comparable to that of clinicians [1]. Overall, AI seems promising for enhancing person-centred care in examination, diagnostics, and prognostics by detecting new patterns of disease or treatment response [1, 8]. However, there is a long way to go towards full clinical applicability, acceptance, and use, and currently it only seems possible to use labelled data sets to analyse specific diagnostic questions [5]. Furthermore, there is little research on adoption in real-life clinical settings. To understand the desirable and appropriate applications in actual UDS, this study aims to explore the envisioned future of AI in UDS according to experts in AI and/or UDS.

2 Materials and Method

2.1 Settings and Recruitment

As a first exploration, we chose to focus on experts in the field of AI and/or UDS. The aim of the interviews was to explore their expectations, trust, and possibilities regarding AI in UDS. Participants, all knowledgeable in UDS, were recruited by a urologist from the Netherlands. They did not need to be knowledgeable about AI; however, all had an interest in AI. Eight experts were invited and agreed to participate, and two additional experts were recruited through snowball sampling. All experts had a high educational level; six had a full medical background in urology, and four had a technical and/or research background with experience in the medical field of urology/UDS specifically. Nine experts were male and one was female. Five experts were located in the Netherlands, two in the United Kingdom, one in Italy, one in Belgium, and one in the United States of America.

2.2 Data Collection

Ten semi-structured interviews were conducted by one researcher with a background in technology and medicine but no expertise in UDS. The interview protocol was developed by the researchers in collaboration with a urologist (Supporting Information 1). The interviews evaluated whether experts with different backgrounds see value in the development and implementation of AI in UDS. They covered automation in UDS, implementation, expectations, benefits and challenges, and future perspectives. Based on the conversations, additional questions were added to the protocol to discuss themes raised by the experts. All interviews were conducted online using Microsoft Teams and lasted 30 min. The researcher took extensive notes. A member check was performed by sharing these notes with the experts and inviting them to provide feedback.

2.3 Data Analysis

We applied content analysis with an inductive approach to all data, observing various aspects and combining them into overarching themes [12]. Open coding was used to identify relevant themes, starting with the data of two experts. The researcher who conducted the interviews also performed the analysis and discussed the themes within the team. A coding matrix comprising all themes and sub-themes was developed (Figure 1) and used to code the remaining data. Finally, the researchers discussed the coding within the team to finalize the findings. The software package Atlas.ti 23 was used for the data analysis.

3 Results

Based on the data analysis, we defined six themes: difficulties with UDS, AI will be supportive, development and training of AI systems, desirable outcomes, challenges, and envisioning the future of AI in UDS (Table 1).

| Theme | Quotes from experts |
|---|---|
| Difficulties with UDS | |
| AI will be supportive | |
| Development and training of AI systems | |
| Desirable outcomes | |
| Challenges: acceptance, trust, ethics and more | |
| Envisioning the future of AI in UDS | |

3.1 Difficulties With Urodynamics

Experts sometimes described recognizing UDS patterns and performing the tests as difficult. In the analysis, different physicians may reach different conclusions, and most experts agreed that AI could potentially help overcome this challenge. It might aid interpretation and increase the information a physician obtains from traces. AI might also reduce the need for specialized UDS training. However, the data collected during testing can vary in quality, in both the numerical values and the curves. Another difficulty in UDS is performing tests in a uniform manner and collecting reasonably clear patterns that an AI system can use. Identifying ‘good’ and ‘bad’ traces is essential, and a method is needed to decide whether the AI output is acceptable. At least in the beginning, clinicians will probably check the traces themselves to take responsibility. The need to validate AI outcomes might lessen once the system has proven itself. Quality is also connected to good practice. To develop AI, it seems necessary to pursue generalizability and to understand how to obtain more uniform data. According to the experts, a common goal of standardization shared among different organisations will push initiatives forward.

3.2 AI Will Be Supportive

According to the experts, AI systems are tools to help personnel and will not take over their jobs. AI could bring different physicians onto the same page and provide more information about ‘grey zones’ in analysing patterns. Because they see AI as a supportive tool, most experts do not expect the AI system to cause anxiety. They expect AI to be a partner in diagnostics and treatment, and thereby to improve patient care and patients' lives. Such a supportive tool would free up ‘space’ for other tasks, ideally fewer administrative and more patient-related ones. To be supportive, the AI should first provide clear reports of its findings, and second link these findings to patients' symptoms. To obtain a more reliable interpretation of UDS, it is crucial to include information about patients and their symptoms. A report should preferably show the curves with explanations to make it easier to use. Most experts did not want information about technical details, but some mentioned that knowing details of the technical performance might help to detect flaws and to recognise when the AI malfunctions.

3.3 Developing and Training of AI Systems

AI must be trained to handle all UDS and patient data, which requires large data sets. UDS data combine numerical and visual data. Traces are classified as ‘good’ or ‘bad’, and both types are needed to train the AI to recognise each. A method to score the quality of traces is currently available [13] and should become part of the AI system. Combining the experts' views on the development and training of AI, it will be necessary that: 1) the AI is validated, distinguishes normal from abnormal, and uses pattern recognition and trace quality criteria; 2) the AI provides a likely diagnosis based on UDS traces that have passed a quality check; 3) the AI uses patient information to correlate UDS findings with other patient data, such as symptoms, images, laboratory tests, voiding diaries, or medical records; and 4) the AI assists in discussions about diagnosis and treatment towards personalised care.

3.4 Desirable Outcomes

The experts described several desirable outcomes. First, AI systems will be useful if they produce clear reports that relate findings to patient complaints. Every step needs to be explainable, and the findings need to match the patients’ symptoms. As part of the report, it would be desirable to have ‘visual cues’ attached to traces, such as arrows or highlights, that clinicians can use to show and explain findings to patients. Second, AI systems will automate the analysis process. Most experts expect that such systems will not yet include a treatment plan, but it would be valuable if this were added in the future. Third, AI systems will learn to detect more than a human can, combining a large number of findings and searching for new relationships between them. Fourth, AI systems will improve patient care, and clinicians will have more time to spend on care instead of administrative tasks. Last, explainable AI (XAI) will improve transparency and interpretability. XAI should not focus on the mathematics but should make the AI ‘clinically understandable’. XAI assists in detecting flaws and provides insight for discussions among colleagues with different expertise.

3.5 Challenges: Acceptance, Trust, Ethics and More

Acceptance by clinicians probably follows from trust in the system. Trust is often questioned because of biases in the systems and a lack of knowledge about exactly what goes in and what comes out. Each user might initially ‘test’ the system and check the results often. Most important is knowing whether the system works and whether it will keep working. The system also has to detect rare clinical manifestations. XAI could help with acceptance, because it lets the clinician ‘look under the hood’ to see how an outcome is reached. Clinicians hesitate to hand over tasks to others (human or machine) and trust them unless the task appears to be done much as they would have done it themselves. Furthermore, an easy-to-use interface is crucial for proper integration in clinical settings. Most experts believe that patients will not feel unsafe because of AI; they will trust that the system is validated and that a clinician knows how to interpret the outcome.

Other challenges include handling data ethics and patient consent across multiple centres. Who owns the data, and how should the nurses and physicians who work with or acquire the data be trained? Once the AI is validated and becomes available, it is crucial to integrate it into the workflow as a ‘normal’ tool within the UDS toolkit. Existing hardware infrastructure in organisations and costs are also challenging, because it is often difficult to verify that the benefits justify the investment.

3.6 Envisioning the Future of AI in Urodynamics

In the envisioned future, the machine will suggest a diagnosis and treatment plan. It will explain ‘why’, based on evidence from clinical research. AI will help to find more connections in the data than a human could. The dream is a supportive system, ready and easy to use, so that clinicians no longer have to spend time and energy on tasks that do not need to be done by a clinician, and gain more room for valuable clinician-patient contact. Clinicians' workflow would change for the better, while contact with patients would always remain. Overall, AI in UDS will change the diagnostic process: it will automate certain tasks, incorporate large data sets, and enhance personalised medicine. The patient journey will become faster and more efficient while remaining human-centred.

4 Discussion

With this study, we aimed to understand the desirable and appropriate applications of AI in UDS. Therefore, this study explored the current status and the envisioned future of AI in UDS according to experts (Figure 2). In the vision of experts, UDS practices will change with the introduction of AI. AI systems are seen as supportive tools in acquiring and analysing UDS, and they will not replace human urologists. To be accepted, an AI system should be verified by experts or be verifiable during use, should provide (visual) reports that enhance understanding for clinicians and patients, and should be easy to use. At first, clinicians will probably want to check AI-made outcomes to understand and trust the system. In the future, the system will independently provide UDS analyses and perform tasks that currently take considerable time but are not related to care provision. In this way, clinicians will have more time to spend with their patients.

4.1 Reflection on Literature

As Chen et al. (2019) [8] argue, AI systems can support clinicians with early, accurate, and individualized decision-making. Most algorithms currently being developed for UDS focus on improving the interpretation of UDS [1]. The analysis and interpretation of UDS test data is a first step, but the experts also see great value in adding unstructured data, such as patient information. An AI system that includes both UDS test data and patient data might be harder to develop, and combining these different data types might turn it into a black box, but it may be necessary to reach optimal results and personalized decision-making. In addition, clinicians may find the outcome provided by the AI system hard to understand. XAI is thus seen as helpful in enhancing understandability. It can provide insight into the algorithm, help in detecting flaws, and provide food for discussion with others. Knowing which test and patient data types serve as input to an AI system might also be a useful part of XAI. The XAI should provide a narrative and visualizations to explain the ‘why’ behind a decision. Although visualization tools such as SHAP, LIME, or Grad-CAM can be applied [14-16], black-boxing can still hinder decision-making, because not only the technology but also its embedding in care practices and the associated changes in decision-making remain opaque [17]. As the experts explained, it may not be necessary to understand the actual algorithm, but it is necessary to understand how the AI reaches decisions and to receive explanations or visualizations that are clinically relevant and useful in contact with patients. Following research on human-centered XAI, an ethical XAI framework that enhances, among other things, the understandability of decision-making processes could help define what is needed for explainability in healthcare [18].

Earlier research on algorithm validation concluded that there is a lack of external validation of results across datasets [8]. The experts argued that it might be necessary not only to use one large data set but also to obtain and use data from multiple centres. Earlier studies similarly argue for large data sets and multi-centre data to develop and implement AI with acceptable performance levels and the potential to become applicable to all populations and lower urinary tract symptoms [5, 6, 19, 20]. According to Hobbs (2022) [6], it is necessary to compare interrater performance between AI systems and clinicians, but not all experts in our study agreed with this view. Validation is certainly necessary, but it can be achieved in different ways; these experts envision a future in which AI is actively used in practice, checked by clinicians at first to validate it and build trust, and eventually allowed to act autonomously.

Besides validation, several ethical topics were discussed, such as responsibility, confidentiality, and privacy, in line with recent literature on AI and urodynamic data [5]. Other critical topics in AI research are bias and generalizability. Whereas earlier researchers often focused on how algorithms mirror human bias [5, 21], the experts in our study focused more on generalizability. UDS data raise several generalizability issues, such as different clinicians performing the tests and different procedures across centres. Because clinicians' skill levels differ, both the data and their analysis vary widely in quality. This could improve in the envisioned future of AI in UDS, because the experts expect AI to provide a quality check on obtained test data based on current quality guidelines. This would improve not only the quality of tests but also the quality of analysis and interpretation.

4.2 Strengths, Limitations, and Recommendations

A main strength of this study is the inclusion of experts with varied expertise who work in different clinical centres across Europe and the United States. Furthermore, because the interviewer had little knowledge of UDS, the interviews could be approached with an open view. A possible limitation is that most experts had no expertise in AI, which may have resulted in less realistic visions of the future. However, the purpose of this study was to map ideas about AI and UDS and what would be beneficial for real-life care practices. The semi-structured format of the conversations allowed this aim to be reached, and participants had the opportunity to add any topic they considered relevant.

We recommend involving AI engineers and clinicians in future studies. Futurist Roy Amara argued that the potential of new technologies is overestimated in the short term but underestimated in the long term [22]. Therefore, future research could take the visions presented in this study into account during development and implementation, and expand them with the views of technical and clinical experts after AI has been introduced and used in actual UDS practice.

5 Conclusion

UDS is a complex test with technical challenges, and it needs rigorous quality assessment to avoid misdiagnosis. AI algorithms are being developed for various purposes, with varying results, to assist with UDS. However, the desirable and appropriate applications of AI in UDS remain unknown. In the vision of experts, UDS practices will change with the introduction of AI, but AI will mainly assist and will not replace clinicians. In the beginning, clinicians will probably want to check AI-made outcomes of UDS tests to gain trust. After experiencing the value of these systems, clinicians might let the system independently provide suggested UDS analyses, and they will be able to devote more of their time to their patients.

Author Contributions

Catharina Margaretha van Leersum and Kevin Rademakers contributed to the design and preparation of the study. Catharina Margaretha van Leersum conducted the interviews and analysed the data. A member check involving all experts took place after a first draft of the findings had been developed. Catharina Margaretha van Leersum constructed the final full manuscript. All authors commented on the manuscript and approved the final version for submission.

Acknowledgments

The authors would like to thank all experts for collaboration during the interviews and providing valuable insight.

Ethics Statement

The authors have nothing to report.

Consent

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

All relevant data are in the manuscript and its supporting materials. The datasets that support the findings and conclusion of this study are available from the corresponding author on reasonable request. The data are not publicly available due to privacy and/or ethical restrictions.