Assessing the utility of computer vision for age determination of Gulf Menhaden

Abstract

Objective

In this work, we assess the potential of computer vision techniques for estimating the age of Gulf Menhaden Brevoortia patronus from images of their scales. Scales are the primary structure used for the age determination of Gulf Menhaden, and the ageing process can be labor intensive. Gulf Menhaden supports the second-largest fishery by weight in the United States, with average annual landings from 2018 to 2022 of 449,540 metric tons, and the stock is assessed with age-structured models that require information about the age structure of the catch and the stock.

Methods

We used convolutional neural networks and deep neural networks to classify age from three different sets of images of Gulf Menhaden scales. The first set of data consists of images of scales from fish at ages 0 and 1 year. The second set of data consists of images of scales from fish at ages 0–4 years. The last set of data consists of images of scales from fish of ages 0, 1, and 2 years and includes only images of scales for which there is agreement by readers of age estimates derived from analyzing sagittal otoliths and scales from the same individual.

Result

The classification of ages was best when using a convolutional neural network model on the first data set. The poorest classification was for the model using deep neural networks with the second data set.

Conclusion

Although we show that computer vision has promise for age determination from fish scale samples, our results indicate that considerable work must be done for wide adoption of the approach. With the continuous enhancements of computer vision models, improvements in the quality of scale images, and the accumulation of larger sets of scale images that can be used to train machine learning models, we believe that using computer vision can serve to reduce processing time and increase the accuracy of age estimates.

INTRODUCTION

Determining the ages of fish is fundamental for stock assessment and management. This information is necessary for understanding the effects of fishing on harvested populations and is a necessary component when employing age-structured models for describing and predicting the dynamics of the stock and the fishery (Quinn and Deriso 1999). Information about temporal patterns in a stock's age composition can help elucidate patterns of recruitment (Brosset et al. 2020) and stock productivity (Brennan et al. 2019). Because of the necessity and utility of age information for stock and fishery assessment, a significant amount of effort is expended by resource management agencies for its collection.

Age determination is performed by readers who examine structures that form rings (termed “annuli”) as the organism grows (Campana 2001; Vitale et al. 2005). The choice of structure is contingent on the organism's life history, the structure's ability to act as an independent chronometer, ease of processing, and the precision of the resulting age estimate. The primary structure that is used to determine an individual fish's age is the otolith, but scales and other “hard” parts are often used (e.g., fin rays, spines; Zymonas and McMahon 2009). Otoliths are the traditional structure of choice for age determination, in part because of their utility for determining the age of long-lived fishes (Secor et al. 1995). In some cases, however, the use of scales is advantageous because it requires reduced processing effort (Boxrucker 2011).

Resource management agencies collect and process fish by sampling the stock and the fishery (van der Meeren and Moksness 2003; Fisher and Hunter 2018). From 1964 to 2015, age determination was conducted for over 400,000 Gulf Menhaden Brevoortia patronus that were collected and processed from the purse-seine fishery (SouthEast Data Assessment and Review 2013, 2018). These efforts are time consuming and expensive (Moen et al. 2023). In this work, we investigate the effectiveness of computer-vision approaches for classifying images of known-age Gulf Menhaden. We seek to understand whether automated approaches allow some reduction in the age-determination effort and to understand the precision of the estimates. The use of computer vision is widespread in medicine, marketing, agriculture, and many other fields (Davies 2017). Recent work has shown that it can be successful in other aspects of fishery science, including monitoring bycatch by automated analysis of trawl video data (French et al. 2020), establishing the provenance of hatchery-reared fish (Kemp and Doherty 2022), and classifying age from otoliths (Moen et al. 2018).

Convolutional neural networks (CNN) are deep learning models that are commonly used for image classification (Szeliski 2022). Convolutional neural networks apply convolution layers to extract hierarchical features that assist in classifying the whole image. Model parameters, referred to as node weights, are estimated by making predictions based on the input image in a forward network propagation. Statistical learning is accomplished by minimizing the error between the model prediction and the observed data. Minimization, in turn, is done by calculating a gradient of a loss function, and this is used to update the model parameters (LeCun et al. 2015; Davies 2017; Szeliski 2022). Recently, computer vision has been used in fisheries in the context of providing automation for ageing (Fisher and Hunter 2018; Politikos et al. 2022). Support-vector machines and neural networks have been used in assessing the performance of statistical classifiers of images of Plaice Pleuronectes platessa otoliths with an accuracy of 0.88 (Fablet and le Josse 2005). Additionally, images of Greenland Halibut Reinhardtius hippoglossoides, Australasian Snapper (also known as Silver Seabream) Pagrus auratus, and Hoki (also known as Blue Grenadier) Macruronus novaezelandiae otoliths have been classified using a CNN; however, the classification accuracies were less than 50% (Moen et al. 2018). These results indicate that CNN have promise for age determination, but much work must be done before the approach has widespread use for determining fish ages. The objective of this work is to investigate the utility of computer vision for classifying age from images of Gulf Menhaden scales.

METHODS

Gulf Menhaden scales were obtained from fish that were collected by the state agencies of Texas, Louisiana, Mississippi, and Alabama, USA, from 2016 to 2018 (n = 1500). We recorded fork length (FL, mm) and removed scales from each sample fish for analysis. A set of 10 scales was collected from each fish from immediately above and anterior to the pectoral fin. The extracted scales were then mounted on glass slides. Mounting involved sandwiching the scales between two glass microscope slides. For each set of scales, the most readable (undamaged and easiest to read) was used for age determination and a digital image was taken of this scale. Images were obtained by magnifying the most readable scale on a Zeiss Stemi 2000-C Stereo Microscope with a Canon EOS Rebel T7i digital camera using incident light. Digital image handling was performed using MicroManager 2.0. Digital images were stored as JPEG images with 5184 × 3456 resolution. The images ranged in size from ~5 to ~8.4 MB. Of the total sample of fish, n = 1130 provided readable scales. The scale images were normalized to keep size consistency. Normalization was done by the addition of extra pixels of value 0, mimicking an empty, black background. For a subset of fish (n = 100) the right sagittal otolith was extracted for age determination. The otoliths were mounted onto a microscope slide using Cytoseal mounting medium. Age for both scales and otoliths was determined for the sampled fish by two trained readers. Both structures were read “blind” such that no auxiliary information about the individual (e.g., location and date of collection and fork length) was used to guide age estimation.
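The size normalization described above can be sketched as follows. This is a minimal illustration, assuming a grayscale image stored as a list of rows; the function name `pad_to_size` is hypothetical, and centering the original pixels on the black canvas is an assumption, because the text states only that zero-valued pixels were added.

```python
def pad_to_size(image, target_height, target_width):
    """Pad a 2-D grayscale image (list of equal-length rows) with zeros.

    Centering the original pixels is an assumption; the source text only
    says that pixels of value 0 were added to mimic a black background.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if height > target_height or width > target_width:
        raise ValueError("image is larger than the target size")

    top = (target_height - height) // 2
    left = (target_width - width) // 2

    # Start from an all-black canvas, then copy the image into place.
    canvas = [[0] * target_width for _ in range(target_height)]
    for r, row in enumerate(image):
        canvas[top + r][left:left + width] = row
    return canvas


small = [[5, 6], [7, 8]]          # toy 2 x 2 "image"
padded = pad_to_size(small, 4, 4)  # padded to 4 x 4
for row in padded:
    print(row)
```

Padding rather than rescaling leaves the original pixel values untouched, so the spacing of features on the scale is not distorted.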

We partitioned the scale images into three different sets for evaluation. Each set of data consisted of the image of the scale and the estimated age (year) derived from scales, otoliths, or both. The first set of data was composed of scale images and ages of individuals ages 0 and 1 year (we term this set “S01”; n = 500). The second set of data was composed of scale images and ages of individuals ages 0 to 4 years (we term these data “S04”; n = 530). The third set of data was composed of scale images and ages of individuals ages 0 to 2 years (we term this set “SOT”; n = 97). This set of data included only records in which the otolith- and scale-derived age estimates were the same. Of the n = 100 fish with otolith- and scale-derived age estimates, n = 97 yielded identical estimates of age.

One deep neural network (DNN) model and one CNN model were used to classify fish age from the images of scales from each of the three sets of data. During the training phase, the DNN and CNN models recognize (learn) image features that are influential in classifying age. Learning is done from repetitive actions (training) on the collection of image samples. Model parameterization for training in this study was done by assigning 10% of the images to the validation phase and 90% of the images to the training phase. The choice of image for the validation and training phase was random.
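A random 90%/10% partition of this kind could be sketched as below. The file names, the function name `split_train_validation`, and the fixed seed are hypothetical and are used only to make the illustration reproducible; they are not taken from the study.

```python
import random


def split_train_validation(items, validation_fraction=0.1, seed=0):
    """Randomly assign items to training and validation subsets."""
    rng = random.Random(seed)   # fixed seed is an assumption, for repeatability
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_validation = round(len(shuffled) * validation_fraction)
    # The first n_validation shuffled items are held out for validation.
    return shuffled[n_validation:], shuffled[:n_validation]


# Hypothetical image identifiers for a set of 500 scale images.
image_ids = [f"scale_{i:04d}.jpg" for i in range(500)]
train, validation = split_train_validation(image_ids)
print(len(train), len(validation))
```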

The model architecture was chosen to balance model complexity and reliability in data representation and outcome stability. The number of layers in the architecture was chosen because it has been shown that data representation by a neural network is most invariant to input transformations at layers that are farthest from the data input (Montavon et al. 2018). Therefore, maximizing the number of layers would stabilize representation and allow for a more consistent outcome. However, maximizing the number of layers also implies more complex models, which take longer to train.

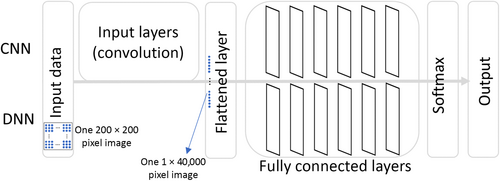

The DNN model architecture consisted of a flattened input layer, six fully connected layers of 30 × 30 nodes, and the softmax layer. The CNN model comprised three components (an input layer, a fully connected layer, and an output set of layers). The fully connected and output layers were those that were used in the DNN model. The CNN input layer consisted of a sequence of three convolution layers (3 × 3 kernel) and max-pooling layers, followed by another convolution layer and ending with a flattened layer. The flattened CNN layer served as the input to the fully connected layer (Figure 1). To keep the comparisons consistent, a ReLU activation function, the Adam optimizer, the He-Normal initializer, a batch size of 32, and the learning rate of 0.001 were used throughout model training (Kapoor et al. 2022; Adams 2024). Epochs (cycles where all training data are used exactly once) were used to enable the comparison of the progress during training (in terms of time) of each model's training and validation accuracy. Each model was initially run once for 100 epochs to ensure that the models ran to stabilization.
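To make the CNN input stage more concrete, the sketch below traces how the spatial size of an image changes through three convolution and max-pooling blocks, one further convolution, and the flatten step. The input size (128 × 128), the filter counts, unpadded convolutions with stride 1, and 2 × 2 pooling windows are all assumptions chosen for illustration; the text specifies only the 3 × 3 kernel and the order of the layers.

```python
def conv_output(size, kernel=3):
    """Spatial size after an unpadded convolution with stride 1 (assumed)."""
    return size - kernel + 1


def pool_output(size, window=2):
    """Spatial size after non-overlapping max pooling (2 x 2 assumed)."""
    return size // window


def trace_cnn_shapes(input_size, filters):
    """Follow a square input through three conv + pool blocks, a fourth
    convolution, and a flatten step; `filters` holds four filter counts."""
    size = input_size
    shapes = []
    for n_filters in filters[:3]:
        size = conv_output(size)
        shapes.append(("conv", size, size, n_filters))
        size = pool_output(size)
        shapes.append(("pool", size, size, n_filters))
    size = conv_output(size)
    shapes.append(("conv", size, size, filters[3]))
    # Flattening turns the final feature maps into one long vector.
    shapes.append(("flatten", size * size * filters[3]))
    return shapes


# Hypothetical input size and filter counts, for illustration only.
shapes = trace_cnn_shapes(128, (16, 32, 64, 64))
for shape in shapes:
    print(shape)
```

The length of the flattened vector is what the first fully connected layer receives, which is why the input image size has a direct effect on the number of trainable weights.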

The predictive ability of each model was evaluated using a suite of performance metrics including classification accuracy, recall, precision, and F1 scores (Natekin and Knoll 2013). We evaluated accuracy using a confusion matrix and provided information to understand how the frequency of the predicted ages compares to the frequency of the observed ages. The recall is the ratio of the frequency of the true positive to the sum of the true positive's frequency and the false negative's frequency. Recall indicates the proportion of the actual positives that the model correctly identified. Similarly, precision is the ratio of the frequency of the true positive to the sum of the true positive's frequency and the false positive's frequency. The precision measurement's value indicates the model's correctness level for those predicted to be positive. The F1 value is a function combining precision and recall, a balance between the precision and recall estimates, correcting for the uneven distribution of observed classes.
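For reference, the metrics described above can be written in terms of the frequencies of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). The expression for F1 as the harmonic mean of precision and recall is the standard definition and is assumed here, because the text describes it only as a function combining the two.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\end{align}
```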

Multiple independent runs in replicates of n = 60 for both the DNN and CNN models, each on the three sets of data, were evaluated for prediction consistency. After each epoch, the current model training and validation accuracy were extracted for analysis. Of the 60 replicates, 30 were randomly assigned for analyzing mean training accuracy and 30 for validation accuracy. Prediction consistency was tested with a two-factor analysis of variance (ANOVA) using the training and validation accuracies as response variables. The factors for the ANOVA were the model type (levels DNN or CNN) and the set of data (levels S01, S04, and SOT).

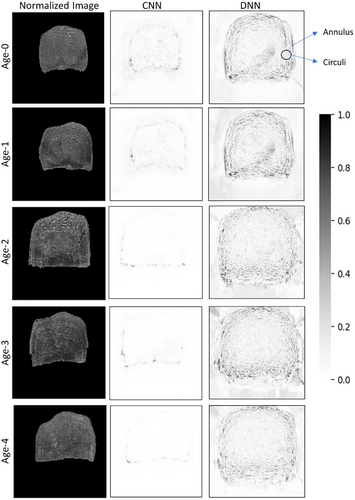

Saliency maps were evaluated to understand the representation of image features from a fully trained model. The saliency maps were used for information on what image features were most important for classifying the scale images, ensuring that expected features, such as scale annuli, are responsible for determining the model outcomes. Saliency maps provide a visual representation of the spatial support for a class in an image by determining the gradient of the model output with respect to the input image, producing visual features on the input image that represent different levels of influence on the predicted image class. More specifically, saliency maps use gradient-based methods (Amorim et al. 2023; Gomez and Mouchère 2023; Tjoa et al. 2023), computing the gradient of the output score with respect to the pixels of the input image. The gradient is shown by highlighting regions that are most influential for the decision-making process for a given age-class. Saliency maps were generated for qualitative evaluation, specifically to understand whether the prediction of age, using the image, was influenced by the patterns of circuli on the scale. The saliency maps were generated using the fully trained CNN and DNN models.
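The idea of a gradient-based saliency map can be illustrated with a toy example. The scoring function below is a hypothetical stand-in for a trained network (a sigmoid of a weighted pixel sum), and the gradient is approximated by central differences; deep learning frameworks would normally obtain the same gradient by backpropagation. None of the names or values are taken from the study.

```python
import math


def class_score(image, weights):
    """Hypothetical stand-in for a trained model: returns a score in (0, 1)
    for one age-class from a weighted sum of pixel values."""
    total = sum(w * x
                for w_row, x_row in zip(weights, image)
                for w, x in zip(w_row, x_row))
    return 1.0 / (1.0 + math.exp(-total))


def saliency_map(image, weights, eps=1e-4):
    """Approximate |d score / d pixel| for every pixel by central differences."""
    saliency = []
    for r, row in enumerate(image):
        out_row = []
        for c, value in enumerate(row):
            plus = [list(x) for x in image]
            minus = [list(x) for x in image]
            plus[r][c] = value + eps
            minus[r][c] = value - eps
            grad = (class_score(plus, weights)
                    - class_score(minus, weights)) / (2 * eps)
            out_row.append(abs(grad))
        saliency.append(out_row)
    return saliency


image = [[0.2, 0.8], [0.5, 0.1]]       # toy 2 x 2 "image"
weights = [[0.0, 2.0], [-1.0, 0.0]]    # toy model parameters
smap = saliency_map(image, weights)
for row in smap:
    print([round(v, 4) for v in row])
```

Pixels that the toy model ignores (weight 0) receive a saliency of zero, and the pixel with the largest absolute weight receives the largest saliency, which is the behavior that the maps are meant to reveal for annuli and other scale features.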

RESULTS

The confusion matrices indicated a lower frequency of misclassification for age-0 and age-1 individuals for each set of scale images for each model than for age-2+ individuals (Table 1). The CNN model also outperformed the DNN model for the SOT data set (9 of 11 vs. 9 of 16 correctly classified for age 2). Both the CNN and DNN models performed poorly for classification of age-2+ fish samples. When classifying the age-3 samples, both models tended to overestimate the ages (39 for age-4 fish vs. 44 for age-3 fish and 38 for age-4 fish vs. 51 for age-3 fish). Misclassification frequencies for age-4 fish were not as high. For the CNN model, 64 age-4 fish were identified correctly out of 94. The DNN had a greater frequency of misclassification, correctly classifying 48 of 96 images of age-4 fish. Classification accuracy, precision, recall, and F1 score also varied depending on the data that were used and the modeling approach (Table 2). The best performance was observed for the CNN model analysis of the S01 data. The DNN model had poorer performance, relative to the CNN model, for each set of data that was analyzed. The S04 data set exhibited the poorest performance, with the lowest accuracy, precision, recall, and F1 score.

| Data | Model | Predicted age (year) | Actual age 0 | Actual age 1 | Actual age 2 | Actual age 3 | Actual age 4 |
|---|---|---|---|---|---|---|---|
| S01 | CNN | 0 | 92 | 7 | | | |
| | | 1 | 10 | 104 | | | |
| | DNN | 0 | 86 | 18 | | | |
| | | 1 | 28 | 81 | | | |
| SOT | CNN | 0 | 8 | 1 | 0 | | |
| | | 1 | 1 | 11 | 2 | | |
| | | 2 | 0 | 8 | 9 | | |
| | DNN | 0 | 4 | 1 | 0 | | |
| | | 1 | 1 | 13 | 7 | | |
| | | 2 | 0 | 5 | 9 | | |
| S04 | CNN | 0 | 95 | 11 | 1 | 5 | 3 |
| | | 1 | 7 | 58 | 17 | 1 | 2 |
| | | 2 | 0 | 25 | 45 | 17 | 3 |
| | | 3 | 7 | 4 | 19 | 44 | 22 |
| | | 4 | 9 | 0 | 3 | 39 | 64 |
| | DNN | 0 | 80 | 3 | 2 | 6 | 1 |
| | | 1 | 27 | 42 | 29 | 7 | 2 |
| | | 2 | 2 | 20 | 55 | 14 | 14 |
| | | 3 | 5 | 3 | 13 | 51 | 31 |
| | | 4 | 4 | 0 | 4 | 38 | 48 |
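The summary statistics quoted in the text can be recovered from a confusion matrix with a few lines of arithmetic. The sketch below uses the S04 CNN counts from Table 1, with rows as predicted ages and columns as actual ages; the function names are hypothetical.

```python
# S04 CNN confusion matrix from Table 1.
# Rows are predicted ages 0-4; columns are actual ages 0-4.
s04_cnn = [
    [95, 11, 1, 5, 3],
    [7, 58, 17, 1, 2],
    [0, 25, 45, 17, 3],
    [7, 4, 19, 44, 22],
    [9, 0, 3, 39, 64],
]


def overall_accuracy(matrix):
    """Share of all images that lie on the diagonal (predicted == actual)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def fraction_correct_by_actual_age(matrix):
    """For each actual age (column), the share classified correctly."""
    n = len(matrix)
    fractions = []
    for age in range(n):
        column_total = sum(matrix[pred][age] for pred in range(n))
        fractions.append(matrix[age][age] / column_total)
    return fractions


accuracy = overall_accuracy(s04_cnn)
by_age = fraction_correct_by_actual_age(s04_cnn)
print(round(accuracy, 3))
print([round(f, 3) for f in by_age])
```

The column total for age 4 is 94, of which 64 lie on the diagonal, matching the 64 of 94 correctly classified age-4 fish reported for the CNN model.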

| Data | Model | Accuracy | Precision | Recall | F1 score |
|---|---|---|---|---|---|
| S01 | CNN | 0.920 | 0.937 | 0.912 | 0.924 |
| | DNN | 0.784 | 0.818 | 0.743 | 0.779 |
| SOT | CNN | 0.700 | 0.740 | 0.700 | 0.700 |
| | DNN | 0.650 | 0.656 | 0.650 | 0.651 |
| S04 | CNN | 0.611 | 0.616 | 0.611 | 0.611 |
| | DNN | 0.551 | 0.553 | 0.551 | 0.544 |

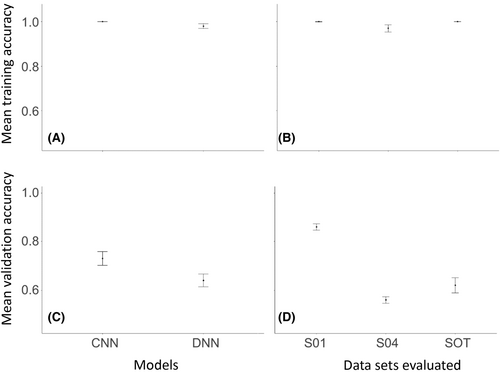

The greatest mean training and validation accuracies were for the CNN model run on the age-0 and age-1 Gulf Menhaden scale data (S01). Overall, training accuracy was consistently greater than validation accuracy (Table 3). The difference between the training and validation accuracies was smallest for the S01 data set. The greatest difference between training and validation accuracies was observed for the S04 data, especially when evaluated with the DNN model. The estimates of standard deviations were greatest for the SOT data; this data set had the fewest images. All the model runs for prediction consistency on the three data sets reached mean training accuracies after stabilization that were greater than 0.95 (Figure 2), although mean validation accuracies were lower. The DNN models tended to have more variable accuracies before model stabilization. The CNN models, on the other hand, were more stable after reaching high training accuracies (Figure 2).

| Data | Model | Mean of training accuracy | SD of training accuracy | Mean of validation accuracy | SD of validation accuracy |
|---|---|---|---|---|---|
| S01 | CNN | 1.00 | <0.001 | 0.93 | 0.028 |
| | DNN | 1.00 | 0.004 | 0.81 | 0.033 |
| SOT | CNN | 0.99 | 0.057 | 0.64 | 0.086 |
| | DNN | 1.00 | <0.001 | 0.61 | 0.115 |
| S04 | CNN | 1.00 | <0.001 | 0.61 | 0.036 |
| | DNN | 0.96 | 0.031 | 0.53 | 0.028 |

The greatest correspondence of training and validation accuracy was for the CNN model evaluation of the S01 data, especially for epoch numbers greater than 25 (Figure 2A). The second greatest correspondence of training and validation accuracy was found using the DNN model on the S01 data set, for epoch numbers greater than 30 (Figure 2B). In contrast, the DNN model that was run on the full data set (S04) was the most variable, reaching mean accuracies for training of 0.93 and mean validation accuracy of only 0.51 (Table 3; Figure 2F). For both the CNN and DNN models, the validation accuracy was lower than the training accuracy for the SOT and S04 data (Figure 2C–F).

The ANOVA of the mean training and validation accuracies indicated that there were significant differences in both metrics among models and sets of data (Table 4A, B). Both model type and data set were significant (p < 0.01) predictors. The results of the ANOVA of mean training accuracy (examination of the magnitude of F-values) indicated that the data set was of similar importance to the model type for predicting mean values (Table 4A). Conversely, the results of the ANOVA of mean validation accuracy indicated that the data set was of greater importance than the model type for predicting mean values (Table 4B), although both factors were statistically significant. The mean training accuracy of the CNN model, for all data, was greater and exhibited less variability (95% CI) than that of the DNN model (Figure 3A). The mean training accuracy of the S04 data exhibited the greatest variability (Figure 3B). The mean validation accuracy was greatest for the CNN model (Figure 3C); the CNN and DNN models had similar variability of this estimate. The S01 data exhibited the lowest variability for the mean validation accuracy (Figure 3D).

| Factors | df | Sum of squares | Mean square | F-value | p-value |
|---|---|---|---|---|---|
| (A) | | | | | |
| Model | 1 | 0.016 | 0.016 | 9.707 | <0.01 |
| Data | 2 | 0.030 | 0.015 | 9.095 | <0.01 |
| Residuals | 176 | 0.293 | 0.002 | | |
| (B) | | | | | |
| Model | 1 | 0.334 | 0.334 | 42.530 | <0.01 |
| Data | 2 | 3.045 | 1.522 | 194.020 | <0.01 |
| Residuals | 176 | 1.381 | 0.008 | | |
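The arithmetic behind the F-values in Table 4 can be sketched as follows. The sketch assumes a main-effects (no interaction) two-factor ANOVA with 30 replicate accuracies for each of the six model-by-data combinations, which is consistent with the residual degrees of freedom of 176 but is an inference rather than a stated design. Results computed from the rounded sums of squares in the table differ slightly from the tabulated F-values, presumably because of that rounding.

```python
def f_values(sum_squares, df, residual_ss, residual_df):
    """F = (factor mean square) / (residual mean square) for each factor."""
    residual_ms = residual_ss / residual_df
    return {name: (sum_squares[name] / df[name]) / residual_ms
            for name in sum_squares}


# Assumed design: 2 models x 3 data sets x 30 replicates per combination.
n_observations = 2 * 3 * 30
df = {"Model": 2 - 1, "Data": 3 - 1}
residual_df = n_observations - 1 - sum(df.values())

# Sums of squares for mean validation accuracy (Table 4B).
validation_ss = {"Model": 0.334, "Data": 3.045}
f_validation = f_values(validation_ss, df, residual_ss=1.381,
                        residual_df=residual_df)

print(residual_df)
print({name: round(value, 2) for name, value in f_validation.items()})
```

The much larger F-value for the data factor than for the model factor is what supports the statement that the data set mattered more than the model type for validation accuracy.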

The saliency maps were generated from randomly chosen, but representative, scale images of single age-0 to age-4 scales (Figure 4). There was a difference in the feature representation of the CNN and DNN models. Each model used features from the scale, as opposed to other areas of the image, such as the background. Specifically, the CNN model made classification decisions based on fewer image features than did the DNN. Consistently, the CNN models showed near-zero values in the interior regions of the scale images for all age-classes except age-0 and age-4 fish. Both the CNN and DNN models used information from the periphery of the scale. The DNN model also used information from central areas. The saliency map of age-2+ samples exhibited no obvious or clearly defined patterns, such as features that were coincident with circuli or broad patterns of annual bands, for either the CNN or DNN models. The saliency map from the CNN model for age-0 samples used some information from the center portion of the scale, unlike the saliency map for the CNN model for the age-1 scales.

DISCUSSION

The primary finding of this work is that the most accurate classification was provided by the CNN model that evaluated age-0 to age-1 scale images. These images of younger fish scales had patterning of annuli that were generally better defined and had greater contrast. The saliency maps, to some extent, indicated that there was better contrast of annuli on the scale images of the age-0 to age-1 fish. Conversely, the set of data with older fish had poor performance metrics for both the CNN and DNN models. The resulting saliency maps detected less obvious features on the scales from older fish.

The accuracy of age classification for each set of scale images was greater for the CNN models, likely due to the additional complexity provided by the convolutional layers (Szeliski 2022). In this work, the difference between the CNN and the DNN models was the existence of the convolutional layers (for CNN). The convolutional layers in CNN models serve to detect spatial hierarchies from image features (Yamashita et al. 2018). Simple features are extracted first, followed by increasingly complex image features. Therefore, the convolutional layers create several feature-activation maps before the flattened layer is reached. The convolution layers of the CNN model, because they were the only distinctive feature relative to those of the DNN model, were responsible for the improved classification of the scale images that were used in this study. A disadvantage of using CNN models is training time. In general, training times for CNN models are longer, which makes them less attractive when the input images have clear features that already lead to accurate classification. When such images are used for input, DNN models may be as effective as CNN models, without the burden of extra training time that is required by the more-complex CNN model.

Traditionally, the most-popular models that are used in computer vision, especially for static images, are DNN and CNN (Szeliski 2022). One of the downsides of these approaches is the requirement of large amounts of labeled data that are needed to train the model. When collecting large amounts of labeled data (images of the structure and estimated age) is prohibitive, different models may be appropriate, such as transformers. Transformers, models that learn relationships between elements in a sequence, are surpassing the performance of even CNN models in computer vision (Gidaris et al. 2018; Khan et al. 2022; Zamir et al. 2022; Zhai et al. 2022). Transformers have been widely used in text classification, translation, natural language processing, and question–answer tasks (Vaswani et al. 2017). Their use with still images, however, is incipient but promising (Li et al. 2023). Because transformers assume little prior knowledge about the structure of a problem (LeCun et al. 2015), unlike CNN models, they can be pretrained on unlabeled data (Vaswani et al. 2017; Wang et al. 2020), saving time and effort from manual (human) reading. Pretraining tasks encode generalizable image features that can be fine-tuned in a supervised manner on downstream training tasks. Transformers have not yet been used for determining the ages of fishes, but we believe this approach has promise.

The estimates of model performance for the four metrics that we evaluated were similar. This may not always be the case, especially when the number of scales within an age-class differs. In this study, classification balance was forced by selecting an equal number of scale images for each age-class. This approach, however, is not always feasible. Random sampling of the length composition of fish will result in a skewed distribution for age composition. When there is an unbalanced set of images, classification metrics, such as precision and recall, may become the primary metrics for understanding the adequacy of models. For example, if model accuracy is high but recall is low, a high rate of false negatives may be suspected and corrective action, such as over- or undersampling, may be necessary. Alternatively, if the cost of errors in age classification is high, multiple runs may be performed, each time with a random selection of images, yielding multiple models with different parameterizations. In turn, such models may be used to classify images during production by using the average from the estimates of each model for a final age determination.
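One way to combine several independently trained models is sketched below. It assumes that each model returns a vector of class probabilities (for example, softmax outputs) and that the final age is the class with the greatest mean probability; averaging probabilities rather than predicted ages is an assumption, and the function name and numbers are hypothetical.

```python
def ensemble_age(probabilities_by_model):
    """Average class probabilities across models and return the age-class
    with the greatest mean probability, together with the mean vector."""
    n_models = len(probabilities_by_model)
    n_classes = len(probabilities_by_model[0])
    mean_probs = [
        sum(model[k] for model in probabilities_by_model) / n_models
        for k in range(n_classes)
    ]
    predicted_age = max(range(n_classes), key=lambda k: mean_probs[k])
    return predicted_age, mean_probs


# Hypothetical outputs of three models for one scale image (ages 0, 1, 2).
runs = [
    [0.10, 0.60, 0.30],
    [0.05, 0.40, 0.55],
    [0.15, 0.55, 0.30],
]
age, mean_probs = ensemble_age(runs)
print(age, [round(p, 3) for p in mean_probs])
```

In this toy case the second model alone would have assigned age 2, but the averaged probabilities favor age 1, which illustrates how an ensemble can dampen the influence of a single run.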

In this work we show that the mean training accuracies were adequate for both models and for each set of data. However, mean validation accuracies were only acceptable for the S01 data set. The lowest mean validation accuracy was for the S04 data set that was evaluated with a DNN model, indicating overfitting. “Overfitting” means that there is a lack of model generalization and poor performance of classification for images that the model has not seen before. Remedies for overfitting include larger data sets or data with less within-class variability (Jiang 2022). The S01 data set comprised scale images of better annuli definition, making them more suitable for generating models with higher performance.

Another potential method for reducing overfitting is data augmentation (Shorten and Khoshgoftaar 2019). This technique is used to reduce overfitting of machine learning models by randomly increasing the diversity of the training data. Data augmentation, however, must be used with caution, as the model may learn to recognize specific augmented patterns rather than generalize well to unseen data. Additionally, augmentation techniques may introduce unrealistic variations that distort the original data, resulting in a decrease in model accuracy. Data augmentation requires careful planning on what to augment, ensuring that it enhances model robustness without introducing unintended biases from choosing features that resemble annuli, defeating the purpose of augmentation randomness. As computer vision using fish scales is not yet commonplace, data augmentation at this stage is discouraged.

One advantage of using computer-vision models for determining fish ages is the minimization of reader bias. Scale reader agreement can be poor, even when trained and experienced readers are employed (Wakefield et al. 2017). What remains to be determined is the agreement between computer-vision model predictions and human readers. This work does not attempt to answer that question, but certainly, if computer vision becomes widespread, the accuracy of human and computer vision must be assessed. It is likely that some collaboration of human and computer vision could be employed (Tschandl et al. 2020). Although computer-vision applications for determining fish age are incipient, our work points to the potential of computer vision becoming important as existing image libraries are expanded and new ones are created. With the increasing use of computer vision, models can become more performant. Model development may benefit from increased usage. With more models available, transfer of learning for the determination of fish age may become feasible, as more models in the proper domain may be able to catalyze the development of more-complex models to allow for accurate estimates.

The effectiveness of automating age determination by using fish scales is contingent on addressing a number of issues. We believe that a promising approach is to enhance image quality; scale images could be processed to have greater contrast of annuli. This could include processing scales using dye to produce detectable marks (Gelsleichter et al. 1997; Lü et al. 2020). Additionally, processing could include varying light levels to enhance the annuli of scales prior to analysis (Moen et al. 2023). A variety of techniques, such as image degraining, Gaussian grayscale denoising, or deblurring, can be automated and used early in the ageing workflow. If image-processing techniques are applied in a consistent way, this would further serve to eliminate reader bias. Both image quality and image preprocessing are directly related to model performance.

There is potential for widespread use of computer vision for determining fish age, but the promise of the method may only be realized when a workflow from data acquisition to model deployment and evaluation is created (Sys et al. 2022). There is a need for hosting and maintenance of large digital and publicly accessible image libraries. Campana (2001) recognized the value of such libraries for training and validation efforts. We aver that an additional benefit of Web-based, publicly available, high-resolution libraries of tagged images is that they can hasten the development of species-specific computer-vision models. A hindrance to the development and widespread use of computer-vision models for age determination is the limited availability of tagged images of scales and otoliths. In the absence of sufficient data for many species, transfer of learning from similar species may be a viable option, as has been done with Greenland Halibut (İşgüzar et al. 2024).

As we show in this work and as described in the computer literature, overfitting of models, even models with high training accuracy, is a concern. Out-of-distribution detection (Huang et al. 2020) and uncertainty-estimation techniques (Dusenberry et al. 2020) must be considered when fishery scientists seek to employ automated models for fish ageing. Thus, a necessary workflow for age automation will include the ability to identify model limitations. For example, we found a contrast between DNN and CNN with respect to classification accuracy. Another requirement for the success of automation of fish ageing is the inclusion of stakeholders who are involved in the data collection, assessment, and policymaking, with the consequent building of trust in the process. To this extent, transparency in model outcomes is essential. For example, the saliency maps that we show in this work can be used to identify specific instances where the model may be more likely to fail. If attention is paid to the above points, using computer vision with existing models to automate age estimation may not only save time and financial resources, but also allow a larger number of fish to be analyzed.

ACKNOWLEDGMENTS

The authors confirm contribution to the article as follows: study conception and design, Leaf and Riedel; data collection, Leaf; analysis, Riedel; interpretation of results, Leaf and Riedel; and draft manuscript preparation, Riedel and Leaf. Both authors reviewed the results and approved the final version of the manuscript. This work was made possible by funds conferred to the author by the Mississippi-Alabama Sea Grant Consortium (Project number: R/HCE-07). We thank Amanda Rezek, Jennifer Potts, and Amy Schueller at the National Oceanic and Atmospheric Administration's Southeast Fisheries Science Center for their help in moving this work to completion and especially for sharing their expertise and skill in age determination of Gulf Menhaden.

CONFLICT OF INTEREST STATEMENT

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this article.

ETHICS STATEMENT

This study was conducted within the ethical guidelines of the institution and country in which it was performed.

Open Research

DATA AVAILABILITY STATEMENT

Data are available upon request from the authors.