Family health history collected by virtual conversational agents: An empirical study to investigate the efficacy of this approach

Abstract

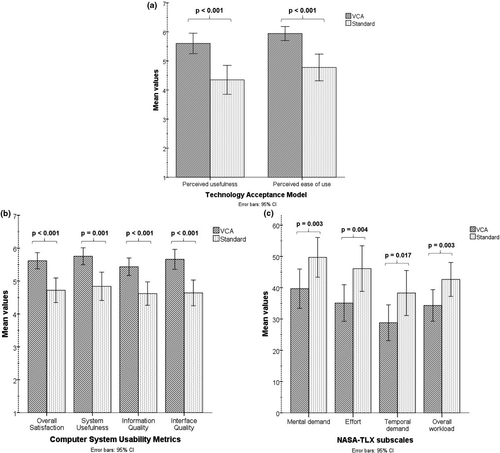

Family health history (FHx) is one of the simplest and most cost-effective and efficient ways to collect health information that could help diagnose and treat genetic diseases at an early stage. This study evaluated the efficacy of collecting such family health histories through a virtual conversational agent (VCA) interface, a new method for collecting this information. Standard and VCA interfaces for FHx collection were investigated with 50 participants, recruited via email and word of mouth, using a within-subject experimental design with the order of the interfaces randomized and counterbalanced. Interface workload, usability, preference, and satisfaction were assessed using the NASA Task Load Index workload instrument, the IBM Computer System Usability Questionnaire, and a brief questionnaire derived from the Technology Acceptance Model. The researchers also recorded the number of errors and the total task completion time. It was found that the completion times for 2 of the 5 tasks were shorter for the VCA interface than for the standard one, but the overall completion time was longer (17 min 44 s vs. 16 min 51 s, p = .019). We also found the overall workload to be significantly lower (34.32 vs. 42.64, p = .003) for the VCA interface, and usability metrics including overall satisfaction (5.62 vs. 4.72, p < .001), system usefulness (5.76 vs. 4.84, p = .001), information quality (5.43 vs. 4.62, p < .001), and interface quality (5.66 vs. 4.64, p < .001) to be significantly higher for this interface as well. Approximately 3 out of 4 participants preferred the VCA interface to the standard one. Although the overall time taken was slightly longer than with the standard interface, the VCA interface was rated significantly better across all other measures and was preferred by the participants.
These findings demonstrate the advantages of an innovative VCA interface for collecting FHx, validating the efficacy of using VCAs to collect complex patient-specific data in health care.

1 INTRODUCTION

A patient's family health history (FHx) is one of the most important factors that help clinicians diagnose and manage disease risks at an early, more treatable stage. Despite its value, an FHx is often underutilized in a clinical setting because medical professionals often lack the time and expertise to collect a detailed one (Ginsburg, Wu, & Orlando, 2019; Reid, Walter, Brisbane, & Emery, 2009; Wu et al., 2019). To address this limitation, various government, academic, and commercial groups have developed a wide variety of FHx tools that help patients organize their FHx on their own (Welch et al., 2018). Since these tools are relatively new approaches for FHx data collection, they are in early stages of development, with different tools using different approaches to collect such information (Welch et al., 2018). Although the core FHx information collected, such as patient information, is consistent across tools because it has been standardized through the American Health Information Community and Health Level 7, the number and types of diseases collected by the tools differ (Feero, Bigley, & Brinner, 2008; ‘HL7 Standards Product Brief - HL7 Version 3 Implementation Guide: Family History/Pedigree Interoperability, Release 1 | HL7 International’, n.d.). This could possibly be due to the different approaches the tools take to collect the FHx data. Many of these tools are web-based applications that use a variety of web forms, tables, and/or a series of screens with questions to collect data from the users, with some of them displaying a pedigree (Welch et al., 2018).

In addition, even though researchers have found that these computer-interfaced FHx tools are generally acceptable to patients (Ratwani, Fairbanks, Hettinger, & Benda, 2015), they have seen limited use outside of the research setting (Hulse, Taylor, Wood, & Haug, 2008; Welch, O’Connell, & Schiffman, 2015). Although 37% of the participants in a study conducted by Welch et al. (2015) indicated they had collected their FHx, only 3% of them had used a web-based FHx tool, emphasizing the minimal use of such technology by patients. Furthermore, there are concerns about the usability and appropriateness of these tools for underserved populations and those with literacy issues (Ponathil, Ozkan, Bertrand, Welch, & Madathil, 2018; Wang et al., 2015). To improve the use of FHx in health care, we need to explore new approaches for collecting this important health history information.

Recent research has begun exploring the use of artificial conversational entities (i.e., chatbots) and/or virtual assistants as an alternative approach for collecting the FHx (‘ItRunsInMyFamily’, n.d.; Wang, Gallo, Fleisher, & Miller, 2011). These conversation-based user interfaces are an emerging trend in consumer-facing technology (e.g., Apple's Siri and Amazon Alexa) and more recently in healthcare IT (‘Chatbots Will Serve As Health Assistants - The Medical Futurist’, 2017; Hoermann, McCabe, Milne, & Calvo, 2017). Among their most important advantages is their ease of use, a quality that can improve user engagement and data collection (Gutierrez, 2016; Holtgraves & Han, 2007). Specific to these tools, instead of users having to work through the multiple pages of web forms, tables, drop downs, radio buttons, and questions typical of the standard interface FHx tools (Welch et al., 2018), a dialog-based interface engages with end-users intuitively through natural conversational interaction about their FHx similar to how genetic counselors, the gold standard for FHx collection, obtain such information from patients (Corti & Gillespie, 2015; Shawar & Atwell, 2007).

Since the use of conversational entities is relatively new, research in this area is limited. Previously, researchers have developed chatbots such as ALICE for intelligent foreign language conversation and tutoring and as relational agents for establishing long-term relationships with minority older adults living in urban neighborhoods (Bickmore, Caruso, & Clough-Gorr, 2005; Fei & Petrina, 2013; Höhn, 2017). Simulation of this human-like communication leads to trust in these virtual agents and a desire to continue using them. As another example, Yaghoubzadeh, Kramer, Pitsch, and Kopp (2013) developed Billie, a conversational agent that functions as a daily calendar assistant for the elderly and for younger cognitively impaired people. Although the elderly participants were relatively less willing to use such a system, they recognized its usefulness in providing the required assistance. More specific to health care, a personalized chat-based healthcare assistant, Quro, was developed to facilitate triage and condition assessment based on user input (Ghosh, Bhatia, & Bhatia, 2018). Although no user feedback was collected in terms of its usability, this system predicted user conditions correctly with an average precision of 0.82. Using such technology has the potential to indirectly reduce the burden on overworked clinicians while at the same time reducing medical care costs and increasing patient convenience.

However, the use of conversational agents for health history data collection is a relatively novel concept. A recently developed FHx tool, VICKY, consists of an animated computer character that asks users a series of questions about their FHx. Results from a randomized trial found that VICKY identified a larger number of health conditions overall compared to the Surgeon General's My Family Health Portrait (Wang et al., 2015). Another tool uses a chatbot to collect FHx from users (Ponathil, Ozkan, Bertrand, Welch, & Madathil, 2019; Welch, Dere, & Schiffman, 2015; Welch, O’Connell, Qanungo, Halbert-Hughes, & Schiffman, 2015). While this chatbot does not use an animated computer character like VICKY, it engages the users in a conversational dialog within the user interface to collect and then display their FHx data. In a qualitative study, Schmidlen, Schwartz, DiLoreto, Lester Kirchner, and Sturm (2019) found most patients to be supportive of using chatbots to share genetic information with relatives and to interact with healthcare providers for care coordination. However, further investigation in a controlled setting is needed to compare and assess the usability, workload burden, patient preferences, and satisfaction of virtual conversational agents (VCA) versus the standard interface tools in FHx collection. Such research will advance the state of the science of FHx tools and build evidence for new innovative approaches for collecting data in health care. To address this need, this study evaluated user interfaces for collecting FHx.

2 METHODS

Specifically, this study evaluated and compared the conversational approach with the standard interface approach to FHx collection using a within-subject experimental design. The study was conducted at Clemson University's Human Systems Integration Laboratory from December 2017 to March 2018 and was approved by Clemson University's Institutional Review Board (IRB2017-288).

2.1 Study apparatus

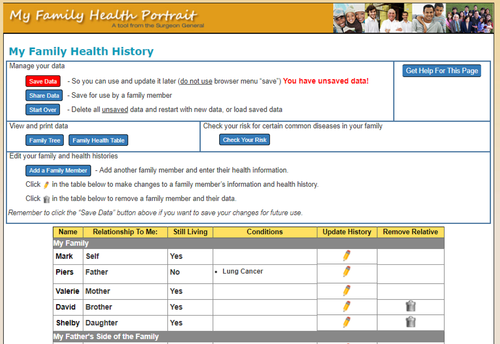

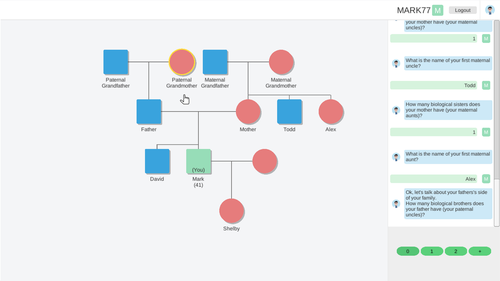

This study used two FHx tool interfaces: standard and VCA. The standard interface was the current version of the Surgeon General's My Family Health Portrait (‘My Family Health Portrait’, n.d.), which consists of a table of relatives and pop-ups to use for entering information about them as shown in Figure 1 (Feero et al., 2014; Peace, Bisanar, & Licht, 2012). This interface followed the most common method of incorporating disease information by adding the relatives first and then the disease associated with the person. The interface also generated a family pedigree but not on the default page. The VCA approach consisted of a conversational dialog in a chat form beside the family pedigree in the center of the screen as shown in Figure 2. The VCA interface, inspired by version 2 of the ItRunsInMyFamily application, was constructed using Microsoft C Sharp (C#) on the Unity engine (‘ItRunsInMyFamily’, n.d.). A team of clinicians, genetic counselors, and human factors professionals along with a biomedical informatics specialist and a computer scientist contributed to the development of the VCA. The team, with knowledge in the field of health care and user interface design, developed, tested, and iterated on the interface. The family pedigree was in the center of the screen showing the relations and disease information as shown in Figure 2. Appendices S2 and S3 showcase the stimuli used for the VCA approach and the standard interface. The study participants accessed the interfaces through the Mozilla Firefox browser using a desktop with a mouse, a keyboard, and a 17.5-inch monitor in a quiet, controlled laboratory-based setting. All sessions were screen recorded. These recordings were further analyzed to compute the task completion times.

2.2 Participants

An a priori power analysis based on the outcomes of perceived usefulness and ease of use (see below in measures for relevant scales) was conducted to calculate the sample size with a medium population effect size (d = 0.5) at a significance level of 0.05 and power of 0.9. This analysis suggested a sample size of 36 participants. However, we recruited 50 participants from the Clemson area via email, flyers, and word of mouth for this study to account for outliers and other possible issues. Participants had to be at least 18 years old and have basic computer skills. They were excluded if they used only tablets and not desktop or laptop computers. None of the participants had previous experience with electronic FHx tools. Twenty-two percent of the participants had 5–10 years of experience using computers while the remaining 78% had more than 10 years. The participants were compensated with a $20 gift card for completing the study.
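
As a rough illustration of the sample size calculation described above, the sketch below uses the normal approximation for a paired t test with a small-sample correction term. The approximation, the function name `paired_sample_size`, and the one- versus two-sided switch are assumptions made for illustration; the text does not state which software or sidedness was used.

```python
import math
from statistics import NormalDist

def paired_sample_size(d, alpha, power, two_sided=True):
    """Approximate n for a paired t test (normal approximation plus a z^2/2 correction)."""
    tail = alpha / 2 if two_sided else alpha
    z_alpha = NormalDist().inv_cdf(1 - tail)   # critical value for the chosen alpha
    z_beta = NormalDist().inv_cdf(power)       # quantile matching the target power
    n = ((z_alpha + z_beta) / d) ** 2 + z_alpha ** 2 / 2
    return math.ceil(n)

# Values reported in the text: d = 0.5, alpha = 0.05, power = 0.9.
n_one_sided = paired_sample_size(0.5, 0.05, 0.9, two_sided=False)
n_two_sided = paired_sample_size(0.5, 0.05, 0.9, two_sided=True)
print(n_one_sided, n_two_sided)
```

Under this approximation, the one-sided setting gives 36, in line with the reported figure, and the two-sided setting gives 44. In either case the 50 recruited participants exceed the estimate.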

2.3 Procedure

For this within-subject experimental design, the independent variable was the interface used. The order in which the interfaces were presented was randomized and counterbalanced. First, the researchers greeted the participants and briefed them on the study procedure. After signing the written consent to participate in the study, the participants completed a pre-test questionnaire and were then provided with a printed fictional FHx scenario, which included personal information, family history, and previous cancer history in the family. A fictional FHx scenario was provided to standardize the tasks across the participants. The detailed FHx scenario can be found in Appendix S5.
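
One way to randomize and counterbalance the presentation order is sketched below. The procedure, the function name `counterbalanced_orders`, and the seed are hypothetical; the text does not describe how the assignment was actually generated.

```python
import random

def counterbalanced_orders(n_participants, conditions=("standard", "VCA"), seed=None):
    """Return one presentation order per participant, using each order equally often."""
    if n_participants % 2 != 0:
        raise ValueError("an even number of participants is needed for full counterbalancing")
    first, second = conditions
    orders = [(first, second), (second, first)]
    # Repeat the two possible orders so each appears n/2 times, then shuffle the sequence.
    schedule = orders * (n_participants // 2)
    random.Random(seed).shuffle(schedule)
    return schedule

# 50 participants, as in this study; the seed only makes the sketch repeatable.
schedule = counterbalanced_orders(50, seed=1)
```

With 50 participants, each order is assigned to 25 people, so any learning effect from the first interface is spread evenly across both conditions.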

The researcher then asked the participants to complete the following tasks (see Appendices S2 and S3 for the respective stimuli on the screen for the VCA and standard interfaces): (a) create a user profile (Figures 5, 6, 14, and 15), (b) add the fictional FHx (Figures 7, 9–12, 16, and 17), (c) re-access the platform (Figures 8, 19, and 20), (d) edit the information (Figures 9–12, 17, and 18), and (e) share the information with a family member (Figures 13 and 21). Once the participants completed the tasks using the first interface, they were given a questionnaire about their satisfaction with it and were then asked to share their experience of working on the interface in a retrospective think-aloud session. The participants were allowed to access the interface in the retrospective think-aloud session while they explained their interaction with it and when they were asked qualitative questions based on their interactions.

Participants were encouraged to take a short break prior to completing the assigned tasks using the second interface following the same procedure. After experiencing both applications, participants were asked to indicate which interface they preferred. The researchers observed the challenges the participants faced while performing the tasks and recorded the number of errors made. See Appendix S4 for a flowchart of the procedure.

2.4 Measures

The pre-test questionnaire assessed demographic data as well as experience using the Internet and related applications. The number of seconds taken to complete the tasks for each tool was calculated based on the time between when the participant entered the interface to begin the first task until the participant shared the information with a family member at the end of the fifth task. Since the individual tasks were designed to follow each other, the end point of a task was considered as the starting point of the next one. Time to complete Task 1 (create a user profile) was calculated from the point the participant entered the interface until the submission of the final personal information. Task 2 (add fictional FHx) was calculated from the end point of Task 1 until the final information for the last family member was entered. Task 3 (re-access the platform) ended when the participant returned to the page with the family history from the login/home page. Task 4 (edit the information) completion time was calculated until the participant completed editing the previously entered information. Since Task 5 (share the information) was the final one, the time to complete it was calculated until the participants’ last click, that is, when the participant shared the information with a family member.
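
The timing rule above, in which the end point of one task is the starting point of the next, can be sketched as follows. The function name and the timestamps are hypothetical and are not study data.

```python
def task_durations(start, task_end_times):
    """Split a session into per-task durations; each task starts where the previous one ended."""
    durations = []
    previous = start
    for end in task_end_times:
        if end < previous:
            raise ValueError("task end times must be non-decreasing")
        durations.append(end - previous)
        previous = end
    return durations

# Hypothetical timestamps, in seconds from the start of a screen recording.
session_start = 12                   # participant enters the interface
ends = [95, 640, 700, 860, 905]      # end points of Tasks 1 to 5
per_task = task_durations(session_start, ends)
total = sum(per_task)
```

Because the tasks are contiguous, the per-task durations always sum to the total completion time, that is, the last end point minus the session start.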

The errors recorded were classified into three types:

- Incorrect response: Incorrectly answering the questions asked by the virtual assistant or incorrect data entry while using the standard interface to enter the family information provided. For example, indicating a family member to be alive when he/she is dead or choosing the wrong disease from the disease list.

- Improper attempt: Failing to complete the required set of steps or the data entry needed to complete the task. For example, moving to the next step without completing the data entry process of a disease or entirely skipping the entry of the disease information.

- Other errors: Errors that were not included in the previous two types. For example, editing data already correctly entered.

Some actions were not counted as errors, including saving the information multiple times, clicking on the help button, and making typographical errors. See Appendix S6 for an example of an error based on each interface.

Each experimental condition, that is, VCA and standard interfaces, was followed by a brief questionnaire on the perceived usefulness and ease of use of the application based on the Technology Acceptance Model (TAM) measured on a 7-point Likert scale from extremely unlikely to extremely likely (see Appendix S7), the NASA Task Load Index (NASA-TLX) measured on a scale from 0 to 100, and the IBM Computer System Usability Questionnaire (CSUQ), the items of which were measured on a 7-point Likert scale from strongly agree to strongly disagree (Davis, 1989; Hart & Staveland, 1988; Lewis, 1995). For cognitive tasks, a NASA-TLX workload score of 13.08 was considered to be low, 46 was average, and 64.90 was high (Grier, 2015). After using both interfaces and completing these questionnaires, the participants completed a final post-test survey questionnaire ranking the interfaces in terms of preference, with 1 being the more preferred and 2 the less (Madathil, Alapatt, & Greenstein, 2010; Wismer, Madathil, Koikkara, Juang, & Greenstein, 2012). The retrospective think-aloud data were collected via a semi-structured interview format with the interview guidelines developed based on pre-defined probes. One of the two researchers asked these questions while the other collected the data in the form of written notes.

2.5 Data analysis

Descriptive statistics were used to compare the time taken to complete the task; the number of errors made; and the results from the TAM scale, IBM-CSUQ scale, NASA-TLX test scores, and the final preference questionnaire. Paired t tests were conducted at a 95% confidence level to determine the significant differences between the VCA and the standard interfaces. A Wilcoxon signed-rank test was conducted at a 95% confidence level to determine the median differences between the participants’ preference of the interfaces. IBM SPSS Statistics 24 was used to conduct the analysis.
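
For readers less familiar with the paired t test, the sketch below computes the test statistic and a confidence interval for the mean difference between two sets of paired scores. It uses only the Python standard library and takes the critical value as an input; the function name and the scores are hypothetical and are not study data (the study itself used IBM SPSS Statistics 24).

```python
import math
from statistics import mean, stdev

def paired_t(x, y, t_crit):
    """Paired t statistic, degrees of freedom, and confidence interval for the mean of x - y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    d_bar = mean(diffs)
    se = stdev(diffs) / math.sqrt(n)   # standard error of the mean difference
    t = d_bar / se
    ci = (d_bar - t_crit * se, d_bar + t_crit * se)
    return t, n - 1, ci

# Hypothetical workload scores for five participants (not study data).
vca = [30, 42, 25, 38, 35]
standard = [41, 45, 37, 40, 52]
# 2.776 is the two-sided 95% critical value of t with 4 degrees of freedom.
t, df, ci = paired_t(vca, standard, t_crit=2.776)
```

With 50 participants the degrees of freedom are 49, which matches the t(49) statistics reported in the results.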

3 RESULTS

A total of 50 adults participated in this study, 25 males and 25 females. The participants tended to be more highly educated and have more experience using computers than the typical US population (Cohn et al., 2010): 8% of them had high school degrees, 6% had some college, 4% had two-year college degrees, 36% had four-year college degrees, 36% had master's degrees, and the remaining 10% had doctoral degrees. The time required to complete the entire study was approximately 75 min.

3.1 Completion time

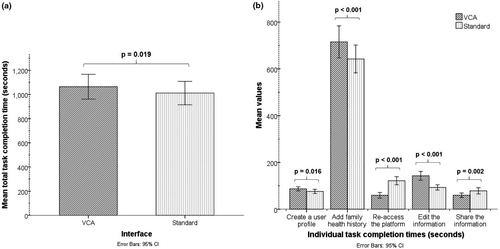

Participants took 53 s longer (95% CI [8.87, 96.29], p = .019) using the VCA interface than the standard interface to complete the entire assigned task, with 66% (33 of 50) of the participants being slower on the VCA interface. With respect to individual task completion time, participants completed Tasks 1, 2, and 4, that is, creating a user profile, adding the fictional FHx, and editing the information, more slowly using the VCA interface than the standard interface. More specifically, participants took 11 s longer (95% CI [3.33, 18.47], p = .006) using the VCA interface to create a user profile, with 72% (36 of 50) of the participants completing it more slowly on this interface. Participants using the VCA interface took 72 s longer (95% CI [35.90, 109.85], p < .001) to add the FHx, with 78% (39 of 50) of the participants completing it more slowly on this interface. Participants using the VCA interface took 50 s longer (95% CI [36.27, 62.98], p < .001) to edit the information, with 94% (47 of 50) of the participants completing it more slowly on this interface.

Participants completed Tasks 3 and 5, that is, re-accessing the platform and sharing the information, faster using the VCA interface than the standard interface. Participants using the VCA interface took 62 s less (95% CI [45.07, 79.58], p < .001) to re-access the platform, with 84% (42 of 50) completing it faster on this interface. Participants using the VCA interface took 18 s less (95% CI [7.08, 30.03], p = .002) to share the information, with 56% (28 of 50) completing it faster on this interface. Additional task completion time results are provided in Table 2 in Appendix S1, and the graphical representation is shown in Figure 3.

3.2 Perceived usefulness and ease of use

The reliability of perceived usefulness (Cronbach's α = 0.98) and perceived ease of use (Cronbach's α = 0.96) was high. We found that the VCA approach had higher technology acceptance than the standard interface on the two Technology Acceptance Model metrics, perceived usefulness and perceived ease of use, as shown in Figure 4a (Davis, 1989). The perceived usefulness score was 1.25 units higher (95% CI [0.58, 1.92], p < .001) for the VCA interface, with 66% (33 of 50) of the participants rating it more useful. The perceived ease of use score was 1.17 units higher (95% CI [0.61, 1.72], p < .001) for the VCA interface, with 68% (34 of 50) rating the interface easier to use. Additional TAM scores are provided in Table 3 in Appendix S1.
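
The reliability values above are Cronbach's α coefficients. As a reminder of how this coefficient is computed, the sketch below applies the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), to hypothetical ratings; the function name and the data are illustrative and are not study data.

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of per-item score lists, aligned by respondent."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]        # total score per respondent
    item_var_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))

# Hypothetical 7-point ratings: three items answered by four respondents.
items = [
    [7, 5, 6, 3],
    [6, 5, 7, 2],
    [7, 4, 6, 3],
]
alpha = cronbach_alpha(items)
```

Values close to 1 indicate that the items of a scale vary together across respondents, which is the sense in which the reliabilities reported here are described as high.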

3.3 System usability

The reliability scores for overall satisfaction (Cronbach's α = 0.97), system usefulness (Cronbach's α = 0.97), information quality (Cronbach's α = 0.92), and interface quality (Cronbach's α = 0.90) were high. The VCA approach scored significantly higher across all system usability metrics, overall satisfaction, system usefulness, information quality, and interface quality than the standard interface as shown in Figure 4b (Lewis, 1995). The overall satisfaction score among the participants was 0.90 units higher (95% CI [0.48, 1.32], p < .001) for the VCA interface, with 68% (34 of 50) of the participants giving this interface higher overall satisfaction ratings. The system usefulness score among the participants was 0.92 units higher (95% CI [0.42, 1.41], p = .001) for the VCA interface, with 64% (32 of 50) of the participants giving this interface higher system usefulness ratings. The information quality score among the participants was 0.81 units higher (95% CI [0.44, 1.19], p < .001) for the VCA interface, with 68% (34 of 50) of the participants giving this interface higher information quality ratings. The interface quality score among the participants was 1.02 units higher (95% CI [0.54, 1.50], p < .001) for the VCA interface, with 62% (31 of 50) of the participants giving this interface higher interface quality ratings. Additional IBM-CSUQ scores are provided in Table 4 in Appendix S1.

3.4 NASA-TLX scores

Based on the NASA-TLX measure, the VCA interface had lower perceived workload, temporal demand, mental demand, and effort, all of which positively affect a user's effectiveness and performance, than the standard interface as shown in Figure 4c (Hart & Staveland, 1988). The overall workload among the participants was 8.32 units lower (95% CI [3.01, 13.63], p = .003) for the VCA interface, with 68% (34 of 50) of the participants giving this interface a lower workload rating. The temporal demand among the participants was 9.5 units lower (95% CI [1.74, 17.26], p = .017) for the VCA interface, with 52% (26 of 50) of the participants giving this interface a lower temporal demand rating. The mental demand among the participants was 10.00 units lower (95% CI [3.61, 16.39], p = .003) for the VCA interface, with 60% (30 of 50) of the participants giving this interface a lower mental demand rating. The effort among the participants was 11.00 units lower (95% CI [3.75, 18.25], p = .004) for the VCA interface, with 62% (31 of 50) of the participants giving this interface lower effort ratings. Additional NASA-TLX scores are provided in Table 5 in Appendix S1.

3.5 Preference

Seventy-two percent (36 of 50) of the participants preferred the VCA interface, while twenty-eight percent (14 of 50) preferred the standard interface. There was a statistically significant difference in the preference for the VCA interface compared to the standard interface, z = 3.11, p = .002.
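
The reported statistic can be checked from the preference counts alone. Because each participant ranked the two interfaces 1 or 2, every paired difference has the same magnitude, so the Wilcoxon signed-rank test reduces to a comparison of 36 positive against 14 negative signs. The sketch below assumes the normal approximation with tie correction and no continuity correction; the function name is hypothetical.

```python
import math
from statistics import NormalDist

def wilcoxon_z(diffs):
    """Normal-approximation z and two-sided p for the Wilcoxon signed-rank test (tie-corrected)."""
    d = [x for x in diffs if x != 0]            # zero differences are dropped
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and abs(d[order[j]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + 1 + j) / 2              # mean of ranks i+1 .. j
        for k in range(i, j):
            ranks[order[k]] = avg_rank
        size = j - i
        tie_term += size ** 3 - size
        i = j
    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p

# Rank of standard minus rank of VCA: +1 when the VCA interface was ranked first.
diffs = [1] * 36 + [-1] * 14
z, p = wilcoxon_z(diffs)
print(round(z, 2), round(p, 3))  # 3.11 0.002
```

Under these assumptions the sketch reproduces the reported z = 3.11 and p = .002.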

3.6 Non-significant results

There was not a statistically significant mean difference in the number of errors made by the participants between the two interfaces, t(49) = 0.17, p = .87. Additionally, there was not a statistically significant mean difference in the level of frustration among the participants between the two interfaces, t(49) = 1.86, p = .068. Additional information is provided in Table 6 in Appendix S1.

3.7 Retrospective think-aloud session

The think-aloud data were analyzed to identify the most frequent and common responses by the participants. In terms of the VCA interface, most of the participants liked the guidance provided by the virtual assistant as they felt an added connection with the interface when the assistant asked questions. They also liked the color contrast and effective use of symbols within the interface. Some participants mentioned that the interface required too many clicks especially during the editing task. However, they rationalized this criticism by suggesting the system may not be used every day, thus mitigating this drawback.

With the standard interface, the comments were frequently unfavorable for most of the tasks except for editing the information. Although the participants mentioned that the icons were old-fashioned, they liked them because they were basic and easy to understand. They also found the method for editing the information by clicking on the icons and entering the information easier than having to go through the question–answer format of the VCA interface. However, they did not like having to read the long lines of written information to complete other tasks. They also did not like having to concentrate and spend the time it took to enter the information correctly and to find the appropriate buttons.

A sample of the participant comments during the retrospective think-aloud sessions is shown in Table 1.

| Interface | Comments |
|---|---|
| Virtual conversational agent interface | ‘I like that someone asking me questions. That made it easy’. |
| | ‘It was more user-friendly. You can see easily what is happening. Crosses, color changes were good’. |
| | ‘Too many clicks required’. |
| | ‘Answering too many questions is kind of boring. But you don’t use this interface every day. So, I think not a big deal’. |
| Standard interface | ‘I had to pay a lot of attention and spent a long time’. |
| | ‘There is too much written information. They are too long so I did not read most of them’. |
| | ‘Icons are simple and clear, but they are kind of old-fashioned’. |
| | ‘Tabular form was simple and clear’. |

4 DISCUSSION

4.1 Practical implications

Electronic FHx tools are an important way to collect this type of information directly from patients. However, for users to adopt healthcare-related IT systems, they must allow for efficient and effective interaction (Agnisarman et al., 2017; Agnisarman, Ponathil, Lopes, & Chalil Madathil, 2018; Ponathil, Agnisarman, Khasawneh, Narasimha, & Madathil, 2017). To date, there has been limited research focused on comparing the efficacy and usability of various FHx tool interfaces (i.e., VCA and standard interfaces) (Facio et al., 2010; Owens, Marvin, Gelehrter, Ruffin, & Uhlmann, 2011). To address this need, we conducted a within-subject controlled study with two platforms for collecting FHx: standard web forms and conversational chat-based interaction, focusing on examining the users’ performance and preference as well as their usability ratings. We found that the VCA interface performed markedly better across all measures assessed except time. Even though the overall time taken was slightly higher, the participants, through both quantitative and qualitative feedback, reported lower levels of mental demand and temporal demand as well as effort and overall workload in completing the tasks using the VCA interface while perceiving this interface as useful and easy to use.

We found time to be a highly variable measure and that minor changes to the interface design and conversation flow could potentially reduce the time required to use the interface. For instance, we observed that users required more clicks in the VCA interface to edit information because they were required to answer multiple questions, compared to editing the information in the standard interface by clicking on the pencil icon and entering the data. Making modifications or creating a hybrid interface would potentially reduce the clicks and, thus, the task completion time. Interestingly, several users in the think-aloud session mentioned they felt the standard interface to be more time-consuming despite their longer completion time for the VCA interface. The feeling of having a conversation with a virtual agent could have led these users to become deeply immersed in the tasks, leading them to perceive that this interface took less time. Overall, these results affirm the advantage of the VCA approach over the standard interface, with approximately 3 out of 4 participants preferring it.

Our results complement the findings of Wang et al. for their virtual agent VICKY, which showed the acceptability and accuracy of their virtual agent in collecting FHx was superior to a standard interface (Wang et al., 2015). The VICKY study obtained general feedback and accuracy using a randomized trial (n = 70), while our study focused on usability using formal usability measures (e.g., TAM, NASA-TLX, CSUQ). In addition, the results of Schmidlen et al. (2019) showed that the patients are backing the use of such chatbots to share FHx information with relatives and healthcare providers. Taken together, these three studies demonstrate the importance of chatbots in the near future and that such VCA approaches for collecting FHx data are easier to use and preferred by users over standard web interfaces. Earlier studies evaluating the usability of standard FHx tool interfaces have shown mixed results; however, none of these was a comparative study (Berger, Lynch, Prows, Siegel, & Myers, 2013; Hulse et al., 2011; Owens et al., 2011). For example, the participants in the study conducted by Owens et al. (2011) were asked if the standard interface (My Family Health Portrait) was understandable and easy to use, with no comparison to an alternative. The Wang study and ours demonstrate that in a comparative study, standard interfaces for FHx collection are less preferred by users than the alternative (i.e., VCA interface). These findings should continue to shape the direction of future FHx tool development, likely prompting developers to invest in VCA approaches.

Furthermore, this study encourages the use of a VCA for collecting complex patient-entered data in health care. Current research on the use of VCAs in health care is primarily focused on delivering therapy or education rather than on collecting data (Crutzen, Peters, Portugal, Fisser, & Grolleman, 2011; Gardiner et al., 2017; Hoermann et al., 2017). Collecting data for health care and research directly from patients is challenging (Chalil Madathil et al., 2013; Holden, McDougald Scott, Hoonakker, Hundt, & Carayon, 2015). Using VCAs to overcome this challenge could possibly lead to new opportunities in health care. The findings from this study suggest that VCA interfaces have the potential to inform data collection design interventions in primary care settings, patient portals, and other electronic health systems that require extensive data from the user.

4.2 Study limitations

The study has several limitations. Since the participants were recruited on and around a college campus, the sample included primarily well-educated people. Since the primary focus of this study was the comparison between the interfaces, the education level and age of the participant were not recruitment criteria. However, our findings are similar to those of the VICKY study, which focused on poor, underserved populations (Wang et al., 2015). Also, users were asked to enter fictional data provided to them at the time of the study. Not knowing the family members may have resulted in increased task completion times and errors and a scenario not representative of the real world. However, if participants had used their own FHx information, it would have been challenging to compare measures (e.g., time) across participants or analyze them as a group, as each participant would have entered different information and faced different challenges, and the learning effects may have been more pronounced. Finally, the study was conducted in a controlled setting with fictional data and a convenience sample, potentially limiting the generalizability of the findings. Evaluating the interfaces in practice with the patients’ own data and with people who have used such tools could help study their effectiveness.

4.3 Research recommendations

Further development and research on VCA interfaces are warranted for FHx collection and other areas of health care. The first step in using FHx is collecting the data; the next is providing decision support to the users. A number of tools, such as MeTree and My Family Health Portrait, are being developed that support clinical decisions by supplying risk assessments and recommendations (Orlando et al., 2013; Owens et al., 2011). These tools collect the data entered by the users and provide risk estimates for various hereditary conditions. However, these tools follow the standard interface design and, as seen from this study, may not be preferred over the VCA interface. Future work could incorporate such decision support systems into this VCA interface or vice versa. The VCAs could ask users additional questions about their genetic history for a particular disease and provide a risk assessment as output. This information could then be incorporated into their electronic health records, which can be viewed by their clinician over time. Such a system could be the simplest and least expensive form of genetic assessment compared to the traditional method of face-to-face or phone conversations with medical service providers, while being effective, accurate, easy to use, and efficient for the clinicians.

Future studies focusing on the data collection aspect of FHx could recruit a highly diverse sample of individual users with actual FHx scenarios to increase representativeness with respect to age, education level, socio-economic status, technology competency, gender, race, ethnicity, and disease. Such studies could examine how different demographic groups perceive the usability and acceptance of these FHx tools and which tools they prefer. Future work could also use real FHx data with the same measures as those in this study (e.g., TAM, NASA-TLX, CSUQ) and compare the results to ours.

In addition, future comparisons of VCAs with other data collection methods could be conducted using an implementation science framework. A number of implementation science frameworks, such as RE-AIM (Reach, Effectiveness, Adoption, Implementation, and Maintenance) and Proctor, are being applied to determine the impact of such individual research interventions on practice and to estimate their impact on public health (Dzewaltowski, Estabrooks, & Glasgow, 2004; Glasgow, McKay, Piette, & Reynolds, 2001; Proctor et al., 2009; Sweet, Ginis, Estabrooks, & Latimer-Cheung, 2014). The specific aspect evaluated in this study, VCA versus standard interface, helps confirm which strategy is more effective. These findings could be incorporated into a larger implementation science framework to evaluate the real-world effectiveness of VCAs.

5 CONCLUSION

Our results suggest that the VCA interface reduces user workload and that users perceive it as more usable and satisfactory than the standard interface. The users preferred the immersive feeling of having a conversation with the virtual agent to the standard interface's tabular, pop-up method of data entry. VCA interfaces appear to be a promising new approach that could change how data collection is designed in health care interfaces.

ACKNOWLEDGMENTS

This work was supported in part by funding from the National Cancer Institute (5K07CA211786) and the Hollings Cancer Center's Cancer Center Support Grant (P30CA138313) from the Medical University of South Carolina. Dr. Firat Ozkan was supported through the post-doctoral research program at The Scientific and Technological Research Council of Turkey. This research was conducted while AP was pursuing his master's degree. The study was approved by Clemson University's Institutional Review Board (IRB2017-288).

AUTHOR CONTRIBUTIONS

AP, FO, and KCM had full access to all the data in the study. AP, FO, and KCM are responsible for study concept and design. AP, FO, BW, JB, and KCM contributed to scenario development and validation. AP and FO conducted the experimental study. AP and FO performed the data analysis. AP, FO, and KCM wrote the manuscript. All authors provided critical revisions of the important intellectual content in the manuscript.

COMPLIANCE WITH ETHICAL STANDARDS

Conflict of interest

AP, FO, JB, and KCM declare that they have no conflict of interest. BW is a co-founder of Doxy.me and itrunsinmyfamily. He contributed to the research concept and manuscript review but did not participate in data collection or analysis.

Human studies and informed consent

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2000. Informed consent was obtained from all participants for being included in the study.

Animal studies

No animal studies were carried out by the authors for this article.