Gene Variant Databases and Sharing: Creating a Global Genomic Variant Database for Personalized Medicine

For the 25th Anniversary Commemorative Issue

ABSTRACT

Revolutionary changes in sequencing technology and the desire to develop therapeutics for rare diseases have led to the generation of an enormous amount of genomic data in the last 5 years. Large-scale sequencing in both research and diagnostic laboratories has linked many new genes to rare diseases, but has also generated many variants that we cannot interpret today. We clearly remain a long way from a complete understanding of the variation in the human genome and its association with health and disease. Recent studies have identified markers of susceptibility to infectious diseases as well as the contribution of rare variants to complex diseases in different populations. The sequencing revolution has also led to the creation of a large number of databases that act as “keepers” of data and, in many cases, offer an interpretation of each variant's effect. This interpretation is based on reports in the literature and on prediction models, and is in some cases supported by functional evidence. As we move toward the practice of genomic medicine and consider its place in “personalized medicine,” it is time to ask how we can aggregate this wealth of data into a single database that serves multiple users with different goals.

The Burden of Data from Large-Scale Sequencing Projects

We have come a long way since the first draft of the human genome was completed in 2001 [http://www.genome.gov; Venter, 2000; Lander et al., 2001; Venter et al., 2001]. Genomic data have revealed the complexity of the human genome, and the concept of one gene, one disease has changed. This has implications for all areas of medicine, not only rare diseases [Macarthur, 2012]. Data from large-scale sequencing projects, such as the 1000 Genomes Project, and from data-aggregation projects, such as the ExAC database, are now freely available for use in research and diagnostic settings [Watt et al., 2013; Lanthaler et al., 2014]. These projects have made two things clear: (1) certain regions of the human genome still cannot be sequenced to adequate depth, or have not yet been assigned a sequence because of their sequence content, strand orientation, or assembly errors; and (2) there are thousands of rare variants in the general population that are difficult to classify. With respect to the latter, two influential papers, published in 2014 and 2015, are now widely used by research and diagnostic groups to classify variants. The MacArthur et al. publication in Nature [MacArthur et al., 2014] sets out guidelines for investigating the causality of sequence variants in human disease by integrating gene-level and variant-level evidence. The American College of Medical Genetics and Genomics (ACMG) first published recommendations for sequence variant interpretation in 2005 and revised them in 2008; the most recent revision, in 2015, takes into account new gene discoveries and major advances in sequencing and introduces a five-term classification system [Maddalena et al., 2005; Richards et al., 2008; Richards et al., 2015]. These guidelines are directed specifically toward inherited disease testing in clinical laboratories, although they have also been used to classify somatic variants.
Guidelines for classifying somatic variants are expected in 2016 from a workgroup of the Association for Molecular Pathology. Both the MacArthur et al. and the Richards et al. recommendations make clear that these guidelines must be applied with caution and with reference to the strength of the gene–disease association, given the continuing lack of variant data from many populations.

Variant Databases

Inherited Diseases

Inherited genetic disorders range in incidence from 1 in 3,000 to 1 in 200,000 or rarer and are categorized as rare or ultrarare disorders. Since the early discoveries of genes such as CFTR, DMD, FMR1, and PAH, there have been various efforts to create gene- and disease-specific mutation databases. These efforts started with locus-specific databases (LSDBs) [Fokkema et al., 2005, 2011; Pan et al., 2011; Bean et al., 2013; International Alport Mutation et al., 2014; Lanthaler et al., 2014] built by individual research groups for genes such as TP53 [Forbes et al., 2011]. In the last decade, as sequencing costs dropped, new genes were discovered rapidly. This made it necessary to create gene- and disease-specific databases, and the Human Gene Mutation Database (HGMD) spearheaded much of this effort [Cooper et al., 1998; Krawczak et al., 2000; Cooper et al., 2006; George et al., 2008; Adzhubei et al., 2013; Peterson et al., 2013; Rivas et al., 2015]; however, these efforts were not directed toward collecting clinically curated variants. Databases such as HGMD act as storehouses of genetic variation reported in the published literature, whereas LSDBs have focused more on collecting curated variants in gene-specific databases. Overall, available databases show that only 25%–30% of variants can be neatly classified as “pathogenic” or “benign,” with the remainder classified as “variants of unknown clinical significance.”

Somatic Disease

Variant aggregation in somatic disease relies mainly on the COSMIC database, cancer genome sequencing projects, and publications on targeted therapies for different cancers [Stoupel et al., 2005; Higgins et al., 2007; Forbes et al., 2010; Forbes et al., 2011; Medina et al., 2012; Sachdev et al., 2013; Watt et al., 2013]. Although the effects of several variants on response to specific drug therapies have now been identified, a great many variants still cannot be interpreted in a clinical context and fall into the category of “variants of unknown clinical significance.”

The Case for Data Submission and Variant Curation from Clinical Laboratories

Clinical laboratories develop new tests based on discoveries reported in the literature [Bean et al., 2013]. These tests are largely “evidence based” with respect to disease causality. After a gene discovery is first reported, most sequencing of that gene takes place in a clinical setting, yet these data rarely reach the literature because neither journals nor the clinical laboratories themselves are interested in publishing them. As a result, a vast amount of curated sequence data remains within clinical laboratories. Many organizations, including the NIH, have begun collecting these data through efforts such as the ClinGen consortium [Peterson et al., 2013; Landrum et al., 2016].

US-Based Laboratories

Advances in genomic sequencing in the past decade have enabled large-scale clinical laboratory sequencing of more than 2,000 disease-associated genes, as well as exomes and genomes, worldwide. These data have typically not been published or deposited into public databases and have therefore been inaccessible to other clinical laboratories, researchers, and clinicians. Most clinical laboratories use ad hoc data-mining processes for variant interpretation, drawing on large genomic data sets such as dbSNP, 1000 Genomes, and the Broad ExAC database, as well as published literature, locus-specific databases, data from experts researching these areas, and internal laboratory data. Interpretations can differ between laboratories, not only because of differences in the available data but also because of the lack of standards for variant classification. The Clinical Genome (ClinGen) Resource aims to improve our understanding of genomic variation through data sharing and collaboration, starting with aggregating sequence and structural variants in the publicly available ClinVar knowledge base of the National Center for Biotechnology Information (NCBI) [Peterson et al., 2013; Rehm et al., 2015; Landrum et al., 2016]. Recent submissions have increased the number of variants in ClinVar to >90,000, providing a fast-growing resource for the community as well as a substrate for catalyzing the much-needed harmonization of clinical variant classification standards. ClinVar efforts have focused largely on submissions from US-based laboratories, supported by an NHGRI grant. Clinical laboratories typically do not have dedicated resources for making these submissions, so external funding is required to drive them.

The Human Variome Project

The Human Variome Project (HVP) was the first large-scale effort to aggregate variants from clinical laboratories. HVP is organized through country-specific nodes that collect variant data for various genes from different laboratories [Oetting, 2011; Stanley et al., 2014; Oetting et al., 2016]. This effort is extremely important because we still know so little about how to distinguish rare, benign variants from disease-causing variation in the world's different populations. The ExAC database from the Broad Institute, which has aggregated variant data from over 66,000 exomes, has revealed many errors in the pathogenicity assignments of variants, but even this is not enough to classify rare variants in different populations.

Paucity of Submissions from Clinical Laboratories

As we move toward realizing the full potential of the human genome for personalized medicine, it is critical that we understand the impact of genomic variation on health and disease. Groups in the US, Australasia, and Europe have made tremendous progress in aggregating data, but these efforts remain extremely fragmented. This is due mainly to a lack of funding for a unified database, a lack of engagement from key stakeholders, and the use of different IT formats and frameworks for collecting data. According to the NIH Genetic Testing Registry, several hundred laboratories perform genetic testing, but only 25 have submitted data to ClinVar, a total of ∼90,000 variants. Clinical laboratories generally receive very limited information about a patient's clinical presentation when a test is ordered, which makes it impossible to deposit accurate phenotypic data into these databases. Caution must therefore be exercised when correlating phenotype with genotype data from databases, because the reported phenotypes may contain serious errors. We must also remember that our understanding of gene–disease causality is not static, and interpretations may change over time.

Resolving Variants of Unknown Clinical Significance

As described above, most clinical laboratories rely on ad hoc data-mining processes that draw on large genomic data sets as well as their internal laboratory data, and interpretations can differ between laboratories because of differences in available data and a lack of standards for variant classification. The NHGRI-funded ClinGen Resource addresses this through data sharing and collaboration, starting with aggregating sequence and structural variants in NCBI's publicly available ClinVar knowledge base. Emory Genetics Laboratory (EGL), ARUP, the Laboratory for Molecular Medicine (LMM), and the University of Chicago tested this process by collectively submitting over 20,000 variants in genes associated with various disorders [ASHG abstract; http://www.ncbi.nlm.nih.gov/clinvar/, accessed 03/11/2016]. For the 15,000 variants submitted by EGL, comparison with other laboratories' classifications revealed discrepancies for 82 variants with LMM, 15 with ARUP, and 28 with the University of Chicago. Of the 43 differences between EGL and ARUP or the University of Chicago, more than 95% were minor (e.g., likely benign vs. benign) and the remaining ~5% were more significant (e.g., VOUS vs. benign or pathogenic). All minor differences were resolved using additional data available in the laboratories (e.g., functional data, direct communication with a research investigator, or in-house testing of additional family members). Of the more significant differences, 97% were resolved; those that could not be resolved stemmed mainly from differences in laboratory-specific classification rules.
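
The minor/significant distinction above can be made mechanical. The Python sketch below is illustrative only and is not the procedure the laboratories used: it encodes the five-term classification introduced in the 2015 ACMG guidelines as an enum, treats a disagreement as minor when both calls fall on the same side of the uncertain category (benign/likely benign, or, by our own analogy, pathogenic/likely pathogenic), and counts discrepancies over variants classified by both laboratories. The variant identifiers and laboratory data are hypothetical.

```python
from enum import Enum
from typing import Dict, Optional


class Classification(Enum):
    """Five-term classification (2015 ACMG guidelines)."""
    PATHOGENIC = "pathogenic"
    LIKELY_PATHOGENIC = "likely pathogenic"
    UNCERTAIN = "uncertain significance"
    LIKELY_BENIGN = "likely benign"
    BENIGN = "benign"


# Assumption: a disagreement is "minor" when both calls lie on the same side
# of the uncertain category; anything involving VUS or crossing sides is
# "significant".
BENIGN_SIDE = {Classification.BENIGN, Classification.LIKELY_BENIGN}
PATHOGENIC_SIDE = {Classification.PATHOGENIC, Classification.LIKELY_PATHOGENIC}


def discrepancy_level(a: Classification, b: Classification) -> Optional[str]:
    """Return None if the two calls agree, else 'minor' or 'significant'."""
    if a == b:
        return None
    if {a, b} <= BENIGN_SIDE or {a, b} <= PATHOGENIC_SIDE:
        return "minor"
    return "significant"


def summarize(lab_a: Dict[str, Classification],
              lab_b: Dict[str, Classification]) -> Dict[str, int]:
    """Count discrepancy levels over variants classified by both labs."""
    counts = {"minor": 0, "significant": 0}
    for variant in lab_a.keys() & lab_b.keys():
        level = discrepancy_level(lab_a[variant], lab_b[variant])
        if level is not None:
            counts[level] += 1
    return counts


# Hypothetical example data keyed by made-up variant identifiers.
C = Classification
lab_x = {"v1": C.BENIGN, "v2": C.UNCERTAIN, "v3": C.PATHOGENIC}
lab_y = {"v1": C.LIKELY_BENIGN, "v2": C.BENIGN, "v3": C.PATHOGENIC}
print(summarize(lab_x, lab_y))  # {'minor': 1, 'significant': 1}
```

Resolving each discrepancy still requires the evidence review described above; a tally like this only helps triage which disagreements deserve attention first.
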
An analysis of the LMM/EGL discrepancies revealed three common causes: (1) threshold differences (e.g., the minor allele frequency above which a variant is classified as benign), (2) reporting differences that influence classification stringency (e.g., including likely benign variants on clinical reports, which leads to a lower threshold for classifying variants as such), and (3) the weight given to computational predictions. Of the 82 variants compared, only one was deemed a discrepancy that could not be resolved without additional clinical expert input (an atypical Fabry disease variant). Subsequent submissions have increased the number of variants in ClinVar to >90,000, providing a rapidly growing resource for the community and a substrate for the much-needed harmonization of clinical variant classification standards.
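
Threshold differences, the first cause listed above, can produce discordant calls even when two laboratories see identical data. The hypothetical sketch below applies only a frequency rule with two illustrative minor allele frequency cutoffs; `LabPolicy`, `frequency_call`, and all numbers are assumptions for illustration, and real classification combines many other lines of evidence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabPolicy:
    """Hypothetical laboratory rule: call benign above a MAF cutoff."""
    name: str
    benign_maf_threshold: float


def frequency_call(maf: float, policy: LabPolicy) -> str:
    """Apply only the frequency rule; all other evidence is ignored here."""
    if maf > policy.benign_maf_threshold:
        return "benign"
    return "not classified by frequency alone"


lab_1 = LabPolicy("Lab 1", benign_maf_threshold=0.01)  # 1% cutoff (illustrative)
lab_2 = LabPolicy("Lab 2", benign_maf_threshold=0.05)  # 5% cutoff (illustrative)

maf = 0.02  # variant seen at 2% in a population data set (illustrative)
for lab in (lab_1, lab_2):
    print(lab.name, "->", frequency_call(maf, lab))
# Lab 1 -> benign
# Lab 2 -> not classified by frequency alone
```

Agreeing on shared, published thresholds removes this class of discrepancy without requiring any new data.
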

Big and Niche Pharma and Rare Disease

Rare diseases, though individually rare, are collectively common, and big pharma, which until recently shied away from targeted therapeutic approaches for genetic disease, is now interested in developing drugs to treat them. Many niche pharma companies have drugs in clinical trials; a prominent example is the range of treatment options in different phases of clinical trials for Duchenne muscular dystrophy [Falzarano et al., 2015]. In addition, the recent discovery of gene-editing approaches such as the CRISPR/Cas system has spurred further interest in treating rare diseases [Wojtal et al., 2016]. These developments make a unified global database of gene–disease associations and their variants a clear necessity.

Patient Registries and Disease Advocacy Groups

Many patient advocacy groups have taken the lead in creating patient registries, driven mainly by patients who register themselves by submitting their genetic test results and clinical details. These data are self-reported and at times curated by physicians. Most of these registries are geared toward “clinical trial readiness” for new therapeutic strategies, which are emerging rapidly. These registries make clear that patients are driving the effort toward “personalized medicine”: rather than keeping their results to themselves, they are willing to participate in databases that can give them access to new clinical trials and make them part of a community that shares the goals and concerns of their particular disease [Peterson et al., 2013].

Privacy Issues in Databases

Most databases that aggregate data from the literature or present gene-level data contain no patient-level information or full clinical histories, to avoid any risk of identification. Clinical laboratories probably face the greatest exposure and have policies in place for depositing data in various databases. Privacy concerns naturally limit the amount of information that can be deposited into databases.

The Need for an Aggregated Global Genomic Variant Database

The multiple fragmented efforts to deposit, collect, store, and interpret data have created conflict in the community and consumed resources that could otherwise be directed toward a single streamlined effort with a uniform format for data collection and reporting. Several new efforts, such as the Global Alliance for Genomics and Health, focus on developing unified strategies for the responsible, voluntary, and secure sharing of genomic and clinical data. Despite a tremendous amount of work, the community is still pursuing multiple parallel approaches, which at times leads to duplicate data collection and thus to errors in our estimates of gene-variant burden in the population.
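
To illustrate how duplicate data distort burden estimates, the Python sketch below counts carriers of a variant pooled from several sources, with and without collapsing repeat reports of the same individual. It assumes a hypothetical shared, de-identified `sample_id` across sources and variants already normalized to identical coordinates; in practice, linking records about the same person across databases is itself difficult and constrained by the privacy issues discussed above. The source names and coordinates are made up.

```python
from collections import Counter
from typing import Iterable, NamedTuple, Tuple


class Record(NamedTuple):
    source: str      # database the report came from
    sample_id: str   # hypothetical shared, de-identified individual key
    chrom: str
    pos: int
    ref: str
    alt: str


VariantKey = Tuple[str, int, str, str]


def carrier_counts(records: Iterable[Record], deduplicate: bool) -> Counter:
    """Count carriers per variant, optionally collapsing repeat reports.

    Assumes variants are already normalized (same genome build and
    representation), so identical variants have identical keys.
    """
    counts: Counter = Counter()
    seen = set()
    for r in records:
        key: VariantKey = (r.chrom, r.pos, r.ref, r.alt)
        if deduplicate:
            if (r.sample_id, key) in seen:
                continue  # individual already counted via another source
            seen.add((r.sample_id, key))
        counts[key] += 1
    return counts


# Hypothetical records: individual S1 is reported by two different sources.
records = [
    Record("registry_a", "S1", "1", 1000, "A", "G"),
    Record("lab_b", "S1", "1", 1000, "A", "G"),
    Record("lab_b", "S2", "1", 1000, "A", "G"),
]
naive = carrier_counts(records, deduplicate=False)
dedup = carrier_counts(records, deduplicate=True)
print(naive[("1", 1000, "A", "G")], dedup[("1", 1000, "A", "G")])  # 3 2
```

Without deduplication the variant appears in three carriers rather than two, inflating its apparent frequency by half.
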

Given global Web accessibility, it is impossible to limit the creation of databases. Today, only 5,200 of the 22,000 genes in the human genome are known to be disease associated, and many more discoveries are expected in the next decade. It is also clear that some genes previously reported as disease associated do not in fact cause disease; they may be functionally redundant or implicated in an as-yet-unknown disorder.

High-quality, accurate classification of the clinical significance of an identified sequence variant is key in personalized genomic medicine. The increasing availability of population data on genetic variation, covered above, has greatly improved our ability to interpret normal sequence variation; however, sequence data from most patients with genetic disease reside with clinical laboratories. It is critical that clinical laboratories recognize the importance of the data they hold and share these data with the medical community.

What Will a Global Genomic Variant Database Look Like?

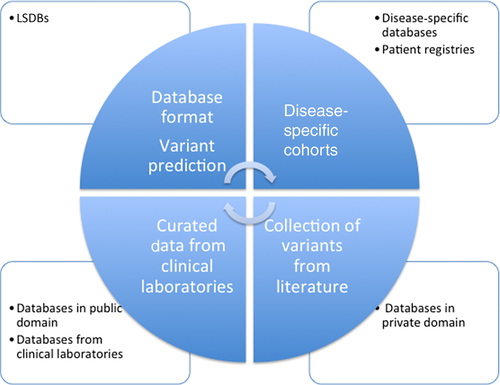

We must design a global approach that gathers the genetic information held in different databases and registries into a common global database, which would not only act as a repository but also give appropriate recognition to efforts already in place (Fig. 1). This can be done through microattribution, which funding agencies need to recognize as a positive contribution by the investigator. Without such recognition, investigators may not submit data to databases, because their efforts are focused on the publications needed to secure research grants.

Who Can Fund a Global Genomic Variant Database?

Funding for the various databases comes either from the organizations creating them or from large funding agencies. Pharmaceutical companies, which need access to genomic data and to patients, obtain this information through collaborations with patient advocacy groups or joint efforts with other entities. The total funding that goes into these efforts is large, and a common fund created through a nonprofit entity could probably achieve the goal of a global database for the community.

Should the Database Be Freely Accessible and How Can We Make It Sustainable?

In creating a global database, it is important to put mechanisms in place to make it sustainable. One way to do this is to offer different levels of access according to the depth of data a user needs. The data should be freely available to patients and patient advocacy groups, whereas entities using the data for clinical interpretation or for developing therapeutic strategies could gain fee-based access. This approach would make the database sustainable and support the substantial effort required to create and maintain it.
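
The tiered model described above can be sketched as a simple access check. This is a minimal Python sketch under stated assumptions: the three depth levels, the user types, and which user type receives which tier are all hypothetical choices for illustration, not a proposed specification.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical access tiers; a higher tier exposes deeper data."""
    SUMMARY = 1      # e.g., variant and aggregate classification
    EVIDENCE = 2     # e.g., submitter evidence and literature links
    CASE_LEVEL = 3   # e.g., de-identified case-level data


# Hypothetical policy: user type -> (highest tier granted, fee required).
ACCESS_POLICY = {
    "patient": (Tier.SUMMARY, False),
    "advocacy_group": (Tier.SUMMARY, False),
    "clinical_laboratory": (Tier.CASE_LEVEL, True),
    "pharma": (Tier.CASE_LEVEL, True),
}


def can_access(user_type: str, requested: Tier, fee_paid: bool) -> bool:
    """Grant access if any required fee is paid and the tier is allowed."""
    granted, fee_required = ACCESS_POLICY[user_type]
    if fee_required and not fee_paid:
        return False
    return requested <= granted


print(can_access("patient", Tier.SUMMARY, fee_paid=False))    # True
print(can_access("pharma", Tier.CASE_LEVEL, fee_paid=False))  # False
```

Keeping the policy in a single table makes it easy to revise which groups pay and how deep each tier goes as the governance model evolves.
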

Summary

Technological advances have made it necessary for the community to consider approaches to the large-scale collection of genome sequencing data and clinical outcomes. It is time to investigate how we can collect data from individual data sets and move away from the current pattern, in which data exist in silos and are kept in nonstandardized formats. Large community efforts to aggregate data should be supported to create a global database that holds multiple levels of data and grants users different levels of access to make it sustainable. Above all, it is critical that key stakeholders come together now to drive the implementation of a global genomic variant database.