Right-hemispheric processing of non-linguistic word features: Implications for mapping language recovery after stroke

Abstract

Verbal stimuli often induce right-hemispheric activation in patients with aphasia after left-hemispheric stroke. This right-hemispheric activation is commonly attributed to functional reorganization within the language system. Yet previous evidence suggests that functional activation in right-hemispheric homologues of classic left-hemispheric language areas may partly be due to processing nonlinguistic perceptual features of verbal stimuli. We used functional MRI (fMRI) to clarify the role of the right hemisphere in the perception of nonlinguistic word features in healthy individuals. Participants made perceptual, semantic, or phonological decisions on the same set of auditorily and visually presented word stimuli. Perceptual decisions required judgements about stimulus-inherent changes in font size (visual modality) or fundamental frequency contour (auditory modality). The semantic judgement required subjects to decide whether a stimulus is natural or man-made; the phonologic decision required a decision on whether a stimulus contains two or three syllables. Compared to phonologic or semantic decision, nonlinguistic perceptual decisions resulted in a stronger right-hemispheric activation. Specifically, the right inferior frontal gyrus (IFG), an area previously suggested to support language recovery after left-hemispheric stroke, displayed modality-independent activation during perceptual processing of word stimuli. Our findings indicate that activation of the right hemisphere during language tasks may, in some instances, be driven by a “nonlinguistic perceptual processing” mode that focuses on nonlinguistic word features. This raises the possibility that stronger activation of right inferior frontal areas during language tasks in aphasic patients with left-hemispheric stroke may at least partially reflect increased attentional focus on nonlinguistic perceptual aspects of language. Hum Brain Mapp, 2013. © 2012 Wiley Periodicals, Inc.

INTRODUCTION

In the past two decades, behavioral and functional imaging studies of word processing have shown that subjects implicitly process stimulus-inherent perceptual features irrelevant to a given task [Kappes et al., 2009; Price et al., 1996; Schirmer, 2010]. Automatic processing of inherent stimulus characteristics has been demonstrated for semantic [Collins and Cooke, 2005; Hinojosa et al., 2010; Kuchinke et al., 2005; MacLeod, 1991; Price et al., 1996] and nonlinguistic stimulus content [Appelbaum et al., 2009; Roser et al., 2011]. While functional imaging studies of implicit linguistic processing tended to reveal predominant involvement of left hemispheric language areas [Price et al., 1996], implicit processing of nonlinguistic stimulus features such as emotional prosody provided some evidence for right hemispheric involvement [Appelbaum et al., 2009; Roser et al., 2011]. So far, linguistic processing has never been directly contrasted with perceptual processing, using the same word stimuli within the same group of subjects.

The potential contribution of concomitant perceptual processes to right-hemispheric activation during linguistic tasks is particularly relevant to neuroimaging studies of functional recovery from aphasia following left-hemispheric stroke. In this context, right-hemispheric activation in regions homologous to left-lateralized language areas has been interpreted as compensatory linguistic processing due to reorganization within the language network [e.g., Saur et al., 2006; Thiel et al., 2006; Winhuisen et al., 2005]. Studying the potential contribution of perceptual nonlinguistic processes to activation of the right hemisphere may help to explain bilateral or right-dominant activation patterns during language processing in persons with aphasia after brain damage.

This study used functional MRI (fMRI) to clarify the role of the right hemisphere in the perception of nonlinguistic word features in healthy individuals. The experimental task was inspired by Devlin et al. [2003] who investigated the contribution of the left IFG to semantic processing by contrasting semantic with phonologic judgements using the same set of words. Additionally, a low-level perceptual control task was implemented in that study in which subjects were asked to judge the length of a line drawn underneath each written stimulus. We extended the experimental task used by Devlin et al. [2003] in three ways. (i) Given the focus of the study on stimulus-related perceptual processing, we modified the perceptual control condition to a task in which the perceptual judgements had to be performed on the word stimuli themselves. By embedding the perceptual manipulation into the word stimuli we were able to explore task-induced changes in activation for perceptual relative to linguistic processing on the basis of identical word stimuli. (ii) We directly compared perceptual processing to each of the two linguistic conditions (i.e., semantic or phonologic decisions). This enabled us to identify right-hemispheric regions that were sensitive to perceptual word features independent of the type of linguistic processing (semantic or phonologic processing). (iii) We presented stimuli not only in the visual but also in the auditory modality. Perceptual manipulations consisted of a change in fundamental frequency of a spoken word or a change in font size of a written word. Therefore, the study design was suited to identify modality-specific as well as modality-independent effects of nonlinguistic perceptual word processing.

Since stimuli were identical across the linguistic and the perceptual tasks, we reasoned that any BOLD signal changes between conditions should be attributable to the type of cognitive process (i.e., perceptual versus linguistic process). Furthermore, relative to semantic or phonologic processing, we expected that right hemispheric activation should increase in the perceptual task, in which subjects were asked to explicitly direct their attention to auditory and visual perceptual stimulus characteristics.

MATERIALS AND METHODS

Subjects

Fourteen right-handed German native speakers with no history of neurological disorder or head injury (seven females, 27–61 years old, mean age 41.9; seven males, 31–69 years old, mean age 49.1) participated. Right-handedness was tested with the 10-item version of the Edinburgh Handedness Inventory [Oldfield, 1971]. All subjects gave written informed consent prior to the investigation and were paid for participation. The study was performed according to the guidelines of the Declaration of Helsinki and approved by the Ethics Committee of the University Hospital Hamburg-Eppendorf.

Experimental Design

Figure 1 illustrates the experimental design. The study used a three-factorial within-subject design with the factors task (semantic, phonologic, or nonlinguistic perceptual judgments), modality (spoken vs. written word stimuli), and perceptual manipulation (manipulated vs. nonmanipulated word stimuli). Word stimuli were divided into two sets (Set A and Set B) each containing 56 unique stimuli. Stimuli in Set A were matched to those in Set B with respect to word frequency, number of letters, and imageability, and contained an equal number of man-made vs. natural and two- vs. three-syllable words. For the first half of the subjects, Set A was presented auditorily and Set B was presented visually. After half of the subjects had been tested, Set A was switched with Set B such that Set A was now visually presented, whereas Set B was presented in the auditory modality. Thus, all of the 112 stimuli were presented in both modalities, in order to minimize stimulus-induced differences in the planned comparisons between modalities.

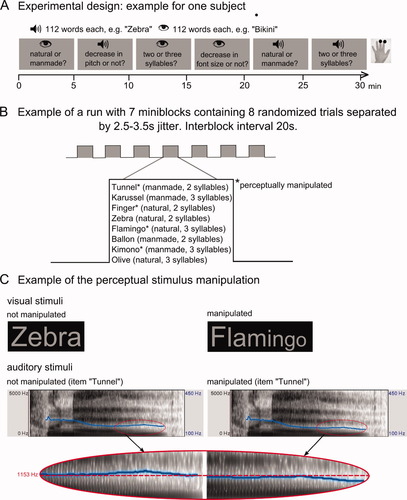

A: Experimental design, example for one subject. The experiment consisted of three auditory and three visual runs. The order of runs was counterbalanced across subjects. B: Example of a run. Each miniblock was preceded by the same brief instruction. In total, there were three runs for each modality, such that each stimulus was repeated three times, once per task per modality. Within miniblocks, stimuli were pseudorandomized such that there were no more than three repetitions of stimuli with the same feature (e.g., manmade). Original stimuli were in German. C: Examples of manipulated and nonmanipulated stimuli. In the visual perceptual task, font size decreased across the length of the word. In the auditory perceptual task, vocal pitch was decreased 13 halftones towards the end of the word. Pictured are two spectrograms of an original stimulus item. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

Experimental stimuli were divided into six runs with 56 stimuli each (Fig. 1A,B). Each subject received three “auditory” and three “visual” runs. In the three auditory runs, the same 56 auditory stimuli (e.g., set A) were presented while subjects performed a different task in each run (semantic, phonologic, or nonlinguistic task, respectively). In the same manner, three of the six runs presented the other 56 stimuli visually (e.g., set B) three times while subjects performed a different task in each run. Each word only appeared once within a run. The order of auditory and visual runs was pseudorandomized (with the constraint of no more than two runs of the same modality in a row) and counterbalanced across participants. An example of an individual sequence is given in Figure 1A.

Task and presentation modality were held constant within each run. Within runs, stimuli were arranged into seven “mini-blocks.” Each mini-block was preceded by the same spoken or written instruction, according to the modality and task of the given run. To increase design efficiency, mini-blocks were separated by six periods of 20 s of silent rest during which the screen remained dark and no stimuli were presented. Each mini-block contained eight stimuli (four three-syllable and four two-syllable words for which the semantic, phonologic and nonlinguistic perceptual characteristics had been completely crossed) which were separated by a randomly assigned stimulus onset asynchrony of between 2.5 and 3.5 s. Subjects initiated the presentation of the stimuli in the mini-block by button press. The order of mini-blocks within each run and the order of stimuli within mini-blocks were pseudorandomly assigned for each subject. Each run took about 4.5–5 min to complete.
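The timing figures in this paragraph can be cross-checked with a short calculation. The following Python sketch assumes a mean stimulus onset asynchrony of 3.0 s (the midpoint of the stated 2.5–3.5 s range) and ignores the instruction events and the self-paced start of each mini-block, whose durations are not specified here; all variable names are illustrative.

```python
# Rough run-duration estimate from the design parameters stated in the text.
N_MINIBLOCKS = 7                  # mini-blocks per run
STIMULI_PER_BLOCK = 8             # stimuli per mini-block
REST_PERIODS = 6                  # rest periods separating the mini-blocks
REST_S = 20.0                     # duration of each rest period (s)
SOA_MIN_S, SOA_MAX_S = 2.5, 3.5   # stated range of stimulus onset asynchrony (s)


def run_duration_s(soa_s: float) -> float:
    """Stimulation time plus rest time; instructions are excluded (assumption)."""
    stimulation = N_MINIBLOCKS * STIMULI_PER_BLOCK * soa_s
    rest = REST_PERIODS * REST_S
    return stimulation + rest


stimuli_per_run = N_MINIBLOCKS * STIMULI_PER_BLOCK
mean_soa = (SOA_MIN_S + SOA_MAX_S) / 2          # assumed mean SOA of 3.0 s
estimate_min = run_duration_s(mean_soa) / 60.0
print(stimuli_per_run, round(estimate_min, 1))
```

Under these assumptions the sketch gives 56 stimuli per run and an estimated duration of 4.8 min, which is consistent with the stated 4.5–5 min.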

Before scanning, the experiment was explained and subjects completed a training session outside the scanner, during which they practiced all of the tasks with at least ten practice trials per task. Different practice items were used to explain the three different tasks per modality, to focus subjects' attention on the task and, as far as possible, to guard against potential “spill-over” and recognition effects resulting from using the same stimuli repeatedly across the three runs per modality.

Tasks

Subjects performed three different tasks across the visual and the auditory modality. In the perceptual auditory task, subjects decided whether or not there had been a decrease in vocal pitch towards the end of the word. In the perceptual visual task, subjects had to indicate whether or not font size had decreased towards the end of the word. The phonologic task required a decision on whether the word stimulus had two or three syllables. In the semantic task, subjects decided whether a word represented a natural or man-made item. Subjects were instructed to respond as quickly and as accurately as possible by pressing one of two buttons on a response box with their left middle and index finger, respectively.

Word Stimuli

In analogy to the study by Devlin et al. [2003], the stimulus material was held constant while the task was varied. To enable subjects to make perceptual judgements about inherent surface features of the auditory and visual word stimuli, we applied two specially piloted perceptual auditory and visual manipulations across participants and stimuli in both modalities, as described below. At the same time, we made sure that the nonlinguistic perceptual manipulation of the word features did not affect the processing of semantic and phonologic stimulus characteristics.

In summary, 112 two- and three-syllable German nouns representing concrete natural and man-made items were chosen as stimuli. Word frequency was at least 1 per million (with the exception of 11 items which had a frequency of <1 on a Standard German word frequency count); average frequency was 12 per million tokens. In piloting, about 300 frequent, unambiguous nouns from the CELEX lexical database for German (Centre for Lexical Information, Max Planck Institute for Psycholinguistics, The Netherlands) were selected. No compound nouns, hyperonyms or foreign words were included. Thirty German native speakers (15 females, age 24–47 years, mean age 29.0 years) independently categorized each item as either man-made or natural, rated each item's imageability on a 4-point scale ranging from 1 (concrete) to 4 (abstract), and provided the number of syllables for each item. Only those words which (i) at least 29 out of 30 pilot subjects correctly classified as being either man-made or natural, (ii) received an average imageability rating of <1.6, and (iii) reached at least 90% agreement on the intended syllable count (i.e., agreement among at least 27 of 30 pilot subjects) were included. Since more two-syllable than three-syllable words passed the above validation criteria, we were able to select the two-syllable nouns that most closely matched the three-syllable words in terms of imageability ratings and number of letters. In total, 56 three-syllable nouns and 56 two-syllable nouns were selected.
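The three inclusion criteria can be expressed as a simple filter. The sketch below is illustrative only: the `PilotItem` record, its field names, and the example records are hypothetical and do not reproduce the actual pilot data; only the three cut-offs are taken from the text.

```python
from dataclasses import dataclass

N_RATERS = 30  # pilot raters, as stated in the text


@dataclass
class PilotItem:
    word: str
    category_correct: int      # raters giving the intended man-made/natural category
    mean_imageability: float   # mean rating, 1 (concrete) to 4 (abstract)
    syllable_correct: int      # raters giving the intended syllable count


def passes_validation(item: PilotItem) -> bool:
    """Apply criteria (i) to (iii) from the text to one candidate noun."""
    return (
        item.category_correct >= 29          # (i) at least 29 of 30 raters
        and item.mean_imageability < 1.6     # (ii) mean imageability below 1.6
        and item.syllable_correct >= 27      # (iii) at least 27 of 30 raters
    )


# Hypothetical pilot records, invented purely for illustration.
candidates = [
    PilotItem("item_a", 30, 1.1, 30),
    PilotItem("item_b", 28, 1.2, 30),   # fails criterion (i)
    PilotItem("item_c", 30, 1.9, 29),   # fails criterion (ii)
    PilotItem("item_d", 30, 1.3, 25),   # fails criterion (iii)
]
selected = [c.word for c in candidates if passes_validation(c)]
print(selected)
```

In this toy example only `item_a` satisfies all three criteria.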

Auditory versions of the words were recorded by an experienced female speaker and had an average duration of 0.74 s/0.85 s (two-syllable words in Set A/Set B) and 0.87 s/0.86 s (three-syllable words in Set A/Set B). Half of the auditory stimuli were manipulated using the sound editing program Adobe Audition 2.0 (www.adobe.com/products/audition). The manipulation consisted of an audible yet unobtrusive decline (13 halftones) in vocal pitch towards the end of the word. In analogy to the auditory condition, the font size of the letters was manipulated for half of the visual stimuli such that it changed linearly from an initial 70 pt to a final 50 pt font size (Type Albany AMT) across the length of the word, to result in a noticeable yet unobtrusive change in the visual appearance of the word (Fig. 1C). Both manipulations were piloted before implementation to ensure that they were perceptually noticeable without impeding performance on the linguistic tasks.
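To give a sense of the size of the two manipulations, the following sketch converts the pitch decline into a frequency ratio and interpolates font size across a word. It rests on two assumptions not stated in the text: that "halftones" denotes equal-tempered semitones, and that font size was stepped letter by letter. The function names and the six-letter example are hypothetical.

```python
from typing import List


def pitch_ratio(semitones: float) -> float:
    """Frequency ratio for a shift of `semitones` in twelve-tone equal temperament."""
    return 2.0 ** (semitones / 12.0)


def font_sizes(n_letters: int, start_pt: float = 70.0, end_pt: float = 50.0) -> List[float]:
    """Linearly interpolated font size for each letter, from first to last."""
    if n_letters < 1:
        raise ValueError("a word needs at least one letter")
    if n_letters == 1:
        return [start_pt]
    step = (end_pt - start_pt) / (n_letters - 1)
    return [start_pt + i * step for i in range(n_letters)]


ratio = pitch_ratio(-13)   # fall of 13 semitones (assumed meaning of "halftones")
sizes = font_sizes(6)      # a hypothetical six-letter word
print(round(ratio, 3), sizes)
```

Under the semitone assumption, a fall of 13 semitones corresponds to a final fundamental frequency of roughly 0.47 times the starting value, that is, slightly more than one octave. For a six-letter word the letter sizes would step from 70 pt to 50 pt in 4-pt decrements.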

Stimulus Presentation and Response Collection

Auditory word stimuli were presented via MR-compatible headphones (MR Confon, Magdeburg, Germany). Sound volume was individually adjusted for each subject. Visual stimuli appeared in light gray on a dark screen. Visual stimulus duration was set to the mean duration of the auditory word stimuli, which changed slightly when the auditory and visual stimulus lists were switched. Stimuli were projected centrally via LCD projector onto a screen placed behind the head coil. Subjects viewed the screen via a mirror on top of the head coil (10° × 15° field of view). Presentation of stimuli and task sequence, and collection of motor responses were controlled by the software “Presentation” (Version 11.0, Neurobehavioral Systems, www.neurobehavioralsystems.com). The start of each session was announced by brief auditory or visual instructions. Throughout scanning subjects kept their eyes open.

Magnetic Resonance Imaging

Functional MRI was performed on a 3T Siemens TRIO system (Siemens, Erlangen, Germany). Approximately 200 brain volumes were acquired per fMRI run with 38 contiguous axial slices covering the whole brain (3 mm thickness, no gap) using a gradient echo-planar (EPI) T2*-sensitive sequence (TR 2.52 s, TE 30 ms, flip angle 90°, matrix 64 × 64 pixel). The first three volumes were discarded to allow for T1 equilibration effects. Following the acquisition of the functional images, a high-resolution (1 × 1 × 1 mm voxel size) structural MR image was acquired for each participant using a standard three-dimensional T1-weighted MPRAGE sequence.

Analysis of Behavioral Data

Reaction times (RTs) were computed from the onset of the auditory or visual stimulus. To analyze RTs for correct responses, we performed a three-way repeated measures ANOVA (SPSS software, version 13, Chicago, IL), using the within subjects factors “task” (three levels: semantic vs. phonologic vs. perceptual), “modality” (two levels: auditory vs. visual), and “manipulation” (two levels: manipulated vs. nonmanipulated). The third factor was included to investigate potential influences of the perceptual stimulus manipulation on linguistic processing. The Greenhouse-Geisser correction was used to correct for nonsphericity when appropriate. Conditional on a significant F-value we performed post-hoc paired t-tests to characterize the differences among experimental conditions that resulted in significant main effects or interactions in the ANOVA. Error trials were analyzed separately. As Kolmogorov-Smirnov tests indicated that error rates were not normally distributed, Bonferroni-corrected nonparametric Wilcoxon signed-rank tests were used. Finally, since subjects' age ranged from 27 to 69 years, we tested for a linear relationship between age and RT. For the behavioral data, the level of significance was set to P < 0.05.

Analyses of the fMRI Data

Task related changes in the blood oxygen level dependent (BOLD) signal were analyzed using Statistical Parametric Mapping (SPM5; Wellcome Department of Imaging Neuroscience, www.fil.ion.ucl.ac.uk/spm) implemented in MATLAB 7.1 (The Mathworks Inc., Natick, MA) [Friston et al., 1995; Worsley and Friston, 1995]. Preprocessing used the normalization routine as implemented in SPM5 which combines segmentation and coregistration of the individual T1-weighted image, bias correction, and spatial normalization [Crinion et al., 2007]. First, all functional EPI image slices were corrected for different signal acquisition times by shifting the signal measured in each slice relative to the acquisition of the middle slice. Next, all volumes were spatially realigned to the first volume to correct for motion artefacts. The individual T1-weighted image was then segmented using the standard tissue probability maps provided in SPM5, and coregistered to the mean functional EPI image. The information resulting from a second segmentation of the coregistered T1-image was then used to normalize all of the functional EPI images. All normalized images were then smoothed using an isotropic 10-mm Gaussian kernel to account for intersubject differences.

Statistical analyses of the functional images were performed in two steps. At the first level, the six runs per subject (semantic, phonologic, and perceptual processing in the auditory and visual modality, respectively) were modeled as six separate sessions, each consisting of at least three regressors. One regressor modeled onset and actual duration of the instruction events. The second and third regressors modeled the onsets of the nonmanipulated and manipulated word items within each run, respectively, as individual events within miniblocks. This enabled us to examine potential differential influences of the perceptual manipulation on the semantic, phonologic, and perceptual tasks. Only those trials to which subjects gave the correct response were included. The onsets of erroneously judged word events plus those to which subjects gave no response (i.e., missing responses) were modeled as a separate regressor of no interest. All event onsets in each regressor were convolved with a canonical hemodynamic response function as implemented in SPM5. Voxel-wise regression coefficients for all conditions were estimated using the least squares method within SPM5, and statistical parametric maps of the t statistic (SPM{t}) were generated from each condition. At this step we computed the contrast of each of the six (three auditory, three visual) conditions against rest. As each condition was modeled by three regressors (i.e., first regressor containing instruction events; second regressor containing nonmanipulated word events, third regressor containing manipulated word events), the first level models, computed for each individual subject, resulted in at least 18 (3 regressors for each of the six conditions) and up to 24 separate regressors (when subjects had made at least one error in each of the conditions).
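The regressor counts given above follow directly from the model structure, as the short sketch below shows. The function name and the `runs_with_errors` parameter are illustrative and are not part of SPM5.

```python
N_RUNS = 6  # three tasks x two modalities, each modeled as a separate session
BASE_REGRESSORS = ("instruction", "nonmanipulated", "manipulated")


def n_regressors(runs_with_errors: int) -> int:
    """Total number of first-level regressors for one subject.

    Each run contributes the three base regressors. A run that contains at
    least one erroneous or missing response adds one regressor of no interest.
    """
    if not 0 <= runs_with_errors <= N_RUNS:
        raise ValueError("runs_with_errors must be between 0 and 6")
    return N_RUNS * len(BASE_REGRESSORS) + runs_with_errors


print(n_regressors(0), n_regressors(6))
```

The two extremes reproduce the stated range of 18 regressors (no errors in any run) to 24 regressors (at least one error in every run).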

Only the regressors of the correctly judged word items (i.e., one regressor for manipulated, one for nonmanipulated items) were used to compute the contrast images which contributed to the second level analyses, treating participants as random effect. The 12 contrast images per subject, each computed against rest, were composed of 3 (perceptual, phonological, semantic) × 2 (auditory, visual) × 2 (manipulated vs. nonmanipulated) images. To examine effects of task and perceptual manipulation, the 12 contrasts were entered into a within-subject ANOVA model, using the “flexible factorial design” option in SPM 5 with correction for nonsphericity. Subjects' individual reaction times were averaged across manipulated and nonmanipulated items in each condition and entered as a covariate to model differences in RT between the experimental conditions (e.g., between responses to auditory or visual word stimuli). This ANOVA model was used to contrast the BOLD signal level in the experimental conditions of interest, and to identify common activations across conditions (e.g., common increases in BOLD signal evoked by auditory and visual word stimuli). For the latter, we applied conjunction analyses under the conjunction null hypothesis [Nichols et al., 2005]. The conservative “conjunction null” tests for a logical AND of effects; that is, it tests whether there is an effect at a predetermined level of significance in all contrasts that are entered into the conjunction [Nichols et al., 2005].
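The 3 × 2 × 2 layout of the 12 contrast images per subject can be enumerated as follows. This is only a bookkeeping sketch; the label strings are illustrative and do not correspond to actual SPM5 file or condition names.

```python
from itertools import product

TASKS = ("perceptual", "phonological", "semantic")
MODALITIES = ("auditory", "visual")
MANIPULATION = ("manipulated", "nonmanipulated")

# One contrast image (condition versus rest) per cell of the factorial design.
cells = list(product(TASKS, MODALITIES, MANIPULATION))

for task, modality, manipulation in cells:
    print(f"{task:13s} {modality:9s} {manipulation}")
```

Each of the 12 printed rows corresponds to one contrast image entered into the second-level ANOVA.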

We were interested in identifying brain regions showing modality-specific and modality-independent increases in activity with perceptual word processing as opposed to linguistic word processing. To this end we first computed conjunctions of task-related activation in the auditory and the visual modality for each type of judgement (i.e., semantic, phonologic, or perceptual judgement) relative to resting baseline, regardless of stimulus manipulation. The conjunctions were set up to test for common activation during a given task that occurred consistently, regardless of modality and regardless of whether or not stimuli were manipulated.

Next we set up differential tests, contrasting perceptual with linguistic processing. In each modality, we contrasted perceptual judgements with semantic and phonologic judgements, respectively. After having computed the differential effects of perceptual compared to linguistic processing separately for the two modalities, we entered the resulting four contrast images (i.e., perceptual vs. phonological and perceptual vs. semantic, in both the auditory and visual modality) into a conjunction analysis to identify modality-independent activation due to perceptual relative to linguistic (i.e., semantic and phonologic) processing. This conjunction was computed at a threshold of P < 0.01 (uncorrected).
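A conjunction under the conjunction null amounts to a voxel-wise logical AND: a voxel is reported only if every contributing contrast exceeds the threshold, which is equivalent to thresholding the minimum statistic across contrasts. The sketch below illustrates this logic on toy numbers. The t values, the contrast labels, and the critical value of 2.5 are invented for illustration and do not reflect the actual data or the threshold corresponding to P < 0.01.

```python
from typing import Dict, List


def conjunction_min_stat(t_maps: Dict[str, List[float]], t_crit: float) -> List[bool]:
    """Voxel-wise logical AND across contrasts.

    A voxel passes only if its minimum t value across all contrasts
    exceeds t_crit.
    """
    maps = list(t_maps.values())
    n_voxels = len(maps[0])
    if any(len(m) != n_voxels for m in maps):
        raise ValueError("all maps must cover the same voxels")
    return [min(m[v] for m in maps) > t_crit for v in range(n_voxels)]


# Toy t values for four voxels in the four contrasts (invented for illustration).
toy = {
    "aud_perc_vs_sem":  [3.1, 0.4, 2.9, 4.0],
    "aud_perc_vs_phon": [2.8, 3.5, 2.7, 3.9],
    "vis_perc_vs_sem":  [3.3, 3.0, 1.1, 4.2],
    "vis_perc_vs_phon": [2.6, 2.9, 3.0, 3.8],
}
print(conjunction_min_stat(toy, t_crit=2.5))
```

In this toy example only the first and last voxel pass, because the second and third voxel each fall below the critical value in one of the four contrasts.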

In an additional set of interaction analyses, we explored the impact of perceptual stimulus manipulation (referring to the manipulated or nonmanipulated surface features of the stimuli) on functional activation during the different tasks (i.e., semantic, phonologic, or perceptual task) because we expected a selective effect of stimulus manipulation on perceptual nonlinguistic judgements but not on the linguistic judgments.

To ensure that the observed activation differences were not driven by differences in task-related functional deactivation in the respective comparison condition, we inclusively masked all differential contrasts and interactions with the contrast of the respective condition of interest compared to rest [cf. Cohen et al., 2004]. The threshold for these inclusive masks was set at P < 0.05 (uncorrected). Apart from the conjunction examining modality-independent activation, all contrasts were computed at an uncorrected threshold of P < 0.001, applying an extent threshold of at least 25 contiguous voxels. Family-wise error correction for multiple comparisons was performed at the cluster level with P < 0.05 as significance criterion. The SPM anatomy toolbox (Version 1.3b) was used for anatomical localization of regional activation peaks, which are reported as peak Z-scores and the corresponding stereotactic coordinates in standardized MNI space [Eickhoff et al., 2005].

RESULTS

Behavioral Data

Errors

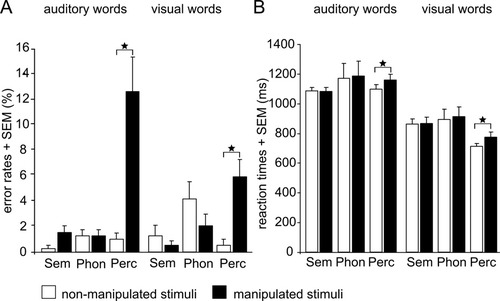

The group data of each experimental condition are listed in Supporting Information Table 1. Overall, subjects were highly accurate in performing the tasks (mean accuracy 97%, range: 95–100%). Most of the errors were made for manipulated stimuli in the vocal pitch decision with an error rate of 12.5%, followed by font size decision on manipulated stimuli with an error rate of 5.9% and the phonologic decision on nonmanipulated visual stimuli with an error rate of 4.0% (Fig. 2A). Error rates for the other tasks and percentage of missing responses were negligible (<2.5%).

Average error rates (A) and reaction times for correct responses (B) for 14 subjects. Error bars represent one standard error of the mean (SEM). Both panels show significant influences of stimulus manipulation within the perceptual condition. For other significant effects, please refer to the text. *P < 0.05; two-tailed; ms, milliseconds; Sem, semantic; Phon, phonologic; Perc, perceptual task.

Subjects made significantly more errors in response to manipulated compared to nonmanipulated words only in the auditory (Z = 3.20; P = 0.001) and visual perceptual task (Z = 2.87; P = 0.004). By contrast, the manipulation of the visual and auditory stimuli had no effect on error rate in either linguistic task (P > 0.23), indicating that the perceptual manipulations did not affect accuracy of phonologic and semantic decisions. Wilcoxon tests further revealed significantly increased error rates for auditorily manipulated stimuli in the perceptual task compared to both the semantic (Z = 3.07; P = 0.002) and the phonologic task (Z = 3.20; P = 0.001). A similar effect was present in the visual modality with increased errors for visually manipulated stimuli during perceptual relative to semantic (Z = 2.85; P = 0.002) and phonologic decisions (Z = 2.27; P = 0.023), but the latter did not survive a Bonferroni correction for multiple comparisons. Finally, we found a trend towards increased error rates for nonmanipulated stimuli in the visual phonologic compared to the perceptual task (Z = 2.59; P = 0.008) which did not survive Bonferroni correction.
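The number of comparisons underlying the Bonferroni correction is not stated. As a rough plausibility check, the sketch below asks which family sizes m would be compatible with the pattern reported above, assuming a plain Bonferroni threshold of 0.05/m applied to a single family of tests and taking the rounded P values at face value. Both assumptions are ours, and the result should not be read as the correction actually applied.

```python
ALPHA = 0.05

# Reported P values from the paragraph above, grouped by whether the text
# treats them as significant after correction.
survived = [0.001, 0.004, 0.002, 0.001, 0.002]
not_survived = [0.023, 0.008]


def consistent_family_sizes(max_m: int = 50) -> list:
    """Family sizes m for which the threshold alpha/m separates the two groups."""
    sizes = []
    for m in range(1, max_m + 1):
        threshold = ALPHA / m
        keeps_significant = all(p <= threshold for p in survived)
        rejects_others = all(p > threshold for p in not_survived)
        if keeps_significant and rejects_others:
            sizes.append(m)
    return sizes


print(consistent_family_sizes())
```

Under these assumptions, any family size between 7 and 12 comparisons would reproduce the reported pattern of surviving and non-surviving effects.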

Reaction times

Mean reaction times across tasks and stimulus type ranged from 701 ms to 1169 ms (overall mean 968 ms; SD 174.83 ms; please refer to Supporting Information Table 1 and Fig. 2B for details). Repeated measures ANOVA showed a main effect of modality (F(1,13) = 195.76, P < 0.0001) indicating overall shorter reaction times for visual than auditory stimulus presentation. Reaction times were longer for decisions made on manipulated relative to nonmanipulated stimuli (main effect of stimulus manipulation: F(1,13) = 11.24, P = 0.005). This effect was mainly driven by an increase in RT in the perceptual condition in which the stimulus manipulation had to be judged, as reflected by a task-by-manipulation interaction pooled across modalities (F(2,26) = 5.77, P = 0.008). Indeed, post-hoc pair-wise comparisons revealed increased reaction times for the manipulated vs. nonmanipulated stimuli only in the perceptual task (t(13) = 3.21; P = 0.003), but neither in the phonologic task (P = 0.12) nor in the semantic task (P = 0.89; Fig. 2B).

Finally, we found a task-by-modality interaction (F(2,26) = 5.34, P = 0.011; Fig. 2B) pooled across manipulated and nonmanipulated stimuli. This interaction was caused by task-related differences in reaction times within the visual modality. Participants responded significantly faster in the font size task than in either the semantic (t(13) = 4.67, P = 0.0001) or the phonologic task (t(13) = 2.99, P = 0.01). The difference between the visual semantic and the visual phonologic task was not significant, however (P = 0.42). No significant differences in reaction times were found among the three tasks in the auditory modality (all P > 0.10). Correlational analysis revealed no systematic influence of age on reaction times [Pearson r = −0.25, P = 0.38 (two-tailed)].
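The reported correlation between age and reaction time can be related to its P value through the usual t transformation of a Pearson coefficient. The sketch below assumes that the correlation was computed across the 14 subjects (df = 12), which is not stated explicitly, and integrates the t density numerically so that only the Python standard library is needed. All function names are illustrative.

```python
import math


def t_from_r(r: float, n: int) -> float:
    """t statistic for testing a Pearson correlation against zero (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1.0 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-tailed P via Simpson integration of the density over [0, |t|]."""
    upper = abs(t)
    h = upper / steps                       # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3.0          # P(0 < T < |t|)
    return 1.0 - 2.0 * central_half


t_val = t_from_r(-0.25, 14)                 # n = 14 is an assumption
p_val = two_tailed_p(t_val, df=12)
print(round(t_val, 2), round(p_val, 2))
```

With these assumptions the sketch yields a t value of about −0.89 and a two-tailed P close to the reported 0.38; a small difference is expected because r is given to two decimals only.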

Functional Brain Activation

Modality-independent main effects of task

For all three tasks, conjunction analyses of the respective main effects across modality showed modality-independent activation in motor core regions related to visuomotor mapping and the generation of button presses, including the cerebellum, primary motor cortex, and anterior cingulate cortex. For semantic decisions, additional activation was present in left middle temporal cortex and fusiform gyri bilaterally, as well as in left inferior parietal cortex. Further, the inferior and posterior middle frontal gyri showed widespread activation in the left as well as the right hemisphere, which extended into anterior aspects of the inferior frontal gyri (Supporting Information Fig. 1A). For phonologic decisions, activation in the inferior and posterior middle frontal gyri bilaterally was located more posteriorly, whereas there was widespread activation in inferior parietal cortices bilaterally (Supporting Information Fig. 1B). Perceptual decisions led to activation changes in the above-mentioned regions. In addition, making perceptual judgements was associated with modality-independent activation in right inferior frontal areas (Supporting Information Fig. 1C).

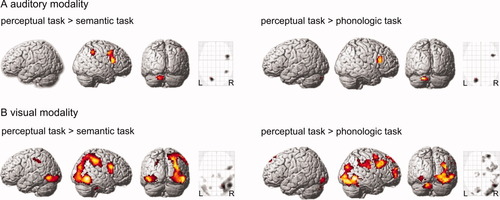

Differential effects of task within and across modalities

Our primary interest was to identify brain regions in which perceptual judgements were associated with stronger activation than linguistic (i.e., semantic and phonologic) judgements on the same stimuli. Thus, we first contrasted nonlinguistic perceptual decisions with the two types of linguistic decisions individually within modality. In the auditory domain, perceptual processing relative to semantic processing resulted in activation in the left cerebellum, and in a larger cluster of activation centering in pars triangularis and extending into pars opercularis of the right IFG. Additionally, a smaller cluster of activation was located in the right inferior parietal lobe. Perceptual relative to phonologic processing evoked comparable activation in the left cerebellum and in right IFG (Supporting Information Table 2 and Fig. 3A). In the visual domain, perceptual relative to semantic processing resulted in large clusters of increased activation predominantly in right occipital and inferior parietal cortex as well as in pars opercularis of the right IFG. Perceptual relative to phonologic decisions resulted in a similar activation pattern, albeit with less pronounced increases in right inferior parietal cortex (Supporting Information Table 2 and Fig. 3B).

A: Contrasts of the auditory perceptual with the auditory semantic and phonologic conditions, respectively. B: Contrasts of the visual perceptual with the visual semantic and phonologic conditions, respectively. All contrasts computed at P < 0.001 uncorrected, using an extent threshold of 25 contiguous voxels (whole brain analysis) and a family-wise error correction at P < 0.05 at the cluster level, and inclusively masked by main effects of auditory (or visual, respectively) perceptual processing at P < 0.05 (uncorrected). [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

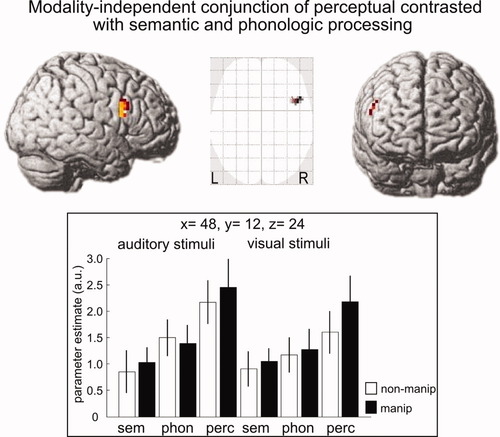

We then computed a conservative conjunction analysis to examine modality-independent increases in activity with perceptual word processing compared to linguistic word processing. The conjunction was based on the four individual contrasts of the perceptual relative to the semantic and phonologic tasks for auditory and visual stimuli, as described above and depicted in Figure 3. This conjunction revealed activation for perceptual relative to linguistic processing in a single right-hemispheric cluster of 38 contiguous activated voxels. This cluster was located in pars opercularis of the right IFG (see Fig. 4; peak Z-score 4.51, peak stereotactic x, y, z-coordinates [48, 12, 24]). The parameter estimates of BOLD signal changes in this area indicate that perceptual processing resulted in comparable increases in activation during visual and auditory processing (Fig. 4).
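The logic of a conjunction over four contrasts can be illustrated with a minimal sketch. It assumes a minimum-statistic approach, in which a voxel counts as active only if it exceeds the threshold in every contributing contrast; the text does not state which conjunction procedure was used. The function name, the toy Z-values, and the threshold of 3.09 (roughly one-tailed P = 0.001) are hypothetical and chosen for illustration only.

```python
import numpy as np

def conjunction_min_stat(stat_maps, threshold):
    """Minimum-statistic conjunction (an assumed procedure, for illustration).

    A voxel survives only if its statistic exceeds `threshold`
    in every one of the supplied maps.
    """
    stacked = np.stack(stat_maps, axis=0)   # shape: (n_contrasts, n_voxels)
    min_map = stacked.min(axis=0)           # weakest evidence per voxel
    return min_map > threshold, min_map

# Hypothetical Z-values for five voxels in each of the four contrasts
aud_perc_vs_sem  = np.array([4.0, 3.5, 1.0, 5.0, 0.2])
aud_perc_vs_phon = np.array([3.8, 2.0, 4.0, 4.6, 0.1])
vis_perc_vs_sem  = np.array([4.2, 3.9, 3.5, 4.9, 3.3])
vis_perc_vs_phon = np.array([3.6, 4.1, 3.7, 4.5, 3.4])

mask, min_map = conjunction_min_stat(
    [aud_perc_vs_sem, aud_perc_vs_phon, vis_perc_vs_sem, vis_perc_vs_phon],
    threshold=3.09,  # illustrative threshold, roughly one-tailed P = 0.001
)
print(mask)     # voxels active in all four contrasts
print(min_map)  # per-voxel minimum across contrasts
```

In this toy example only the first and fourth voxels survive, because every other voxel falls below the threshold in at least one contrast. This is why such a conjunction is conservative: a strong effect in one modality cannot compensate for a weak effect in the other.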

Conjunction of the perceptual compared to the phonologic and the semantic tasks, in the auditory and the visual modality, respectively (i.e., conjunction of the four contrasts depicted in Fig. 3). Contrasts inclusively masked by main effects of auditory and visual perceptual processing at P < 0.05 (uncorrected). Statistical threshold P < 0.01 (uncorrected, whole brain analysis). Parameter estimates derived from peak activated voxel in the right inferior frontal gyrus, with the coordinates [48, 12, 24]. Original contributing contrasts are shown in Figure 3. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

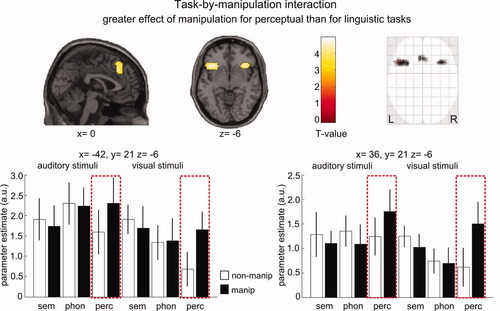

Finally, we explored whether manipulating the stimuli had an effect on the level of activation during the semantic and phonologic tasks, in the auditory and the visual modality. This was done by directly contrasting manipulated with nonmanipulated stimuli in the respective conditions. These contrasts did not reveal any increase in functional activation at the given statistical threshold. However, when semantic and phonologic processing were pooled into a single “linguistic” condition, we observed increases of functional activation in the left and right superior middle temporal gyri for auditory stimuli only (see Supporting Information Fig. 2). To quantify these effects, we computed an interaction of the factor “manipulation” and the linguistic and nonlinguistic tasks. This analysis, which tested for a selective effect of manipulation in the perceptual (relative to the semantic and phonologic) task, revealed activation of pars orbitalis and adjacent anterior insula of the left IFG (cluster extent 161 voxels, peak Z-score 4.72, stereotactic x, y, z-peak coordinates [−42, 21, −6]), of the right anterior insula (cluster extent 79 voxels, peak Z-score 4.53, stereotactic x, y, z-coordinates [36, 21, −6]) and of anterior cingulate cortex (cluster extent 117 voxels, peak Z-score 4.29, stereotactic x, y, z-coordinates [−3, 33, 39]; Fig. 5). In these regions, brain activity showed a selective increase for manipulated items in the perceptual task, during which subjects made explicit judgements regarding nonlinguistic perceptual changes. This selective increase in activation for manipulated words in the perceptual task was modality independent (Fig. 5). No brain region showed a selective increase in neuronal response to perceptual manipulation in the linguistic relative to the perceptual tasks.
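A task-by-manipulation interaction of this kind can be expressed as a set of contrast weights over the twelve conditions (two modalities, three tasks, manipulated versus nonmanipulated). The sketch below is illustrative only: the condition ordering, the weights, and the toy parameter estimates are assumptions, not the contrast actually specified in the analysis.

```python
import itertools
import numpy as np

modalities = ["auditory", "visual"]
tasks = ["perceptual", "semantic", "phonologic"]
manipulations = ["manipulated", "nonmanipulated"]

# Assumed weights: perceptual task versus the mean of the two linguistic tasks,
# crossed with manipulated versus nonmanipulated stimuli, pooled over modality.
task_w = {"perceptual": 1.0, "semantic": -0.5, "phonologic": -0.5}
manip_w = {"manipulated": 1.0, "nonmanipulated": -1.0}

conditions = list(itertools.product(modalities, tasks, manipulations))
weights = np.array([task_w[t] * manip_w[m] for _, t, m in conditions])

def interaction_effect(betas):
    """Weighted sum of condition estimates; positive values mean a larger
    manipulation effect in the perceptual than in the linguistic tasks."""
    return float(weights @ np.asarray(betas, dtype=float))

# Hypothetical parameter estimates (arbitrary units): manipulation raises the
# response only in the perceptual task, equally in both modalities.
betas = [2.0 if (t == "perceptual" and m == "manipulated") else 1.0
         for _, t, m in conditions]

print(dict(zip(conditions, weights)))
print(interaction_effect(betas))
```

Because the weights sum to zero, a manipulation effect that is equally large in all three tasks yields an interaction estimate of zero; only a manipulation effect that is selective to the perceptual task produces a positive value.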

Task-by-manipulation interaction, testing for differential effects of the perceptual manipulation depending on the given task. Statistical threshold P < 0.001 (uncorrected, whole brain analysis) using an extent threshold of 25 contiguous voxels and a family-wise error correction at P < 0.05 at the cluster level. Interaction inclusively masked by main effects of manipulated auditory and visual perceptual processing at P < 0.05 (uncorrected). Parameter estimates shown for peak activated voxels in the left and right anterior insula. Red dotted lines indicate greater effect for manipulated compared to nonmanipulated stimuli in the perceptual conditions. a. u., arbitrary units. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

DISCUSSION

The present study was designed to investigate right-hemispheric involvement in the processing of stimulus-inherent perceptual word features. Using an identical set of auditory and visual word stimuli, we compared task-related BOLD signal changes while healthy subjects made perceptual and linguistic decisions. Perceptual decisions were nonlinguistic as participants had to detect a change in font size (visual stimuli) or fundamental frequency contour (auditory stimuli) which occurred in half of the trials.

The main novel finding of this study is that the right hemisphere is involved in the perceptual processing of nonlinguistic word features. When subjects focused their attention on perceptual changes in font size in the visual stimuli, there were widespread increases in occipital, inferior temporal, inferior parietal and inferior frontal areas in the right hemisphere, compared to both linguistic tasks (i.e., semantic and phonologic judgements). Likewise, for the auditory stimuli we observed increases in right inferior frontal and parietal areas when subjects focused their attention on auditory perceptual changes in frequency contour, relative to linguistic features such as semantics or phonology. Accordingly, the conjunction analysis which integrated the activation patterns induced by auditory or visual word stimuli confirmed that judgements on perceptual nonlinguistic features consistently activated the pars opercularis of the right IFG. Pars opercularis is commonly considered to be one of the two areas making up Broca's area [i.e., BA 44, pars opercularis, and BA 45, pars triangularis; for recent contributions see Amunts et al., 2010 or Anwander et al., 2007]. In our study, the maximal “perceptual” activation [x, y, z coordinates in MNI space: 48, 12, 24] clearly falls within the boundaries of pars opercularis as defined by two widely used anatomical reference tools, the MATLAB-based “Anatomy Toolbox” [Eickhoff et al., 2005] and the WFU PickAtlas Tool (version 2.4; Wake Forest University School of Medicine). The location of our perceptual activation peak in pars opercularis of the right inferior frontal gyrus also corresponds well to the definition of Broca's right homologue as described by Amunts et al. [1999].

Activation in the pars opercularis of right IFG was generally comparable in the auditory and visual modality, and across manipulated and nonmanipulated word items. Therefore, we argue that activation of this right-hemispheric inferior frontal area reflects a general “perceptual mode” of processing surface features of word stimuli. This assumption is supported by the results of an additional analysis which examined differential effects of the manipulation on the perceptual and linguistic tasks. As expected, perceptual manipulation of word stimuli induced a selective increase in signal change only during perceptual processing, when the task required subjects to explicitly direct their attention to perceptual surface features of the stimuli. The resulting bilateral pattern of activation in insular and anterior cingulate cortex (ACC) was spatially distinct from the right inferior frontal areas showing a general association with perceptual processing, as described above. These activations in the anterior insula and the ACC can be attributed to increased task difficulty because perceptual decisions in trials with manipulated stimuli were consistently associated with higher error rates and longer reaction times, compared to perceptual decisions on nonmanipulated stimuli.

In the semantic and phonologic conditions, however, error rates and reaction times did not differ between manipulated and nonmanipulated items, indicating that perceptual manipulations of the word stimuli did not adversely interfere with linguistic processing. It may be assumed that subjects became aware of the perceptual manipulation even during the semantic and phonologic tasks, as they rehearsed all three tasks (including the perceptual task) before starting the experiment. Yet error rates on the linguistic tasks were low, and response times (particularly in the visual condition) were fast, suggesting that subjects were able to effectively focus on the relevant stimulus features of the given task. In the linguistic conditions, somewhat elevated error rates were observed only in the phonological judgement task for visually presented stimuli. This affected nonmanipulated more than manipulated stimuli, however. It may be the case that some subjects initially based their syllable judgment in the visual condition simply on word length—a strategy bound to lead to errors because (in anticipation of this strategy) we intentionally included some three-syllable words with relatively few letters.

It is notable that we found right inferior frontal activation across all tasks and both modalities in an overall conjunction of the main effects of semantic, phonologic and perceptual processing (as shown in Supporting Information Fig. 1D). This finding suggests that even when not explicitly asked to do so, subjects may have automatically processed the nonlinguistic perceptual surface features inherent in the word stimuli. This interpretation is further substantiated by the finding that the peak activation increases were found in a right inferior frontal region, namely pars opercularis of the right IFG, when subjects explicitly shifted their attention from linguistic toward perceptual stimulus characteristics.

Since participants made more errors in the perceptual task, especially when the word stimuli were manipulated, one could argue that the right-hemispheric increases in activation might reflect activity related to monitoring or error-related processing. We think that this possibility is unlikely for several reasons. First, only correct responses were included in the functional analyses. Second, there was no difference in reaction time between the perceptual and the linguistic conditions, at least for words presented auditorily (for visually presented words, reaction times were even faster in the perceptual than the linguistic conditions). Third, the increase in right-hemispheric activation during perceptual decisions was also present in trials using nonmanipulated word stimuli (see Fig. 4). In these trials, error rates were comparable to the linguistic condition. Therefore, we infer that the right IFG becomes increasingly engaged in word processing the stronger the attentional focus shifts towards perceptual nonlinguistic stimulus features.

Our finding is in accordance with previous reports of right inferior frontal involvement in vocal pitch processing. According to Zatorre and colleagues [1992], the early acoustic analysis of speech stimuli takes place in the temporal lobes. However, any additional task-related requirement (such as attending to and making a judgement about changes in vocal pitch) may involve neural systems that are different from those involved in auditory perceptual analysis per se. Right prefrontal cortex may be part of a distributed network involved in the maintenance of pitch information in auditory working memory [Zatorre et al., 1992]. This interpretation fits well with the demands of our experimental auditory perceptual task, and matches later reports of pitch and auditory working memory [e.g., Zatorre, 1994; Wilson, 2009]. Consistent with the proposal that the right hemisphere is involved in mediating pitch information in auditory working memory, a right-hemispheric contribution has been suggested to emerge when auditory stimuli are recognizable as words, and when they are spoken with affective prosody [Grimshaw et al., 2003; see also Pihan, 2006, for a review]. A role of the right hemisphere in processing nonlinguistic prosodic features is underlined by neuropsychological findings as well. For example, persons with right hemisphere damage have difficulty detecting mismatches between emotional prosody and the semantic content of sentences [e.g., Lalande et al., 1992].

The present study further extends previous work by showing that right inferior frontal cortex is sensitive to stimulus aspects beyond the auditory modality – namely, to changes in the visual surface structure of written stimuli. While there has been extensive research examining the processing of written word or pseudoword stimuli under conditions in which visual processing is artificially impeded (as when words are masked or degraded, presentation rates are shortened, or words are presented in unfamiliar formats such as vertical letter alignment [for reviews, see, e.g., Massaro and Cohen, 1994; Kouider and Dehaene, 2007; Pelli et al., 2007]), there are few studies examining the processing of surface features of written words under relatively natural processing conditions [e.g., Bock et al., 1993; Qiao et al., 2010]. Recent fMRI findings show that compared to printed words, easily readable handwritten words cause additional activity in ventral occipitotemporal cortex, particularly in the right hemisphere, while difficult handwriting also mobilizes an attentional parietofrontal network bilaterally [Qiao et al., 2010]. Our finding of increased right inferior frontal engagement in the perceptual processing of visual surface features is in agreement with this and other reports proposing a distinct involvement of the right hemisphere in processing perceptual features of written stimuli in a more holistic way, rather than in processing specific, parts-based orthographic characteristics [e.g., Marsolek et al., 2006].

In their review, Corbetta and Shulman [2002] propose segregated networks in the brain for visual attention: one system in superior frontal and intraparietal cortex governing top-down selection for specific stimuli; the other, localized in inferior frontal and temporoparietal areas strongly lateralized to the right hemisphere, a stimulus-driven system which may be involved in the reorientation of attention towards unattended or low-frequency events [Corbetta and Shulman, 2002]. It may have been the latter system which in the present study was involved in detecting the stimulus-inherent changes in font size – and, perhaps, even in detecting the changes in fundamental frequency contour.

Although the present study only involved healthy individuals, our findings are of potential relevance to the interpretation of language-related activation in the right-hemispheric Broca homologue in patients who recover from aphasia after left-hemispheric stroke. Poststroke activation in right inferior frontal cortex has previously been linked to compensatory or even maladaptive processing within the language system [Saur et al., 2006; Naeser et al., 2005; for a review, see Thompson and den Ouden, 2008]. The results of our study offer a third complementary explanation for right hemispheric activation during language processing, namely increased perceptual processing of nonlinguistic features of language stimuli. This interpretation is in line with a recent study by van Oers et al. [2010] suggesting that right-hemispheric activation after left-hemispheric stroke may reflect an up-regulation of nonlinguistic cognitive processing. An increased focus on nonlinguistic features might be particularly relevant when subjects listen to or read stimuli without any specific (or just basal) response requirements, leaving subjects the possibility to shift their attention towards processing the surface features of stimuli. This may be likely especially when deeper linguistic processing is hampered due to a language impairment.

Acknowledgements

This work was supported by the Bundesministerium für Bildung und Forschung (BMBF Grant no. 01GW0663). H.S. was supported by a structural grant to NeuroImageNord (BMBF Grant no. 01GO 0511) and a grant of excellence by the LundbeckFonden on the Control of Action “ContAct” (Grant no. R59 A5399).