Adaptive Responses to Adversity Drive Innovation in Human Evolutionary History

ABSTRACT

Thinking is costly. Nonetheless, humans develop novel solutions to problems and share that knowledge prosocially. We propose that adversity, not prosperity, created a dependence on innovation in ancestors who were forced through fitness valleys to develop new behaviors, which in turn shaped our life history characteristics and set a new evolutionary trajectory. Driven by competitive exclusion into novel habitats, and unable to reduce the costs of finding appropriate food sources once there, our Pliocene ancestors adopted a diet different from that of our forest-dwelling great ape cousins. In a reimagining of classic foraging models, we outline how those individuals, pushed into an ecotone with lower fitness, climbed out of the fitness valley by shifting to a diet dependent on extractive foraging. By reducing handling costs through gregarious foraging and emergent technology, our ancestors would have been able to find new optima on the fitness landscape, decreasing mortality by reducing risk and increasing returns, which led to extended life cycles and social reliance.

1 Introduction

People find mental effort inherently aversive [1]. Innovation takes time and energy, often with delayed returns on investment; so why do it? Here we explore the motivations for innovation in our lineage. We lay out a general model to explain the fitness disadvantages that may have pushed our ancestors away from an ape-like life history pattern and behavior and toward one marked by a high degree of sociality and a reliance on extrasomatic adaptations (technology). If the unique human life history pattern depends on a dietary shift to calorie-dense, skill-intensive resource packages [2], we must ask what led to this shift and how new cognitive, behavioral, and social challenges altered the evolutionary trajectory of our lineage. At the heart of the model lies the basic assumption of many evolutionary models: fitness matters, and all else flows from serving it.

We use optimality models to examine our assumptions about the fitness landscape and evolutionary processes at the point where our lineage diverged from that of chimpanzees. Near the time of divergence, beginning in the late Miocene and continuing into the Pliocene, C4 grasses began to spread across eastern Africa, likely resulting in the decline of continuous forests [3, 4]. We assume that, as a result, competitive exclusion intensified relative to earlier periods. That intensified competitive exclusion led to dietary shifts among individuals living in patchier, more discontinuous ecotones. With greater travel costs between forest patches, individuals would have had to reduce handling costs to meet their energetic requirements. Faced initially with lower fitness, individuals who could innovate to access new food sources were able to pass through a fitness valley and arrive at a new local optimum characterized by a dependence on innovation and extractive foraging.

As the archeological and paleontological records preferentially store memories of the deep past in stone and bone, so too have our models and narratives privileged some behaviors over others. As Le Guin so eloquently summarizes, “It is hard to tell a really gripping tale of how I wrested a wild-oat seed from its husk, and then another, and then another, and then another…No, it does not compare, it cannot compete with how I thrust my spear deep into the titanic hairy flank while Oob, impaled on one huge sweeping tusk, writhed screaming, and blood spouted everywhere in crimson torrents, and Boob was crushed to jelly when the mammoth fell on him” [5, p. 166]. Yes, meat matters, and yes, women hunt, but in the models of decision-making used to explain the origins of our lineage, the emphasis has fallen mostly on men's encounter-contingent hunting of medium-to-large game (for alternatives see: [6-8]).

What has been vastly under-examined in foraging models are the handling costs not just of post-encounter acquisition of meat, seeds, or tubers, but of preparing those foods for consumption, including acquiring the tools, strength, size, experience, knowledge, and/or skill needed to do so. Economic man had the pen in his hand when he considered who provided the ingredients of his dinner, but not who cooked it, let alone who did the dishes [9]. In this paper, we discuss how handling costs (the time, energy, and knowledge needed to extract nutrients from a food item) must be considered to understand the division between our lineage and that of other apes. We use a model grounded in optimality logic to show how adaptation to extracted foods with higher handling costs shaped our heritage and may have been driven by the individuals closest to failure. In this context, the advantages of synergistic, gregarious foraging would support investment in technology and reduce mortality for adults and juveniles, creating the selective forces that led to drastic changes in our evolutionary history.

2 Behavioral Ecology and Optimality Models With Special Attention to Handling Costs

We rely on theoretical models developed by economists and biologists to understand decision-making and the behavioral variation that results from it [10]. These scholars, adopting a Darwinian perspective, recognize that our suite of behavioral and cognitive predispositions has been inherited from ancestors who survived and reproduced successfully. In its broadest sense, then, optimal foraging theory seeks to answer the basic question of how best to make a living in a given environment. Implicit within it is the assumption that observed behavior is the outcome of selection pressures driving foragers to respond to environmental conditions in the way that yields the most benefit.

The most widely used of these models, the “encounter-contingent prey choice” or “diet-breadth” model [11], aims to identify which prey items a forager should include in the diet if the goal is to maximize the net rate of energy intake given the available resources and other external constraints. Foraging decisions are distilled into simple yes/no, pursue/forgo choices that depend on the profitability of the item at hand versus the expected net rate of return if she passed it up and continued searching for something else. Post-encounter profitability is calculated by dividing an item's energetic content (kcal) by the time spent handling it (i.e., pursuit, harvest, or capture plus processing for consumption or storage, corrected for failed pursuits). A forager should pursue a resource on encounter if its post-encounter profitability is greater than the anticipated net rate of return per unit foraging time. That net rate includes the costs of searching for foods as well as the costs of handling them.
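The on-encounter rule can be sketched in a few lines of Python. This is a minimal illustration, assuming a single item with known energy content e (kcal) and handling time h (hours, already corrected for failed pursuits); the `Item` class, `should_pursue`, and the tuber figures are hypothetical, not data from the studies cited here.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    energy_kcal: float  # e: energy gained if the item is pursued and handled
    handling_hr: float  # h: pursuit/harvest plus processing time, corrected for failures

    @property
    def profitability(self) -> float:
        """Post-encounter return rate e/h in kcal per hour of handling."""
        return self.energy_kcal / self.handling_hr


def should_pursue(item: Item, expected_net_rate: float) -> bool:
    """Pursue on encounter only if e/h beats the rate expected from continued searching."""
    return item.profitability > expected_net_rate


# Hypothetical tuber: 1200 kcal for 2 hours of digging and processing (600 kcal/hr).
tuber = Item("tuber", 1200.0, 2.0)
print(should_pursue(tuber, expected_net_rate=400.0))  # True
```

Note that the expected net rate is treated here as a given input; in the full model it is itself determined by which items make up the diet.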

Of course, not all prey are encountered individually. Many resources are clumped, grouped, or patchily distributed, and the prey choice model can be adapted to such resources as well. In the “patch choice” model [12], the decision shifts to whether one should enter a patch and, if so, how long to stay. Here the patches themselves are the unit of exploitation; they are either foraged within or bypassed based on the average net return across all available patches. Patch profitabilities are linked to time spent handling within the patch, often analyzed with the marginal value theorem [13]. The questions for the forager are which patches to exploit and how long to stay in each before moving on to the next. When travel time between patches is known, it affects how long a forager should stay amid diminishing returns. In both the prey and patch models, tradeoffs are made between time spent looking for resources (searching for individual items or traveling between patches) and time spent handling those resources once encountered. A forager can increase net returns either by improving encounter rates with higher-value prey items and patches or by reducing handling costs.
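The marginal value theorem's prediction about travel time can be made concrete with a short numerical sketch. We assume, purely for illustration, a diminishing-returns gain curve g(t) = G(1 - exp(-rt)); the optimal residence time t* is where the marginal gain g'(t*) equals the average rate g(t*)/(tau + t*), with tau the travel time between patches. The parameter values and function names are hypothetical.

```python
import math


def gain(t, G=1000.0, r=0.5):
    """Hypothetical diminishing-returns gain curve: kcal extracted after t hours in a patch."""
    return G * (1.0 - math.exp(-r * t))


def gain_rate(t, G=1000.0, r=0.5):
    """Derivative g'(t): the marginal (instantaneous) return rate inside the patch."""
    return G * r * math.exp(-r * t)


def optimal_residence(travel, G=1000.0, r=0.5, tol=1e-9):
    """Solve g'(t) * (travel + t) = g(t) by bisection (the MVT optimum).

    f(t) below is positive at t = 0 and strictly decreasing because g is concave,
    so it has a single root.
    """
    f = lambda t: gain_rate(t, G, r) * (travel + t) - gain(t, G, r)
    lo, hi = 0.0, 1.0
    while f(hi) > 0:  # widen the bracket until the root lies inside [lo, hi]
        hi *= 2.0
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


for travel in (0.5, 2.0, 6.0):
    print(travel, round(optimal_residence(travel), 2))
```

Longer travel between patches yields longer optimal stays, which is the qualitative prediction described above.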

Reducing search time can increase net returns directly by lowering the cost of finding prey. One way to reduce search costs is to alter the distribution of foods by creating environments that attract or support them. For example, a salt lick may draw ungulates to a desired location, reducing the time a hunter spends tracking them. Alternatively, a farmer may plant crops close to home to reduce the time spent finding those foods in the wild. One can also move closer to the food, and this is indeed what many foraging groups do on a seasonal basis (e.g., [14]). Reducing the time spent handling a resource post-encounter, by contrast, often requires the invention or acquisition of processing aids such as digging tools, anvils for cracking nuts, bags for easier collection, or sharp-edged objects for cutting.

When anthropologists discuss human diet selection through the lens of optimality models, they often describe diets as either narrow or broad. To classify a diet, they list the available resources, calculate the profitability (post-encounter return rate) of each item, and rank the items by those returns. Post-encounter return rates do not consider search costs but include only the time needed to procure and process the resource (processing costs such as cooking time, firewood gathering, and transport to camp are rarely included [but see: [15, 16]]). Search costs are generally assumed to be averaged across all food sources. A narrow diet implies that food items with high post-encounter returns are readily available, so a forager can focus on a small set of prey items. A broad diet includes all the highest-ranked items but also many with lower post-encounter returns; as higher-ranked resources become harder to find, lower-ranked items must be added. Broader diets imply lower net returns, partly as a function of higher search and handling costs.
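Ranking items and broadening the diet can be sketched as follows. This minimal Python example assumes Holling's disc equation for the overall rate, R = sum(lambda_i * e_i) / (1 + sum(lambda_i * h_i)), where lambda is the encounter rate per hour of search; items are added in rank order of e/h until the next item's profitability no longer exceeds the rate already achieved without it. The resources and numbers are hypothetical; lowering the encounter rate of the top-ranked item mimics it becoming harder to find.

```python
from typing import List, Tuple

# (name, encounter rate per hour of search, energy in kcal, handling time in hours)
Resource = Tuple[str, float, float, float]


def optimal_diet(resources: List[Resource]) -> Tuple[List[str], float]:
    """Classic prey-choice algorithm: add items by rank of e/h while they beat the current rate."""
    ranked = sorted(resources, key=lambda r: r[2] / r[3], reverse=True)
    diet: List[str] = []
    energy_per_search_hr = 0.0  # numerator: sum of lambda_i * e_i
    time_per_search_hr = 1.0    # denominator: 1 + sum of lambda_i * h_i
    rate = 0.0
    for name, lam, e, h in ranked:
        if e / h <= rate:  # no better than the diet without it; lower-ranked items fail too
            break
        diet.append(name)
        energy_per_search_hr += lam * e
        time_per_search_hr += lam * h
        rate = energy_per_search_hr / time_per_search_hr
    return diet, rate


rich = [("fruit", 2.0, 800.0, 0.5), ("insects", 1.0, 100.0, 0.5), ("tubers", 1.0, 1500.0, 3.0)]
poor = [("fruit", 0.1, 800.0, 0.5), ("insects", 1.0, 100.0, 0.5), ("tubers", 1.0, 1500.0, 3.0)]
print(optimal_diet(rich))
print(optimal_diet(poor))
```

In this hypothetical case the rich environment supports a fruit-only diet, while the poor one adds tubers and still ends at a lower overall rate.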

As diets become broader, rate maximization is constrained because search time becomes harder to reduce (either the food has become less abundant or it has already been moved closer), leaving only handling costs free to vary. Under these conditions, “innovations that increase handling efficiency [tools/technology] will have their greatest effect. In fact, investments in handling improvements will be the ONLY way to achieve higher food-acquisition rates.” (emphasis ours [17, p. 64]). When a forager finds herself in an environment where she cannot reduce search costs, we predict she will invest in reducing handling costs via innovation to maintain a minimally acceptable return rate.

3 A Model for Individual Innovation

Using the same cost/benefit logic as the diet breadth model, “tech investment” models explore constraints related to tool manufacture and use-life [18]. Trade-offs in these models include how and where to obtain raw materials, how much transport and processing of those materials is reasonable, and how much time should be spent on tool development, construction, and maintenance. Like all optimality models, tech investment applications also assume a time allocation problem: one cannot simultaneously invest in innovation and pursue additional prey. A second assumption of tech investment models is that the up-front cost of manufacturing a technology must be justified by correspondingly high procurement costs for the resource. These considerations bring us back to the question at the heart of this paper: under what conditions does investment in novel solutions prove to be the best use of one's time and energy?

Following optimality logic, technological investment models ask, “does spending time innovating now translate into higher net returns than continuing to use the same old method?” Formulations based on the classic prey model tackle this problem by replacing the yes/no food pursuit decision variable with a yes/no technological investment variable [19, 20]. The choice to invest depends on estimated overall returns: if those returns are sufficiently low, and if the time spent investing (the cost) yields profitabilities higher than the average expected return, one should invest. This formulation considers improvements to existing innovations, where variants of one type of innovation fall on the same gain curve. However, if conditions change such that the current technology or technique no longer provides adequate returns, investment in an entirely different strategy may be necessary. A second application of the model explores when investment in different innovations is predicted, using separate gain functions for distinct types of innovation [21]. These curve-estimate models focus on overall handling costs and predict the point at which it becomes optimal to switch to a new handling strategy. While these models differ in outcomes and predictions, each makes clear that handling costs matter; indeed, they drive investment in technology.
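A stripped-down version of the invest/don't-invest decision can be written down directly. This sketch is our own simplification, not the formulation in [19-21]: we assume a tool that takes `make_hr` hours to build, lasts for `use_life` items, and cuts handling time per item from h0 to h1, with search time s per item unchanged. Comparing return rates with and without the tool, investment pays when the handling time saved over the tool's use-life exceeds the time spent making it, i.e. make_hr < use_life * (h0 - h1), so the larger the handling cost, the more room there is to invest.

```python
def rate_without_tool(e, s, h0):
    """Net return rate (kcal/hr) with the existing technique: e / (s + h0)."""
    return e / (s + h0)


def rate_with_tool(e, s, h1, make_hr, use_life):
    """Net return rate over the tool's use-life, charging manufacture time up front."""
    return use_life * e / (use_life * (s + h1) + make_hr)


def worth_investing(e, s, h0, h1, make_hr, use_life):
    """True if making the tool raises the overall return rate.

    For positive e this is algebraically equivalent to make_hr < use_life * (h0 - h1).
    """
    return rate_with_tool(e, s, h1, make_hr, use_life) > rate_without_tool(e, s, h0)


# Hypothetical digging stick: 1500 kcal tubers, 1 hr search per tuber,
# handling cut from 3 hr to 1.5 hr, 2 hr to make, lasts 10 tubers.
print(worth_investing(1500, 1.0, 3.0, 1.5, 2.0, 10))  # True
# Same tool for a quick-to-handle item: 0.1 hr saved per item cannot repay 2 hr of work.
print(worth_investing(100, 1.0, 0.2, 0.1, 2.0, 10))   # False
```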

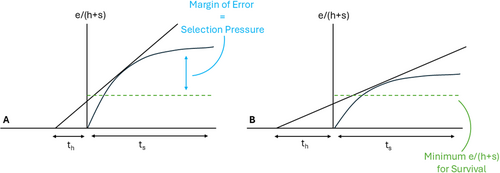

We can further adapt these models, using them conceptually rather than mechanistically, to examine how changes in external conditions shape thresholds for handling time and point to individual motivations for innovation. The model we propose (Figure 1) follows the basic tenets of the marginal value theorem (MVT): time spent in one activity is dictated by diminishing returns in another. In this case, optimal investment in handling time (t_h) can be derived from time in search (t_s) in a given landscape (high net return vs. low net return). We assume that gain curves differ both across individuals (according to ability, knowledge, and technology) and across environments. As dictated by basic biology, each forager must meet a minimum threshold of net energetic gain to survive and/or reproduce. Here, the links between survival and selection pressure are theoretical and not necessarily one-to-one. However, as has been demonstrated mathematically, the change in fitness parameters required for selection to push organisms to new adaptive peaks is very small [22]. In Figure 1 we show two outcomes for a single individual: one with a steep gain curve, in which small investments in search yield high returns, and the reverse, in which returns per unit of search time are lower. In both cases the individual has to meet the same minimum e/(h + s) threshold. In each scenario, the tangent to the utility curve sets expectations for how much time a forager should tolerate in handling activities to maximize net returns.

Where the margin of error for meeting minimum caloric thresholds is large, individuals can invest more time in search to meet their needs. Individuals with smaller margins of error, however, cannot simply “find” more food. Those with lower utility curves must therefore invest more time in handling activities; indeed, failing to invest time in handling may drop their net returns below the survival threshold. For individuals in this situation, the only way to move onto a higher gain curve is to invent technologies that improve the efficiency of handling. Given that individuals vary in their ability to meet minimum thresholds, we expect tolerance for investment in technology to vary between individuals: those closer to failure will be more likely to spend time on behaviors related to handling. This pattern is well documented in the animal behavior literature [23, 24], where individuals who are low status, or who are driven to neophilic states by hunger or increased caloric needs (pregnancy/lactation, or an inability to acquire calories on one's own [infants and juveniles]), are more likely to exhibit novel foraging behaviors.
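The threshold logic of Figure 1 can be made explicit with the e/(h + s) currency used above. Requiring e/(h + s) >= R_min and solving for h gives the longest handling time per item a forager can tolerate, h_max = e/R_min - s. The sketch below is hypothetical (the item values, the threshold `R_MIN`, and the function names are illustrative assumptions); it shows that when search time per item rises, the tolerable handling time shrinks, so a technique that was adequate in a richer setting falls below threshold unless handling becomes more efficient.

```python
def max_handling_time(e, s, r_min):
    """Longest handling time per item (hr) that keeps e / (h + s) >= r_min."""
    return e / r_min - s


def meets_threshold(e, s, h, r_min):
    """Does a forager with handling time h (hr per item) reach the minimum rate r_min?"""
    return e / (h + s) >= r_min


E, R_MIN = 1500.0, 300.0  # hypothetical: kcal per item, minimum acceptable kcal/hr
for label, s in (("richer setting", 1.0), ("poorer setting", 3.5)):
    print(label,
          "h_max =", max_handling_time(E, s, R_MIN),
          "| meets threshold with h = 3:", meets_threshold(E, s, 3.0, R_MIN))
```

With these numbers the same 3-hour handling time clears the threshold when search costs 1 hour per item but not when it costs 3.5 hours, where handling would have to fall to 1.5 hours.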

Finally, we must re-emphasize that foraging return rates are merely proxy currencies through which to estimate the fitness gains of behavior [25]. We can assume that more calories in per unit time, as a result of investment in extractive foraging, would result in greater survival and reproductive success, but this pattern would have to play out across the life cycle and cannot be assessed through rate-maximizing behavior alone. Although rate-maximizing and risk-minimizing diets tend to look fairly similar, fitness gains through risk minimization may be especially important for reducing mortality. Below, we discuss the split between ourselves and the other apes in light of a context in which individuals had to develop new technology (e.g., control of fire, wooden and stone tools, and containers) to persist.
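The difference between rate-maximizing and risk-minimizing choices can be illustrated with the standard shortfall calculation used in risk-sensitive foraging. This minimal sketch assumes that daily intake under each option is normally distributed (an assumption, not a claim about hominin diets) and uses Python's `statistics.NormalDist`; the option names and kcal values are hypothetical.

```python
from statistics import NormalDist


def shortfall_probability(mean, sd, threshold):
    """P(daily intake < threshold), assuming daily intake ~ Normal(mean, sd)."""
    return NormalDist(mean, sd).cdf(threshold)


def least_risky(options, threshold):
    """Name of the option with the lowest probability of falling below threshold."""
    return min(options, key=lambda name: shortfall_probability(*options[name], threshold))


# Hypothetical daily intake options: (mean kcal, standard deviation kcal)
options = {"steady": (1800.0, 200.0), "variable": (2000.0, 800.0)}
print(least_risky(options, threshold=1500.0))  # steady
print(least_risky(options, threshold=2100.0))  # variable
```

A pure rate-maximizer would always pick "variable" for its higher mean; a forager minimizing shortfall picks "steady" when the threshold lies below both means, so the two criteria agree only under some conditions.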

4 Falling Into the Fitness Valley: Apes on the Edge

4.1 Ecological Change and Competitive Exclusion

In sub-Saharan Africa, grassland expansion began in the late Miocene (as early as ~10 MYA [26]). This shift was almost certainly driven by frequent, cyclical fires and declining atmospheric CO2 levels [26, 27]. By the Late Pliocene/Early Pleistocene, open grasslands, which are especially prone to seasonal burning, had become the predominant biome [28]. Numerous lines of evidence point to increasingly mosaic landscapes and savanna expansion in the East African Rift System at or near this time (~2.8 MYA), which strongly influenced the structure of mammalian populations in eastern Africa, including hominins [29]. Isotopic, lipid, and carbonate data all indicate pronounced shifts from woodland to open savanna settings, mediated by changing levels of precipitation, from 2.4 MYA onward [30]. These climatic fluctuations continued, with areas of highest hominin diversity becoming especially unstable from 2.0 to 1.8 MYA [31, 32]. Concurrent with these fluctuations, a new group of fire-adapted plants, geoxyles, arose, suggesting that by 2 MYA the common occurrence of fire had become a selective force among savanna flora as well as fauna [33]. It is within this broader ecological context of environmental instability and flux that we place first the australopithecines and, later, archaic hominins.

Modern genetic reconstructions place the split of hominins and chimpanzees sometime between 7.5 and 6.5 MYA [34, 35]. This divergence coincides with the first wave of grassland expansion in eastern Africa. Ideas about what pushed the split have often invoked ecological partitioning, and to better understand why it was hominins and not chimpanzees that ended up in more variable habitats, theorists [36, 37] have leaned on the concept of competitive exclusion first articulated by Gause [38]. As outlined in The Struggle for Existence, where sympatric species are food-limited, “competition will take place between two species for the utilization of a certain limited amount of energy” [38, p. 6]. And “as a result of competition two similar species scarcely ever occupy similar niches but displace each other in a manner that each takes possession of certain peculiar kinds of food and modes of life in which it has an advantage over its competitor.” [38, p. 19]. We argue that competitive exclusion and consequent niche differentiation drove the split of hominins and chimpanzees, with hominins finding themselves on the unpredictable side of the ecological divide, occupying a very different “adaptive plateau.”

Once there, continued existence depended on adopting new strategies. It is clear that substantial changes in locomotion, and perhaps in social behavior (as indicated by canine reduction), marked the lives of the earliest hominins (Ardipithecus spp.). While Ardipithecus appears to have kept one foot in the forest, so to speak, its successors show a more substantial commitment to terrestrial locomotion and to a dietary and occupational niche outside the forest. One branch of these successors living in East Africa, the Paranthropines, seems to have doubled down on making a living from abundant but low-quality foods such as graminoids [39]. Ultimately, this strategy appears to have been untenable over time. The others, namely the gracile Australopiths, seem to have fared better. Below, we argue that the success of our Australopith ancestors began with a commitment to extractive foraging. Later, around 3–4 MYA, these Australopiths would diverge again, with one radiation becoming the genus Homo [40]. The phylogenetic history of that split appears to have been shaped more by interspecific competition than by ecological conditions [41], suggesting that the emergent species were already adept at managing in a varied, mosaic landscape. The idea that some Australopithecines developed novel strategies such as tool use and “cumulative culture” [42] to overcome the increasingly demanding ecological hurdles of savanna life during the second wave of aridification has been proposed and built on by many (e.g., [43-45]).

4.2 Living on the Edge: Lessons From Savanna-Dwelling Chimpanzees

Chimpanzees (Pan troglodytes verus) living in arid habitats, such as the Fongoli community in southeastern Senegal, face environmental pressures similar to those of early hominins. Compared to apes living in forested environments, savanna-dwelling chimpanzees have substantially larger home ranges, alter their movement to minimize travel costs between forest patches, and use novel strategies to mitigate the effects of heat exposure [46]. For these primates, life is dictated by access to water and food, both of which are sparse and dispersed.

In terms of foraging strategies, chimpanzees living in arid settings also differ notably from their forest counterparts. For example, apes living in arid or unstable environments use tools more often than those in stable environments [47]. At Fongoli, chimpanzees consume far more insect prey than frugivorous chimpanzees living in forest settings [48] and have been observed hunting vertebrate prey with tools [49]. In addition to the wooden spears used to hunt bushbabies (Galago senegalensis), the Fongoli chimpanzees use stone or wood anvils to crack open baobab fruits, and use grasses and sticks to extract termites [50]. Peak baobab fruit cracking (proto-tool use; Box 1) coincides with the height of the dry season, when other fruits are less abundant [50]. During this time, chimpanzees often travel long distances between fruiting baobab trees, expending considerable energy in search. Even among chimpanzee populations living in less arid landscapes, the duration of tool use was predicted by distance traveled and previous feeding time [57].

Box 1. Defining innovation, technology, and tools.

Significant anthropological debate has focused on what an invention or technological development is, what types of objects or ideas constitute inventions/technologies, and how inventions/technologies are developed. Here we adopt the following definitions:

Culture: information that is learned and shared, considered cumulative when acquiring complexity beyond the ability of a single individual to invent.

Innovation: testing and putting new inventions to use; an invention that has been adopted by others.

Invention/technology: the development of a new idea or technique that alters or modifies some existing behavior, action, or object by design.

Proto-tool/proto-tool use: indirect use of an object [i.e., an object not held or directly manipulated] to change the position, action, or condition of another object.

Social learning: the use of information from one's social environment.

Tool/tool use: the application of an invention/technology to the direct manipulation of one object to change the position, action, or condition of another object.

Any species is capable of invention and innovation, and many also exhibit behaviors that appear to reflect a culture. However, technologies maintained by cumulative culture are more difficult to identify. Many primate communities use simple plant and stone tools (though customary use is found among only a few species [51]). While it may be tempting to argue that primate toolmaking reflects the same degree of cognitive and social complexity assumed for hominins, there is reason to believe that the underlying mechanism in apes is quite different. Several studies have now demonstrated that nonhuman ape cultures can arise without social learning [52-54] and lack evidence of cumulative processes ([55], but see [56]).

In general, species that depend on extractive foraging tend to be more innovative in times of necessity [23, 58]. In a cross-species survey of observed innovations, Laland and Reader [59] calculate that approximately half took place following an ecological challenge: a dry season, food shortage, or habitat degradation. Unsurprisingly, the “tool use via necessity” hypothesis [60] has been supported across several studies (for a review and critiques, see [51]).

5 What to Invest in: Extractive Foraging on the Savanna

We imagine that our early mosaic/savanna-dwelling relatives achieved lower foraging returns and had a narrower margin of error than their forest-dwelling counterparts, much like the modern savanna-dwelling chimpanzees described above. Savanna-dwelling chimpanzees range three times as far as forest-dwelling populations [46], a spatial reality dictated by the dispersed nature of reliable resources. Traveling such great distances to access both food and water yields diminishing returns. As our model suggests, there comes a tipping point at which search costs can no longer be reduced, and a shift toward reducing handling costs becomes necessary to meet minimum daily caloric requirements. One possibility, then, is to take advantage of abundant, available foods that require more time post-encounter. Many such foods are embedded (i.e., within a hard shell or husk, underground, or within another structure) and thus take extra time to extract and/or prepare for consumption. Many primates exploit these foods, and some even use tools to do so (Box 1). However, few rely on them for the bulk of their calories the way humans do; for most species, intensive extractive foraging occurs only in times of scarcity [61]. By contrast, evidence suggests that difficult-to-acquire sessile resources were THE foods that sustained the earliest members of our lineage (e.g., [62-64]).

One way to raise the returns on these foods is co-foraging. When competition among conspecifics is removed, gregarious foraging can have multiple benefits, including predator defense, increased consumption, and opportunities for social learning [65]. Synergistic foraging includes tasks done in coordination with others that increase returns beyond what is possible through individual effort, and it can be easier to sustain than other mechanisms of cooperation [66]. Some have speculated that synergistic selection leads to the emergence of biological complexity and multilevel selective processes [67]; in this sense, tolerance of others via modulated social agency might underpin those processes.

As described by Hawkes [8, p. 766], “savanna foods of interest do not promote scramble or interference competition where more consumers reduce everyone's return rates because amounts available for consumption increase with extraction and processing effort. Deeply buried geophytes allow increasing rather than diminishing returns for additional effort as digging next to someone else helps you loosen the soil. Return rates are higher for accumulating piles and bulk processing rather than extracting, processing, and consuming items one at a time.” Small, closely related foraging groups can reap the largest rewards in this scenario, with as few as six people achieving substantial gains via mutual harvesting [66, 68]. A reliance on high-cost, high-return strategies may only be possible in cooperative foraging contexts [69].

Gregarious foraging can simultaneously increase returns and minimize the risk of falling below minimum resource thresholds through food sharing and tolerated theft. Among modern foraging populations, meat, honey, and large plant packages like tubers are the most widely shared resources [70]; they have decreasing marginal returns to foragers and may also be prone to tolerated theft [71]. Collaborative foraging may thus provide both synergistic benefits and opportunities for tolerated theft, creating contexts for the evolution of cooperative behavior. In a computer-based experiment with university participants, players in a virtual world of variable returns first reduced their chances of starvation through tolerated theft, but then shifted over time to a cooperative strategy of reciprocal giving [72]. Each of these strategies can reduce the risk of falling below resource thresholds, which in turn lowers mortality at all ages.
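The risk-reducing effect of pooling can be illustrated with an exact binomial calculation. The sketch assumes, hypothetically, that each forager independently acquires one large package with probability p on a given day and nothing otherwise, and that all returns are pooled and divided equally among n foragers. Mean intake per person is unchanged by pooling, but the probability of falling below a minimum threshold drops sharply.

```python
from math import comb


def shortfall_prob_pooled(n, p, package_kcal, threshold_kcal):
    """Probability that an equal share of pooled returns falls below threshold_kcal.

    Hypothetical setup: each of n foragers independently gets one package of
    package_kcal with probability p, or nothing; the total is split equally.
    """
    prob = 0.0
    for k in range(n + 1):  # k = number of successful foragers that day
        share = k * package_kcal / n
        if share < threshold_kcal:
            prob += comb(n, k) * p**k * (1 - p) ** (n - k)
    return prob


for n in (1, 2, 6):
    print(n, round(shortfall_prob_pooled(n, 0.3, 6000.0, 1000.0), 3))
```

With p = 0.3, a 6,000 kcal package, and a 1,000 kcal threshold (all hypothetical), a lone forager falls short 70% of the time, whereas six foragers sharing equally fall short only when all six fail, about 12% of the time.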

6 Seeking New Territory: The Social Learning Fitness Valley

Thus far we have considered the benefits of gregarious foraging and the ways that dependence on extractive foraging can alter social contexts. Besides increasing return rates, gregarious foraging can also decrease handling costs by making it easier to observe and imitate others [73]. Individuals who could avoid the start-up costs of working out extractive foraging techniques on their own could increase return rates by cutting down on learning time. Socially learned, shared information that is more complex than any individual could invent in a lifetime subsidizes the costs of invention: by learning from others, individuals can maintain and build on innovations without starting from scratch [74]. While culture is easy to maintain once it is common in a population, it is very difficult for a dependence on social learning and cumulative culture to invade a population. Individuals must live in social groups, experience a moderately variable environment, and have heightened propensities for social engagement/cooperation, and they must engage in adaptive behaviors that are moderately difficult to learn on their own [74].

Using technology involves more than developing the tools themselves; it also requires knowledge of the food source and of when, where, and how to deploy the tool. Social learning can be a shortcut to acquiring difficult-to-invent skills that reduce handling costs. If investing in technology can reduce handling costs, then investing in social learning can reduce the costs of technological investment. These behavioral adaptations can be reflected not only in material and/or symbolic culture but also in local ecological knowledge about how to find and procure burrowing prey, process plants, and locate water. We return to our original argument that the archeological record is necessarily biased toward bones and stones, yet much socially learned information is not preserved in those materials. Use-wear patterns on Oldowan stone tools dating as far back as 3 MYA indicate that they were used not just to process animal and plant foods (including underground storage organs [75]) but also possibly to manufacture organic implements such as wooden tools, twine, and carrying devices [76]. Around this same time, with the rise of the genus Homo, we begin to see changes in stature and brain size that indicate shifts in hominin life history consistent with reduced juvenile and adult mortality through intragroup care, as we discuss below. Culture likely added further selection pressures on hominin cognition and sociality down the line (cf. [77]).

Models developed to estimate the social and ecological conditions that favor invention and innovation show that when groups are large, the environment varies moderately, and selection pressure is high, individuals engage in a mix of individual and social learning strategies, a mix that is likely the catalyst for cumulative cultural evolution [78]. Individuals who could use some social learning would have been better off than those who could not [79]. These conditions match the circumstances we expect unlucky hominins to have experienced during the Pliocene, suggesting that a reliance on social learning and the development of material technologies may be much older than the archeological record implies.
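Why a mix of learning strategies can outperform pure individual or pure social learning can be shown with a deliberately simplified caricature; it is not a reproduction of the models in [78, 79]. We assume, hypothetically, that adaptive behavior yields benefit b, individual learning costs c, and the environment changes each generation with probability u, making copied behavior obsolete. The "critical" strategy copies first and learns individually only when the copied behavior fails. The model tracks expected values deterministically rather than simulating stochastic change, and all parameter values and names are illustrative.

```python
def long_run_payoff(strategy, b=1.0, c=0.4, u=0.1, generations=500):
    """Payoff per individual after many generations in a population using one strategy.

    Hypothetical parameters: b = benefit of adaptive behavior, c = cost of individual
    learning, u = per-generation chance the environment changes. Expected values only.
    """
    q = 1.0  # fraction of the previous generation behaving adaptively (start well adapted)
    payoff = 0.0
    for _ in range(generations):
        copied_ok = (1 - u) * q  # chance a copied behavior still fits the current environment
        if strategy == "individual":  # always learn alone: always adaptive, always pay c
            q, payoff = 1.0, b - c
        elif strategy == "social":    # always copy: free, but errors accumulate as the world changes
            q, payoff = copied_ok, b * copied_ok
        elif strategy == "critical":  # copy first, learn alone only if the copied behavior fails
            q, payoff = 1.0, b - c * (1 - copied_ok)
        else:
            raise ValueError(f"unknown strategy: {strategy}")
    return payoff


for s in ("individual", "social", "critical"):
    print(s, round(long_run_payoff(s), 3))
```

In this caricature pure copying decays toward zero payoff as the environment drifts, pure individual learning pays the learning cost every generation, and the mixed strategy pays it only when copying fails.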

7 Climbing to a New Local Optimum: Life History Shifts in Response to Extractive and Collective Foraging

We now imagine a social ape focused on extracted foods in an arid setting, with spatially and temporally varying returns. Gregarious foraging likely reduced handling costs and increased returns to investment in technology, and simple social learning may have reduced handling costs further. Under these conditions, we would expect not only an increase in the total resource budget but also a reduction in the risk of falling below resource minimums (see Figure 1). The resulting decrease in mortality across all ages would in turn shift life history patterns.
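The link between gregarious foraging and lower shortfall risk rests on variance reduction: when individually variable daily returns are pooled and shared, mean intake stays the same but its spread narrows, so fewer days fall below the minimum. The minimal Monte Carlo sketch below assumes normally distributed daily returns (truncated at zero) and equal sharing within the group; `DAILY_MINIMUM`, `MEAN_RETURN`, `SD_RETURN`, the group sizes, and the function names are hypothetical choices for illustration, not parameters from this paper or from Figure 1.

```python
"""Sketch: pooling variable returns lowers the chance of a daily shortfall.

Each forager's daily return is drawn from the same hypothetical
distribution. A solitary forager eats only what it finds; a sharing
group divides its total equally. Mean intake is (almost) unchanged,
but its variance shrinks, so fewer days fall below the minimum.
All parameters are assumptions for illustration only.
"""
import random

DAILY_MINIMUM = 2000.0  # hypothetical kcal needed per individual per day
MEAN_RETURN = 2400.0    # hypothetical mean daily return per forager
SD_RETURN = 1200.0      # hypothetical day-to-day variability
DAYS = 20_000           # number of simulated days per group size


def daily_return(rng: random.Random) -> float:
    # Truncate at zero: a forager cannot bring back negative food.
    return max(0.0, rng.gauss(MEAN_RETURN, SD_RETURN))


def shortfall_rate(group_size: int, seed: int = 1) -> float:
    """Fraction of days on which each member's equal share is below minimum."""
    rng = random.Random(seed)
    short_days = 0
    for _ in range(DAYS):
        pooled = sum(daily_return(rng) for _ in range(group_size))
        if pooled / group_size < DAILY_MINIMUM:
            short_days += 1
    return short_days / DAYS


for n in (1, 2, 4, 8):
    print(f"group of {n}: shortfall on {shortfall_rate(n):.1%} of days")
```

Equal division is the simplest sharing rule; other rules (for example, provisioning only those below the minimum) would change the numbers but not the qualitative point that pooling buffers risk.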

Food sharing is more effective at reducing mortality risk than changes in diet breadth alone [68, 80]. Decreased mortality risk is associated with longer lives, larger body size achieved through a longer period of growth, and a longer juvenile/adolescent phase before reproduction begins [81]. Among other apes, youngsters begin to develop permanent teeth and to forage for themselves at about half the age that human children do; young chimpanzees spend about 30% of their time foraging for themselves by the time they are 2 years old [82]. However, those same youngsters continue to be subsidized by their mothers' milk until age 6 [82]. By contrast, the foods available to savanna-dwelling hominins likely required strength, endurance, knowledge, and skill to obtain and would not have been available to weanlings. It is therefore paradoxical that children in the genus Homo appear to have been weaned earlier than other apes [83]. While modern human children can forage for themselves in the right ecological and social settings (among the Hadza, children aged five can collect about 50% of their daily calories), they still require substantial subsidies that continue into their teens [84]. In our genus, postweaning calories must have come from other individuals [85, 86].

A shift to a setting in which provisioning is key to survival entailed a monumental change in life history parameters for our genus. If, for example, older female kin supported their daughters' offspring, those longer-lived individuals may have contributed more longevity genes to future generations, creating a selective advantage for longer lifespans in both males and females. The grandmother hypothesis [63, 87] predicts that the downstream effects of such a transition include: longer developmental periods; larger overall body and brain sizes (though both scale allometrically with length of life [88]); a shift in the operational sex ratio, such that human populations include more reproductively active males than females [89, 90], which sets the stage for very different optimal mating strategies between the sexes [91, 92]; and an increasing demand for enhanced social capacities beginning in infancy [93, 94]. This is one well-developed example of how intragroup food transfers can select for longer developmental periods, with expected feedback on investments in sociality.

This returns us to the concept of handling costs. In our model, some individuals spend more time handling than others, depending on their ability to procure resources optimally. In the scenario above, it is females and their dependent offspring, as well as those who provision them, who might be near the threshold for meeting caloric needs because of handling demands. While this scenario focuses on plant foods and their handling challenges, among hunters, too, 80% of the game brought back to camp is handled (prepared, distributed, cooked) by individuals other than the hunter [15], often women. Thus, any exercise in modeling foraging outcomes and their evolutionary impacts should consider the full extent of handling tasks and who carries them out. Many applications of foraging models consider only the immediate prey choice decision when predicting human social and technological dynamics. Here, by contrast, we suggest that post-encounter processing and transfers also shape foraging decisions and, ultimately, the evolutionary trajectory of our species.

8 Future Directions: Modeling Inflection Points in Deep History

The theoretical basis for innovation laid out above can serve as a useful tool for investigating the timing and patterning of humans' most impactful innovations. Below we nominate several particularly salient “deep history” events and suggest approaches to studying them that explore how changing ecological and demographic conditions could have spurred innovation by creating a fitness valley. These events include the use of extractive foraging aids (digging sticks and intentionally shaped stone tools for cutting), the use of fire to alter foraging landscapes and increase the bioavailability of nutrients in harvested foods, and the invention of portable containers to ease handling costs and buffer risk.

Simple tools such as the digging stick may have been the first objects hominins used to survive on the savanna. However, very few wooden objects have been preserved in the deep archeological record. In Africa, the oldest wooden tools recovered come from the Kalambo Falls site in Zambia and are dated to 390–324 KYA [95]. The next oldest known wooden implements, from the spear horizon at the Schöningen site in northern Germany, date to around 200 KYA [96]. Despite their scarcity in the record, it is certain that wooden tools were used much earlier in our lineage. Modeling climatic conditions and dietary transitions in hominin species may help researchers narrow the window of time in which wooden tools such as digging sticks became a regular foraging aid. We suggest that the period in which australopiths became committed to savanna settings, as outlined above, is a probable inflection point.

Given the paucity of organic digging tools in the Pleistocene archeological record, we turn to that most visible form of material technology, the stone tool. The earliest known stone tools, of the Lomekwian tradition, are dated to 3.3 MYA [97]. The Lomekwian tradition is followed by Oldowan technologies, which are well established in the archeological record from 2.58 to 1.6 MYA [43]. The emergence of Acheulean technology marks another technological advance. Acheulean objects appear in the archeological record from 1.7 MYA and improve on Oldowan forms: their symmetrical design and strategic percussion techniques suggest increased motor skill and foresight [98]. Each of these transitions is likely associated with a fitness valley experienced by our ancestors. We suggest that the Lomekwian tradition emerged during a period of intensified use of embedded savanna resources and is perhaps associated with forms of competitive exclusion between sympatric hominin species. Oldowan technology appears at the same time that renewed waves of environmental variability hit the savanna, but it likely builds on the social capacity to share knowledge of tool manufacture. It has also been suggested that Oldowan technology was motivated by woodworking demands [99]; if so, it may be linked to the manufacture of digging sticks, and we would expect its emergence to follow relatively soon after our ancestors became committed to foraging in tuber-rich settings.

Humans' use of fire sets us apart from all other organisms. Evaluations of the energetic demands of early members of our species demonstrate that “Homo sapiens was unable to survive on a diet of raw wild foods.” [100, p. 1]. Given that, questions of how, when, and why our predecessors began using fire as a tool are especially pressing. The earliest archeological evidence for discrete, controlled instances of fire dates from 1.7 to 1.5 MYA [101]. Anatomical evidence from Homo erectus, such as smaller teeth, jaws, and guts, suggests that the transition to cooked diets happened even earlier [102]. Those adaptations must have taken many generations to become common in the population, so the dietary transition likely predates their consistent appearance in the paleoanthropological record. Parker et al. [103] suggest that the transition to savanna living, coupled with a reliance on tubers, would have set the stage for the demand for cooking to arise. Other research demonstrates that fire-altered landscapes, such as those common on the savanna, offer primate foragers opportunities for higher net returns [104, 105]. Passively exploiting fire-modified landscapes would have primed our ancestors for the first fire innovation, active manipulation of the landscape, which would later have been followed by the use of fire for cooking.

Based on the logic above, the most likely time for the uptake of fire as a landscape modification tool would have been as australopiths became tightly tied to mosaic/savanna landscapes. Fire-mediated improvements in search would have been a necessary first step in climbing out of a fitness valley in a low-return ecotone. Intentional cooking could then have reduced handling costs. For example, many types of tubers available on the savanna resist digestion unless processed with fire, or are at least much easier to peel and chew after brief roasting [106]. If our dependence on these resources grew over time, this scenario marks another inflection point in the archeological record, one that would have demanded innovation in the form of active fire control and cooking.

What of containers? As Le Guin so aptly describes, “If you haven't got something to put it in, food will escape you—even something as uncombative and unresourceful as an oat. You put as many as you can into your stomach while they are handy, that being the primary container; but what about tomorrow morning when you wake up and it's cold and raining and wouldn't it be good to have just a few handfuls of oats to chew on and give little Oom to make her shut up, but how do you get more than one stomachful and one handful home? So you get up and go to the damned soggy oat patch in the rain, and wouldn't it be a good thing if you had something to put Baby Oo Oo in so that you could pick the oats with both hands? A leaf a gourd a shell a net a bag a sling a sack a bottle a pot a box a container.” [5, p. 154]. Portable containers are obviously useful and are perhaps among humanity's finest and earliest inventions [107]. But who created them, and why, and how?

Among contemporary hunters and gatherers, “carrying devices are universal” [107, p. 301]. But like wooden digging sticks, these tools were almost certainly made from organic materials that did not preserve well. The oldest example of woven fibers possibly used in the construction of a carrying bag appears in the Neanderthal sequence at Abri du Maras, France, dated from 52 to 41 KYA [108]. Older containers, made from ostrich eggshell and marine shell and used to hold pigment and water, have been documented across southern Africa. While we will probably never recover the earliest portable containers, that does not prevent us from generating expectations for when, and why, they were invented.

9 Conclusions

As we write this section, it is cold and raining, and we are grateful we do not have to visit the oat patch to feed ourselves and our family today. Ultimately, a higher-quality diet, made possible by a dependence on extractive foraging and the tools to do it effectively, has bought us time: for socializing, for growing and learning, for innovation. Extractive foraging brought with it new selection challenges and co-evolutionary processes, many of which leave no trace in the fossil record. The models presented above offer insight into the motivations to invent, highlighting not only the impetus for innovation but also the potential impacts of seemingly humble technology. We hope that modern definitions of technology will not blind us to the ways our ancestors actively engaged with the material world.

Models that seek to explain brain expansion in the hominin clade show that expansion is largely driven by ecological challenges but is also linked to the degree and cost of accumulated cultural knowledge in the population. We suggest that gregarious foraging of extracted foods, made more efficient by working with and learning from others, decreased adult mortality by reducing the risk of shortfalls and increasing overall returns. The concordant shifts in life history benchmarks, which necessitate longer periods of somatic investment, make brain expansion a consequence of a novel fitness landscape rather than a precondition for intelligence or innovation. The conceptual model we outline herein suggests that our own intelligence did not arise out of some form of exceptionalism; rather, it is the predictable outcome of finding oneself between a rock and a hard place.

Acknowledgments

We thank editor John Lindo for guidance, and two anonymous reviewers and many colleagues for their thoughtful and careful feedback on earlier versions of this manuscript. We also thank the University of Denver and Boise State University for various forms of support provided for writing and research. Travel to the 2024 EAA Conference to present this project was supported by an Internationalization grant awarded to NM Herzog from the University of Denver.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The authors have nothing to report.