A Multi-Scale Time Adaptive Fusion Network for Transformer Fault Diagnosis

ABSTRACT

Transformer fault diagnosis is crucial for the safe operation of power systems because it enables fast and accurate identification of fault types. However, traditional methods struggle to extract multi-scale temporal features and high-order feature representations, which limits their ability to handle complex dynamic data patterns. To address this, this paper proposes a multi-scale temporal adaptive fusion network (MSTAFN). The MSTAFN model first generates a time position vector through a temporal information encoding (TIE) module, capturing multi-scale temporal features. The adaptive high-order hybrid network (AHOHN) module then fuses the time position vectors with transformer data features using a hybrid attention mechanism, extracting temporal variation patterns. To enhance high-order feature representation, the high-order feature enhancement (HOFE) module introduces nonlinear activation and higher-order operations to capture complex relationships between features. The adaptive feature reconstruction (AFR) module dynamically adjusts the feature fusion ratio, optimizing information integration. Finally, the multi-scale temporal fusion (MSTF) module balances the fusion of multi-scale temporal features and global dependencies, adapting to different tasks and data distributions. Extensive experiments on publicly available datasets show that the MSTAFN model outperforms the comparison models across multiple evaluation metrics, demonstrating its effectiveness in transformer fault diagnosis.

1 Introduction

Power transformers are core components of modern power systems, and their operational status directly affects the safety and stability of the entire system. Because transformers operate in complex environments and exhibit diverse fault types, accurate fault diagnosis is crucial for ensuring their stable operation [1, 2]. Traditional fault diagnosis methods for power transformers include frequency response analysis (FRA) [3], short circuit reactance (SCR) [4], low-voltage impulse (LVI) [5], and ultrawideband (UWB) methods [6]. Dissolved gas analysis (DGA) and vibration analysis are two other common diagnostic techniques. While each of these methods has its advantages, they also have certain limitations. DGA identifies fault types by analyzing the composition and ratio of dissolved gases, making it suitable for oil-immersed transformers, but it cannot be applied to dry-type transformers. Vibration analysis, on the other hand, is mainly used to detect mechanical issues such as winding looseness or core problems, and it may not effectively diagnose other types of faults.

With the development of machine learning and sensor technologies, intelligent algorithms such as support vector machines (SVM) [7], Bayesian networks [8], and extreme learning machines (ELMs) [9] have been applied to transformer fault diagnosis. However, these methods are mainly based on shallow learning algorithms. Although they can improve diagnostic accuracy to some extent, their data analysis capabilities are relatively weak, and their performance in real-world industrial environments is limited. In contrast, deep learning methods have become the mainstream approach for transformer fault diagnosis due to their powerful learning and feature extraction capabilities. Common deep learning networks, such as deep belief networks (DBNs) [10], long short-term memory (LSTM) networks [11], and convolutional neural networks (CNN) [12], have been widely applied in the field of fault diagnosis.

However, traditional CNNs suffer from the degradation problem when increasing network depth, which negatively impacts the model's diagnostic performance. To address this issue, He et al. proposed residual networks (ResNet) [13], which significantly alleviate the degradation problem of deep networks by introducing residual modules with shortcut connections. The ResNet structure has been proven to perform exceptionally well in fault diagnosis and has become a widely used tool in this field [14-18]. In addition to CNNs and ResNets, graph convolutional networks (GCNs) [19-21] have also been applied in transformer fault diagnosis. By constructing a graph structure and extracting high-order features, GCNs can capture the complex nonlinear relationships between dissolved gases and fault types, offering higher diagnostic accuracy and generalization ability when dealing with small samples and complex data.

In recent years, attention mechanisms have been widely used in transformer fault diagnosis [22-24], as they help focus on key features while suppressing irrelevant information. Zhou et al. [22] proposed a fusion residual attention diagnostic (FRAD) model, which generates and fuses vibration signal images through a Gramian-guided filtering module (GGFM) and improves the deep ResNet by introducing an up-dimensioning convolutional block attention module (UCBAM), thereby enhancing the accuracy of power transformer fault diagnosis. Ding et al. [23] proposed a time-frequency transformer (TFT) model, designing a new tokenizer and encoder module to extract effective features from the time-frequency representation of vibration signals, and constructed an end-to-end fault diagnosis framework based on the TFT. However, these attention-based methods have limitations in multi-scale temporal feature extraction, primarily because they lack an effective time information encoding mechanism. They often rely on fixed attention mechanisms that cannot flexibly adapt to changes across different time scales, making it difficult to account for subtle differences between time scales when processing complex dynamic data. Additionally, these methods struggle to integrate complex interactions between high-order features, which are crucial in transformer fault diagnosis because they reveal deep nonlinear relationships within fault patterns. To address these limitations, this paper proposes the multi-scale temporal adaptive fusion network (MSTAFN). The main contributions are as follows:

- The temporal information encoding (TIE) module introduces learnable parameters and a dynamic modulation mechanism, allowing the encoding process to adjust frequency and phase based on specific fault data. This enables better capture of multi-scale dynamic relationships and effectively represents nonuniform periodicity and complex behaviors.

- The AHOHN module is designed to integrate temporal and data features using a hybrid attention mechanism to capture multi-scale variations. The HOFE module deepens feature interactions through nonlinear activation and higher-order operations. The AFR module is applied to dynamically adjust feature fusion, optimizing the integration process for different tasks and data.

- The MSTF module uses an adaptive fusion factor to adjust the contribution ratio between BiGRU and multi-scale memory residual network (MSMRN) features, ensuring optimal integration of temporal modeling and multi-scale feature extraction. Experimental results show that the MSTAFN method outperforms comparison models across various metrics, demonstrating its effectiveness and superiority.

2 Related Work

2.1 Diagnosis Method Based on Physical Principles

Pramanik et al. [3] proposed an innovative FRA method for detecting transformer winding faults, using two sinusoidal excitations with equal amplitude but opposite polarity. This method compares the impedance response differences between the two ends of the transformer, without needing a healthy reference. Palani et al. [4] introduced a fault detection method that combines real-time voltage, current, and power comparisons with high-resolution sampling, allowing accurate detection of winding faults during short-circuit tests without reference data. Christian et al. [5] expanded detection methods using three transfer function-based approaches, offering new ways to detect mechanical displacement in transformers. Similarly, Mortazavian et al. [6] proposed a fault detection method based on SAR imaging and Kirchhoff migration algorithms, effectively detecting mechanical deformations in high-voltage transformer windings.

While these methods can identify faults without healthy data, their applicability is limited by specific experimental setups and data conditions. Additionally, factors like winding design and aging may affect detection accuracy across different transformers or operating conditions.

2.2 Diagnosis Methods Based on Machine Learning

Zhang et al. [14] introduced a wide ResNet method with incremental learning capability, allowing the model to continue learning and optimizing as new fault data becomes available, which enhances its adaptability. Xing et al. [15] developed a multi-modal deep residual filtering network (DRFN) for online fault diagnosis of T-type three-level inverters, which improves fault recognition accuracy by integrating multi-modal information. Xiong et al. [16] designed an adaptive denoising residual network (AD-ResNet), which improves fault diagnosis accuracy for rudder actuators by removing noise interference. Gituku et al. [17] applied refined composite multi-scale fuzzy entropy (RCMFE) for feature extraction and employed a self-organizing fuzzy (SOF) classifier for cross-domain fault diagnosis of bearings.

While these methods have made significant contributions to fault diagnosis in various devices, they often fail to autonomously focus on key information directly related to fault recognition. Instead, they are more likely to be influenced by irrelevant or noisy data, which can reduce diagnostic accuracy and generalization capability.

2.3 Hybrid Diagnostic Model Based on Deep Learning

In recent years, CNNs have driven the development of fault diagnosis methods based on image processing. Liu et al. [18] proposed a motor fault diagnosis method based on a multi-scale kernel residual CNN, enhancing fault sensitivity. Li et al. [19] converted vibration signals into 2D images and applied semi-supervised learning for fault diagnosis in rotating machinery. Wang et al. [25] used a symmetrized dot pattern (SDP) to generate images for bearing fault classification, demonstrating the effectiveness of image-based approaches. Hong et al. [26] applied a CNN to vibration images for transformer fault diagnosis.

Researchers have further improved diagnosis accuracy by integrating attention mechanisms. Zhou et al. [22] proposed a FRAD model, using a Gramian-guided filtering module to fuse vibration signal images with a deep ResNet for transformer fault detection. Ding et al. [23] introduced a TFT model to extract features from vibration signals' time-frequency representation, creating an end-to-end diagnosis framework. Gangtao et al. [24] combined 1D-CNN and channel attention mechanisms for transformer fault detection and used generative adversarial networks (GANs) to address data limitations, improving performance and generalization.

These methods have significantly advanced fault diagnosis, especially by combining image processing with deep learning and attention mechanisms, boosting accuracy and adaptability to complex fault patterns.

3 Methods

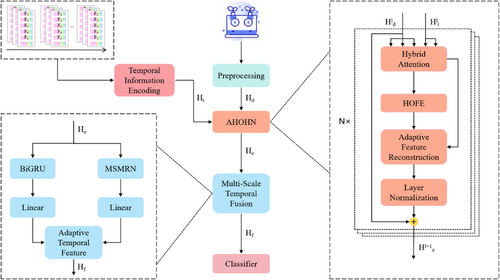

The MSTAFN model framework is shown in Figure 1. First, the time position vector is obtained through the temporal information encoding (TIE) module. The transformer data features and the time position vector are then input into the AHOHN module, which uses hybrid attention to integrate temporal and data features, capturing correlations between time steps and features. The HOFE module captures complex nonlinear relationships to improve feature representation. Next, the adaptive feature reconstruction (AFR) module dynamically adjusts the fusion ratio based on feature importance, optimizing information integration. Residual connections retain the original features and enhance information flow. The data then passes through the MSTF module, where a BiGRU captures bidirectional temporal dependencies and the MSMRN extracts multi-scale global features. The adaptive temporal fusion (ATF) step then combines the outputs of the BiGRU and MSMRN, weighting the contribution of each to support accurate predictions. Finally, the results are output through the classifier.

3.1 Temporal Information Encoding

Traditional time position encoding methods use fixed frequency distributions, making it difficult to adapt to specific tasks or data characteristics, especially in transformer fault diagnosis. This limits their ability to capture complex nonlinear relationships and multi-scale variations in nonstationary time series. To overcome this, we propose an adaptive trigonometric position encoding method. By introducing learnable parameters and a dynamic modulation mechanism, the model can adjust frequency and phase to better capture complex temporal features. The trigonometric functions provide nonlinearity, and the learnable parameters help the model adapt to multi-scale dynamic relationships, improving fault diagnosis accuracy and robustness. The calculation formula is shown in Equation (1)

A learnable parameter controls the scaling and adjustment applied to the input. This modulation function smoothly adapts to the nonlinear growth characteristics of high-dimensional data while enabling multi-scale normalization. It prevents the excessive growth of high-dimensional features, ensuring numerical stability and enhancing the robustness of the model.
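
Equation (1) is not reproduced in this text, so the following is only a minimal sketch of the idea rather than the authors' formula. It assumes a sine/cosine encoding whose per-dimension frequency and phase stand in for learnable parameters, and a logarithmic modulation of the time index scaled by a hypothetical parameter `alpha`. The names `adaptive_time_encoding`, `freqs`, `phases`, and `alpha` are illustrative.

```python
import math

def adaptive_time_encoding(t, freqs, phases, alpha=1.0):
    """Encode a non-negative time index t as sin/cos pairs.

    freqs and phases stand in for learnable parameters (assumption);
    alpha scales a log modulation that damps growth for large t (assumption).
    """
    m = math.log1p(alpha * t)  # compressive modulation of the raw time index
    enc = []
    for w, phi in zip(freqs, phases):
        enc.append(math.sin(w * m + phi))
        enc.append(math.cos(w * m + phi))
    return enc

# Three frequency/phase pairs give a six-dimensional time position vector.
freqs = [1.0, 0.1, 0.01]
phases = [0.0, 0.5, 1.0]
vec = adaptive_time_encoding(12, freqs, phases, alpha=0.5)
```

In a trained model, `freqs`, `phases`, and `alpha` would be updated by gradient descent; here they are fixed values chosen only to make the example concrete.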

3.2 Adaptive High-Order Hybrid Network

3.2.1 Hybrid Attention

The query, key, and value matrices are derived from the data features, while a second query and key are computed from the time position vectors; the attention scores are scaled by the feature dimension. After the data-based and time-based scores are summed, the hybrid attention mechanism integrates both the time position and data feature dependencies, capturing dynamic relationships while incorporating both background dynamics and key patterns. Combining the data feature vectors under these scores generates a unified feature representation, providing high-quality input for subsequent tasks and enhancing the model's expressive power.
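
As a rough illustration of summing data-based and time-based attention scores, the sketch below assumes standard scaled dot-product attention in which the two score matrices are added before the softmax. The function and argument names (`hybrid_attention`, `q`, `k`, `v`, `q_t`, `k_t`) are hypothetical, and the exact formulation in the paper may differ.

```python
import math

def matmul_t(a, b):
    """Return a @ b^T for row-major lists of lists."""
    return [[sum(x * y for x, y in zip(ra, rb)) for rb in b] for ra in a]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(val - m) for val in row]
    total = sum(exps)
    return [e / total for e in exps]

def hybrid_attention(q, k, v, q_t, k_t):
    """Attention whose scores are the sum of data and time-position scores."""
    d = len(k[0])                     # feature dimension used for scaling
    data_scores = matmul_t(q, k)      # from data features
    time_scores = matmul_t(q_t, k_t)  # from time position vectors
    out = []
    for ds_row, ts_row in zip(data_scores, time_scores):
        weights = softmax([(ds + ts) / math.sqrt(d)
                           for ds, ts in zip(ds_row, ts_row)])
        out.append([sum(w * row[c] for w, row in zip(weights, v))
                    for c in range(len(v[0]))])
    return out

# One query over two time steps; zero queries give uniform attention.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
uniform = hybrid_attention([[0.0, 0.0]], keys, values, [[0.0, 0.0]], keys)
```

With all-zero queries both score matrices vanish, so the output is the plain average of the value rows; nonzero time queries shift the weights toward time steps whose position encodings match.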

3.2.2 High-Order Feature Enhancement

Here, the transformation is parameterized by a learnable weight vector and a bias vector, and ReLU is applied as the activation function. A tunable hyperparameter controls the contribution of the higher-order feature interaction to the final feature representation.
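
The HOFE equation is not reproduced in this text. One way such a term could look, shown purely as an assumption, is a ReLU-gated second-order interaction added to the input and scaled by the high-order coefficient. The name `lam` and the element-wise form are hypothetical; the default of 0.45 is the value reported in the experimental setup.

```python
def relu(z):
    return max(0.0, z)

def high_order_enhance(x, w, b, lam=0.45):
    """Add a scaled, ReLU-gated second-order term to each feature.

    x: input features; w, b: weight and bias vectors (learnable in practice);
    lam: contribution of the high-order interaction (assumed form).
    """
    gated = [relu(wi * xi + bi) for xi, wi, bi in zip(x, w, b)]
    return [xi + lam * gi * xi for xi, gi in zip(x, gated)]

enhanced = high_order_enhance([1.0, -2.0], [1.0, 1.0], [0.0, 0.0], lam=0.5)
```

Setting `lam` to zero recovers the original features, which matches the description of the coefficient as a dial between first-order and high-order information.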

3.2.3 Adaptive Feature Reconstruction

The two fusion weights, one for the high-order features and one for the original features, are dynamically determined from the features being fused, allowing flexible adjustment based on task requirements. Each weight is produced by a learnable weight vector followed by an activation function. Specifically, when fault patterns exhibit clear feature interactions, such as the complex coupling between dissolved gas concentration, temperature, and current, the weight on the high-order features increases, emphasizing their role. This helps the model capture these intricate interactions more accurately. In contrast, in simpler fault scenarios, such as those characterized solely by an increase in gas concentration, the weight on the high-order features decreases, highlighting the importance of the original features and preserving essential first-order information to avoid unnecessary complexity.
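
A gating scheme of this kind can be sketched as follows. This is an illustrative assumption, not the paper's equation: a sigmoid gate `g` is computed from both feature sets, and the two fusion weights are taken to be `g` and `1 - g`. The names `adaptive_reconstruct`, `w_x`, and `w_h` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def adaptive_reconstruct(x, h, w_x, w_h):
    """Blend original features x and high-order features h with a learned gate.

    w_x, w_h: weight vectors (learnable in practice) that score each input.
    Returns the gate value and the fused features.
    """
    score = sum(a * b for a, b in zip(w_x, x)) + sum(a * b for a, b in zip(w_h, h))
    g = sigmoid(score)  # weight on high-order features; 1 - g on originals
    fused = [g * hi + (1.0 - g) * xi for xi, hi in zip(x, h)]
    return g, fused

gate, fused = adaptive_reconstruct([1.0, 3.0], [3.0, 5.0], [0.0, 0.0], [0.0, 0.0])
```

With zero weights the gate sits at 0.5 and the output is the simple average; training would push the gate up for strongly coupled fault patterns and down for simple ones.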

3.3 Multi-Scale Temporal Feature Fusion

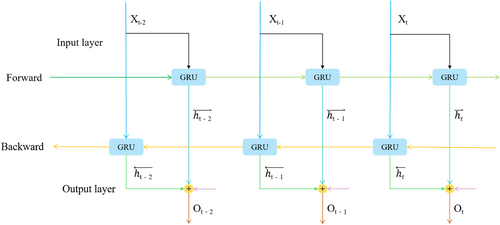

3.3.1 BiGRU Model

The network framework of the BiGRU is shown in Figure 2. In transformer fault diagnosis, after the AHOHN has processed the data, complex feature extraction and nonlinear relationships have been modeled. However, these features still lack a comprehensive capture of temporal information. To address this issue, the BiGRU model is employed to process both forward and backward temporal information, allowing the model to fully exploit bidirectional dependencies within the feature sequence. This compensates for the important contextual information that might be missed in a unidirectional network. Specifically, the BiGRU can capture the dynamic changes and long-term dependencies of fault signals, enhancing the model's ability to model temporal features. In scenarios where the transformer undergoes gradual aging or when fault characteristics exhibit periodic fluctuations, the BiGRU can more accurately identify fault patterns.

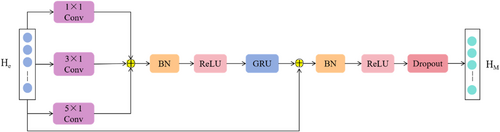

3.3.2 MSMRN Model

The MSMRN models data from different time windows through a multi-scale mechanism, enabling the capture of relationships between short-term local features and long-term global trends. At the same time, the ResNet effectively mitigates the vanishing gradient problem through skip connections, ensuring the training stability of deep networks and enhancing the model's ability to express nonlinear features. The structure of the MSMRN is shown in Figure 3.

Specifically, the MSMRN preserves key fault features at different time scales, enhancing its ability to recognize transformer fault patterns. It captures multilevel variations in fault signals during transformer operation, such as the coupling of short-term fluctuations with long-term trends. This improves the accuracy and robustness of fault diagnosis, providing more comprehensive support for transformer condition assessment and fault prediction.
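
The multi-scale-plus-residual idea can be sketched with plain 1D convolutions. The kernel widths 1, 3, and 5 follow the experimental setup; everything else is an assumption made for illustration, including the fixed averaging kernels (learned in a real network), the mean over branches, and the function names. The memory component of the MSMRN is not modeled here.

```python
def conv1d_same(x, kernel):
    """Sliding-window weighted sum with zero padding; output length equals len(x)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(kj * padded[i + j] for j, kj in enumerate(kernel))
            for i in range(len(x))]

def multi_scale_residual(x, kernels):
    """Average the branch outputs at each scale, then add the skip connection."""
    branches = [conv1d_same(x, k) for k in kernels]
    fused = [sum(vals) / len(branches) for vals in zip(*branches)]
    return [xi + fi for xi, fi in zip(x, fused)]

# Averaging kernels of width 1, 3, and 5 (illustrative, not learned).
kernels = [[1.0], [1 / 3] * 3, [0.2] * 5]
out = multi_scale_residual([1.0] * 7, kernels)
```

The narrow kernel passes short-term detail through unchanged, the wider kernels smooth over longer windows, and the skip connection keeps the original signal available to later layers.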

3.3.3 Adaptive Temporal Fusion

The BiGRU and MSMRN modules each have unique advantages in transformer fault diagnosis. BiGRU excels at extracting temporal features of transformer fault signals, capturing time dependencies and dynamic variations in the data. On the other hand, the MSMRN model focuses on multi-scale feature modeling, enabling it to capture both short-term local variations and long-term global trends in the signal. While both modules offer distinct strengths, using them individually may lead to the neglect of certain important features, limiting the comprehensive representation of fault characteristics.
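
The fusion equation for this step is not reproduced in this text. A minimal sketch of an adaptive fusion factor, assuming a single learnable scalar `theta` squashed by a sigmoid into a ratio between the two branches, might look like this; the names and the scalar form are hypothetical.

```python
import math

def adaptive_temporal_fusion(h_gru, h_msmrn, theta):
    """Convex combination of BiGRU and MSMRN features.

    theta: unconstrained scalar (learnable in practice); sigmoid(theta) is
    the share given to the BiGRU branch (assumed form).
    """
    alpha = 1.0 / (1.0 + math.exp(-theta))
    return [alpha * a + (1.0 - alpha) * b for a, b in zip(h_gru, h_msmrn)]

balanced = adaptive_temporal_fusion([2.0, 4.0], [0.0, 0.0], theta=0.0)
```

Unlike plain concatenation followed by a linear layer, this form makes the contribution ratio explicit and keeps the fused vector the same size as each branch output.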

3.4 Classifier

4 Experiments and Result Analysis

4.1 Dataset

This study conducts experimental analysis based on a 220 kV voltage dataset from the State Grid Corporation of China and previous literature [27, 28]. The dataset includes multiple features, such as the concentrations of dissolved gases (H2, CH4, C2H2, C2H4, C2H6). In the data preprocessing stage, we performed data cleaning and retained 718 valid samples. These samples represent seven distinct states: Normal, Low-Temperature Overheating (LT), Medium-Temperature Overheating (MT), High-Temperature Overheating (HT), Partial Discharge (PD), Low Energy Discharge (LD), and High Energy Discharge (HD).

To train and evaluate the model performance, we first sorted the dataset chronologically and then split it into a training set and a testing set in an 8:2 ratio, with 80% of the samples used for training and 20% for testing. The sample distribution across different fault states is shown in Table 1. By splitting the dataset in this time-sequenced manner, we ensured data independence, avoiding future data leakage, and enabling a comprehensive evaluation of the model's generalization ability and predictive performance across various fault states.
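
A chronological 8:2 split can be sketched as below. The record layout with a `timestamp` field is an assumption made for illustration. A single global cut of 718 samples would give 574 training and 144 testing samples, whereas the counts in Table 1 sum to 573 and 145, so the authors' exact procedure may differ slightly (for example, a split applied per class).

```python
def chronological_split(samples, train_ratio=0.8):
    """Sort by time, then cut once so every test sample is later than training."""
    ordered = sorted(samples, key=lambda s: s["timestamp"])
    cut = int(len(ordered) * train_ratio)
    return ordered[:cut], ordered[cut:]

# Ten toy records with shuffled timestamps (hypothetical data).
samples = [{"timestamp": t, "label": "HT"} for t in [5, 3, 9, 1, 7, 2, 8, 0, 6, 4]]
train, test = chronological_split(samples)
```

Because the cut happens after sorting, no test record precedes a training record, which is the property that guards against leakage of future data.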

| Status | All samples | Training samples | Testing samples |
|---|---|---|---|
| Normal | 52 | 40 | 12 |
| LT | 99 | 80 | 19 |
| MT | 73 | 58 | 15 |
| HT | 168 | 134 | 34 |
| PD | 105 | 84 | 21 |
| LD | 42 | 34 | 8 |
| HD | 179 | 143 | 36 |

4.2 Experimental Setup

The MSTAFN model was implemented using the PyTorch framework and trained on an NVIDIA GeForce GTX 1080 Ti GPU. The model parameters were determined through multiple comparative experiments. During training, the batch size was set to 64, and the model was trained for a total of 100 epochs. The Adam optimizer was used with an initial learning rate of 0.001. The convolutional kernels of the MSMRN were set as [1 × 1, 3 × 1, 5 × 1], and the weight parameter for high-order features was set to 0.45.

4.3 Loss Function

4.4 Evaluation Metrics

4.5 Results and Analysis

4.5.1 Comparative Experiments

The performance metrics of the proposed MSTAFN model and the comparison models on the transformer fault dataset are shown in Table 2. The MSTAFN model achieved the best performance across all evaluation metrics, with accuracy (A), precision (P), recall (R), and F1 values of 81.29%, 84.97%, 84.67%, and 84.82%, respectively. Compared to the best-performing baseline, GCN, MSTAFN improved A, P, R, and F1 by 1.98, 3.56, 4.00, and 3.78 percentage points, respectively. These improvements are attributed to the design of MSTAFN and its comprehensive modeling of deep features. Traditional models often fail to extract complex features or model relationships between samples, leading to poor performance on complex data. For example, XGBoost has an accuracy of only 64.14%, the lowest among all models, while KNN, though slightly better than the other traditional methods, still shows a significant gap compared to deep learning models. This highlights the limitations of traditional methods in dynamic feature modeling and capturing complex patterns. CNNs can extract local features through convolution operations but fail to model global relationships between samples. Siamese networks improve performance by modeling the similarity between pairs of samples, but they still have limited capability in capturing global feature interactions. In contrast, GCNs model the global relationships between samples using adjacency vectors, significantly improving the understanding of the data structure and reaching an accuracy of 79.31%. However, GCN still struggles to capture the complex dynamic patterns in the data, showing limitations in dynamic temporal feature modeling.

| Model | A (%) | P (%) | R (%) | F1 (%) |
|---|---|---|---|---|
| MLP [29] | 67.21 | 70.17 | 69.67 | 69.92 |
| XGBoost [30] | 64.14 | 67.59 | 66.19 | 66.88 |
| SVM [31] | 68.79 | 71.54 | 69.46 | 70.48 |
| KNN [32] | 70.04 | 72.46 | 71.59 | 72.02 |
| CNN [33] | 75.63 | 78.52 | 77.27 | 77.89 |
| Siamese Network [34] | 77.13 | 79.53 | 78.34 | 78.93 |
| GCN [20] | 79.31 | 81.41 | 80.67 | 81.04 |
| MSTAFN | 81.29 | 84.97 | 84.67 | 84.82 |
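
The figures in Table 2 can be cross-checked with a few lines of arithmetic. The sketch below recomputes the gains of MSTAFN over GCN as differences in percentage points and checks that the reported F1 values for these two rows agree with the harmonic mean of the reported P and R. The variable and function names are illustrative.

```python
# (A, P, R, F1) in percent, copied from Table 2.
results = {
    "GCN": (79.31, 81.41, 80.67, 81.04),
    "MSTAFN": (81.29, 84.97, 84.67, 84.82),
}

def f1(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)

# Gains of MSTAFN over GCN in percentage points, rounded to two decimals.
gains = [round(m - g, 2) for m, g in zip(results["MSTAFN"], results["GCN"])]

# F1 recomputed from the reported P and R for each model.
recomputed_f1 = {name: round(f1(vals[1], vals[2]), 2) for name, vals in results.items()}
```

The recomputed F1 values round to the reported ones, which suggests that F1 was derived from the aggregated precision and recall rather than averaged per class, although the text does not state this.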

MSTAFN addresses these challenges through its design. First, the TIE module uses learnable parameters and a dynamic modulation mechanism to capture complex temporal features, addressing the limitations of other models in temporal dynamics modeling. Unlike CNN and GCN, MSTAFN can flexibly capture multi-scale temporal features, compensating for GCN's shortcomings in capturing dynamic patterns. Second, the AHOHN module captures complex nonlinear relationships through deep interaction between time positions and data features, enhancing feature modeling capability. This makes MSTAFN superior to GCN and other methods in modeling higher-order relationships. Furthermore, the MSTF module, which combines BiGRU and MSMRN, captures multi-scale temporal information and global dependencies, further improving the model's adaptability to complex dynamic data. Finally, the AFR module dynamically adjusts the fusion ratio of different features, keeping the information integration process efficient and robust, which gives MSTAFN an advantage over other models in dynamic feature modeling. The combined effect of these modules enables MSTAFN to better model dynamic temporal features and high-order relationships in complex data.

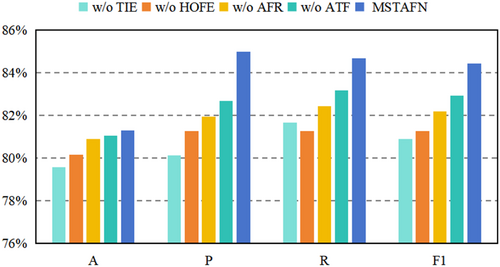

4.5.2 Ablation Study

- TIE: The temporal information encoding module was removed.

- HOFE: The high-order feature enhancement module was removed.

- AFR: The adaptive feature reconstruction was removed.

- ATF: The adaptive temporal fusion was replaced with the traditional concatenation and linear operations.

- In transformer fault diagnosis, the TIE module incorporates time position information through learnable parameters and dynamic modulation mechanisms, effectively capturing complex temporal features such as frequency and phase. This compensates for the limitations of traditional models in dynamic time modeling. Removing this module significantly weakens the model's ability to capture key temporal features, especially when dealing with dynamic changes in frequency and phase, thus reducing diagnostic accuracy and reliability.

- After integrating data features with temporal information, the HOFE module strengthens high-order interactions between features, enabling the capture of complex dynamic patterns and enhancing the model's ability to express nonlinear relationships. It significantly improves the model's adaptability and robustness in nonlinear fault scenarios, such as frequency drift and abrupt signal changes. Without the HOFE module, the model struggles to capture complex feature interactions, leading to reduced diagnostic accuracy for nonlinear fault patterns and instability in high-noise or complex scenarios, ultimately affecting overall fault diagnosis performance.

- The AFR module uses a dynamic weighting mechanism to balance high-order and original features, improving the model's adaptability and diagnostic accuracy across diverse fault scenarios. Without it, the model fails to adjust the feature fusion ratio, resulting in poor representation of key features in complex and simple scenarios and reducing overall diagnostic performance.

- The adaptive temporal fusion (ATF) module dynamically adjusts the contribution of the BiGRU features and the MSMRN features, handling the complex dynamic patterns in transformer faults. Compared to traditional concatenation and linear transformation, it captures nonlinear relationships and deep interactions in time-series data more effectively. By optimizing the feature fusion strategy, this module improves the model's accuracy, especially when dealing with varying fault patterns.

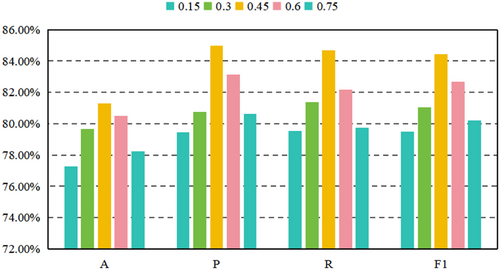

4.5.3 The Impact of Different High-Order Feature Coefficient Values on Classification Results

The experimental results for different values of the high-order feature coefficient are shown in Figure 5. When the coefficient is small, the model tends to focus more on extracting linear features, which weakens the contribution of higher-order interactive features. This limits the model's ability to capture complex time-series characteristics or nonlinear fault patterns, resulting in lower performance. As the coefficient increases, the model progressively incorporates more high-order interaction features, enhancing its ability to capture dynamic relationships between features, particularly the nonlinear feature associations present in transformer fault data. This leads to a significant improvement in diagnostic performance.

However, when the coefficient exceeds the optimal value of 0.45, the weight of high-order interaction features increases further, diminishing the contribution of linear features to the overall modeling. This can introduce excessively complex feature interactions or even noise, causing the model to lose its ability to represent global features. Additionally, too many high-order features may amplify irrelevant patterns in the transformer data, reducing the model's ability to identify key fault patterns. A balance between linear features and high-order interaction features is therefore essential for diagnostic tasks. At a coefficient of 0.45, the model strikes the best balance between global trend modeling and high-order feature representation, allowing it to capture complex fault patterns effectively and efficiently. This result underscores both the importance of tuning the coefficient and the role of well-designed high-order interactions in improving diagnostic performance.

4.5.4 The Impact of the Number of AHOHN Layers N on Classification Results

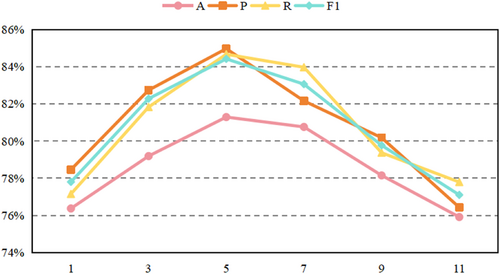

To further investigate the impact of the number of AHOHN layers on fault diagnosis, we conducted experiments with the number of layers N set to 1, 3, 5, 7, 9, and 11, as shown in Figure 6.

The results show that as N increases from 1 to 5, the model's performance gradually improves. This is because a shallower network can only capture basic local features and is insufficient for fully extracting complex temporal dynamics and nonlinear feature interactions. As the number of layers increases, the model benefits from the layered structure and hybrid attention mechanism, allowing it to better integrate temporal information and the correlations between features. The high-order feature enhancement module also captures the nonlinear relationships in the data more effectively, boosting the model's diagnostic capability.

However, when the number of layers exceeds 5, model performance starts to decline. This is primarily due to the high network complexity, which leads to feature redundancy and noise amplification, diluting the expression of key features. Additionally, the increased number of parameters complicates optimization, potentially causing the model to fall into local optima. A deeper network is also more prone to overfitting the detailed features of the training data, rather than general patterns, which reduces robustness on the test set. In summary, when N is set to 5, the model achieves the optimal balance between feature extraction capability and complexity. It can effectively capture the complex features in transformer fault data while avoiding issues such as feature redundancy and overfitting.

5 Conclusion

Transformer fault diagnosis is critical for the safe and stable operation of power systems, as it enables rapid and accurate fault type identification, guiding maintenance operations effectively. Traditional methods struggle with multi-scale temporal feature extraction and high-order feature representation, limiting their ability to handle complex dynamic data. To address this, we propose the MSTAFN. The MSTAFN model generates time-position vectors using a temporal information encoding module and then fuses these vectors with transformer data features through the AHOHN module, capturing temporal change characteristics. It also enhances high-order feature expression and captures complex nonlinear relationships between features, improving the model's ability to understand and model the data. The MSTF module dynamically adjusts the fusion ratio of multi-scale temporal features and global dependencies, ensuring adaptability to different tasks and data distributions. By integrating various feature information, MSTAFN achieves accurate transformer fault classification, significantly improving diagnostic accuracy. In the future, we plan to optimize the model for more complex environments, focusing on challenges such as missing data, noise, and real-time performance, while exploring its application in diagnosing faults in other power equipment to enhance the safety and efficiency of power systems.

Author Contributions

XuMing Liu: conceptualization. XiaoKun He: data curation. YongLin Li: methodology.

Ethics Statement

This research does not involve any human, animal, or plant experiments.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.