Advancing Glaucoma Diagnosis Through Multi-Scale Feature Extraction and Cross-Attention Mechanisms in Optical Coherence Tomography Images

ABSTRACT

Glaucoma is a major cause of irreversible vision loss, resulting from damage to the optic nerve, so early diagnosis is crucial. This study uses optical coherence tomography (OCT) images from the "Shahroud Eye Cohort Study" dataset, which is highly imbalanced, to diagnose glaucoma. To address this imbalance, a novel approach is proposed that combines weighted bagging ensemble learning with deep learning models and data augmentation. Specifically, the glaucoma data are expanded sixfold using data augmentation, the normal data are partitioned into five groups, the glaucoma samples are merged into each group, and an independent model is trained on each group. Beyond data balancing, the proposed method incorporates key architectural innovations, including multi-scale feature extraction, a cross-attention mechanism, and a Channel and Spatial Attention Module (CSAM), to improve feature extraction and focus on critical image regions. The proposed approach achieves an accuracy of 98.90% (95% confidence interval: 96.76% to 100%) for glaucoma detection. Compared with the ConvNeXtLarge model, a leading earlier method, our method improves accuracy by 2.2 percentage points while using far fewer parameters (1.68 million versus 197.8 million). These results could help ophthalmologists detect glaucoma early, enabling more effective treatment interventions.

1 Introduction

Glaucoma is a progressive optic neuropathy that damages the optic nerve and is associated with visual field impairment, leading to vision loss [1]. It is the second leading cause of permanent blindness worldwide [2]. The number of people aged 40 to 80 years with glaucoma was estimated at 64.3 million in 2013, projected to rise to 76 million in 2020 and to 111.8 million by 2040 [3].

Previous studies have identified several risk factors for developing glaucoma, including age, race, gender, intraocular pressure, diabetes, and a family history of the disease [4, 5]. Studies of populations aged 40 and above report a glaucoma prevalence ranging from 1.44% to 4%, and over 50% of glaucoma patients are unaware of their condition. In addition, the first phase of the Shahroud Eye Cohort Study (SHECS) found that older age and higher intraocular pressure were associated with a greater likelihood of developing glaucoma [6].

Retinal image analysis is used to identify glaucomatous optic neuropathy by assessing indicators such as a high cup-to-disc ratio (CDR), optic disc hemorrhage, and retinal nerve fiber layer (RNFL) defects [7]. More recently, advanced tools such as optical coherence tomography (OCT) have been developed to aid glaucoma diagnosis. OCT is a non-invasive, non-contact imaging technology that allows quantitative assessment of retinal structures, providing objective measurements of the cornea and the optic nerve head (ONH) [8]. Detecting RNFL thinning and ganglion cell loss in the early stages with OCT can facilitate early diagnosis of glaucoma [9]. Because RNFL thinning occurs even in the early stages of the disease, it is considered a sensitive indicator of glaucomatous damage. The macula, the area surrounding the fovea, contains the highest density of retinal ganglion cells (RGCs), and macular thickness decreases in eyes affected by glaucoma [10]. By examining the optic disc and the macular region, OCT can diagnose glaucoma with high sensitivity and specificity [11]. Diagnosing glaucoma requires measuring several OCT scan parameters, including disc topography, peripapillary RNFL thickness, RGC layer thickness [12] (including the inner plexiform layer) [13], and ganglion cell complex (GCC) thickness [14].

Accurate and early diagnosis of glaucoma remains a critical challenge in ophthalmology. A major obstacle for existing methods is dataset imbalance: glaucoma cases are scarce relative to normal cases, and little glaucoma data is available overall. Classifying such imbalanced data is a recognized problem, and various methods have been proposed to address it. A second important challenge is low accuracy and sensitivity in the early stages of the disease. Many traditional algorithms struggle to detect subtle changes in retinal structures and depend on manual feature extraction, a process that is both time-consuming and error-prone. Together, these problems make timely and accurate diagnosis of glaucoma difficult. Previous studies have applied various deep learning techniques to these issues, but they often generalize poorly because of the limited availability of glaucoma data and the complexity of retinal structural changes.

Deep learning models are increasingly used in medical image processing [15-17]. However, deep learning requires large amounts of data to minimize overfitting and achieve good performance [18-20], and large medical image datasets are difficult to obtain for rare diseases. Applying deep learning to rare diseases in medical images is therefore challenging, and a more effective classification strategy is needed for small datasets. For instance, glaucoma cases make up only 2.34% of the dataset in [21], illustrating how severely imbalanced such datasets can be.

This article addresses the challenges of highly imbalanced data and limited availability of glaucoma images through a novel, multi-faceted approach. In our dataset, normal images outnumber glaucoma images by more than 30:1. To reduce this imbalance, the glaucoma data were expanded sixfold using data augmentation, the normal data were divided into five groups, and the glaucoma data were added to each group, enabling five separate models to be trained. Alongside this data strategy, the proposed method introduces three key innovations. First, multi-scale feature extraction captures information at different resolutions, improving the model's ability to detect both localized and global patterns. Second, a cross-attention mechanism learns relationships between features at different scales, refining contextual understanding. Third, the CSAM improves feature selection by focusing on the channels and regions most relevant to glaucoma. Combined with a weighted ensemble learning technique that integrates the individual models, these innovations substantially improve diagnostic accuracy. Beyond supporting timely and accurate glaucoma diagnosis, the approach provides a robust framework for diagnosing other rare diseases from small, imbalanced datasets.

1.1 Contributions of This Study

- Data augmentation and partitioning: The dataset imbalance, with more than 30 normal samples per glaucoma sample, is addressed through a structured augmentation methodology and systematic dataset partitioning.

- Multi-scale feature extraction: A novel approach that effectively captures both localized and global retinal changes.

- Cross-attention mechanism: Improved contextual understanding across different feature scales.

- CSAM: Enhanced feature selection focused on glaucoma-relevant patterns.

- Weighted ensemble learning: A robust classification strategy that integrates multiple trained models for improved performance.

The remainder of this paper is organized as follows. Section 2 explains the proposed method, Section 3 presents the results, and Sections 4 and 5 provide the discussion and conclusions.

2 Proposed Method

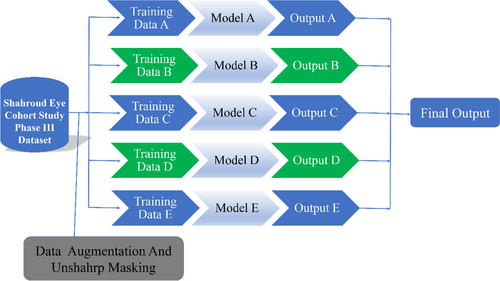

In this study, we addressed the challenges of limited glaucoma image availability and significant class imbalance between normal and glaucoma (abnormal) images. Data augmentation techniques, such as flips and scaling, were applied to increase the number of glaucoma images. Despite this, the imbalance persisted. To mitigate this, normal data were partitioned into five folds, and all augmented glaucoma data were included in each fold. This enabled the training of five independent models using a convolutional neural network (CNN), and a weighted voting mechanism was employed for final classification, forming an ensemble learning framework.

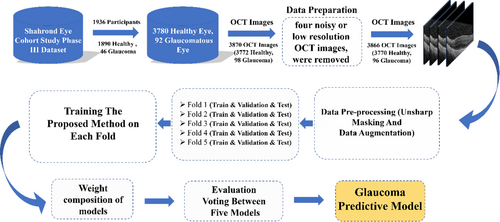

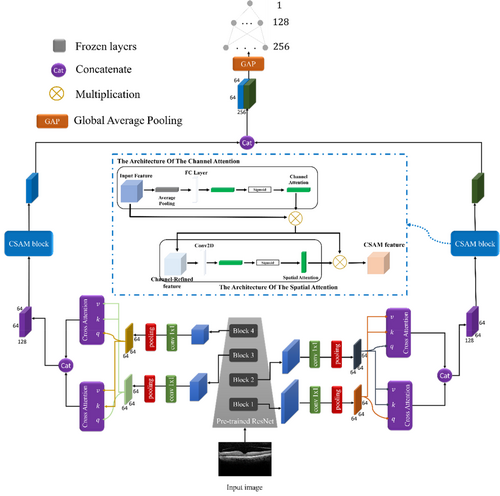

To further enhance the model's performance and feature extraction capabilities, we integrated key components into the architecture: multi-scale feature extraction, a cross-attention mechanism, and the CSAM. These components were designed to capture features at multiple resolutions, refine contextual relationships, and focus on critical image regions, significantly improving the model's accuracy and interpretability [22]. The proposed method consists of two main stages: training and testing. Figure 1 illustrates the major steps in our approach, which are detailed in the following sections.

2.1 Dataset

In this study, data from the third phase of the SHECS were used [23]. The SHECS, initiated in 2009, aimed to identify visual disorders and eye diseases in Shahroud County, northeast Iran. In the third phase, OCT imaging was performed with a Heidelberg Spectralis OCT device for 2660 participants. The ophthalmologists conducting the examinations in this phase considered clinical evaluations, participants' medical histories, medications taken, and the results of fundus photography and perimetry, and on this basis definitively diagnosed glaucoma in 46 participants. A total of 1936 individuals were included in the study, contributing 3780 healthy eyes and 92 glaucomatous eyes. Among the individuals with OCT images, 1890 had an intraocular pressure (IOP) below 20 mmHg and were not taking anti-glaucoma medication; they were categorized as non-glaucoma participants. After the OCT images were reviewed and four images were excluded because of noise or low resolution, 3770 healthy OCT images and 96 glaucomatous OCT images remained. The number of OCT images differs from the number of eyes because more than one OCT image was acquired for some eyes, while no OCT image was obtained for others. In this dataset, 60.2% of participants were female, and the mean age was 51 years. Images were collected with participants' prior consent, following the ethical principles and standards of the Declaration of Helsinki. All participants were selected according to specific criteria and clinical findings determined by specialists during examinations, and all images in the dataset were annotated by experienced ophthalmologists.

2.2 Data Preparation

OCT images can be affected by several types of noise:

- Electronic noise: Arises from random fluctuations of electrons within the OCT imaging system or from electrical interference in the environment.

- Reflective noise: Arises from imperfect or inadequate reflection from the various tissues inside the eye.

- Thermal noise: Arises from the thermal motion of molecules and atoms within the OCT device or its environment.

- Motion noise: Occurs when the OCT device or the patient's eye moves unintentionally during imaging.

- Noise due to areas of reduced transparency: May occur when regions of the eye with inadequate transparency, or regions with inadequate image quality, are encountered during imaging.

2.3 Data Pre-Processing

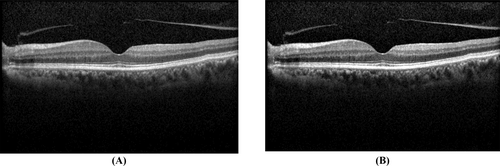

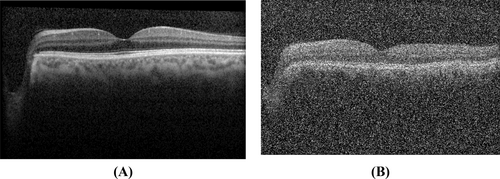

After data preparation, pre-processing was performed to enhance image quality and improve model performance. The pre-processing pipeline consists of two main steps: unsharp masking for image enhancement and data augmentation to address class imbalance. Unsharp masking was applied to all images, whereas augmentation was applied to the glaucoma training images. The pipeline is illustrated in Figure 2.

Data Augmentation

Data augmentation artificially expands the training dataset by applying various modifications to the original images. For OCT imaging, this improves the performance and generalization of deep learning models trained on OCT images. Common data augmentation techniques in OCT image analysis include:

- Rotation: Rotating the image by a specified angle to simulate different scanning orientations.

- Flipping: Mirroring the image horizontally or vertically.

- Scaling: Resizing the image to simulate different magnification levels.

- Translation: Shifting the image horizontally or vertically to simulate different scan positions.

- Adding noise: Adding random noise to the image to make the model more robust to noise in real-world conditions.
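The operations listed above can be sketched in plain Python on a grayscale image stored as nested lists of intensities. This is a minimal illustration under stated assumptions, not the authors' pipeline: the helper names, the one-pixel shift, the noise level, and the use of a 90-degree rotation as a stand-in for arbitrary-angle rotation are all hypothetical, and scaling is omitted because it requires interpolation. A production pipeline would normally use an image-processing library instead.

```python
import random

Image = list[list[float]]  # hypothetical layout: rows of pixel intensities


def flip_horizontal(img: Image) -> Image:
    """Mirror each row left-to-right."""
    return [row[::-1] for row in img]


def flip_vertical(img: Image) -> Image:
    """Mirror the image top-to-bottom."""
    return img[::-1]


def rotate90(img: Image) -> Image:
    """Rotate 90 degrees clockwise (simplified stand-in for arbitrary-angle rotation)."""
    return [list(row) for row in zip(*img[::-1])]


def translate(img: Image, dx: int, dy: int, fill: float = 0.0) -> Image:
    """Shift by dx columns and dy rows; vacated pixels are filled with `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y - dy, x - dx
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = img[src_y][src_x]
    return out


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise with standard deviation `sigma` to every pixel."""
    return [[p + rng.gauss(0.0, sigma) for p in row] for row in img]


def augment(img: Image, seed: int = 0) -> list[Image]:
    """Return several augmented copies of one image (hypothetical recipe and parameters)."""
    rng = random.Random(seed)
    return [
        flip_horizontal(img),
        flip_vertical(img),
        rotate90(img),
        translate(img, dx=1, dy=0),
        add_gaussian_noise(img, sigma=0.01, rng=rng),
    ]
```

Seeding the random generator makes the noisy copies reproducible, which helps when the same augmented set must be reused across several training groups.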

Unsharp masking for image enhancement

Unsharp masking is a widely used image enhancement technique that increases contrast and sharpness, making subtle retinal structures more distinguishable. This technique enhances OCT images by emphasizing fine details, which are crucial for detecting early-stage glaucoma.

As shown in Figure 4, unsharp masking visibly improves the clarity of OCT images. The figure presents examples of this technique applied to both normal and glaucoma images, highlighting the improved contrast and fine structures. This enhancement helps the deep learning model extract more relevant features for classification [24].
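Unsharp masking is commonly written as sharpened = original + amount * (original - blurred): subtracting a blurred copy isolates edges and fine detail, which are then added back with a chosen gain. The sketch below assumes intensities normalized to [0, 1] and uses a 3 x 3 mean filter in place of the Gaussian blur that is usually employed; the kernel, the `amount` value, and the function names are hypothetical, since the text does not report the parameters used.

```python
Image = list[list[float]]  # rows of intensities, assumed normalized to [0, 1]


def box_blur3(img: Image) -> Image:
    """3 x 3 mean filter with edge replication (simple stand-in for a Gaussian blur)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp coordinates to the border
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = total / 9.0
    return out


def unsharp_mask(img: Image, amount: float = 1.0, lo: float = 0.0, hi: float = 1.0) -> Image:
    """sharpened = original + amount * (original - blurred), clipped to [lo, hi]."""
    blurred = box_blur3(img)
    return [
        [min(max(p + amount * (p - b), lo), hi) for p, b in zip(row, brow)]
        for row, brow in zip(img, blurred)
    ]
```

Flat regions are left unchanged because the original and blurred values coincide there, while intensity steps are exaggerated on both sides, which is what makes thin retinal layer boundaries easier to distinguish.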

In the testing phase, after data pre-processing, the proposed model was used to classify the images into two classes: normal and glaucoma.

2.4 Proposed Model Architecture

Pre-trained ResNet backbone

The architecture uses a pre-trained ResNet model as the primary feature extractor. ResNet's residual connections mitigate the vanishing-gradient problem in deep networks, making it well suited to medical imaging tasks in which fine-grained structural details are critical. Using a pre-trained network lets the model benefit from transfer learning, reusing features learned from large-scale datasets.

In our design, the ResNet backbone is segmented into four hierarchical blocks, each progressively refining feature representations. Feature maps extracted from these blocks undergo a transformation via convolutions to reduce dimensionality while preserving spatial information. This dimensionality reduction is essential for computational efficiency and facilitates integration with subsequent multi-scale processing layers. The pre-trained ResNet enables the model to extract rich semantic features, capturing the subtle morphological changes in retinal layers indicative of glaucoma.

Multi-scale feature extraction

Given the varied spatial resolutions and structural features in OCT images, the architecture incorporates a multi-scale feature extraction strategy. This step ensures that the model captures details from both local (fine) and global (coarse) perspectives. Feature maps from different stages of the ResNet backbone are pooled and aligned to a uniform dimensionality using convolutional layers. This operation ensures compatibility among feature maps of varying resolutions while retaining essential spatial and contextual information.

The multi-scale design enables the model to effectively analyze small, localized RNFL thinning while simultaneously considering global structural changes, such as variations in ONH morphology. By combining information from different scales, the model builds a comprehensive understanding of glaucomatous patterns.
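One way to align feature maps from different backbone stages is to project each to a common channel count with a 1 x 1 convolution and then average-pool it to a common spatial size. The plain-Python sketch below assumes that order of operations, a [channels][height][width] layout, and hypothetical function names; the text does not specify the target size or the exact layer configuration. In PyTorch, the same steps would correspond to `torch.nn.Conv2d` with `kernel_size=1` and `torch.nn.AdaptiveAvgPool2d`.

```python
import math

FeatureMap = list[list[list[float]]]  # [channels][height][width]


def conv1x1(fmap: FeatureMap, weights: list[list[float]]) -> FeatureMap:
    """Pointwise (1 x 1) convolution mixing channels at each pixel; weights[out_ch][in_ch]."""
    height, width = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(wc * fmap[c][y][x] for c, wc in enumerate(w_out)) for x in range(width)]
         for y in range(height)]
        for w_out in weights
    ]


def adaptive_avg_pool(fmap: FeatureMap, out_h: int, out_w: int) -> FeatureMap:
    """Average each channel over an out_h x out_w grid of bins (adaptive-pooling bin edges)."""
    height, width = len(fmap[0]), len(fmap[0][0])
    pooled = []
    for ch in fmap:
        rows = []
        for i in range(out_h):
            y0, y1 = (i * height) // out_h, math.ceil((i + 1) * height / out_h)
            row = []
            for j in range(out_w):
                x0, x1 = (j * width) // out_w, math.ceil((j + 1) * width / out_w)
                cells = [ch[y][x] for y in range(y0, y1) for x in range(x0, x1)]
                row.append(sum(cells) / len(cells))
            rows.append(row)
        pooled.append(rows)
    return pooled


def align_scales(maps: list[FeatureMap], projections: list[list[list[float]]], size: int) -> list[FeatureMap]:
    """Project every stage to the same channel count, then pool it to a size x size grid."""
    return [adaptive_avg_pool(conv1x1(m, p), size, size) for m, p in zip(maps, projections)]
```

After alignment, every scale has the same shape, so the maps can be compared token by token in the cross-attention step or concatenated along the channel axis.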

Cross-attention mechanism

To further enhance the feature representation, the architecture integrates a cross-attention mechanism. This module operates on the multi-scale feature maps and learns dependencies across different resolutions. By incorporating cross-attention, the model can focus on complementary information derived from varying receptive fields.

Specifically, the cross-attention module employs query (q), key (k), and value (v) matrices to compute attention weights. These weights guide the model in identifying critical regions and relationships between feature maps, enabling the network to prioritize features that contribute most to distinguishing glaucomatous patterns. Unlike self-attention mechanisms, cross-attention promotes interaction between feature maps of different scales, enriching the spatial and contextual understanding of the input image. The outputs of the cross-attention module are subsequently concatenated, creating a unified and discriminative feature representation.
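Cross-attention is usually implemented as scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, with the queries computed from one input and the keys and values from another. In the sketch below, tokens from one scale (for example, flattened spatial positions of a fine feature map) attend to tokens from another scale. The projection matrices, token layout, single-head form, and function names are assumptions for illustration; the text does not give these details.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        m = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def cross_attention(x_query: Matrix, x_context: Matrix,
                    wq: Matrix, wk: Matrix, wv: Matrix) -> tuple[Matrix, Matrix]:
    """Single-head cross-attention between two scales.

    x_query:   [n_q][d_in] tokens from scale A (provide the queries)
    x_context: [n_kv][d_in] tokens from scale B (provide keys and values)
    Returns (output [n_q][d_v], attention weights [n_q][n_kv]).
    """
    q = matmul(x_query, wq)
    k = matmul(x_context, wk)
    v = matmul(x_context, wv)
    scale = 1.0 / math.sqrt(len(k[0]))
    scores = [[s * scale for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)  # each row sums to 1 over the context tokens
    return matmul(weights, v), weights
```

The output has one row per query token, so each position at scale A receives a weighted summary of scale B; with self-attention, the same input would supply queries, keys, and values.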

Channel and Spatial Attention Module (CSAM)

The CSAM is a pivotal component of the architecture, designed to enhance feature selectivity by focusing on informative channels and spatial regions. The CSAM module combines two complementary attention mechanisms:

Channel attention: The channel attention mechanism selectively emphasizes important channels within feature maps, highlighting features most relevant to the diagnosis of glaucoma. This process begins with global average pooling to generate a channel descriptor vector, summarizing the global context for each channel. This descriptor is passed through two fully connected layers with non-linear activation functions (ReLU and Sigmoid), resulting in a set of attention weights. These weights are applied to the feature maps through element-wise multiplication, amplifying significant channels while suppressing irrelevant ones.
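The channel attention described above follows the squeeze-and-excitation pattern: global average pooling, a reducing fully connected layer with ReLU, an expanding fully connected layer with Sigmoid, and channel-wise rescaling. This plain-Python sketch assumes a [channels][height][width] layout; the layer sizes (reduction ratio), the weights, and the function names are hypothetical placeholders for learned parameters.

```python
import math

FeatureMap = list[list[list[float]]]  # [channels][height][width]


def relu(v: float) -> float:
    return max(0.0, v)


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def dense(x: list[float], weights: list[list[float]], bias: list[float]) -> list[float]:
    """Fully connected layer with weights[out][in]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def channel_attention(fmap: FeatureMap, w1, b1, w2, b2) -> tuple[FeatureMap, list[float]]:
    # 1) Global average pooling: one descriptor value per channel
    desc = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # 2) FC (reduce) + ReLU, then FC (expand) + Sigmoid -> one weight in (0, 1) per channel
    hidden = [relu(v) for v in dense(desc, w1, b1)]
    att = [sigmoid(v) for v in dense(hidden, w2, b2)]
    # 3) Element-wise rescaling of every pixel in each channel by its weight
    scaled = [[[p * a for p in row] for row in ch] for ch, a in zip(fmap, att)]
    return scaled, att
```

Because the Sigmoid keeps each weight strictly between 0 and 1, channels are attenuated rather than removed outright, which keeps the module differentiable and stable to train.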

Spatial attention: The spatial attention mechanism identifies the most critical regions within the OCT images by generating a spatial descriptor map. This map is computed through a convolutional operation on the input feature maps, followed by activation layers (ReLU and Sigmoid). The spatial attention weights are then multiplied with the input feature maps, enabling the model to focus on regions exhibiting glaucomatous damage, such as localized RNFL thinning or ONH changes.
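A minimal sketch of the spatial attention step, assuming two 1 x 1 convolutions (ReLU after the first, Sigmoid after the second) that collapse the channels into a single attention map, which is then multiplied with every channel. The text does not state the kernel sizes or the number of intermediate channels, so these choices, like the function name, are assumptions.

```python
import math

FeatureMap = list[list[list[float]]]  # [channels][height][width]


def spatial_attention(fmap: FeatureMap, w1: list[list[float]],
                      w2: list[float]) -> tuple[FeatureMap, list[list[float]]]:
    """Pixel-wise attention map from two hypothetical 1 x 1 convolutions.

    w1[hidden][in_channels]: first 1 x 1 conv, followed by ReLU
    w2[hidden]: second 1 x 1 conv down to a single map, followed by Sigmoid
    """
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    att = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            pixel = [fmap[ch][y][x] for ch in range(c)]  # channel vector at (y, x)
            hidden = [max(0.0, sum(wi * p for wi, p in zip(row, pixel))) for row in w1]
            score = sum(wi * hv for wi, hv in zip(w2, hidden))
            att[y][x] = 1.0 / (1.0 + math.exp(-score))
    # Broadcast the single-channel map over all channels
    out = [[[fmap[ch][y][x] * att[y][x] for x in range(w)] for y in range(h)] for ch in range(c)]
    return out, att
```

Channel attention decides which feature maps matter, while this map decides where in the image they matter; applying both is what the CSAM combines.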

The CSAM module combines these two attention mechanisms to create a refined feature representation, effectively addressing the inherent complexity and variability in OCT images. By enhancing both channel-level and spatial-level feature discriminability, the CSAM block significantly improves the model's ability to detect subtle pathological changes.

Integration and classification

The outputs from the CSAM modules are concatenated and passed through fully connected layers to reduce the dimensionality of the feature representation. These layers are configured with dropout and batch normalization to mitigate overfitting and stabilize learning. The final classification layer employs a softmax activation function, outputting probabilities for the two classes (normal and glaucomatous). This classification stage ensures that the model effectively translates the learned feature representations into accurate predictions.

In the final stages of the network, before the dense layers, a Global Average Pooling (GAP) operator reduces the feature maps to a 256-dimensional vector. GAP computes the average of each feature map, collapsing its spatial dimensions into a single value per feature map. This substantially reduces the number of parameters and the computational cost, and it also lowers the risk of overfitting. GAP summarizes the most important global features across the entire image before they are passed to the fully connected layers for final classification.
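The GAP step and a final softmax layer can be sketched as follows. For brevity, a single linear layer stands in for the fully connected layers (with dropout and batch normalization) described earlier; the weights and function names are hypothetical. With 256 input feature maps, GAP yields the 256-dimensional vector mentioned above.

```python
import math


def global_average_pool(fmap: list[list[list[float]]]) -> list[float]:
    """[channels][height][width] -> [channels]: the mean of each feature map."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def softmax(z: list[float]) -> list[float]:
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def classify(fmap, weights: list[list[float]], bias: list[float]) -> list[float]:
    """GAP vector -> linear layer (weights[class][channel]) -> probabilities for (normal, glaucoma)."""
    pooled = global_average_pool(fmap)
    logits = [sum(w * p for w, p in zip(row, pooled)) + b for row, b in zip(weights, bias)]
    return softmax(logits)
```

The number of weights in the classifier depends only on the channel count (256 per class here), not on the spatial size of the feature maps, which is where the parameter savings of GAP come from.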

By integrating the pre-trained ResNet backbone, multi-scale processing, cross-attention mechanisms, and CSAM modules, the proposed architecture is well suited to the analysis of OCT images. Beyond robust feature extraction, the attention mechanisms highlight critical regions, which can improve interpretability by helping clinicians concentrate on those regions. The proposed architecture performs strongly in glaucoma diagnosis, addressing significant challenges such as feature variability, data imbalance, and the subtlety of pathological changes within retinal structures.

2.5 Final Model

Class imbalance in a binary classifier refers to unequal numbers of samples in the two classes: the minority class has fewer samples, and the majority class has more. Such imbalance can arise for various reasons, including the nature of the data, the prevalence of each class in the study population, and errors in data collection. The class distribution in our dataset was highly imbalanced, with glaucoma-positive cases forming the minority class and glaucoma-negative cases the majority class. According to the literature, classifiers trained on imbalanced datasets tend to achieve high accuracy on the majority class but struggle to achieve high accuracy on the minority class [25]. In this study, data augmentation and bagging ensemble learning were employed to address these challenges [26].

To produce the final model, the images in the database were split into 91 test images and 3775 training images. The normal data were divided into five folds, and all glaucoma data (after data augmentation) were included in each fold, resulting in five folds of data. A CNN-based model was then built for each fold, yielding five independent models. Finally, an ensemble learning technique based on a weighted voting mechanism was used to determine the class of each data point.

We utilized one-way analysis of variance (ANOVA), a well-known statistical analysis tool, to investigate the differences in means among different groups.
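The text does not state which groups were compared. As an illustration only, the one-way ANOVA F statistic is the ratio of the between-group mean square to the within-group mean square; the function name and the data in the check below are toy values, not results from this study. The p-value follows from the F distribution with (k - 1, n - k) degrees of freedom, which SciPy provides through `scipy.stats.f_oneway`.

```python
def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F statistic, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)                      # number of groups
    n = sum(len(g) for g in groups)      # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their own group mean
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

A large F indicates that the group means differ by more than the within-group variability would explain.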

2.6 Hyper Parameter Tuning

The parameter tuning process in the proposed model was conducted systematically to optimize its performance. Key hyperparameters such as learning rate, batch size, number of epochs, and the architecture-specific parameters of the attention modules were fine-tuned using a grid search approach. This process was performed on a validation dataset to identify the optimal configuration that minimized the loss and maximized the classification accuracy.

- Learning rate: The learning rate was tested within the range of 0.0001 to 0.01, with the optimal value set at 0.001.

- Batch size: A batch size of 32 was selected after experimenting with values ranging from 16 to 64, balancing training stability and computational efficiency.

- Number of epochs: The model achieved convergence within 50 epochs, monitored using early stopping based on validation performance.

- CSAM parameters: The weights of the channel and spatial attention modules were initialized with Xavier initialization and optimized during training with the Adam optimizer.
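The grid search over learning rate and batch size can be sketched with `itertools.product`. The `evaluate` callback, which would train a model with a given configuration and return its validation accuracy, is hypothetical; the ranges come from the text, but the intermediate grid points are assumptions. Epochs are not searched here because the text controls them with early stopping.

```python
import itertools
from typing import Callable


def grid_search(evaluate: Callable[[dict], float], grid: dict[str, list]) -> tuple[dict, float]:
    """Try every combination in `grid` and return the configuration with the highest score."""
    names = list(grid)
    best_config, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)  # hypothetical: train, then score on the validation set
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Ranges from the text; the exact grid points in between are assumptions
GRID = {
    "learning_rate": [0.0001, 0.001, 0.01],
    "batch_size": [16, 32, 64],
}
```

The number of training runs is the product of the grid sizes (nine here), which is why grid search is usually restricted to a few hyperparameters.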

2.7 Performance Metrics

Precision evaluates a binary classification model by computing the ratio of true positive predictions to all positive predictions made by the model.

Recall, or true positive rate (TPR), quantifies the model's ability to identify positive instances, that is, the fraction of actual positive cases that are correctly detected. It is particularly important in disease detection, where identifying all positive cases is essential.

The F1-score combines precision and recall into a single number, their harmonic mean, thereby offering a unified measure of performance. It is particularly useful when precision and recall must be balanced, since either metric alone may not give a complete picture of the model's effectiveness.

The true negative rate (TNR), or specificity, quantifies the model's ability to correctly identify negative instances and thus indicates how well the model avoids false positive detections.

Balanced accuracy is the average of the per-class accuracies; for binary classification, it is the mean of the TPR and the TNR. It is especially useful when the data are imbalanced, that is, when the number of samples differs substantially between classes.

The Matthews correlation coefficient (MCC) evaluates a binary classification model by taking into account all four components of the confusion matrix: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). MCC is also particularly useful for imbalanced datasets, in which the number of instances in the two classes differs significantly.
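For reference, the standard definitions of these metrics in terms of the confusion-matrix counts, with glaucoma as the positive class, are:

$$
\begin{aligned}
\text{Precision} &= \frac{TP}{TP+FP}, \qquad
\text{Recall (TPR)} = \frac{TP}{TP+FN}, \qquad
\text{TNR} = \frac{TN}{TN+FP},\\[4pt]
F_1 &= \frac{2\,\text{Precision}\cdot\text{Recall}}{\text{Precision}+\text{Recall}} = \frac{2\,TP}{2\,TP+FP+FN},\\[4pt]
\text{Balanced accuracy} &= \frac{\text{TPR}+\text{TNR}}{2},\\[4pt]
\text{MCC} &= \frac{TP\cdot TN - FP\cdot FN}{\sqrt{(TP+FP)(TP+FN)(TN+FP)(TN+FN)}}.
\end{aligned}
$$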

- TP: The number of glaucoma images correctly classified as glaucoma.

- TN: The number of healthy images correctly classified as healthy.

- FP: The number of healthy images incorrectly classified as glaucoma.

- FN: The number of glaucoma images incorrectly classified as healthy.

| | Actual positive | Actual negative |
|---|---|---|
| Predicted positive | TP | FP |
| Predicted negative | FN | TN |
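The metrics can be computed directly from the four counts. The counts used below are inferred from the test-set composition reported in Section 3 (11 glaucoma and 80 healthy images) and the ensemble's reported results (one misclassified image, 100% recall), which imply TP = 11, FN = 0, FP = 1, and TN = 79; with these counts, the function matches the values in Table 5 to within rounding. The function name is hypothetical.

```python
import math


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Confusion-matrix metrics in percent, with glaucoma as the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # sensitivity / TPR
    tnr = tn / (tn + fp)     # specificity
    f1 = 2 * tp / (2 * tp + fp + fn)
    mcc = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": 100 * (tp + tn) / (tp + fp + fn + tn),
        "precision": 100 * precision,
        "recall": 100 * recall,
        "f1": 100 * f1,
        "tnr": 100 * tnr,
        "balanced_accuracy": 100 * (recall + tnr) / 2,
        "mcc": 100 * mcc,
    }


# Counts implied by the 91-image test set and the ensemble results
m = binary_metrics(tp=11, fp=1, fn=0, tn=79)
print({name: round(value, 2) for name, value in m.items()})
```

With only 11 positive test images, a single additional error changes recall by about 9 percentage points, which is worth keeping in mind when comparing models in the tables that follow.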

All data used in this study were anonymized, and the authors did not have access to participants' identity information. The SHECS was approved by the Ethics Committee of Shahroud University of Medical Sciences (reference number: IR.SHMU.REC.1398.039). Written informed consent was obtained from all participants after they received sufficient information about the study and experiments.

3 Results

Due to the limited size of the glaucoma image dataset and the unsatisfactory results without data augmentation, which led to model overfitting, we opted not to present the results of models without data augmentation. This paper's two main innovations are the introduction of the CSAM module and the application of weighted ensemble learning. Consequently, the following subsections present the results obtained through the model with the CSAM module, the model without the CSAM module, and the integration of the CSAM module with weighted ensemble learning into the model. Additionally, the final model is compared with previous works.

The experiments were conducted in two separate stages. In the first stage, all OCT images in the database were divided into two classes, glaucoma and healthy, and the proposed model was trained accordingly, with 10% of the data allocated to the test set and 90% to the training set. Training used 10-fold cross-validation, yielding 10 runs, from which the model that performed best on the validation data was selected.
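A minimal sketch of 10-fold cross-validation with best-model selection. The split below is a plain shuffled (unstratified) split, and `train_and_score` is a hypothetical callback; the text does not say whether the folds were stratified by class, which matters when one class is rare (scikit-learn's `StratifiedKFold` is a common stratified alternative).

```python
import random
from typing import Any, Callable


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> list[tuple[list[int], list[int]]]:
    """Shuffle indices 0..n-1 and return k (train, validation) splits."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # round-robin keeps fold sizes within one of each other
    splits = []
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        splits.append((train, folds[i]))
    return splits


def select_best(splits, train_and_score: Callable[[list[int], list[int]], tuple[Any, float]]):
    """Train one model per split and keep the one with the highest validation score."""
    best_model, best_score = None, float("-inf")
    for train_idx, val_idx in splits:
        model, score = train_and_score(train_idx, val_idx)  # hypothetical training routine
        if score > best_score:
            best_model, best_score = model, score
    return best_model, best_score
```

Every image appears in exactly one validation fold, so the 10 runs together cover the whole training set.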

In the modeling stage, 10% of the data (91 OCT images) were randomly selected for the test set, consisting of 11 glaucoma images and 80 healthy images. The results of all models on this dataset are reported. The remaining images comprised 85 glaucoma images and 3690 healthy images. Subsequently, the remaining glaucoma images were augmented sevenfold using data augmentation algorithms, including rotation, flipping, cropping, translation, and noise addition.

After data augmentation, normal images still outnumbered glaucoma images by a factor of approximately six. The normal OCT images were therefore partitioned into five groups (an odd number of models avoids tied votes in the ensemble), and all glaucoma images were included in each group. Each group then underwent 10-fold cross-validation during training, producing 10 models per group, and the best-performing of these 10 models was selected for evaluation. Table 2 presents the validation results of the best model from each group.
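The grouping scheme can be sketched with image identifiers in place of images. The function name is hypothetical, and reading "augmented sevenfold" as seven images per original glaucoma image (595 in total) is an assumption. Under that reading, each of the five groups contains 738 normal and 595 glaucoma images, compared with roughly 6.2 normal images per glaucoma image in the training set as a whole.

```python
import random


def build_balanced_groups(normal_ids, glaucoma_ids, n_groups: int = 5, seed: int = 0):
    """Split normal images into disjoint groups and add every glaucoma image to each group.

    Returns one (image_ids, labels) pair per base model; label 1 = glaucoma, 0 = normal.
    """
    normals = list(normal_ids)
    random.Random(seed).shuffle(normals)
    groups = []
    for g in range(n_groups):
        part = normals[g::n_groups]  # disjoint, near-equal partitions of the normal class
        ids = part + list(glaucoma_ids)
        labels = [0] * len(part) + [1] * len(glaucoma_ids)
        groups.append((ids, labels))
    return groups


# Training-set sizes from the text: 3690 normal images, 85 glaucoma images augmented sevenfold
normal = [f"normal_{i}" for i in range(3690)]
glaucoma = [f"glaucoma_{i}_aug{j}" for i in range(85) for j in range(7)]
groups = build_balanced_groups(normal, glaucoma)
for ids, labels in groups:
    print(len(ids) - sum(labels), "normal /", sum(labels), "glaucoma")  # prints "738 normal / 595 glaucoma" five times
```

Each normal image is seen by exactly one base model, while every glaucoma image is shared by all five, so the ensemble as a whole still uses all of the normal data.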

| Best model | Accuracy % | Precision % | Recall % | F1-score % | MCC % |
|---|---|---|---|---|---|
| Fold1 | 97.52 (94.32, 100) | 85.71 (78.52, 92.90) | 92.31 (86.84, 97.78) | 88.89 (82.43, 95.35) | 87.57 (80.79, 94.34) |
| Fold2 | 95.04 (90.58, 99.50) | 76.92 (68.26, 85.58) | 76.92 (68.26, 85.58) | 76.92 (68.26, 85.58) | 74.15 (65.15, 83.14) |
| Fold3 | 96.69 (93.01, 100) | 84.62 (77.21, 92.03) | 84.62 (77.21, 92.03) | 84.62 (77.21, 92.03) | 82.76 (74.99, 90.52) |
| Fold4 | 95.04 (90.58, 99.50) | 73.33 (64.24, 82.42) | 84.62 (77.21, 92.03) | 78.57 (70.14, 87.00) | 76.03 (67.25, 84.80) |
| Fold5 | 95.87 (91.78, 99.96) | 75.00 (66.10, 83.90) | 92.31 (86.84, 97.78) | 82.76 (75.00, 90.52) | 81.00 (72.93, 89.06) |

To give a more comprehensive picture of the models' performance, Table 3 presents the mean validation results, with standard deviations, over the 10 models in each fold.

| Validation | Accuracy % | Precision % | Recall % | F1-score % | MCC % |
|---|---|---|---|---|---|
| Fold1 | | | | | |
| Fold2 | | | | | |
| Fold3 | | | | | |
| Fold4 | | | | | |
| Fold5 | | | | | |

Based on the findings in Table 3, we ranked the models and assigned corresponding weights. The fold1 model, which achieved the highest accuracy, was assigned a weight of 5; the fold3 and fold5 models were each assigned a weight of 3; and the fold2 and fold4 models were each assigned a weight of 1, giving a total weight of 13. In the weighted ensemble, an image was assigned to the class whose supporting models had a combined weight of 7 or more, that is, a weighted majority of the total.
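The weighted vote can be sketched as follows, assuming each base model outputs a hard label (1 for glaucoma, 0 for normal). Because the weights sum to 13, an odd number, a threshold of 7 is exactly a weighted majority, so ties cannot occur. The dictionary keys and the function name are illustrative.

```python
WEIGHTS = {"fold1": 5, "fold2": 1, "fold3": 3, "fold4": 1, "fold5": 3}  # total weight = 13
THRESHOLD = 7  # smallest weighted majority of 13


def weighted_vote(predictions: dict[str, int], weights: dict[str, int] = WEIGHTS,
                  threshold: int = THRESHOLD) -> int:
    """Return 1 (glaucoma) if models voting glaucoma carry >= threshold weight, else 0 (normal)."""
    glaucoma_weight = sum(weights[name] for name, label in predictions.items() if label == 1)
    return 1 if glaucoma_weight >= threshold else 0
```

With these weights, the fold1 model together with either weight-3 model (combined weight 8) decides the outcome, whereas the fold1 model alone (weight 5) cannot.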

In bagging ensemble learning, the predictions of the base models are usually combined by a voting mechanism. Majority voting is the most common mechanism for classification: each base model predicts a class, and the class receiving the most votes becomes the ensemble's prediction. In this study, we instead used weighted voting ("weighted ensemble learning"), in which each model's vote, derived from the output of its final layer, counts in proportion to its assigned weight.

The performance of each of the five models, as well as the final model on the test dataset, is detailed in Tables 4 and 5.

| | Fold1 | Fold2 | Fold3 | Fold4 | Fold5 | Ensemble |
|---|---|---|---|---|---|---|
| True diagnosis | 89 | 86 | 88 | 86 | 87 | **90** |
| False diagnosis | 2 | 5 | 3 | 5 | 4 | **1** |
| Accuracy percent (95% CI) | 97.80 (92.29, 99.73) | 94.51 (87.64, 98.19) | 96.70 (90.67, 99.31) | 94.51 (87.64, 98.19) | 95.60 (89.13, 98.79) | **98.90 (96.76, 100)** |

- Note: Bold values mark the best result in each row: the highest number of true diagnoses, the lowest number of false diagnoses (fewer is better), and the highest accuracy, which indicates the best overall performance.
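The text does not state how the 95% confidence intervals were computed. As a sketch, the normal-approximation (Wald) interval for a proportion, clipped to [0, 100], reproduces the reported ensemble interval of 98.90 (96.76, 100) for 90 correct out of 91 test images. The per-fold intervals in the table are asymmetric and may have been computed with a different method, so treat this as an assumption rather than the authors' procedure; the function name is hypothetical.

```python
import math


def wald_interval(correct: int, total: int, z: float = 1.96) -> tuple[float, float, float]:
    """Proportion and its normal-approximation (Wald) CI, in percent, clipped to [0, 100]."""
    p = correct / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return 100 * p, max(0.0, 100 * (p - half_width)), min(100.0, 100 * (p + half_width))


acc, lower, upper = wald_interval(90, 91)  # ensemble: 90 of 91 test images correct
print(f"{acc:.2f} ({lower:.2f}, {upper:.2f})")  # prints "98.90 (96.76, 100.00)"
```

The Wald interval is known to be unreliable for proportions near 0% or 100% and for small samples; the Wilson score or Clopper-Pearson exact intervals are common alternatives in that regime.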

| | Accuracy % | Precision % | Recall % | F1_score % | TNR % | MCC % | Balanced accuracy % |
|---|---|---|---|---|---|---|---|
| Value | 98.90 | 91.67 | 100 | 95.65 | 98.75 | 95.14 | 99.37 |
| 95% CI | (96.76, 100) | (85.99, 97.35) | (100, 100) | (91.53, 99.87) | (96.47, 100) | (90.72, 99.55) | (97.74, 100) |
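The point estimates in Table 5 follow from the test-set confusion matrix. The sketch below recomputes them using the standard metric definitions. The counts are not stated in the text; they are inferred from Table 4 (91 test images, one misclassification) together with the 100% recall and 91.67% precision, which give TP = 11, FP = 1, TN = 79, FN = 0, and these counts are consistent with every point estimate in Table 5. The normal-approximation (Wald) interval clipped to [0, 1] reproduces the ensemble accuracy interval (96.76%, 100%); whether the authors used exactly this method is an assumption.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # sensitivity
    tnr = tn / (tn + fp)  # specificity
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "tnr": tnr,
        "balanced_accuracy": (recall + tnr) / 2,
        "mcc": (tp * tn - fp * fn) / mcc_den,
    }


def wald_ci(successes, n, z=1.96):
    """Normal-approximation 95% CI for a proportion, clipped to [0, 1]."""
    p = successes / n
    half = z * math.sqrt(p * (1 - p) / n)
    return max(0.0, p - half), min(1.0, p + half)


# Counts inferred (not stated in the text) from Tables 4 and 5:
# 11 glaucoma and 80 normal test images, one normal image misclassified.
metrics = binary_metrics(tp=11, fp=1, tn=79, fn=0)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
print("accuracy 95% CI:", wald_ci(90, 91))
```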

To compare performance, we evaluated the proposed model and several pre-trained networks on the same test dataset. We fine-tuned the pre-trained networks ourselves: all layers except the final layer were frozen, and only the weights of the final layer were updated on the training data. The results on the test dataset are given in Table 6.

| Model | Parameters (millions) | Accuracy % | Precision % | Recall % | F1-score % | Balanced accuracy % | MCC % |
|---|---|---|---|---|---|---|---|
| DenseNet201 | 20.2 | 93.41 (88.31, 98.50) | 72.73 (63.57, 81.88) | 72.73 (63.57, 81.88) | 72.73 (63.57, 81.88) | 84.48 (77.04, 91.91) | 68.98 (59.47, 78.48) |
| InceptionResNetV2 | 59.9 | 94.51 (89.82, 99.19) | 54.55 (44.32, 64.78) | **100 (100, 100)** | 70.58 (61.21, 79.94) | 97.05 (93.57, 100) | 71.65 (62.38, 80.91) |
| MobileNetV2 | 4.3 | 96.70 (93.02, 100) | 72.73 (63.57, 81.88) | **100 (100, 100)** | 84.21 (76.71, 91.70) | 98.19 (95.45, 100) | 83.72 (76.13, 91.30) |
| ResNet152 | 60.4 | 94.51 (89.82, 99.19) | 90.91 (85.00, 96.81) | 71.42 (62.13, 80.70) | 80.00 (71.78, 88.21) | 85.06 (77.73, 92.38) | 77.62 (69.05, 97.12) |
| ConvNeXtLarge | 197.8 | 96.70 (93.02, 100) | 81.82 (73.89, 89.74) | 90.00 (83.83, 96.16) | 85.71 (78.51, 92.90) | 93.76 (88.79, 98.72) | 83.98 (76.44, 91.51) |
| ResNet101 | 44.7 | 87.91 (81.21, 94.60) | 54.55 (44.32, 64.78) | 50.00 (39.72, 60.27) | 52.17 (41.90, 62.43) | 71.83 (62.58, 81.07) | 45.33 (35.10, 55.55) |
| Our model | **1.68** | **98.90 (96.76, 100)** | **91.67 (85.99, 97.35)** | **100 (100, 100)** | **95.65 (91.53, 99.84)** | **99.37 (97.74, 100)** | **95.14 (90.72, 97.12)** |

- Note: The bold values indicate the best performance for each metric. For all columns except Parameters (millions), the bold values represent the highest score, since higher values for metrics like accuracy, precision and others indicate better model performance. However, for the Parameters column, the bold value represents the lowest number, as fewer parameters mean the model is more lightweight and efficient.

Compared with the pre-trained networks, the proposed model achieved an accuracy of 98.90%, 2.2 percentage points higher than the best-performing pre-trained models (MobileNetV2 and ConvNeXtLarge, both 96.70%). Ensemble learning methods integrate the predictions of multiple individual models to improve overall accuracy and reduce errors, whereas pre-trained models are single neural networks trained on large datasets for specific tasks. Although pre-trained models can excel in specific scenarios, they do not consistently outperform ensemble methods. By capturing diverse aspects of the data and combining the predictions of several models, ensemble methods exploit the strengths of each model and offset their individual errors, which often leads to superior performance.

To test whether the means of the five groups differed significantly, we calculated the mean, standard deviation (SD), F-value, and p value (one-way ANOVA). The analysis indicated no statistically significant differences among the groups.
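The F-value of a one-way ANOVA compares the variance between group means with the variance within groups; a small F (and a large p value) means the group means are not distinguishable. The sketch below computes F from scratch. The five groups are hypothetical numbers, since the values entering the authors' test are not given here. The p value comes from the F distribution with (k - 1, N - k) degrees of freedom; `scipy.stats.f_oneway` returns both F and p if SciPy is available.

```python
def one_way_anova_f(*groups):
    """F statistic of a one-way ANOVA: between-group vs. within-group mean squares."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Spread of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Spread of the observations around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within


# Hypothetical example with five groups of three observations each.
groups = ([1, 2, 3], [2, 3, 4], [3, 4, 5], [4, 5, 6], [5, 6, 7])
print(one_way_anova_f(*groups))
```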

3.1 Ablation Study

To evaluate the contribution of each component in the proposed architecture, we conducted an ablation study. This systematic evaluation isolates the effects of the pre-trained ResNet backbone, multi-scale feature extraction, cross-attention mechanism, and CSAM module on the model's overall accuracy. The results are summarized in Table 7.

| Backbone | Multi-scale | Cross attention | CSAM module | % Accuracy (95% CI) |
|---|---|---|---|---|
| X | | | | 85.32% (80.12%, 90.52%) |
| X | X | | | 90.47% (86.45%, 94.49%) |
| X | X | X | | 94.15% (90.67%, 97.63%) |
| X | X | X | X | 98.90% (96.76%, 100%) |

Backbone only: Using only the pre-trained ResNet backbone achieves a baseline accuracy of 85.32%, which indicates its ability to extract generic features but highlights the need for additional mechanisms to handle specific glaucoma-related patterns.

Adding multi-scale feature extraction: Introducing multi-scale processing improves accuracy to 90.47%, demonstrating the importance of capturing features across various resolutions, which is crucial for analyzing both localized and global retinal changes.

Including cross-attention: Adding the cross-attention mechanism further enhances accuracy to 94.15%, emphasizing the significance of learning relationships between features from different scales for a comprehensive feature representation.

Full model with CSAM: Integrating the CSAM module boosts accuracy to 98.90%, validating its role in refining feature representations by selectively emphasizing important channels and spatial regions.

The results show that each component improves the model's accuracy, with the complete architecture yielding the best results. This ablation study highlights the need to integrate all components to achieve state-of-the-art accuracy in glaucoma detection from OCT images.
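The cross-attention step described in the ablation study lets features from one scale query features from another. The exact design used in the proposed model (which scale supplies the queries, projection sizes, number of heads) is not specified in this section, so the sketch below assumes a generic single-head scaled dot-product cross-attention with random projection matrices standing in for learned weights. Tokens are flattened feature-map positions; the 14 × 14 and 7 × 7 map sizes are illustrative.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(fine, coarse, wq, wk, wv):
    """Single-head scaled dot-product cross-attention between two scales.

    fine:   (N_f, C) tokens from one scale, used as queries
    coarse: (N_c, C) tokens from another scale, used as keys and values
    wq, wk, wv: (C, D) projections (random here; learned in a real model)
    """
    q = fine @ wq
    k = coarse @ wk
    v = coarse @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (N_f, N_c) similarity scores
    weights = softmax(scores, axis=-1)  # each query's weights sum to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
C, D = 32, 16
fine = rng.standard_normal((14 * 14, C))  # hypothetical flattened 14x14 map
coarse = rng.standard_normal((7 * 7, C))  # hypothetical flattened 7x7 map
wq, wk, wv = (rng.standard_normal((C, D)) for _ in range(3))
out, attn = cross_attention(fine, coarse, wq, wk, wv)
print(out.shape, attn.shape)
```

Each fine-scale position receives a weighted summary of all coarse-scale positions, which is one way to relate localized and global retinal structure.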

3.2 Robustness Analysis Against Noise and Low-Contrast Images

To comprehensively evaluate the robustness of the proposed model, we introduced Gaussian noise and salt-and-pepper noise into the dataset [27]. As in the main experiments, the normal data were divided into five folds, all glaucoma data were included in each fold, and an independent CNN-based model was trained on each fold, yielding five separate models. The noise was applied at the following levels:

- Gaussian noise at levels σ = 0.1, 0.3, and 0.6
- Salt-and-pepper noise with densities d = 0.1, 0.3, and 0.6
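The two corruptions can be sketched with NumPy as below. This is an illustration under stated assumptions, not the authors' preprocessing: images are assumed to be scaled to [0, 1], σ is treated as the standard deviation of zero-mean Gaussian noise on that scale (if σ denotes a variance, pass its square root), and salt-and-pepper density d is the fraction of pixel locations set to black or white, split evenly.

```python
import numpy as np


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma; clip to [0, 1]."""
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)


def add_salt_and_pepper(image, density, rng):
    """Set a fraction `density` of pixel locations to 0 (pepper) or 1 (salt), half each."""
    noisy = image.copy()
    mask = rng.random(image.shape[:2])  # one draw per pixel location, shared by channels
    noisy[mask < density / 2] = 0.0
    noisy[(mask >= density / 2) & (mask < density)] = 1.0
    return noisy


rng = np.random.default_rng(42)
image = rng.random((224, 224, 3))  # stand-in for a preprocessed OCT image
noisy_versions = {
    level: (add_gaussian_noise(image, level, rng), add_salt_and_pepper(image, level, rng))
    for level in (0.1, 0.3, 0.6)
}
```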

As Tables 8 and 9 show, the accuracy of the ensemble-based strategy decreases only moderately as noise increases (from 96.70% to 94.50% for Gaussian noise and from 95.60% to 93.40% for salt-and-pepper noise), supporting the robustness of the proposed approach.

| Noise level (σ) | Accuracy % | Precision % | Recall % | F1_score % | TNR % | MCC % | Balanced accuracy % |
|---|---|---|---|---|---|---|---|
| σ = 0.1 | 96.70 | 90.00 | 81.81 | 98.13 | 81.81 | 83.98 | 81.81 |
| σ = 0.3 | 95.60 | 91.81 | 81.81 | 97.50 | 81.81 | 79.32 | 81.81 |
| σ = 0.6 | 94.50 | 71.42 | 90.90 | 96.81 | 90.90 | 77.62 | 90.90 |

| Noise density | Accuracy % | Precision % | Recall % | F1_score % | TNR % | MCC % | Balanced accuracy % |
|---|---|---|---|---|---|---|---|
| 0.1 | 95.60 | 81.81 | 81.81 | 97.50 | 81.81 | 79.32 | 81.81 |
| 0.3 | 94.50 | 75.00 | 81.81 | 96.85 | 81.81 | 75.22 | 81.81 |
| 0.6 | 93.40 | 72.72 | 72.72 | 96.25 | 72.72 | 68.98 | 72.72 |

3.3 Computational Complexity Analysis

- Theoretical complexity: The model comprises a ResNet101 backbone with complexity $O(N \times H \times W \times C)$, where $N$ is the number of filters, $H$ and $W$ are the height and width of the feature map, and $C$ is the number of channels. Additional modules such as the CSAM and cross-attention introduce matrix operations with complexity $O(C^2 \times HW)$.

- Empirical results: We measured execution time on inputs of size 224 × 224 × 3; the measured time was 0.035 s on a single NVIDIA RTX 5000 GPU.

Execution time is expected to increase for larger inputs because the cost of the attention mechanisms grows with the number of spatial positions $HW$. Nevertheless, the model remains lightweight: as Table 6 shows, it has 1.68 million parameters, far fewer than the other models compared, which keeps its computational burden low.
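The two complexity terms can be evaluated directly to see how cost scales with input size. In the sketch below, the sizes are hypothetical: a stride-32 backbone is assumed to map a 224 × 224 input to a 7 × 7 feature map and a 448 × 448 input to 14 × 14, with C = 2048 channels (the width of ResNet101's last stage). The counts are order-of-magnitude operation counts implied by the big-O expressions, ignoring kernel size and constant factors, not measured FLOPs.

```python
def conv_layer_cost(n_filters, h, w, c):
    """Operation count implied by O(N x H x W x C), kernel size ignored."""
    return n_filters * h * w * c


def attention_cost(c, h, w):
    """Operation count implied by O(C^2 x HW) for C x C attention matrices."""
    return c * c * h * w


# Hypothetical sizes: doubling the input side doubles the feature-map side,
# which quadruples HW and therefore quadruples the attention cost.
for side in (7, 14):
    print(f"{side}x{side}: attention ~ {attention_cost(2048, side, side):,} ops")
```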

4 Discussion

This study proposed an ensemble learning technique for classifying imbalanced data from a private OCT image database for glaucoma diagnosis. A bagging ensemble was used to handle the class imbalance, and data analysis and modeling were performed with convolutional deep neural networks.
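The balancing scheme (normal data split into five groups, each combined with all glaucoma data) can be sketched as below. The sketch assumes a random split into near-equal disjoint groups; the text describes the normal data as stratified, but the stratification variable is not given, so that detail is omitted. The sample IDs and counts are hypothetical, and the glaucoma list is assumed to already contain the sixfold augmented images.

```python
import random


def build_balanced_subsets(normal_ids, glaucoma_ids, n_groups=5, seed=0):
    """Split normal samples into disjoint groups and pair each with all glaucoma samples."""
    shuffled = list(normal_ids)
    random.Random(seed).shuffle(shuffled)  # reproducible random split
    groups = [shuffled[i::n_groups] for i in range(n_groups)]  # near-equal, disjoint
    return [group + list(glaucoma_ids) for group in groups]


normal = [f"normal_{i}" for i in range(1000)]  # hypothetical IDs
glaucoma = [f"glaucoma_{i}" for i in range(300)]  # hypothetical, already augmented
subsets = build_balanced_subsets(normal, glaucoma)
print([len(s) for s in subsets])
```

One model is then trained independently on each subset, and the five models are combined by the weighted vote.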

According to Tables 5 and 6, the proposed model, combined with the weighted ensemble learning technique, diagnosed glaucoma with an accuracy of 98.90%, a precision of 91.67%, a recall of 100%, an F1-score of 95.65%, a TNR of 98.75%, and a balanced accuracy of 99.37%. Its accuracy is 2.2 percentage points higher than that of the best pre-trained model evaluated in this study. The ablation study (Table 7) attributes this improvement primarily to the multi-scale feature extraction phase, the cross-attention mechanism, and the CSAM module: each component made a measurable contribution to overall performance, underscoring its role in achieving state-of-the-art diagnostic accuracy.

Furthermore, the proposed model has fewer parameters compared to the pre-trained models, making it more efficient. The multi-scale feature extraction module allows the model to effectively capture patterns at varying resolutions, while the cross-attention mechanism improves the contextual integration of features from different scales. Additionally, the CSAM module enhances both channel- and spatial-level focus, allowing the network to concentrate on the most relevant features for glaucoma detection. These advancements collectively refine the model's feature representation capabilities, leading to the observed performance gains.
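The internal design of the CSAM is not detailed in this section. The sketch below assumes it follows the widely used CBAM pattern: channel attention (a shared two-layer MLP applied to average- and max-pooled channel descriptors) followed by spatial attention. As a labeled simplification, the spatial branch replaces CBAM's learned 7 × 7 convolution with a weighted sum of the channel-mean and channel-max maps; all weights are random placeholders and the reduction ratio r = 8 is an assumption.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """x: (C, H, W). Rescale each channel by a gate from pooled descriptors."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # shared MLP with ReLU hidden layer

    avg = x.mean(axis=(1, 2))  # (C,) average-pooled descriptor
    mx = x.max(axis=(1, 2))  # (C,) max-pooled descriptor
    scale = sigmoid(mlp(avg) + mlp(mx))  # (C,) gates in (0, 1)
    return x * scale[:, None, None]


def spatial_attention(x, a, b):
    """Simplified spatial gate: 1x1 mix of channel-mean and channel-max maps."""
    scale = sigmoid(a * x.mean(axis=0) + b * x.max(axis=0))  # (H, W) gates
    return x * scale[None, :, :]


def csam_block(x, w1, w2, a=1.0, b=1.0):
    """Channel attention followed by spatial attention (CBAM-style ordering)."""
    return spatial_attention(channel_attention(x, w1, w2), a, b)


rng = np.random.default_rng(0)
C, H, W, r = 64, 14, 14, 8  # r: channel reduction ratio (assumed)
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
y = csam_block(x, w1, w2)
print(y.shape)
```

Because both gates lie in (0, 1), the block can only attenuate features, so channels and positions the gates favor stand out relative to the rest.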

The use of OCT images in diagnosing glaucoma offers several advantages, such as high resolution, quantitative measurements, early detection capability, non-invasiveness, objective analysis, subgroup differentiation, treatment monitoring, and compatibility with artificial intelligence technologies. These attributes make OCT a valuable asset in the clinical assessment and management of glaucoma. Numerous studies have explored diverse methods for diagnosing glaucoma, and Table 10 summarizes prior research in this area. Unlike prior studies, this work integrates advanced attention mechanisms, namely the cross-attention and CSAM modules, with multi-scale feature extraction to enhance the diagnostic process. This combination results in significant improvements over existing methods.

Moreover, the proposed model handles imbalanced data well, which is critical in medical imaging scenarios where pathological cases are often underrepresented. The ensemble learning strategy further stabilizes predictions by mitigating the effects of class imbalance, and the improved feature representations from the novel components enable better handling of subtle variations in OCT images. These aspects support the model's robustness and clinical applicability.

4.1 Comparison With Recent Studies on Feature Selection and ML/DL Techniques for Eye-Related Diseases

- A novel hybridized feature selection strategy for the effective prediction of glaucoma in retinal fundus images: This study proposed a hybrid feature selection strategy to identify significant features from retinal fundus images, enabling precise glaucoma prediction. Our model extends this approach by integrating multi-scale feature extraction and cross-attention mechanisms, allowing the network to focus on critical regions with enhanced spatial and contextual understanding.

- Feature subset selection through nature inspired computing for efficient glaucoma classification from fundus images: This study used nature-inspired algorithms for feature subset selection, improving the efficiency of glaucoma classification. In contrast, our model incorporates the CSAM, which not only selects critical features but also enhances channel-level and spatial-level feature representation.

- Efficient feature selection based novel clinical decision support system for glaucoma prediction from retinal fundus images: A clinical decision support system was developed with a focus on efficient feature selection. Our approach combines transfer learning using a pre-trained ResNet backbone with ensemble learning techniques, achieving robust performance in glaucoma detection while addressing data imbalance.

- Performance analysis of machine learning techniques for glaucoma detection based on textural and intensity features: This work analyzed various ML techniques based on textural and intensity features for glaucoma detection. Building on this, our model integrates textural and intensity features while enhancing feature representation through multi-scale processing and cross-attention mechanisms.

| Reference | Dataset | Classifier | Best performance measures (%) | Advantages/disadvantages |
|---|---|---|---|---|
| [28] | Fundus Image | CNN | Accuracy = 97.18 | The article highlights advantages such as enhanced diagnostic accuracy through multi-scale attention blocks in CNNs, facilitated by effective data augmentation techniques that broaden the diversity of training datasets. However, the method's complexity in model interpretation and the requirement for significant computational resources are notable disadvantages. |
| [29] | Fundus Images, Personal Data, Genome Data | SVM MKL | AUC = 86.6 | The advantages of this article include the potential for automated and efficient diagnosis of glaucoma using medical imaging informatics, which can streamline the diagnostic process and improve patient outcomes. However, the disadvantages may include the need for robust validation studies to ensure the accuracy and reliability of the automated diagnosis system, as well as potential challenges in integrating the technology into existing clinical workflows. |
| [30] | Visual Field Reports | SVM, RF, K-NN, CNN | Accuracy = 87.6, Sensitivity = 93.2, Specificity = 82.6 | The advantages of this article include the potential for automated and efficient diagnosis of glaucoma using medical imaging informatics, which can streamline the diagnostic process and improve patient outcomes. However, the disadvantages may include the need for robust validation studies to ensure the accuracy and reliability of the automated diagnosis system, as well as potential challenges in integrating the technology into existing clinical workflows. |
| [31] | Fundus Image | SVM, NB | Accuracy = 92.65, Sensitivity = 100, Specificity = 92.0 | The advantages include the potential for accurate and automated classification of glaucoma stages, while its disadvantages may include limitations in generalizability and applicability to diverse patient populations. |
| [32] | Clinical Variables | MLR, ANN | Accuracy = 84.0, Sensitivity = 78.3, Specificity = 85.9 | The advantages are the potential for accurate classification without the need for a visual field test, while its disadvantages may include limitations in generalizability to diverse patient populations and the need for further validation studies. |
| [33] | Medical History Data, Fundus Image | RNN (ResNet101) | Accuracy = 96.5, Sensitivity = 99.8, Specificity = 99.9 | The advantages include the potential for accurate and efficient detection of glaucomatous optic neuropathy, while its disadvantages may include the need for high-quality image data and potential challenges in interpreting the deep learning model's decisions. |
| [34] | VF test Parameter, RNFL Thickness, General Ophthalmic Examination | RF, DT, SVM, KNN | Accuracy = 98, Sensitivity = 98.3, Specificity = 97.5 | The article's advantages include the development of machine learning models for the diagnosis of glaucoma, demonstrating the potential for improved accuracy, efficiency, and objectivity through data-driven approaches. Its disadvantages include challenges such as the need for extensive validation on diverse datasets, integration into clinical practice, and regulatory approval, which may impact the widespread adoption and implementation of these models in real-world healthcare settings. |
| [35] | Fundus Images | DT, QDA, LDA, SVM, KNN, PNN | Accuracy = 95.8 | The advantages of this article include the innovative use of local configuration pattern features for glaucoma risk detection, while its disadvantages may include the complexity of feature extraction and potential challenges in generalizing the algorithm to diverse populations. |
| [36] | Fundus Images | SVM | Accuracy = 95.0, Sensitivity = 93.33, Specificity = 96.67 | The advantages of this article include the potential for automated diagnosis with advanced spectral and wavelet features, while its disadvantages may involve the complexity of feature extraction and interpretation. |
| [37] | Fundus Images, Clinical Data | Multi Branch Neural Network | Accuracy = 99.24, Sensitivity = 97.91, Specificity = 93.59 | The advantages of this article include glaucoma diagnosis based on both hidden features and domain knowledge through deep learning models, showing promise for leveraging both data-driven insights and clinical expertise to enhance the accuracy and interpretability of glaucoma diagnosis. Disadvantages may include the complexity of integrating domain knowledge into deep learning models, the need for robust validation on diverse datasets, and considerations of model transparency and explainability in clinical decision-making. |
| [38] | Fundus Images | ANN, SVM, AdaBoost | Accuracy = 98.0, Sensitivity = 100, Specificity = 97.0 | The article showcases the benefits of automated segmentation and classification of retinal features for glaucoma diagnosis, offering a potentially efficient and objective method for identifying key biomarkers associated with the disease. Potential disadvantages include the need for validation on larger datasets, considerations of model generalizability across different populations, and integration into clinical workflows for practical implementation in glaucoma diagnosis and management. |
| [39] | OCT Images | Calculates the Cup to Disc Ratio (CDR) with image processing | Accuracy = 94 | The advantages of this article include the use of a simple and widely recognized metric for glaucoma diagnosis and the potential for quick and cost-effective assessment of glaucoma risk. However, the disadvantages may include limitations in accuracy and sensitivity compared to more advanced imaging techniques, and the reliance on a single metric for diagnosis, which may not capture the full complexity of glaucoma. |
| [40] | OCTA Images | AdaBoost | Accuracy = 94.3 | The advantages of this article include the potential for non-invasive and efficient detection of glaucoma using advanced imaging technology, and the ability to assess both structural and vascular changes in the eye. However, the disadvantages may include challenges in standardizing image acquisition and interpretation, as well as the need for validation studies to ensure the accuracy and reliability of the automated detection system. |
| [41] | OCT Images | CNN | Accuracy = 95.90 | The advantages of this article include the potential for accurate and efficient diagnosis of glaucoma using advanced deep-learning techniques and wide-field imaging technology. However, the disadvantages may include the need for large and diverse datasets for training the deep learning model and potential challenges in generalizing the model to different populations or clinical settings. |
| [42] | Fundus Images | CNN | Accuracy = 97.74 | This article's advantages include that En-ConvNet improves glaucoma detection accuracy through ensemble learning, leveraging noninvasive fundus images for efficient, automated screening with better generalization and early detection potential. However, the model demands high computational resources, risks over-fitting, and lacks interpretability, and its performance is sensitive to image quality and potential data imbalance. |
| [27] | Fundus Images | Ensemble of deep convolutional neural networks | Accuracy = 99.6 | This article's advantages include that using CNNs for glaucoma assessment enables automated, accurate detection from non-invasive fundus images, improving screening efficiency and aiding early diagnosis. However, the CNN models require large datasets and high computational power, may over-fit with limited data, and often lack interpretability for clinical decision-making. |

- Abbreviations: ANN: artificial neural networks, AUC: area under the curve, CNN: convolutional neural network, DT: decision tree, K-NN: K-nearest neighbor, LDA: linear discriminant analysis, MKL: multi kernel learning, MLR: multi logistic regression, NB: Naïve Bayes, OCTA: optical coherence tomography angiography, PNN: probabilistic neural networks, QDA: quadratic discriminant analysis, RF: Random Forest, RNFL: retinal nerve fiber layer, RNN: residual neural network, SAP: standard automated perimetry, SVM: support vector machine.

These advancements highlight the practical relevance and superiority of our approach, offering a comprehensive framework for glaucoma detection that leverages both feature selection and advanced DL/ML techniques.

4.2 Evaluating the Impact of Ensemble Learning in Medical Images

The proposed method achieved an accuracy of 98.90%, which is comparable to results reported for deep learning approaches, including ensemble-based ones, in other medical image analysis tasks. For instance, a COVID-19 detection model achieved 98.5% accuracy using an ensemble of deep learning models [43], and a malaria classification system attained 97.8% accuracy with a custom CNN model [44]. These findings illustrate the effectiveness of such techniques in medical imaging and further support our approach to glaucoma detection.

4.3 Discussion on Limitations, Future Scope, Threats to Validity, and Assumptions

- Limitations: Although the proposed model demonstrates high accuracy in glaucoma detection, certain limitations must be acknowledged. The study uses a relatively imbalanced dataset, which, despite augmentation, may not represent all variations encountered in real-world clinical scenarios. Additionally, the model's performance has not yet been validated across diverse populations or imaging devices, potentially limiting its generalizability.

- Future scope: Future work will focus on expanding the dataset to include images from diverse populations and imaging modalities to improve the model's robustness and applicability. Moreover, integrating explainable AI techniques could enhance the model's interpretability, facilitating its adoption in clinical settings.

- Threats to validity: The primary threats to validity are the reliance on a single dataset and potential over-fitting due to data augmentation. To mitigate these risks, cross-validation and ensemble learning techniques were employed. However, further testing on independent external datasets is required to ensure reliability.

- Assumptions: This study assumes that the OCT images are of adequate quality and that the labels provided in the dataset are accurate and consistent. Any variability in image quality or mislabeling could affect the model's performance and its applicability in real-world settings.

4.4 Discussion on Scalability and Interoperability

- Scalability: The proposed model is designed to process large volumes of data and handle images of varying resolutions. In our dataset, the original images come in different sizes; for consistency in training, all images are resized to a fixed input resolution of 224 × 224 × 3. This standardization ensures uniform feature extraction and stable training.

- Interoperability: The model is compatible with standard medical image formats such as DICOM, ensuring seamless integration with existing imaging devices. Moreover, the model's architecture facilitates the export of outputs to electronic health record (EHR) and electronic medical record (EMR) systems, streamlining its adoption in clinical workflows. To enhance usability, simple and intuitive user interfaces are designed to present the model's outputs visually, highlighting critical regions identified by the attention mechanisms. This design helps clinicians interpret the results and focus on areas indicative of glaucomatous damage.

DICOM images offer distinct advantages for deep learning in medicine. First, DICOM images contain detailed medical information such as measurements, positions, and descriptions, which can enhance the analysis and interpretation of images for disease detection and diagnosis and thus improve the accuracy of deep learning models [45]. Second, DICOM images are of high quality due to digital storage and specific compression algorithms, resulting in images with superior detail compared to standard images [46]. This quality is essential for the precise analysis and diagnosis of diseases and disorders. These advantages significantly affect the efficiency and accuracy of deep learning models in medical applications. A limitation of this study is that the OCT images were not available in DICOM format for processing. Deep learning has become increasingly prevalent in various medical imaging domains, demonstrating its versatility and effectiveness in tasks such as segmentation and classification [16, 17]. However, the application of deep learning to OCT imaging, particularly for glaucoma diagnosis, still presents unique challenges and opportunities.

5 Conclusion

This study introduced a weighted ensemble learning technique to address imbalanced data in deep learning tasks, using Phase III OCT images from the Shahroud Eye Cohort. The proposed model achieved superior diagnostic accuracy (98.90%) compared to traditional transfer learning methods, largely due to the integration of multi-scale feature extraction, cross-attention mechanisms, and the CSAM module. These components enhanced feature refinement and focus, enabling more precise glaucoma detection.

While the model effectively distinguishes between individuals with glaucoma and healthy controls, it does not yet address glaucoma subtypes. Future research should focus on developing models for subtype diagnosis and integrating multimodal data, such as demographic, genetic, and visual field information, to enhance diagnostic performance. Additionally, validating the model on diverse datasets from different populations and imaging devices is crucial for improving its generalizability and real-world applicability.

Beyond these aspects, several research directions remain open for further exploration. Developing explainable AI (XAI) frameworks could enhance model transparency, fostering greater trust among clinicians. The integration of OCT imaging with complementary modalities such as fundus photography and perimetry data may further improve diagnostic accuracy. Moreover, optimizing the model for real-time applications in portable imaging systems could facilitate early detection in resource-limited settings. Another promising direction is extending the model to monitor glaucoma progression over time, enabling personalized treatment strategies. Addressing variability in image quality, resolution, and noise from different imaging systems is also essential for ensuring robust performance across diverse clinical environments.

This study highlights the potential of advanced attention mechanisms and OCT imaging in creating robust diagnostic tools for ophthalmology, paving the way for improved clinical outcomes. By addressing the outlined challenges and research gaps, future studies can contribute to the development of more interpretable, scalable, and clinically viable AI-driven solutions for glaucoma diagnosis and management.

Author Contributions

Hamid Reza Khajeha: software, writing – original draft, data curation, conceptualization. Mansoor Fateh: writing – review and editing, supervision, conceptualization. Vahid Abolghasemi: resources, writing – review and editing. Amir Reza Fateh: validation, visualization, writing – review and editing. Mohammad Hassan Emamian: validation, formal analysis, resources. Hassan Hashemi: resources, validation. Akbar Fotouhi: resources, validation.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The Python code used in this study, along with 50 OCT images of glaucoma and 50 OCT images of healthy subjects, is publicly available on GitHub at https://github.com/hmdkhajehashecs/SHECS_OCT. The complete dataset cannot be shared publicly due to privacy and ethical restrictions; however, it is available from the corresponding author upon request for research purposes, subject to institutional and ethical guidelines.