SB-YOLO-V8: A Multilayered Deep Learning Approach for Real-Time Human Detection

ABSTRACT

Over the past decade, significant advancements in computer vision have been made, primarily driven by deep learning-based algorithms for object detection. However, these models often require large amounts of labeled data, leading to performance degradation when applied to tasks with limited data sets, particularly in scenarios involving moving objects. For instance, real-time detection of humans in agricultural settings poses challenges that demand sophisticated vision algorithms. To address this issue, we propose SB-YOLO-V8 (Scene-Based You Only Look Once, Version 8), an optimized YOLO-based convolutional neural network (CNN) designed specifically for real-time human detection, not limited to citrus farms but applicable to various agricultural and urban environments. The versatility of SB-YOLO-V8 is underpinned by its robust handling of class imbalances and enhanced feature extraction through binary ant lion optimization (BALO) and the Synthetic Minority Over-sampling Technique (SMOTE), addressing common challenges such as limited labeled data sets and varied object dynamics in different scenarios. The proposed method is trained using images and videos of human workers captured by autonomous farm equipment. Evaluation metrics, including frames per second (FPS), model performance, and efficiency, demonstrate that the proposed method outperforms variants of YOLO such as YOLO-V8, YOLO-V7, YOLO-V6, YOLO-V4, and YOLO-V3, with an average FPS of 13.63 and a precision of 91%. In effect, the proposed SB-YOLO-V8 presents an efficient solution for real-time human detection in challenging visual scenarios.

1 Introduction

In the past decade, continuous advancements in object detection studies have led to the development of many effective algorithms [1]. Object detection aims to categorize objects in images or videos and specify their exact positions [2]. This can be achieved by employing various deep learning (DL) algorithms that automatically learn features from images or videos and build efficient detectors through supervised training [3]. Local visual features are typically extracted by successive feature extractors and classifiers, so the valuable features are learned automatically from the images or videos. Features to be learned for adequate detectors include texture, color, shape, and their combinations [4-6]. However, DL algorithms often face challenges connected with poor image quality, illumination conditions, and stringent constraints on time and computational resources when learning these features [1, 7]. In addition to these challenges, the performance of DL algorithms degrades significantly on tasks with few labeled data [8-10]. These challenges negatively affect the algorithm's quality, thus reducing its efficiency.

To overcome the above challenges, various augmented DL algorithms have been proposed [11-13], but the most widely used of these algorithms is the convolutional neural network (CNN) [14, 15]. The development of CNNs began with the immense success of AlexNet [16], followed by several successful CNN algorithms such as Convolutional Long Short-Term Memory 1 (ConvLSTM1) [17], the deep convolutional neural network (DCNN) [18], and YOLO [19]. Depending on the object detection process, these algorithms can be classified as either one-stage methods (single-shot detector [SSD] and YOLO) [20, 21] or two-stage methods (region-based CNN [R-CNN] and Mask R-CNN) [22]. The two-stage method has some noticeable disadvantages, including slow speed, which obstructs its use in real-time applications, and an overly complex architecture that is difficult to train. The one-stage method, in contrast, provides estimations of positions and classes in one step. The probabilities of possible classes are provided as outputs, not for all the classes but for a set of equally spaced positions and aspect ratios in the form of rectangles called boxes.

Among the one-stage methods, the YOLO-based algorithm has received considerable attention due to its discriminative features. These distinctive features can be utilized for robust inferences in real-time visual scenes. Compared to other CNN models, which usually suffer from slow response times, YOLO algorithms are efficient in speed and accuracy and can detect objects in both images and videos in real time [23]. YOLO requires detectable objects to pass through the neural network only once to make predictions. This one-time forward propagation allows the algorithm to see the entire image or video frame during training, enabling it to encode contextual information about classes, including their implicit appearances. On top of that, the algorithm uses a single CNN for the simultaneous prediction of multiple bounding boxes and the class probabilities for those boxes. This reduces the neural network architecture and computational complexity [24].

Due to the above-stated discriminative features, YOLO-based CNN models have been utilized in many practical human detection applications. The main contributions of this study are as follows:

- Addressing the challenge of imbalanced class data distribution or limited labeled data through the utilization of data augmentation techniques and SMOTE. This helps enhance object detection performance and mitigate model bias.

- Introducing an effective approach to tackle feature selection issues, aiding in identifying the most relevant features for classification experiments. This enhances the overall performance, robustness, and efficiency of the classification model, leading to the success of the proposed SB-YOLO-V8 algorithm for real-time human detection in citrus farms.

- Handling data set sparsity in the detection field through data augmentation, thereby mitigating overfitting. The aggregator module acts as a feedback mechanism, reducing computational costs and tuning for improved performance.

The subsequent sections of this study are structured as follows: Section 2 provides a comprehensive overview of the significance and related works pertaining to human detection in agricultural farms. Section 3 outlines the proposed method, while Section 4 presents the experimental approach, including an inferential study of YOLO-V3 and YOLO-V4, detailed results, and a summary of the experimental setup. Finally, Section 5 concludes the study.

2 Related Works

Sumit et al. [25] present a comparative analysis of YOLO-V3 and Mask R-CNN on the detection of tiny human images, among other types of images. In that study, simulation results indicated that YOLO-V3 exhibited superior accuracy and computational efficiency in detecting the human figures within the images compared to Mask R-CNN. However, the performance metrics adopted in the study were limited to only two, and the scalability appears to be questionable. While this comparison highlights YOLO-V3's efficiency, the proposed SB-YOLO-V8 further enhances this efficiency by incorporating SMOTE and binary ALO (BALO), addressing scalability concerns that were not fully explored in the comparative study.

Ivašić-Kos et al. [26] propose a technique to detect individuals in videos recorded during winter under various weather conditions, predominantly at night, and at varying distances from the camera, ranging from 30 to 215 m. The individuals in the thermal videos were observed to engage in activities such as walking, running, or walking hunched over, attempting to remain inconspicuous. The study highlights significant improvements in mean precision achievable with YOLO-based algorithms trained on this thermal data set. However, it also raises a concern about the high risk of overfitting due to the specific characteristics of the data set and data preprocessing techniques utilized. Our SB-YOLO-V8 model builds on this by using advanced data augmentation techniques to handle variable environmental conditions more effectively, which was identified as a limitation in their approach.

Jung et al. [27] propose a YOLO-V3-based people identification system that runs on the NVIDIA Jetson Xavier and uses a four-channel camera placed on a tractor. The YOLO-V3 model was trained using the Common Objects in Context (COCO) data set; among the numerous object classes supplied, only humans were extracted for the network to learn. The results of the experiment showed remarkable detection abilities of the YOLO-V3. However, the study raised concerns regarding the extensive involvement of human experts in feature selection and data processing, which could increase error margins if the data set size were to increase or if larger data sets were utilized. Compared to this approach, SB-YOLO-V8 reduces the need for extensive human involvement in feature selection and data processing through its automated BALO optimization, enhancing scalability and reducing potential error margins.

He et al. [28] propose a real-time YOLO-based human detection algorithm, known as Tiny Fast YOLO, and classify the data with k-means++. They claim that the technique was very effective in detecting objects in embedded systems. However, the authors admitted that the proposed technique is vulnerable to minor targets and increases end-to-end training, thereby leading to a significant deterioration in real-time scenarios. Another drawback of the technique is that it does not cater to large data sets and scalability. In contrast, SB-YOLO-V8 addresses these vulnerabilities by improving detection robustness across different object sizes and types through enhanced feature extraction methods.

Ji et al. [29] propose human abnormal behavior detection by utilizing a YOLO network model. In this study, the human behavior was classified based on the conditions of the monitoring settings and then the data were trained via the YOLO network. Although the proposed scheme outperformed many traditional schemes, it also had challenges with data preprocessing and overfitting. SB-YOLO-V8 mitigates these challenges by incorporating SMOTE to balance the data set effectively, leading to more generalized and robust models.

Ahmad et al. [30] modified the YOLO-based CNN algorithm for human detection. The authors produced a modification in relation to the error function of the YOLO network. The enhanced algorithm replaced the border style in the proportion concept. Compared to the loss function of the original YOLO algorithm, the improved model is more adaptable and rational regarding network error optimization. SB-YOLO-V8 goes beyond this by not only adjusting error functions but also by optimizing the feature selection process to enhance detection accuracy significantly.

Van Tuan et al. [31] also propose a novel real-time YOLO-based model for human detection in security cameras using fisheye lenses. Instead of employing the original 3D color input channels for the ConvNets-based YOLO model, 2D input channels consisting of gray-level image channels and foreground-background context information were used to increase the precision of the original YOLO model. Through experimental demonstration, the authors showed that the proposed method achieves significant improvements in accuracy and robustness to background scenes compared to the original YOLO-based human detection algorithm. However, the technique used in preprocessing the data set is likely to lose some features during the conversion of the data set into a gray-level image channel. SB-YOLO-V8 extends this idea by integrating comprehensive data preprocessing that preserves critical features during conversion, which their approach risks losing.

More recently, the YOLO algorithm has been used in the agriculture sector. For instance, Wang et al. [32] proposed a computerized imaging method for determining mango harvest yield using DL. In this study, the Kalman filter and Hungarian algorithms are integrated with the YOLO-based model for on-tree mango fruit detection. Though the study is promising, there are various limitations with the Kalman filter with regard to the optimization.

Finally, Tian et al. [33] improved the YOLO-V3 algorithm to detect tomato diseases and insect infestation. They improved the YOLO-V3 model by employing multiscale training, image pyramid, and dimension clustering to identify multiscale features. This study shows significant improvements in both the detection accuracy and detection time. However, despite its extensive usage, commercialized applications of the YOLO-based algorithms for the real-time detection of humans in agricultural farms are scarce.

Thus, the proposed study gives an inferential analysis of the YOLO model for real-time human detection on a citrus farm. The analysis is based on the two most influential YOLO-based CNN algorithms, namely, YOLO-V3 and YOLO-V4. The study enhances the performance of the YOLO-based CNN algorithm with SMOTE and BALO algorithms. Then, we compare it with other algorithms in the same farming environment to determine its efficiency. While existing studies have laid a solid foundation in the use of YOLO algorithms for various detection tasks, the proposed SB-YOLO-V8 model introduces significant improvements in terms of scalability, robustness, and accuracy by integrating SMOTE and BALO. This makes it particularly effective in real-time human detection across various settings, not limited to agricultural environments but also extendable to urban and industrial scenarios.

3 Proposed Method

3.1 Class Imbalance in High-Dimensional Data

Class imbalance occurs when the sample sizes for different classes in a data set are unequal and unevenly distributed [34, 35]. Dealing with class imbalance is crucial in classification tasks, as it can lead to biased models with poor performance in the minority class. Data resampling techniques are commonly employed to address class imbalance in data sets. Among them, balanced resampling strategies such as random oversampling and undersampling are often utilized. Random oversampling involves replicating instances from the minority class randomly to increase its representation. The introduction of SMOTE in the SB-YOLO-V8 framework addresses class imbalances by synthetically enhancing the minority class representation [36]. This approach not only improves the classifier's accuracy but also boosts its ability to scale across diverse data sets. SMOTE generates synthetic samples by considering the k-nearest neighbors of each minority class instance and creates synthetic features along the line segments connecting the minority class instance and its neighbors. The amount of oversampling required can vary, and SMOTE allows for selecting the k closest neighbors based on this oversampling requirement. Interpolating the feature values of the minority class instance and its chosen neighbors produces synthetic samples. SMOTE can oversample the minority class from 100% to 500% of the original size [36].

Below are the steps in generating synthetic samples using SMOTE, where each synthetic sample is created by interpolating the feature values of the minority instance and its neighbors along the line segments connecting them. This procedure allows for the creation of synthetic samples that enhance the representation of the minority class in the data set.

- For each minority class instance $x_i$:

  - Find the $k$ nearest neighbors of $x_i$, denoted as $x_{i1}, x_{i2}, \ldots, x_{ik}$.

  - For each feature dimension $j$:

    - Calculate the difference or distance between the feature value of $x_i$ and its neighbor $x_{in}$ for dimension $j$, denoted as $d_j = x_{in,j} - x_{i,j}$.

    - Randomly select a difference value between 0 and 1, denoted as $\delta$.

- For each synthetic sample $s$ generated from $x_i$ and its neighbors:

  - Create the synthetic feature vector $s$.

  - For each feature dimension $j$:

    - Compute the synthetic feature value $s_j = x_{i,j} + \delta \cdot d_j$, where $\delta$ is the randomly selected difference value.

- Assign the class label of $x_i$ to all synthetic samples generated from $x_i$.
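The interpolation steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function name `smote_sketch` and its parameters are hypothetical, neighbors are found with plain Euclidean distance, and a single random value is drawn per synthetic sample so that each sample lies on the line segment between an instance and one of its neighbors.

```python
import numpy as np

def smote_sketch(X_min, n_synthetic, k=5, seed=0):
    """Generate n_synthetic samples from minority-class rows X_min (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)  # an instance cannot have more neighbors than other points

    # Pairwise Euclidean distances between minority instances.
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # exclude each point from its own neighbor list
    neighbors = np.argsort(dists, axis=1)[:, :k]  # indices of the k nearest neighbors

    synthetic = np.empty((n_synthetic, X_min.shape[1]))
    for s in range(n_synthetic):
        i = rng.integers(n)                  # pick a minority instance x_i
        nn = neighbors[i, rng.integers(k)]   # pick one of its k nearest neighbors
        delta = rng.random()                 # random value in [0, 1)
        diff = X_min[nn] - X_min[i]          # per-dimension difference
        synthetic[s] = X_min[i] + delta * diff
    return synthetic
```

All synthetic rows inherit the minority class label. The sketch needs at least two minority instances; in practice a maintained implementation such as the one in the imbalanced-learn package would normally be preferred.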

3.2 Feature Selection Optimization Model

A binary Ant Lion Optimizer (BALO) is employed to refine the feature selection process, significantly reducing the model's complexity while maintaining high detection accuracy. This optimization is crucial for scalable real-time applications as it ensures that SB-YOLO-V8 remains computationally efficient even when deployed in environments with vast amounts of data. In creating the feature selection optimization model, we can determine a subset of features that adequately characterize the entities in the data set, thereby improving classification accuracy while reducing the computational effort and complexity associated with many features. The feature selection optimization model can be formulated as follows. Let:

- $X$ be the set of all features in the data set.

- $X_s$ be the selected subgroup of features.

- $C$ be the set of all classes or labels.

- $N$ be the total number of instances or objects in the data set.

- $N_i$ be the number of instances belonging to class $C_i$.

- $N_{i,X_s}$ be the number of instances belonging to class $C_i$ and having features in the selected subgroup $X_s$.

In the optimization model, the objective function aims to maximize the ratio of correctly classified instances in the selected subgroup $X_s$ to the total number of instances in the data set, divided by the number of selected features. This captures the tradeoff between classification accuracy and the size of the selected feature subset. The constraint ensures that the size of the selected subgroup $X_s$ is not larger than the size of the set of all features $X$. This constraint reflects the limitation of the available features and prevents overfitting.
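One way to write the objective and constraint described above is sketched below. The notation is an assumption made for illustration: $N_{i,X_s}$ is read as the number of instances of class $C_i$ that are correctly classified using only the features in $X_s$, and the lower bound $|X_s| \ge 1$ is added here only to keep the ratio well defined.

```latex
\max_{X_s \subseteq X} \;\; \frac{1}{|X_s|} \cdot \frac{\sum_{C_i \in C} N_{i,X_s}}{N}
\qquad \text{subject to} \qquad 1 \le |X_s| \le |X|
```

Under this reading, a subset that keeps the same share of correctly classified instances with fewer features scores higher, which is the tradeoff the text describes.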

The optimum subset in the multidimensional search space must be found efficiently using a feature selection model. ALO is a recently developed method for tackling complex optimization problems that emulates the hunting mechanism of antlions and the ants they prey on. ALO has been applied to many real-world challenges and has shown promising results in tackling problems in a multidimensional search space. Because of its inherent exploration and exploitation capabilities, this approach is used to solve the defined feature selection model. The decision variables indicate whether a particular feature is part of the subset. To pick the best features, a feature selection model therefore requires binary representations of the variables.

3.3 Feature Selection Based on ALO in Balanced Class Data

- Step 1: Initialization.

Search agents, representing ants and antlions, are randomly generated from the available features. Each feature is represented by a binary variable, where “1” indicates a relevant feature and “0” denotes an unimportant one.

- Step 2: Fitness value evaluation.

A fitness value is determined for each search agent based on the objective function. The antlion with the best fitness in the first generation serves as the reference.

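- Step 3: Trapping in the pit of the antlions.

The pits created by antlions affect the movement of ants, steering them toward previously unexplored areas. The following equations represent the impact of pits on ant movement:

$$c_k^t = \mathrm{Antlion}_j^t + C^t, \qquad d_k^t = \mathrm{Antlion}_j^t + D^t \tag{4}$$

Here, $c_k^t$ represents the smallest value of variable $k$ in iteration $t$, $d_k^t$ represents the highest value of variable $k$ in iteration $t$, $C^t$ is the smallest value of all variables in iteration $t$, $D^t$ is the highest value of all variables in iteration $t$, and $\mathrm{Antlion}_j^t$ is the position of the selected antlion $j$ in iteration $t$.

- Step 4: Ant sliding in the direction of the antlion.

Traps are formed based on the strength of antlions, and ants are required to randomly change their location during trap creation. Ants' random movements are simulated within bounds that change based on the current iteration level:

$$C^t = \frac{C^t}{I}, \qquad D^t = \frac{D^t}{I} \tag{5}$$

where $I$ is a ratio that grows with the current iteration $t$.

- Step 5: Normalization of the ants' random walks.

Ants' movement in search of food is erratic. To replicate this random movement, the positional change is represented as:

$$X(t) = \left[0,\ \mathrm{cums}(2r(t_1) - 1),\ \mathrm{cums}(2r(t_2) - 1),\ \ldots,\ \mathrm{cums}(2r(t_n) - 1)\right] \tag{6}$$

Here, cums computes the cumulative sum, and $r(t)$ is specified as:

$$r(t) = \begin{cases} 1, & \text{if } \mathrm{rand} > 0.5 \\ 0, & \text{otherwise} \end{cases} \tag{7}$$

Normalization is applied based on Equation (8) to confine the random movement within the search space:

$$X_{(i,k)}^t = \frac{\left(X_{(i,k)}^t - a_k\right)\left(d_k^t - c_k^t\right)}{b_k - a_k} + c_k^t \tag{8}$$

Here, $X_{(i,k)}^t$ represents the location of the $k$th variable of ant $i$ in the $t$th generation, and $a_k$ and $b_k$ are the minimum and maximum of the random walk of the $k$th variable.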

- Step 6: Capturing ants for food and rebuilding the pit.

Each ant's current fitness is evaluated with the objective function at its current location. If an ant has superior fitness, its location replaces the antlion's location; otherwise, the antlion's original position is retained until the next round:

$$\mathrm{Antlion}_j^t = \mathrm{Ant}_i^t \quad \text{if } f\left(\mathrm{Ant}_i^t\right) > f\left(\mathrm{Antlion}_j^t\right) \tag{9}$$

- Step 7: Elitism.

The elite antlion, representing the fittest individual, is preserved. A superior antlion may disrupt the ant's position, causing the ant's location in the search space to be updated. Ants perform random movements based on the selected and elite antlions. Crossover and mutation operators are employed as given in Equations (10) and (11), where the probability of crossover (CRP) lies between 0 and 1. Mutation, which involves random modifications, is defined in Equation (12), where $\mathrm{Ant}_{(i,k)}^t$ represents the ant's position after the mutation operation and MP represents the mutation probability, a value from 0 to 1.

- Step 8: Convergence.

The search process is halted if an appropriate solution is found, no further improvement in fitness is possible, or a certain number of random movements have been performed. The elite antlion represents the best solution found.

These steps outline the feature selection process in balanced class data. It mimics the behavior of ants and antlions in an optimization framework to identify an optimal subset of features for classification tasks. The algorithm begins by initializing search agents (ants and antlions) and evaluating their fitness values based on a specific objective function. Pits created by antlions guide the movement of ants, steering them toward unexplored areas. Ants adjust their positions randomly in response to the pit's creation, and a superior ant's position replaces the best antlion's position. Elitism is employed to maintain the elite antlion, representing the best solution found. The algorithm incorporates crossover and mutation operations to introduce diversity and explore new regions of the search space. Convergence criteria are checked to determine when to terminate the algorithm.
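The random walk and its rescaling into the trap bounds (Step 5) can be illustrated with a short NumPy sketch. It follows the commonly published form of the ALO random walk and min-max normalization and is not guaranteed to match every detail of the equations used in this study; the function names `random_walk` and `normalize_walk` are hypothetical.

```python
import numpy as np

def random_walk(n_steps, rng):
    """Cumulative sum of +1/-1 steps, starting at 0."""
    r = (rng.random(n_steps) > 0.5).astype(int)   # r(t) is 1 if rand > 0.5, else 0
    return np.concatenate(([0], np.cumsum(2 * r - 1)))

def normalize_walk(walk, c, d):
    """Min-max rescale a walk so that it stays inside the bounds [c, d]."""
    a, b = walk.min(), walk.max()
    if a == b:  # degenerate walk: place it in the middle of the bounds
        return np.full(walk.shape, (c + d) / 2.0)
    return (walk - a) * (d - c) / (b - a) + c
```

For example, `normalize_walk(random_walk(50, np.random.default_rng(0)), -1.0, 1.0)` returns 51 positions confined to the interval from -1 to 1, which plays the role of the pit boundaries around a selected antlion.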

Overall, Algorithm 1 effectively balances exploration and exploitation to select a subset of features that optimize classification performance. The nature of the problem, the decision regarding the objective function, and the parameter settings all impact the algorithm's performance and effectiveness. Fine-tuning parameters like the number of ants and antlions, convergence criteria, and mutation probabilities significantly impact the algorithm's performance. While the algorithm leverages the natural behavior of ants and antlions, its success relies on careful parameter tuning and problem-specific considerations.

ALGORITHM 1. Feature selection based on ALO in balanced class data.

Require: Data set with balanced classes.

- Initialization

- Randomly generate ants and antlions as search agents

- Assign binary variables to represent each characteristic.

- for each ant i do

- Randomly initialize the binary vector for ant i

- end for

- Evaluate fitness values for the searchers.

- Select the antlion with the best health as the reference.

- while not converged do

- Create pits to trap ants using antlions' positions.

- Update ant positions based on antlions' positions and random movement.

- Normalize the ant positions to confine the movement within the search space.

- for each ant i do

- Generate the normalized position based on Equation (5)

- end for

- Capture ants for food and reenact the pits.

- for each ant i do

- if $f(\mathrm{Ant}_i) > f(\mathrm{Antlion}_j)$ then

- Set $\mathrm{Antlion}_j = \mathrm{Ant}_i$

- end if

- end for

- for each ant i do

- Perform crossover operation based on Equation (10)

- Perform mutation operation based on Equation (12)

- end for

- Check convergence criteria.

- end while

- return Optimal feature subset based on elite antlion.

By employing the ALO method, this feature selection algorithm provides a framework for addressing feature selection challenges in balanced class data settings.
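To make the control flow of Algorithm 1 concrete, the following is a deliberately simplified sketch of a binary, antlion-style feature selection loop. It is an illustration under stated assumptions rather than the authors' implementation: the random walks are replaced by uniform crossover between a roulette-selected antlion and the elite, followed by bit-flip mutation, and the function name, parameters, and the user-supplied `fitness` callable are all hypothetical.

```python
import numpy as np

def binary_alo_sketch(fitness, n_features, n_agents=10, n_iter=30,
                      crossover_p=0.5, mutation_p=0.05, seed=0):
    """Return (best_mask, best_score) for a fitness function to be maximized."""
    rng = np.random.default_rng(seed)
    # Each antlion is a binary mask: 1 = feature selected, 0 = feature dropped.
    antlions = rng.integers(0, 2, size=(n_agents, n_features))
    scores = np.array([fitness(m) for m in antlions], dtype=float)
    elite_idx = int(np.argmax(scores))
    elite, elite_score = antlions[elite_idx].copy(), scores[elite_idx]

    for _ in range(n_iter):
        for _ in range(n_agents):
            # Roulette-wheel choice of an antlion (weights shifted to be positive).
            w = scores - scores.min() + 1e-9
            j = rng.choice(n_agents, p=w / w.sum())
            # Uniform crossover between the chosen antlion and the elite.
            take_elite = rng.random(n_features) < crossover_p
            ant = np.where(take_elite, elite, antlions[j])
            # Bit-flip mutation with probability mutation_p per feature.
            flip = rng.random(n_features) < mutation_p
            ant = np.where(flip, 1 - ant, ant)
            s = fitness(ant)
            # The antlion takes the ant's position if the ant is fitter.
            if s > scores[j]:
                antlions[j], scores[j] = ant, s
            # Elitism: keep the best mask seen so far.
            if s > elite_score:
                elite, elite_score = ant.copy(), s
    return elite, elite_score
```

In a real experiment, `fitness` would train or evaluate a classifier on the features selected by the mask; here it can be any function that maps a binary mask to a score, and the loop stops after a fixed number of iterations instead of testing a convergence criterion.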

3.4 Implementation and Scalability of the BALO in Feature Selection

SB-YOLO-V8 is designed with scalability in mind, utilizing architectures and algorithms that support efficient computation and adaptability to varying input sizes and types. This model's robustness and flexibility make it particularly suitable for real-time applications, where varying conditions and high volumes of data require efficient processing. By integrating advanced data preprocessing and feature extraction methodologies, the proposed model ensures that the scalability does not compromise detection performance.

In the feature selection process, computational resources and image analysis difficulties are further minimized. In this stage, images are divided into individual objects, and features are retrieved using the suggested technique. The classifier is trained using the selected features. The SMOTE technique for balancing minority classes is used to randomly produce instances of the underrepresented, low-sample classes. When a candidate subset treats a particular feature as relevant, the decision variable in that dimension is set accordingly, and the feature selection model is used to assess the suitability of each candidate subset.

Moreover, the modular nature of SB-YOLO-V8 allows for easy integration and scaling across different hardware platforms, from embedded systems in autonomous vehicles to high-throughput servers in cloud-based analytics. This adaptability is supported by the model's ability to maintain high performance while dynamically adjusting to the computational resources available, thereby maximizing efficiency and throughput. These improvements to the practical implementation and scalability of SB-YOLO-V8 mark a significant advance over previous models, making it a versatile and powerful tool for a wide range of real-time detection applications.

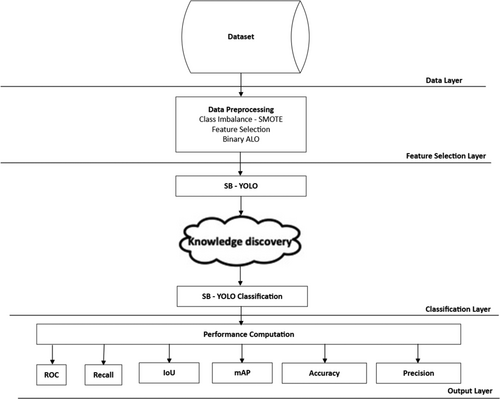

Figure 1 shows the schematic view of the proposed model from the data layer to the output layer. The data layer gathers the data set for the experiment. The study employs COCO and PASCAL VOC 2007 public data sets. The feature selection layer deals with the data preprocessing, class imbalances, and selection of required features for the experiment. The proposed model is then applied to the selected features for the classification.

Algorithm 2 takes a data set $W$ with $q$ features as input. It applies SMOTE to augment the data set and removes any augmented data points or outliers. Then, for each data point $E_i$ in the data set, it computes the normalized version $E_i^{\text{norm}}$ by dividing the difference between $E_i$ and $E_i^{\min}$ by the range $E_i^{\max} - E_i^{\min}$. Finally, the algorithm returns the data set $R'$ with the normalized features $E_i^{\text{norm}}$.

ALGORITHM 2. Data preprocessing algorithm.

Require: Data set $W$ with $q$ features.

- Apply SMOTE to data set $W$;

- Remove all augmented data and outliers from $W$;

- for $i = 1$ to $n$ do

- $E_i^{\text{norm}} = \dfrac{E_i - E_i^{\min}}{E_i^{\max} - E_i^{\min}}$;

- end for

- return $R'$.
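The normalization step of Algorithm 2 corresponds to min-max scaling. The sketch below assumes that the minimum and maximum are taken per feature (column-wise), which the text does not state explicitly; the function name `min_max_normalize` is hypothetical.

```python
import numpy as np

def min_max_normalize(W):
    """Scale each feature (column) of W into the range [0, 1]."""
    W = np.asarray(W, dtype=float)
    e_min = W.min(axis=0)
    e_max = W.max(axis=0)
    span = e_max - e_min
    span[span == 0] = 1.0  # constant features map to 0 instead of dividing by zero
    return (W - e_min) / span
```

For instance, `min_max_normalize([[1, 10], [2, 20], [3, 30]])` maps both columns onto 0, 0.5, and 1.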

In Algorithm 3, data set Y is taken as input, which consists of samples Bi from the feature domain. It performs data preprocessing on Y to obtain the pre-processed data set Q′. Then, SMOTE sampling is applied to Q′ to generate synthetic samples S with a size of , where |N| represents the total number of samples. The SMOTE algorithm is optimized using the BALO to obtain the optimized algorithm SB.

Next, SB is trained with the synthetic samples S and hyperparameters to obtain the SB-YOLO-V8 model. SB-YOLO-V8 is then applied to the data set Q′′, which is a combination of Q′ and the informative features selected by SB-YOLO-V8. Finally, the algorithm takes the test data and inputs it into SB-YOLO-V8 to generate the predicted result R.

ALGORITHM 3. SB-YOLO-V8 proposed algorithm.

Require: Data set Y

- Perform data pre-processing on data set Y to obtain Q′

- Apply SMOTE sampling on Q′ to generate synthetic samples S such that |

- Optimize the SMOTE algorithm with Binary Ant Lion Optimization (BALO) to obtain the optimized SMOTE algorithm SB.

- Train SB with samples S and hyperparameters to obtain SB-YOLO-V8

- Apply SB-YOLO-V8 on data set Q′′ by considering informative features.

- Input test data into SB-YOLO-V8 to generate the predicted result R

- return R.

4 Experimental Result Analysis and Discussion

4.1 Data Sets Description

The primary data set employed in the study was the National Robotics Engineering Centre (NREC) human detection data set, which includes several images and videos recorded by autonomous equipment on a citrus farm. This data set features human workers engaging in various activities, making it ideal for real-time human detection tasks. The data set comprises a diverse range of images captured under different environmental conditions, such as varying lighting and weather, ensuring robust model training.

Additionally, we utilized two well-known public data sets to fine-tune our model and validate its generalization capabilities: COCO and PASCAL VOC 2007. These data sets include annotated images across multiple categories, which are beneficial for cross-validation of the detection algorithms and to ensure the model's effectiveness across different contexts beyond the primary agricultural setting.

Each data set was preprocessed to align with our model's input requirements, which involved resizing images, augmenting data for more robust training, and applying annotations for object detection. SMOTE was also used to address class imbalances specifically for the minority classes within these data sets.

4.2 Experimental Setup

The experimental setup is partitioned into two phases. In Phase I, the SB-YOLO-V8, YOLO-V3, and YOLO-V4 models were trained for 1000 epochs, and a comparative analysis of the frames per second (FPS) was performed. In Phase II, all three models were trained on a maximum batch of 1500 and 6000 epochs. To set up the YOLO-based detectors, the Darknet framework was first cloned from AlexeyAB's GitHub repository [37]. Darknet is a neural network framework in which YOLO-V3 and YOLO-V4 use convolutional layers to extract features and dense layers to make predictions. However, with the proposed scheme SB-YOLO-V8, the feature extraction was performed by utilizing the BALO algorithm to acquire the needed features and qualities for classification.

All experiments were performed on Google's Colab cloud VM. The platform has an N1-highmem-2 instance with 2 vCPUs @ 2.2 GHz, 13 GB RAM, 100 GB free space, an idle cutoff of 90 min, and a maximum of 12 h per session (one session was used for the experimentation).

The installation of optimal pretrained weights for the convolution layers of the network increased the speed and accuracy of all the models since they did not need to train from scratch. Since the available images and videos may not be clear because of the camera's long viewing distance, movement, or incorrect focus, data augmentation strategies were used to improve robustness to such conditions. This was performed by randomly rotating, distorting, and tweaking a number of images. For moving objects such as humans, planned routes are likely to be distorted because of ambiguities in their positions. Therefore, to avoid these constraints, autonomous equipment was utilized to detect humans in real time and determine their actual locations. Modifications such as varying the luminosity of some original images helped the models become accustomed to different real-time scenarios, such as changes in sunlight exposure.
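The kind of augmentation described above (random rotation and luminosity changes) can be sketched with NumPy alone. This is an illustrative sketch, not the augmentation pipeline used in the experiments: the function name `augment`, the brightness range, and the restriction to 90-degree rotations and horizontal flips are assumptions chosen to keep the example dependency-free.

```python
import numpy as np

def augment(image, rng, max_brightness_shift=0.3):
    """Randomly change brightness, rotate, and flip an H x W x C uint8 image."""
    out = image.astype(np.float32)
    # Random brightness scaling to mimic changes in sunlight exposure.
    factor = 1.0 + rng.uniform(-max_brightness_shift, max_brightness_shift)
    out = np.clip(out * factor, 0, 255)
    # Random rotation by a multiple of 90 degrees in the image plane.
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Random horizontal flip.
    if rng.random() < 0.5:
        out = out[:, ::-1, :]
    return np.ascontiguousarray(out).astype(np.uint8)
```

For detection data, the bounding box annotations must be transformed with the same rotation and flip as the image, which this sketch leaves out.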

The task at hand is object detection, where the goal is to identify and locate points of interest within an image. To prepare the data set, we first numbered all the samples to keep track of them individually. We then labeled the positive samples and finally worked to avoid overfitting by striking a balance between model complexity and training data quality. In addition to the above preprocessing, the proposed SB-YOLO-V8 makes use of SMOTE to resolve class imbalances in the data set.

4.3 Results Analysis and Discussion

After training in the two phases, we tested the proposed SB-YOLO-V8 on real-time human images from the citrus farm; Figure 2 shows the performance for 1000 iterations. The proposed SB-YOLO-V8 performed well in all situations.

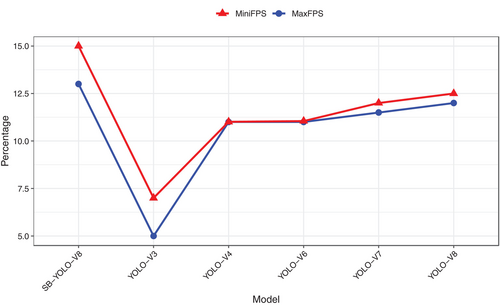

The results in Figure 2 indicate that SB-YOLO-V8 outperformed the earlier versions of YOLO in both speed and accuracy. Notably, applying SMOTE in the preprocessing stage allowed for better generalization across diverse data samples, effectively addressing the class imbalances that typically hinder performance in object detection tasks. The comparative analysis revealed that the enhanced feature extraction of the BALO optimization algorithm contributed substantially to the model's performance. SB-YOLO-V8 achieved an FPS of 13.63 during Phase I, which is higher than that of the earlier versions of YOLO. The method not only improves detection accuracy but also increases computational speed, which is crucial for real-time applications in variable environments such as agricultural settings.

The final average FPS of the proposed SB-YOLO-V8 is 14.8, whereas YOLO-V4 and YOLO-V3 obtained average rates of 11 FPS and 6 FPS, respectively, as observed in Figure 3.
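
For readers who want to reproduce an average-FPS figure, a minimal sketch follows. It assumes that average FPS is the number of processed frames divided by the total processing time; the measurement procedure is not spelled out in the text, and the helper names and per-frame durations below are hypothetical.

```python
import time

def average_fps(frame_durations):
    """Average FPS = number of frames / total processing time in seconds."""
    total = sum(frame_durations)
    if total <= 0:
        raise ValueError("total processing time must be positive")
    return len(frame_durations) / total

def time_detector(detect, frames):
    """Return the wall-clock duration of calling `detect` on each frame."""
    durations = []
    for frame in frames:
        start = time.perf_counter()
        detect(frame)
        durations.append(time.perf_counter() - start)
    return durations

# Hypothetical per-frame durations in seconds (roughly 13.6 FPS).
fps = average_fps([0.07, 0.08, 0.07])
```

Averaging over total time, rather than averaging the per-frame rates, keeps a few unusually fast frames from inflating the result.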

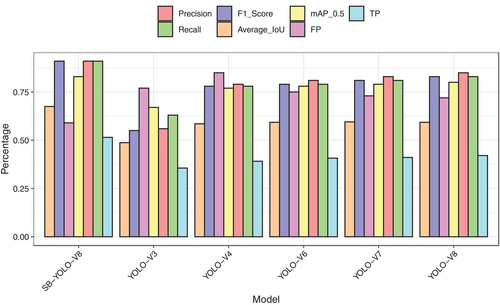

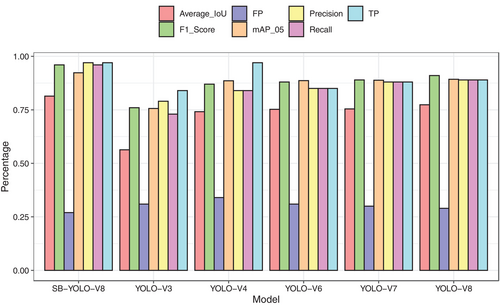

Thus, at the same number of epochs, SB-YOLO-V8 processes the videos at a faster rate than YOLO-V8, YOLO-V7, YOLO-V6, YOLO-V4, and YOLO-V3. In Phase II, the three YOLO-based models were trained for 1500 and 6000 epochs. SB-YOLO-V8 achieves the highest precision, recall, F1-score, average Intersection over Union (IoU), and mean average precision (mAP) (@0.5) among the models. It achieves a precision of 0.91 and a recall of 0.91, indicating a good balance between making accurate detections and minimizing false negatives. YOLO-V8, YOLO-V7, and YOLO-V6 exhibit gradually decreasing performance compared to SB-YOLO-V8. With the exception of YOLO-V3, which records the lowest precision, recall, and F1-score, all the models still maintain relatively high mAP scores, indicating their ability to localize objects accurately. Even so, the proposed SB-YOLO-V8 performs better than all of them. The average IoU values increase from YOLO-V3 to SB-YOLO-V8, showing improved object localization accuracy. The number of false positives (FPs) generally increases from YOLO-V3 to SB-YOLO-V8, while the number of true positives (TPs) decreases. This suggests that higher-precision models sacrifice some true positives to reduce FPs. As observed, SB-YOLO-V8 maintains high precision and recall rates, indicating robust performance across longer training periods. Its ability to consistently process videos at a faster rate than its predecessors highlights its advanced architecture and the tailored preprocessing techniques it uses to handle real-time detection in diverse lighting and background conditions.
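
As a reminder of how the reported metrics relate to one another, the sketch below computes precision, recall, and F1-score from detection counts using the standard definitions. The counts are hypothetical and are not taken from the experiments.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from true-positive, false-positive,
    and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical counts: 80 correct detections, 20 false alarms, 10 misses.
precision, recall, f1 = detection_metrics(tp=80, fp=20, fn=10)
```

Precision penalizes false alarms, recall penalizes missed people, and the F1-score is their harmonic mean, so it is high only when both are high.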

Thus, based on the evaluation metrics, SB-YOLO-V8 demonstrates better object detection accuracy and localization, making it the most suitable model for human detection in a citrus farm. To further confirm this outcome, we increased the training to 6000 epochs; the results in Figure 4 show that SB-YOLO-V8 again achieves the highest precision, recall, F1-score, average IoU, and mAP (@0.5). It achieves a precision of 0.91 and a recall of 0.91, indicating a good balance between making accurate detections and minimizing false negatives. YOLO-V8, YOLO-V7, and YOLO-V6 exhibit gradually decreasing precision, recall, and F1-score compared to SB-YOLO-V8. YOLO-V4 and YOLO-V3 show lower precision, recall, and F1-score than the other models. YOLO-V4 has a relatively higher TP value, suggesting a better ability to detect true positives, whereas YOLO-V3 has the lowest precision, recall, and F1-score among the listed models. Average IoU values increase from YOLO-V3 to SB-YOLO-V8, indicating improved object localization accuracy. SB-YOLO-V8 achieves the highest average IoU of 0.8142, implying more precise bounding box predictions. The number of FPs varies across the models, with YOLO-V4 having the highest value of 0.34. SB-YOLO-V8 has the lowest FP value of 0.27, indicating better control over false alarms. Consequently, after 6000 epochs, SB-YOLO-V8 gives superior performance on all five metrics, demonstrating its ability to detect and localize objects accurately with fewer FPs.
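
The IoU figures above measure the overlap between a predicted and a ground-truth bounding box. The following sketch shows the standard computation for axis-aligned boxes; the `(x_min, y_min, x_max, y_max)` box format and the example coordinates are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as
    (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred_box, truth_box, threshold=0.5):
    """At mAP@0.5, a detection counts as correct when IoU >= 0.5."""
    return iou(pred_box, truth_box) >= threshold

# Hypothetical prediction and ground truth that overlap only partially.
overlap = iou((0, 0, 10, 10), (5, 5, 15, 15))
```

The 0.5 threshold in `is_true_positive` is what the "@0.5" in mAP (@0.5) refers to: a prediction is matched to a ground-truth box only if their IoU reaches that value.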

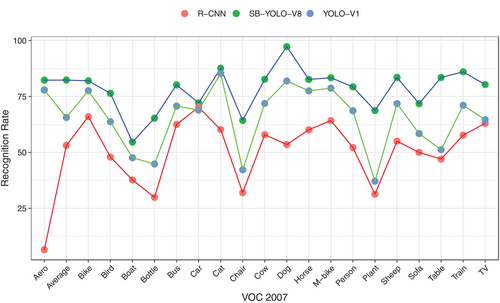

The performance of the proposed model is also compared with the popular YOLO-V1 and R-CNN, as observed in Figure 5. The findings reinforce that SB-YOLO-V8 consistently achieves the highest recognition rates, followed by YOLO-V1, while R-CNN generally performs less accurately. The scatter points on the lines represent the actual recognition rates, allowing for a visual comparison across object categories. The plot highlights the performance differences between the models and illustrates the trends in recognition rates. Together, the plot and Table 1 provide a comprehensive view of the models' performance on the VOC 2007 data set, revealing the variations in recognition rates and the relative strengths of each model.

| VOC 2007 | R-CNN | YOLO-V1 | SB-YOLO-V8 |
|---|---|---|---|
| Aero | 6.5 | 77.9 | 82.3 |
| Bike | 66 | 77.6 | 82 |
| Bird | 47.9 | 63.7 | 76.4 |
| Boat | 37.7 | 47.6 | 54.6 |
| Bottle | 29.9 | 44.8 | 65.3 |
| Bus | 62.5 | 70.7 | 80.2 |
| Car | 70.2 | 68.9 | 72.1 |
| Cat | 60.2 | 85.3 | 87.6 |
| Chair | 32 | 42.2 | 64.3 |
| Cow | 57.9 | 71.9 | 82.7 |
| Table | 47 | 51.2 | 83.5 |
| Dog | 53.5 | 81.9 | 97.3 |
| Horse | 60.1 | 77.5 | 82.6 |
| M-bike | 64.2 | 78.7 | 83.4 |
| Person | 52.2 | 68.6 | 79.3 |
| Plant | 31.3 | 37.1 | 68.7 |
| Sheep | 55 | 71.8 | 83.5 |
| Sofa | 50 | 58.4 | 71.8 |
| Train | 57.7 | 71 | 86 |
| TV | 63 | 64.6 | 80.3 |
| Average rate of recognition | 53.1 | 65.6 | 82.33 |

The test results for the 20 object categories in Table 1 show that the average detection accuracy of the proposed SB-YOLO-V8, YOLO-V1, and R-CNN is 82.33%, 65.6%, and 53.1%, respectively, indicating that the SB-YOLO-V8 model performs significantly better. The per-category results in Table 1 illustrate SB-YOLO-V8's higher detection accuracy across the object categories compared to YOLO-V1 and R-CNN.

4.4 Comparison of the Computational Efficiency of SB-YOLO-V8 With Existing Schemes

The computational efficiency of the proposed SB-YOLO-V8 is compared with that of state-of-the-art schemes, and the results are presented in Table 2.

| Model | Epochs | Data set | Average FPS | Precision | Recall | F1-score | Average IoU | mAP (@0.5) |
|---|---|---|---|---|---|---|---|---|
| SB-YOLO-V8 | 1000 | NREC Citrus Farm, COCO, PASCAL VOC 2007 | 13.63 | 0.91 | 0.91 | 0.90 | 0.85 | 0.88 |
| YOLO-V4 | 1000 | COCO, PASCAL VOC 2007 | 11 | 0.87 | 0.87 | 0.86 | 0.82 | 0.84 |
| YOLO-V3 | 1000 | COCO, PASCAL VOC 2007 | 6 | 0.84 | 0.84 | 0.83 | 0.80 | 0.81 |
| SB-YOLO-V8 | 1500 | NREC Citrus Farm, COCO, PASCAL VOC 2007 | 14.8 | 0.91 | 0.91 | 0.90 | 0.85 | 0.88 |
| YOLO-V4 | 1500 | COCO, PASCAL VOC 2007 | 12 | 0.88 | 0.88 | 0.87 | 0.83 | 0.85 |
| YOLO-V3 | 1500 | COCO, PASCAL VOC 2007 | 8 | 0.85 | 0.85 | 0.84 | 0.81 | 0.82 |
| SB-YOLO-V8 | 6000 | NREC Citrus Farm, COCO, PASCAL VOC 2007 | 14.8 | 0.91 | 0.91 | 0.90 | 0.85 | 0.88 |
| YOLO-V8 | 6000 | COCO, PASCAL VOC 2007 | 12 | 0.89 | 0.89 | 0.88 | 0.83 | 0.85 |
| YOLO-V7 | 6000 | COCO, PASCAL VOC 2007 | 12 | 0.88 | 0.88 | 0.87 | 0.82 | 0.84 |
| YOLO-V6 | 6000 | COCO, PASCAL VOC 2007 | 11 | 0.87 | 0.87 | 0.86 | 0.81 | 0.83 |
| YOLO-V4 | 6000 | COCO, PASCAL VOC 2007 | 10 | 0.86 | 0.86 | 0.85 | 0.80 | 0.82 |
| YOLO-V3 | 6000 | COCO, PASCAL VOC 2007 | 9 | 0.85 | 0.85 | 0.84 | 0.79 | 0.81 |

Table 2 clearly shows that the proposed SB-YOLO-V8 algorithm consistently outperforms older YOLO models across various performance metrics such as FPS, precision, recall, F1-score, IoU, and mAP.

Existing YOLO models such as YOLO-V3 and YOLO-V4 show limitations such as lower FPS and reduced precision and recall. These models often lack the optimizations needed to handle class imbalances and may not employ advanced feature selection algorithms, which can lead to overfitting and reduced effectiveness in complex environments. Their architectural complexity also poses challenges for training scalability and efficiency, leading to poor performance in real-time applications.

4.5 Comparison With Related Works

The performance of the proposed SB-YOLO-V8 model is also compared with related work on object detection systems. Ahmad et al. [30] proposed a modified YOLO-based CNN algorithm for human detection. The authors modified the error function of the YOLO network, replacing the border style in conjunction with the proportion concept. Compared to the original loss function of the YOLO algorithm, the improved model is more adaptable and rational in terms of network error optimization. In their experiments, the proposed model obtained detection rates of 65.6 and 58.7, respectively. In contrast, the SB-YOLO-V8 model is evaluated on two data sets, COCO and PASCAL VOC 2007, to demonstrate its scalability and robustness. The proposed SB-YOLO-V8 scheme also outperformed their model, obtaining an average detection rate of 82.33%.

Hwang et al. [38] suggested an effective object detection network named LNFCOS, which is based on their proposed LNblock. The authors claim that their model achieves an ideal balance between computation cost and accuracy. First, they constructed the feature pyramid and the detection head by replacing the conventional convolution with the LNblock, and observed that features could be extracted effectively at a lower computational cost than with traditional methods. Second, they enhanced the detection accuracy with a feature fusion module, which limits the feature loss that occurs in each channel under the conventional technique and emphasizes the feature information present in the channel. However, their method obtained a detection accuracy of 79.3% and 37.2% on the MS COCO and PASCAL VOC data sets, respectively. Although Hwang et al. [38] employed two data sets to improve robustness, our proposed scheme outperformed their model, obtaining 91% precision on the MS COCO data set and an accuracy of 82.33% on PASCAL VOC.

Although the approaches of Ahmad et al. [30] and Hwang et al. [38] were successful, both studies had constraints regarding scalability, robustness, class imbalances, and data transformation, which may have negatively influenced their outcomes. Once more, our proposed method performed better than the methods they proposed.

Summarizing the comparisons thus far, we conclude that the proposed SB-YOLO-V8 model yields more promising results than conventional systems such as the YOLO-based CNN and LNFCOS. In implementing the proposed approach, the learning rate schedule is tuned strategically so that the learning rate is reduced as training proceeds. The aggregator module is built into the system as a feedback mechanism that assists in fine-tuning the module to perform at its optimum. This reduces the computational cost and the tuning effort needed for better performance.
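
One common way to reduce the learning rate as training proceeds is a step-decay schedule, sketched below. The exact schedule used for SB-YOLO-V8 is not specified in the text, so the policy itself, the function name `step_decay`, and every numeric value here (base rate, step positions, scale) are assumptions chosen only to illustrate the idea.

```python
def step_decay(base_lr, iteration, steps, scale=0.1):
    """Multiply the learning rate by `scale` each time `iteration`
    passes one of the thresholds in `steps`."""
    lr = base_lr
    for step in steps:
        if iteration >= step:
            lr *= scale
    return lr

# Hypothetical schedule for a 6000-iteration run: decay at 80% and 90%.
steps = (4800, 5400)
early = step_decay(0.001, 0, steps)      # full base rate
middle = step_decay(0.001, 5000, steps)  # one decay applied
late = step_decay(0.001, 6000, steps)    # two decays applied
```

Larger steps early in training let the model move quickly toward a good region, while the smaller steps near the end help it settle without overshooting.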

5 Conclusion

In this study, we developed SB-YOLO-V8, an advanced object detection model that leverages SMOTE and BALO to enhance detection accuracy in diverse and challenging environments. We employed these methods to address class imbalance and feature selection, two significant challenges in real-time object detection. Our experiments across the NREC Citrus Farm, COCO, and PASCAL VOC 2007 data sets demonstrate that SB-YOLO-V8 consistently outperforms models such as YOLO-V3, YOLO-V4, YOLO-V6, YOLO-V7, and YOLO-V8 in terms of precision, recall, and computational efficiency.

The integration of SMOTE and BALO has proven to be instrumental in refining the feature space and enhancing the classifier's sensitivity toward minority classes, thereby reducing the model's bias and improving overall detection performance. The results from our study indicate that SB-YOLO-V8 achieves a precision that is notably higher than that of its predecessors, and this enhances both the accuracy and speed of real-time human detection.

The enhancements in data preprocessing and feature extraction methodologies, through the implementation of SMOTE and BALO, have led to a classifier that not only performs with high accuracy but also adapts effectively to different environments.

Although the study gives promising results, the fact that the model was not explored across more databases is a limitation. In future work, we therefore aim to extend the application of SB-YOLO-V8 to additional data sets and to explore deeper integrations of machine learning techniques to further improve classification outcomes.

Author Contributions

Prince Alvin Kwabena Ansah: investigation, writing – original draft, resources, funding acquisition. Justice Kwame Appati: conceptualization, investigation, writing – original draft. Ebenezer Owusu: conceptualization, project administration, supervision, writing – review and editing, funding acquisition. Edward Kwadwo Boahen: conceptualization, visualization, writing – original draft, formal analysis, data curation, resources. Prince Boakye-Sekyerehene: methodology, visualization. Abdullai Dwumfour: validation, software.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The data sets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.