Parallel framework for dynamic domain decomposition of data assimilation problems: a case study on Kalman Filter algorithm

Abstract

We focus on Partial Differential Equation (PDE)-based Data Assimilation problems (DA) solved by means of variational approaches and Kalman filter algorithm. Recently, we presented a Domain Decomposition framework (we call it DD-DA, for short) performing a decomposition of the whole physical domain along space and time directions, and joining the idea of Schwarz's methods and parallel in time approaches. For effective parallelization of DD-DA algorithms, the computational load assigned to subdomains must be equally distributed. Usually computational cost is proportional to the amount of data entities assigned to partitions. Good quality partitioning also requires the volume of communication during calculation to be kept at its minimum. In order to deal with DD-DA problems where the observations are nonuniformly distributed and general sparse, in the present work we employ a parallel load balancing algorithm based on adaptive and dynamic defining of boundaries of DD—which is aimed to balance workload according to data location. We call it DyDD. As the numerical model underlying DA problems arising from the so-called discretize-then-optimize approach is the constrained least square model (CLS), we will use CLS as a reference state estimation problem and we validate DyDD on different scenarios.

1 INTRODUCTION

Data assimilation (DA, for short) encompasses the entire sequence of operations that, starting from observations/measurements of physical quantities and from additional information—such as a mathematical model governing the evolution of these quantities—improve their estimate minimizing inherent uncertainties. DA problems are usually formulated as an optimization problem where the objective function measures the mismatch between the model predictions and the observed system states, weighted by the inverse of the error covariance matrices.1, 2 In operational DA the amount of observations is insufficient to fully describe the system and one cannot strongly rely on a data driven approach: the model is paramount. It is the model that fills the spatial and temporal gaps in the observational network: it propagates information from observed to unobserved areas. Thus, DA methods are designed to achieve the best possible use of a never sufficient (albeit constantly growing) amount of data, and to attain an efficient data model fusion, in a short period of time. This poses a formidable computational challenge, and makes DA an example of big data inverse problems.3-6 There is a lot of DA algorithms. Two main approaches gained acceptance as powerful methods: variational approach (namely, 3DVAR and 4DVAR) and Kalman filter (KF).7-9 Variational approaches are based on the minimization of the objective function estimating the discrepancy between numerical results and observations. These approaches assume that the two sources of information, forecast and observations, have errors that are adequately described by stationary error covariances. In contrast to variational methods, KF (and its variants) is a recursive filter solving the Euler-Lagrange equations. It uses a dynamic error covariance estimate evolving over a given time interval. The process is sequential, meaning that observations are assimilated in chronological order, and KF alternates a forecast step, when the covariance is evolved, with an analysis step in which covariance of the filtering conditional is updated. In both kind of methods the model is integrated forward in time and the result is used to reinitialize the model before the integration continues. For any details interested readers are referred to References 10-12.

- DD step: we begin by partitioning along space and time the domain into subdomains and then extending each subdomain to overlap its neighbors by an amount. Partitioning can be adapted according to the availability of measurements and data.

- Filter Localization and MOR: on each subdomain we formulate a local DA problem analogous to the original one, combining filter localization and MOR approaches.

- Regularization constraints: in order to enforce the matching of local solutions on the overlapping regions, local DA problems are slightly modified by adding a correction term. Such a correction term balances localization errors and computational efforts, acting as a regularization constraint on local solutions. This is a typical approach for solving ill posed inverse problems (see, for instance, 33).

- Parallel in time: as the dynamic model is coupled with DA operator, at each integration step we employ, as initial and boundary values of all reduced models, estimates provided by the DA model itself, as soon as these are available.

- Conditioning: localization excludes remote observations from each analyzed location, thereby improving the conditioning of the error covariance matrices.

To the best of our knowledge, such ab initio space and time decomposition of DA models has never been investigated before. A spatially distributed KF into sensor based reduced-order models, implemented on a sensor network where multiple sensors are mounted on a robot platform for target tracking, is presented in References 20-22.

1.1 Contribution of the present work

The introduction of a dynamic redefining of the DD (we call it DyDD), aimed to efficiently deal with DA problems where observations are nonuniformly distributed and general sparse is the main focus of the present work. Indeed, in such cases a static and/or a priori DD strategy could not ensure a well-balanced workload, while a way to repartition the domain so that subdomains maintain a nearly equal number of observations plays an essential role in the success of any effective DD approach. We present a revision of DD-DA framework such that a dynamic load balancing algorithm allows for a minimal data movement restricted to the neighboring processors. This is achieved by considering a connected graph induced by the domain partitioning whose vertices represent a subdomain associated with a scalar representing the number of observations on that subdomain. Once the domain has been partitioned, a load balancing schedule (scheduling step) should make the load on each subdomain equals to the average load providing the amount of load to be sent to adjacent subdomains (migrations step). The most intensive kernel is the scheduling step which defines a schedule for computing the load imbalance (which we quantify in terms of number of observations) among neighboring subdomains. Such quantity is then used to update the shifting of the boundaries of adjacent subdomains (Migration step) which are finally re mapped to achieve a balanced decomposition. We are assuming that load balancing is restricted to the neighboring domains so that we reduce the overhead processing time. Finally, following Reference 23 we use a diffusion type scheduling algorithm minimizing the Euclidean norm of data movement. The resulting constrained optimization problem leads to normal equations whose matrix is associated to the decomposition graph. The disadvantage is that the overhead time, due to the final balance among subdomains, strongly depends on the degree of the vertices of processors graph, gien by the number of neighboring subdomains of each subdomain. Such overhead represents the surface-to-volume ratio whose impact on the overall performance of the parallel algorithm decreases as the problem size increases.

1.2 Related works

There has been widespread interests in load balancing since the introduction of large-scale multiprocessors.24 Applications requiring dynamic load balancing mainly include parallel solution of a PDE by finite elements on an unstructured grids25 or parallelized particle simulations.26 Load balancing is one of the central problems which have to be solved in designing parallel algorithms. Moreover, problems whose workload changes during the computation or it depends on data layout which may be unknown a priori, will necessitate the redistribution of the data in order to retain efficiency. Such a strategy is known as dynamic load balancing. Algorithms for dynamic load balancing, as in References 27-30, are based on transferring an amount of work among processors to neighbors; the process is iterated until the load difference between any two processors is smaller than a specified value, consequently it will not provide a balanced solution immediately. A multilevel diffusion method for dynamic load balancing,31 is based on bisection of processor graph. The disadvantage is that, to ensure the connectivity of subgraphs, movement of data between nonneighboring processors can occur. The mentioned algorithms do not take into account one important factor, namely that the data movement resulting from the load balancing schedule should be kept to minimum.

1.2.1 Organization of the work

The present work is organized as follows. As we apply the proposed framework to CLS model which can be seen as prototype of variational DA models, in order to improve the readability of the article, in Section 2 we give a brief overview of DA methods, that is, both KF and Variational DA, the variational formulation of KF and finally we give a brief description of CLS model. In Section 3, we describe main features of DD-DA framework and its application to CLS model. DyDD is presented in Section 4, through a graphical description and the numerical algorithm. Validation and performance results are presented in Section 5 and, finally, in Section 6 we give conclusions and future works.

2 THE BACKGROUND

In order to improve the readability of the article, in this section we give a brief overview of DA methods, that is, both KF and Variational DA, then we review CLS model as prototype of DA models. To this end, we also review the variational formulation of KF, that is, the so-called VAR–KF formulation, obtained minimizing the sum of the weighted Euclidean norm of the model error and the weighted Euclidean norm of the observation error.

2.1 Kalman filter

3 VAR DA MODEL SET UP

Definition 1.(The 4D–DA inverse problem).33 Given the vectors and the block matrix a 4D–DA problem concerns the computation of

We also introduce the regularized 4D–DA inverse problem, that is, the 4D–VAR DA problem.

Definition 2.(The 4D–VAR DA problem). The 4D–VAR DA problem concerns the computation of:

- (a)

by truncating Taylor's series expansion of J at the second order, giving a quadratic approximation of J, let us say JQN. Newton'methods (including LBFGS and Levenberg–Marquardt) use JQD. The minimum is computed solving the linear system involving the Hessian matrix ∇2J, and the negative gradient − ∇ J.

- (b)

by truncating Taylor's series expansion of J at the first order which gives a linear approximation of J, let us say let us say JTL. Gauss–Newton's methods (including Truncated or Approximated Gauss–Newton uses JTL). The minimum is computed solving the normal equations arising from the local Linear Least Squares problem.

Both approaches will employ the tangent linear model and the adjoint operator of the observation mapping and of the model of interest.36

Remark: Computational kernel of variational approaches (namely, 3D-VAR and 4D-VAR) is a linear system, generally solved by means of iterative methods; the iteration matrix is related to matrix Q, which usually has a Gaussian correlation structure.6 Matrix Q can be written in the form Q=VVT, where V is the square root of Q, namely, it is a Gaussian matrix. As a consequence, products of V and a vector z are Gaussian convolutions which can be efficiently computed by applying Gaussian recursive filters as in Reference 37.

In our case study we carry out on CLS model, we apply KF and DD-KF to CLS model, then in this case it results that matrix Q is the null matrix while matrix R is diagonal.14

3.1 CLS problem

We refer to as solution in least squares sense of system in (14).

Remark: Besides covariance matrices of errors, main components of KF algorithm are dynamic model and observation mapping. These are two main components of any variational DA operator and state estimation problem, too. In this regard, in the following, as proof of concept of DD-DA framework, we start considering CLS model as a prototype of a variational DA model, at a given time. CLS is obtained combining two overdetermined linear systems, representing the state and the observation mapping, respectively. Then, we introduce VAR-KF method as reference data sampling method solving CLS model problem. VAR-KF will be decomposed by using the proposed framework. That said, any interest reader who wants to apply DD-DA framework in a real-world application, that is, with a (PDE-based) model state and an observation mapping, once the dynamic (PDE-based) model has been discretized, he should rewrite the state estimation problem under consideration as a CLS model problem (cfr Section 3.1) and then to apply CLS algorithm. In other words, she/he should follow the discretize-then-optimize approach, common to most DA problems and state estimation problems, before employing DD-DA and DyDD framework.38

4 DD-FRAMEWORK

As DyDD is the refinement of initial DD-DA, in the following we first give mathematical settings useful to define the domain decomposition framework. Then, the following section we focus on DyDD.

4.1 DD set up

Definition 3. (Matrix Reduction)Let be a matrix with m, n ≥ 1 and Bj the jth column of B and Ij = {1, … , j} and Ii, j = {i, … , j} for i = 1, … , n − 1; j = 2, … , n, and i < j for every (i, j). Reduction of B to Ij is:

Definition 4. (Vector Reduction)Let be a vector with t ≥ 1, n > 0, s = n − t and I1, r = {1, … , r}, r > n and n > t. The extension of w to Ir is:

We introduce reduction of J, as given in (17).

Definition 5. (Model Reduction)Let us consider , , the matrix and the vector defined in (15), I1 = {1, … , n1}, I2 = {1, … , n2} with n1, n2 > 0 and the vectors . Let

For simplicity of notations we let .

4.2 DD-CLS problems: DD of CLS model

We apply DD approach for solving system S in (14). Here, for simplicity of notations, we consider two subdomains.

Definition 6. (DD-CLS model[12])Let S be the overdetermined linear system in (14) and , the matrix and the vector defined in (15) and , , be the weight matrices with m0 > n and m1 > 0. Let us consider the index set of columns of A, I = {1, … , n}. DD-CLS model consists of:

- DD step. It consists of DD of I:

()where s ≥ 0 is the number of indexes in common, |I1| = n1 > 0, |I2| = n2 > 0, and the overlap sets()If s = 0, then I is decomposed without using the overlap, that is, I1 ∩ I2 = ∅ and I1, 2 ≠ ∅, instead if s > 0, that is, I is decomposed using overlap, that is, I1 ∩ I2 ≠ ∅ and I1, 2 = ∅; restrictions of A to I1 and I2 defined in (21)()

- DD-CLS step: given , according to the alternating Schwarz method in Reference 11, DD-CLS approach consists in solving for n = 0, 1, 2, … the following overdetermined linear systems:

()by employing a regularized VAR-KF model. It means that DD-CLS consists of a sequence of two subproblems:()()where Ii is defined in (21) and is defined in (20), is the overlapping operator and is the regularization parameter.

Remark 1.If I is decomposed without using overlap (i.e., s = 0), then and can be written in terms of normal equations as follows

Remark 2.Regarding the operator , we consider and , and we pose

Remark 4.For DD-CLS model we considered, DD of , that is, the index set of columns of m A, similarly we can apply DD approach to 2D domain , where J = {1, … , (m0 + m1)} is the rows index set of A. Subdomains obtained are I1 × J1 = {1, … , n1} × {1, … , m1} and I2 × J2 = {n1 − sI + 1, … , n} × {m1 − sJ + 1, … , (m0 + m1)}, where sI, sJ ≥ 0 are the number of indexes in common between I1 and I2, J1 and J2, respectively. Restrictions of A to I1 × J1 and I2 × J2 are and .

Remark 5.The cardinality of J, that is, the index set of rows of matrix A, represents the number of observations available at time of the analysis, so that DD of I × J allows us to define DD-CLS model after dynamic load balancing of observations by appropriately restricting matrix A.

5 DyDD: DYNAMIC DD-DA FRAMEWORK

For effective parallelization of DD-DA, domain partitioning into subdomains must satisfy certain conditions. First the computational load assigned to subdomains must be equally distributed. Usually, computational cost is proportional to the amount of data entities assigned to partitions. Good quality partitioning also requires the volume of communication during calculation to be kept at its minimum.39 We employ a dynamic load balancing scheme based on adaptive and dynamic redefining of initial DD-DA aimed to balance workload between processors. Redefining of initial partitioning is performed by shifting the boundaries of neighboring domains (this step is referred to as Migration step).

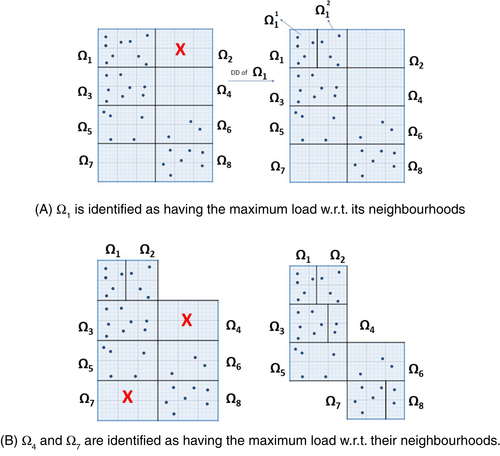

- DD step: starting from the initial partition of provided by DD-DA framework, DyDD performs a check of the initial partitioning. If a subdomain is empty, it decomposes subdomain adjacent to that domain which has maximum load (decomposition is performed in two subdomains). See Figure 1.

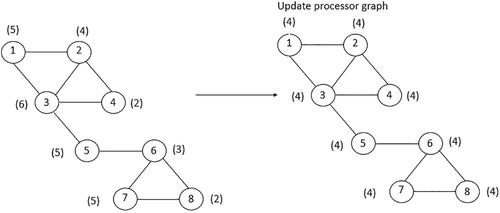

- Scheduling step: DyDD computes the amount of observations needed for achieving the average load in each subdomain; this is performed by introducing a diffusion type algorithm (by using the connected graph G associated to the DD) derived by minimizing the Euclidean norm of the cost transfer. Solution of the Laplacian system associated to the graph G gives the amount of data to migrate. See Figure 2.

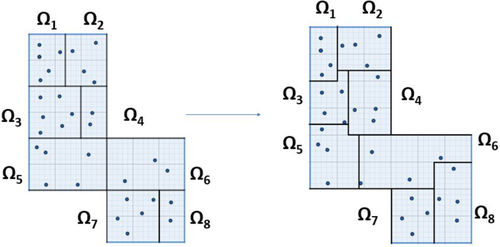

- Migration step: DyDD shifts the boundaries of adjacent subdomains to achieve a balanced workload. See Figure 3.

- Update step: DyDD redefines subdomains such that each one contains the number of observations computed during the scheduling step and it redistributes subdomains among processors grids. See Figure 4.

6 VALIDATION RESULTS

Simulations were aimed to validate the proposed approach by measuring performance of DyDD algorithm as shown in Table 13. Performance evaluation was carried out using Parallel Computing Toolbox of MATLABR2013a on the high-performance hybrid computing (HPC) architecture of the Sistema Cooperativo Per Elaborazioni scientifiche multidiscipliari data center, located at University of Naples Federico II. More precisely, the HPC architecture is made of 8 nodes, consisting of distributed memory DELL M600 blades connected by a 10 Gigabit Ethernet technology. Each blade consists of 2 Intel [email protected] GHz quadcore processors sharing 16 GB RAM memory for a total of 8 cores/blade and of 64 cores, in total. In this case for testing the algorithm we consider up to nsub = 64 subdomains equally distributed among the cores. This is an intranode configuration implementing a coarse-grained parallelization strategy on multiprocessor systems with many-core CPUs.

DyDD set up. We will refer to the following quantities: : spatial domain; n = 2048: mesh size; m: number of observations; p: number of subdomains and processing units; i: identification number of processing unit, which is the same of the associated subdomain; for i = 1, … , p, deg(i): degree of i, that is, number of subdomains adjacent to ; : identification of subdomains adjacent to ; : number of observations in before the dynamic load balancing; : number of observations in after DD step of DyDD procedure; : number of observations in after the dynamic load balancing; : time (in seconds) needed to perform DyDD on p processing units; Tr(m): time (in seconds) needed to perform repartitioning of ; overhead time to the dynamic load balancing.

Regarding DD-DA, we let be local problem size and we consider as performance metrics, the following quantities: denoting sequential time (in seconds) to perform KF solving CLS problem; denoting time (in seconds) needed to perform in parallel DD-KF solving CLS problem after DyDD; being the overhead time (measured in seconds) due to synchronization, memory accesses, and communication time among p cores; denoting KF estimate obtained by applying the KF procedure on CLS problem after DyDD; denoting estimate obtained by applying DD-KF on CLS problem after DyDD; denoting the error introduced by the DD-DA framework; , which refers to the speed-up of DD-DA parallel algorithm; which denotes the efficiency of DD-DA parallel algorithm.

In the following tables we report results obtained by employing three scenarios, which are defined such that each one is gradually more articulated than the previous one. It means that the number of subdomains which are adjacent to each subdomain increases, or the number of observations and the number of subdomains increase. In this way the workload re distribution increases.

Example 1.First configuration: p = 2 subdomains and m = 1500 observations. In Case1, both and have data, that is, observations, but they are unbalanced. In Case2, has observations and is empty. In Tables 1 and 2, respectively, we report values of the parameters after applying DyDD algorithm. This is the simplest configuration we consider just to validate DyDD framework. In both cases, lfi(1) and lfi(2), that is, number of observations of and , are equal to the average load and = 1. As the workload re distribution of Case 1 and Case 2 is the same, DD-DA performance results of Case 1 and Case 2 are the same, and they are reported in Table 9, for p = 2 only. In Table 3, we report performance results of DyDD algorithm.

| p | i | deg(i) | lin | lfin | iad |

|---|---|---|---|---|---|

| 2 | 1 | 1 | 1000 | 750 | 2 |

| 2 | 1 | 500 | 750 | 1 |

- Note: Both subdomains have data but they are unbalanced. We report values of p, which is the number of subdomains, i the identification number of processing unit, deg(i) degree of i, that is, number of subdomains adjacent to , lin(i) which is number of observations in before dynamic load balancing, lfi(i) number of observations in after dynamic load balancing, iad identification of subdomains adjacent to .

| p | i | deg(i) | lin | lr | lfin | iad |

|---|---|---|---|---|---|---|

| 2 | 1 | 1 | 1500 | 1000 | 750 | 2 |

| 2 | 1 | 0 | 500 | 750 | 1 |

- Note: is empty. We report values of p, that is, number of subdomains, i identification number of processing unit, deg(i) degree of i, that is, number of subdomains adjacent to , lin(i) which is number of observations in before dynamic load balancing, lr(i) number of observations in after DD step of DyDD procedure, lfi(i) number of observations in after dynamic load balancing, iad which is identification of subdomains which are adjacent to .

| Case | Tr(m) | OhDyDD(m) | ||

|---|---|---|---|---|

| 1 | 4.11 × 10−2 | 0 | 0 | 1 |

| 2 | 3.49 × 10−2 | 4.00 × 10−6 | 1.15 × 10−4 | 1 |

Example 2.Second configuration. In this experiment we consider p = 4 subdomains and m = 1500 observations, and four cases which are such that the number of subdomains not having observations, increases from 0 up to 3. In particular, in Case 1, all subdomains have observations. See Table 4. In Case 2, only one subdomain is empty, namely, . See Table 5. In Case 3, two subdomains are empty, namely, and are empty. See Table 6. In Case 4, three subdomains are empty, namely, , for j = 1, 2, 3, is empty. See Table 7. In all cases, reaches the ideal value 1 and , i = 1, 2, 3, 4. Then, DD-DA performance results of all cases are the same and they are reported in Table 9 for p = 4. In Table 8, we report performance results of the four cases.

| p | i | deg(i) | lin | lfin | iad |

|---|---|---|---|---|---|

| 4 | 1 | 2 | 150 | 375 | [ 2 4 ] |

| 2 | 2 | 300 | 375 | [ 3 1 ] | |

| 3 | 2 | 450 | 375 | [ 4 2 ] | |

| 4 | 2 | 600 | 375 | [ 3 1 ] |

- Note: All subdomains have data. We report values of p, which is the number of subdomains, i identification number of processing unit, deg(i) degree of i, that is, number of subdomains adjacent to , lin(i) the number of observations in before dynamic load balancing, lfi(i) the number of observations in after dynamic load balancing, iad identification of subdomains which are adjacent to .

| p | i | deg(i) | lin | lr | lfin | iad |

|---|---|---|---|---|---|---|

| 4 | 1 | 2 | 450 | 450 | 375 | [ 2 4 ] |

| 2 | 2 | 0 | 225 | 375 | [ 3 1 ] | |

| 3 | 2 | 450 | 225 | 375 | [ 4 2 ] | |

| 4 | 2 | 600 | 600 | 375 | [ 3 1 ] |

- Note: is empty. We report values of p, which is number of subdomains, i, that is, identification number of processing unit, deg(i), that is, degree of i, that is, number of subdomains which are adjacent to , lin(i), that is, number of observations in before dynamic load balancing, lfi(i) number of observations in after dynamic load balancing, iad identification of subdomains adjacent to .

| p | i | deg(i) | lin | lr | lfin | iad |

|---|---|---|---|---|---|---|

| 4 | 1 | 2 | 0 | 300 | 375 | [ 2 4 ] |

| 2 | 2 | 0 | 450 | 375 | [ 3 1 ] | |

| 3 | 2 | 900 | 450 | 375 | [ 4 2 ] | |

| 4 | 2 | 300 | 600 | 375 | [ 3 1 ] |

- Note: and are empty. We report values of p, which is the number of subdomains, i identification number of processing unit, deg(i), that is, degree of i, that is, number of subdomains adjacent to , lin(i) number of observations in before dynamic load balancing, lfi(i) number of observations in after dynamic load balancing, iad identification of subdomains which are adjacent to .

| p | i | deg(i) | lin | lr | lfin | iad |

|---|---|---|---|---|---|---|

| 4 | 1 | 2 | 0 | 500 | 375 | [ 2 4 ] |

| 2 | 2 | 0 | 250 | 375 | [ 3 1 ] | |

| 3 | 2 | 0 | 250 | 375 | [ 4 2 ] | |

| 4 | 2 | 1500 | 500 | 375 | [ 3 1 ] |

- Note: , , and are empty. We report values of p, that is, number of subdomains, i identification number of processing unit, deg(i) degree of i, that is, number of subdomains which are adjacent to , lin(i) the number of observations in before dynamic load balancing, lfi(i) number of observations in after dynamic load balancing and iad identification of subdomains which are adjacent to .

| Case | Tr(m) | OhDyDD(m) | ||

|---|---|---|---|---|

| 1 | 5.40 × 10−2 | 0 | 0 | 1 |

| 2 | 5.84 × 10−2 | 2.35 × 10−4 | 0.4 · 10−2 | 1 |

| 3 | 4.98 × 10−2 | 3.92 × 10−4 | 0.8 · 10−2 | 1 |

| 4 | 4.63 × 10−2 | 5.78 × 10−4 | 0.1 · 10−1 | 1 |

| p = 1 | n = 2048 | m = 1500 | T1(m, n) = 5.67 × 100 | |

|---|---|---|---|---|

| p | nloc | |||

| 2 | 1024 | 4.95 × 100 | 1.15 × 100 | 5.73 × 10−1 |

| 4 | 512 | 2.48 × 100 | 2.29 × 100 | 5.72 × 10−1 |

- Note: We report values of p, which is the number of subdomains, n mesh size, nloc, that is, local problem size, m number of observations, sequential time (in seconds) to perform KF solving CLS problem, time (in seconds) needed to perform in parallel DD-DA solving CLS problem with DyDD, and the speed-up and efficiency of DD-DA parallel algorithm, respectively. We applied DyDD to all cases of Example 1 and Example 2 and obtained the perfect load balance, as reported in Tables 3 and 8, respectively. As the workload distribution is the same, DD-DA performance results are the same in all cases of Example 1, then we show results for p = 2, only. In the same way, for all cases of Example 2, we show results for p = 4, only.

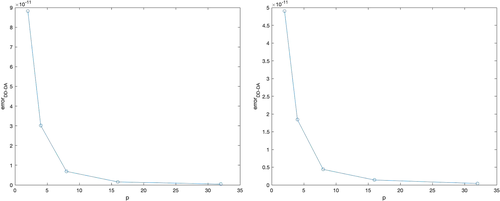

Example 3.We consider m = 1032 observations and a number of subdomains p equals to p = 2, 4, 8, 16, 32. We assume that all subdomains has observations, that is, for i = 1, … , p, lin(i) ≠ 0; has p − 1 adjacent subdomains, that is, nad := deg(1) = p − 1; has 1 adjacent subdomain, that is, for i = 2, … , p, deg(i) = 1; finally i = 1, … , p, we let the maximum and the minimum number of observations in be such that lmax = maxi(lfin(i)) and lmin = mini(lfin(i)). Table 10 shows performance results and Figure 5 reports the error of DD-DA with respect to KF.

| p | nad | lmax | lmin | ||

|---|---|---|---|---|---|

| 2 | 1 | 6.20 × 10−3 | 516 | 515 | 9.98 × 10−1 |

| 4 | 3 | 2.60 × 10−2 | 258 | 257 | 9.96 × 10−1 |

| 8 | 7 | 9.29 × 10−2 | 129 | 128 | 9.92 × 10−1 |

| 16 | 15 | 1.11 × 10−1 | 71 | 64 | 8.88 × 10−1 |

| 32 | 31 | 1.36 × 10−1 | 39 | 32 | 8.21 × 10−1 |

- Note: depends on nad(≡ deg(1)), that is, as nad(≡ deg(1)) increases (consequently p increases), then decreases. For i = 1, … , p, subdomain has observations, that is, lin(i) ≠ 0, consequently we do not need to perform repartitioning of , then Tr(m) ≡ 0.

Example 4.We consider m = 2000 observations and p = 2, 4, 8, 16, 32 we assume that has observations, that is, for i = 1, … , p, lin(i) ≠ 0; and have 1 adjacent subdomain, that is, deg(1) = deg(p) = 1; and have 2 adjacent subdomains, that is, for i = 2, … , p − 1, deg(i) = 2. In Table 12, we report performance results and in Figure 5 the error of DD-DA with respect to KF is shown.

Finally, regarding the accuracy of the DD-DA framework with respect to computed solution, in Table 11 (Examples 1-2) and in Figure 5 (Examples 3-4), we get values of errorDD-DA. We observe that the order of magnitude is about 10−11 consequently, we may say that the accuracy of local solutions of DD-DA and hence of local KF estimates, are not impaired by DD approach.

| p | errorDD-DA |

|---|---|

| 2 | 8.16 × 10−11 |

| 4 | 8.82 × 10−11 |

From these experiments, we observe that as the number of adjacent subdomains increases, data communications required by the workload repartitioning among subdomains increases too. Accordingly, the overhead due to the load balancing increases (for instance, see Table 8). As expected, the impact of such overhead on the performance of the whole algorithm strongly depends on the problem size and the number of available computing elements. Indeed, in Case 1 of Example 1 and of Example 2, when p is small in relation to nloc (see Table 9) this aspect is quite evident. In Example 4, instead, as p increases up to 32, and nloc decreases the overhead does not affect performance results (see Table 12). In conclusion, we recognize a sort of trade-off between the overhead due to the workload repartitioning and the subsequent parallel computation (Table 13).

| p | n = 2048 | m = 2000 | T1(m, n) = 4.88 × 100 | ||

|---|---|---|---|---|---|

| p | nloc | ||||

| 2 | 1024 | 4.10 × 10−3 | 4.71 × 100 | 1.04 × 100 | 5.18 × 10−1 |

| 4 | 512 | 4.29 × 10−2 | 2.61 × 100 | 1.87 × 100 | 4.67 × 10−1 |

| 8 | 256 | 1.07 × 10−1 | 8.43 × 10−1 | 5.79 × 100 | 6.72 × 10−1 |

| 16 | 128 | 1.42 × 10−1 | 3.46 × 10−1 | 1.41 × 101 | 8.81 × 10−1 |

| 32 | 64 | 3.49 × 10−1 | 1.66 × 10−1 | 2.94 × 101 | 9.19 × 10−1 |

| Procedure DyDD-Dynamic Load Balancing(in: p, , out: l1,…,lp) |

| %Procedure DyDD allows to balance observations between adjacent subdomains |

| % Domain is decomposed in p subdomains and some of them may be empty. |

| % DBL procedure is composed by: DD step, Scheduling step and Migration Step. |

| % DD step partitions in subdomains and if some subdomains have not any observations, partitions adjacent subdomains with maximum load |

| %in two subdomains and redefines the subdomains. |

| % Scheduling step computes the amount of observations needed for shifting boundaries of neighboring subdomains |

| %Migration step decides which subdomains should be reconfigured to achieve a balanced load. |

| % Finally, the Update step redefines the DD. |

| DD step |

| % DD step partitions in |

| Define ni, the number of adjacent subdomains of |

| Define li: the amount of observations in |

| repeat |

| % identification of , the adjacent subdomain of with the maximum load |

| Compute : the maximum amount of observations |

| Decompose in two subdomains: |

| until (li ≠ 0) |

| end of DD Step |

| Begin Scheduling step |

| Define G: the graph associated with initial partition: vertex i corresponds to |

| Distribute the amount of observations li on |

| Define deg(i) = ni, the degree of node i of G: |

| repeat |

| Compute the average load: |

| Compute load imbalance: |

| Compute L, Laplacian matrix of G |

| Call solve(in:L, b, out:) % algorithm solving the linear system |

| Compute , the load increment between the adjacent subdomains and . is the nearest integer of |

| Define , number of those subdomains whose configuration has to be updated |

| Update graph G |

| Update amount of observations of : |

| until %, that is, maximum load-difference is deg(i)/2 |

| end Scheduling step |

| Begin Migration Step |

| Shift boundaries of two adjacent subdomains in order to achieve a balanced load. |

| end Migration Step |

| Update DD of |

| end Procedure DyDD |

7 CONCLUSIONS

For effective DD-DA parallelization, partitioning into subdomains must satisfy certain conditions. First the computational load assigned to subdomains must be equally distributed. Usually the computational cost is proportional to the amount of data entities assigned to partitions. Good quality partitioning also requires the volume of communication during calculation to be kept at its minimum. In the present work we employed a dynamic load balancing scheme based on an adaptive and dynamic redefining of initial DD-DA aimed to balance load between processors according to data location. We call it DyDD. A mechanism for dynamically balancing the loads encountered in particular DD configurations has been included in the parallel framework we implement for solving large-scale DA models. In particular, we focused on the introduction of a dynamic redefining of initial DD-DA in order to deal with problems where the observations are nonuniformly distributed and general sparse. This is a quite common issue in DA. We presented first results obtained by applying DyDD in space of CLS problems using different scenarios of the initial DD-DA. Performance results confirm the effectiveness of the algorithm. We observed that the impact of data communications required by the workload repartitioning among subdomains affects the performance of the whole algorithm depending on the problem size and the number of available computing elements. As expected, we recognized a sort of trade-off between the overhead due to the workload repartitioning and the subsequent parallel computation. As in the assimilation window the number and the distribution of observations change, the difficulty to overcome is to implement a load balancing algorithm, which should have to dynamically allow each subdomain to move independently with time, that is, to balance observations with neighboring subdomains, at each instant time. We are working on extending DyDD framework to such configurations.

ACKNOWLEDGEMENTS

The authors thank the reviewers for their valuable comments.

Biographies

Luisa D'Amore Luisa D'Amore has the degree in Mathematics, and the Ph.D. in Applied Mathematics and Computer Science. She is professor of Numerical Analysis at University of Naples Federico II. She is member of the Academic Board of the Ph.D. in Mathematics and Applications, at University of Naples Federico II where she teaches courses of Numerical Analysis, Scientific Computing and Parallel Computing. Research activity is placed in the context of Scientific Computing. Her main interest is devoted to designing effective numerical algorithms solving ill-posed inverse problems arising in the applications, such as image analysis, medical imaging, astronomy, digital restoration of films and data assimilation. The need of computing the numerical solution within a suitable time, requires the use of advanced computing architectures. This involves designing and development of parallel algorithms and software capable of exploiting the high performance of emerging computing infrastructures. Research produces a total of about 200 publications in refereed journals and conference proceedings.

Rosalba Cacciapuoti Rosalba Cacciapuoti received the degree in Mathematics at University of Naples Federico II. She is a student of the PhD course in Mathematics and Applications at the University of Naples, Federico II. Her research activity is focused on designing of parallel algorithms for solving data assimilation problems.