How can we apply decision-making theories to wild animal behavior? Predictions arising from dual process theory and Bayesian decision theory

Abstract

Our understanding of decision-making processes and cognitive biases is ever increasing, thanks to an accumulation of testable models and a large body of research over the last several decades. The vast majority of this work has been done in humans and laboratory animals because these study subjects and situations allow for tightly controlled experiments. However, this raises questions about how such knowledge can be applied to wild animals in their complex environments. Here, we review two prominent decision-making theories, dual process theory and Bayesian decision theory, to assess the similarities in these approaches and consider how they may apply to wild animals living in heterogeneous environments within complicated social groupings. In particular, we wanted to assess when wild animals are likely to respond to a situation with a quick heuristic decision and when they are likely to spend more time and energy on the decision-making process. Based on the literature and evidence from our multi-destination routing experiments on primates, we find that individuals are likely to make quick, heuristic decisions when they encounter routine situations, signals/cues that accurately predict a certain outcome, or easy problems that experience or evolutionary history has prepared them for. Conversely, effortful decision-making is likely in novel or surprising situations, when signals and cues have unpredictable or uncertain relationships to an outcome, and when problems are computationally complex. However, if problems are overly complex, individuals are likely to satisfice via heuristics to avoid costly mental effort. We present hypotheses for how animals with different socio-ecologies may have to distribute their cognitive effort. Finally, we examine the conservation implications and potential cognitive overload for animals experiencing increasingly novel situations caused by current human-induced rapid environmental change.

Research Highlights

- Comparison of dual process theory and Bayesian decision theory shows several similarities.
- Animals respond with quick, heuristic decisions when situations are routine and predictable.
- Greater mental effort is required for novel or unpredictable situations.

Abbreviations

- AI: artificial intelligence
- BDT: Bayesian decision theory
- DPT: dual process theory
- HIREC: human-induced rapid environmental change
- O: outcome
- R: response
- S: stimuli

1 INTRODUCTION

Why did the monkey cross the road? To answer this question, a behavioral ecologist would examine the context of this movement decision, the monkey's behaviors before and after, and the patterns of past decisions made in similar contexts. For example, if there was a large fruit tree across the road, we may infer that our focal female was hungry and crossed the road to feed. If she crossed with other group members, we might infer that she was averse to the risk of encountering a predator in the open area of the road, or was averse to being left behind. If she departed right after the calls of a neighboring group could be heard up ahead, and she often participated in intergroup fights with that group, we may infer that she crossed to try to displace the neighboring group from a food patch that she valued. Conversely, we may be overthinking our focal female's decision-making process. Perhaps in this case, she is simply using a rule of thumb such as, “follow the alpha no matter the situation.” How can we ever truly understand why one of our study subjects made any decision? For behavioral ecologists interested in elucidating the proximate and ultimate explanations for animal behavior, understanding decision-making processes is vital.

Decision-making processes are shaped by evolution, and natural selection acts on animals based on the outcomes of their decisions (McNamara & Houston, 1980). As primatologists who study wild monkeys and sometimes conduct field experiments, we have often wondered about the decision-making processes that our study subjects undergo. Sometimes participants in our foraging experiments show immediate responses that vary little within and among individuals, while other times they show widely variable responses that improve with experience (e.g., Arseneau-Robar et al., 2022; Teichroeb & Aguado, 2016). How can we best understand their behavior? The literature on decision-making in psychology, neuroscience, and related fields presents a vast number of models, subsumed into bodies of theory. It is difficult to assess which of these presents the best framework to use when examining the decision-making process from a behavioral ecology standpoint. The social and ecological contexts introduce a plethora of factors for animals to focus their limited attention on (Dukas, 2002, 2004) and make decisions about, both at the level of the group and the individual (Pelé & Sueur, 2013). However, work on these four categories (social decisions, ecological decisions, group-level decisions, and individual-level decisions) is usually focused within different bodies of theory, framed at both ultimate and proximate levels of explanation (e.g., social: game theory, Axelrod & Hamilton, 1981; Giraldeau & Caraco, 2000; ecological: optimal foraging theory, Krebs & Stephens, 2019; group: self-organization, Couzin & Krause, 2003, quorum threshold model, Sumpter & Pratt, 2009; individual: sequential sampling models, Ratcliff et al., 2016). In addition, researchers typically focus on the outcomes of decisions because these are measurable, whereas it is more difficult to examine the decision-making process in the “black box” of an animal's mind (Trimmer et al., 2008).

Our goal in this paper is to consider decision-making processes in wild animals by reviewing two prominent decision-making theories, dual process theory (DPT) and Bayesian decision theory (BDT). Although these theories were developed to explain decision-making in humans, the continuity of evolutionary processes in neural development (Cisek, 2022) suggests that similar psychological constructs may also occur in other animals. We examine the similarities between these two theories and how they may apply to animal decision-making in the wild, taking a cognitive ecology approach (Dukas, 1998a; Real, 1993). In particular, we seek to answer the question, when are wild animals likely to respond to a situation with a quick heuristic decision and when are they likely to spend more time and energy on the decision-making process? To answer this question, we draw from the literature and utilize examples from our foraging experiments in the field and in captivity. We consider how animals with different socio-ecologies may have to distribute their mental effort in their daily lives. Finally, we reflect on how current human-induced rapid environmental change may impact animal decision making, cognitive load, and overload.

2 DUAL PROCESS THEORY

The idea that the mind is divided into two different systems dates back at least as far as Plato, and has been written about by many well-known theorists, including Descartes, Leibniz, and Freud (Frankish & Evans, 2009). Today, this idea is formalized in DPT in social psychology (Sloman, 1996), which is not without controversy (see criticisms below). A slew of dual process models began to flood the literature in the 1980s (Table S1), with the most neutral terminology referring to Types 1 and 2 processes of cognition (although Systems 1 and 2 are often used; Evans & Stanovich, 2013; Kahneman & Frederick, 2002; Stanovich, 1999). Type 1 processes are those that occur automatically, with little to no effort, while Type 2 processes are controlled, analytic, and high effort. Evans (2008) attempted to unify the various dual process models by showing how their descriptors cluster (Table 1). Cognitive processes are typically considered to be Type 1, or “automatic,” if they are either unintentional, efficient, uncontrollable, or unconscious (Gawronski & Creighton, 2013). Thus, Type 1 processes are associated with prior knowledge or belief (i.e., implicit or procedural memory) and with heuristic processes that arise from the quick perception of situations. Type 2 processes are suggested to be slow and deliberate, and to require working memory (Evans & Stanovich, 2013; Evans, 2003; Squire, 1986; but see: Thompson & Newman, 2020; Thompson, 2013).

| Type 1 | Type 2 |
|---|---|
| Labels | Labels |
| Automatic | Conscious |
| Experiential | Rational |
| Heuristic | Analytic |
| Reflexive | Controlled |
| Stimulus-bound | Reflective |
| Holistic | Higher cognition |
| Descriptors | Descriptors |
| Un(pre)conscious | Logical |
| Rapid | Slow |
| Low effort | High effort |
| Intuitive | Deliberate |
| Autonomous | Explicit |
| Implicit | Inhibitory |
| Associative | Sequential |
| Pragmatic | Low capacity |
| Contextualized | Evolutionarily recent |
| Individually universal | Linked to language |
| Evolutionarily old | Uniquely human |

It is a recurring hypothesis throughout DPT that Type 1 cognition evolved earlier and that we share it with other animals, whereas Type 2 is more recently evolved, associated with language and a theory of mind, and occurs only in humans (Epstein & Pacini, 1999; Evans & Over, 1996; Evans, 2008; Reber, 1993; Stanovich, 1999). In actuality, the evolutionary order of the development of dual-process capabilities is not understood, and the proposal that Type 2 functioning is only possible in humans has not stood up to scrutiny. Many animal species, including insects, birds, and mammals, show evidence of a distinction between stimulus-bound and higher-order cognition (Kelly & Barron, 2022; Toates, 2006; Tomasello & Call, 1997). Animal evidence for a range of cognitive skills that would fall into Type 2 is diverse, including learning, cultural traditions, forethought, use of memory, as well as rule-based and abstract reasoning (e.g., insects: Chittka, 2017; Cope et al., 2018; Perry & Barron, 2013; birds: Clayton & Dickinson, 1998; Clayton et al., 2001; Hunt, 1996; mammals: Breuer et al., 2005; Janmaat et al., 2013; Kinani & Zimmerman, 2015; McGrew, 2010; Meulman & van Schaik, 2013; Osvath & Osvath, 2008; Ottoni & Izar, 2008; Yurk et al., 2002), and great apes and jays show advanced social cognitive abilities such as sensitivity to the desires and beliefs of others (Krupenye & Call, 2019). Thus, Evans (2009) suggests that it is better to think of Type 2 as uniquely developed in humans rather than as occurring only in humans.

For humans, Type 1 cognition is suggested to be highly accessible and always at play, with the intuitive judgments that arise from it occupying a position between perceiving stimuli and more deliberate, effortful reasoning (Kahneman, 2003; Sloman, 2002). The immediate impressions made by this system are most accessible based on the physical salience of available cues, such as their size, distance, loudness, similarity, causal propensity, surprisingness, and affective valence (Kahneman & Frederick, 2002; Kahneman, 2003). Indeed, overriding these Type 1 assumptions is the goal of research trying to undo stereotypical thinking and unconscious bias (e.g., racism, sexism) in human societies (e.g., Corcoran et al., 2009; Gregg et al., 2006). Type 2 is suggested to monitor (either in parallel or sequentially) the impressions of Type 1 and at times override and inhibit the intuitions arising from it, allowing for deeper thinking and correction of immediate judgments (Sloman, 2002; Trimmer et al., 2008). Thus, Type 1 is often considered pre-attentive, rapidly assessing and forming impressions and associations that allow selection of phenomena that require Type 2 processing (Stanovich, 2011). For example, in social interactions, humans use Type 1 processing to rapidly assess emotional information from facial expressions and body language, determining potential threats, and deciding quickly where to redirect their gaze and attention for further action that would require Type 2 processing (Carretié et al., 2007; Frith, 2012; Mogg et al., 1995; Winston et al., 2003).

Drawing on dual-process theories of learning (e.g., Reber, 1993), Kahneman (2003) suggests that the accessibility of Type 1 to an individual is a continuum. When an individual has learned a challenging task through slow Type 2 processes and has become very experienced and skilled, Type 1 can take over and allow for automatic, reflexive responses and intuitive decision making (Kahneman, 2003; Osman, 2004). This is a top-down way to learn a cognitive skill, where explicit (declarative) knowledge is refined with practice to become easily accessed procedural knowledge (Anderson, 1982; Sun et al., 2001). A good example is learning how to drive. At first, simultaneously operating the vehicle and obeying the rules of the road is difficult but soon we perform these actions with little thought. Examples of apparent Type 2 processes proceeding to Type 1 can also be seen in over-trained laboratory animals. Research done with rats showed that over-training them on a rewarding lever-pressing activity transforms this originally goal-directed behavior into a simple habit that is autonomous of its original goal (Dickinson, 1985). Similarly, with extended training, bumblebees (Bombus terrestris audax) switch from a conceptual solution to a task to a simple heuristic solution (MaBouDi et al., 2020). Learning cognitive skills can also proceed in a bottom-up fashion, where a series of complex actions are learned through associative learning. Here, individuals select actions over time that lead to positive reinforcement and avoid those that lead to negative reinforcement. This type of skill learning does not require explicit (declarative) knowledge initially to build procedural knowledge (Scott, 2016; Sun et al., 2001), which may be the way that many animals learn cognitive skills.

2.1 Why would two types of cognition be necessary?

Why would natural selection favor two cognitive processes, when the energy available for neural tissue is finite and usable for other purposes? Evidence from the development of AI has shown that systems that utilize a similar dual framework achieve the best performance. For example, AlphaGo achieved great success at the strategy game of Go by combining a quick deep neural network with a slower tree search. Analogous to Type 1 processing, the deep neural network rapidly learns the correlation between the configuration of the board and its policy values (i.e., the reward) (Kelly & Barron, 2022; Silver et al., 2016). Analogous to Type 2 processing, the slower tree search takes in the current state of the game board and models the outcome of possible future moves. While both systems can function separately, optimal performance was seen when they were allowed to interact, with the deep neural network rapidly assessing the board configuration (i.e., defining the problem space) and constraining the tree search to moves that were more likely to be beneficial (Kelly & Barron, 2022; Silver et al., 2016). Feedback could also go in the other direction, with moves that the tree search determined to be beneficial being fed back into the neural network, improving the function of the network over time; this is analogous to Type 2 processing being transferred to Type 1 through practice and repetition (Kelly & Barron, 2022; Silver et al., 2016).
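The division of labor described above can be illustrated with a toy Python sketch. This is not AlphaGo's algorithm, which pairs policy and value networks with Monte Carlo tree search (Silver et al., 2016); it is a deliberately simplified, hypothetical example in which a cheap scoring rule (`fast_prior`, standing in for Type 1) shortlists moves and an exhaustive, costly evaluation (`slow_evaluate`, standing in for Type 2) searches only among the shortlisted ones. The move set, payoff function, and shortlist size `k` are all assumptions chosen for illustration.

```python
from itertools import product

MOVES = range(5)  # hypothetical action set
DEPTH = 3         # hypothetical number of sequential choices


def slow_evaluate(path):
    """'Type 2-like' step: costly, exact evaluation of a whole move sequence.
    Toy payoff (an assumption): sum of moves, minus 3 for each immediate repeat."""
    repeats = sum(1 for a, b in zip(path, path[1:]) if a == b)
    return sum(path) - 3 * repeats


def fast_prior(move):
    """'Type 1-like' step: a cheap guess about a move's value (here, bigger is better)."""
    return move


def search(candidate_moves):
    """Exhaustively evaluate every sequence built from the candidate moves."""
    evaluations = 0
    best_path, best_value = None, float("-inf")
    for path in product(candidate_moves, repeat=DEPTH):
        evaluations += 1
        value = slow_evaluate(path)
        if value > best_value:
            best_path, best_value = path, value
    return best_path, best_value, evaluations


def guided_search(k):
    """Let the fast prior keep only the top-k moves, then search among those."""
    shortlist = sorted(MOVES, key=fast_prior, reverse=True)[:k]
    return search(shortlist)


full = search(MOVES)
guided = guided_search(k=2)
print(full)    # ((4, 3, 4), 11, 125)
print(guided)  # ((4, 3, 4), 11, 8)
```

In this toy case the guided search reaches the same best sequence after 8 evaluations rather than 125; with a misleading prior, however, the same pruning could just as easily discard the best move.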

It is important to remember however, that AI is developed without the constraints that evolution bestows upon animal brains (Cisek, 2022; Pessoa et al., 2021), so examples from neuroarchitecture that actually evolved through selective processes are more informative. Research on the mushroom bodies of insect brains provides such an example and shows that their dual-process systems allow the filtering of background stimuli to focus attention on certain aspects of the environment (e.g., the location of food and water), giving them the ability to learn (reviewed in: Kelly & Barron, 2022). Here, Type 1 systems provide “attentional filtering” that allows Type 2 processes to be more efficient, making them extremely beneficial in insect evolution.

2.2 Criticisms of DPT

Dualistic decision models have been extremely popular in the cognitive and behavioral sciences (Gawronski & Creighton, 2013) and often provide a satisfactory explanation for research results (Evans, 2012). In neuro- and behavioral economics, DPT has particularly influenced research in the Heuristics and Biases tradition (the study of judgment and decision-making under risk and uncertainty) and the refinement of the interpersonal and intertemporal choice tradition (the study of time preferences and impulse control) (Grayot, 2020). However, the last two decades have brought intense scrutiny to DPT, and criticisms have been building (Gigerenzer & Regier, 1996; Grayot, 2020; Keren & Schul, 2009; Keren, 2013; Kruglanski & Gigerenzer, 2011; Osman, 2004). Of the criticisms that have been articulated, the most important for our purposes are the following: (1) DPT was developed in an ad hoc way, to explain a “known” phenomenon, and thus is often presented as irrefutable. (2) The models are often conceptually vague and imprecise, which explains why many research findings can be accommodated within them. (3) The dual systems proposed by DPT do not seem to be discrete. Regarding this third criticism, it has been pointed out that it is not obvious exactly what distinguishes Type 1 from Type 2 processing (Newstead, 2000; Osman, 2004) and, if there are two systems, it is unclear how they interact, with scholars arguing for both parallel and sequential operation (Evans, 2008; Keren & Schul, 2009). Specific neuroanatomical areas have not been identified for each system (Keren, 2013; Osman, 2004), and indeed, evidence indicates that brain regions associated with both systems overlap and crosscut one another (Evans & Stanovich, 2013; Grayot, 2020; Keren & Schul, 2009; Mugg, 2016). (4) Importantly, DPT was not developed with consideration of evolutionary processes and their effects on biological systems. 
This evidence all suggests that rather than two distinct systems, cognitive processes are more of a continuum from pre-attentive processing to greater degrees of investment and analyses, and that a binary view is incorrect (Keren & Schul, 2009; Kruglanski & Gigerenzer, 2011; Newstead, 2000). Grayot (2020) also importantly points out that although DPT often works well to explain behavior in laboratory settings, its applicability outside of these controlled conditions has been questioned.

3 BAYESIAN DECISION THEORY

BDT is another important psychological construct for understanding decision-making. BDT focuses on the fact that decisions are almost always made under uncertainty, with some probability of risk (Ellsberg, 1961), and uses probabilistic inference (Laplace, 1820) by applying Bayesian statistics to the decision-making framework. BDT expects that an individual should have preferences for actions based on “the expected utility of their consequences and the conditional expected utility of their consequences given their performance” (Bradley, 2007; Jeffrey, 1983; Savage, 1972: p. 234). Within decision theory, the value of each outcome is given by a utility function, $U(\text{outcome})$, and the goal of any decision should be to maximize utility. In a particular situation, a decision $a$ leads to an outcome $x$ only with some probability, so each decision is associated with a probability distribution over outcomes, $p(\text{outcome} = x \mid \text{decision} = a)$ (Körding, 2007). Thus, to make the best decision, an individual should combine the utility function with the distribution of outcomes from their past decisions to obtain an expected utility, $E[U(a)] = \sum_{x} p(x \mid a)\,U(x)$. Adding Bayesian statistics to this framework allows new sensory information on the current state of, or current beliefs about, the decision-making environment (the likelihood) to be integrated with information from the past (the prior) to create a posterior distribution using Bayes' rule, $p(\text{state} \mid \text{data}) \propto p(\text{data} \mid \text{state})\,p(\text{state})$. Finally, a cost function is added, which is the quantity the decision-maker would like to minimize (Körding, 2007; Ma, 2019; Figure 1). This should lead to the most accurate decisions, given that the decision-maker is always in a position of uncertainty. 
Therefore, BDT allows a way for our beliefs to be combined with our utility function and provides a mathematical framework for decision-making that can be used to build psychological models (Körding, 2007; McNamara & Chen, 2022).
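A minimal sketch of these steps, assuming a hypothetical animal deciding whether to approach a food patch that may be safe or risky. The states, the alarm-call cue, the likelihoods, and the utilities are all invented for illustration. The code applies Bayes' rule to combine the prior with the cue and then picks the action with the highest expected utility (equivalently, the lowest expected cost, if cost is taken as negative utility).

```python
def posterior(prior, likelihood, observation):
    """Bayes' rule: p(state | obs) is proportional to p(obs | state) * p(state)."""
    unnormalized = {s: likelihood[s][observation] * p for s, p in prior.items()}
    total = sum(unnormalized.values())
    return {s: v / total for s, v in unnormalized.items()}


def expected_utility(belief, utility, action):
    """E[U(a)]: utility of each state's outcome, weighted by the belief in that state."""
    return sum(p * utility[action][s] for s, p in belief.items())


def best_action(belief, utility):
    """Choose the action that maximizes expected utility under the current belief."""
    return max(utility, key=lambda a: expected_utility(belief, utility, a))


# Hypothetical numbers for illustration only.
prior = {"safe": 0.7, "risky": 0.3}
likelihood = {                       # p(cue | state)
    "safe": {"alarm": 0.1, "quiet": 0.9},
    "risky": {"alarm": 0.8, "quiet": 0.2},
}
utility = {                          # U(action, state)
    "approach": {"safe": 10, "risky": -10},
    "avoid": {"safe": 0, "risky": 0},
}

print(best_action(prior, utility))        # approach
belief = posterior(prior, likelihood, "alarm")
print(round(belief["safe"], 3))           # 0.226
print(best_action(belief, utility))       # avoid
```

With these numbers, the prior alone favors approaching (expected utility 4 versus 0), but after an alarm call the posterior probability that the patch is safe drops to about 0.23, and avoiding becomes the better choice.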

BDT predicts that individuals should make near-optimal decisions in stable environments because their knowledge of the prior distribution should be accurate, and by using Bayesian updating procedures, they can update their assessment of the current situation before making a choice (McNamara et al., 2006). We do not have to assume that animals are doing complicated mathematics because natural selection should favor those that behave as if they are using Bayesian updating (Trimmer et al., 2011), and the same can be said of humans (Domurat et al., 2015; Wu et al., 2017). Thus, under predictable distributions of outcomes, simple heuristics can be learned or can evolve to produce decisions that approach Bayesian optima with little cognitive cost to the decision-maker (Gigerenzer & Todd, 1999; Lange & Dukas, 2009; McNamara & Houston, 1980; Trimmer et al., 2011). Indeed, Higginson et al. (2018) have shown that a simple rule for how intensely to forage, based solely on an animal's physiological state (i.e., energy reserves), performs almost as well as optimal Bayesian learning.
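One way to make Bayesian updating concrete is a conjugate Beta-Bernoulli model of a food patch's success rate, sketched below alongside a cheap running-average rule. The visit sequence, the learning rate, and the stay-or-leave threshold are hypothetical, and the running-average (linear-operator) rule is only one example of a simple heuristic; it is not the state-based rule studied by Higginson et al. (2018).

```python
def beta_update(alpha, beta, outcomes):
    """Conjugate Bayesian updating: a Beta(alpha, beta) prior over a patch's
    success probability becomes Beta(alpha + successes, beta + failures)."""
    successes = sum(outcomes)
    return alpha + successes, beta + len(outcomes) - successes


def linear_operator(estimate, outcomes, rate):
    """Cheap rule of thumb: nudge a running estimate toward each new outcome."""
    for outcome in outcomes:
        estimate += rate * (outcome - estimate)
    return estimate


visits = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1]  # hypothetical: 1 = found food, 0 = did not

a, b = beta_update(1, 1, visits)          # uniform Beta(1, 1) prior
bayes_mean = a / (a + b)                  # posterior mean success probability
rule_estimate = linear_operator(0.5, visits, rate=0.3)

THRESHOLD = 0.5  # hypothetical: stay in the patch if expected success exceeds this
print(bayes_mean)                                          # 0.75
print(bayes_mean > THRESHOLD, rule_estimate > THRESHOLD)  # True True
```

In this toy sequence both estimates exceed the threshold, so both would lead to the same decision, even though only the Bayesian version tracks a full posterior distribution.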

How information is presented also affects how well humans perform Bayesian reasoning. Gigerenzer and Hoffrage (1995) posed the same diagnostic problem in two formats. In the standard probability format:

The probability of breast cancer is 1% for a woman at age 40 who participates in routine screening. If a woman has breast cancer, the probability is 80% that she will get a positive mammography. If a woman does not have breast cancer, the probability is 9.6% that she will also get a positive mammography. A woman in this age group had a positive mammography in a routine screening. What is the probability that she actually has breast cancer? ____%

In the natural frequency format:

Ten out of every 1,000 women at age 40 who participate in routine screening have breast cancer. Eight of every 10 women with breast cancer will get a positive mammography. Ninety-five out of every 990 women without breast cancer will also get a positive mammography. Here is a new representative sample of women at age 40 who got a positive mammography in routine screening. How many of these women do you expect actually have breast cancer? ___ out of ___.

In this format, based on sampling with experience, the base rate is preserved but contained in the joint frequencies and thus can be ignored, which greatly simplifies the calculation (Gigerenzer & Hoffrage, 1995; McDowell & Jacobs, 2017). Gigerenzer and Hoffrage (1995) found that when the problem is framed this way, the correct Bayesian solution was given by 46% of participants, relative to 16% in the textbook version of the question. Subsequently, extensive research has shown that how information is presented, and how it is structured, are important for Bayesian inference; this has been called the natural frequency facilitation effect (reviewed in: Brase & Hill, 2015; Johnson & Tubau, 2015; McDowell & Jacobs, 2017). Performance in humans is further increased when visual aids or interactive, experiential problems are presented (McDowell & Jacobs, 2017).
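The arithmetic behind the two formats can be checked with a short sketch. The function names are hypothetical; the numbers are those given in the two versions of the mammography problem. Both routes give roughly 7.8%, i.e., about 8 of the 103 women who test positive actually have breast cancer.

```python
def posterior_from_probabilities(base_rate, hit_rate, false_alarm_rate):
    """Standard probability format: Bayes' rule with an explicit base rate."""
    true_pos = base_rate * hit_rate
    false_pos = (1 - base_rate) * false_alarm_rate
    return true_pos / (true_pos + false_pos)


def posterior_from_frequencies(true_positives, false_positives):
    """Natural frequency format: the base rate is already folded into the counts."""
    return true_positives / (true_positives + false_positives)


p = posterior_from_probabilities(0.01, 0.80, 0.096)
f = posterior_from_frequencies(8, 95)
print(f"{p:.1%}  {f:.1%}")  # 7.8%  7.8%
```

The frequency version needs one division of two counts, whereas the probability version requires weighting both conditional probabilities by the base rate first, which is the step most participants skip.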

3.1 Criticisms of BDT

The criticisms of BDT are not as substantive as those of DPT, perhaps because BDT is newer. Bayesian modelers have been accused of not sufficiently considering alternative models (Bowers & Davis, 2012), while Bayesian models have been criticized as overly flexible, difficult to falsify (Bowers & Davis, 2012), and not representing actual probabilities (Block, 2018; Howe et al., 2006). Those using BDT have argued against these criticisms, differentiating between weak and strong modeling procedures (Ma, 2019; McNamara & Chen, 2022). Like DPT, BDT has also been criticized because the exact neural processes that implement Bayesian decisions are not clear (Block, 2018; Jones & Love, 2011); however, this is currently a promising and active area of research (Ma, 2019). Finally, Jones and Love (2011) argue that BDT does not adequately consider the computational constraints individuals face due to limited processing and memory (i.e., bounded rationality, Simon, 2000), which we discuss further below.

4 COMMONALITIES BETWEEN DPT AND BDT

DPT and BDT intersect in several key areas. Both assume agents are rational, both emphasize the importance of considering the values and probabilities of different outcomes when making decisions, and both recognize the role of cognitive biases and heuristics. However, DPT assumes that individuals use logical inference and stresses that biases and errors in judgments are frequent (De Neys & Pennycook, 2019; Frankish, 2010), while BDT assumes that individuals use probabilistic inference and are relatively good at making decisions based on it (Poole, 2000).

Importantly, DPT and BDT both assume that routine, frequent, commonly encountered situations lead to the evolution of quick heuristic decision-making. The similarities between these approaches are most evident when DPT theorists apply “conflict resolution paradigms” in testing their models, as these work by cueing participants to a probability distribution that should elicit an intuitive response, and then introducing a conflict that goes against this probability to initiate Type 2 processing (Evans, 2007). This literature and the dual-process learning literature, which examines how a skill learned via Type 2 can slowly be allocated to unconscious Type 1 processing, both demonstrate that the binary (0–1) view of cognitive processing used in DPT is unlikely to be correct and indeed is often not utilized by DPT theorists themselves (Keren & Schul, 2009). Any trigger of conscious analyses in DPT is labeled as Type 2 processing (i.e., the binary representation of no thinking vs. some thinking), but this Type 2 process could represent anything from a quick check of the impressions of Type 1 to the intensive processing needed to solve a long math equation or to write this article. This variation in cognitive effort is more accurately thought of as a continuum rather than one entity (Keren & Schul, 2009; Newstead, 2000), and if one conceives of Type 2 processing as a continuum (or a distribution of mental effort), it fits nicely into the BDT framework of probabilistic reasoning (Sih, 2013).

5 WILD ANIMAL DECISION-MAKING

What, if anything, can our overview of DPT and BDT tell us about how wild animals make decisions? Animals are motivated to move around and make decisions in their habitat due to the four “F's” (Dill, 2017): food (distribution and abundance), fornication (mating opportunities), fear (fleeing or avoiding risks), and fighting (conspecific competition) (Finnerty et al., 2022). Within these varied situations, when are decisions more likely to be made with quick heuristics versus more intense processing? Or, put another way, what triggers the switch from intuition to deliberation? Note that for the remainder of the paper we consider Type 2-like processes as involving a continuum of cognitive or mental effort, the degree of which depends on the situation or problem encountered. We define mental effort following Shenhav et al. (2017: pp. 100–101) as, “mediating between a) the characteristics of a target task and the subject's available information processing capacity and b) the fidelity of the information-processing actually performed, as reflected in task performance”.

Both DPT and BDT would predict that quick, immediate reactions should occur when primed by predictable features of the environment (Toates, 2006). In line with this, Carpenter and Williams (1995) found that situations that occur with a higher probability are more likely to result in quick Type 1-like decisions for people. Thus, commonly encountered, routine situations should lead to the development of a heuristic reflexive action that allows the decision-maker to react quickly with little thought. It follows then that novel environmental circumstances or situations that occur rarely could be expected to trigger more intensive processing because there is little precedent (yet) for how to deal with them (Trimmer et al., 2008). Indeed, evidence from animals supports use of a decision-making framework where they evaluate the probability of a given outcome and base their decisions on the distribution of outcomes from past situations that were like the current situation (Valone, 2006; Lange & Dukas, 2009).

In associative dual-process models of behavior, habitual, Type-1-like responses in animals are assumed to develop via Thorndike's (1911) law of effect, where the experience(s) of receiving a reward after a certain response strengthens the association between the context and the response, such that later, the same context directly primes the response (Wit & Dickinson, 2009). In a paradigm used often in laboratories, animals first learn, via goal-directed action, that in the context of certain Stimuli (S), a Response (R) leads to a desired Outcome (O) (R-O association). With repeated experience, the R-O contingency is degraded, and a Stimulus-Response (S-R) association ends up driving the expression of the behavior, even in the absence of the desire or need for the Outcome (i.e., the behavior becomes habitual and no longer goal-directed) (Dickinson, 1985, 1994; Wit & Dickinson, 2009). A hallmark of habitual behavior in this framework is that it persists even after the outcome has been devalued (Wit & Dickinson, 2009). For wild animals, survival is a constant struggle to attain resources and avoid predators, outcomes that are unlikely to be devalued, even if they are the result of apparent habitual behavior. Importantly, however, this research shows that animals can alter their habitual behavior, which becomes goal-directed once again when the stimulus or reinforcer is less predictable or when the context changes (Bouton, 2021). If we assume that goal-directed behavior requires more mental effort, then in DPT terms, animals in this situation would be switching from Type 1 back to Type 2. Thus, greater processing is suggested to be needed for cues that have an unpredictable or uncertain relation to an outcome (Hogarth et al., 2008; Kaye & Pearce, 1984; Pearce & Hall, 1980; Wilson et al., 1992). This is related to the Rescorla-Wagner model of Pavlovian conditioning (Rescorla & Wagner, 1972; Wagner & Rescorla, 1972), which has a great amount of support (Miller et al., 1995). This model shows that the amount of associative learning that occurs on a trial-by-trial basis decreases as an outcome becomes fully predicted by a stimulus. However, when the outcome that follows a stimulus is surprising, associative learning is faster (Courville et al., 2006; O'Reilly, 2013), and presumably requires some mental effort.
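The Rescorla-Wagner update can be sketched for a single cue, where $V$ is associative strength, $\lambda$ is the maximum strength the outcome can support (1 when the outcome occurs, 0 when it is omitted), and $\alpha$ and $\beta$ are learning-rate parameters for the stimulus and the outcome: $\Delta V = \alpha\beta(\lambda - V)$. (With several cues present, $V$ is replaced by the summed strength of all cues.) The training schedule and the value $\alpha\beta = 0.3$ below are hypothetical. The size of each change shrinks as the outcome becomes predicted, and a surprising omission produces a large change again.

```python
def rescorla_wagner(outcomes, alpha_beta=0.3, v0=0.0):
    """Single-cue Rescorla-Wagner: V <- V + alpha*beta*(lambda - V).
    Returns the final associative strength and the change on each trial."""
    v, changes = v0, []
    for lam in outcomes:                 # lam = 1.0 if the outcome occurs, 0.0 if omitted
        delta = alpha_beta * (lam - v)   # prediction error scaled by the learning rate
        v += delta
        changes.append(delta)
    return v, changes


# Hypothetical schedule: 10 reliable cue-outcome pairings, then one surprising omission.
v, changes = rescorla_wagner([1.0] * 10 + [0.0])
print([round(d, 3) for d in changes[:4]])          # [0.3, 0.21, 0.147, 0.103]
print(round(changes[-2], 3), round(changes[-1], 3))  # 0.012 -0.292
```

By the tenth pairing the outcome is almost fully predicted and learning has nearly stopped, whereas the unexpected omission yields a change more than twenty times larger.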

Similarly, mismatches between expectations and reality (or between prior and posterior beliefs) are suggested by many DPT theorists to trigger Type 2 analytic thinking. When a conflict is detected between the assessment of the situation and the quick, intuitive decision of Type 1, Type 2 processes are suggested to be engaged (e.g., De Neys & Glumicic, 2008; Evans, 2007, 2009; Pennycook, 2017). In conflict resolution paradigms, when humans are given a problem where the correct answer goes against prior cueing of the probability of that being the correct answer (i.e., a conflict is present), relative to non-conflict problems they show greater response times, less confidence in their answer, and activation in brain regions that are thought to detect and mediate conflict (reviewed by: Bago & De Neys, 2017).

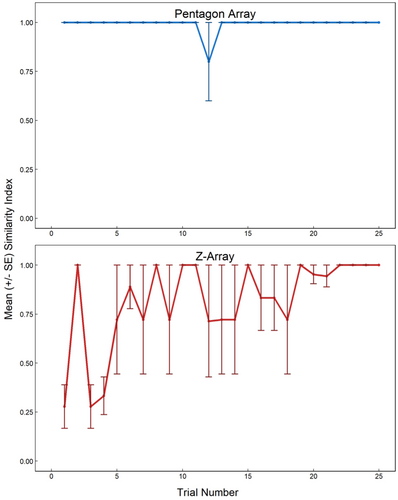

Field experiments can provide valuable insights into how animals make decisions. Our research on foraging decision-making by wild vervet monkeys (Chlorocebus pygerythrus) in small-scale navigation arrays shows that the computational complexity of the problem presented to the monkeys determines whether they immediately respond with heuristic solutions or show evidence of greater deliberation and mental effort. Our foraging arrays test optimal foraging theory (Krebs & Stephens, 2019) and require the animals to find the shortest path through a set of baited targets, thereby saving energy in acquiring a reward. These arrays mimic the Traveling Salesman Problem, a combinatorial optimization problem: arrays with fewer targets, or with simple solutions that conform to heuristic strategies, are more easily solved than those with more platforms and layouts that do not lend themselves to simple decision rules. When we used a simple pentagon foraging array in which all five platforms were baited identically and the shortest path was a circuit around the platforms, consistent with at least two heuristic rules (Kumpan et al., 2019; Teichroeb & Aguado, 2016), the monkeys immediately used this optimal path and rarely deviated from it (Figure 2). However, when we used a more difficult array with six identically baited platforms (i.e., the Z-array in Teichroeb & Smeltzer, 2018), the vervets had to work harder to find short, relatively optimal routes, and they sampled more options before settling on a route that they used with increasing frequency over time (Figure 2). We interpret this as evidence of greater mental effort in the Z-array to find paths closer to optimal than those they initially used.
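The contrast between easy and hard arrays can be illustrated with a small routing sketch that compares a simple heuristic (here, the nearest-neighbor rule) against an exhaustive search for the shortest open route. The coordinates are hypothetical: the first layout is a regular pentagon of unit radius, and the second is an invented arrangement in which the nearest-neighbor rule fails. Neither reproduces the actual field arrays, and the monkeys are not assumed to use either algorithm.

```python
import itertools
import math


def dist(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])


def route_length(start, order, platforms):
    """Length of an open route from `start` through platforms in `order`."""
    total, here = 0.0, start
    for i in order:
        total += dist(here, platforms[i])
        here = platforms[i]
    return total


def nearest_neighbor_route(start, platforms):
    """Heuristic: always move to the closest unvisited platform."""
    unvisited, here, order = set(range(len(platforms))), start, []
    while unvisited:
        nxt = min(sorted(unvisited), key=lambda i: dist(here, platforms[i]))
        order.append(nxt)
        unvisited.remove(nxt)
        here = platforms[nxt]
    return order


def optimal_route(start, platforms):
    """Exhaustive search over all n! visiting orders (feasible only for small n)."""
    return min(itertools.permutations(range(len(platforms))),
               key=lambda order: route_length(start, order, platforms))


# Hypothetical pentagon: start at one vertex and visit the other four.
pentagon = [(math.cos(2 * math.pi * k / 5), math.sin(2 * math.pi * k / 5)) for k in range(5)]
p_start, p_platforms = pentagon[0], pentagon[1:]
p_nn = route_length(p_start, nearest_neighbor_route(p_start, p_platforms), p_platforms)
p_opt = route_length(p_start, optimal_route(p_start, p_platforms), p_platforms)

# Hypothetical "hard" layout in which the nearest platform is a trap.
h_start, h_platforms = (0.0, 0.0), [(1.0, 0.0), (-2.0, 0.0), (3.5, 0.0)]
h_nn = route_length(h_start, nearest_neighbor_route(h_start, h_platforms), h_platforms)
h_opt = route_length(h_start, optimal_route(h_start, h_platforms), h_platforms)
```

On the pentagon the heuristic route matches the optimum (four sides of the pentagon), whereas on the second layout it is longer (9.0 versus 7.5 units). Because exhaustive search grows as n!, adding platforms quickly makes finding the optimal route computationally costly.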

Other studies on humans (Franco et al., 2021; Murawski & Bossaerts, 2016) and nonhuman primates (Hong & Stauffer, 2023) have also demonstrated that as computational complexity increases, mental effort and deliberation time increase and performance decreases. Hong and Stauffer (2023) found that rhesus macaques (Macaca mulatta) in their laboratory sought to optimize their access to rewards and applied different algorithms depending on the complexity of the problem presented. Importantly, as problems increased in complexity, the monkeys became increasingly likely to satisfice, and each individual had a satisficing threshold beyond which the time and effort needed to find the optimal solution was apparently judged too great. Satisficing is defined as finding solutions that are subjectively satisfactory even though they are not optimal (Simon, 1956). Thus, satisficing was observed when the additional cognitive effort was not worth the marginal gain in reward over what a simple heuristic would attain (Trimmer, 2016).
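The cost-benefit logic behind a satisficing threshold can be sketched with a deliberately simple rule: search for the optimal route only if the expected gain over a heuristic route exceeds the cost of evaluating every possible visiting order. The linear cost per evaluated route, the exhaustive-search assumption, and the parameter values are ours, for illustration only, and are not taken from Hong and Stauffer (2023).

```python
import math


def choose_strategy(expected_gain, n_targets, cost_per_route):
    """Return 'optimize' if exhaustive search pays for itself, else 'satisfice'.

    expected_gain: hypothetical extra reward (e.g., energy saved) from the optimal
        route relative to a heuristic route.
    cost_per_route: hypothetical cognitive cost of evaluating one visiting order.
    """
    search_cost = cost_per_route * math.factorial(n_targets)  # n! orders to check
    return "optimize" if expected_gain > search_cost else "satisfice"


def satisficing_threshold(expected_gain, cost_per_route, max_targets=20):
    """Smallest number of targets at which satisficing becomes the better choice."""
    for n in range(1, max_targets + 1):
        if choose_strategy(expected_gain, n, cost_per_route) == "satisfice":
            return n
    return None


# Two hypothetical individuals with different effort costs.
careful = satisficing_threshold(expected_gain=2.0, cost_per_route=0.1)
hasty = satisficing_threshold(expected_gain=2.0, cost_per_route=1.0)
```

Because search cost grows factorially with the number of targets, individuals with different effort costs end up with different, though nearby, thresholds (4 and 2 targets in this toy example).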

Our foraging experiments comparing multiple primate species have also demonstrated that species solving a problem that resembles what they experience in their natural daily foraging respond with quick, invariable, near-optimal, heuristic solutions, whereas species that do not typically feed on stationary, renewing resources like our foraging platforms show a pattern of iterative trial-and-error learning and improvement over time, indicative of greater mental effort (Kumpan et al., 2022). Thus, dietary niche influenced whether an animal was prepared by its past experience and evolutionary history (McNamara & Houston, 1980; McNamara et al., 2006) to respond to our experimental arrays with Type 1-like or Type 2-like processes.

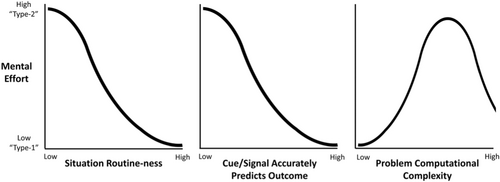

Taken together, this evidence suggests that fast, intuitive, Type 1-like responses should be seen in routine situations, for predictable signals/cues that have a certain (i.e., known and constant) relationship with an outcome, and for easy problems that experience or evolutionary history has prepared the individual for. Conversely, mental effort should increase as situations become more novel, as signals/cues become less predictable or more surprising, when these cues have an uncertain relationship to an outcome, and as problems increase in computational complexity (Figure 3). The caveat is that when perceived computational complexity is too great, an easy, satisficing heuristic may be preferred to save effort (Hong & Stauffer, 2023; Trimmer, 2016).

6 ECOLOGICAL AND SOCIAL CONTEXTS TRIGGERING INCREASING COGNITIVE EFFORT

Animals use the ecological and social information they sample to form rational expectations about the future and to generate decision rules (Garber et al., 2009). Because Type 2-like decision-making is more likely when individuals find themselves in novel, unpredictable circumstances, animals that live in more complex ecological or social environments may be unable to rely on heuristic decision-making as often as those in simpler environments. Here we present hypotheses for how ecological and social factors may trigger Type 2-like cognition differently in animals with different socio-ecologies.

6.1 Ecological considerations

Ecological landscapes are complex, and animals are constantly adapting to their changing environments by altering their behavior (Tomasello & Call, 1997). According to Farina (2009), more heterogeneous habitats and the use of resources that must be located with different sensory modalities increase the cognitive complexity of landscapes. Examining these variables on a broad scale for animals in different dietary niches, we see that habitat heterogeneity is influenced by (1) plant and animal species richness, (2) seasonal variation in resource abundance, and (3) home range size. Plant and animal species richness decreases, and seasonal variance in resource availability increases, with latitude and altitude (Jinsheng & Weilie, 1997; Moeslund et al., 2013), but if animals are adapted to their environment and seasonality is predictable, simple heuristics may suffice for decision-making in these habitats. Home range size is largely determined by an animal's body mass and diet: larger animals need bigger ranges, and range size is greatest for carnivores, smaller for omnivores, and smallest for herbivores (Tucker et al., 2014). This diet-based ordering of range size also correlates generally with the predictability of food resources and, to some degree, with the sensory modalities needed to find and use them. For carnivores, food is mobile, unpredictable, and sparsely distributed (Carbone et al., 2007; Kelt & Van Vuren, 2001) and must be located using multiple sensory modalities (Leavell & Bernal, 2019). Omnivores experience a mix of spatially stable, predictable food sources and mobile, unpredictable prey and, as a result, may need to use even more sensory modalities than carnivores to locate different food sources. The herbivore category can be broken down into several smaller categories, such as frugivores, granivores, nectarivores, and folivores, each with its own set of adaptations.
Frugivores, granivores, and nectarivores typically rely on ephemeral, clumped food sources that vary seasonally in their spatiotemporal availability, and as a result they often have moderately large home ranges (Milton, 1981). In contrast, folivores and grazing herbivores feed on vegetation that is more predictable in space and time and less patchily distributed, and their home ranges tend to be small (Tucker et al., 2014). It could be argued that folivores and grazing herbivores also locate their food using fewer sensory modalities than frugivores, granivores, and nectarivores, but this depends on the species and its exact niche and adaptations. There is considerable evidence within the primate order that relying on fruit presents animals with more complex problems than relying on leaves (Milton, 1981), and that this has contributed to the cognitive development of frugivores (DeCasien et al., 2017; DeCasien & Higham, 2019). Overall, these considerations suggest that carnivores and omnivores must engage in Type 2-like cognitive effort more often than animals relying solely on plant foods.

Regarding the seasonality of resources, the harshness of the low-availability seasons may be important in determining the need for greater mental effort. Animals that can predict resource access can buffer nutritional stress in seasons of low food abundance (Zuberbühler & Janmaat, 2010). Phenological synchrony among trees of similar species adds an element of temporal periodicity that helps make an environment more predictable. However, depending on how extreme seasonality is in a given habitat, periods of low food availability may require resource switching and lead to the need for Type 2-like cognitive processing. For example, in the tropical forests of Central and South America, primates consume nectar during dry seasons when fruit abundance is low. Nectar can be a good source of sugar and water along with micronutrients, but it can be costly to acquire because it occurs in small, depletable patches. Thus, primates relying on nectar would be expected to minimize the cost of acquiring it relative to the energetic gains available (Garber, 1988). Indeed, tamarins (Saguinus fuscicollis and S. mystax) were found to use a conserved set of sequential steps to access patches of nectar from Symphonia globulifera flowers. Their movements towards these resources relied on learning and memory to reduce travel and approach the reward directly, and they traveled farther to reach feeding sites of higher preference, bypassing low-preference sites, which might indicate decision-making based on an expectation of reward (Garber, 1988). The self-control needed to bypass an immediate food reward in favor of moving towards other sites, where a greater or a different reward is expected, suggests the use of cognitive effort (Janson, 2007).

Low-productivity areas and seasonal environments may increase information-seeking behavior in animals, which involves metacognition (i.e., knowing what you do not know and seeking to remedy it; Roberts et al., 2012). Information seeking can provide precise updates about the current environment and be used to update cognitive maps (Hunt et al., 2021; Poucet, 1993). Although optimal foraging constrains animals to attempt to maximize their energy intake over shorter time scales, exploratory movements over the longer term allow them to gather information that may reduce the overall variance in energetic output (Spiegel & Crofoot, 2016). For example, Janmaat and Chancellor (2010) observed that gray-cheeked mangabeys (Lophocebus albigena johnstonii) appeared to explore a newly colonized area inefficiently, covering large swaths to gather information and become acquainted with the resources. However, the group's decisions followed logical rules: they traded off predation risk for increased foraging efficiency by traveling in a more spread-out fashion in the newly colonized forest, and they revisited certain fig trees often to update their information on the trees' fruiting state. While this example seems to involve Type 2-like processes, information seeking can also involve Bayesian updating of information on food availability in the range, which would later support more heuristic, Type 1-like decision-making. For instance, animals acquire and update information during routine foraging by assessing resources en route to, or near, the food sources they are using, for future foraging (Janmaat et al., 2014; Janson & Byrne, 2007; Schmidt et al., 2010). This updating helps the forager anticipate the amount of food that will be available and its regeneration potential, so that it can control and pattern its access to resources (Tujague & Janson, 2017).
Indeed, agent-based models show that foraging efficiency increases when animals carry chronological memory that integrates the synchronicity in intra-species resource production, rather than just associative memory (Robira et al., 2021).
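The kind of Bayesian updating described above can be sketched as a Beta-Bernoulli model of one tree's fruiting state: each revisit is treated as a yes/no observation, and the posterior mean becomes the expectation that a quicker, heuristic rule (e.g., "go to the tree most likely to be fruiting") could later rely on. The `decay` parameter, which discounts older observations so that beliefs can track a tree whose fruiting state changes, is an illustrative assumption and is not taken from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class TreeBelief:
    """Beta(a, b) belief that a given tree currently has ripe fruit.

    Starts from a uniform Beta(1, 1) prior; `a` and `b` act as pseudo-counts
    of visits with and without fruit.
    """
    a: float = 1.0
    b: float = 1.0

    def update(self, fruiting: bool, decay: float = 1.0) -> None:
        # Shrink past evidence toward the prior (decay < 1 forgets faster).
        self.a = 1.0 + decay * (self.a - 1.0)
        self.b = 1.0 + decay * (self.b - 1.0)
        if fruiting:
            self.a += 1.0
        else:
            self.b += 1.0

    @property
    def p_fruiting(self) -> float:
        return self.a / (self.a + self.b)


# Three visits with fruit, then one visit with none.
stable, forgetful = TreeBelief(), TreeBelief()
for seen in [True, True, True]:
    stable.update(seen)
    forgetful.update(seen, decay=0.5)
after_three = stable.p_fruiting  # 4 / 5 = 0.8
stable.update(False)
forgetful.update(False, decay=0.5)
```

With full memory (`decay=1.0`) a single fruitless visit lowers the estimate only to 4/6, whereas the forgetful version drops below 0.5, reacting faster to a change in fruiting state at the price of noisier estimates.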

6.2 Group movements to find resources

Group living is beneficial for avoiding predation and for cooperative resource defense, but it also constrains individual decision-making because the group needs to reach a consensus when making movement decisions. In social species, group movements are a collection of individual decisions that often result in a consensus about when and where to move, and these individual decisions could be based on simple reactive rules or on more context-dependent, complex reasoning (Conradt & List, 2009; Williams et al., 2022). Heuristics are often used to maintain group cohesion, as many group movements follow self-organization principles, whereby individuals use simple spatial heuristics that lead to emergent, global group-level decisions (reviewed in: Couzin & Krause, 2003; Conradt & Krause, Couzin, et al., 2009; King & Sueur, 2011; Sueur & Deneubourg, 2011). For example, in starling (Sturnus vulgaris) murmurations, individuals use simple rules to coordinate their movements with their seven nearest neighbors, minimizing the effort and costs of cohesion (Bialek et al., 2008; Young et al., 2013). These self-organization principles may be most important during predation events, when all group members are at risk and share the same goal (stay alive!), so immediate and decisive action is needed (Trimmer et al., 2008). The heuristic in this case may be akin to the selfish herd principle: stay close to your neighbors and avoid the periphery (Hamilton, 1971).
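A minimal version of such a rule is sketched below: each bird turns toward the average heading of itself and its seven nearest neighbors, counted by rank rather than by distance, as in the starling example. Positions are held fixed and only alignment is modeled; the flock size, initial headings, and the absence of attraction and repulsion terms are simplifying assumptions, so this is a toy illustration of self-organized alignment rather than a model of real murmurations.

```python
import math
import random


def align_step(positions, headings, k=7):
    """One alignment update using each bird's k nearest neighbors (topological rule)."""
    new_headings = []
    for i, (xi, yi) in enumerate(positions):
        others = sorted((j for j in range(len(positions)) if j != i),
                        key=lambda j: math.hypot(positions[j][0] - xi, positions[j][1] - yi))
        group = [i] + others[:k]  # the bird itself plus its k nearest neighbors
        sx = sum(math.cos(headings[j]) for j in group)
        sy = sum(math.sin(headings[j]) for j in group)
        new_headings.append(math.atan2(sy, sx))  # circular mean heading of the group
    return new_headings


rng = random.Random(1)
positions = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(40)]
start = [rng.uniform(-1.0, 1.0) for _ in range(40)]  # initial headings in radians
headings = start
for _ in range(30):
    headings = align_step(positions, headings)

spread_before = max(start) - min(start)
spread_after = max(headings) - min(headings)
```

No bird in this sketch knows the flock's overall heading, yet the spread of headings shrinks as each individual repeatedly applies the same local rule, which is the sense in which group-level order emerges from simple heuristics.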

Self-organizing heuristics can lead to accurate outcomes, even with only a few informed leaders. Dyer et al. (2009) showed that a few informed individuals could accurately guide both small and large human groups towards a target without obvious communication. The impact of informed leaders is amplified by the participation of uninformed or unbiased individuals (Couzin et al., 2011; West & Bergstrom, 2011). In these cases, only the leaders may need to engage in mental effort. However, in many group movements, members can choose between roles (Petit & Bon, 2010) as leaders, followers, or influencers (i.e., individuals whose actions cause behavioral change in other group members; Strandburg-Peshkin et al., 2018), so the role an individual adopts may involve either little thought or more intensive cognitive effort. When a group member initiates movement to a new foraging patch, others may use simple heuristics to determine whether the destination and timing are optimal for their needs (e.g., Diffusion Model: Pelé & Sueur, 2013; Optimal Foraging strategies: Davis et al., 2022; Mimetism: Meunier et al., 2006; choice popularity: Tomlin, 2021). If consensus costs are high for an individual, Type 2-like processes may be initiated, and that individual may attempt to lead the group in another direction rather than support the current leader. Leading, especially when there are conflicts of interest, could be time-consuming and costly to the leader (e.g., mental energy, predation risk, and lost foraging time) and possibly to the group (e.g., increased time to reach consensus). Thus, in group movements, individuals must balance their current needs against potential risks (Pelé & Sueur, 2013) by using a combination of Type 1-like and Type 2-like decision-making.
When individuals' interests conflict over the timing and direction of group movements, more individuals may need to engage in intensive cognitive processing, but groups also use evolved democratic strategies to reach consensus and mitigate conflicts of interest (e.g., Davis et al., 2022; King & Cowlishaw, 2009; Strandburg-Peshkin et al., 2015).
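The informed-leader effect discussed above can be illustrated with a drastically simplified alignment model, loosely inspired by, but not identical to, the models used in this literature: every individual aligns with the group's mean heading, and a few informed individuals add a bias of weight `omega` toward a preferred direction. Group size, the number of informed individuals, `omega`, and the all-to-all alignment are illustrative assumptions.

```python
import math


def group_heading(n=50, n_informed=5, target=math.pi / 2, omega=0.5, steps=50):
    """Return the group's mean heading (radians) after `steps` updates.

    All individuals start heading east (0 rad). Uninformed individuals copy the
    direction of the group's mean heading vector; the first `n_informed`
    individuals add a bias of weight `omega` toward `target`.
    """
    headings = [0.0] * n
    gx, gy = math.cos(target), math.sin(target)
    for _ in range(steps):
        mx = sum(math.cos(h) for h in headings) / n
        my = sum(math.sin(h) for h in headings) / n
        headings = [
            math.atan2(my + omega * gy, mx + omega * gx) if i < n_informed
            else math.atan2(my, mx)
            for i in range(n)
        ]
    mx = sum(math.cos(h) for h in headings) / n
    my = sum(math.sin(h) for h in headings) / n
    return math.atan2(my, mx)


no_leaders = group_heading(n_informed=0)       # nobody knows the target
early = group_heading(n_informed=5, steps=5)   # 10% informed, shortly after departure
later = group_heading(n_informed=5, steps=50)  # 10% informed, after more updates
```

Under these assumptions, only the five informed individuals carry any information about the target, yet the whole group turns progressively toward it, while a group with no informed members keeps its original heading.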

6.3 Social complexity

The complexity of animals' social groupings may also influence the amount of daily cognitive effort they require when making decisions. Social organization (as defined by Kappeler & van Schaik, 2002, to include group size, spatiotemporal cohesion, and sex ratio) contributes to how predictable social life is for animals. Small, cohesive groups can develop routines easily and reach consensus more quickly (Papageorgiou & Farine, 2020). Larger groups have more potential for individual behavior to disrupt routines, and conflicts of interest are more likely to arise (Strandburg-Peshkin et al., 2015). As group cohesion decreases, the potential for disruptions to routine increases; thus, individuals in multilevel societies, and especially those in fission-fusion societies, may have to engage in greater mental effort more often. Group stability lends itself to predictability, but when animal aggregations are transitory or membership is inconsistent, relationships among group members are unclear (Parrish & Edelstein-Keshet, 1999). Thus, individuals may not know when they can feed near another individual, or who will and who will not direct aggression towards them, which suggests that they must pay attention and adjust continually.

Sex-segregated groups have no mating conflict and individual needs are mostly aligned, so these types of groups can probably rely on heuristic decision-making more often. When groups contain both males and females, there is potential for mating conflict and sexual coercion. In monogamous groups and polygynous groups with a single male, sexual conflict is less likely, as females have little choice of mate (Fedigan, 1992), although they can attempt extra-group copulations, instances that may call for strategic, Type 2-like thinking by both the female and the male in her group (Schillaci, 2006). Polygynandrous groups, with multiple males and multiple females, present the greatest potential for unpredictable situations and mate choice, leading to a greater need for mental effort (Shultz & Dunbar, 2007). Attraction to the largest, loudest, or flashiest mate may reflect a simple, Type 1-like consideration when the primary contribution to reproduction is genetic material. The development of bright and loud secondary sexual characteristics in many species indicates that, within the context of mate choice, bigger is often better (Ryan et al., 2019). A female need only update her knowledge of the variance in male quality for the current year and, based on her experience from previous years, she can make a relatively good mate choice (McNamara et al., 2006), although the effort she must invest in learning about male variance, and her acceptance threshold, may vary with conditions (Collins et al., 2006). Solitary and lek-forming species go through a similar mate choice process once mates are found and can be compared.

However, reproductive decision making can also involve analytic and calculated choices (Akre & Johnsen, 2014; Castellano et al., 2012; Kelley & Kelley, 2014; Stumpf & Boesch, 2005), especially when the decision-maker will remain in the same social group with their mate, who may provide fitness-altering resources, such as food availability, social capital, and infant care and protection (Archie et al., 2014; Silk et al., 2009; van Schaik & Kappeler, 1997). Reproductive decision making can include considerations of the perceived costs and benefits of the exchange, which may be influenced by factors such as attractiveness (e.g., size, strength, or perceived value), public information (e.g., dominance rank, outcomes of intrasexual contests, and outcomes of intersexual courtship displays), and private information (e.g., reproductive stage or condition of the decision-maker) (Buston & Emlen, 2003; Castellano et al., 2012; Noë & Hammerstein, 1995). Threats to offspring, such as infanticidal males or predators, are also key factors shaping reproductive and dispersal decisions. The need to confuse paternity among several males (Hrdy, 1979) but also concentrate paternity certainty in a dominant male, if he will remain high ranking, may require a series of strategic decisions and be especially important for animals with long life histories and altricial young (Sicotte et al., 2017; Stumpf & Boesch, 2005; Teichroeb et al., 2009, 2012; Zhao et al., 2011). However, it is important to consider that evolved strategies can also lead to these complex patterns, and they may not necessarily be the result of proximate decision-making.

Social structure, or the patterning of social relationships within the group (Kappeler & van Schaik, 2002), can also affect how routine and predictable everyday life is. If relationships are strictly differentiated by factors like rank and kinship, social interactions tend to be very predictable. Dominance hierarchies can function with simple rules (Hemelrijk, 2011; Hobson et al., 2021), as can choices of which individuals to form coalitionary relationships with (Range & Noë, 2005). It may only be at times of instability in the hierarchy, or when new individuals immigrate into the group, that mental effort must increase until dominance relationships are again settled. However, low-ranking individuals may need to use cognitive effort to outwit dominant individuals for contestable resources. Our field experiments have shown that how individuals experience intraspecific feeding competition can affect how frequently they need to use Type 2-like decision-making. In a recent experiment on wild vervet monkeys (C. pygerythrus), we showed that foraging monkeys engaged in complex decision-making when there was a risk of losing food to conspecific competitors (Arseneau-Robar et al., 2022). When choosing which route to take, decision-makers quickly incorporated information on who was in the audience, the audience members' relative dominance rank and distance (i.e., travel time) from the foraging array, and their own ability to extract food resources that required handling before competitors could arrive (Arseneau-Robar et al., 2022). This sensitivity to competitors in the audience has also been demonstrated in food-caching species (Clarke & Kramer, 1994; Emery, Dally, & Clayton, 2005; Lahti & Rytkönen, 1996; Lahti et al., 1998; Samson & Manser, 2016), in which kleptoparasitism is common, and suggests that feeding competition can increase mental effort in certain situations, especially for low-ranking individuals that may lose resources.
Further analyses of our vervet experiment also showed that dominant males, who rarely experienced contest competition at the platforms, paid little attention to the food-handling techniques used by others. Consequently, they were slow to learn the efficient food-handling techniques that their lower-ranking group members innovated (Arseneau-Robar et al., 2023).

In primatology and evolutionary psychology, there is a rich body of literature arguing for the importance of group size and social complexity in driving the evolution of large brains (e.g., Byrne & Whiten, 1988; Dunbar & Shultz, 2007; Dunbar, 1998; Humphrey, 1976; Jolly, 1966), as well as counterarguments and evidence against this idea (e.g., Barrett et al., 2007; DeCasien & Higham, 2019; DeCasien et al., 2017; Reséndiz-Benhumea et al., 2021). Our review of DPT and BDT suggests that if social complexity is routine and predictable, it will not require intensive cognitive processing on a daily basis, although learning the rules that govern the society may be challenging for young individuals, requiring a long juvenile period (Uomini et al., 2020).

7 ANIMAL DECISION MAKING AND COGNITIVE OVERLOAD IN THE ANTHROPOCENE

Animals possess a finite cognitive processing capacity, and they must be selective about the information they pay attention to (Dayan et al., 2000; Dukas, 2002, 2004; Evans, 2006). Cognitive constraints limit the ability to perceive or process information (Farina et al., 2005; Lebiere & Anderson, 2011; Real, 1991) and make it necessary to control the amount of information taken in (Faisal et al., 2008), which is done via attentional mechanisms that sort incoming sensory stimuli according to their relevance to the organism at that time and place (Dukas, 1998b; Evans, 2006; Kahneman, 1973; Simon, 2000). Psychological theories of attention suggest that there is a maximum amount of information that can be attended to at once, because of the cognitive load accrued (Dayan et al., 2000; Kahneman, 1973). Problem solving contributes to cognitive load, meaning that organisms must divide their limited cognitive resources among information gathering, information processing, and decision-making or problem solving (Sweller, 1988). When decision-makers attempt to incorporate more information than they can process, they suffer from cognitive overload, in which, paradoxically, more information and more options lead to less accurate decision-making (Schwartz, 2004).

Human and animal studies have shown that increased cognitive load impacts decision-making. For example, Whitney et al. (2008) found that creating a cognitive load with a working memory task increased participants’ acceptance of risky decision making, which was interpreted as evidence of satisficing to minimize cognitive effort. DPT studies on humans have found that Type 2 decision-making, or Type 2 monitoring of Type 1, is impaired by distractions or concurrent involvement in another cognitive task (Pashler, 2000; Phillips-Wren & Adya, 2020). In animals encountering a predator, decision-makers are slower to respond when they are distracted by other environmental stimuli, such as anthropogenic noise (Chan et al., 2010; Purser & Radford, 2011).

Increased cognitive load requires greater investment in working memory. Memory is the ability to store information from the past so that it can be retrieved later, and it is typically divided into working (or short-term) memory and long-term memory. Whereas long-term memory can contain a great deal of information, working memory is limited to a small number of representations, which include newly perceived sensory information and information activated from long-term memory (Evans & Stanovich, 2013; Ma et al., 2014; Turi et al., 2018). Working memory provides the most relevant and up-to-date information for an individual's decision-making. Many models have been proposed to conceptualize the limits of working memory in humans (whether these also apply to animals is an open question, but there is good evidence for similar working memory in mammals and birds; Nieder, 2022). The most persuasive are the slot model and the resource model, which are both well supported and argue that working memory is limited by the amount and quality of the information it can retain (Cowan, 2010; Ma et al., 2014; Saults & Cowan, 2007). The resource model is especially interesting because it proposes that individuals can prioritize stimuli and increase the precision with which they recall certain stimuli. Stimuli are prioritized by their salience, such as their loudness, brightness, threat level, or overall prominence in the environment. However, there is a trade-off: stimuli that are not prioritized are recalled less precisely (Ma et al., 2014).
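The resource model's trade-off can be sketched numerically. Here a fixed pool of working-memory precision (often treated in this literature as the inverse variance of a stored representation) is divided among items in proportion to their salience, so recall error scales as one over the square root of the precision each item receives. Proportional allocation and the total of 10 precision units are assumptions for illustration.

```python
import math


def allocate_precision(saliences, total_precision=10.0):
    """Split a fixed precision budget across items in proportion to salience."""
    total_salience = sum(saliences)
    return [total_precision * s / total_salience for s in saliences]


def recall_error_sd(precision):
    """With precision defined as inverse variance, error SD = 1 / sqrt(precision)."""
    return 1.0 / math.sqrt(precision)


equal = [recall_error_sd(j) for j in allocate_precision([1, 1, 1, 1])]
prioritized = [recall_error_sd(j) for j in allocate_precision([4, 1, 1, 1])]  # e.g., a salient threat
more_items = [recall_error_sd(j) for j in allocate_precision([1] * 8)]
```

Prioritizing one salient item reduces its recall error (from about 0.63 to about 0.42 units) but increases the error for the other three (to about 0.84), and doubling the number of equally salient items raises everyone's error, which is one way to picture why cluttered, cue-rich environments strain working memory.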

Recall of short-term memories declines over time and becomes less reliable when memories are not used, unless they are consolidated into long-term memory through repetition (Cowan, 2010; Saults & Cowan, 2007; Turi et al., 2018). Importantly, heuristic decision-making draws on long-term memory (Dougherty et al., 2003; Kahneman & Tversky, 1973; Tversky & Kahneman, 1973), which allows individuals to learn priors and to build conditioned responses through repeated exposure to a given stimulus (Bouton, 2021). By using heuristics based on past memories, animals avoid overloading their working memory and can make faster decisions (Taffe & Taffe, 2011; Turi et al., 2018). The accuracy of these decisions depends on how well cue-response systems are matched to the environment (Sih, 2013).

The major issue many animal populations now face, in what has been conceptualized as the Anthropocene (Behie et al., 2019), is that rapid environmental changes are causing a mismatch between the environment they are experiencing and the one they evolved in. Sih (2013) defines current human-induced rapid environmental change (HIREC) as encompassing habitat change, exotic species introductions, human harvesting, and climate change. Recent research has increasingly been dedicated to understanding how animals are adjusting to HIREC. Most of this work has shown that animals' first response is to change their behavior, and that the species that are most plastic in their behavior and physiology fare best under changing conditions and in novel environments (reviewed in: Hendry et al., 2008; Snell-Rood et al., 2018; Wong & Candolin, 2015). However, behavioral responses to HIREC can also be maladaptive and lead animals into ecological or evolutionary traps, where habitat choices and evolved responses cause reduced survival and reproduction in the current context (Robertson & Hutto, 2006; Schlaepfer et al., 2002).

Sih (2013) lays out a useful framework for understanding animal responses to HIREC, showing that animals' evolved cue-response systems (Type 1-like heuristics) are disrupted by the novel situations HIREC presents; the keys to understanding how animals will respond are the degree of mismatch between their earlier experiences and their current environment, and their ability to learn to deal with new conditions (Greggor et al., 2019; Sih, 2013). Novel situations that are encountered only once and are fatal do not allow for learning; however, if animals encounter new stimuli and situations several times and can learn how their past decisions led to different outcomes, they may be able to learn ways to deal with HIREC (although there are constraints on learning; see Greggor et al., 2019).

Learning processes require mental effort, and animals must cope with this demand on top of their complex social and ecological environments, which now include new pressures from HIREC. Given our review of DPT and BDT, how likely is it that animals are increasingly suffering from cognitive overload in the Anthropocene, and what is the effect on their decision-making? It is useful to categorize the uncertainty that animals may experience in the Anthropocene using two constructs: (1) risk, or expected uncertainty, and (2) ambiguity, or estimation uncertainty (Dayan & Long, 1998; Ellsberg, 1961; Knight, 1921; Payzan-LeNestour & Bossaerts, 2011; Preuschoff & Bossaerts, 2007). The first type of uncertainty, risk, arises from environmental stochasticity, so individual outcomes cannot be predicted by the animal; however, animals can learn about new mortality risks caused by HIREC (e.g., collisions with vehicles, introductions of non-native predators, electrocution on power lines) by observing the behavior and mortality of conspecifics and heterospecifics (Sih et al., 2010). Ambiguity arises from an animal's incomplete knowledge of the environment and can be reduced by obtaining information (O'Reilly, 2013). Indeed, Dall et al. (2005) suggested that animals must adjust their behavior and redirect time and energy to information gathering to adapt to changing ecological circumstances, but this requires Type 2-like mental effort.
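The distinction between the two constructs can be made concrete with a Beta-Bernoulli sketch of an animal's belief about the probability that some novel situation turns out to be dangerous. Risk is taken here as the variance of a single outcome given the current estimate, which does not shrink with experience, whereas ambiguity is the variance of the estimate itself, which shrinks as observations accumulate. The scenario and the counts are hypothetical.

```python
def uncertainty(a, b):
    """Split uncertainty for a Beta(a, b) belief about an event probability p.

    risk: Bernoulli outcome variance at the posterior mean (expected uncertainty).
    ambiguity: variance of the Beta distribution over p (estimation uncertainty).
    """
    mean = a / (a + b)
    risk = mean * (1 - mean)
    ambiguity = a * b / ((a + b) ** 2 * (a + b + 1))
    return risk, ambiguity


naive = uncertainty(1, 1)          # no experience: uniform prior over p
experienced = uncertainty(51, 51)  # prior plus 50 dangerous and 50 safe encounters observed
```

Both individuals face the same risk (0.25), but the experienced one has reduced its ambiguity from 1/12 to about 0.0024, illustrating why information gathering can lower estimation uncertainty but not the stochasticity of the environment itself.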

The many distractions and novel cues that animals encounter in environments affected by HIREC, together with the increased need for learning and information gathering, are bound to be highly cognitively demanding. The research reviewed above suggests that the increased cognitive load animals experience under HIREC will result in riskier, more error-prone decisions and a greater reliance on heuristic, Type 1-like processes. Having to fall back on heuristic decision-making would not be a problem under stable, predictable environmental conditions, where intuitive responses tend to be both correct and fast (Bago & De Neys, 2017; Evans, 2019), but under novel, changing conditions, cue-response systems are likely to be mismatched (Greggor et al., 2019; Sih, 2013), and certain cues may supersede all others, leading to maladaptive decisions (Blumstein & Bouskila, 1996; Lima & Dill, 1990). The impact of inaccurate decision-making under HIREC is increasingly obvious, but its eventual effects on different animal populations are not fully understood (Pollack et al., 2022), making this an urgent area for further research.

8 CONCLUSIONS

Our review of DPT and BDT showed that these approaches share several similarities. Both theories suggest that wild animals are likely to respond with quick heuristic decisions when they have been primed by experience or evolutionary history and the situation is routine and predictable, and/or signals/cues have certain relationships with the outcome. Both theories also suggest that more intensive, time-consuming cognitive effort should be triggered by novel, surprising, or computationally complex situations, and by signals/cues with unpredictable or uncertain relationships to an outcome. With this in mind, we discussed the amount of cognitive effort that animals with different socio-ecologies may have to exert in different situations. In stable systems, evolution and learning equip animals with accurate cue-response systems, which suggests that heuristic, Type 1-like decision-making should always be our null hypothesis. However, current rapid human-induced environmental change is creating a plethora of novel situations for animals and bombarding them with cues that can cause cognitive overload and a greater tendency to satisfice and rely on heuristic decision-making. This is likely one reason why maladaptive decision-making occurs and leads animals into ecological and evolutionary traps.

AUTHOR CONTRIBUTIONS

Julie A. Teichroeb: Conceptualization (equal); Data curation (equal); Funding acquisition (equal); Project administration (equal); Supervision (equal); Visualization (equal); Writing—original draft (equal); Writing—review & editing (equal). Eve A. Smeltzer: Conceptualization (equal); Writing—original draft (equal); Writing—review & editing (equal). Virendra Mathur: Conceptualization (equal); Writing—original draft (equal); Writing—review & editing (equal). Karyn A. Anderson: Conceptualization (equal); Writing—original draft (equal); Writing—review & editing (equal). Erica J. Fowler: Conceptualization (equal); Writing—original draft (equal); Writing—review & editing (equal). Frances V. Adams: Conceptualization (equal); Writing—original draft (equal); Writing—review & editing (equal). Eric N. Vasey: Conceptualization (equal); Writing—original draft (equal); Writing—review & editing (equal). L. Tamara Kumpan: Conceptualization (equal); Writing—original draft (equal); Writing—review & editing (equal). Samantha M. Stead: Conceptualization (equal); Writing—review & editing (equal). T. Jean M. Arseneau-Robar: Conceptualization (equal); Data curation (equal); Visualization (equal); Writing—original draft (equal); Writing—review & editing (equal).

ACKNOWLEDGMENTS

During the writing of this manuscript, the authors were supported by funding from the Natural Sciences and Engineering Research Council of Canada (RGPIN-2023-03613). We are grateful to Dr. Amanda Melin and two anonymous reviewers who helped improve the paper.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.

ETHICS STATEMENT

This work adhered to the American Society of Primatologists (ASP) Principles for the Ethical Treatment of Non-Human Primates and Code of Best Practices for Field Primatology.

Open Research

DATA AVAILABILITY STATEMENT

This is a review paper and does not contain any original data.