Computational facial analysis for rare Mendelian disorders

Abstract

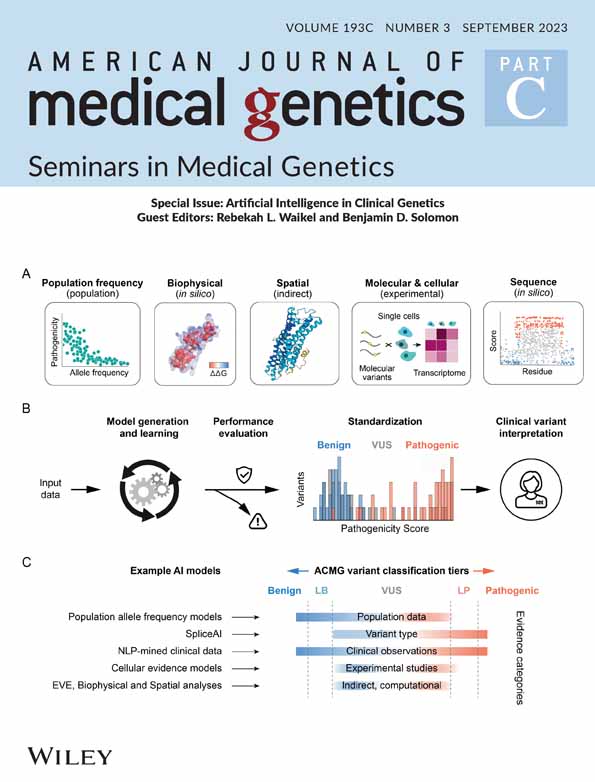

With advances in computer vision, computational facial analysis has become a powerful and effective tool for diagnosing rare disorders. This technology, also called next-generation phenotyping (NGP), has progressed significantly over the last decade. This review introduces three key NGP approaches. In 2014, Ferry et al. first presented the Clinical Face Phenotype Space (CFPS), trained on eight syndromes. Five years later, Gurovich et al. proposed DeepGestalt, a deep convolutional neural network trained on more than 17,000 patient images with 216 disorders, which was considered a state-of-the-art disorder classification framework. In 2022, Hsieh et al. developed GestaltMatcher to support the ultra-rare and novel disorders not supported by DeepGestalt. It further enabled the analysis of facial similarity within a given cohort or across multiple disorders. Moreover, this article presents the use of NGP for variant prioritization and facial gestalt delineation. Although NGP approaches have proven their capability in assisting the diagnosis of many disorders, many limitations remain. This article introduces two future directions that address two main limitations: enabling global collaboration on a medical imaging database that fulfills the FAIR principles, and synthesizing patient images to protect patient privacy. Finally, as more and more NGP approaches emerge, we envision that NGP technology will assist clinicians and researchers in diagnosing patients and analyzing disorders in multiple directions in the near future.

1 INTRODUCTION

Rare disorders affect more than 6% of the global population (Ferreira, 2019). Because the differential diagnosis is vast, reaching a diagnosis is challenging and is often called a “diagnostic odyssey” (Baird et al., 1988). Clinicians must therefore examine all available information, such as the clinical description, frontal image, and exome data, to diagnose the patient. Because a proportion of patients with facial dysmorphism present a specific facial pattern (facial gestalt), recognizing the facial gestalt can help clinicians reach a diagnosis. However, this relies heavily on the clinician's experience. If the clinician has never seen the disorder before, it is nearly impossible to recognize the facial gestalt.

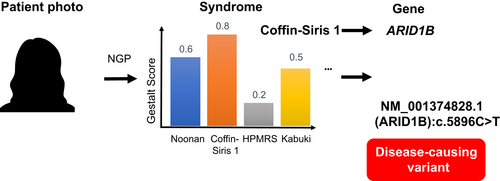

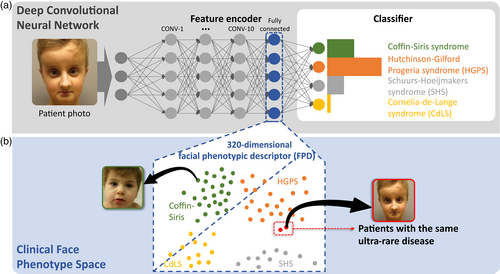

With recent advances in computer vision and machine learning, many next-generation phenotyping (NGP) approaches have been developed to facilitate diagnosis through two-dimensional facial image analysis (Cerrolaza et al., 2016; Dingemans et al., 2022; Dudding-Byth et al., 2017; Ferry et al., 2014; Gurovich et al., 2019; T.-C. Hsieh et al., 2022; Hustinx et al., 2023; Liehr et al., 2018; Porras et al., 2021; Shukla et al., 2017; van der Donk et al., 2019). NGP approaches analyze the patient's frontal image and return a list of syndromes with similarity scores (Figure 1). This can dramatically reduce the disease search space and can further be integrated into the exome variant prioritization pipeline (Hsieh et al., 2019).

Because an NGP approach such as GestaltMatcher (Hsieh et al., 2022) can quantify the facial phenotypic similarity between two patients, it can be used to delineate the facial phenotype of a disease. The pairwise similarities among patients quantify the facial gestalt present in a cohort. Moreover, clustering analysis of patients can be used to detect sub-structures underlying a disease-causing gene, which further facilitates lumping and splitting decisions.

Although NGP technology is powerful and effective, many limitations remain. First, researchers often question its reproducibility. Because faces are sensitive data, many NGP approaches cannot make their training data accessible with the publication. This limitation slows the development of NGP for facial analysis. Therefore, the GestaltMatcher Database (GMDB) was proposed as the first medical imaging database for rare disorders that fulfills the FAIR principles: findable, accessible, interoperable, and reusable (Lesmann, Lyon, et al., 2023). GMDB has been used to develop and benchmark two NGP approaches (Hustinx et al., 2023; Sümer et al., 2023).

Another limitation is privacy. Because facial images can easily identify a specific person, patients are often unwilling to consent to the use of their facial images. Hence, allowing data sharing while preserving patient privacy has become an important topic. One solution is to synthesize patient images using a generative adversarial network (GAN) (Duong et al., 2022) or diffusion models (Pinaya et al., 2022). Another solution is to anonymize the photo by removing the general personal features while preserving the dysmorphic facial features. Although these solutions have been proven in other research fields, they still require proof-of-concept work for rare disorder research.

Finally, NGP approaches are not limited to 2D facial image analysis. Many approaches have been proposed that predict disorders based on 3D facial image analysis (Bannister et al., 2023; Hallgrímsson et al., 2020). However, this review mainly focuses on 2D image analysis and covers the following topics:

- The key NGP approaches starting from CFPS (Ferry et al., 2014), DeepGestalt (Gurovich et al., 2019), to GestaltMatcher (Hsieh et al., 2022);

- The analyses that NGP approaches can perform: (1) disorder prediction, (2) variant prioritization and interpretation, and (3) facial phenotype delineation for research purposes;

- Future directions and discussion: enabling global collaboration for the FAIR database to facilitate NGP development and synthesizing patient images to protect privacy.

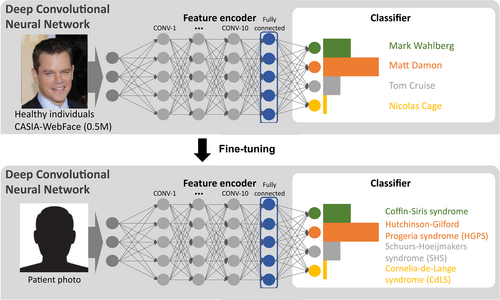

2 DEEP LEARNING DRIVES THE NGP DEVELOPMENT FOR DISORDER PREDICTION

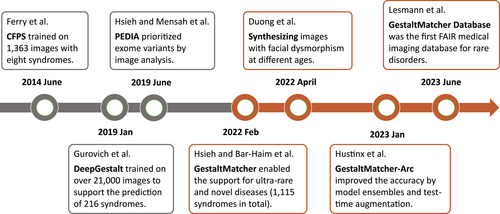

With the advancement of deep learning in facial recognition, NGP technology has progressed significantly over the last 10 years (Figure 2 and Table 1). In 2014, Ferry et al. presented the Clinical Face Phenotype Space (CFPS), formed by the feature vectors extracted from a model trained on 1363 patients with eight syndromes and 1500 healthy controls (Ferry et al., 2014). Later, DeepFace (Taigman et al., 2014) showed human-level identification accuracy for the first time when benchmarked on the Labeled Faces in the Wild (LFW) dataset (Huang et al., 2007). Since then, many deep convolutional neural network (DCNN) architectures have been proposed to improve prediction accuracy, such as VGGFace (Parkhi et al., 2015), SphereFace (Liu et al., 2017), CosFace (Wang et al., 2018), and ArcFace (Deng et al., 2019). However, typical face recognition models are trained at an enormous scale, from 0.5 million images (CASIA-WebFace [Yi et al., 2014]) to 17 million images (Glint360K [An et al., 2021]). Rare disorder datasets cannot reach this scale, so such models cannot be trained from scratch on patient images alone.

| Resource | Link | Type | Note |
|---|---|---|---|
| CFPS (Ferry et al., 2014) | https://github.com/ChristofferNellaker/Clinical_Face_Phenotype_Space_Pipeline | NGP approach | Disorder prediction. |
| DeepGestalt (Gurovich et al., 2019) | https://www.face2gene.com/ | NGP approach | Disorder prediction. |
| PEDIA (Hsieh et al., 2019) | https://www.pedia-study.org/ | NGP approach | Prioritizing exome variants by facial image analysis. |
| GestaltMatcher (Hsieh et al., 2022) | https://www.gestaltmatcher.org/ | NGP approach | Disorder prediction, patient matching, and quantifying the similarity between patients with one or multiple disorders. |
| GestaltMatcher-Arc (Hustinx et al., 2023) | https://github.com/igsb/GestaltMatcher-Arc | NGP approach | Utilizing test-time augmentation, model ensembles, and upgrading network architectures to improve the GestaltMatcher performance. |
| Image synthesis (Duong et al., 2022) | https://github.com/datduong/Classify-WS-22q-Img | NGP approach | Using StyleGAN to synthesize patient images at different ages. |
| FaceMatch (Dudding-Byth et al., 2017) | https://facematch.org.au/home | Database | Matching patients with a similar facial phenotype and genetic condition (with photo). |
| MyGene2 | https://mygene2.org/MyGene2/ | Database | Connecting patients with a similar phenotype and genetic condition (with photo). |
| GestaltMatcher Database (Lesmann, Lyon, et al., 2023) | https://db.gestaltmatcher.org/ | Database | FAIR medical imaging database enabling clinicians to browse patient images and providing a resource for developing the NGP approach. |

In 2019, a breakthrough NGP approach, DeepGestalt, was published by FDNA Inc. (Gurovich et al., 2019). It was trained on over 17,000 patient images with 216 different disorders. The main difference between DeepGestalt and earlier methods was that it used transfer learning to fine-tune the model so that it learned facial dysmorphic features better. Model training consisted of two steps (Figure 3). In the first step, the DCNN was trained on the 0.5 million images in CASIA-WebFace (Yi et al., 2014) to classify individuals and thereby learn general facial features. In the second step, the last layer (the classification layer) was changed from predicting individuals to predicting disorders, while the other parameters were kept. Training then continued on the 17,000 images with 216 disorders. With transfer learning, DeepGestalt learned facial dysmorphic features from patient images without needing a patient dataset as large as a typical face recognition dataset such as CASIA-WebFace; instead, it was built on top of that dataset. Moreover, it used an ensemble method: the face was cropped into different regions (upper face, middle face, lower face, and full face), and a model was trained for each region. The prediction values were then averaged over all regions to improve prediction accuracy and stability. On a test set of 502 images, DeepGestalt achieved a top-10 accuracy of 91% and outperformed human experts on specific disease classification tasks. DeepGestalt has been running behind the Face2Gene platform (https://www.face2gene.com/) for several years and is used by clinicians in their daily diagnostic process.
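The region ensemble and the top-k evaluation described above can be illustrated with a minimal sketch. It assumes each region model outputs one score per disorder; the function names, region keys, and score values are hypothetical and are not taken from the DeepGestalt implementation.

```python
from typing import Dict, List, Sequence


def average_region_scores(region_scores: Dict[str, Sequence[float]]) -> List[float]:
    """Average the per-disorder scores of all facial-region models."""
    score_lists = list(region_scores.values())
    n_disorders = len(score_lists[0])
    if any(len(scores) != n_disorders for scores in score_lists):
        raise ValueError("every region model must score the same disorders")
    return [
        sum(scores[i] for scores in score_lists) / len(score_lists)
        for i in range(n_disorders)
    ]


def top_k(scores: Sequence[float], k: int) -> List[int]:
    """Indices of the k highest-scoring disorders, best first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def top_k_accuracy(
    all_scores: Sequence[Sequence[float]], labels: Sequence[int], k: int
) -> float:
    """Fraction of images whose true disorder is among the top-k predictions."""
    hits = sum(
        1 for scores, label in zip(all_scores, labels) if label in top_k(scores, k)
    )
    return hits / len(labels)


# Toy scores (hypothetical values) for one image and four disorders.
region_scores = {
    "full_face": [0.10, 0.60, 0.20, 0.10],
    "upper_face": [0.20, 0.40, 0.30, 0.10],
    "middle_face": [0.10, 0.50, 0.30, 0.10],
    "lower_face": [0.30, 0.30, 0.30, 0.10],
}
ensemble = average_region_scores(region_scores)
print(top_k(ensemble, k=2))  # disorder indices ranked by averaged score
```

Averaging over regions means that a single region with an ambiguous prediction (here the lower face) does not dominate the final ranking.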

3 GESTALTMATCHER OVERCOMES THE LIMITATION OF RARE DISORDERS

Although DeepGestalt is powerful and efficient for diagnosing patients with facial dysmorphism, it still has three main limitations (Hsieh et al., 2022). First, DeepGestalt cannot support ultra-rare disorders. The model behaves like a human expert who needs to see images to learn about a disorder. If the model has never seen the disorder, or has seen only very few images of it, the model cannot support it. The second limitation is scalability. A classification approach like DeepGestalt cannot support novel disorders: it can only predict the disorders it was trained on (the classification layer in Figure 4). Supporting a novel disorder requires adding it to the training set and retraining the model, which takes considerable time and money. The last limitation is that DeepGestalt cannot measure the similarity between two patients, which is needed for patient matching, cohort-wise facial similarity analysis, and lumping and splitting analysis. Therefore, Hsieh, Bar-Haim, et al. (Hsieh et al., 2022) proposed GestaltMatcher to overcome these limitations.

GestaltMatcher can be seen as an extension of the DeepGestalt approach. It used the same architecture as DeepGestalt but used the feature encoder to encode each image into a 320-dimensional facial phenotype descriptor (FPD) (Figure 4). The FPDs span the CFPS, and the cosine distance in the CFPS quantifies the similarity between two images. With this approach, any disorder with at least one patient in the CFPS can be matched and predicted, so the approach is no longer restricted to the disorders the model was trained on. For example, the authors demonstrated scalability by increasing the number of supported syndromes from 299 to 1115. Moreover, the similarity between images can be used to measure facial similarity within a given cohort or between two cohorts. This is the main difference between DeepGestalt and GestaltMatcher: GestaltMatcher enables researchers to analyze the facial gestalt present in a cohort (e.g., for a novel disease gene) or the difference between two disorders. Whereas DeepGestalt was mainly used for diagnostic purposes, GestaltMatcher can also be used for research purposes.
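Matching in the CFPS can be sketched as a nearest-neighbor search under cosine distance. The example below is a simplified illustration, not the GestaltMatcher code: it uses 3-dimensional toy vectors in place of 320-dimensional FPDs, and the disorder labels, function names, and the rule of ranking each disorder by its nearest image are assumptions made for this sketch.

```python
import math
from typing import List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; 0 means the two descriptors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("descriptors must be non-zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def rank_gallery(
    query: Sequence[float], gallery: List[Tuple[str, Sequence[float]]]
) -> List[Tuple[str, float]]:
    """(disorder label, distance) for every gallery image, nearest first."""
    scored = [(label, cosine_distance(query, fpd)) for label, fpd in gallery]
    return sorted(scored, key=lambda item: item[1])


def rank_disorders(
    query: Sequence[float], gallery: List[Tuple[str, Sequence[float]]]
) -> List[Tuple[str, float]]:
    """Rank each disorder by the distance of its nearest gallery image."""
    best = {}
    for label, distance in rank_gallery(query, gallery):
        if label not in best:  # images arrive nearest first
            best[label] = distance
    return sorted(best.items(), key=lambda item: item[1])


# Toy gallery with hypothetical labels; "novel_disorder_C" has a single image
# and was never a class of the classifier, yet it can still be matched.
gallery = [
    ("disorder_A", [1.0, 0.0, 0.0]),
    ("disorder_A", [0.9, 0.1, 0.0]),
    ("disorder_B", [0.0, 1.0, 0.0]),
    ("novel_disorder_C", [0.0, 0.0, 1.0]),
]
query_fpd = [0.1, 0.0, 0.9]
print(rank_disorders(query_fpd, gallery)[0][0])
```

Adding a newly described disorder only requires appending its FPDs to the gallery; no retraining is involved, which is the scalability argument made above.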

The concepts of DeepGestalt and GestaltMatcher were crucial for both disorder prediction and research purposes. However, both were based on the architecture and the training dataset from Yi et al. (Yi et al., 2014), and more advanced architectures and larger training datasets have since become available. Therefore, starting from the original version of GestaltMatcher (GM-Hsieh-2022), Hustinx et al. (Hustinx et al., 2023) upgraded the architecture to iResNet and used Glint360K (An et al., 2021) as the pre-training dataset. Test-time augmentation and model ensembles were further applied. Prediction accuracy increased by 100% compared to GM-Hsieh-2022. This work is a good example of how an open-source approach with an open dataset allows researchers to propose new methods on the same benchmark dataset.

4 NGP FACILITATES VARIANTS PRIORITIZATION AND INTERPRETATION

NGP approaches have proven capable not only of narrowing the search space for candidate disorders but also of being integrated into the variant prioritization pipeline. In 2019, Hsieh et al. (Hsieh et al., 2019) presented Prioritization of Exome Data by Image Analysis (PEDIA), which integrates the gestalt score generated by DeepGestalt and the feature scores from Phenomizer and BOQA (Bauer et al., 2012; Köhler et al., 2009) with variant deleteriousness scored by CADD (Kircher et al., 2014). PEDIA achieved a top-10 accuracy of 99%, 10% higher than without the gestalt score. Furthermore, the PEDIA approach has been applied in a German nationwide rare disease project, which showed that facial analysis improved the efficiency of exome variant prioritization (Schmidt et al., 2023).

Moreover, GestaltMatcher can prompt the clinician to perform whole genome sequencing when no pathogenic variant is found. Brand et al. presented a patient with a 4.7 kb deletion in KANSL1 (Brand et al., 2022). Initially, the authors did not find a pathogenic variant with exome sequencing. However, GestaltMatcher indicated a high facial similarity to Koolen-de Vries syndrome. After performing whole genome sequencing, the authors identified the 4.7 kb deletion in KANSL1. Therefore, genome sequencing can be indicated if the facial gestalt score is high and the exome sequencing analysis is inconclusive.

Although many researchers have shown the advantage of including facial analysis in variant classification, an objective way of interpreting the facial phenotypic match is still lacking. In the ACMG variant classification guideline, a phenotypic match is considered supporting evidence, PP4 (patient's phenotype or family history is highly specific for a disease with a single genetic etiology) (Richards et al., 2015). However, it is usually based on subjective assessment. Therefore, Lesmann et al. (Lesmann, Klinkhammer, & Krawitz, 2023) showed that the gestalt score could be integrated into the Bayesian classification framework proposed by Tavtigian et al. (Tavtigian et al., 2018). With the likelihood ratio calculated in the Bayesian framework, the facial phenotypic match can be upgraded from “Supporting” to “Moderate” or even “Strong.” With such a facial phenotypic match, a variant of uncertain significance (VUS) could be elevated to likely pathogenic. Hence, there is a need to discuss the potential of adding “PM7” for a phenotypic match quantified by a computational approach.
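How a likelihood ratio maps to an evidence strength can be illustrated with a short sketch. It assumes the values reported by Tavtigian et al. (2018): a prior probability of pathogenicity of 0.10 and odds of pathogenicity of 350 for “Very strong” evidence, with each lower level being the square root of the level above. How a gestalt score is converted into a likelihood ratio is not described here, so the ratio is treated as a given input, and the function names are hypothetical.

```python
# Assumed constants from the Bayesian adaptation of the ACMG/AMP guideline
# (Tavtigian et al., 2018); verify against the publication before reuse.
PRIOR_PATHOGENICITY = 0.10
ODDS_VERY_STRONG = 350.0

# Each evidence level is the square root of the level above it.
STRENGTH_THRESHOLDS = [
    ("Very strong", ODDS_VERY_STRONG),          # 350
    ("Strong", ODDS_VERY_STRONG ** 0.5),        # about 18.7
    ("Moderate", ODDS_VERY_STRONG ** 0.25),     # about 4.33
    ("Supporting", ODDS_VERY_STRONG ** 0.125),  # about 2.08
]


def evidence_strength(likelihood_ratio: float) -> str:
    """Highest evidence level whose odds threshold the ratio reaches."""
    for name, threshold in STRENGTH_THRESHOLDS:
        if likelihood_ratio >= threshold:
            return name
    return "Below supporting"


def posterior_probability(
    combined_odds: float, prior: float = PRIOR_PATHOGENICITY
) -> float:
    """Posterior probability of pathogenicity given combined odds and a prior."""
    return combined_odds * prior / ((combined_odds - 1.0) * prior + 1.0)


# Hypothetical likelihood ratios for three facial phenotypic matches.
for ratio in (2.5, 5.0, 20.0):
    print(ratio, evidence_strength(ratio))
```

Under these assumptions, a facial match with a likelihood ratio of 5 would count as “Moderate” rather than “Supporting,” which is the kind of upgrade discussed above.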

5 FACIAL PHENOTYPE DESCRIPTORS DELINEATE FACIAL GESTALT OF DISORDERS

The facial phenotypic similarity between two patients is quantified by the cosine distance between the two FPDs encoded by GestaltMatcher. This measure can be used for both pairwise and cohort-wise similarity, which describe similarity from two different angles. In 2019, Marbach et al. presented two unrelated patients with the same de novo variant in LEMD2 who were matched by the facial descriptors extracted from the DeepGestalt model (Marbach et al., 2019). It was one of the first examples in which an NGP approach facilitated patient matching and objectively assessed the facial similarity present in a cohort. It was also the proof of concept for the GestaltMatcher approach.

5.1 Measuring facial gestalt of a disorder

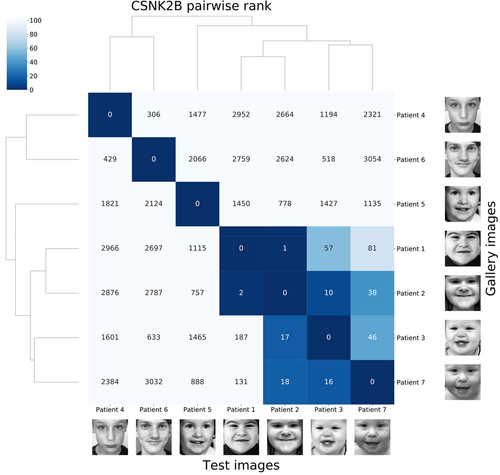

Afterward, GestaltMatcher was used to measure the facial similarity within a cohort with the same disorder. Asif et al. were the first to use GestaltMatcher to describe the facial similarities of patients with a new intellectual disability-craniodigital syndrome (IDCS) associated with CSNK2B, which is distinct from POBINDS (OMIM #618732) (Asif et al., 2022). The pairwise rank matrix in Figure 5 shows the similarities among the seven CSNK2B patients relative to the control cohort (3533 images with 816 disorders in the Face2Gene internal database). With the rank matrix, we can investigate the similarity between two patients in a real-world scenario. For example, when patient 2 was tested, patient 1 was at the first rank. This indicates that in the CFPS with 3533 images and seven CSNK2B patients, patient 1 was the most similar (nearest) patient to patient 2. The clustering dendrogram further revealed that patients 1, 2, 3, and 7, who have the new IDCS, formed a cluster separate from patients 4, 5, and 6, who manifest POBINDS. Later, many more genes were analyzed with GestaltMatcher (Aerden et al., 2023; Blackburn et al., 2023; Ebstein et al., 2023; Guo et al., 2022). Instead of only visualizing the pairwise comparison, Blackburn et al. presented an objective measurement showing that 53.72% of the random samplings of CUL3 patients presented a similar facial phenotype. The authors first calculated a distance threshold, based on the pairwise distance distribution of the patients in GMDB, that distinguishes a cohort with a similar facial gestalt from a random cohort (Blackburn et al., 2023). If more than 50% of the random samplings of a given cohort (CUL3 in this case) were below the distance threshold, the cohort was considered to present a similar facial phenotype. Conversely, if more than 50% of the random samplings were above the threshold, the patients in the cohort were no more similar to each other than a random selection.
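Both analyses can be sketched in a few lines. The example below is an illustration under stated assumptions rather than the published pipeline: the gallery for the rank matrix is taken to be all control images plus the remaining cohort members, each random sampling is summarized by its mean pairwise cosine distance, and the subset size, number of samplings, threshold, and 2-dimensional toy descriptors are all hypothetical.

```python
import itertools
import math
import random
from typing import Dict, List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def rank_row(
    test_index: int, cohort: List[Sequence[float]], controls: List[Sequence[float]]
) -> Dict[int, int]:
    """One row of a pairwise rank matrix: the rank of every other cohort member
    when cohort[test_index] is the query. Rank 1 is the nearest image."""
    query = cohort[test_index]
    control_dists = [cosine_distance(query, fpd) for fpd in controls]
    others = {
        j: cosine_distance(query, fpd)
        for j, fpd in enumerate(cohort)
        if j != test_index
    }
    ranks = {}
    for j, dist in others.items():
        closer_controls = sum(1 for d in control_dists if d < dist)
        closer_cohort = sum(1 for k, d in others.items() if k != j and d < dist)
        ranks[j] = closer_controls + closer_cohort + 1
    return ranks


def mean_pairwise_distance(fpds: List[Sequence[float]]) -> float:
    pairs = list(itertools.combinations(fpds, 2))
    return sum(cosine_distance(a, b) for a, b in pairs) / len(pairs)


def fraction_of_samplings_below(
    cohort: List[Sequence[float]],
    threshold: float,
    sample_size: int,
    n_samplings: int = 100,
    seed: int = 0,
) -> float:
    """Share of random sub-cohorts whose mean pairwise distance is below the threshold."""
    rng = random.Random(seed)
    below = sum(
        1
        for _ in range(n_samplings)
        if mean_pairwise_distance(rng.sample(cohort, sample_size)) < threshold
    )
    return below / n_samplings


# Toy descriptors: a tight cohort and three dissimilar control images.
cohort = [[1.0, 0.1], [1.0, 0.2], [0.9, 0.15]]
controls = [[0.0, 1.0], [-1.0, 0.0], [1.0, 1.0]]
print(rank_row(0, cohort, controls))
share = fraction_of_samplings_below(cohort, threshold=0.05, sample_size=2)
print("similar facial phenotype" if share > 0.5 else "not distinguishable from random")
```

The final line applies the 50% decision rule described above; in practice the threshold would be derived from the distance distributions in a reference database rather than chosen by hand.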

5.2 Comparing facial phenotypic similarity among multiple disorders

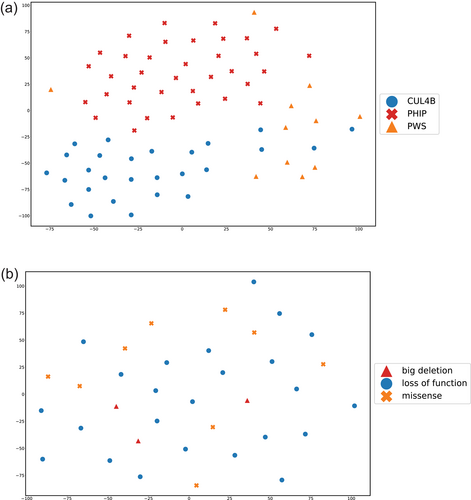

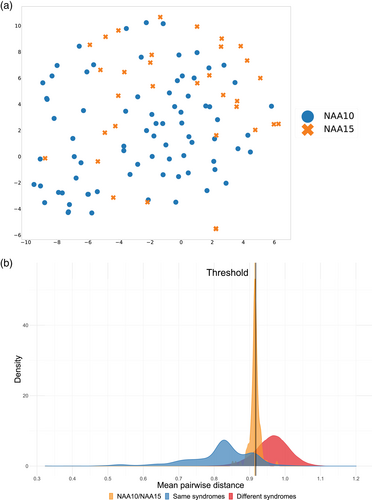

The same concept of similarity measurement can also be applied to compare different disorders. Kampmeier et al. used tSNE to visualize in two-dimensional space the clustering of patients with PHIP, CUL4B, and Prader-Willi syndrome (PWS; OMIM #176270) (Kampmeier et al., 2023). The tSNE plot showed that the three groups formed three clusters, although some CUL4B patients were similar to PWS patients. The authors further performed the same analysis on the different variant types in the PHIP cohort to investigate whether patients can be distinguished by mutation type (Figure 6). Moreover, Lyon et al. used GestaltMatcher to show that patients with NAA10-related neurodevelopmental syndrome and NAA15-related neurodevelopmental syndrome shared a similar facial phenotype (Lyon et al., 2023). The tSNE plot in Figure 7 showed that the NAA10 and NAA15 patients were similar and did not separate into two clusters. The authors performed random sampling to calculate the distance distribution between NAA10 and NAA15 patients (orange distribution in Figure 7b). They then compared it with the distribution from the same disorder (blue distribution in Figure 7b) and the distribution from two different disorders (red distribution in Figure 7b). In the end, 67% of the samplings were below the threshold, indicating that the two disorders share a similar facial phenotype. The similarity between two disorders can contribute to lumping and splitting decisions. Averdunk et al. found that the facial phenotype of patients with disease-causing variants in CRIPT was highly similar to Rothmund-Thomson syndrome (RTS; OMIM #618625) (Averdunk et al., 2023). Together with other genetic information, the authors suggested that the disorder associated with CRIPT could be merged into the RTS phenotypic series.

6 ENABLE GLOBAL COLLABORATION FOR THE FAIR MEDICAL IMAGING DATASET TO ACCELERATE THE NGP DEVELOPMENT

One reason facial recognition has progressed so fast over the last 10 years is the availability of open datasets: all researchers could use the same benchmark dataset when proposing a new approach. The lack of such a dataset has been the main bottleneck in rare disorder research because it is impossible for a single institute to collect a dataset at the scale of the datasets of healthy individuals. The dataset used to develop DeepGestalt was the largest patient image dataset (more than 30,000 images) in the Face2Gene platform. It consisted of the London Medical Database (Winter & Baraitser, 1987) and the patient images uploaded to the Face2Gene platform. However, this internal dataset cannot be shared with other institutes.

Hence, in 2019, Nellåker et al. proposed the Minerva Initiative to enable global collaboration on an open and shareable medical imaging database (Nellåker et al., 2019). Unfortunately, this project was not continued. In 2023, Lesmann et al. (Lesmann, Lyon, et al., 2023) developed the GestaltMatcher Database (GMDB), which fulfills the FAIR principles: findable, accessible, interoperable, and reusable (Wilkinson et al., 2016). As of June 2023, GMDB consisted of 7533 patients with 792 disorders. The majority of the data was collected from 2058 publications. In addition, the authors collected 1018 frontal images of 498 unpublished patients from users worldwide. This dataset has recently been used to develop new NGP approaches (Hustinx et al., 2023; Sümer et al., 2023). GMDB is therefore a good example of a resource that can accelerate NGP development. Because most existing data come from individuals of European ancestry, global collaboration can also help address the long-standing bias in ethnic background. GMDB also enables clinicians to browse the patient images of each disorder, which helps them learn the facial phenotype of a disorder quickly without having to search for images themselves. Finally, we envision that more and more researchers will be willing to contribute to an open and shareable dataset, and that this kind of data source can be connected to other facial imaging databases such as FaceMatch (https://facematch.org.au/) and MyGene2 (https://mygene2.org/MyGene2/).

7 SYNTHESIZE PATIENT IMAGES TO PROTECT PATIENT PRIVACY

Privacy concerns are often the bottleneck in obtaining and sharing patient images because a face readily identifies a person. Over the last few years, generative adversarial networks (GANs) such as StyleGAN and CycleGAN have been used to synthesize fake facial images (Karras et al., 2020, 2018; Zhu et al., 2017). Recently, diffusion models have also shown high performance in facial image synthesis (Kim et al., 2023). For example, the website This Person Does Not Exist (https://thispersondoesnotexist.com/) generates synthesized facial images that are hard to distinguish from real ones. The same concept can be applied to rare disorders: this patient does not exist.

In 2022, Duong et al. used StyleGAN (Karras et al., 2020) to synthesize facial images of patients with Williams syndrome and 22q11.2 deletion syndrome at different ages (Duong et al., 2022). This work suggests that a network can be trained to learn the dysmorphic features of a disorder. In principle, a face could then be synthesized with any disorder and with specified parameters such as ethnicity, age, and sex. However, further analysis on a larger dataset with more disorders is required. In addition, synthesizing a disorder for which only a handful of images exist will be challenging.

Another direction for protecting patient images is anonymization. DeepPrivacy2 (Hukkelås & Lindseth, 2023) demonstrated how to use a GAN to anonymize human figures and faces, and GANonymization further showed the ability to anonymize faces while preserving the facial expression (Hellmann et al., 2023). As an extension, such a method could remove the identifying individual facial features while preserving facial dysmorphic features. Nevertheless, applying this concept to patients with rare disorders still requires proof of concept.

8 OTHER FUTURE DIRECTIONS

Although NGP approaches have proven helpful in diagnosing patients, they are still often considered a “black box.” Therefore, it is crucial to use eXplainable AI (XAI) techniques such as layer-wise relevance propagation (LRP) (Montavon et al., 2019), local interpretable model-agnostic explanations (LIME) (Ribeiro et al., 2016), Grad-CAM (Selvaraju et al., 2017), and occlusion sensitivity maps (Zeiler & Fergus, 2014) to understand how an NGP approach arrives at its disorder prediction. These algorithms highlight the regions of the face image that contribute to the decision, providing insight into the model's prediction.
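Of these techniques, the occlusion sensitivity map is the simplest to illustrate. The sketch below masks one image patch at a time and records how much the model's score for a disorder drops. It is a simplified variant that uses non-overlapping patches; the scoring function is a hypothetical stand-in for an NGP model, and the image is a toy 4 × 4 grid of intensities.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_sensitivity(
    image: Image,
    score_fn: Callable[[Image], float],
    patch: int = 2,
    fill: float = 0.0,
) -> List[List[float]]:
    """Score drop caused by masking each non-overlapping patch x patch block.
    A larger value means the region mattered more for the prediction."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]  # copy, then mask one block
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = baseline - score_fn(occluded)
            for r in rows:
                for c in cols:
                    heatmap[r][c] = drop
    return heatmap


def toy_score(image: Image) -> float:
    """Hypothetical stand-in for a model score: it only looks at the top-left block."""
    return (image[0][0] + image[0][1] + image[1][0] + image[1][1]) / 4.0


image = [[1.0] * 4 for _ in range(4)]
heatmap = occlusion_sensitivity(image, toy_score)
for row in heatmap:
    print(row)
```

In this toy case only the top-left block receives a non-zero value, because that is the only region the stand-in score depends on. With a real model, the same procedure would indicate which facial regions drive a disorder prediction.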

Although this article mainly focuses on 2D facial images, 3D image analysis has emerged over the last few years. Hallgrímsson et al. proposed automated disorder prediction from patients’ 3D images (Hallgrímsson et al., 2020). Recently, Bannister et al. compared the performance of models trained on 2D and 3D images (Bannister et al., 2023). In that analysis, the model trained on 3D images outperformed the model trained on 2D images. However, more analyses investigating the difference between 2D and 3D images are needed. For example, 3D images contain depth information that is missing in 2D images, and it remains unclear how much this information influences prediction performance. Nevertheless, the continued collection of patients’ 3D images is likely to improve syndrome prediction in the future.

9 CONCLUSIONS

NGP technology for rare disorders has progressed rapidly over the last decade. It can not only assist clinicians in reaching a diagnosis but also delineate the facial gestalt of disorders. To push this technology to the next level, we envision that all the databases and approaches can be connected through Beacon (Fiume et al., 2019), Phenopacket (Jacobsen et al., 2022), and Matchmaker Exchange (Buske et al., 2015) to assist clinicians and researchers in many directions in the next few years.

ACKNOWLEDGMENTS

The author would like to thank the Universitätsklinikum Bonn for the support of this article, and the patients and their families for contributing to all the studies covered in this article. Open Access funding enabled and organized by Projekt DEAL.

CONFLICT OF INTEREST STATEMENT

There is no conflict of interest.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no new data were created or analyzed in this study.