Estimation of Bridge Girder Cumulative Displacement for Component Operational Warning Using Bayesian Neural Networks

Abstract

The main girders of suspension bridges experience significant deformation due to temperature variations, wind dynamics, and vehicle loads, causing movement at the girder ends and friction among components such as bearings, expansion joints, and viscous dampers. Early warning of component anomalies is vital for preventive maintenance. This paper develops a two-stage framework for predicting girder end displacement to facilitate anomaly detection. First, a Bayesian neural network is employed to predict girder end cumulative displacement, accounting for uncertainties inherent in the prediction process. Second, an anomaly detection algorithm utilizing a Mahalanobis distance–based approach is implemented to provide operational warnings based on both measured and predicted data. The effectiveness of the proposed approach is validated using monitoring data on multiple loads and displacement responses of a suspension bridge. The analysis reveals that the generalized extreme value (GEV) distribution captures the underlying pattern of the cumulative displacement indicator well, enabling the establishment of an appropriate threshold. The method successfully identifies anomalies in critical components such as viscous dampers, enhancing predictive and preventive maintenance practices and contributing to the longevity and safety of bridge infrastructure.

1. Introduction

Failures of critical components located close to the girder ends of suspension bridges and cable-stayed bridges, including expansion joints, bearings, and dampers, have risen notably in recent years [1–3]. These failures have taken many by surprise, primarily because they were not foreseen during the initial design phase. As a result, it has become customary for bridge operators to conduct annual inspections specifically targeting these critical components [4–6]. This proactive approach aims to detect any potential issues early on, allowing for timely intervention and maintenance to ensure the continued safe operation of the bridge.

While visual inspection continues to be the primary method prescribed for assessing the condition of bridges in numerous countries [7–9], its effectiveness in detecting failure in the aforementioned critical components under comprehensive operational conditions remains limited. These conditions encompass temperature fluctuations, wind dynamics, and the dynamic loads imposed by vehicular traffic. Consequently, achieving accurate and reliable detection of anomalies in such components has proven challenging.

To address this challenge, widely deployed SHM systems offer a feasible solution. These systems utilize advanced sensor technologies to continuously monitor the structural responses of bridges in real time [10–13]. By collecting and analyzing data on parameters such as vibration, strain, and displacement, SHM systems enable engineers to assess the condition of critical components more effectively. Furthermore, SHM systems can provide insights into the structural integrity of bridges under diverse operational scenarios, including variations in environmental conditions and traffic patterns.

Machine learning has emerged as a powerful tool in the field of SHM, playing a pivotal role in the detection, diagnosis, and warning of structural component damage [14–16]. One main advantage of machine learning in SHM is its ability to extract complex patterns and relationships from heterogeneous sensor data [17–19]. Sun et al. [20] investigated the use of the isolation forest algorithm to identify damper malfunctions in long-span cable-supported bridges. This data-driven algorithm, based on decision trees, proves effective in structural damage detection. Ni et al. [21] developed a Bayesian approach for alerting to damage in bridge expansion joints, formulating a probability anomaly indicator to assess joint condition. Similarly, Zhang et al. [22] combined Bayesian and Markov-switching theories to detect expansion joint damage, enhancing estimation accuracy and efficiency through the expectation–maximization algorithm. Raja et al. [23] introduced a simplified vibration-based method for bridge bearing condition assessment, utilizing a radar-generated dataset in a noncontact format for model establishment. Furthermore, Sun et al. [24] devised a hierarchical convolutional neural network (CNN) to forecast bridge longitudinal displacement and conduct condition assessments of bearings and expansion joints. Such methods allow for the development of robust and adaptive damage detection algorithms capable of accurately identifying damage even with noise and variability in environmental conditions.

In the realm of machine learning, Bayesian methods represent a group of probabilistic approaches that leverage Bayes’ theorem to model uncertainty, make predictions, and undertake diverse tasks. Zhang et al. [25] employed Bayesian linear regression to develop a model to correlate vehicle loads with strain using SHM data. This model facilitated an evaluation of the bridge’s health condition by examining the correlation between the WIM system and the SHM system. Wang and Ni [26] presented the BDLM for predicting structural responses, which adeptly handles both stationary and nonstationary time-series data while capturing the evolving nature of structural strain responses over time. Yan et al. [27] proposed structural anomaly detection based on a Bayesian inference strategy by accommodating measurement uncertainty and the probabilistic model. Yuen et al. [28, 29] proposed a resilient sensor placement methodology grounded in Bayesian inference. This approach accounts for potential sensor failures that may occur within sensory systems. Huang et al. [30] used sparse Bayesian learning to detect structural stiffness losses and recover missing data. This approach leverages sparse joint learning across multiple models, harnessing information concerning relationships in both temporal and spatial domains.

Bayesian neural networks (BNNs) are a subset of machine learning techniques that incorporate Bayesian principles into neural network models. This means that BNNs treat model parameters as probability distributions rather than fixed values, allowing them to model uncertainty and provide probabilistic predictions. One of the primary contributions of BNNs to the field of machine learning lies in their capacity to quantify uncertainty associated with predictions. In contrast to traditional neural networks, which yield single-point estimates, BNNs generate probability distributions over potential outcomes. This capability is particularly valuable in applications where understanding the level of prediction uncertainty is important. BNNs can also be more robust to overfitting because they account for parameter uncertainty: the prior distribution over weights acts as a form of regularization.

The application of BNNs in SHM is an innovative and promising approach that addresses several key challenges in this field. BNNs provide probabilistic predictions, which means they can estimate the uncertainty associated with structural health assessments. Arangio and Bontempi [31] introduced a BNN-based approach for damage detection in bridges, illustrating the significant progress made in damage detection and model identification. Yin and Zhu [32] devised a specialized algorithm for the efficient design of BNN architectures in structural model updating. This approach harnesses the power of probabilistic finite element models, offering improved accuracy and efficiency compared to finite difference approximations. Vega and Todd [33] harnessed BNNs to advance SHM and facilitate cost-informed decision-making. BNNs exhibit the capability to learn from small, noisy datasets and demonstrate robustness against overfitting, setting them apart from traditional neural networks.

In the SHM community, there has been a continuous endeavor to achieve anomaly detection of bridges or their components. Also known as outlier detection in machine learning terminology, the detection of an anomalous or damaged state often involves unsupervised machine learning [34–36]. Owing to their logical simplicity and computational efficiency, Mahalanobis distance–based approaches are favored among others [37–39]. Mosavi et al. [40] developed an unsupervised method for railway wheel flat detection, using acceleration data processed with Mahalanobis distance. Detection is achieved through a two-step process involving confidence boundary construction and damage classification. Sarmadi and Karamodin [39] proposed an anomaly detection approach using the KNN algorithm and Mahalanobis-squared distance, which was verified with modal frequencies of two bridge models.

Complicated environmental and loading conditions in full-scale cable-supported bridges may render damage detection a formidable task. To detect potential damage or anomalies in given datasets, it is imperative to define an accurate threshold for damage indicators, thus avoiding false alarms or missed detections. This requires a precise estimation of the distribution of the damage indicators. In particular, assuming a normal distribution for the training dataset can hinder accurate damage identification, because real measurements of damage indicators follow varying distributions [41, 42].

This study introduces BNNs for estimating the cumulative displacement (CMD) of longitudinal girder ends as part of operational warning systems for bridge components such as viscous dampers. BNNs are well suited to predicting the CMD under uncertainties. The predicted results are then used to compute a Mahalanobis distance–based CMD indicator, whose underlying pattern is captured by fitting a GEV distribution. Furthermore, a large number of samples representing CMDs are generated using a Monte Carlo scheme to quantify the threshold for damage detection. By modeling the probability distribution of sensor data, the developed method can identify patterns that deviate from the expected behavior. This capability is essential for the early detection of damage in the operational condition of cable-supported bridges, and it can help reduce false alarms in bridge health monitoring systems. It also supports reliable decision-making in the asset management process for bridge maintenance.

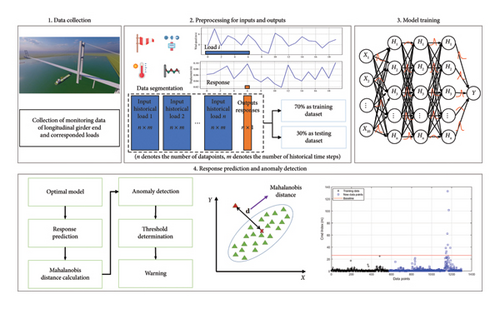

2. Proposed Approach

Cable-supported bridges are more sensitive than common girder bridges when they are subjected to an ever-changing climate and traffic loads. These actions cause deformation of the main girder, which can induce damage in the expansion joints and bearings. The springs connecting the surface center beam and the support bar of expansion joints are often damaged, and frequent friction of the girder on the PTFE surface causes durability problems in bearings. During maintenance, engineers traditionally perform visual assessments. Nowadays, innovative techniques leverage measured data to extract structural health indicators, transforming raw response metrics into meaningful damage diagnostics.

1. Data collection: In the initial phase, data pertaining to the longitudinal girder end displacement of a monitored bridge are gathered. These data are collected from both the upstream and downstream sides over a specified time period, ensuring a comprehensive dataset for analysis.

2. Data preprocessing: Subsequently, the collected data undergo essential preprocessing steps to enhance their quality and utility. This preprocessing phase includes denoising, normalization, and segmentation. To reduce the impact of environmental noise, effective denoising techniques are applied. Before segmentation, the measured responses are normalized using the Z-score criterion, mitigating the influence of a wide range of values. In addition, the time-series responses are reconstructed, utilizing the historical and current loads as input and the current response as output. This forms the foundation of the training and testing dataset, with a split ratio of 7:3.

3. BNN model training and architecture optimization: The third phase involves the training of the BNN model and the optimization of its architecture. Weight distribution within the model is determined based on its performance with the testing data, ensuring that the model adapts effectively to the dataset. Furthermore, optimal hyperparameters for the model’s architecture are identified using a controlled-variable approach, in which one hyperparameter is varied while the others are held fixed, enhancing its predictive capabilities.

4. Response estimation and operational warning: The final phase encompasses the estimation of future longitudinal girder end displacement and the warning of abnormal operational conditions. Leveraging the trained BNNs, the framework provides estimates for future displacement scenarios and raises operational alerts when anomalous conditions are detected. The Mahalanobis distance of the cumulative longitudinal displacement of the girder is selected as an indicator to assess the performance of structural components.
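The preprocessing in step 2 can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the number of lagged steps (`n_lags`), the chronological (unshuffled) split, and the synthetic data are all assumptions made for illustration, and denoising is omitted.

```python
import numpy as np

def zscore(x):
    """Standardize each column to zero mean and unit variance."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean(axis=0)) / x.std(axis=0)

def make_windows(loads, response, n_lags):
    """Pair the current and n_lags previous load vectors with the current response."""
    loads = np.asarray(loads, dtype=float)
    response = np.asarray(response, dtype=float)
    X = np.stack([loads[t - n_lags:t + 1].ravel() for t in range(n_lags, len(loads))])
    y = response[n_lags:]
    return X, y

def split_7_3(X, y):
    """Chronological 7:3 split (no shuffling; an assumption for time-series data)."""
    n_train = int(round(0.7 * len(X)))
    return X[:n_train], y[:n_train], X[n_train:], y[n_train:]

# Synthetic stand-in data: three load channels and one displacement response.
rng = np.random.default_rng(0)
loads = rng.normal(size=(100, 3))
response = rng.normal(size=100)

X, y = make_windows(zscore(loads), zscore(response), n_lags=4)
X_train, y_train, X_test, y_test = split_7_3(X, y)
print(X.shape, X_train.shape, X_test.shape)
```

Each input row concatenates the load vectors at times t-4 to t, so the model sees both historical and current loads when estimating the response at time t.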

Anomaly detection involves tracking longitudinal displacements, essentially spotting atypical data points that notably diverge from expected patterns. Although the CMD could be directly derived from the measured girder end displacement, the proposed method has some advantages: (1) BNNs offer robust uncertainty quantification, which is crucial for understanding the level of confidence in predictions, especially in fluctuating conditions with inherent uncertainties due to environmental and load variations. (2) BNNs can predict displacement patterns over time, helping to identify subtle deviations that might not be apparent when examining CMD alone. (3) Incorporating predictive modeling within SHM increases robustness and provides probabilistic insights in operational conditions.

3. Algorithms for Displacement Prediction and Anomaly Detection

3.1. BNNs

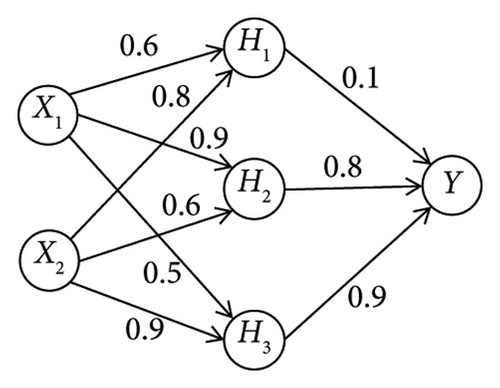

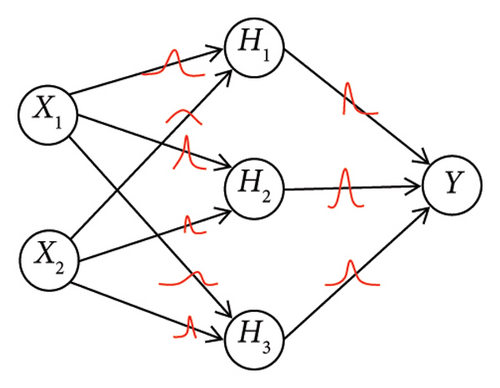

BNNs are a type of artificial neural network that incorporates Bayesian inference techniques into the modeling process. They are designed to provide uncertainty estimates along with predictions, making them useful in engineering scenarios where uncertainty quantification is important, such as in structural diagnosis, autonomous inspection, and response forecasting. BNNs are an extension of traditional backpropagation neural networks with some key differences: (1) Weight uncertainty: BNNs have distributions over weights, which capture uncertainty about the model’s parameters, as shown in Figure 2; (2) Bayesian inference: It allows BNNs to calculate not only point estimates (as traditional neural networks do) but also probabilistic estimates. BNNs produce a distribution over possible outputs rather than a single output.

3.1.1. Variational Bayesian-Based Backpropagation

The training process in BNNs incorporates Bayesian inference to support probabilistic modeling, instead of simply optimizing the neural network’s weights using backpropagation and gradient descent. A variational distribution, typically parameterized by learnable parameters, is introduced to approximate the true posterior distribution over weights. This distribution aims to match the true posterior as closely as possible. Variational Bayesian-based backpropagation is valuable in applications where understanding and quantifying confidence or uncertainty in predictions is essential.

Equation (4) is the variational objective. The first term is the KL divergence ($D_{KL}$) between the variational posterior and the prior, indicating the alignment of the weights with the prior distribution. The second term depends on the training dataset and quantifies the fit between the model and the data as an expected value under the variational distribution. Optimizing the objective in equation (4) can be likened to a conventional regularization approach, involving a trade-off between these two cost components.
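For reference, an objective with the two terms described above is commonly written as follows in the variational BNN literature. The notation is assumed rather than taken from this paper: $\mathbf{w}$ denotes the weights, $\theta$ the parameters of the variational distribution $q(\mathbf{w} \mid \theta)$, $P(\mathbf{w})$ the prior, and $\mathcal{D}$ the training data.

```latex
\mathcal{F}(\mathcal{D}, \theta)
  = D_{KL}\!\left[\, q(\mathbf{w} \mid \theta) \,\|\, P(\mathbf{w}) \,\right]
  - \mathbb{E}_{q(\mathbf{w} \mid \theta)}\!\left[ \log P(\mathcal{D} \mid \mathbf{w}) \right]
```

The first term penalizes departure from the prior, and the second rewards fit to the data, which is the trade-off noted in the text.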

3.1.2. Unbiased Monte Carlo Gradients

3.1.3. Minibatch Gradient Descent

Provided that $\pi \in [0, 1]^{m}$ and $\sum_{i=1}^{m} \pi_i = 1$, the expression serves as an unbiased estimate of the variational objective in equation (4). To elaborate further, setting $\pi_i = 2^{m-i}/(2^{m} - 1)$ results in a weighting scheme that prioritizes aligning with the prior distribution during the initial stages of a single training epoch. As training progresses, it gradually shifts focus toward fitting the data. This strategic adjustment contributes to enhancements in overall model performance.
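As a quick numerical check of this weighting scheme, the sketch below assumes the weights take the form $\pi_i = 2^{m-i}/(2^{m}-1)$, where $m$ is taken to be the number of minibatches per epoch (both are assumptions drawn from the Bayes-by-backprop literature). The function name `minibatch_weights` is hypothetical.

```python
from fractions import Fraction

def minibatch_weights(m):
    """Return pi_i = 2^(m-i) / (2^m - 1) for i = 1..m as exact fractions."""
    denom = 2 ** m - 1
    return [Fraction(2 ** (m - i), denom) for i in range(1, m + 1)]

weights = minibatch_weights(5)
print([str(w) for w in weights])  # each weight is half of the previous one
print(sum(weights))               # the weights sum to one
```

Because the weight on the KL term halves with every minibatch, the prior dominates early in the epoch and the data-fit term dominates later.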

3.2. Mahalanobis Distance–Based Anomaly Detection

3.2.1. Mahalanobis Distance

Mahalanobis distance is a statistical measure used to identify anomalous data points in a dataset. It extends the Euclidean distance by accounting for the correlations among features, estimated from baseline reference samples. It is a way to calculate the distance between a point and a distribution in a multidimensional space. There are several key advantages of Mahalanobis distance–based anomaly detection. First, it takes into account the covariance among features, which is often crucial in real-world high-dimensional datasets where dimensions may be correlated. Second, it performs robustly across datasets with varying scales and units for different features by standardizing the distances. This capability enables it to pinpoint anomalies that might not be discernible when evaluating each dimension in isolation.

1. Mean and covariance calculation: Initially, the mean and covariance matrix of the dataset are calculated. The mean is the average of the dataset, and the covariance matrix describes the dispersion of and correlation among features.

2. Mahalanobis distance calculation: For a new data point, its Mahalanobis distance from the mean is calculated. The Mahalanobis distance quantifies how many standard deviations separate a data point from the mean, accounting for the covariance between features. For a data point $x_{SHM}$ with mean $\mu_{SHM}$ and inverse covariance matrix $S^{-1}$, the Mahalanobis distance is given by equation (10):

   $$D_M = \sqrt{(x_{SHM} - \mu_{SHM})^{T} S^{-1} (x_{SHM} - \mu_{SHM})} \tag{10}$$

3. Anomaly threshold determination: Establishing a threshold is necessary to ascertain whether a data point qualifies as an anomaly. Data points exceeding this threshold in Mahalanobis distance are flagged as anomalies.

4. Anomaly detection: When a new data point is presented, its Mahalanobis distance is calculated. If the distance surpasses the threshold, the data point is classified as an anomaly; otherwise, it is considered a normal data point.
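The four steps above can be sketched as follows. This is an illustrative sketch, not the authors' code: the function names, the two-feature synthetic baseline (standing in for upstream and downstream indicators), and the threshold value of 3.0 are assumptions.

```python
import numpy as np

def fit_baseline(baseline):
    """Step 1: estimate the mean vector and inverse covariance from baseline rows."""
    baseline = np.asarray(baseline, dtype=float)
    mu = baseline.mean(axis=0)
    cov = np.cov(baseline, rowvar=False)
    return mu, np.linalg.inv(cov)

def mahalanobis(x, mu, cov_inv):
    """Step 2: Mahalanobis distance of each row of x from the baseline mean."""
    diff = np.atleast_2d(np.asarray(x, dtype=float)) - mu
    return np.sqrt(np.einsum("ij,jk,ik->i", diff, cov_inv, diff))

def flag_anomalies(x, mu, cov_inv, threshold):
    """Steps 3 and 4: flag points whose distance exceeds the threshold."""
    return mahalanobis(x, mu, cov_inv) > threshold

# Synthetic, positively correlated baseline with two features.
rng = np.random.default_rng(1)
baseline = rng.multivariate_normal([4.0, 4.0], [[1.0, 0.8], [0.8, 1.0]], size=500)
mu, cov_inv = fit_baseline(baseline)

new_points = np.array([[4.0, 4.0], [6.0, 2.0]])
flags = flag_anomalies(new_points, mu, cov_inv, threshold=3.0)
print(flags)
```

The second point lies only about 2.8 units from the mean in Euclidean terms, but it moves against the positive correlation of the baseline, so its Mahalanobis distance is large and it is flagged.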

3.2.2. Determination of Anomaly Threshold

In this section, the threshold is determined statistically using the CMD calculated from monitoring data. Apart from the widely adopted Gaussian distribution, the GEV distribution is increasingly used because of its ability to capture the extreme values in the tails of distributions.

When ξGEV = 0, the distribution is Type I or Gumbel extreme value distribution; when ξGEV > 0, the distribution is Type II or Fréchet extreme value distribution; and when ξGEV < 0, the distribution is Type III or Weibull extreme value distribution.
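For reference, the three types above correspond to the standard form of the GEV cumulative distribution function shown below. The location and scale symbols $\mu_{GEV}$ and $\sigma_{GEV}$ are assumed notation, chosen to match the shape parameter $\xi_{GEV}$ used in the text.

```latex
F(x) =
\begin{cases}
\exp\!\left\{ -\left[ 1 + \xi_{GEV}\, \dfrac{x - \mu_{GEV}}{\sigma_{GEV}} \right]^{-1/\xi_{GEV}} \right\},
  & \xi_{GEV} \neq 0,\quad 1 + \xi_{GEV}\, \dfrac{x - \mu_{GEV}}{\sigma_{GEV}} > 0, \\[2ex]
\exp\!\left\{ -\exp\!\left( -\dfrac{x - \mu_{GEV}}{\sigma_{GEV}} \right) \right\},
  & \xi_{GEV} = 0.
\end{cases}
```

Note that some software libraries define the shape parameter with the opposite sign, so the convention should be checked before fitted values are compared.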

Detecting anomalies in structural response requires a large number of samples to obtain a proper threshold, which is crucial for precisely identifying damage and distinguishing it from the normal state. After fitting the GEV distribution, a Monte Carlo scheme is carried out to obtain the 97% threshold for anomaly warning. Herein, one million samples are drawn.
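A minimal sketch of such a Monte Carlo threshold scheme is given below, using inverse-transform sampling with the convention that a positive shape parameter gives the Fréchet type. The function names are hypothetical, and the parameter values in the demonstration are placeholders rather than the values fitted in this study. A smaller sample size is used to keep the example fast.

```python
import math
import random

def gev_sample(rng, loc, scale, shape):
    """Draw one GEV variate by inverting the CDF (shape > 0 is the Frechet case)."""
    u = rng.random()
    while u == 0.0:  # guard against log(0)
        u = rng.random()
    t = -math.log(u)
    if shape == 0.0:
        return loc - scale * math.log(t)
    return loc + scale * (t ** (-shape) - 1.0) / shape

def gev_quantile(p, loc, scale, shape):
    """Closed-form GEV quantile, used here only to check the simulation."""
    t = -math.log(p)
    if shape == 0.0:
        return loc - scale * math.log(t)
    return loc + scale * (t ** (-shape) - 1.0) / shape

def monte_carlo_threshold(loc, scale, shape, p=0.97, n=1_000_000, seed=0):
    """Empirical p-quantile of n simulated GEV samples."""
    rng = random.Random(seed)
    samples = sorted(gev_sample(rng, loc, scale, shape) for _ in range(n))
    return samples[min(n - 1, math.ceil(p * n) - 1)]

# Placeholder parameters for illustration only.
threshold = monte_carlo_threshold(loc=0.0, scale=1.0, shape=0.3, p=0.97, n=200_000)
exact = gev_quantile(0.97, loc=0.0, scale=1.0, shape=0.3)
print(threshold, exact)
```

For a GEV distribution the quantile is available in closed form, so the simulated threshold can be cross-checked against `gev_quantile`; the simulation route generalizes more readily when the indicator is a function of several random quantities.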

4. Data Acquisition From Bridge Health Monitoring System

4.1. The Bridge for Verification of the Method

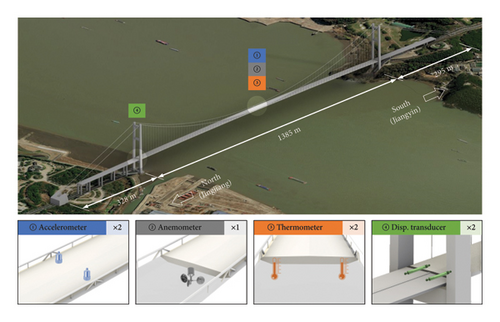

Jiangyin Yangtze River Bridge is a suspension bridge in Jiangsu, China, with a center span of 1385 m. When it opened to traffic in 1999, it became the first bridge in China with a span exceeding one kilometer. The bridge carries a six-lane expressway and has a clearance height of 50 m beneath the structure.

The north and south side spans are nonsuspended, measuring 336.50 m and 309.34 m, respectively. The main cable has 169 parallel wire strands at the main span, maintaining a sag-to-span ratio of 1/10.5. Each strand comprises 127 galvanized steel wires with a 5.35-mm diameter. Adjacent suspension points are spaced 16 m apart, with two suspenders at each connection with the steel box girder. Suspenders longer than 10 m consist of parallel wire strands, while those shorter than 10 m employ stranded wire ropes.

A streamlined steel box girder carries the live loads, standing at 3.0 m in height. The top and bottom plate widths measure 29.50 and 22.94 m, respectively, culminating in a total width of 36.90 m. Tower heights differ, with the north tower reaching 183.85 m and the south tower at 186.85 m. The north and south anchorages adopt gravity-type concrete design and embedded concrete structure, respectively.

After its opening to traffic in 1999, Jiangyin Yangtze River Bridge was equipped with an SHM system to capture real-time data on environmental conditions and structural dynamic behavior. The array of sensors incorporated into this system encompasses anemometers, temperature gauges, strain sensors, GPS antennas, displacement gauges, and accelerometers. The sensors selected for this study are illustrated in Figure 3. Notably, the displacement gauges are installed on the steel box girder at the north and south towers to measure longitudinal displacement. These sensors employ a steel wire to record the displacements, operating at 20 Hz. Each cross section accommodates two temperature sensors on decks, totaling four sensors per section. Operating within a temperature range of −30°C to 85°C, these sensors continuously capture temperature data at 1 Hz.

4.2. Data Acquisition

To validate the proposed approach, an extensive set of continuous measurements spanning 12 days is gathered from the SHM system of Jiangyin Bridge. The original data from the monitoring system undergo preprocessing to yield a dataset with a one-minute resolution for all parameters. This dataset configuration strikes a balance between providing ample data for training deep learning models and effectively characterizing various loads. A total of 20,160 data points are prepared to assess the efficacy of the developed method. The input variables encompass structural temperature, wind speed, and wind direction. The output variable targeted for prediction is the displacement of the bridge bearings.

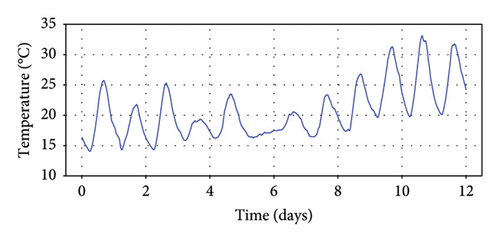

4.2.1. Measured Loads

The fluctuation in temperature holds substantial significance in the design considerations of cable-supported bridges, necessitating careful and precise quantification. The input for the developed model is derived from the one-minute mean values of these temperature measurements. Figure 4 illustrates the 12-day dataset, revealing a discernible daily pattern influenced by the subtropical monsoon climate. Throughout this period, the recorded temperatures have exhibited a range from 14°C to 33°C, exemplifying the climatic variability in the area of the bridge.

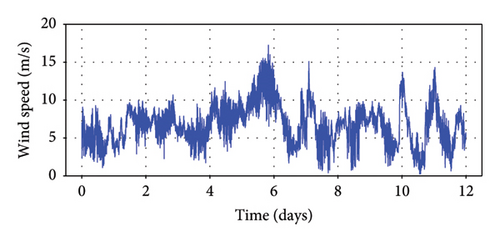

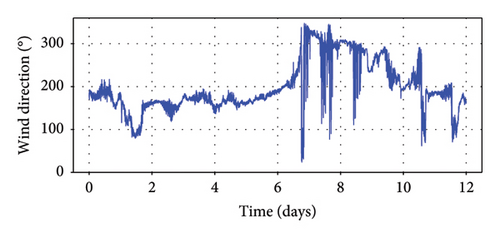

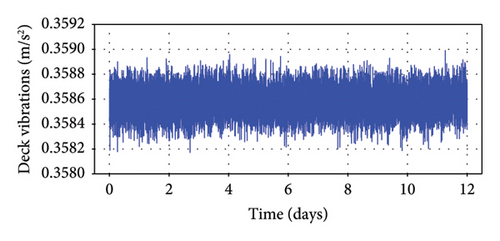

Due to their extended span and inherent flexibility, suspension bridges are particularly responsive to wind forces. An ultrasonic anemometer was positioned on the west side at the midspan. The signals captured by this instrument are processed at one-minute intervals. Figure 5 illustrates the distribution of the wind speed and direction measured over 12 days. Notably, the one-minute mean wind velocity can exceed 18 m/s, indicative of moderately intense wind forces. The zero-degree reference is assigned to the north wind, while the east wind is denoted at 90°. Analysis of the wind direction reveals that the prevailing winds come primarily from the northwest and west. Figure 6 shows the vibrations of the main girder measured by the accelerometers, which indicate that the bridge was under normal operation during this period.

4.2.2. Longitudinal Displacements of Girder

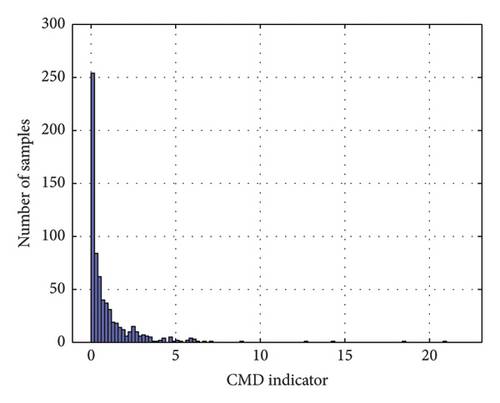

CMD emerges as a health condition indicator, reflecting the accumulated longitudinal girder movement within a specific time period. The distribution of the CMD indicator is visually represented in Figure 7.

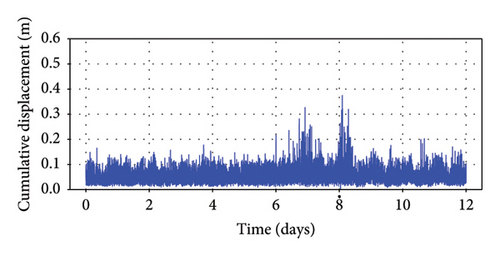

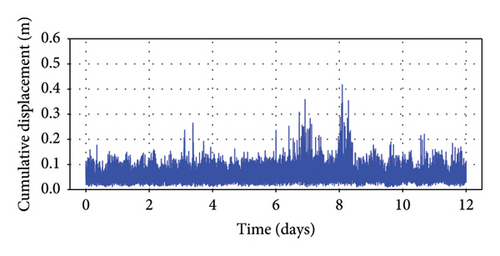

To obtain monitoring data for longitudinal displacement, measurements are taken from both the upstream and downstream locations of the northern extremity of the steel box girder. These extracted values are then utilized to calculate the CMDs at the girder ends for each minute. The results of these displacement calculations are depicted in Figure 8, where it is noteworthy that the amplitude of CMD within 1 minute may exceed 1 m.
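To make the indicator concrete, the sketch below computes a CMD value for one window of displacement samples and aggregates one-minute values into hourly values. The definition used here, the sum of absolute sample-to-sample movements, is an assumption about how "accumulated movement" is computed, and the function names are hypothetical.

```python
def cumulative_displacement(displacements):
    """Sum of absolute sample-to-sample movements within one window (assumed definition)."""
    return sum(abs(b - a) for a, b in zip(displacements, displacements[1:]))

def hourly_cmd(minute_cmds):
    """Aggregate one-minute CMD values into hourly totals, dropping any incomplete hour."""
    return [sum(minute_cmds[i:i + 60]) for i in range(0, len(minute_cmds) - 59, 60)]

# One short window of displacement samples (metres): moves +0.5, -1.0, +0.5.
window = [0.0, 0.5, -0.5, 0.0]
print(cumulative_displacement(window))  # net displacement is zero, accumulated travel is not

# 125 one-minute values give two complete hours; the last 5 minutes are dropped.
print(hourly_cmd([1.0] * 125))
```

Unlike net displacement, the accumulated travel grows with every back-and-forth movement, which is why it relates to wear in components such as bearings and dampers.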

5. Implementation and Discussion

The BNN model is trained utilizing the aforementioned loads and the longitudinal girder end displacement responses over a 12-day period, encompassing both the upstream and downstream sides of the structure. Denoising techniques are applied to mitigate noise interference. Historical load data are leveraged to enhance prediction accuracy. To ensure an appropriate denoised training and testing dataset, a split ratio of 7:3 is employed.

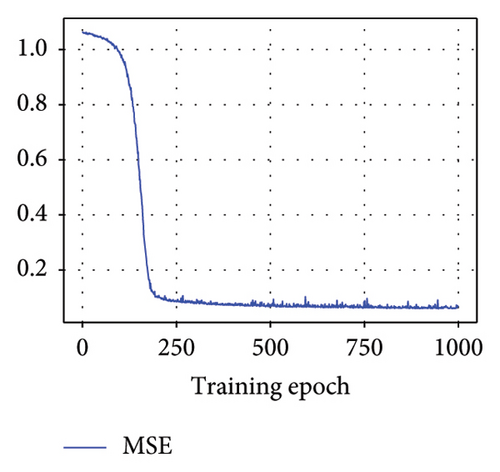

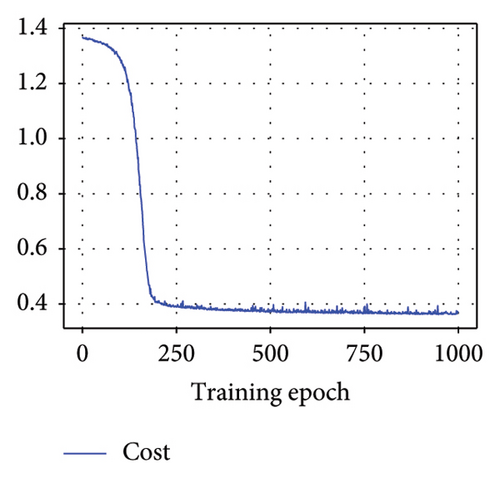

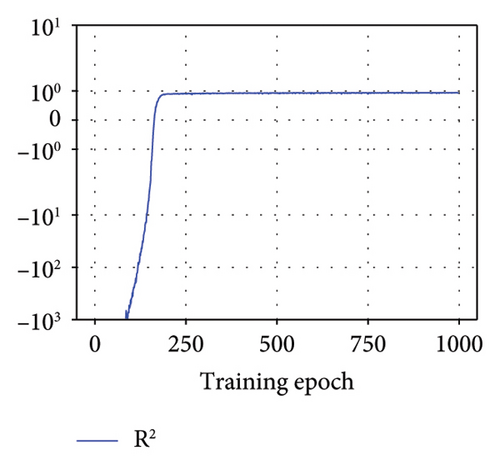

During the model training, MSE, total cost, and R2 were employed to fine-tune the BNN model and identify optimal parameters. A desirable model exhibits a low MSE value, indicating minimal estimation error, and a high R2 value, signifying a strong resemblance between predicted and actual responses. In addition, BNNs utilized the KL divergence between the variational posterior and the prior as a metric to quantify the model. This divergence measure offers insights into the alignment between these two probability distributions. A low total cost, as estimated by equation (6), serves as an indicator of the model’s strong performance in the realm of probability estimation.
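The two accuracy metrics can be sketched as follows, using their standard definitions. The function names and the toy values are illustrative only.

```python
def mse(y_true, y_pred):
    """Mean squared error between measured and predicted responses."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 4.0]
print(mse(y_true, y_pred))       # one squared error of 1 averaged over 3 points
print(r2_score(y_true, y_pred))  # SS_res = 1, SS_tot = 2
```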

5.1. Optimal Model Parameters

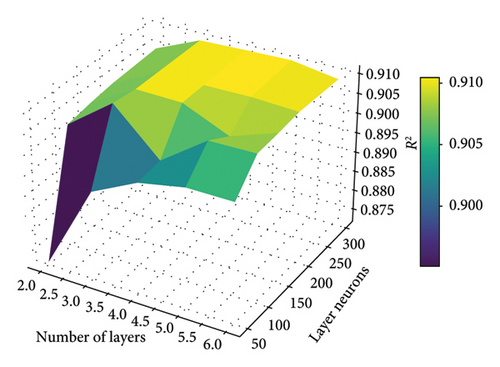

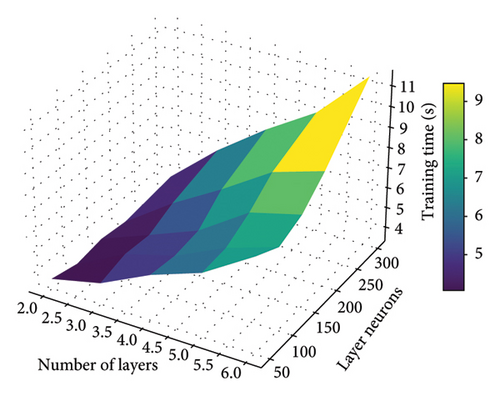

To ensure the accuracy of the proposed BNN model in reconstructing longitudinal girder end displacement responses, the architectural parameters are optimized. Among these parameters, the number of hidden layers and the number of neurons within each hidden layer are pivotal, as they significantly impact the model’s architecture and performance. In Figure 9, several potential configurations for these parameters are investigated to assess their influence on model performance. The candidates are compared in terms of R2 and training time.

The evaluation of how changes in the number of hidden layers affect the model’s performance covers a range from 2 to 6 hidden layers. For the assessment of the number of neurons, we explored values ranging from 50 to 300. These comparative results of prediction accuracy and training time are depicted in Figures 9(a) and 9(b), respectively.

As the number of hidden layers increased, the estimation performance initially improved. Notably, the model with 3 hidden layers exhibited superior performance in displacement estimation while maintaining good training efficiency. Beyond that, adding hidden layers yielded little further gain in prediction accuracy on the test dataset while increasing the training time. For the number of neurons, an initial increase improved displacement estimation performance. However, beyond a certain point, further increments yielded limited gains in prediction accuracy. Consequently, the model with 150 neurons in each hidden layer emerged as the best-performing choice, balancing accuracy against a relatively simple model architecture. Therefore, the optimal values for the number of hidden layers and the number of neurons are determined to be 3 and 150, respectively.

5.2. Model Tuning Procedure

Figure 10 displays the MSE, total cost, and R2 metrics for the unseen test dataset with the optimal model. This study computes the average MSE, total cost, and R2 across all steps within the epoch to represent the model performance. The MSE and total cost exhibit a notable reduction, converging to small values (MSE = 0.0686, cost = 0.389) and subsequently stabilizing. This trend indicates that the model achieves a high degree of prediction accuracy and generalizes well. The model’s predictive performance is further substantiated by the consistently high R2 value (0.9087), underscoring its proficiency in capturing underlying patterns in the data.

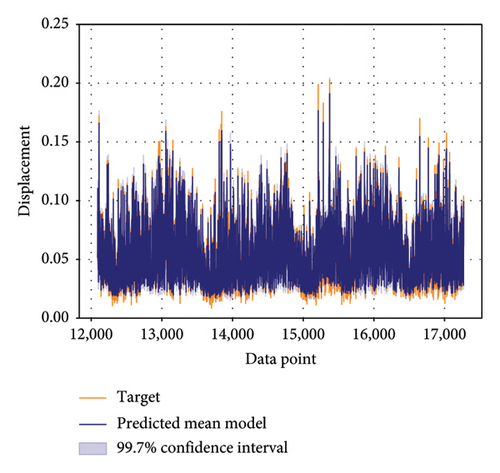

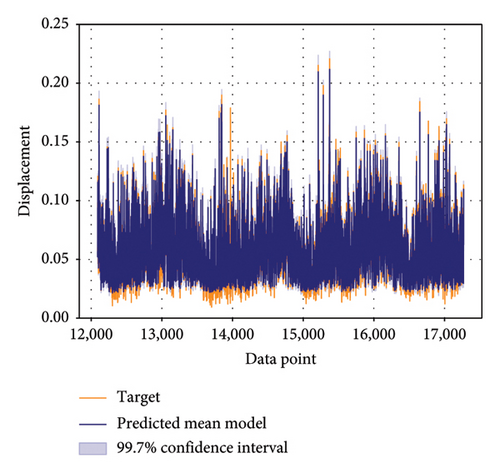

5.3. Prediction Results

Figure 11 shows the predicted outcomes, accompanied by confidence estimates, for unseen test data on both the upstream and downstream sides, generated using the optimal BNN model. It is noteworthy that the R2 for predictions on both sides exceeds 0.90, underscoring the accuracy of the model in capturing the underlying patterns in the data. This high R2 value signifies a strong resemblance between the model’s predictions and the actual responses, reaffirming the model’s efficacy in delivering reliable and consistent results.

The confidence estimates that accompany the predicted outcomes provide valuable insights into the degree of certainty associated with each prediction. The elevated R2 values observed on both the upstream and downstream sides underscore the model’s robustness in making probabilistic predictions. These outcomes highlight not just the model’s proficiency in generating precise point estimates but also its capability to furnish dependable indicators of uncertainty. This resilient probabilistic aspect of the model equips stakeholders with the tools to make informed decisions, all while considering the intrinsic uncertainties prevalent in real-world scenarios. It fosters a nuanced comprehension of the data and acknowledges the potential variations in the observed responses.
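One common way to obtain such confidence estimates from a BNN is to repeat the forward pass with freshly sampled weights and summarize the resulting predictions. The sketch below assumes this procedure and a Gaussian approximation for the interval. The function name is hypothetical, and the sampled predictions are synthetic stand-ins, not outputs of the model in this study.

```python
import numpy as np

def predictive_summary(samples, z=1.96):
    """Summarize stochastic forward passes.

    samples has shape (n_draws, n_points), one row per pass with sampled weights.
    Returns the predictive mean and an approximate 95% band (Gaussian assumption).
    """
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean(axis=0)
    std = samples.std(axis=0, ddof=1)
    return mean, mean - z * std, mean + z * std

# Synthetic stand-in for 200 forward passes over 50 time steps.
rng = np.random.default_rng(2)
true_signal = np.sin(np.linspace(0.0, 3.0, 50))
samples = true_signal + 0.1 * rng.normal(size=(200, 50))

mean, lower, upper = predictive_summary(samples)
print(mean.shape, float((upper - lower).mean()))
```

A wider band at a given time step signals that the sampled networks disagree there, which is the information a point-estimate model cannot provide.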

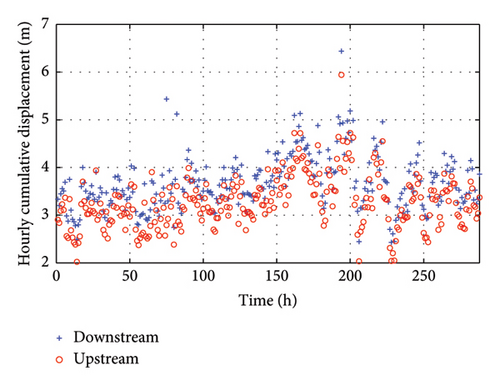

5.4. Anomaly Detection

Anomaly detection typically involves comparing baseline data with target data samples. Establishing the baseline for anomaly detection entails processing the 12-day CMDs, measured in one-minute intervals, into hourly CMD values, as depicted in Figure 12. Analysis reveals that the hourly CMD ranges from 2 to 6.5 m.

Subsequently, the Mahalanobis distance is calculated following the procedures described previously, and its distribution is illustrated in Figure 13. Estimation of parameters via maximum likelihood for the GEV distribution yields values of 0.1568, 0.2544, and 1.3459 for the location, scale, and shape parameters, respectively. Since the shape parameter ξGEV is positive, the distribution follows a Type II or Fréchet extreme value distribution.

With the GEV distribution determined, a Monte Carlo simulation scheme is adopted to derive the 97% threshold as the reference for alarm. A total of 1 million samples are generated, resulting in a calculated threshold of 26.07. This sample size is large enough to obtain a stable estimate, and the obtained value is taken as the baseline for warning of hourly CMD.

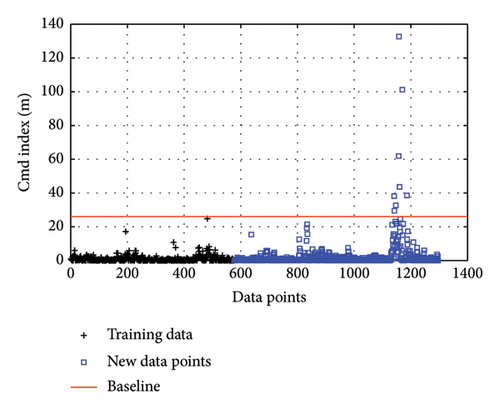

As shown in Figure 14, the training data represent the CMD index on both the upstream and downstream sides, while the new data were collected on the bridge over 1 month. Outliers appear in the newly collected CMD index data. As the index reaches values as large as 130 m, there is a clear anomaly in the CMD, indicating damage in the components at the girder end. A subsequent inspection of the viscous dampers by engineers found a malfunction caused by leakage of high-viscosity oil. The damper is illustrated in Figure 15. The malfunction degraded the damping of the device, which was reflected in the proposed CMD index.

6. Conclusions

1. The BNN model performs well, as evidenced by an R2 value exceeding 0.90 and the accompanying confidence estimates. The model captures the probabilistic nature of the data, which makes it valuable for decision-making and risk assessment across various applications.

2. The GEV distribution proves to be highly effective in capturing the underlying pattern of the CMD indicator. By accurately modeling the CMD with its parameters, the GEV distribution lays the groundwork for determining the threshold necessary for structural anomaly detection.

3. A reliable threshold for the CMD indicator is established through the proposed methodology and validated by the identification of malfunctioning viscous dampers in a real-world suspension bridge. This practical verification underscores the robustness and applicability of the proposed approach in real-world engineering contexts.

Note that the proposed two-stage framework and machine learning–based anomaly detection techniques leverage applicable concepts, such as BNNs and Mahalanobis distance, which can be adapted to various types of bridge structures. Future work will focus on validating this framework across a broader range of structures, including different bridge types and environmental conditions, to further substantiate its generalizability.

Nomenclature

- GEV: Generalized extreme value
- BDLM: Bayesian dynamic linear model
- MLE: Maximum likelihood estimation
- MAP: Maximum a posteriori
- KL: Kullback–Leibler
- SHM: Structural health monitoring
- BNNs: Bayesian neural networks
- KNN: K-nearest neighbors
- WIM: Weigh-in-motion
- PTFE: Polytetrafluoroethylene
- VAEs: Variational autoencoders
- MD: Mahalanobis distance
- GPS: Global positioning system
- CMD: Cumulative displacement
- MSE: Mean square error
- R2: Coefficient of determination

Disclosure

The interpretations and conclusions herein are solely the authors’ responsibility.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This study was financially supported by the National Key Research and Development Program of China under grant contract No. 2023YFC3805902 and Southeast University’s Start-up Research Fund (RF1028623304).

Acknowledgments

We thank the bridge monitoring company for their invaluable support.

Open Research

Data Availability Statement

The data that support this study are available on request from the corresponding author.