Facial Expression Recognition Method Based on Octonion Orthogonal Feature Extraction and Octonion Vision Transformer

Abstract

Facial expression recognition (FER) in natural scenes is a challenging topic in artificial intelligence. In recent years, vision transformer (ViT) models have been applied to FER tasks, but using the original ViT structure directly consumes substantial computational resources and requires long training times. To overcome these problems, we propose an FER method based on octonion orthogonal feature extraction and an octonion ViT. First, to reduce feature redundancy, we propose an orthogonal feature decomposition method that maps the extracted features onto seven orthogonal sub-features. Then, an octonion orthogonal representation method is introduced to correlate the orthogonal features, maintain the intrinsic dependencies between them, and enhance the model’s ability to extract features. Finally, an octonion ViT is presented, which reduces the number of parameters to approximately one-eighth of that of ViT while improving FER accuracy. Experimental results on three commonly used facial expression datasets show that the proposed method outperforms several state-of-the-art models with a significant reduction in the number of parameters.

1. Introduction

With the rapid development of AI and computer vision, automatic facial expression recognition (FER) systems are now widely applied in fields such as healthcare, transportation, education, and smart home technology. In the medical field, FER technology can help doctors monitor the emotional state of patients in real time, issue timely alerts to protect patients’ health, and record long-term emotional states to assist in disease management [1]. In classroom monitoring, FER systems can improve the quality of online teaching by tracking students’ emotional state and learning enthusiasm in real time, helping teachers adjust their teaching strategies to increase student engagement [2]. In summary, FER technology has significant research value and application potential across many fields.

The field of FER has seen remarkable advances in recent decades. From the early stages, when it faced many challenges, to the current rise of deep learning techniques, researchers have continually explored new methods and applications for FER. Datasets acquired under controlled laboratory conditions, such as CK+ [3] and JAFFE [4], have provided an important foundation for the field, but researchers are also aware of their limitations: facial expressions captured in controlled laboratory settings often lack the diversity and complexity observed in real-life scenarios. This has shifted the research focus toward large-scale datasets gathered from natural scenes, including AffectNet [5], FERPlus [6], and RAF-DB [7]. However, FER in natural scenes faces many challenges, including complex backgrounds, head pose changes, and facial occlusion. These factors not only affect the quality of the dataset but also make it difficult to train expression classification networks.

With the emergence of deep learning techniques, most expression recognition methods have adopted convolutional neural networks (CNNs) [8] as their foundation, leading to significant advancements in this field. However, these CNN-based methods often lack robustness and are susceptible to common problems in natural scene datasets. CNNs achieve local-to-global image feature extraction by superimposing convolutional layers, but the approach is computationally intensive and prone to gradient vanishing problems, making it difficult for the network to converge. In contrast, the transformer [9] network transforms feature images into sequences of visual features and uses a global self-attention mechanism to model the contextual relationships between the sequences. The approach is not limited by local interactions, allowing the network to learn the relationships among feature sequences with more powerful feature extraction capabilities from the global perspective.

In 2021, Dosovitskiy et al. [10] proposed the vision transformer (ViT), which has had a substantial impact on image recognition. Since its introduction, ViT has been widely applied in a variety of computer vision domains, including expression recognition. Although many studies have attempted to apply ViT to expression recognition tasks, using the original ViT structure directly tends to consume substantial computational resources [11], and its performance needs further improvement.

The main contributions of this paper are summarized as follows:

1. We propose an orthogonal feature decomposition method that maps extracted features to seven orthogonal sub-features to reduce feature redundancy.
2. We propose an octonion orthogonal representation to preserve the intrinsic relationship between different orthogonal features.
3. We extend ViT to the octonion field and build an octonion ViT module, which can capture rich local features while reducing the number of parameters to approximately one-eighth of that of ViT.
4. Experimental results on three commonly used facial expression datasets show that the proposed method outperforms several state-of-the-art models with a significant reduction in the number of parameters.

2. Related Work

Early traditional facial expression recognition methods achieved decent results on smaller-scale datasets. In the era of big data, however, these methods often demand significant time and computational resources to train on massive facial expression datasets, and their final recognition accuracy is difficult to improve. Recently, the accuracy and robustness of facial expression recognition have improved significantly with the advancement of deep learning algorithms. Compared to traditional methods, deep learning algorithms can automatically learn more abstract and higher-level feature representations, which capture the nuances of expressions better. In addition, deep learning algorithms can handle large-scale data, making them better suited to real-world facial expression recognition problems.

Many researchers have proposed expression recognition methods based on deep network models, especially the CNN, which extracts local image features through operations such as convolution and uses them to recognize expressions. The direct application of CNNs to FER tasks first appeared in 2012, when Rifai et al. [12] proposed using CNN models to extract emotional features from the pixels of a facial expression image and to map these features directly to the seven basic expression types at the network output. Yu and Zhang [13] proposed an FER method that integrates multiple deep neural networks by adaptively assigning a different weight to each network so that the integrated networks complement each other. Because a single CNN is not always good at extracting visual features from facial images, some work has combined feature extraction algorithms with CNNs to achieve better results. In 2017, Alphonse and Dharma [14] proposed an enhanced Gabor filter to extract expression features from images and applied discriminant analysis to the extracted features for classification, achieving a recognition accuracy of 35.40% on the outdoor-condition dataset SFEW. Zadeh et al. [15] used the Gabor filter for feature extraction and fed the feature images into a CNN for classification, obtaining a shorter training time and higher accuracy.

Mainstream deep learning methods typically center on directly extracting deep features through CNNs. However, the filters in CNNs work mainly through local neighborhood operations, which can cause the relationship between local and global features to be overlooked. In contrast, the transformer is a sequence model based on the attention mechanism that was originally used in natural language processing. Recently, the transformer has also been applied to various computer vision tasks, including image classification, object detection, and facial recognition. Unlike a CNN, the transformer employs the self-attention mechanism to establish attention-weighted relationships among image sequences, which better captures the global dependencies between features and leads to a more comprehensive understanding of the overall image information. However, since transformers lack a priori knowledge of features, they usually require richer datasets and longer training times; they therefore consume substantial computational resources and need further improvement.

Some recent works construct a hybrid model of a CNN and a transformer, using the feature maps processed by the CNN as the input of the transformer, and achieve good results. Ma et al. [16] first proposed applying a convolutional ViT to expression recognition: face images are transformed into visual vocabulary sequences so that expressions are recognized from a global point of view, and global and local facial information is summarized by an attentional selective fusion (ASF) module, which guides the backbone to extract the required information. Their experimental results demonstrate that the ViT yields much better classification results in the expression recognition task. Zhao et al. [11] proposed an FER framework based on geometric guidance that leverages graph convolutional networks and transformers to discern emotions conveyed in video form. The POSTER [17] method achieved the SOTA FER performance at the time by integrating facial key points and image features using a two-stream pyramid cross-fusion transformer network. Mao et al. [18] proposed the POSTER++ algorithm, which improves POSTER in three aspects: cross-fusion, two-stream design, and multiscale feature extraction.

In recent years, there has been increasing interest in neural networks that utilize multidimensional number field values. Several works [19–21] have demonstrated that quaternion theory can effectively uncover the intrinsic relationships among various components and significantly decrease the number of parameters. Gaudet and Maida [22] and Zhou et al. [19] used quaternion operations to process multichannel images, which can consider the spatial dependence between channels during feature extraction. Octonions, as another form of generalization of quaternions and complex numbers, have been widely used in mathematical physics, especially in electrodynamics, electromagnetism, and quantum physics [23–25], and have great potential in the field of neural networks. The aim of this study is to develop a method that combines octonion with ViT to reduce the number of model parameters and improve the accuracy of expression recognition.

3. Methods

3.1. The Proposed Framework

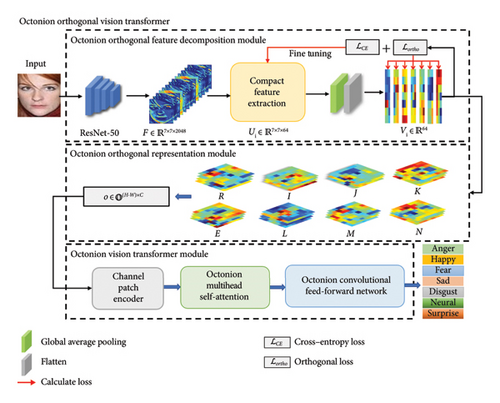

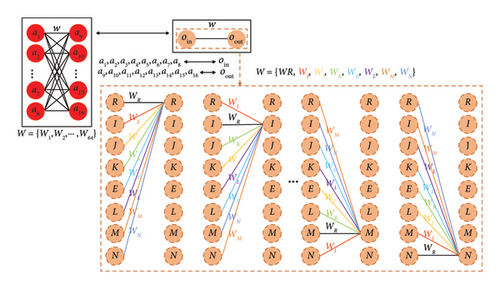

The architecture of the proposed model in this paper is illustrated in Figure 1. The model mainly consists of three parts: octonion orthogonal feature decomposition module, octonion orthogonal representation module, and octonion ViT module.

The original image is first fed into the octonion orthogonal feature decomposition module, where seven groups of orthogonal sub-features are obtained using a ResNet-50 model fine-tuned with an added orthogonal loss. Then, the octonion orthogonal representation module assembles the seven groups of orthogonal sub-features into an octonion matrix. Finally, the octonion matrix is fed into the octonion ViT module, which processes the octonion features and outputs the final FER results.

3.2. Octonion Orthogonal Feature Decomposition Module

Many FER-related tasks extract features from a pretrained backbone network. The pretrained model captures generic image features such as edges, textures, and shapes, which are useful in many image-related tasks. For facial expression recognition, these generic features can serve as a base that is adapted to the specific task by adding additional layers (e.g., fully connected layers) on top to achieve higher accuracy. In this paper, the ResNet-50 model described in this subsection is chosen as the pretrained backbone network.

To reduce redundancy among the features extracted from the pretrained backbone, and to strengthen the differences between features and enrich their diversity, this paper proposes an orthogonal feature extraction method. First, the expression feature tensor F is extracted from the original image using the pretrained ResNet-50. Then, the dimension of the feature tensor F is reduced using compact feature extraction to obtain the feature mappings Ui. Next, global average pooling and a flattening operation are applied to each Ui to obtain the feature vector Vi. Then, the orthogonal loss Lortho is calculated to ensure the independence of the feature vectors. Finally, the combined loss function L is formed by combining the cross-entropy loss LCrossEntropy and the orthogonal loss Lortho. The octonion orthogonal feature decomposition module is fine-tuned by minimizing the combined loss function L. The specific process is as follows.

Expression Feature Extraction: A model pretrained on the MS-Celeb-1M dataset provides face feature representations learned from a large-scale and diverse dataset. These representations help to capture rich and generic features in the original images, which can then be used for the subsequent expression recognition task. Therefore, the emotion feature tensor F is first extracted from the original image by the pretrained ResNet-50 model.

Compact Feature Extraction: At the end of the ResNet-50 network, seven separate 1 × 1 convolutional layers are added. The purpose of these convolutional layers is to reduce the dimensionality of the feature F by linear transformations while retaining important information. Each convolutional layer outputs a feature mapping Ui, where i takes values from 1 to 7, indicating seven different feature mappings.

Global Average Pooling and Softmax Operation: Global average pooling is applied to each Ui. This operation computes the average of each channel in the feature mapping, reducing the 7 × 7 × 64 tensor to a 1 × 1 × 64 tensor, which is then flattened into the feature vector Vi. The values of these feature vectors are then converted by the Softmax operation into probability distributions that are used to express the classification.
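The pooling and Softmax steps can be illustrated with a minimal pure-Python sketch. The helper names (`global_average_pool`, `softmax`) and the toy input are assumptions made for illustration; a real implementation would operate on batched framework tensors rather than nested lists.

```python
import math

def global_average_pool(feature_map):
    """Average an H x W x C feature map (nested lists) over H and W, giving C values."""
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])
    pooled = [0.0] * channels
    for row in feature_map:
        for pixel in row:
            for c, value in enumerate(pixel):
                pooled[c] += value
    return [total / (height * width) for total in pooled]

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    norm = sum(exps)
    return [e / norm for e in exps]

# Toy 7 x 7 x 64 map (hypothetical data): channel c holds the constant c / 64.
u_i = [[[c / 64 for c in range(64)] for _ in range(7)] for _ in range(7)]
v_i = global_average_pool(u_i)   # 64 values, one per channel
p_i = softmax(v_i)               # non-negative values that sum to 1
```

Because every spatial position of a channel is averaged into a single number, the 7 × 7 spatial layout is discarded and only one value per channel remains.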

Orthogonal Loss: The orthogonal loss Lortho is introduced to ensure that the feature vectors Vi obtained from the different branches are mutually orthogonal, i.e., mathematically independent of one another. This helps the model to capture more diverse features, reduce redundancy, and improve feature representation.

In the combined loss function, LCrossEntropy quantifies the disparity between the model prediction and the actual label. The hyperparameter λ adjusts the relative impact of the cross-entropy loss and the orthogonal loss within the total loss function, so that the importance of orthogonality in model training can be controlled. By simultaneously optimizing classification accuracy and feature orthogonality, the combined loss function improves generalization ability and model interpretability: the model learns more robust and less redundant feature representations while maintaining high classification performance. The seven orthogonal sub-features Ui obtained from the fine-tuned backbone network are then input into the octonion orthogonal representation module.
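A minimal sketch of how such a loss could be assembled is given below. The exact formulas are not reproduced in this text, so two choices here are assumptions: the orthogonality penalty is taken as the mean squared cosine similarity over all distinct pairs of feature vectors, and the combined loss is taken as the additive form L = LCrossEntropy + λ · Lortho. All function names are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def orthogonal_loss(vectors):
    """Mean squared cosine similarity over distinct pairs (assumed form of L_ortho)."""
    pairs = [(i, j) for i in range(len(vectors)) for j in range(i + 1, len(vectors))]
    return sum(cosine(vectors[i], vectors[j]) ** 2 for i, j in pairs) / len(pairs)

def cross_entropy(probs, label):
    """Negative log-probability assigned to the true class."""
    return -math.log(probs[label])

def combined_loss(probs, label, vectors, lam):
    """Assumed additive form: L = L_CrossEntropy + lam * L_ortho."""
    return cross_entropy(probs, label) + lam * orthogonal_loss(vectors)

# Toy vectors (hypothetical): the first set is orthogonal, the second repeats a direction.
orthogonal = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
redundant = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

loss_orthogonal = orthogonal_loss(orthogonal)   # 0.0: no pair overlaps
loss_redundant = orthogonal_loss(redundant)     # 1/3: one of three pairs is parallel
```

Under this assumed form, the penalty vanishes only when every pair of vectors is orthogonal, and a larger λ shifts the optimization toward decorrelated sub-features at the expense of the classification term.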

3.3. Octonion Orthogonal Representation Module

The primary goal of the proposed octonion orthogonal representation module is to establish correlations among the orthogonal features, thereby enhancing the efficiency of information extraction. The resulting octonion orthogonal matrix O is then input into the octonion ViT module to classify facial expressions.
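For readers unfamiliar with octonions, the following sketch shows octonion multiplication built with the Cayley-Dickson construction, in which an octonion is a pair of quaternions and (a, b)(c, d) = (ac − d*b, da + bc*). The basis ordering produced by this construction is one common convention and is an assumption here; it may differ from the multiplication table used by the authors.

```python
def conjugate(x):
    """Cayley-Dickson conjugate: keep the real part, negate every imaginary part."""
    if len(x) == 1:
        return list(x)
    half = len(x) // 2
    return conjugate(x[:half]) + [-v for v in x[half:]]

def add(x, y):
    return [p + q for p, q in zip(x, y)]

def sub(x, y):
    return [p - q for p, q in zip(x, y)]

def multiply(x, y):
    """Product of two length-8 lists via (a, b)(c, d) = (ac - d*b, da + bc*)."""
    if len(x) == 1:
        return [x[0] * y[0]]
    half = len(x) // 2
    a, b = x[:half], x[half:]
    c, d = y[:half], y[half:]
    first = sub(multiply(a, c), multiply(conjugate(d), b))
    second = add(multiply(d, a), multiply(b, conjugate(c)))
    return first + second

def unit(k):
    """Basis octonion e_k (e_0 is the real unit)."""
    return [1 if i == k else 0 for i in range(8)]

e1, e2, e4 = unit(1), unit(2), unit(4)
left_grouping = multiply(multiply(e1, e2), e4)    # (e1 e2) e4
right_grouping = multiply(e1, multiply(e2, e4))   # e1 (e2 e4)
```

The two groupings differ in sign, which shows that octonion multiplication is not associative. More relevant to this module, each output component of a product depends on all eight components of both operands, which is the mechanism by which octonion layers can couple the sub-features stored in different components.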

3.4. Octonion ViT Module

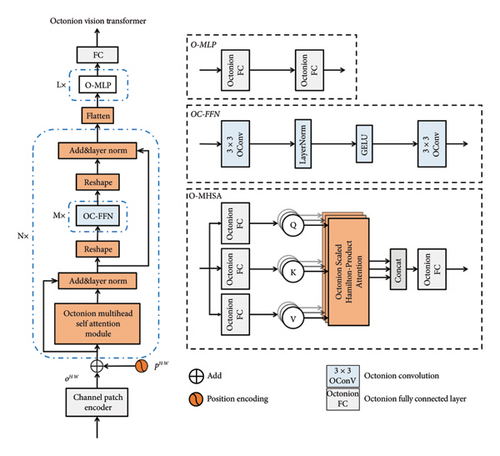

The general structure of the octonion ViT follows the ViT architecture but replaces the original ViT operations with octonion operations, including octonion fully connected layers and octonion convolutional layers, and introduces several key enhancements in critical modules. These improvements encompass the channel patch encoder, the octonion multihead self-attention (O-MHSA) module, and the octonion convolutional feed-forward network (OC-FFN) module. The complete architecture is illustrated in Figure 2, where the hyperparameters N, M, and L denote the number of individual modules.

3.4.1. Channel Patch Encoder

The channel patch encoder is distinguished from the patch encoder of ViT by dividing the feature map into multiple patches along channel dimensions, and the exact computational procedure for the channel patch encoder module is as follows.

The final output sequence o‴ is fed into the O-MHSA module for further processing.

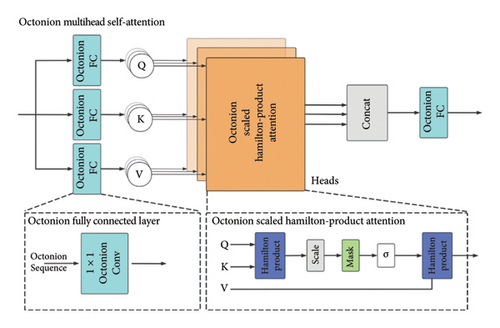

3.4.2. O-MHSA

The O-MHSA module is constructed from octonion operations. Its specific structure is shown in Figure 3.

Ultimately, the octonion feature extracted by the O-MHSA module is forwarded to the OC-FFN module for further processing.

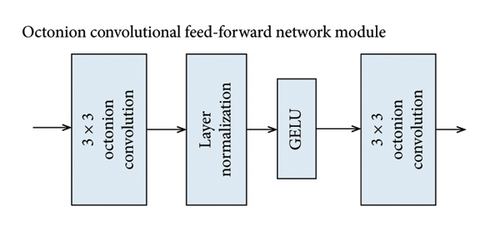

3.4.3. OC-FFN

To enhance the capability of capturing localized features, the octonion convolution operation is integrated into the OC-FFN module.

In the octonion ViT module, the octonion features are normalized by layer normalization before entering the OC-FFN module. The specific structure of the octonion convolutional feedforward network is shown in Figure 4. The OC-FFN module consists of octonion convolution, layer normalization, and GELU activation function. This design aims to fully utilize the properties of octonions to improve the expressiveness and performance of the network for data with complex structures and high-dimensional features.

During the classification phase, the octonion matrix Xout is reconstructed and then processed through an Add layer and a LayerNorm layer. The octonion features are then flattened into a one-dimensional vector, which is further processed by the octonion multilayer perceptron (O-MLP) and the fully connected layer to produce the final classification.

4. Experimental Results

4.1. Experimental Detail and Environment Setup

The network model is implemented in Python using the PyTorch deep learning library. The hardware configuration includes an NVIDIA GeForce RTX 3090 GPU. Specific details of the hardware and software environment are provided in Table 1. The model is trained using the Adam optimizer. The batch size is set to 12, the initial learning rate is set to 0.00001, the weight decay parameter is set to 0.0001, and the learning batch is set to 200. During the experiments, the hyperparameters of the model are configured as follows: M = 2 for the number of OC-FFN modules, L = 2 for the number of O-MLP modules, and N = 4 for the number of Encoder modules.

| Hardware and software equipment | Model and specification |
|---|---|
| Graphics card | NVIDIA GeForce RTX 3090 GPU |
| VRAM | 24 GB |
| Internal storage | 80 GB |
| Python | 3.8 |
| CUDA | 11.3 |
| PyTorch | 1.10 |

4.2. Accuracy Comparison With Other SOTA Methods

Table 2 lists the accuracies of the model proposed in this paper and the FER methods in the last 3 years on the RAF-DB dataset. The RAF-DB dataset contains seven basic expressions, and as with the other methods, the experiments evaluate the effectiveness of the network by recognizing the seven basic emotions. The experimental results show that the proposed model has the highest recognition rate on the RAF-DB dataset, reaching 90.43%.

A comparison with other FER methods on the SFEW 2.0 dataset is shown in Table 3. On the SFEW 2.0 dataset, our method achieved an FER accuracy of 62.28% for the seven expression categories, which is 3.39 percentage points higher than AHA [35] and 0.12 percentage points higher than FDRL [39]. Compared with previous methods, the proposed model shows better generalization ability on this dataset.

Table 4 lists the test results of the model proposed in this paper and of FER methods from the last 3 years on the AffectNet dataset. Comparisons are made with many SOTA facial expression methods, including DAN [33], TransFER [41], DACL [40], EAC [34], APViT [38], POSTER [17], DDAMFN [42], POSTER++ [18], and S2D [43]. TransFER [41] designed a multiattention dropping (MAD) algorithm that drops attention maps, pushing the model to extract comprehensive local information from facial parts other than the most discriminative ones. POSTER++ [18] improves POSTER in terms of cross-fusion, two-stream design, and multiscale feature extraction. The experimental results show that the proposed model achieves an accuracy of 67.74% on the AffectNet dataset, which is 2.05 percentage points higher than DAN [33], 1.51 percentage points higher than TransFER [41], and 0.25 percentage points higher than POSTER++ [18].

4.3. Visual Analysis

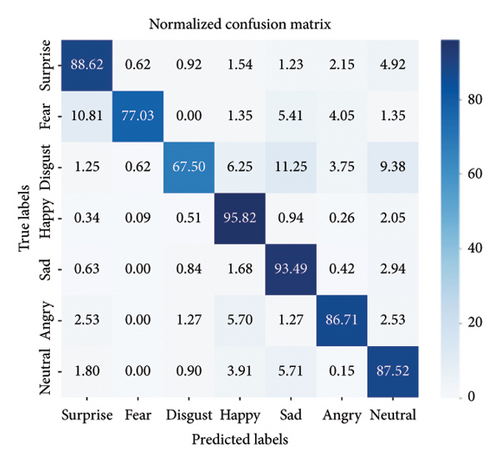

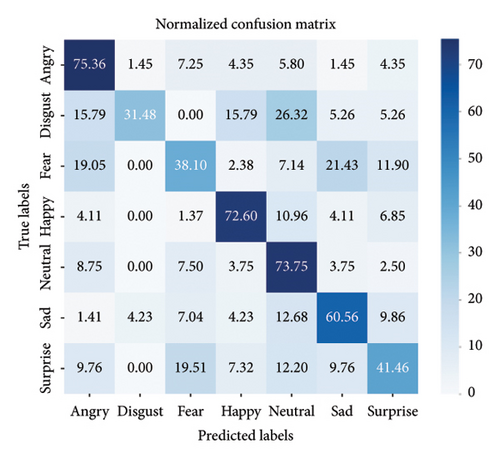

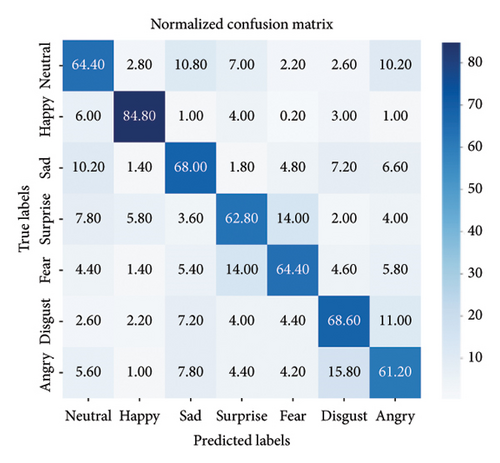

Overall accuracy alone is not entirely convincing in classification tasks, especially when the classes are imbalanced, because it does not show the performance on each category explicitly. Class imbalance in FER can be examined with confusion matrices, which reveal how classification performance differs between expressions. Figure 5 shows the confusion matrices obtained with the proposed model on the three datasets, which help to evaluate the performance of the model.
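The point about imbalance can be made concrete with a small sketch. The labels below are invented toy data, not results from this paper, and the helper names are hypothetical; rows of the matrix are true classes and columns are predicted classes.

```python
def confusion_matrix(true_labels, predicted_labels, num_classes):
    """Count matrix with rows indexed by true class and columns by predicted class."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(true_labels, predicted_labels):
        matrix[t][p] += 1
    return matrix

def per_class_recall(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]

def accuracy(matrix):
    """Overall fraction of correct predictions (diagonal mass)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

# Toy imbalanced data (hypothetical): eight samples of class 0, two of class 1.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

cm = confusion_matrix(y_true, y_pred, num_classes=2)
overall = accuracy(cm)          # 0.9
recalls = per_class_recall(cm)  # [1.0, 0.5]
```

Here the overall accuracy of 0.9 hides the fact that half of the minority class is misclassified, which is exactly the kind of effect the per-category view of a confusion matrix exposes.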

The confusion matrices show that, in general, our method achieves its highest performance on the “happy” category but still confuses some expression categories. On RAF-DB, the model’s accuracy is high for the “happy,” “surprise,” “sad,” and “neutral” categories. On the SFEW 2.0 dataset, the proposed model had difficulty recognizing the “fear” and “disgust” categories: “fear” is easily confused with “angry” and “sad,” while “disgust” is easily confused with “neutral.” This confusion may be caused by the lack of “fear” and “disgust” samples in the training set. On the AffectNet dataset, the performance of our method on the “angry” category was relatively low because it was often confused with “disgust,” and “fear” was sometimes recognized as “surprise.” This confusion may stem from the shared and overlapping signals used to convey these facial expressions, which make some expressions appear very similar and therefore harder to distinguish accurately.

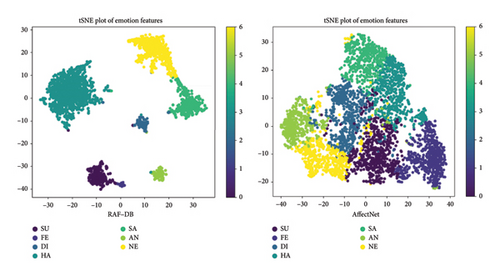

The high-dimensional features learned by our method are visualized using t-SNE, as shown in Figure 6. The t-SNE visualization of the RAF-DB dataset exhibits clear clustering and significant separation between groups: points of different colors lie relatively far apart, while points of the same color are tightly clustered in the low-dimensional space. This indicates that samples of the same class have similar, tightly distributed features in the high-dimensional space, and it further supports the capability of our method in facial expression classification. On the AffectNet dataset, the points of different colors lie relatively close to one another, which is due to the large sample size and the imbalanced distribution of samples. Nevertheless, the proposed method still achieves competitive performance on this dataset.

4.4. Analysis of Model Parameter Quantity

Smaller models have lower memory and computational power requirements and are critical for applications with high real-time requirements. Such models are often able to complete training faster, which is especially beneficial when dealing with large datasets or when time is of the essence. In addition, models with fewer parameters are less likely to overfit the training data, and when the model becomes too complex, there is a tendency to memorize training examples rather than learn generic patterns. In contrast, simpler models are more likely to capture the underlying structure of the data and successfully generalize to new, unforeseen examples.

Table 5 compares the model size of our method with that of different methods and also compares their performance on the RAF-DB and AffectNet datasets. The proposed model has 6.69M parameters and requires 10.08M FLOPs, which corresponds to a much lower parameter size and computational complexity than the other models, and it achieves excellent FER performance on the RAF-DB and AffectNet datasets, with accuracies of 90.43% and 67.74%, respectively.

| Methods | Params | FLOPs | RAF-DB accuracy (%) | AffectNet accuracy (%) |
|---|---|---|---|---|
| ViT [10] | 21.59M | 21.58M | 74.76 | 51.14 |
| RAN-ResNet18 [44] | 11.19M | 14.55G | 86.90 | 59.50 |
| SCN [45] | 11.18M | 1.82G | 87.03 | 60.23 |
| VTFF [27] | 51.8M | — | 88.14 | 61.85 |
| MA-Net [31] | 50.54M | 3.65G | 88.40 | 64.53 |
| PSR [46] | 20.24M | — | 88.98 | 63.77 |
| DMUE [47] | 78.4M | — | 89.42 | 63.11 |
| DAN [33] | 19.72M | 2.23G | 89.70 | 65.69 |
| Our method | 6.69M | 10.08M | 90.43 | 67.74 |

To facilitate comparison with the traditional ViT model, a real-valued ViT∗ model is constructed with the same structure as the Octonion-ViT∗ model. The input vectors for both models are of size (512, 49). The notation ∗ indicates specific hyperparameter settings (N = 1, M = 2, L = 1) in the octonion-ViT∗ model, chosen to enable intuitive comparisons in the table.

Table 6 provides a layer-by-layer comparison of the parameter counts of the two models. The parameters of both the octonion convolution layers and the octonion dense layers are reduced to approximately 1/8 of those of the corresponding ViT layers, whereas the O-MHSA layer has more parameters than the regular multihead self-attention layer. Because this layer has few parameters, its impact on the total is minimal. Overall, the octonion ViT module significantly reduces the model’s parameter count, achieving a reduction of approximately 87.5% compared to the real-valued ViT model.

| Layer | Octonion-ViT∗ layer | Params | ViT∗ layer | Params |
|---|---|---|---|---|
| 1 | Input layer | 0 | Input layer | 0 |
| 2 | Channel patch encoder | 26,976 | Channel patch encoder | 26,976 |
| 3 | Layer normalization | 96 | Layer normalization | 96 |
| 4 | O-MHSA | 1344 | MHSA | 480 |
| 5 | Add | 0 | Add | 0 |
| 6 | Layer normalization | 96 | Layer normalization | 96 |
| 7 | Reshape | 0 | Reshape | 0 |
| 8 | Octonion convolutional layer | 5280 | Convolutional layer | 41,568 |
| 9 | Layer normalization | 192 | Layer normalization | 192 |
| 10 | Activation | 0 | Activation | 0 |
| 11 | Octonion convolutional layer | 10,464 | Convolutional layer | 83,040 |
| 12 | Octonion convolutional layer | 5232 | Convolutional layer | 41,184 |
| 13 | Layer normalization | 96 | Layer normalization | 96 |
| 14 | Activation | 0 | Activation | 0 |
| 15 | Octonion convolutional layer | 2640 | Convolutional layer | 19,008 |
| 16 | Reshape | 0 | Reshape | 0 |
| 17 | Add | 0 | Add | 0 |
| 18 | Layer normalization | 96 | Layer normalization | 96 |
| 19 | Flatten | 0 | Flatten | 0 |
| 20 | Dropout | 0 | Dropout | 0 |
| 21 | Octonion dense layer | 6,293,504 | Dense layer | 50,333,696 |
| 22 | Dropout | 0 | Dropout | 0 |
| 23 | Octonion dense layer | 263,168 | Dense layer | 2,098,176 |
| 24 | Dropout | 0 | Dropout | 0 |
| 25 | Dense | 7175 | Dense | 7175 |
| | Total parameters | 6,616,359 | Total parameters | 53,651,879 |

The low parameter count of the octonion ViT module can be explained in terms of the octonion algebra, as shown in Figure 7. For a dense layer with 1024 input values and 1024 hidden units, the real-valued model would have 1024² ≈ 1M parameters. To maintain an equal number of input and output nodes (1024), the equivalent octonion model would have 128 octonionic inputs and 128 octonionic hidden units. As a result, the number of parameters in the octonion layer would be 128² × 8 ≈ 0.125M, which is 87.5% less.
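The arithmetic in this example can be checked with a short sketch. The function names are hypothetical, and biases are ignored, as in the example above.

```python
def real_dense_params(n_in, n_out):
    """Weights of a real-valued dense layer: one scalar per (input, output) pair."""
    return n_in * n_out

def octonion_dense_params(n_in, n_out):
    """Weights of an octonion dense layer: real inputs and outputs are grouped
    in blocks of 8, and each (input block, output block) pair shares one
    octonion weight, i.e. 8 real parameters."""
    if n_in % 8 or n_out % 8:
        raise ValueError("dimensions must be multiples of 8")
    return (n_in // 8) * (n_out // 8) * 8

real = real_dense_params(1024, 1024)           # 1,048,576
octonion = octonion_dense_params(1024, 1024)   # 131,072
ratio = octonion / real                        # 0.125
```

The saving comes from weight sharing: a real layer uses 64 independent scalars to connect a block of eight inputs to a block of eight outputs, whereas an octonion layer reuses the 8 components of a single octonion weight across that block through the octonion product.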

4.5. Ablation Study

To validate the effectiveness of each module in the proposed model, we conducted ablation experiments on the RAF-DB dataset for the octonion module, the ViT module, and the orthogonal feature module. The accuracy and parameter counts of the compared models are listed in Table 7.

| Methods | Accuracy (%) | Params | FLOPs |
|---|---|---|---|
| Octonion-ViT | 76.01 | 6.69M | 10.08M |
| Ortho-CNN | 88.23 | 23.90M | 4.15G |
| Ortho-ViT | 88.38 | 21.59M | 21.58M |
| Our method | 90.43 | 6.69M | 10.08M |

First, the orthogonal feature module was removed from the proposed model to construct Octonion-ViT for comparison. The experimental results show that the orthogonal feature module improves the accuracy of the model by 14.42 percentage points. Second, the ViT module was removed from the proposed model to construct the Ortho-CNN model. The results show that the ViT module in the proposed model improves the accuracy by 2.20 percentage points and reduces the number of parameters by 17.21M, while the computational complexity drops from 4.15G to 10.08M FLOPs. Finally, the octonion module was removed from the proposed model to construct Ortho-ViT. Without the octonion module, the accuracy decreases by 2.05 percentage points, and the number of parameters and the computational complexity increase to 21.59M and 21.58M, respectively. This demonstrates that the octonion module can effectively represent high-dimensional data, enhancing the expressiveness and performance of the model. Overall, the experimental results confirm the effectiveness of each module in the proposed model.
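The differences quoted in this paragraph follow directly from the ablation table and can be reproduced with a few lines. The dictionary layout and the function name `gain_over` are illustrative choices; the numbers are copied from the table.

```python
# Accuracy (%) and parameter counts (millions) copied from the ablation table.
results = {
    "Octonion-ViT": {"acc": 76.01, "params_m": 6.69},
    "Ortho-CNN": {"acc": 88.23, "params_m": 23.90},
    "Ortho-ViT": {"acc": 88.38, "params_m": 21.59},
    "Our method": {"acc": 90.43, "params_m": 6.69},
}

def gain_over(baseline):
    """Accuracy gain (percentage points) and parameters saved (millions)
    of the full model relative to an ablated variant."""
    full = results["Our method"]
    base = results[baseline]
    return {
        "acc_gain": round(full["acc"] - base["acc"], 2),
        "params_saved_m": round(base["params_m"] - full["params_m"], 2),
    }

without_ortho = gain_over("Octonion-ViT")   # orthogonal feature module removed
without_vit = gain_over("Ortho-CNN")        # ViT module removed
without_octonion = gain_over("Ortho-ViT")   # octonion module removed
```

Rounding to two decimals only removes floating-point noise; the underlying values are the two-decimal figures reported in the table.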

5. Conclusion

In this paper, we introduce an FER method based on octonion orthogonal feature extraction and an octonion ViT. The octonion orthogonal feature decomposition module, octonion orthogonal feature representation module, and octonion ViT module proposed in this method improve the accuracy of FER in natural scenes while keeping the number of parameters low. The experimental results and analysis show that the accuracies of the proposed model on SFEW 2.0, AffectNet, and RAF-DB are 62.28%, 67.74%, and 90.43%, respectively, with only 6.69M parameters. Ablation experiments verify the effectiveness of each module of the model. Compared with other models, the proposed model shows superior performance in FER in natural scenes.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the General Project for Education of National Social Science Fund, Study on the Mechanism of Emotional Engagement and its Intervention in Primary and Secondary School Teachers’ online Training (Grant Number: BCA230278).

Open Research

Data Availability Statement

The data used to support the findings of this study are included within the article.