Artificial Intelligence for Text Analysis in the Arabic and Related Middle Eastern Languages: Progress, Trends, and Future Recommendations

Abstract

Over the last 10 years, the volume of Arabic text has grown rapidly, which calls for a deeper understanding of the algorithms used to analyze and classify Arabic text in applications such as sentiment analysis. This paper presents a comprehensive review of recent developments in Arabic text classification (ATC) and Arabic text representation (ATR) and analyzes the effectiveness of various models and techniques. Our review finds that while deep learning models, particularly transformer-based architectures, are increasingly effective for ATC, dialectal variation and insufficient labeled datasets remain key obstacles, and developing suitable representation models and classification algorithms is still challenging for Arabic. The survey provides a basic introduction to ATC, covering preprocessing, representation, dimensionality reduction (DR), and classification, together with the evaluation metrics in common use. In addition, it includes a qualitative and quantitative study of existing ATC work. Finally, we discuss the limitations of existing methods and outline open challenges that can help researchers identify new directions for ATC.

1. Introduction

Nearly 447 million people speak Arabic as their first language. It is one of the world’s most widely spoken languages and is regarded as the fourth official language of the United Nations (UN) [1, 2]. The growing number of users increases the volume of Arabic textual data generated daily, and extracting information from such large volumes is challenging, especially for Arabic text (AT). Therefore, AT must be preprocessed to remove words that carry little meaning, reduce words to their roots, and eliminate noise, all of which improve the performance of Arabic text classification (ATC) [3].

The process of cleaning and preparing text for further processing is known as preprocessing. It is the preliminary step in any text classification pipeline. Specific preprocessing methods and algorithms are required to extract useful patterns from unstructured Arabic textual data. Preprocessing for AT includes many techniques such as white space removal, lemmatization, stemming, and stop-word removal. There are several preprocessing techniques that have been used to enhance the performance of ATC. However, most of the available techniques are still not able to cover all the requirements to prepare AT for further processing due to the complexity of AT [2].

Representation and feature engineering (selection and extraction) form the second step in the ATC pipeline. The efficiency of subsequent natural language processing (NLP) tasks is strongly influenced by the quality of these techniques [4]. Representation is the process of converting unstructured text documents into a structured equivalent that machine learning (ML) algorithms [5, 6] can process [7]. Several feature extraction techniques, including bag-of-words (BoW) [8], term frequency–inverse document frequency (TF–IDF) [9], term class relevance (TCR) [10], term class weight–inverse class frequency (TCW–ICF) [10, 11], symbolic representation, and N-gram features, have been used for feature representation. In addition, text can be represented at different levels, such as the character, word, and phrase levels [8, 9].
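As a minimal sketch of the TF–IDF weighting named above, the following computes term weights using the common formulation tf × log(N/df). The toy corpus and the function name `tf_idf` are illustrative assumptions rather than material from the surveyed works, and other TF–IDF variants (with smoothing or length normalization) are also in use.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document.

    Uses raw term frequency times log(N / df); other variants exist.
    """
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights

# Hypothetical toy corpus of pre-tokenized documents.
# Document 1 reads "Ali [is a] teacher"; document 2 reads "Ali [is a] doctor".
corpus = [
    ["علي", "مدرس"],
    ["علي", "طبيب"],
]
weights = tf_idf(corpus)
```

In this toy corpus, the term shared by both documents receives a weight of zero, while the terms unique to one document receive a positive weight, which is the discriminative behavior TF–IDF is designed to provide.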

Most researchers have used TF–IDF or BoW, which are inherently problematic because they ignore word order and the semantic meaning of the sentence. As a result, different sentences can receive the same vector when they contain the same words in a different sequence, for example, علي مدرس (which means “Ali is a teacher”) and أعلي مدرس؟ (which means “Is Ali a teacher?”). These techniques are inexpensive in terms of memory and storage, but they lose semantic meaning. To overcome these limitations, many other techniques have been proposed, for example, Word2Vec [10], GloVe (https://nlp.stanford.edu/projects/glove/) [11], and contextualized word representations [12].
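The loss of word order can be illustrated with a short sketch. The two sentences below are hypothetical examples chosen for this illustration (they are not taken from the surveyed works), and `bag_of_words` is an assumed helper name.

```python
from collections import Counter

def bag_of_words(tokens, vocabulary):
    """Map a token list to a count vector over a fixed vocabulary."""
    counts = Counter(tokens)
    return [counts[term] for term in vocabulary]

# Hypothetical example: the same three words in two different orders.
# sentence_a reads "Ali likes Mohammed"; sentence_b reads "Mohammed likes Ali".
sentence_a = ["علي", "يحب", "محمد"]
sentence_b = ["محمد", "يحب", "علي"]
vocabulary = sorted(set(sentence_a) | set(sentence_b))

vector_a = bag_of_words(sentence_a, vocabulary)
vector_b = bag_of_words(sentence_b, vocabulary)

# Word order is discarded, so both sentences map to the same vector.
same_vector = vector_a == vector_b
```

Although the two sentences have opposite meanings, a classifier that only sees these count vectors cannot tell them apart.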

Text categorization is the process of determining whether a text belongs to one of several predefined categories based on its meaning [13, 14]. Once a given text has been represented, a classifier is needed to assign AT to classes [15]. Many ML algorithms, such as decision trees (DT) [16, 17], Naive Bayes (NB) [18, 19], support vector machines (SVM) [20, 21], and artificial neural networks (ANN) [21, 22], have been used for ATC. However, achieving high performance is still a real challenge. Therefore, in this survey, we perform a comprehensive taxonomy study of ATC to identify the strengths and weaknesses of existing work.

Given the growing demand for accurate ATC in domains such as healthcare, finance, and e-commerce, it is essential to explore effective techniques, address linguistic challenges, and mitigate ethical concerns. This study aims to provide a comprehensive taxonomy survey of ATC, analyze existing approaches, and highlight open research challenges and future directions to improve the field. Due to the limited research on ethical considerations and bias in ATC, existing studies have not sufficiently addressed this aspect, making it a key future challenge for researchers. Therefore, there is a pressing need to advance research in fairness, transparency, and explainability in Arabic NLP systems to ensure the development of more equitable and accurate models that meet the requirements of various real-world applications.

1.1. Motivation

- The number of Arabic users and the volume of Arabic text generated in many domains are increasing, especially since COVID-19.
- Many researchers still use traditional representation techniques such as BoW, which do not work well with huge data.
- Little research has been conducted on AT compared with other languages, such as English.
- Tools and applications for the Arabic language are lacking.
- Many non-Arab people speak and use Arabic as a second language, and they outnumber native speakers; therefore, studying these limitations and finding solutions to these problems and challenges will help many people.

1.2. Contributions

- •

A comprehensive review of available studies and existing surveys in ATC, focusing on their objective, scopes, and research gaps.

- •

Explores the architecture of ATC and ATR.

- •

A comparative study of ATC stages such as preprocessing, representation, feature engineering, and classification.

- •

A comparative study of seven ATC and ATR models to evaluate their performance through an experimental analysis using the AlKhaleej dataset.

- •

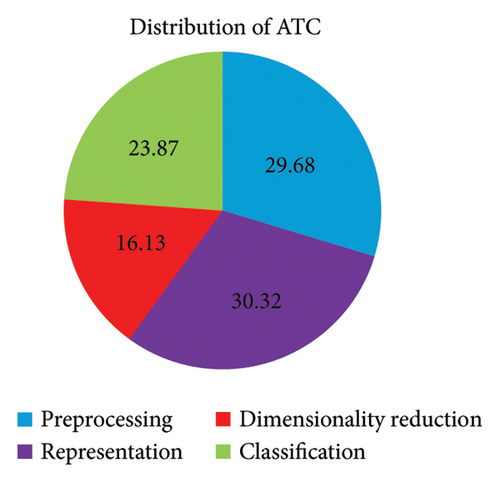

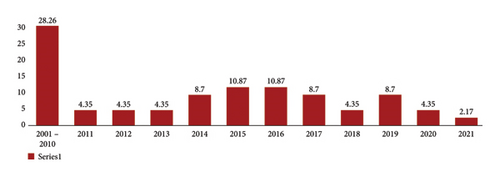

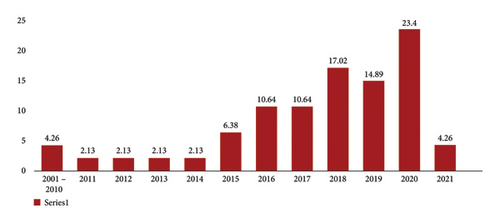

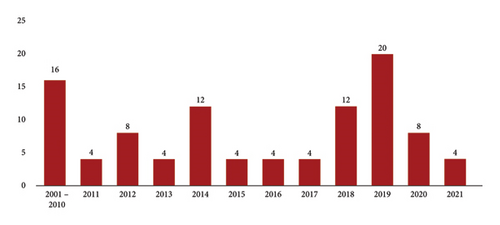

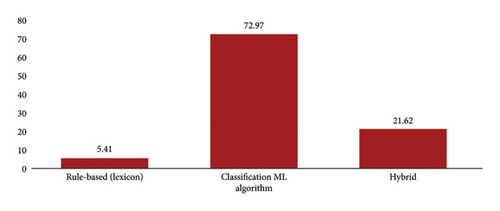

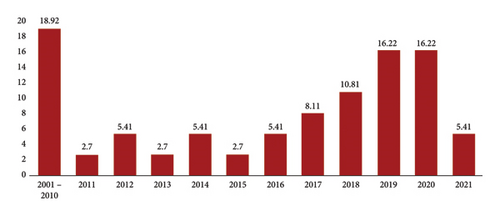

A quantitative analysis of the proposed techniques for ATC based on publication year and categories.

- •

Review and mention the available datasets and open-source libraries.

- •

Implementation and discussion for seven models based on preprocessing, feature selection, feature extraction, and classification algorithms such as NB and SVC to evaluate their performance.

- •

A qualitative analysis of the ATC and ATR models based on their strengths and weaknesses.

- •

An overview of current challenges and future research work after quantitative analysis.

While there have been several surveys on ATC and ATR, most of them focus on limited aspects such as preprocessing techniques or specific classification algorithms. This work offers a broader perspective by providing a comprehensive taxonomy that encompasses all stages of ATC, including preprocessing, representation, dimensionality reduction (DR), and evaluation. Furthermore, it combines qualitative and quantitative analyses, which offer deeper insights into the strengths and limitations of existing methods. Unlike previous works, this survey also emphasizes the challenges specific to Arabic, such as its complex morphology and dialectal variation, and provides actionable recommendations for overcoming them. To the best of our knowledge, such a holistic approach has not been addressed in the existing literature, making this study a novel contribution to the field. In addition, this article aims to make it easier to learn cutting-edge methodologies for ATC, and it identifies prospective research gaps, helping researchers choose their research directions and opening the path for new approaches.

1.3. Organization of the Paper

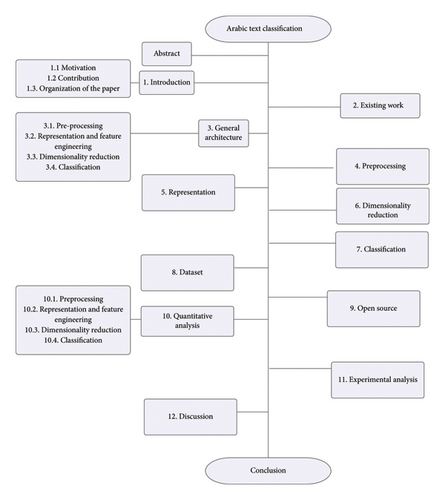

The organization of this survey is as follows: Section 2 studies and compares the existing surveys. Section 3 discusses the background and general architecture of the ATC model. The main steps of ATC are explored and analyzed in Sections 4, 5, 6, 7, and 8. The tools and open-source libraries are presented in Section 9. The quantitative analysis is highlighted in Section 10. The experimental analysis is presented in Section 11. The discussion and open challenges are highlighted in Sections 12 and 13. Finally, the conclusion section closes the survey. For clarity, Figure 1 illustrates this taxonomy as a mind map created with Lucidchart.

2. Existing Surveys

One of the pivotal goals of this article is to examine the existing surveys on ATC. Several reviews and surveys have been published; however, most of them do not study each step of the pipeline individually. This section therefore reviews the prior surveys and compares them with the present taxonomy. As shown in Table 1, the existing reviews and surveys on ATC did not consider all stages and aspects, as our study does.

| Ref. | Year | Preprocessing | Feature extraction | Classification | Qualitative analysis | Taxonomy | Experimental analysis | Quantitative analysis | Evaluation metrics |
|---|---|---|---|---|---|---|---|---|---|
| [23] | 2016 | ✓ | ✓ | ✓ | | | | | |
| [24] | 2017 | ✓ | ✓ | ✓ | | | | | |
| [25] | 2017 | ✓ | ✓ | ✓ | | | | | |
| [26] | 2018 | ✓ | | | | | | | |
| [27] | 2019 | ✓ | ✓ | ✓ | | | | | |
| [28] | 2019 | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| [29] | 2019 | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| [30] | 2019 | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| [31] | 2020 | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| [32] | 2020 | ✓ | ✓ | ✓ | ✓ | | | | |
| [2] | 2021 | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| [33] | 2022 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| [34] | 2023 | ✓ | ✓ | ✓ | ✓ | | | | |
| This work | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

A critical review of the methodologies revealed that while traditional techniques such as BoW and TF–IDF excel in simplicity and efficiency, they struggle with sparsity and fail to capture semantic relationships in AT. Similarly, deep learning (DL) methods, particularly transformer-based models like BERT, show promising results but require substantial training data, often unavailable for dialectical Arabic. The reviewed studies highlight a recurring limitation: the inability of existing models to adapt to Arabic’s morphological complexity and dialectical diversity. Addressing these challenges necessitates the development of more context-aware models and larger annotated datasets.

3. General Architecture of ATC

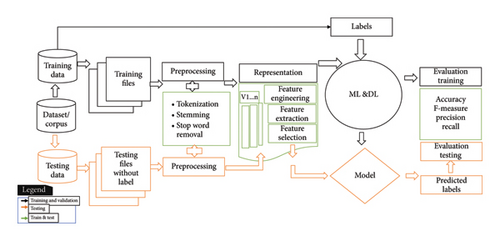

This part describes the entire ATC workflow, as shown in Figure 2, and gives a brief overview of preprocessing, representation, DR and feature engineering, and classification in Sections 3.1, 3.2, 3.3, and 3.4, respectively.

3.1. Preprocessing

The process of cleaning and preparing the text for subsequent processing is known as preprocessing. It is the initial step in the text categorization pipeline [35]. Tokenization, stop-word removal, and stemming are only a few of the methods for text preparation. Tokenization segments a document into smaller units (tokens), typically by splitting on white space and special characters. Stop words are general terms that serve a grammatical function but carry minimal meaning and do not reveal the subject matter. Many other techniques exist as well [36].

3.2. Representation

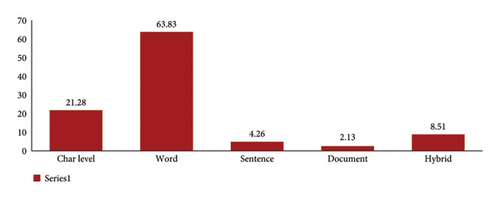

Text representation is a crucial stage in any text classification model. It transforms unstructured text into a structured form that ML algorithms [37, 38] can process. Text can be represented at different levels, such as the character, word, phrase, sentence, and document levels. Representation is not the only important factor; feature engineering (selection and extraction) is also significant in making the ATC system work efficiently and effectively.

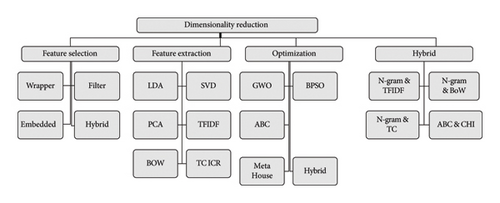

3.3. DR and Feature Engineering

DR is employed to reduce the dimensionality of the input feature space. There are various methods for doing so, such as feature selection (wrapper, embedded, filter), ensemble, and hybrid techniques. DR can be applied during the preprocessing phase, for example through stemming, or after representation, for example through chi-square feature selection.
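As an illustration of filter-based feature selection, the sketch below scores terms with the chi-square statistic computed from a 2×2 term/class contingency table and keeps the highest-scoring terms. The counts, term names, and function names are hypothetical and serve only to show the computation.

```python
def chi_square(a, b, c, d):
    """Chi-square score of a term for a class from a 2x2 contingency table.

    a: documents in the class that contain the term
    b: documents outside the class that contain the term
    c: documents in the class that lack the term
    d: documents outside the class that lack the term
    """
    n = a + b + c + d
    denominator = (a + c) * (b + d) * (a + b) * (c + d)
    if denominator == 0:
        return 0.0  # degenerate table: the term or the class never varies
    return n * (a * d - c * b) ** 2 / denominator

def select_top_k(scores, k):
    """Keep the k terms with the highest scores (filter-style selection)."""
    return sorted(scores, key=scores.get, reverse=True)[:k]

# Hypothetical counts for three terms in a 20-document, two-class corpus.
scores = {
    "term_a": chi_square(10, 0, 0, 10),  # occurs only inside the class
    "term_b": chi_square(5, 5, 5, 5),    # independent of the class
    "term_c": chi_square(8, 2, 2, 8),    # mostly inside the class
}
selected = select_top_k(scores, 2)
```

A term that is distributed independently of the class scores zero and is discarded first, which is how a filter method shrinks the feature space before classification.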

3.4. Classification

Once the representation for a given text collection is created through a suitable set of representation and feature extraction techniques, the classifier has to be trained to learn the patterns that assign text to different classes [15]. Text classification [39, 40] has many applications, such as information retrieval (IR), sentiment analysis (SA), recommender systems, and hate speech detection. It can also be utilized in numerous domains, such as health, social sciences, and law [41, 42].

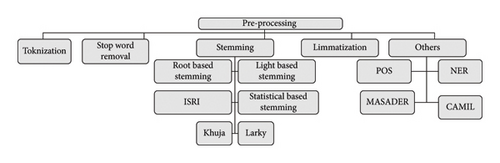

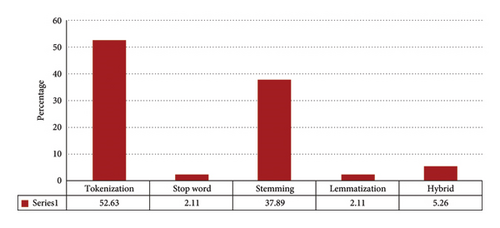

4. Preprocessing

Preprocessing techniques prepare text for further processing by transforming unstructured text into structured data. Many techniques have been used for this task. Figure 3 and Table 2 explore these techniques based on work that has been done for ATC. Each preprocessing method in ATC has its advantages and disadvantages, impacting model performance in different ways. For instance, diacritic removal simplifies text representation and reduces data sparsity, but it may lead to ambiguity, as some words have different meanings depending on diacritics. Stemming and lemmatization help normalize words by reducing them to their root forms, improving generalization; however, stemming can be overly aggressive, cutting words too short, and losing meaning, while lemmatization requires linguistic knowledge and is computationally expensive. Tokenization, especially in Arabic, is challenging due to the absence of clear word boundaries in certain cases, which may lead to errors in splitting words. Stop-word removal helps reduce computational complexity and improve efficiency, but in some contexts, stop words carry semantic importance, and their removal can affect classification accuracy. Normalization techniques, such as unifying different forms of Arabic letters (e.g., converting “ي” to “ى”), improve consistency but may lead to unintended modifications in certain words. Therefore, selecting the right preprocessing techniques requires balancing efficiency, linguistic integrity, and task-specific requirements to optimize the performance of ATC.
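To make these trade-offs concrete, the sketch below strips diacritics and the tatweel character and unifies alef variants using only the Python standard library. The function name `normalize` and the particular letter mapping are illustrative assumptions rather than a procedure taken from the surveyed works; the Unicode range U+064B to U+0652 covers the common Arabic short-vowel marks.

```python
import re

# Arabic short-vowel and related marks (fathatan U+064B through sukun U+0652).
DIACRITICS = re.compile("[\u064B-\u0652]")
# Elongation character used for justification.
TATWEEL = "\u0640"

# Assumed letter unification: alef with hamza above, hamza below,
# and madda are all mapped to bare alef (U+0627).
LETTER_MAP = str.maketrans({
    "\u0623": "\u0627",
    "\u0625": "\u0627",
    "\u0622": "\u0627",
})

def normalize(text):
    """Strip diacritics and tatweel, then unify alef variants."""
    text = DIACRITICS.sub("", text)
    text = text.replace(TATWEEL, "")
    return text.translate(LETTER_MAP)

# The word for "teacher" written with damma, fatha, shadda, and kasra.
diacritized = "\u0645\u064F\u062F\u064E\u0631\u0651\u0650\u0633"
# After normalization only the four base letters remain.
plain = normalize(diacritized)
```

After this step, diacritized and undiacritized spellings of the same word share one surface form, which reduces sparsity at the cost of the ambiguity discussed above.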

| Ref. | Year | Objective | Method | Dataset | Evaluation metrics |
|---|---|---|---|---|---|
| [43] | 2001 | To improve normalization and stemming | Normalization and stemming | — | Precision |
| [44] | 2002 | To design new light stemmers | Stemming | — | Precision |
| [45] | 2002 | To improve retrieval effectiveness | Light stemming approach | Provided by the text retrieval conference | Precision and recall |
| [46] | 2007 | To compare two feature selection techniques: light stemming vs. stemming | Stem vectors and light stem vectors | 15,000 documents for three classes | F1-score |
| [47] | 2008 | To generate index words for AT documents | Stemming and weight assignment technique, and an autoindexing method | 24 arbitrary texts of different lengths | Recall and precision |
| [48] | 2008 | To introduce a novel lemmatization algorithm | Lemmatization | House corpus | Recall and precision |
| [49] | 2008 | To propose a new method for stemming AT | Stemming techniques | — | — |
| [50] | 2008 | To design a new stemming algorithm | Stemming Arabic words with a dictionary | Arabic corpus | Accuracy |
| [51] | 2009 | To present and compare three techniques for feature reduction | Light stemming and word clusters | Created dataset | Recall and precision |
| [52] | 2010 | To determine the effect of 5 measures with two types of preprocessing for R document clustering | The Information Science Research Institute stemmer | 1680 documents | Cosine, Jaccard, Pearson, Euclidean, and DAvg KL |
| [53] | 2010 | To create an efficient rule-based light stemmer | Light stemmer for the Arabic language | — | — |
| [54] | 2010 | To present a new dictionary-based Arabic stemmer | Local stem | The dataset contains 2966 documents | Accuracy |
| [55] | 2010 | To design Arabic morphological analysis tools | Stemming and light stemming | Open-Source Arabic Corpus | Accuracy |
| [56] | 2011 | To work with many techniques for ATC | Stop-word removal | 2363 documents | Recall and precision |
| [57] | 2011 | To improve stemming to extract the stem and root of words | Dictionary-based stemmer | Collected Arabic corpus | Accuracy |
| [58] | 2012 | To increase accuracy | 3 stemmers | House corpus collected | Accuracy |
| [59] | 2012 | To propose the first nonstatistically accurate Arabic lemmatizer algorithm that is suitable for information retrieval (IR) systems | An accurate Arabic root-based lemmatizer for information | The dataset contains 50 documents | Accuracy |
| [60] | 2013 | To investigate the relevance of using the roots of words as input features in a sentiment analysis system | Tashaphyne stemmer with ISRI stemmer and Khoja stemmer | Penn Arabic Treebank with movie corpus | Accuracy, recall, precision, and F1-score |
| [61] | 2013 | To improve the Khoja stemmer | Enhancement of Khoja | House corpus collected | Accuracy |
| [62] | 2014 | To design a model for the extraction of the word root | Stemmer for feature selection | CNN from OSAC | Recall, precision, and F1-score |
| [63] | 2014 | To design a light stemmer | Novel root-based Arabic stemmer | Dataset consists of 6081 Arabic words | Accuracy |
| [64] | 2014 | To design an analyzer for dialectal Arabic morphology | Analyzer called ADAM | SAMA databases | — |
| [65] | 2014 | To compare studies for stemming | Khoja stemmer with chi-square | CNN from OSAC | Recall |
| [66] | 2015 | To study and compare the effect of three stemmer algorithms | Root extractor, light, and Khoja stemmer | Arabic WordNet | F1-score |
| [67] | 2015 | To improve stemming with P-stemmer | P-stemmer | House corpus collected | F1-score |
| [68] | 2015 | To perform root extraction using transducers and rational kernels | Root extraction | Saudi Press Agency dataset | Accuracy, recall, precision, and F1-score |
| [69] | 2015 | To introduce a new stemming technique | Approximate stemming | — | Accuracy and F1-score |
| [70] | 2015 | To build a new Arabic light stemmer | A new algorithm for light stemming | The dataset consists of 6225 Arabic words | Accuracy |
| [71] | 2016 | To improve accuracy by designing feature selection | Normalization and stemming techniques | Three datasets (dataset 1, dataset 2, and dataset 3) collected from the website https://www.aljazeera.net | Accuracy, recall, precision, and F1-score |
| [72] | 2016 | To study the Khoja stemmer and the light stemmer stemming algorithm | Normalization, root-based stemming, and light stemming approaches | Created dataset with 750 documents | Recall, precision |
| [73] | 2016 | To design a software tool for AT stemming | Light stemmer | — | — |
| [74] | 2016 | To highlight the effect of preprocessing tasks on the efficiency of the Arabic DC system | Stemming techniques with | House corpus collected | F1-score |
| [75] | 2016 | To study a fast and accurate segmenter | Arabic segmenter | — | — |
| [76] | 2017 | To review stemming of ATs | Effective Arabic stemmer | — | — |
| [77] | 2017 | To implement a new Arabic light stemmer | Light stemmer | ARASTEM dataset | Using Paice’s parameters |
| [78] | 2017 | To design a new morphological model based on regular expressions | Morphological model | Some Surat from the Holy Quran | False positive and false negative rate |
| [79] | 2017 | To evaluate several preprocessing tools in Arabic TC | Several preprocessing tools | Alj-News Dataset and Alj-Mgz Dataset | F1-score |
| [80] | 2018 | To design the FS technique and improve the accuracy | Improved chi-square | Open-Source Arabic Corpora (OSAC) and (CNN) | Precision, recall, and F1-score |
| [81] | 2018 | To conduct a comparative study of the impact of stemming algorithms | Stemming | CNN-Arabic site and contains 5070 | Recall |
| [82] | 2019 | To study different stemmers (ARLStem, Information Science Research Institute, and Tashaphyne) | Stemming | CNN-Arabic site and contains 5071 | F1-score |
| [83] | 2019 | To extract a root by processing word-stemming levels to remove all additional affixes | Root extraction and stemming | Collection of 350 documents | Accuracy |
| [84] | 2019 | To review the state of the retrieval performance of Arabic light stemmers | Light stemmers | TREC data | Accuracy |
| [85] | 2019 | To propose a novel method that detects not only domain-independent stop words but also domain-dependent ones | Stop word | Corpus combines 1261 Facebook comments, 781 tweets, and 32 reviews | F1-score |
| [86] | 2020 | To discuss the impact of the light stemming algorithm on text classification | Study the effects of the light stemming | BBC Arabic dataset | Recall, precision |
| [87] | 2020 | To discuss the impact of a stemming algorithm on word embedding representation | Stemming techniques | ANT version 1.1 and SPA corpus | F1-score |
| [88] | 2021 | To design a new method to prepare and analyze the AT | Normalization, such as shape repeated letters, non-normal words, and spelling mistakes | Collect data character | — |
| [33] | 2024 | To study how ATC works on hate speech | Many methods | Survey | — |

4.1. Tokenization

Tokenization is the process of segmenting a given text into small units. Alyafeai et al. proposed three novel text tokenization algorithms for AT [36].
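A minimal word-level sketch is given below. It splits on white space and punctuation with a regular expression and is not one of the algorithms of Alyafeai et al. [36]; the function name `tokenize` is an assumption, and the input is assumed to be undiacritized because combining marks are not matched by `\w`.

```python
import re

def tokenize(text):
    """Split text into word tokens, dropping white space and punctuation."""
    # In Python 3, \w matches Unicode letters and digits, including Arabic
    # letters, while the Arabic question mark (U+061F) is punctuation.
    return re.findall(r"\w+", text)

# The question "Is Ali a teacher?" yields two word tokens.
tokens = tokenize("أعلي مدرس؟")
```

Word-level splitting of this kind leaves clitics attached to their host words, which is one reason dedicated Arabic segmenters are often preferred in practice.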

4.2. Linguistic Preprocessing

Linguistic preprocessing refers to additional steps, such as part-of-speech tagging, that are applied to obtain further information about the content of the text, using tools such as ADIDA and MADAMIRA [89].

4.3. Stop-Word Removal

Stop-word removal refers to the elimination of words that contribute little meaning to the text. Auxiliary words, prepositions, conjunctions, modal words, and other words that occur with high frequency across diverse publications are all examples of stop words [82].
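The following sketch filters a token list against a stop-word set. The list shown is a deliberately tiny, hypothetical sample; practical stop-word lists contain hundreds of entries, and the function name `remove_stop_words` is an assumption.

```python
# Hypothetical, deliberately tiny stop-word list (prepositions and pronouns).
STOP_WORDS = {"في", "من", "على", "إلى", "عن", "هو", "هي"}

def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Return the tokens that are not stop words, preserving their order."""
    return [token for token in tokens if token not in stop_words]

# Pre-tokenized sentence meaning "Ali is a teacher at the school".
tokens = ["علي", "مدرس", "في", "المدرسة"]
content_tokens = remove_stop_words(tokens)
```

Only the preposition is dropped here. Note that the name علي (Ali) and the preposition على differ only in their final letter, so applying ي/ى normalization before stop-word removal could cause the name to be removed as well.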

4.4. Normalization

Normalization has two senses. For a collection of documents in various formats, it means converting them into a standard format such as “.txt”. Within a single document, it means bringing all words into a consistent form, using techniques such as stemming. Normalization is typically implemented with rules or regular expressions [71].

4.5. Lemmatization

Lemmatization reduces a word to its base form (lemma) by removing or replacing its prefix or suffix using lexical knowledge [90, 91].

4.6. Stemming

Text stemming is the process of reducing inflected or derived words to their common canonical form. For example, ‘teacher’, ‘school’, and ‘studying’ might be reduced to root forms such as ‘teach’, ‘school’, and ‘study’; in Arabic, مدرس (teacher), مدرسه (school), and يدرس (studies) are all reduced to the root درس [90]. There are various types of stemmers, for example, root-based stemmers such as Khoja, light stemmers such as Larkey, and statistical stemmers such as N-grams, as shown in Figure 2.
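The affix-stripping idea behind light stemming can be sketched as follows. The prefix and suffix lists are illustrative assumptions, not the lists of any published stemmer, and real light stemmers apply curated lists with additional rules. Unlike a root-based stemmer such as Khoja, this sketch stops at the stem مدرس and does not reach the root درس.

```python
# Illustrative affix lists (assumptions); longer affixes are listed first
# so that they are tried before their shorter substrings.
PREFIXES = ("وال", "بال", "كال", "فال", "ال", "لل")
SUFFIXES = ("ات", "ون", "ين", "ها", "ية", "ة", "ه")
# Minimum number of letters that must remain, to avoid over-stemming.
MIN_STEM_LENGTH = 3

def light_stem(word):
    """Strip at most one prefix and one suffix from a word."""
    for prefix in PREFIXES:
        if word.startswith(prefix) and len(word) - len(prefix) >= MIN_STEM_LENGTH:
            word = word[len(prefix):]
            break
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= MIN_STEM_LENGTH:
            word = word[:-len(suffix)]
            break
    return word

# Four surface forms: "the school", "school", "and the teachers", "teacher".
words = ["المدرسة", "مدرسه", "والمدرسون", "مدرس"]
stems = [light_stem(w) for w in words]
```

All four surface forms collapse to the same stem, which illustrates both the benefit of stemming (less sparsity) and its risk: the words for “school” and “teacher” become indistinguishable.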

Larkey and Connell [43] implemented and improved normalization and stemming methods for AT. In addition, they created a dictionary and expanded queries for AT with no prior knowledge of the language. Larkey et al. [44] further developed several light stemmers based on heuristics, as well as statistical stemmers, for Arabic retrieval. Their best light stemmer proved more effective for cross-language retrieval than a morphological stemmer that sought to locate the root of each word. Duwairi et al. applied different FS approaches to an Arabic corpus. They compared stemming and light stemming and concluded that light stemming improves classification accuracy. Three feature reduction methods based on stemming, light stemming, and word clusters were also proposed, with K-NN as the classifier [51]. Mohd et al. examined the influence of several measures, namely cosine similarity, the Jaccard coefficient, Pearson correlation, Euclidean distance, and averaged Kullback–Leibler divergence, on document clustering algorithms with two forms of morphology-based preprocessing [52]. Mansour et al. [47] proposed an autoindexing method for IR that creates index words for AT documents by applying different grammatical rules to extract stems. Al-Shammari and Lin [48] introduced a novel lemmatization algorithm for AT and argued that lemmatization is a better word normalization approach than stemming. Al-Shargabi et al. [56] applied different preprocessing methods, compared the performance of the SVM, NB, J48, and SMO classifiers, and concluded that SMO outperformed the others.

Hadni et al. [58] implemented an effective hybrid approach for ATC that is reported to outperform the Larkey, Khoja, and N-gram stemmers. Oraby et al. [60] studied the effect of stemming methods on Arabic SA. Their accuracy results were 93.2%, 92.6%, 92.6%, and 92.2% for Tashaphyne, stemmer, ISRI stemmer, and Khoja stemmer, respectively. Bahassine et al. [62] studied the effect of the origin stemmer and Khoja’s stemmer on Arabic document classification, using CHI statistics to reduce the number of selected features. Their proposed stemming method outperformed Khoja’s stemmer. Al-Kabi et al. [63] proposed a new light stemmer for AT. The empirical evaluation indicated that the proposed stemmer’s accuracy is higher than that of one of the two well-known Arabic stemmers used as baselines. Salloum and Habash [64] presented ADAM, an analyzer for dialectal Arabic morphology whose performance is comparable to that of an Egyptian dialectal morphological analyzer. Yousif et al. [66] presented an ATC system based on NB with a conceptual representation based on Arabic WordNet. They assessed the impact of three stemming algorithms: a light stemmer, a Khoja stemmer, and a best-performing root extractor.

Kanan and Fox [67] developed a taxonomy for Arabic news with automatic classification techniques using binary SVM classifiers and a novel Arabic light stemmer called P-Stemmer. Nehar et al. [68, 71] enhanced ATC using an improved feature set, including the BoW and term-frequency approaches, together with the frequency ratio accumulation method classifier. They also provided a new approach to root extraction that uses an Arabic pattern stemmer to classify AT. Nasef and Jakovljević [73] presented a software tool for categorizing AT using stemming. The software is based on an open-source version of the Lucene-based light stemmer for Arabic, and it supports stemming and categorization into 12 classes. Mustafa et al. [76] presented an extensive survey of Arabic stemmers. Abainia et al. [77] proposed a new Arabic light stemmer based on new rules for smartly removing prefixes, suffixes, and infixes. It is also the first work to address the irregular rules of Arabic infixes.

Bahassine et al. [80] increased the accuracy of Arabic document categorization using FS approaches based on IG, MI, and CHI. Boukil et al. [81] proposed the classification of Arabic documents using stemming techniques for FE and KNN as the classifier. Alhaj et al. employed various stemmers, including Information Science Research Institute (ISRI), Tashaphyne, and ARLStem, for ATC, with SVM as the best-performing classifier. They further studied Arabic document classification using light stemming with FE techniques such as BoW and TF–IDF. Moreover, different FS methods, such as CHI, IG, and singular value decomposition (SVD), were used to select the most relevant features [82, 86]. Belal proposed a word-level stemming technique that extracts the root by removing all additional affixes. If matching a word against proper names is possible, the affixes are removed using patterns and rules based on root dictionaries [83]. Ouahiba and Othman reviewed the performance of various Arabic light stemmers and concluded that Light10 performs best [84]. Almuzaini and Azmi [87] discussed the effect of stemming strategies and word embeddings on Arabic document classification with different DL models, including CNN, CNN–long short-term memory network (LSTM), gated recurrent units (GRU), and attention-based LSTM, using the Word2Vec representation algorithm. Al-Shammari and Lin produced a novel method for stemming Arabic documents called the educated text stemmer. They used stemming weight as an assessment measure to compare the new method’s performance with that of the Khoja stemming algorithm [49]. Ayedh et al. [74] investigated the influence of preprocessing tasks on the efficiency of the Arabic document categorization system, using three classification approaches: NB, KNN, and SVM. Al-Kabi [61] highlighted the flaws in the Khoja stemmer and achieved an improvement of about 5% in accuracy by adding missing patterns. Nehar [69] developed a novel stemming approach known as “approximate stemming,” which is based on the use of Arabic patterns with transducers and does not rely on any dictionary. Aljlayl and Frieder proposed rule-based light stemming and demonstrated that it performs better than a root-based algorithm [45]. Kchaou and Kanoun [50] proposed a method for stemming AT that works similarly to Khoja’s strategy but uses two dictionaries, one for roots and another for radicals. It addresses handicapped roots and radicals in Khoja.

Kanan et al. proposed a novel light stemmer for AT and demonstrated its effectiveness in improving search in IR [53]. Al-Shammari proposed a context-dependent stemmer without relying on a dictionary and improved ATC by utilizing a new free Arabic stemmer dictionary [54]. The proposed stemmer was compared with root-based and light stemmers and outperformed them. Alhanini and Aziz proposed an improved stemmer for extracting the stem and root of Arabic words to address the shortcomings of light stemming and dictionary-based stemming. However, the proposed stemmer does not address the issue of broken (irregular) plurals [57]. El-Shishtawy [59] proposed a nonstatistical lemmatizer that uses several Arabic knowledge resources to produce accurate lemma forms and relevant features that can be utilized in IR systems. Abdelali et al. [75] proposed Farasa, an Arabic segmenter based on SVM ranking with linear kernels that is comparable to the state of the art. Said et al. reviewed several preprocessing tools in ATC and compared raw text with the output of several tools, such as the Al-Stem stemmer, Sebawai root extractor, and RDI MORPHO3 stemmer [79].

Elghannam [92] created a new technique for identifying the domain of a corpus; it detects both domain-independent and domain-dependent stop words. Othman et al. developed a new framework based on regular expressions and Arabic grammar rules to extract and recognize the syntactic structure of an Arabic sentence [78]. Hegazi et al. [88] designed a framework for building effective applications that analyze and process AT on social media.

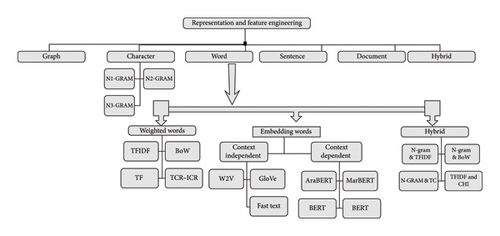

5. Representation and Feature Engineering

ML algorithms cannot process unstructured text as humans do unless it is represented numerically. Hence, text representation is the process of converting unstructured text into an equivalent structured form that ML algorithms can interpret. Machine-readable representations of text can be constructed using various representation methods. Traditional techniques such as BoW and TF–IDF treat words as discrete entities and fail to capture contextual meaning. One of the most effective alternatives is word embedding, which captures the semantic and syntactic relationships between words in a continuous vector space. Figure 4 and Table 3 summarize the different levels of representation, which are discussed first; the different feature extraction techniques are explored afterward.

| Ref. | Year | Objective | Method | Dataset | Evaluation metrics |
|---|---|---|---|---|---|
| [93, 94] | 2008 | Aim to use ML for AT document classification | Dice measure for classification and representation by trigram frequency statistics | Arabic documents corpus | Precision, recall |
| [95] | 2010 | Aim to explore the sentiment of AT at two levels: document and sentence | Design a novel grammatical approach and semantic orientation of words, documents, and sentences at the document and sentence level | 44 documents | Accuracy |
| [56] | 2011 | To make a comparison of different text classification algorithms | Stop-word removal | 2363 documents | Recall and precision |
| [96] | 2012 | Propose a conceptual representation for AT representation | Chi-square | Corpus of Arabic texts built by Mesleh | Precision, recall, and F1-score |
| [97] | 2013 | Aim to represent AT using rich semantic graph | Graph | A small dataset that contains three paragraphs | — |
| [98] | 2014 | Aim to design an algorithm by combining bag-of-words and bag-of-concepts | TF and TF–IDF | Arabic 1445 dataset and Saudi newspapers (SNP) dataset | Accuracy, recall, precision, and F1-score |
| [99] | 2015 | Aim to propose four models for text sentiment classification in Arabic | Bag-of-words, word embeddings | LDC ATB dataset | F1-score |
| [100] | 2015 | Aim to explore the efficiency of word N-grams | N-grams | Saudi Press Agency dataset | Accuracy |
| [6, 101] | 2015 | Aim to represent a word as a vector while minimizing the cosine error | Word embeddings CBOW, SKIP-G, GloVe | Collected ATs | Root mean square error and Pearson’s correlation |
| [102] | 2016 | To use cosine similarity for ATC | Latent semantic indexing (LSI) | 4000 documents on 10 topics | Accuracy |
| [7] | 2016 | Aim to solve binary classifiers and detect subjectivity | Word embeddings | Collect datasets to create word representations | Accuracy |
| [103] | 2016 | Aim to study sentiment polarity from the AT | Word embeddings Word2Vec | 3.4 billion-word corpus | Accuracy |
| [104] | 2016 | Aim to explore the character level for discriminating between similar languages and dialects | Character-level | DSL 2016 shared task | Accuracy and F1-score |
| [105] | 2017 | Aim to design a new graph-based algorithm for ATC | Graph | Essex Arabic summaries corpus | Recall, precision, and F1-score |
| [106] | 2016 | Aim to prove document embeddings better than text preprocessing methods | Word vectors and Doc2Vec model | BBC, CNN, OSAC, and Arabic Newswire LDC | Precision, recall, and F1-score |
| [107] | 2017 | Aim to propose pretrained word representation for AT | Word embeddings (AraVec) | Different resources: Wikipedia, Twitter, and Common Crawl webpages (word embedding) | None |
| [108] | 2017 | Aim to use various models for word representations to classify AT | CBOW, Skip-Gram, and GloVe | Two datasets: SemEval 2017 and ASTD | F1-score |
| [109] | 2017 | Aim to work on three problems for Arabic sentiment analysis | Word embedding with Word2Vec | Syria Tweets dataset | Accuracy, recall, precision, and F1-score |
| [110] | 2017 | Aim to propose a study that minimizes the high dimension | TF–IDF | Corpus of sport news | Precision, recall, and F-measure |
| [111] | 2018 | Aim to utilize deep learning for Arabic sentence classification | Word embeddings | Essex Arabic summaries corpus (EASC) | None |
| [112] | 2018 | Aim to design graph model for document | Graph | Arabic dataset | Precision, recall, and F1-score |
| [113] | 2018 | Aim to distinguish the 5 dialects using char-level representation | Character level | ADI dataset for the shared task | Accuracy and F1-score |
| [114] | 2018 | Aim to propose a new representation technique | TCW–ICF | Collect a new dataset | Accuracy |
| [115] | 2018 | Aim to study several word embedding models, including GloVe, CBOW, and Skip-gram | GloVe and Word2Vec | Many datasets such as OSAC, LABR, and Abu El-Khair corpus | — |
| [116] | 2018 | Aim to compare pretrained word vectors for AT | Word embedding (WE) models | Collected from Twitter | Accuracy of 93.5% with AraFT |
| [117] | 2018 | Aim to use word representation for sentiment analysis | Word2Vec | Language Health Sentiment Dataset | Accuracy |
| [118] | 2018 | Aim to use term weighting and multiple reducts | Term weighting | 2700 documents for 9 classes | Recall, precision, and F1-score |
| [119] | 2019 | Aim to create word embedding models | ARWORDVEC models | ASTD and ARASENTI | Accuracy and F1-score |
| [92] | 2019 | Aim to create a new bigram alphabet approach | Bigram alphabet | Arabic dataset Aljazeera News | Accuracy |
| [120] | 2019 | Aim to introduce N-gram embeddings | N-gram embeddings | Using many western and eastern Arabic datasets | Accuracy, precision, recall, and F1-score |
| [121] | 2019 | Aim to study word embedding for text representation | Char level | Merge many datasets | Accuracy |
| [122] | 2019 | Aim to design an algorithm for a combined document embedding representation | Word sense | OSAC | Precision, recall, and F1-score |
| [123] | 2019 | Aim to propose a new representation model based on N-gram | N-gram | DOSC and HARD datasets | Accuracy, precision, recall, and F1-score |
| [124] | 2019 | Aim to introduce a graph-based semantic representation model | Graph | ArbTED | Accuracy, precision, recall, and F1-score |
| [125] | 2020 | Aim to propose a technique that reduces the high dimensionality | TF–IDF | CNN dataset and Alj-News5 dataset | Precision, recall, and F1-score |
| [126] | 2020 | Aim to introduce Doc2Vec and machine learning approaches | PV–DM and PV–DBOW | Five Arabic datasets | Accuracy and F1-score |
| [127] | 2020 | Aim to use transfer learning as a new technique for representation | BERT | HARD; ASTD; ArSenTD-Lev; LABR; AJGT | Accuracy and F1-score |
| [128] | 2020 | Aim to create embedding vectors based on word and character | Character and word embeddings | TASK | Pearson correlation coefficient |
| [121] | 2020 | Aim to apply transfer learning for emotion analysis in Arabic | Character-level representation | Hotel reviews and 1012 tweets | Accuracy |
| [129] | 2020 | Aim to study the impact of the BERT model on formal and informal AT | BERT | CREATE TWO | F1-score |
| [130] | 2020 | Aim to study word-level representations to tackle the Romanized alphabet of Tunisian | Word2vec | Word | Accuracy-measure |
| [131] | 2020 | Aim to study Arabic opinion mining using a different type of representation | Unigram, bigram, and trigram | HTL and LABR datasets | Accuracy |
| [132] | 2020 | Aim to use pretrained word embedding for Arabic sentiment | ARAVEC and FastText library | Arabic Gold Standard Twitter Data for sentiment analysis (ASTD) | ROC curve |
| [133] | 2020 | Aim to classify text utilizing fine-tuned Word2Vec | Word2Vec | Movie review dataset | Accuracy |
| [134] | 2021 | Aim to represent text at the word level and investigate an efficient bidirectional LSTM for classification | Word embedding | ASTD, ArTwitter, LABR, MPQA | Precision, recall, and F1-score |
| [135] | 2021 | Aim to introduce a contextual semantic embedding representation | BERT | OSAC | Accuracy and F1-score |
| [136] | 2020 | Aim to propose a model for representation embeddings at the different levels | Character, word, and sentence embedding | IMDB movie dataset | Accuracy, precision, recall, and F1-score |
| [137] | 2024 | This work combines the trained Arabic language model ARABERT with the potential of long short-term memory (LSTM) | ARABERT | 4071 Arabic audio clips | Accuracy, word error rate, character error rate, BLEU score, and perplexity |

The basic unit in language is a word, which produces phrases, sentences, and documents. Because of this, word-based representations are the most critical research direction since the total number of words that we can get from any language is huge compared to characters or phrases.

5.1. Representation Based on Character-Level Methods

Character-level representation treats each character in the text as a separate unit of analysis, as opposed to word-level or sentence-level representation, where words or entire sentences are the units. It is commonly used in NLP tasks such as language modeling, TC, and machine translation. In this approach, each character is mapped to a numeric representation using techniques such as one-hot encoding or embedding. One advantage of character-level representation is that it can handle out-of-vocabulary (OOV) or rare words that are not present in a predefined vocabulary: because the character inventory is small and fixed, every character of a word can be mapped to a representation even if the word itself has never been encountered before. However, character-level representation may not capture the semantics of words or phrases and may require more computational resources than word-level or sentence-level representation. Character-level embedding starts by dividing each Arabic word into its basic letter forms. There are two ways to represent text at this level: encoding every letter on its own, or encoding character N-grams, that is, sequences of one, two, or three letters. The following subsections present the existing work on these representations.
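To make the OOV argument concrete, the following is a minimal sketch of one-hot character encoding in Python. The helper names `build_char_vocab` and `one_hot_encode` and the three-word toy corpus are illustrative assumptions, not taken from any of the surveyed works.

```python
def build_char_vocab(texts):
    """Map every distinct character in the corpus to an integer index."""
    chars = sorted({ch for text in texts for ch in text})
    return {ch: i for i, ch in enumerate(chars)}


def one_hot_encode(word, vocab):
    """Return one one-hot row per character of `word`.

    Characters missing from `vocab` yield an all-zero row (an assumption
    made here for simplicity).
    """
    rows = []
    for ch in word:
        row = [0] * len(vocab)
        idx = vocab.get(ch)
        if idx is not None:
            row[idx] = 1
        rows.append(row)
    return rows


# Toy corpus (hypothetical): three words sharing the letters of one root.
corpus = ["كتاب", "كاتب", "مكتبة"]
vocab = build_char_vocab(corpus)

# "كتب" never occurs as a word in the corpus, yet each of its letters is
# known, so it can still be encoded.
encoded = one_hot_encode("كتب", vocab)
```

The word-level vocabulary here has three entries and would not cover the new word, whereas the six-letter character vocabulary does.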

5.1.1. N-Gram Embeddings

N-gram-level embedding divides each Arabic word into its basic letter forms and encodes overlapping sequences of two or three letters rather than single letters. Petasis et al. [138] proposed a model to deal with high dimensionality for ATC using trigram frequency to represent text, and their results demonstrated that trigram text categorization was effective. Al-Thubaity et al. [100] used a neural network to map between Arabic and English vectors, developed continuous representations that capture semantic and syntactic features, and tested these vectors using intrinsic and extrinsic evaluations. Elghannam et al. [92] proposed a novel bigram character-based method to represent text for a TC system and evaluated it on the Aljazeera News dataset. Mulki et al. [120] proposed a model that uses N-gram embedding for sentiment analysis in many Arabic dialects. Saeed et al. [123] represented text using the N-gram method with numerous classification algorithms, including rule-based and ML algorithms, to detect spam in Arabic opinion texts. Elzayady et al. [131] proposed a model for SA that employs CNN for FS and RNN for classification. The method did not address the issue of OOV terms.
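As an illustration of character N-gram representation, the sketch below extracts character N-grams and compares two words by the Dice coefficient of their trigram sets. The function names are hypothetical, and the Dice comparison is only one simple way to use such profiles; it is not presented as the exact procedure of any cited work.

```python
def char_ngrams(word, n):
    """Return the list of contiguous character n-grams of `word`."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]


def dice_similarity(a, b, n=3):
    """Dice coefficient between the n-gram sets of two strings."""
    grams_a, grams_b = set(char_ngrams(a, n)), set(char_ngrams(b, n))
    if not grams_a and not grams_b:
        return 0.0
    return 2 * len(grams_a & grams_b) / (len(grams_a) + len(grams_b))


# A singular noun and its plural share two of their trigrams.
singular, plural = "مكتبة", "مكتبات"
trigrams = char_ngrams(singular, 3)
similarity = dice_similarity(singular, plural)
```

Because related word forms share most of their N-grams, this kind of representation is less sensitive to affixes than exact word matching.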

5.1.2. Character-Level Embeddings

Character-level embeddings separate each Arabic word into basic letter forms and then encode each letter separately. Belinkov et al. represented text at the character level using CNN to distinguish between similar languages and dialects [104]. Ali proposed a CNN-based model to distinguish five dialects of the Arabic language [113]. Omara et al. used a CNN-based model for SA at the character level; the model was evaluated on both emotion identification and SA [121].

5.2. Word-Based Embeddings

Word representation refers to the process of encoding words as numeric vectors or embeddings, which ML algorithms can process for various NLP tasks. Word embedding tokenizes text at the word level and assigns a vector to each word. The following subsections discuss state-of-the-art word embedding methods.

5.2.1. Weighted Words

- BoW: BoW is a feature extraction technique that represents a document by its word counts and ignores word order. Al-Radaideh and Al-Abrat proposed a model based on term weighting for ATC and reduced the number of terms used to generate the classification rules [118]. Alahmadi et al. proposed combining BoW with bag-of-concepts to handle semantic relationships between words. Still, their approach suffers from sparse matrices and complex preprocessing, and it does not handle problems such as OOV words [98]. Al Sallab et al. proposed three DL models for sentiment classification in AT, each using a different representation method, such as BoW. Their experiments were carried out on the LDC ATB dataset [99]. Alnawas introduced Doc2Vec with ML for SA of AT and proposed a continuous vector representation model whose vectors were computed using the PV–DM and PV–DBoW architectures. These vectors were then used to train four popular ML methods: LR, SVM, KNN, and RF [126].

- TF–IDF: TF–IDF assigns more weight to terms that are frequent in a document but uncommon across the corpus. Mahmood and Al-Rufaye improved TM by reducing dimensionality using the k-means clustering algorithm [110]. Al-Taani et al. proposed an FCM approach to classifying AT by lowering the dimensionality of the representation. They employed SVD for DR, but it has significant disadvantages, such as high time complexity, a high-dimensional space, and a lack of consideration for the semantic level [125].

- TCW–ICF: TCW–ICF is a new representation method that has been used for ATC. It works like term frequency weighting but bases the weight on the class rather than on the word alone. Guru et al. proposed TCW–ICF as a novel term weighting scheme for ATC. Their method improves results by applying DR [114]. All of their experiments were conducted on a dataset that they created.
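The BoW and TF–IDF weightings described in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: raw term counts, the unsmoothed form idf = log(N / df), whitespace tokenization, and a three-sentence toy corpus. Production toolkits usually add smoothing and normalization, and Arabic text would normally be preprocessed first.

```python
import math
from collections import Counter


def bag_of_words(tokens, vocabulary):
    """Count how often each vocabulary term occurs; word order is discarded."""
    counts = Counter(tokens)
    return [counts[term] for term in vocabulary]


def tf_idf(docs):
    """Return (vocabulary, matrix) with one TF-IDF row per tokenized document.

    Uses raw term frequency and idf = log(N / df), without smoothing.
    """
    vocabulary = sorted({tok for doc in docs for tok in doc})
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(tok for doc in docs for tok in set(doc))
    matrix = []
    for doc in docs:
        tf = bag_of_words(doc, vocabulary)
        matrix.append([tf[j] * math.log(n_docs / df[term])
                       for j, term in enumerate(vocabulary)])
    return vocabulary, matrix


# Toy corpus (hypothetical): two sports sentences and one about the budget.
docs = [
    "الفريق فاز في المباراة".split(),
    "الفريق خسر في المباراة".split(),
    "الحكومة أعلنت الميزانية".split(),
]
vocabulary, weights = tf_idf(docs)
```

In this toy corpus, a word that occurs in a single document receives a higher weight than one shared by two documents, and most entries of each row are zero, which is the sparsity problem noted above.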

5.2.2. Word Embedding

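- Context-Independent Word Embeddings: In this representation, each word receives a single vector regardless of the sentence in which it appears, so the meaning contributed by the surrounding words is ignored; examples include Word2Vec, GloVe, and FastText.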

- Word2Vec: In 2013, Mikolov et al. from Google introduced the Word2Vec (W2V) model. It has two architectures, continuous BoW (CBoW) and Skip-Gram (SG), both of which learn a dense vector for each word. The following works have used it for representation. Altowayan created word embeddings to represent AT for SA tasks. Embedding-based features were used with binary classifiers to detect standard and dialectal AT, and word embedding was presented as an alternative way to extract features for Arabic sentiment classification. Their method relies on word embeddings as the primary source of features, and both types of AT were detected using this representation [7]. Dahou et al. detected sentiment polarity in Arabic reviews and social media text, studying corpora from two domains: reviews and tweets [103]. Soliman et al. introduced a pretrained distributed representation called AraVec and made it open source to support the research community. Their model handles syntactic and semantic relations among words [107]. Al-Azani and El-Alfy designed a model for SA to address three problems: microblogging data, imbalanced classes, and dialectal Arabic. An oversampling technique solved the imbalanced dataset problem [109]. Sagheer and Sukkar presented a classification of Arabic sentences using CNN models with an embedding layer for representation. They used AraVec as the pretrained embedding [111]. Alwehaibi et al. implemented SA for AT using the LSTM model on Arabic tweets. They assessed the impact of existing pretrained vectors for numerical word representation. The experimental findings suggest that the LSTM–RNN model produces acceptable results [116]. Alayba et al. described how they constructed Word2Vec models from a large Arabic corpus obtained from 10 newspapers in different Arab countries. Different ML algorithms and CNN with various FS methods were applied to the health sentiment dataset, increasing the accuracy from 91% to 95% [117]. Fouad et al. showed that effective word embeddings, ArWordVec, can be developed from Arabic tweets. They created a new approach for detecting word similarity. The experimental results suggested that the ArWordVec models outperform previously available models on Arabic Twitter data. They applied various methods to obtain word embeddings, such as CBoW, SG, and GloVe [119]. Messaoudi et al. presented different word representations with different DL models (CNN and BiLSTM) without using any preprocessing step. They showed that CNN with M-BERT achieved the best results [130]. Sharma et al. proposed a model that cleans the data and generates word vectors from the pretrained Word2Vec model [133]. Elfaik and Nfaoui (2021) proposed a model for ATC. They represented text at the word level and investigated BiLSTM to improve the SA of AT. The F1 measure was 79.41 on the LABR dataset. However, the preprocessing complexity and time cost were higher, and they did not use character-level representation, which may solve some problems for the Arabic language [134].

- GloVe: GloVe is an unsupervised learning algorithm for obtaining vector representations of words and provides a strong text representation [90]. The approach is similar to the Word2Vec method. M. A. Z. et al. investigated the effective representation of N-grams as features for ATC. Their experiment used the SPA dataset [101]. Gridach et al. implemented various word representation models, such as CBoW, Skip-Gram, and GloVe, using two datasets, ASTD and SemEval [108]. Suleiman and Awajan studied various word embeddings to represent AT, namely GloVe and Word2Vec, and concluded that Word2Vec outperforms the others [115].

- FastText: Facebook’s AI Research Lab released a word embedding method called FastText to address the representation issue. Each word is represented as a bag of character N-grams. For example, given the word “محمد” and n = 3, FastText adds the boundary symbols < and > to the word and produces the following character trigrams: “<مح”, “محم”, “حمد”, and “مد>”. Ibrahim Kaibi introduced NuSVC classifiers to classify AT using the word embedding representations AraVec and FastText. They combined both representation models by concatenating their vectors and evaluated the model using accuracy metrics [132].

- Context-Dependent: In this type of representation, the vector of a word depends on the sentence in which it appears, so contextual meaning is included, which more closely resembles how humans interpret language.

- AraBERT: Antoun et al. applied transfer learning to classify AT. Their model, called AraBERT, aims to achieve for Arabic what BERT achieved for English text. They compared multilingual BERT with AraBERT [127]. Chowdhury et al. studied the effects of the BERT model on a mixture of formal and informal texts. They applied Arabic transfer learning to short-text datasets and showed that the former generalized better than the others [129]. El-Alami et al. presented embedding representations that handle semantic context to improve ATC. This type of representation solves many complex problems. They implemented their approach and compared it with AraBERT [135].

- MarBERT: Abdul-Mageed et al. presented two powerful Transformer-based models designed specifically for Arabic. They trained their models on large-to-massive datasets that cover different domains [139].
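The subword idea behind FastText in the list above can be sketched as follows: a word is wrapped in the boundary symbols < and >, split into character N-grams, and its vector is the sum of the vectors of those N-grams. The function names, the fixed trigram length, and the tiny `ngram_vectors` table are illustrative assumptions; this is not the API of the fastText library.

```python
def fasttext_ngrams(word, n_min=3, n_max=3):
    """Character n-grams of `word` wrapped in the boundary symbols < and >.

    The names and defaults are illustrative, not the fastText library API.
    """
    wrapped = f"<{word}>"
    grams = []
    for n in range(n_min, n_max + 1):
        grams.extend(wrapped[i:i + n] for i in range(len(wrapped) - n + 1))
    # The whole wrapped word is kept as one additional unit.
    grams.append(wrapped)
    return grams


def word_vector(word, ngram_vectors, dim):
    """Sum the vectors of all known n-grams; unknown n-grams are skipped."""
    vec = [0.0] * dim
    for gram in fasttext_ngrams(word):
        for k, value in enumerate(ngram_vectors.get(gram, [])):
            vec[k] += value
    return vec


grams = fasttext_ngrams("محمد")

# Hypothetical two-dimensional n-gram vectors, only to show the summation.
ngram_vectors = {"<wh": [1.0, 0.0], "re>": [0.0, 2.0]}
vector = word_vector("where", ngram_vectors, dim=2)
```

Because an unseen word still shares N-grams with known words, it receives a non-trivial vector, which is how this scheme mitigates the OOV problem of purely word-level embeddings.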

5.3. Document-Level Methods

Mahdaouy et al. introduced a system that classifies texts and documents in vector space; the document representations are learned in an unsupervised manner and carry implicit relationships and semantics between words [106].

5.4. Sentence-Level Methods

Sentence representation is used in many natural language tasks. It aims to encode the semantic information of the whole sentence into a real-valued vector, which can improve the understanding of the context of the text. Farra et al. examined the sentiment of Arabic text at two levels: document and sentence. They concluded that the work done in Arabic is still limited. They studied a novel grammatical method together with the semantic orientation of words [95].

5.5. Representation Based on Hybrid Methods

Hybrid methods merge more than one text representation method in order to combine the advantages of each. Al-Anzi et al. compared several TC approaches for AT. They employed SVD to decrease the dimension and reduce the number of features [102]. El-Alami et al. presented a method that works in two phases, document embedding and sense disambiguation, to improve accuracy. They ran several experiments on the Open-Source Arabic Corpora dataset. However, the method has some limitations: the TF–IDF representation produces a sparse matrix, the preprocessing is complex, especially with the Khoja stemmer, and the lexicon covers only part of the vocabulary, so these choices are not well suited to Arabic, which has a rich vocabulary and many rare words [122]. Alharbi et al. designed a model to classify microblogs on social media using word and character representation, and they presented a new technique that joins different levels of word embedding [128]. El-Affendi et al. developed a novel DL multilevel model that uses a simple positional binary embedding scheme to compute contextualized embeddings at the character, word, and sentence levels simultaneously. The suggested model is also shown to achieve new state-of-the-art accuracies for two multidomain problems [136].

5.6. Representation Based on Graph Methods

Representing text as a graph is one of the essential preprocessing steps in data mining and TM in many domains, such as TC. The graph representation approach represents text documents as graphs in order to capture text features such as semantics [124, 140]. El Bazzi et al. implemented a system to classify documents using a graph model for representation. They studied the impact of the semantic relations between text tokens in the documents [112]. Ismail et al. presented a system to summarize and classify AT using a rich semantic graph (RSG). It is a suitable method that supports the development of the Arabic language [97]. Hadni and Gouiouez proposed a new graph approach for representing and classifying AT that relies on BabelNet knowledge [105]. Etaiwi and Awajan introduced a graph representation to classify AT. Their model was evaluated using different metrics such as precision, accuracy, recall, and F1-score [124].
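As a simple illustration of turning text into a graph, the sketch below builds a word co-occurrence graph in which tokens are nodes and an edge weight counts how often two tokens appear close together. This is a deliberately simpler stand-in for the semantic graphs used in the works above (it uses no RSG or BabelNet knowledge), and the function name and the `window` parameter are assumptions made for the example.

```python
from collections import defaultdict


def cooccurrence_graph(tokens, window=2):
    """Build an undirected weighted graph as {node: {neighbor: weight}}.

    Two tokens are linked when they appear within `window` positions of
    each other; the weight counts how often that happens.
    """
    graph = defaultdict(lambda: defaultdict(int))
    for i, left in enumerate(tokens):
        for right in tokens[i + 1:i + 1 + window]:
            if left == right:
                continue  # skip self-loops
            graph[left][right] += 1
            graph[right][left] += 1
    return {node: dict(neighbors) for node, neighbors in graph.items()}


tokens = "الفريق فاز في المباراة و الفريق احتفل".split()
graph = cooccurrence_graph(tokens, window=2)
```

Graph features such as node degree or edge weights can then be fed to a classifier in place of, or in addition to, a flat term vector.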

6. DR

Representing text with vector space models (VSMs) such as BoW has several limitations, for example, sparse matrices. These methods are quite expensive in terms of time complexity and memory utilization. To address this limitation, many researchers have used DR to limit the size of the feature space. Existing DR methods used in AT categorization are discussed in this section and shown in Figure 5 and Table 4.

| Ref. | Year | Objective | Method | Dataset | Evaluation metrics |
|---|---|---|---|---|---|
| [141] | 2007 | Aims to implement an SVM with chi-square | Chi-square | Arabic data | Precision, recall, and F1-score |
| [142] | 2007 | Aims to explore the effectiveness of different feature selection methods | Chi-square | Arabic data | Precision, recall, and F1-score |
| [51] | 2008 | Aim to introduce three feature reduction techniques and compare them | Cluster with stemming | 15,000 documents | Precision and recall |
| [143] | 2009 | Aims to study the impact of the NB algorithm with the chi-square | Chi-square | SPA | Recall, precision, and F1-score |
| [144] | 2011 | Aim to study a feature reduction algorithm | Feature selection synonyms merge | House Arabic documents | F1-score |
| [96] | 2012 | Propose a conceptual representation for AT representation | Chi-square | Corpus of Arabic texts built by Mesleh | Precision, recall, and F1-score |
| [145] | 2012 | Aim to introduce LDA (latent Dirichlet allocation) algorithm | LDA (latent Dirichlet allocation) | House corpus of ATs | F1-score |
| [146] | 2013 | This thesis introduces a new algorithm for feature selection called binary particle swarm optimization | The feature selection process, the filter wrapper approach | Akhbar-Alkhaleej, Arabic Alwatan, Al-Jazeera-News Arabic | Recall, precision, and F1-score |
| [147] | 2014 | Aim to improve the AT categorization system by reducing the dimension | Radial basis function | House Arabic documents | Precision, recall |
| [148] | 2014 | Proposes a new method for ATC in which a document is compared with predefined documents, using the chi-square measure | TF–IDF and chi-square | House containing 1090 documents | — |
| [101] | 2015 | Aim to improve accuracy by representing a word and decreasing the cosine error | Word embeddings CBOW, SKIP-G, GloVe | Collect home data | — |
| [106] | 2016 | Aim to prove that representation is better than text preprocessing method | Word vectors and Doc2Vec | BBC, CNN, OSAC corpora, Arabic Newswire LDC | Precision, recall, and F1-score |
| [110] | 2017 | Aim to propose a study that minimizes the features | TF–IDF | 200 sports news corpus | Precision, recall, and F1-score |
| [80] | 2018 | Aim to improve the chi-square | Improve chi | Open-Source Arabic Corpus (OSAC) | Precision, recall, and F-measure |
| [149] | 2018 | Aim to investigate one of the most successful classification algorithms, C4.5 | Chi-square and symmetric uncertainty | Arabic dataset | Precision, recall |
| [150] | 2018 | Aim to propose a new feature selection method | Feature selection | Open-Source Arabic Corpus (OSAC) | Precision, recall, and F1-score |
| [151] | 2019 | The proposed feature selection approach improves the accuracy | Feature selection | — | Precision, recall, and F1-score |
| [152] | 2019 | Propose a solution for the main problem, a large number of involved features | Feature selection | — | — |
| [153] | 2019 | Aim to compare three dimensionality reduction methods | PCA, NMF, and SVD | 2 linguistic corpora for English and Arabic | — |
| [154] | 2019 | Aim to design a method for feature selection | Feature selection | NN, BBC, and OSAC | — |
| [155] | 2019 | Aim to introduce hybridization feature set methods | Hybridized feature set | Dark Web Forum Portal | F1-score and accuracy |
| [156] | 2020 | Aim to improve the feature selection method by merging the chi-square and artificial bee colony | Hybrid | BBC | F1-score |
| [157] | 2020 | Aim to improve and enhance the wrapper FS called the binary grey wolf optimizer | Grey wolf optimizer | Alwatan, Akhbar-Alkhaleej, and Al-Jazeera-News | Precision, recall, and F1-score |
| [158] | 2012 | Aim to strengthen AT categorization system utilizing feature selection | Synonyms merge technique | House Arabic documents | F1-score |

6.1. Feature Selection

In general, FS techniques fall into three categories: embedded, wrapper, and filter. In TC, filters are used most often because of the large number of features. Mesleh implemented a TC system using SVM with CHI and suggested other FS algorithms for future work [141]. Mesleh et al. collected an in-house dataset and used six FS techniques for ATC. Based on their experiments, they noted that FS is beneficial in increasing the accuracy of ATC [142]. Duwairi discussed three feature reduction approaches to improve accuracy in AT, comparing stemming, light stemming, and word clustering [51]. Bahassine et al. developed a new method for AT classification and applied CHI to improve classification accuracy and reduce the feature-space size [80]. Larabi Marie-Sainte and Alalyani implemented SVM with FS methods in numerous scenarios to study the classification of AT, noting that, due to the complexity of Arabic, this had not been done intensively. Their experiments were evaluated using metrics such as precision, recall, and F1-score [150]. Rashid et al. implemented FS to increase the accuracy of ATC systems, evaluated using precision, recall, and F1-score [151]. Belazzoug et al. showed that FS is important in enhancing the ATC system. They used BoW for representation, where the main problem was the large number of features [152].
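As an example of a filter method, the sketch below scores each term against a class with the chi-square (CHI) statistic computed from a 2×2 contingency table of term presence versus class membership, and keeps the top-scoring terms. The function names and the toy documents (English glosses are used for readability) are illustrative assumptions; the improved CHI variants in the cited works are not reproduced here.

```python
def chi_square(docs, labels, term, target):
    """Chi-square statistic between the presence of `term` and class `target`."""
    a = b = c = d = 0
    for tokens, label in zip(docs, labels):
        has_term = term in tokens
        in_class = label == target
        if has_term and in_class:
            a += 1          # term present, document in class
        elif has_term:
            b += 1          # term present, document outside class
        elif in_class:
            c += 1          # term absent, document in class
        else:
            d += 1          # term absent, document outside class
    n = a + b + c + d
    denom = (a + c) * (b + d) * (a + b) * (c + d)
    return 0.0 if denom == 0 else n * (a * d - b * c) ** 2 / denom


def select_top_k(docs, labels, target, k):
    """Rank all terms by chi-square for `target` and keep the best k."""
    vocab = sorted({tok for doc in docs for tok in doc})
    ranked = sorted(vocab,
                    key=lambda t: chi_square(docs, labels, t, target),
                    reverse=True)
    return ranked[:k]


docs = [["goal", "match"], ["goal", "team"], ["budget", "tax"], ["tax", "match"]]
labels = ["sport", "sport", "economy", "economy"]
selected = select_top_k(docs, labels, target="sport", k=2)
```

Terms that occur equally often inside and outside the class (here, "match") score zero and are discarded, while terms whose presence or absence strongly tracks the class are kept.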

6.2. Feature Extraction

Mohamed applied a new algorithm for extracting features and reducing the dimension. Principal component analysis (PCA), non-negative matrix factorization (NMF), and SVD were used with clustering approaches. Finally, he evaluated these three well-known techniques to demonstrate the advantages and disadvantages of each [153].
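To show what feature extraction by projection looks like, the sketch below reduces a small document-term matrix to one dimension by projecting each document onto the leading right singular vector. It uses plain power iteration rather than a full SVD routine, recovers only the first component, and all names and the toy matrix are assumptions made for illustration; it is not the procedure of the cited study.

```python
import math


def top_right_singular_vector(matrix, iterations=200):
    """Power iteration on A^T A: returns (singular value, right singular vector).

    Starts from a uniform vector, which is assumed not to be orthogonal
    to the leading singular vector.
    """
    n_rows, n_cols = len(matrix), len(matrix[0])
    v = [1.0 / math.sqrt(n_cols)] * n_cols
    for _ in range(iterations):
        # u = A v, then w = A^T u, so that w = (A^T A) v
        u = [sum(a * x for a, x in zip(row, v)) for row in matrix]
        w = [sum(matrix[i][j] * u[i] for i in range(n_rows))
             for j in range(n_cols)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    u = [sum(a * x for a, x in zip(row, v)) for row in matrix]
    sigma = math.sqrt(sum(x * x for x in u))
    return sigma, v


def project(matrix, v):
    """Project every row (document) onto the direction `v`."""
    return [sum(a * x for a, x in zip(row, v)) for row in matrix]


# Hypothetical document-term matrix: 3 documents, 4 terms.
doc_term = [
    [2.0, 1.0, 0.0, 0.0],
    [1.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 2.0],
]
sigma, direction = top_right_singular_vector(doc_term)
reduced = project(doc_term, direction)  # one value per document
```

Keeping the top k such directions instead of one gives the usual truncated-SVD representation, in which each document is described by k dense values instead of one value per vocabulary term.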

6.3. Optimization

Compared with other DR techniques, only a few works have explored optimization for ATC. Chantar et al. designed a new FS method to improve TC based on the grey wolf optimizer (GWO). This method is a wrapper-based FS [157].

6.4. Hybrid

Sabbah and Selamat presented a hybrid FS method to improve the TC system. They represented text using TF–IDF and used other techniques, such as PCA, to reduce the dimension [155]. Hijazi et al. created a novel FS technique that combines artificial bee colony (ABC) and CHI in two phases. The first phase used CHI, which has the advantages of being quick and easy to use; the second phase used ABC [156]. Chantar et al. proposed an ATC system that uses KNN and SVM to classify text and a hybridized binary particle swarm optimization (BPSO) to select features [157]. Thabtah et al. introduced TC using the NB algorithm based on the CHI feature selection method. They used several metrics for evaluation, such as macro-averaged F-measure, recall, and precision [143].

Hussein and Awadalla presented a TC system using different classification algorithms. Merging synonyms was used as a semantic FS method to reduce dimensionality [144]. Karima et al. proposed a conceptual representation for ATR, using AWN to map terms to concepts [96]. Zrigui et al. presented a conceptual representation for ATC in which AWN likewise maps terms to concepts [145]. Saad et al. developed a new strategy for reducing the number of features by merging semantic synonyms, thereby enhancing ATC [158]. Zaki et al. proposed a system for Arabic documents based on traditional models, applying N-grams with TF–IDF representation [147]. Abu-Errub implemented TF–IDF representation to classify documents into the right class and used the CHI method for FS [148].

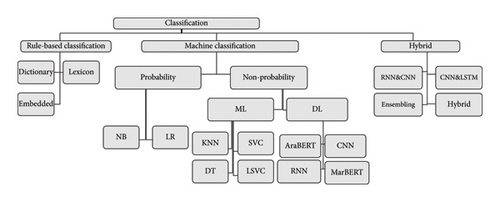

7. Classification Models

Once a text has been represented and the optimal features have been chosen, selecting a suitable classifier is a crucial task in ATC [15]. Many classification algorithms have been implemented in the ATC literature, as shown in Figure 6 and Table 5. One of the significant challenges in applying ML to low-resource languages such as Arabic is the limited availability of high-quality labeled datasets. Unlike widely studied languages such as English, Arabic suffers from data scarcity, particularly in specialized domains. Furthermore, the complexity of Arabic morphology, including rich inflection, derivation, and agglutination, poses additional difficulties in feature extraction and representation. Dialectal variations across different regions further complicate text classification, as models trained on Modern Standard Arabic (MSA) may struggle to generalize across various dialects. Additionally, the lack of standardized preprocessing techniques and annotated corpora makes it challenging to fine-tune models effectively. Addressing these issues requires the development of transfer learning approaches, data augmentation techniques, and hybrid models that can leverage both supervised and unsupervised learning methods to enhance performance in low-resource NLP tasks.

| Ref. | Year | Objective | Method | Dataset | Evaluation metrics |
|---|---|---|---|---|---|
| [159] | 2004 | Aim to classify Arabic web documents using NB | Naïve Bayes | Collected 300 web documents per category | Accuracy |
| [160] | 2006 | Aim to present a system for ATC | Rocchio classifier | Collected data corpus | — |
| [94] | 2006 | Aim to identify foreign words using three classification methods | Lexicons for AT | Collected dataset | — |
| [13] | 2007 | Aim to apply three algorithms for AT classification | KNN, Rocchio, and Naïve Bayes | 1445 documents | Accuracy, precision, recall, and F1-score |
| [161] | 2008 | Aim to classify AT documents using recognized statistical techniques | SVM and C5.0 | Seven corpora | Accuracy |
| [162] | 2008 | Aim to investigate different vector space models with the KNN algorithm | KNN | Collected | F1 |
| [22] | 2009 | Aim to classify Arabic documents using artificial neural network | SVD and neural networks | Hadith corpus | Accuracy, precision, recall, and F1-score |
| [163] | 2011 | Proposed to classify documents using lexicon and k-NN | Lexicon, ME, and k-NN | Not specified | Precision, recall, and F1-score |
| [164] | 2012 | Aim to apply different rule-based classification algorithms | Rule-based, DT (C4.5), rule induction (RIPPER), hybrid | Published corpus | Rule-based |
| [165] | 2012 | Aim to compare six well-known classifiers after applying feature selection | Naïve Bayes without FS and maximum entropy with information gain | Arabic datasets | Precision, recall, and F1-score |
| [146] | 2013 | Aim to apply feature selection to improve accuracy | Feature selection with the filter wrapper approach | Akhbar-Alkhaleej, Alwatan, and Al-Jazeera-News Arabic datasets | Precision, recall, and F1-score |
| [166] | 2014 | Aim to improve accuracy by using different classification algorithms | SVM, NB, and C4.5 | Arabic Wikipedia | Precision, recall, and F1-score |
| [167] | 2014 | Implemented the k-nearest neighbor (KNN) algorithm | KNN | Dataset contains 621 documents | Precision and recall |
| [66] | 2015 | An implementation of a Naïve Bayes classifier for classification | Naïve Bayes classifier | BBC Arabic corpus | — |
| [9] | 2016 | Aim to classify text using a graph-based approach | KNN, Rocchio, and Naïve Bayes algorithms | Corpus of 1084 documents | F1-score |
| [168] | 2016 | Aim to classify AT utilizing a hybrid method | Conditional random field and LSTM | Not specified | Precision, recall, and F1-score |
| [169] | 2017 | Aim to classify AT documents using different algorithms | Rules, NB, LR, and AdaBoost with bagging | CNN, BBC, and OSAC | Accuracy |
| [108] | 2017 | Aim to use DL for sentiment analysis | CBOW, Skip-Gram, and GloVe | ASTD and SemEval 2017 datasets | F1-score |
| [170] | 2017 | Aim to use neural networks and SVM and compare them | SVM and RNN | Arabic hotel reviews dataset | Accuracy and F1-score |
| [8] | 2018 | Aim to implement convolutional neural network (CNN) to classify AT from large datasets | CNN | Large dataset collection | Accuracy |
| [171] | 2018 | Aim to use a combination of CNNs and LSTMs | CNN–LSTM | Arabic health services (AHS) dataset | Accuracy |
| [172] | 2018 | Aim to design architectures to improve accuracy | CNN–LSTM | Task 1’s datasets | Accuracy |
| [173] | 2018 | Aim to classify text using different classification techniques | KNN, Naïve Bayes, and SVM | CNN dataset | Precision, recall, and F1-score |
| [174] | 2019 | Aim to combine LSTM with CNN | LSTM with CNN | LABR, ASTD | Accuracy |
| [175] | 2019 | Aim to classify documents using a convolutional GRU | Many models | Khaleej, Arabiya, and Akhbarona | Accuracy |
| [176] | 2019 | Aim to classify Hadith documents using DT, RF, and NB | DT, RF, and Naïve Bayes | Hadith dataset | Accuracy |
| [174] | 2019 | Aim to detect dialectal Arabic using deep learning | LSTM, CNN | LABR, ASTD | Accuracy |
| [177] | 2019 | Aim to classify text using polynomial neural network | Polynomial neural networks | Arabic dataset | Precision, recall, and F1-score |
| [178] | 2019 | Aim to classify text utilizing the narrow structure of CNN | Narrow convolutional neural network | Twitter datasets for dialect | Accuracy, precision, and F1-score |
| [179] | 2020 | Aim to represent text as an image-based character to classify a document | CNN1D | AWT and APD (created by the authors) | F1-score |
| [180] | 2020 | Aim to classify text based on deep autoencoder representations and bag-of-concepts | Deep autoencoder classifier | OSAC | Precision, recall, and F1-score |
| [181] | 2020 | Aim to classify AT documents by a combination of CNN and RNN | CNN and RNN | OSAC | Precision, recall, and F1-score |
| [182] | 2020 | Aim to use CNN, LSTM, and their combination for classification | CNN and LSTM | OSAC | F1-score |
| [183] | 2020 | Proposed methods to achieve very high accuracy using CNN | CNN | 15 different datasets | Accuracy |
| [184] | 2020 | Aim to use the CNN architecture with LSTM to classify AT | CNN–LSTM | LABR, ASTD, and ArTwitter | Precision, recall, F1-score, and accuracy |
| [185] | 2021 | Aim to compare four machine learning algorithms in the task of ATC | Artificial neural network, DT, and LR | AJGT, ASTD, Twitter | Precision, recall, F1-score, and accuracy |
| [186] | 2021 | Aim to classify AT utilizing two models, GRU and IAN-BGRU | SVM, KNN, J48, and DT based on gated recurrent units and an interactive attention network based on bidirectional GRU | Arabic hotel reviews dataset | Precision, recall, F1-score, and ROC (%) |

7.1. Rule-Based (Lexicon or Dictionary)

Rule-based classifiers make class decisions based on a set of “if…else” rules. Because these rules are simple to understand, such classifiers are commonly used to generate descriptive models. The condition following “if” is referred to as the antecedent, and the class predicted by each rule is referred to as the consequent. In rule-based SA, the rules are derived from studies conducted by language experts; the outcome is a set of rules (a lexicon or sentiment lexicon) according to which words are classified as either positive or negative. A dictionary-based (lexicon-based) SA system uses lists of words called lexicons, in which each word has been prescored for sentiment.
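The antecedent and consequent structure described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a system from the surveyed literature: the four-word `LEXICON`, the `NEGATORS` set, and the rule that a negator flips only the next token are all hypothetical choices made for the example.

```python
# Hypothetical toy lexicon: each word is prescored (+1 positive, -1 negative).
# Glosses, in order: excellent, beautiful, bad, poor.
LEXICON = {
    "ممتاز": 1,
    "جميل": 1,
    "سيئ": -1,
    "رديء": -1,
}

# Hypothetical negation particles; a negator flips the polarity of the next token.
NEGATORS = {"ليس", "غير", "لا"}


def classify(text):
    """Apply if/else rules over a prescored lexicon and return a class label."""
    score = 0
    negate = False
    for token in text.split():
        if token in NEGATORS:          # antecedent: token is a negator
            negate = True              # consequent: flip the next polarity
            continue
        polarity = LEXICON.get(token, 0)
        if polarity:                   # antecedent: token is in the lexicon
            score += -polarity if negate else polarity
        negate = False                 # negation scope ends after one token

    if score > 0:                      # final rules map the score to a class
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for sentence in ["الفندق ممتاز", "الفندق غير جميل", "الفندق كبير"]:
        print(sentence, "->", classify(sentence))
```

Exact string matching of this kind is sensitive to Arabic clitics and inflection, which is one reason lexicon-based systems are usually paired with stemming or other preprocessing.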

Different methods have been used under rule-based approaches, such as lexicons and dictionaries. ATC systems apply these rules through string comparisons against the text for some tasks. Only a few researchers have used this approach. Nwesri et al. introduced various algorithms to identify foreign words using lexicons, patterns, and N-grams, and they found that the lexicon approach performed best [94]. Thabtah et al. conducted in-depth research on the problem of ATC and evaluated the efficacy of different rule-based classification algorithms [164].

7.2. Classification Using ML Algorithm

ML and DL approaches achieve state-of-the-art results on ATC. In this section, we explore the related work regarding ATC.

7.2.1. Probability

El Kourdi et al. studied a statistical ML algorithm based on NB to classify nonvocalized AT. The NB categorizer was evaluated using cross-validation trials [159]. Yousif et al. applied NB to classify texts, utilizing WordNet for representation and comparing different stemmers [66]. Syiam et al. presented a Rocchio classifier for TC, which outperformed KNN. They also combined DR techniques such as stemming and FS to reduce the cost of the classification process [160].
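As a rough illustration of the NB approach discussed above, the following Python sketch implements a multinomial Naïve Bayes classifier with add-one (Laplace) smoothing over whitespace tokens. It is not the categorizer of [159] or [66]; the class name `NaiveBayes`, the whitespace tokenization, and the choice to skip words outside the training vocabulary are assumptions made for the example.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes with add-one smoothing (illustrative sketch)."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)       # documents per class
        self.word_counts = defaultdict(Counter)   # word counts per class
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(doc.split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, doc):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log P(class) + sum of log P(word | class), computed in log space
            score = math.log(n_docs / total_docs)
            for word in doc.split():
                if word in self.vocab:  # assumption: ignore unseen words
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    train_docs = ["مباراة كرة القدم", "فريق كرة السلة", "سوق الأسهم", "أسعار النفط"]
    train_labels = ["sport", "sport", "economy", "economy"]
    model = NaiveBayes().fit(train_docs, train_labels)
    print(model.predict("كرة"))
```

Working in log space avoids numerical underflow on long documents, and the smoothing term keeps a single unseen word from driving a class probability to zero.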

7.2.2. Nonprobability

- Traditional ML: Al-Harbi et al. classified AT documents from seven corpora using recognized statistical techniques. Their method improved performance by applying FS with SVM and C5.0 classifiers, and they concluded that C5.0 provides superior accuracy [161]. Mohammad et al. used a polynomial neural network for TC and reported successful outcomes [177]. Harrag and El-Qawasmah built a neural network for ATC and applied singular value decomposition to improve accuracy and reduce error [22]. Thabtah et al. studied different representation methods, such as term weighting approaches, with the KNN algorithm for classification, using the F1 measure for comparison [162]. El-Halees combined three approaches to classify Arabic documents, applying them in sequence: lexicon, ME, and k-NN [163].

- DL: Gridach introduced a new architecture that represents text at both the character level and the word level for named entity recognition. The vanishing-gradient problem arises with long sequences, particularly in tasks like text classification, making it difficult for models to learn long-range dependencies. The OOV problem also remains, because word-level embeddings cannot represent words that were not seen during training [168]. Abu Kwaik et al. investigated DL techniques to detect dialectal AT using an architecture based on word-level representations. Their experiments reached an accuracy of 81% on the LABR dataset and 85.58% on the ASTD dataset [174]. Abuhaiba and Dawoud proposed combining rules followed by two classification stages for ATC [169]. Gridach et al. proposed a DL system for SA using CBoW, Skip-Gram, and GloVe for representation [108]. Alayba et al. combined CNN and LSTM networks for Arabic sentiment classification; because of the complexity of Arabic morphology and orthography, they also investigated the usefulness of applying SA at various levels [171]. Abdullah et al. described a system to detect and classify Arabic tweets utilizing word and document embeddings. They used a combined CNN–LSTM for the classification task [172]. Elnagar et al. used Word2Vec embeddings trained on the Wikipedia corpus for text classification and reported an accuracy of 91.18% with a convolutional GRU on the SANAD corpus. However, normalizing the letters (أإآ) to the letter (ا) can change the meaning in some cases; for example, “فأر” (meaning “mouse”) becomes “فار” (meaning “escaped”) [175]. Finally, their work filters all characters according to whether they belong to the Arabic alphabet and eliminates non-Arabic characters, which can cause confusion when the text includes other languages such as Urdu. Abu Kwaik et al. proposed a new TC model that combines LSTM and CNN to detect dialectal AT [174].
Daif et al. presented a DL structure for AT document classification using image-based characters, in which each Arabic character is represented as a 2D image. They trained their model end to end with a weighted class loss function to mitigate class imbalance, and they produced the AWT and APD datasets to evaluate it [179]. El-Alami et al. proposed an AT categorization method based on bag-of-concepts and deep autoencoder representations, using Arabic WordNet to address the limitations of explicit knowledge in semantic vocabularies. Their method combines implicit and explicit semantics and reduces feature space dimensionality. They achieved their best results of 94% and 93% for precision and F-measure, respectively. However, their method still suffers from complex preprocessing and does not properly handle the vocabulary level. Finally, it does not address ambiguity in the Arabic language; sense embedding techniques could be used to enhance the system’s performance [123, 180]. Ameur et al. proposed a combination of CNN and RNN for AT document categorization using static, dynamic, and fine-tuned word embeddings. The CNN model automatically learns the most meaningful representations from the Arabic word embedding space. They evaluated their proposed DL model using the OSAC dataset. Compared with the individual CNN and RNN models, their hybrid model improved overall ATC performance. Their approach has some limitations, such as normalization that replaces some letters with another form, which changes the meaning in some cases; for example, “كرة” (meaning “ball”) becomes “كره” (meaning “hate”) [181]. El-Alami et al. studied a hybrid of DL models (CNNs and LSTM) that shows promise for huge datasets. They addressed issues such as polysemous terms by proposing a method for capturing contextual meaning that employs embeddings and word sense disambiguation [182].
Alhawarat and Aseeri proposed a CNN model for ATC that produced good results on 15 freely available datasets, although it takes a long time to train compared with ML approaches [183]. Ombabi et al. proposed a DL model for Arabic SA that combines a one-layer CNN architecture with two LSTM layers, using FastText word embeddings as the input layer [184]. Al-Smadi et al. showed that an SVM approach outperforms an RNN approach on Arabic hotel reviews [170]. Alali et al. proposed a narrow CNN to classify tweets. A sensitivity study was carried out to assess the influence of different combinations of structural features [178].
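The normalization limitation noted above for [175] and [181] can be reproduced with a short Python sketch. The mapping tables and the helper names `normalize` and `collisions` are illustrative assumptions rather than the preprocessing code of any surveyed system; Unicode escapes are used so that the exact code points are unambiguous.

```python
from collections import defaultdict

# Alef with hamza above (U+0623), hamza below (U+0625), and madda (U+0622)
# are folded to bare alef (U+0627).
ALEF_MAP = {"\u0623": "\u0627", "\u0625": "\u0627", "\u0622": "\u0627"}
# Ta marbuta (U+0629) is folded to ha (U+0647).
TA_MARBUTA_MAP = {"\u0629": "\u0647"}


def normalize(text, fold_alef=True, fold_ta_marbuta=True):
    """Apply the selected letter-folding rules to the text."""
    mapping = {}
    if fold_alef:
        mapping.update(ALEF_MAP)
    if fold_ta_marbuta:
        mapping.update(TA_MARBUTA_MAP)
    return text.translate(str.maketrans(mapping))


def collisions(words, **options):
    """Group distinct words that become identical after normalization."""
    groups = defaultdict(set)
    for word in words:
        groups[normalize(word, **options)].add(word)
    return {key: members for key, members in groups.items() if len(members) > 1}


if __name__ == "__main__":
    vocabulary = [
        "\u0641\u0623\u0631",  # fa + alef-hamza + ra ("mouse")
        "\u0641\u0627\u0631",  # fa + alef + ra
        "\u0643\u0631\u0629",  # kaf + ra + ta marbuta
        "\u0643\u0631\u0647",  # kaf + ra + ha
    ]
    for normalized, originals in collisions(vocabulary).items():
        print(normalized, "<-", sorted(originals))
```

Running `collisions` over a corpus vocabulary before enabling a folding rule gives a quick estimate of how many distinct words that rule would merge, which can inform whether to apply it for a given dataset.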

7.3. Hybrid