MCOA: A Multistrategy Collaborative Enhanced Crayfish Optimization Algorithm for Engineering Design and UAV Path Planning

Abstract

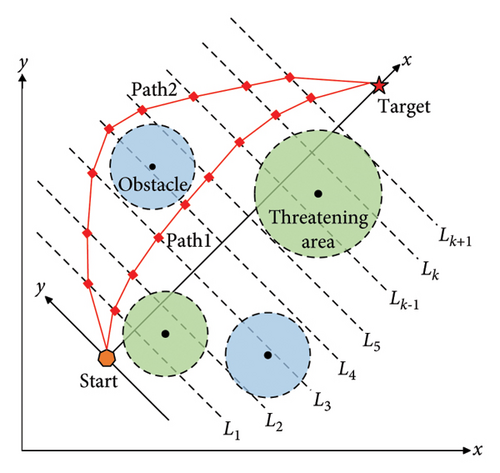

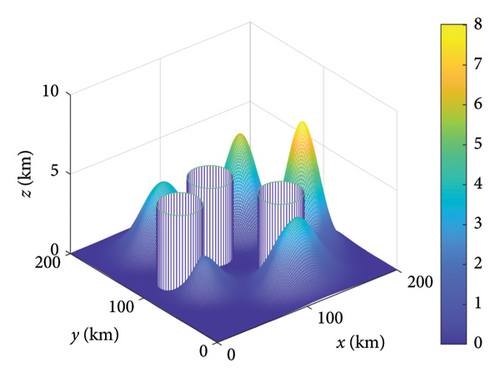

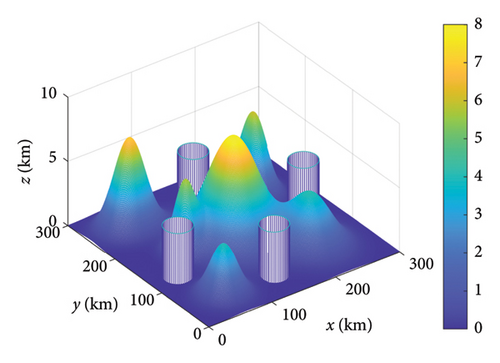

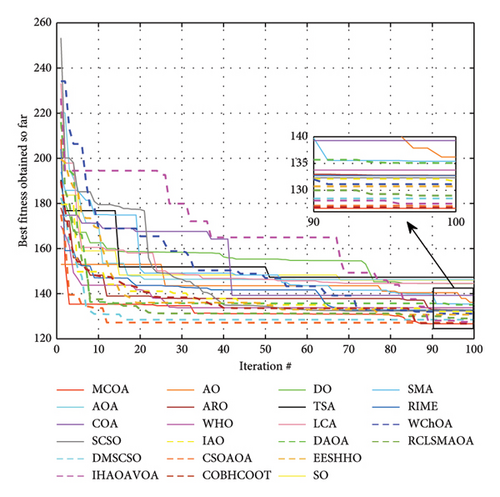

The crayfish optimization algorithm (COA) is a recent bionic optimization technique that mimics the summer sheltering, foraging, and competitive behaviors of crayfish. Although COA has outperformed some classical metaheuristic (MH) algorithms in preliminary studies, it still suffers from stagnation in local optima, slow convergence, and an imbalance between exploration and exploitation when addressing intractable optimization problems. To alleviate these limitations, this study introduces a modified crayfish optimization algorithm with multiple search strategies, abbreviated as MCOA. First, specular reflection learning is implemented in the initial iterations to enrich population diversity and broaden the search scope. Then, the location update equation in the exploration procedure of COA is replaced by the expanded exploration strategy adopted from the Aquila optimizer (AO), giving the proposed algorithm more efficient exploration. Subsequently, the motion characteristics of Lévy flight are embedded into local exploitation to help the search agent converge more efficiently toward the global optimum. Finally, a vertical crossover operator is designed to prevent trapping in local optima and to balance exploration and exploitation more robustly. MCOA is compared against twelve advanced optimization algorithms and nine similar improved variants on the IEEE CEC2005, CEC2019, and CEC2022 test sets. The experimental results demonstrate the reliable optimization capability of MCOA, which achieves the minimum Friedman average ranking values of 1.1304, 1.7000, and 1.3333 on the three test benchmarks, respectively. In most test cases, MCOA outperforms the other comparison methods in solution accuracy, convergence speed, and stability. The practicality of MCOA is further corroborated through its application to seven engineering design problems and unmanned aerial vehicle (UAV) path planning tasks in complex three-dimensional environments. Our findings underscore the competitive edge and potential of MCOA for real-world engineering applications. The source code for MCOA can be accessed at https://doi.org/10.24433/CO.5400731.v1.

1. Introduction

Optimization pertains to determining the optimal solution for a given problem under certain constraints [1]. With the technological advances driven by artificial intelligence, optimization problems are emerging in various research domains, and how to address them efficiently has become a subject of active discussion among scholars [2, 3]. Existing optimization techniques can be classified into traditional methods and metaheuristic (MH) algorithms [4]. Traditional methods primarily rely on specific mathematical rules, such as gradient descent, quasi-Newton techniques, and Levenberg–Marquardt [5]. These methods require gradient information and invariably produce the same solution for a given input, so they are only suitable for solving some small-scale optimization problems at a theoretical level [6]. However, real-world engineering optimization problems are becoming increasingly complex and exhibit nondifferentiable, discontinuous, and nonlinear characteristics [7], leaving traditional methods almost powerless when confronted with such challenges. As research deepens, the MH algorithm, a gradient-free, problem-independent, and efficient stochastic optimization technique, has garnered prominent attention because it can find optimal or near-optimal solutions for highly complicated nonlinear optimization problems within a reasonable time frame [8]. In addition, MH algorithms offer a simple structure, good adaptability, and robust local optimum avoidance [9]. Consequently, they are regarded as an ideal alternative to traditional methods and are becoming increasingly prevalent in scientific and engineering applications.

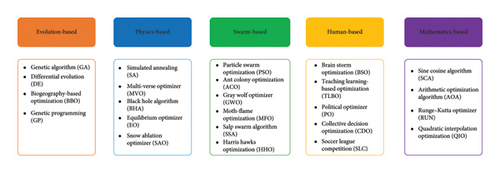

MH algorithms are inspired by natural phenomena, physical laws, or collective behaviors, and they can be grouped into five main categories [10]: evolutionary, physical, swarm-based, human-based, and mathematics-based algorithms, as shown in Figure 1. Evolutionary algorithms, like genetic algorithm (GA) [11], have a protracted development history and focus on natural selection and genetics. Physics-based algorithms derive their inspiration from chemical reaction processes and physical phenomena such as magnetism, attraction, and gravity. One well-known instance is simulated annealing (SA) [12], inspired by the principles of metallurgical annealing, which directs the convergence of the initial solution toward the global optimum according to the temperature distribution. The third category relates to the collective behaviors of birds, insects, animals, and other natural organisms referred to as swarm-based algorithms. Particle swarm optimization (PSO) [13] is a famous swarm-based optimization paradigm, which iteratively updates candidate solutions by emulating the foraging behavior observed in bird flocks. These algorithms are highly successful due to their ability to balance exploration and exploitation, allowing for efficient search across complex solution spaces. They are adaptable to various problem types, computationally efficient, and robust against local optima, making them reliable for diverse optimization tasks. Additionally, their self-organizing nature enhances their ability to handle distributed and dynamic environments. Human-based algorithms, including brain storm optimization (BSO) [14], are inspired by human social behavior, and mathematics-based algorithms, like sine cosine algorithm (SCA) [15], leverage mathematical principles for optimization.

1. COA encompasses two exploitation phases, undermining its exploration capability. In the early iterations, COA maintains a high propensity to exploit, allowing for rapid convergence. Nonetheless, in later iterations, the exploration performance of COA is relatively feeble, making it susceptible to becoming trapped in local optima, thereby yielding suboptimal results. Consequently, there is a need to improve COA by adopting multiple strategies to establish a better exploration–exploitation balance and enhance stability so that it can cope with higher complexity and high-dimensional engineering optimization challenges.

2. As the dimensionality and nonlinear constraints escalate, MH algorithms manifest a prevalent tendency of sluggish convergence and unsatisfactory solution accuracy for engineering optimization. While introducing a search operator can somewhat enhance the algorithm’s performance, it may indirectly impair its search capability in other facets. Therefore, multiple strategies can be adopted to synergistically reinforce the performance of MH algorithms from different aspects to confront complex optimization problems.

3. According to the no free lunch (NFL) theorem [21], no single algorithm can guarantee the optimal solution for all optimization problems. Continuous development and enhancement of MH algorithms are necessary to provide more effective solutions for engineering optimization problems.

- •

We propose MCOA that integrates four improvement strategies: specular reflection learning for broader search, expanded exploration mechanism for enhanced global exploration, Lévy flight for efficient local search, and vertical crossover operator that helps stagnant individuals escape local optima.

- •

The performance of MCOA is comprehensively evaluated on 23 benchmark functions and the CEC2019/2022 test sets, comparing it with state-of-the-art MH algorithms across different dimensions (D = 50, 100, 500, 1000) to demonstrate its scalability.

- •

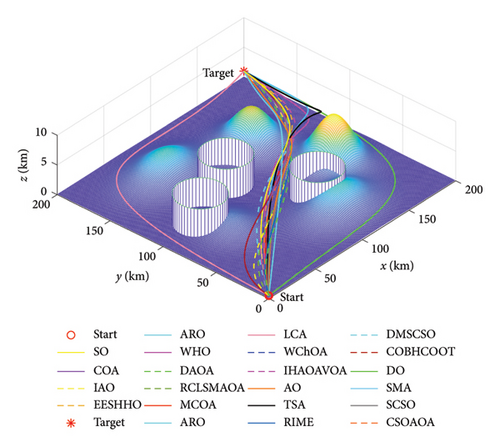

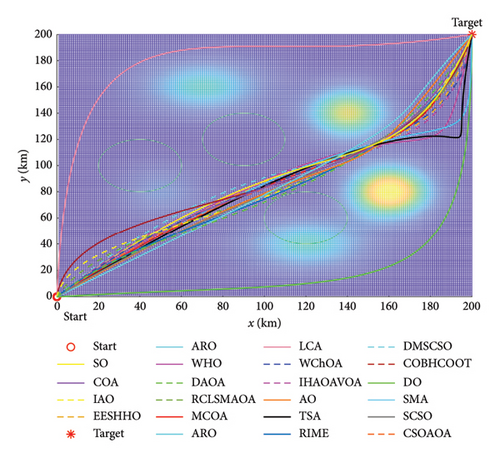

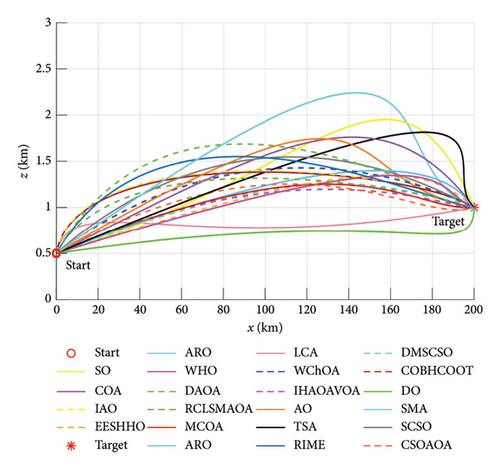

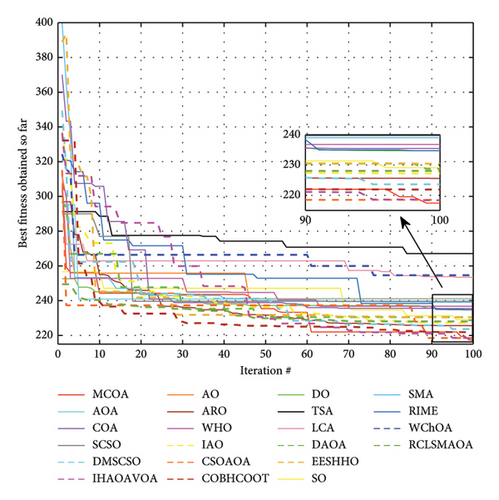

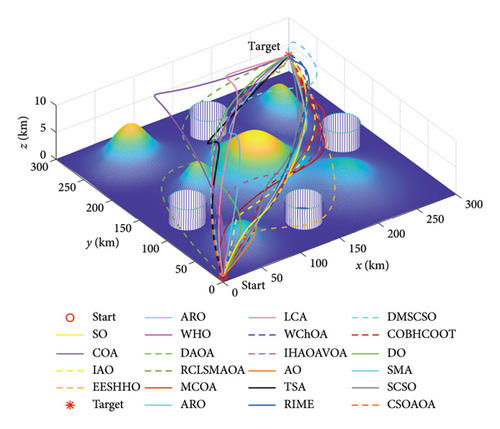

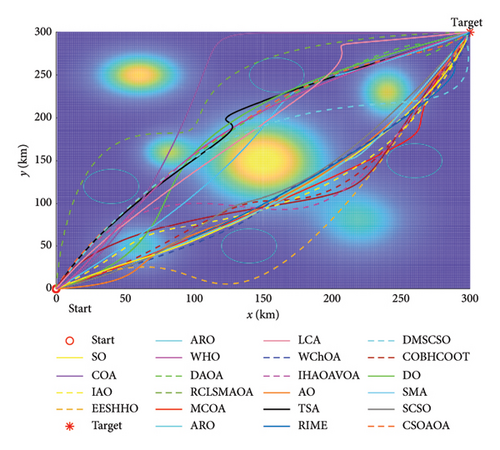

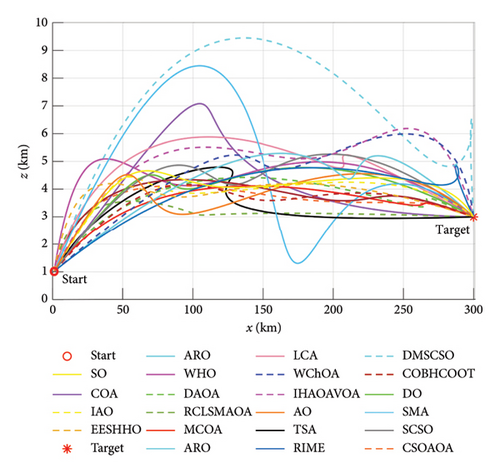

The applicability of MCOA is validated through seven constrained engineering design problems and UAV path planning cases, with experimental results confirming its superior convergence accuracy and stability even when facing highly complex optimization challenges.

The structure of this paper is as follows. Section 2 presents a literature review. Section 3 outlines the mathematical model of COA and the principles of the four improvement strategies. In Section 4, a detailed description of the proposed MCOA is provided, and its time complexity is analyzed. Section 5 compares the numerical optimization performance of MCOA with various well-established optimizers. MCOA is applied to address engineering design and UAV path planning problems in Section 6. Section 7 concludes this study.

2. Literature Review

Exploration and exploitation are indispensable components of MH algorithms. Exploration aims to comprehensively search the problem domain to uncover superior candidate solutions, while exploitation executes local searches within identified promising regions to converge toward the global optimum. Maintaining an appropriate balance between exploitation and exploration is crucial for an optimizer, as it directly determines its optimization performance. Notwithstanding the excellent flexibility and viability exhibited by MH algorithms in addressing optimization projects, due to the increasing complexity of emerging challenges in various fields, MH algorithms are inevitably susceptible to deficiencies such as sluggish convergence speed, suboptimal solution accuracy, and the propensity to become trapped in local optima [22]. The same is true for COA. Therefore, further enhancements to the original algorithm’s search performance are requisite. Wang et al. [23] proposed an improved multistrategy COA (IMCOA), which integrates the cave candidate strategy, fitness–distance balance competition strategy, optimal nondominance search strategy, and food covariance learning strategy. Compared to eight other algorithms, IMCOA can provide more reliable numerical results when solving three engineering design benchmarks. Zhong et al. [24] designed a hybrid remora crayfish optimization algorithm (HRCOA) for continuous optimization. HRCOA introduces exploitation operators from the remora optimizer to strengthen the exploitation behavior while reducing the complexity of the summer resort operator. On the CEC2020 and CEC2022 benchmark functions, as well as wireless sensor network coverage problems, HRCOA demonstrates superior convergence speed and accuracy over competing methods. To address the limitations of premature and insufficient exploitation capability, Maiti et al. [25] hybridized differential evolution (DE) with COA to develop the HCOADE algorithm. 
Through extensive experiments involving 34 benchmark functions and six engineering design problems, HCOADE exhibits robust global optimization performance with faster convergence. Wei et al. [26] presented a multistrategy fusion COA algorithm, namely JLSCOA, based on the subtraction averaging strategy, the sparrow search position update operator, and Lévy flight, which is applied to tune the parameters of the terminal sliding mode controller. Compared with the original COA, experimental results indicate that JLSCOA achieves the optimal value in 83% of scenarios. Elhosseny et al. [27] introduced an adaptive dynamic crayfish algorithm (AD-COA-L) with the local escape operator and lens opposition–based learning, successfully improving the stability in dealing with complex optimization challenges.

In this study, four strategies are adopted to augment the optimization performance of the original COA from multiple aspects, namely, specular reflection learning, expanded exploration strategy, Lévy flight, and vertical crossover operator. These strategies have been leveraged in many studies on MH algorithms, yielding substantial effects. For instance, El-Hameed et al. [28] incorporated specular reflection learning into the hunger games search to balance exploration and exploitation, thus enabling more accurate and stable gain regulation of proportional–integral–derivative (PID) controllers for load frequency control systems. Similarly, Adegboye et al. [29] proposed a modified gray wolf optimizer (CMWGWO) that employs specular reflection learning to enhance population diversity, allowing the algorithm to discover higher-quality inverse candidate solutions. As its name reveals, the expanded exploration operator focuses on boosting exploration and accelerating convergence. Consequently, Ma et al. [30] embedded the exploration mechanism into the position update formulation of GWO to design a hybrid Aquila gray wolf optimizer (AGWO), which can explore the search domain of complex high-dimensional problems more thoroughly. Wang et al. [31] proposed an enhanced hybrid Aquila optimizer and marine predator algorithm (EHAOMPA), which merges the exploration strengths of AO with the exploitation mechanisms of MPA, resulting in better numerical optimization performance for combinatorial problems. Lévy flight is a random walk with step sizes that follow a heavy-tailed distribution, allowing for occasional large jumps. This strategy enhances search efficiency by enabling the algorithm to explore distant regions of the solution space, thus improving convergence toward global optima. 
In [32], Lévy flight is utilized to guide the search agent through extensive exploration in the problem domain to quickly locate the target solution, alleviating the premature convergence of the artificial hummingbird algorithm. Additionally, He et al. [33] adopted Lévy flight to substitute the random step size of search individuals, endowing the butterfly optimization algorithm with superior local optima avoidance. The final strategy, vertical crossover operator, addresses the critical issue of search stagnation. In the enhanced rime optimization algorithm (CCRIME) developed by Zhu et al. [34], implementing the vertical crossover operation during the latter exploration phases promotes information exchange across diverse dimensions, significantly augmenting the algorithm’s local optima avoidance while boosting convergence accuracy. To address stagnation in search agents and sustain their exploration behavior, Chen et al. [35] suggested an improved shuffled frog leaping algorithm with a vertical crossover operator (HVSFLA), which showcases great potential in medical image segmentation.

This study proposes a multistrategy fusion COA (MCOA) to overcome the inherent limitations of traditional COA, providing more robust and adaptable solutions for complex optimization challenges. Distinguishing itself from existing literature, MCOA undergoes comprehensive evaluation through a wider spectrum of comparative algorithms, including both standard and improved variants. Our benchmarks incorporate 23 classical functions, 10 CEC2019 functions, and 12 CEC2022 functions, enabling performance assessment across diverse numerical optimization scenarios. Furthermore, MCOA is applied to solve multiple real-world constrained engineering design problems and UAV path planning tasks. The broader range of benchmark functions and real-world applications highlight the significant progress MCOA has made in the optimization field, demonstrating its superior performance and versatility.

3. Preliminaries

3.1. COA

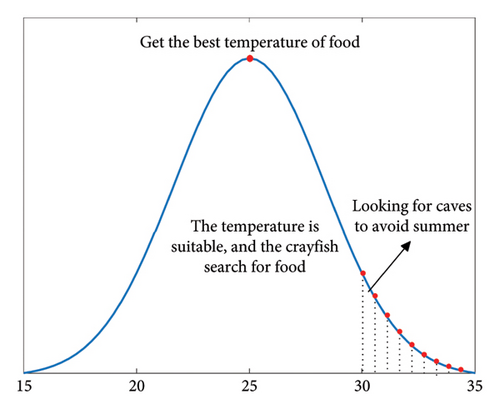

COA is a cutting-edge swarm intelligence optimization technique that mimics the summer sheltering, foraging, and competition behaviors of crayfish. As a eurythermal aquatic organism, the ideal temperature range for crayfish growth is between 15°C and 30°C, with the most favorable survival temperature being 25°C. Crayfish habitually dig burrows to evade predators and escape the summer heat. These burrows serve as shelters against natural enemies, prevent desiccation, and provide suitable conditions for hatching. During foraging, crayfish typically use their claws to seize and tear apart larger food items before transferring them to their second and third legs for further manipulation. When dealing with small food, crayfish directly capture and bite using their second and third walking feet. Based on these biological traits, the mathematical model of COA is developed, which consists of initialization, temperature definition, summering phase (exploration), competition phase (exploitation), and foraging phase (exploitation).

3.1.1. Initialization

3.1.2. Temperature Definition

3.1.3. Summer Resort Phase

3.1.4. Competition Phase

3.1.5. Foraging Phase

The pseudocode for COA is outlined in Algorithm 1.

Algorithm 1: Crayfish optimization algorithm (COA).

Input: Maximum iteration number Tmax, population size N, dimension size D, current iteration t = 1

1. Initialize the position of each crayfish using equation (2)
2. Evaluate the fitness value of all crayfish individuals to obtain the best solution found so far and the best position of the current population
3. While t ≤ Tmax do
4. Calculate the current temperature value Temp using equation (3)
5. For each crayfish i = 1, 2, …, N do
6. If Temp > 30 then
7. Calculate the location of the cave according to equation (5)
8. If rand < 0.5 then
9. Update the current position of the crayfish using equation (6)
10. Else
11. Update the current position of the crayfish using equation (8)
12. End If
13. Else
14. Define the food intake p and food size Q using equations (4) and (11), respectively
15. If Q > (C3 + 1)/2 then
16. Update the current position of the crayfish using equation (13)
17. Else
18. Update the current position of the crayfish using equation (14)
19. End If
20. End If
21. End For
22. Calculate the fitness value of each individual
23. Update the best solution found so far and the best position of the current population
24. t = t + 1
25. End While

Return: Global optimal solution

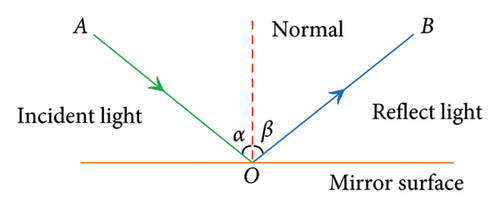

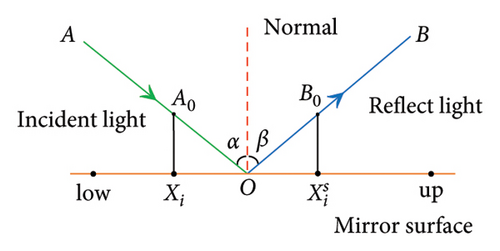

3.2. Specular Reflection Learning

Opposition-based learning (OBL) serves as a robust search mechanism that concurrently evaluates the fitness values of the current solution alongside its opposite solution. This approach then filters out the superior candidate solution for inclusion in subsequent iterations [36]. Drawing inspiration from the concept of specular reflection, Zhang [37] introduced a specular reflection learning strategy by combining OBL with the specular reflection law. Unlike the one-to-one corresponding relationship between a solution and its opposite solution in OBL, specular reflection learning entails a correspondence between one solution and one neighborhood of its opposite solution.

The initial positions of the population are generated using a randomized distribution mode. However, if the initial solution is distant from the global optimum or situated in the opposite direction, it may result in prolonged convergence time or even stagnation of the algorithm. In the early iterations, specular reflection learning facilitates a broader bidirectional simultaneous exploration of the solution space and increases the probability of finding the optimal solution.
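As a minimal sketch of the basic opposition idea described above, the following Python snippet mirrors a solution across the centre of its bounds and keeps the better of the two. It illustrates plain OBL only; the specular reflection formula of equation (19), which maps a solution to a neighborhood of its opposite, is not reproduced in this section and is not implemented here. The names `opposite` and `obl_select` and the sphere objective are illustrative assumptions.

```python
def opposite(x, lb, ub):
    """Classic opposition-based point: mirror each coordinate across the centre of [lb, ub]."""
    return [l + u - xi for xi, l, u in zip(x, lb, ub)]


def obl_select(x, lb, ub, fitness):
    """Evaluate x and its opposite, and keep the one with the lower (better) fitness."""
    x_opp = opposite(x, lb, ub)
    return x_opp if fitness(x_opp) < fitness(x) else x


def sphere(x):
    """Illustrative minimization objective (assumption, not taken from the paper)."""
    return sum(v * v for v in x)


# The optimum (the origin) lies on the far side of the search box from x,
# so the opposite point is closer to it and is retained.
lb, ub = [-10.0, -10.0], [30.0, 30.0]
x = [25.0, 28.0]
best = obl_select(x, lb, ub, sphere)
print(best)
```

When the initial point lies on the wrong side of the search range, as in this example, the opposite point wins the comparison, which is the situation the paragraph above describes.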

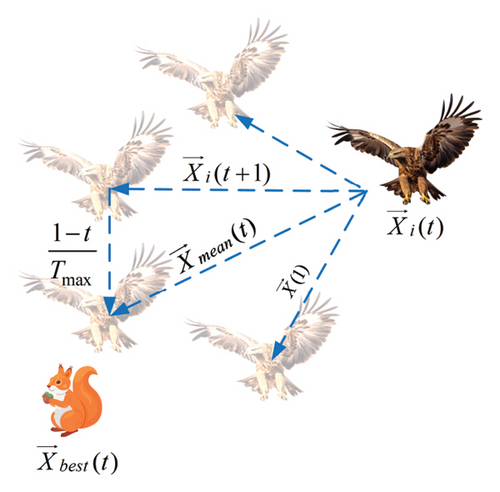

3.3. Expanded Exploration Strategy

In COA, search agents in early iterations use equation (6) to conduct global exploration and identify areas where the global optimum may be present. This stage simulates crayfish moving toward the cave for the summer resort, where the current position is updated based on the best solution found so far and the best position of the current population. However, because of the limited spatial information and insufficient population diversity, this approach cannot swiftly guide the algorithm into the correct search domain, so the exploration capability of COA still needs to be strengthened. In contrast, the exploration procedure of AO directly incorporates the global optimal position, allowing individuals to execute Aquila’s fast flight and predation maneuvers in the search space with faster convergence and better exploration competence. We therefore replace the original position update formula (equation (6)) with the expanded exploration strategy (equation (20)), aiming for a more stable exploration–exploitation balance and faster convergence.
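The following Python sketch shows one way the expanded exploration step could look. It assumes the update has the form commonly given for the Aquila optimizer, X_new = X_best × (1 − t/Tmax) + (X_mean − X_best × rand), where X_mean is the mean position of the population; the exact form of equation (20) is not reproduced in this section, so this should be read as an assumption rather than the authors' formula. The function name and the `rng` parameter are hypothetical.

```python
import random


def expanded_exploration(population, best, t, t_max, rng=random):
    """Assumed AO-style expanded exploration:
    X_new = X_best * (1 - t / t_max) + (X_mean - X_best * rand).
    """
    n = len(population)
    dim = len(best)
    # Mean position of the whole population, one value per dimension.
    mean_pos = [sum(ind[d] for ind in population) / n for d in range(dim)]
    new_population = []
    for _ in population:
        r = rng.random()  # one random scalar in [0, 1) per individual
        new_population.append(
            [best[d] * (1 - t / t_max) + (mean_pos[d] - best[d] * r) for d in range(dim)]
        )
    return new_population


population = [[0.0, 0.0], [2.0, 4.0]]
best = [1.0, 2.0]
print(expanded_exploration(population, best, t=1, t_max=2))
```

Note that the new position depends on the best position and the population mean rather than on the individual's own current position, which is what lets this step relocate agents quickly in early iterations.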

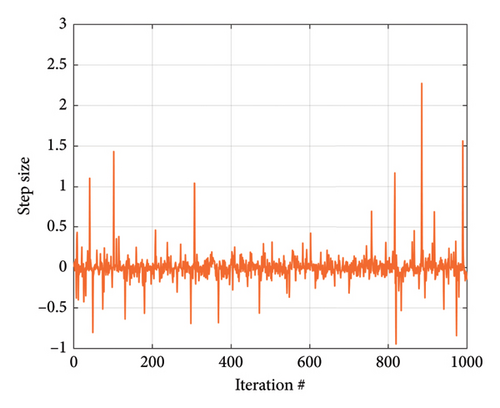

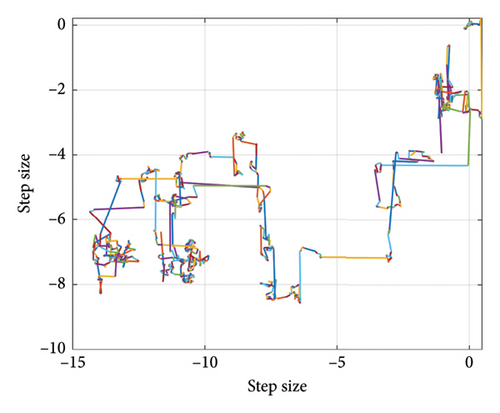

3.4. Lévy Flight

Lévy flight is a non-Gaussian stochastic walk method with step sizes taken from the Lévy probability distribution [39]. Figure 5 visualizes the distribution and 2D path of Lévy flight. Initially, the particle undergoes local movement, frequently taking numerous short-distance steps, and then occasionally embarks on a large step and commences a new cycle.
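A common way to draw such heavy-tailed steps is Mantegna's algorithm, sketched below in Python. The stability index β = 1.5 is a frequently used default and is an assumption here, as are the function names; how the step enters the position update of equation (22) is not reproduced in this section and is not shown.

```python
import math
import random


def mantegna_sigma(beta=1.5):
    """Standard deviation of the numerator variable in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """Draw one Lévy-distributed step: u / |v|^(1/beta), u ~ N(0, sigma^2), v ~ N(0, 1)."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


rng = random.Random(0)
steps = [levy_step(rng=rng) for _ in range(1000)]
# Most steps are short, while a few are much larger than the typical one.
typical = sorted(abs(s) for s in steps)[len(steps) // 2]
largest = max(abs(s) for s in steps)
print(typical, largest)
```

Comparing the median step length with the largest one makes the pattern described above visible: many short moves interrupted by occasional long jumps.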

3.5. Vertical Crossover Operator

Here, F(·) indicates the fitness function. By using the vertical crossover strategy to perturb the position of the search agent, the algorithm’s local optima avoidance ability is enhanced, thereby further boosting convergence accuracy.
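A minimal single-agent sketch of a vertical crossover step is given below in Python. It assumes the form used in crisscross-style optimizers: one dimension is replaced by a random convex combination of itself and another dimension, and the offspring is kept only if the fitness function F improves. The exact form of equations (26) and (27) is not reproduced in this section, so the function name, the greedy selection, and the omission of any dimension normalization are assumptions.

```python
import random


def vertical_crossover(x, fitness, rng=random):
    """Cross two randomly chosen dimensions of one agent and keep the offspring only if better."""
    d1, d2 = rng.sample(range(len(x)), 2)  # two distinct dimensions
    r = rng.random()
    candidate = list(x)
    # Only dimension d1 is modified, so the other dimensions keep their current values.
    candidate[d1] = r * x[d1] + (1 - r) * x[d2]
    return candidate if fitness(candidate) < fitness(x) else list(x)


def sphere(x):
    """Illustrative minimization objective (assumption, not taken from the paper)."""
    return sum(v * v for v in x)


x = [3.0, 0.0]
child = vertical_crossover(x, sphere, rng=random.Random(1))
print(child, sphere(child))
```

Because only one dimension changes at a time, a dimension that has stagnated can be moved without disturbing dimensions that are already close to their optimal values.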

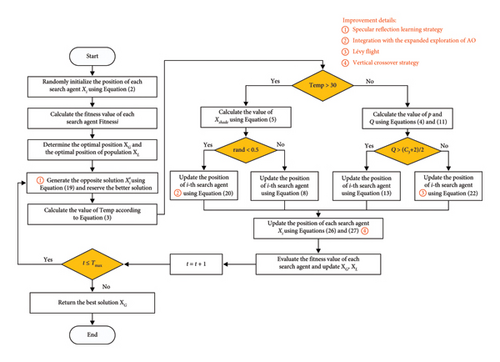

4. Proposed MCOA Algorithm

4.1. Detailed Implementation Steps for MCOA

1. Specular reflection learning is introduced in the early iterations to enrich the population diversity and expand the search area.

2. The expanded exploration strategy of AO is integrated with COA to replace its original position update formula, which endows the proposed method with better exploration capability and faster convergence speed.

3. The Lévy flight step is embedded into the exploitation phase to improve the search efficiency in the neighborhood domain.

4. The vertical crossover operator is applied in later iterations to randomly cross-perturb individual dimensions to further improve the solution accuracy.

Initialization

- Step 1: Parameter setting: maximum number of iterations Tmax, population size N, variable dimension D, and search range [LB, UB]. Set the current iteration number t = 1.

- Step 2: Initialize the position of each search agent in the search space using equation (2).

- Step 3: Evaluate the fitness value Fitnessi (i = 1, 2, …, N) of each search agent, and record the optimal solution obtained so far and the optimal location of the current population.

Iteration

- Step 4: While t ≤ Tmax, perform specular reflection learning based on equation (19) to generate the corresponding opposite candidate solution for each individual, and retain the better of the two for the subsequent procedure.

- Step 5: Calculate the ambient temperature value Temp using equation (3) to determine which position update strategy is adopted next.

- Step 6: If Temp > 30 and rand < 0.5, MCOA enters the exploration phase. The location of each search individual is updated using equation (20). Note that the best position appearing in equation (20) is the same as the optimal solution obtained so far.

- Step 7: If Temp > 30 and rand ≥ 0.5, MCOA executes the competition exploitation strategy by updating the location of each search individual via equation (8).

- Step 8: When Temp ≤ 30, MCOA performs the foraging exploitation strategy. Define the food intake p and food size Q using equations (4) and (11). If Q > (C3 + 1)/2, update the location of each search individual using equations (12) and (13). Otherwise, update the location of each search individual using equation (22).

- Step 9: Perform the vertical crossover operator to update the location of the population using equation (26).

- Step 10: Reevaluate the fitness values of all search agents to update the optimal solution obtained so far and the optimal location of the current population.

Output

- Step 11: Set t = t + 1; if t exceeds Tmax, output the global optimal solution; otherwise, return to Step 4.

Figure 6 illustrates the flowchart of the proposed MCOA, while Algorithm 2 provides the pseudocode outlining the MCOA procedure.

Algorithm 2: Modified crayfish optimization algorithm with multistrategy (MCOA).

Input: Maximum iteration number Tmax, population size N, dimension size D, current iteration t = 1

1. Initialize the position of each search agent using equation (2)
2. Evaluate the fitness value of all search agents to obtain the best solution found so far and the best position of the current population
3. While t ≤ Tmax do
4. Perform specular reflection learning to generate the opposite candidate of each individual according to equation (19), and retain the better of the individual and its candidate for the next generation //Specular reflection learning
5. Calculate the value of Temp using equation (3)
6. For each search agent i = 1, 2, …, N do
7. If Temp > 30 then
8. Calculate the location of the cave using equation (5)
9. If rand < 0.5 then
10. Update the current position of the search agent using equation (20) //Expanded exploration
11. Else
12. Update the current position of the search agent using equation (8)
13. End If
14. Else
15. Define the food intake p and food size Q using equations (4) and (11), respectively
16. If Q > (C3 + 1)/2 then
17. Update the current position of the search agent using equation (13)
18. Else
19. Update the current position of the search agent using equation (22) //Lévy flight
20. End If
21. End If
22. End For
23. Perform the vertical crossover operator to update each search agent according to equations (26) and (27) //Vertical crossover strategy
24. Evaluate the fitness value of all search agents
25. Update the best solution found so far and the best position of the current population
26. t = t + 1
27. End While

Return: Global optimal solution
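The branching that decides which update rule an agent uses in each iteration can be summarized in a few lines of Python. This sketch only reproduces the conditions stated in Steps 6 to 8 (Temp > 30, rand < 0.5, and Q > (C3 + 1)/2); the update equations themselves are not implemented. The function name and the returned labels are hypothetical, and C3 = 3 is taken from the COA parameter settings in Table 1.

```python
def select_phase(temp, r, q, c3=3.0):
    """Return the label of the update rule chosen by the MCOA branching conditions.

    temp: ambient temperature from equation (3)
    r:    uniform random number in [0, 1)
    q:    food size from equation (11)
    c3:   food factor (3 in the COA settings of Table 1)
    """
    if temp > 30:
        # Hot environment: expanded exploration or competition, chosen at random.
        return "expanded_exploration" if r < 0.5 else "competition"
    # Suitable temperature: foraging, with or without shredding the food first.
    return "foraging_shred" if q > (c3 + 1) / 2 else "foraging_levy"


for temp, r, q in [(31.0, 0.2, 0.0), (31.0, 0.7, 0.0), (25.0, 0.2, 2.5), (25.0, 0.2, 1.0)]:
    print(temp, r, q, select_phase(temp, r, q))
```

With C3 = 3, the shredding branch is taken only when Q exceeds 2, so the Lévy flight update handles the food sizes at or below that threshold.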

4.2. Computational Complexity Analysis

The computational complexity of MCOA is determined by three main components: initialization, fitness evaluation, and position updating. During initialization, the locations of all search individuals are randomly distributed in the search space, with a computational complexity of O(N × D), where N is the population size and D is the problem dimension. In each iteration, the fitness values of all search agents are evaluated to obtain the current optimal solution and the population optimal position, which costs O(N × Tmax) over the whole run, where Tmax is the maximum number of iterations. Updating the positions of all search individuals during the exploration and exploitation phases costs O(N × D × Tmax). Moreover, specular reflection learning and the vertical crossover operator each add O(N × D × Tmax). Therefore, the total computational complexity of MCOA is O(MCOA) = O(initialization) + O(fitness evaluation) + O(position update) = O(N × D) + O(N × Tmax) + O(3 × N × D × Tmax) = O(N × (D + Tmax + 3DTmax)).
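The total can be simplified further, since constant factors are dropped in O-notation and the product term dominates the other two. The short derivation below makes this step explicit; it follows directly from the three terms stated above and introduces no new assumptions beyond D ≥ 1 and Tmax ≥ 1.

```latex
\begin{aligned}
O(\mathrm{MCOA}) &= O(ND) + O(NT_{\max}) + O(3NDT_{\max}) \\
                 &= O\bigl(N\,(D + T_{\max} + 3DT_{\max})\bigr) \\
                 &= O(NDT_{\max}),
\qquad \text{since } D \le DT_{\max} \text{ and } T_{\max} \le DT_{\max} \text{ for } D,\ T_{\max} \ge 1 .
\end{aligned}
```

As an illustration, with N = 30 and Tmax = 500 and an assumed dimension D = 30, the three terms evaluate to 900, 15,000, and 1,350,000, so the position-update term accounts for almost all of the cost.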

5. Numerical Optimization Experiments

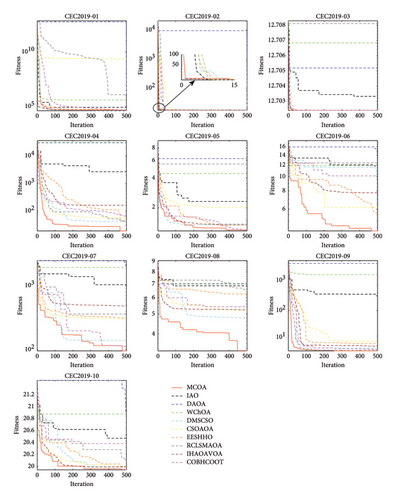

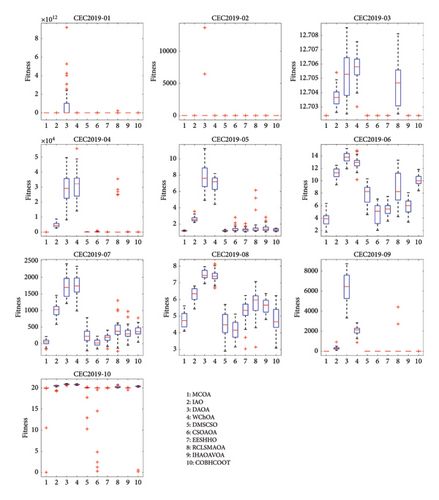

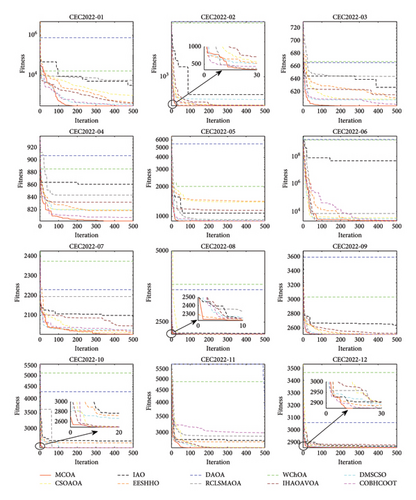

In this section, a set of numerical simulation studies is performed on three IEEE CEC test suites, namely CEC 2005, CEC 2019, and CEC 2022, to thoroughly validate the effectiveness of MCOA. The implementation platform is an Intel(R) Core(TM) i7-13700F 2.10 GHz CPU with 32 GB RAM, running MATLAB R2023b on the Windows 10 operating system.

5.1. Benchmark Suites and Performance Indices

The CEC2005 suite comprises 23 classical functions [6] categorized as unimodal (F1∼F7, testing exploitation capability), multimodal (F8∼F13, assessing exploration and escape from local optima), and fixed-dimension multimodal (F14∼F23, evaluating stability). Functions F1∼F13 feature adjustable dimensions for testing scalability.
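To make the distinction between unimodal and multimodal functions concrete, the Python sketch below implements the Sphere and Rastrigin functions. These are commonly listed as F1 and F9 in this classical 23-function set, but the appendix tables are not reproduced in this section, so that mapping should be treated as an assumption. Both accept a vector of any length, which is what makes the dimension adjustable.

```python
import math


def sphere(x):
    """Unimodal: a single minimum of 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: global minimum of 0 at the origin, surrounded by many local minima."""
    return sum(v * v - 10 * math.cos(2 * math.pi * v) + 10 for v in x)


for dim in (30, 100):
    origin = [0.0] * dim
    ones = [1.0] * dim  # close to a local minimum of Rastrigin in every coordinate
    print(dim, sphere(origin), rastrigin(origin), sphere(ones), rastrigin(ones))
```

The point with every coordinate equal to 1 sits near a local minimum of Rastrigin, which is why functions of this type are used to test whether an algorithm can escape local optima.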

The CEC2019 test suite [42] contains 10 challenging functions designed for complex numerical optimization problems. In addition, this paper employs the popular CEC2022 test suite [43] to further demonstrate the superiority of MCOA. This test set includes not only unimodal (CEC2022-01) and multimodal functions (CEC2022-02∼CEC2022-05) but also hybrid functions (CEC2022-06∼CEC2022-08) and composition functions (CEC2022-09∼CEC2022-12). These functions are nonlinear, nonconvex, and nondifferentiable, closely resemble realistic optimization problems, and can provide a comprehensive assessment of an algorithm’s tracking capability. Detailed descriptions of all benchmark functions appear in Tables A.1–A.5 (Appendix A Benchmark function description).

Performance evaluation employs two measures: mean fitness value (Mean) and standard deviation (Std), with statistical significance determined through the Wilcoxon rank-sum test [44] (significance level 0.05, where “+” indicates MCOA outperforms the comparison algorithm, “−” indicates underperformance, and “=” signifies no significant difference) and the Friedman ranking test [45] revealing each algorithm’s overall ranking (“Mean rank”) across all benchmarks.
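The Mean and Std indices can be computed as in the Python sketch below, which repeats a stochastic optimizer over independent seeded runs and summarizes the best fitness values. The `random_search` baseline is only a stand-in for an optimizer and is not MCOA; the function names, the evaluation budget, and the use of the sample standard deviation are assumptions made for illustration.

```python
import random
import statistics


def sphere(x):
    """Illustrative minimization objective (assumption, not taken from the paper)."""
    return sum(v * v for v in x)


def random_search(fitness, lb, ub, dim, n_evals, rng):
    """Stand-in optimizer: best fitness among n_evals uniformly sampled points."""
    best = float("inf")
    for _ in range(n_evals):
        x = [rng.uniform(lb, ub) for _ in range(dim)]
        best = min(best, fitness(x))
    return best


def summarize_runs(optimizer, n_runs=30, seed=0):
    """Run the optimizer n_runs times with different seeds; return (Mean, Std)."""
    results = [optimizer(random.Random(seed + k)) for k in range(n_runs)]
    return statistics.mean(results), statistics.stdev(results)


mean, std = summarize_runs(lambda rng: random_search(sphere, -100.0, 100.0, 5, 200, rng))
print(f"Mean = {mean:.4E}, Std = {std:.4E}")
```

Giving each run its own seed keeps the runs independent while making the whole experiment reproducible.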

5.2. Comparison With Different State-of-the-Art MH Algorithms on 23 Classical Functions

This subsection employs 12 advanced MH algorithms for comparison experiments with MCOA, namely, the original COA, AO [38], dandelion optimizer (DO) [46], snake optimizer (SO) [47], arithmetic optimization algorithm (AOA) [48], artificial rabbit optimization (ARO) [49], tunicate swarm algorithm (TSA) [50], slime mould algorithm (SMA) [51], wild horse optimizer (WHO) [52], liver cancer algorithm (LCA) [53], rime optimization algorithm (RIME) [54], and sand cat swarm optimization (SCSO) [55]. The maximum number of iterations Tmax and the population size N for each optimization method are fixed at 500 and 30, respectively, and the other core parameters follow the original literature (see Table 1). To reduce the influence of chance, all algorithms are run independently 30 times on each benchmark function.

| Algorithm | Year | Parameter value |
|---|---|---|
| AO | 2021 | ω = 0.005; R = 10; α = 0.1; δ = 0.1; g1 ∈ [−1, 1]; g2 = [2, 0] |
| DO | 2022 | α ∈ [0, 1]; k ∈ [0, 1] |
| SO | 2022 | c1 = 0.5; c2 = 0.05; c3 = 2 |
| AOA | 2021 | α = 5; μ = 0.499 |
| ARO | 2022 | — |
| TSA | 2020 | Pmin = 1; Pmax = 4 |
| SMA | 2020 | z = 0.03 |
| COA | 2023 | C1 = 0.2; C3 = 3; μ = 25; σ = 3 |
| WHO | 2021 | PC = 0.13; PS = 0.2; Crossover = Mean |
| LCA | 2023 | f = 1 |
| RIME | 2023 | w = 5 |
| SCSO | 2022 | rG = [2, 0]; SM = 2 |

5.2.1. Parameter Sensitivity Analysis

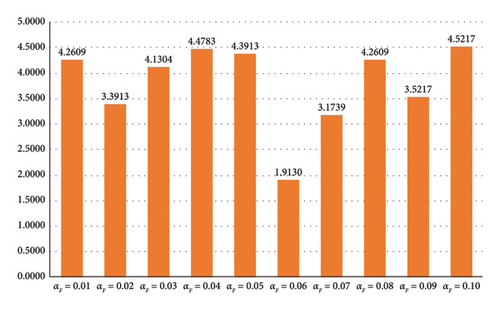

In Section 3.4, the Lévy flight strategy is added to the foraging phase of COA to improve the efficiency of locating potential food sources. The scale factor αF critically influences this improvement. With a larger αF, the search agent depends more heavily on the optimal solution when updating its current position, and the algorithm is prone to premature convergence. Conversely, a smaller αF weakens the influence of Lévy flight on the guided foraging mechanism, which reduces the algorithm’s search capability and convergence accuracy. Based on the literature [32], αF is varied from 0.01 to 0.1 in steps of 0.01. For each value of αF, the results obtained by MCOA over 30 independent runs are shown in Table 2.

With αF = 0.06, MCOA provides the most satisfactory solutions on 14 out of 23 test functions (60.87%), outperforming the other values. For unimodal functions, MCOA performs consistently across different αF values on F1∼F4, and the gap between the solutions obtained under different settings is also small on F5∼F7. The main reason is that unimodal functions have fewer local optima, which interfere less with MCOA. For multimodal and fixed-dimension multimodal functions, a larger value of αF increases the step size of the Lévy operator, thus helping the proposed method to explore the search space more efficiently when facing complex optimization problems, especially on F8, F12, and F20.

Figure 7 illustrates the Friedman mean rankings obtained by MCOA with different values of αF. MCOA exhibits the best overall optimization performance when αF is 0.06, where it obtains the smallest ranking value of 1.9130. This indicates that this parameter value balances exploration and exploitation favorably. Consequently, αF = 0.06 is used in all subsequent experiments, as it helps avoid local optima without compromising the effect of the Lévy flight strategy or the algorithm’s convergence accuracy.
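
The mean ranking quoted for αF = 0.06 can be reproduced directly from the Rank rows of Table 2. The following is a minimal sketch, assuming that the reported mean ranking is the plain average of the per-function ranks listed in the table; the names `ALPHAS`, `RANKS`, and `mean_ranks` are illustrative and do not come from the original implementation.

```python
# Ranks transcribed from the "Rank" rows of Table 2.
# One row per function F1..F23, one column per alpha_F = 0.01, 0.02, ..., 0.10.
ALPHAS = [round(0.01 * k, 2) for k in range(1, 11)]
RANKS = [
    [1] * 10,                          # F1
    [1] * 10,                          # F2
    [1] * 10,                          # F3
    [1] * 10,                          # F4
    [1, 4, 8, 6, 5, 2, 3, 7, 10, 9],   # F5
    [4, 5, 7, 6, 8, 3, 9, 10, 1, 2],   # F6
    [4, 3, 7, 8, 9, 2, 1, 5, 6, 10],   # F7
    [6, 8, 4, 2, 9, 1, 5, 3, 10, 7],   # F8
    [1] * 10,                          # F9
    [1] * 10,                          # F10
    [1] * 10,                          # F11
    [10, 8, 3, 7, 5, 1, 9, 6, 2, 4],   # F12
    [3, 6, 9, 8, 1, 5, 2, 4, 7, 10],   # F13
    [9, 1, 5, 8, 10, 5, 3, 5, 1, 3],   # F14
    [5, 3, 6, 7, 9, 1, 2, 8, 4, 10],   # F15
    [10, 6, 1, 3, 2, 4, 9, 5, 7, 8],   # F16
    [9, 2, 6, 8, 7, 3, 1, 10, 5, 4],   # F17
    [2, 8, 3, 8, 4, 1, 6, 8, 5, 7],    # F18
    [1] * 10,                          # F19
    [5, 7, 10, 6, 9, 1, 4, 3, 8, 2],   # F20
    [2, 7, 4, 7, 4, 2, 4, 7, 1, 7],    # F21
    [10, 1, 5, 7, 7, 4, 3, 1, 5, 9],   # F22
    [10, 1, 9, 4, 4, 1, 4, 8, 1, 4],   # F23
]


def mean_ranks(ranks, labels):
    """Average the per-function ranks column by column (assumed aggregation)."""
    n_funcs = len(ranks)
    return {
        label: sum(row[j] for row in ranks) / n_funcs
        for j, label in enumerate(labels)
    }


scores = mean_ranks(RANKS, ALPHAS)
best_alpha = min(scores, key=scores.get)
print(best_alpha, f"{scores[best_alpha]:.4f}")  # prints: 0.06 1.9130
```

The column for αF = 0.06 sums to 44 over 23 functions, which gives 44/23 ≈ 1.9130 and agrees with the value stated in the text.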

| Function | Metric | αF = 0.01 | αF = 0.02 | αF = 0.03 | αF = 0.04 | αF = 0.05 | αF = 0.06 | αF = 0.07 | αF = 0.08 | αF = 0.09 | αF = 0.1 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F2 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F3 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F4 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F5 | Mean | 1.2213E − 08 | 1.5859E − 06 | 9.3781E − 06 | 3.7685E − 06 | 2.5762E − 06 | 5.6298E − 08 | 1.2641E − 06 | 5.4132E − 06 | 8.3174E − 05 | 4.2134E − 05 |

| Std | 1.7049E − 08 | 2.1616E − 06 | 9.9527E − 06 | 3.8543E − 06 | 3.2459E − 06 | 7.9445E − 08 | 1.7644E − 06 | 6.0916E − 06 | 9.5472E − 05 | 5.6217E − 05 | |

| Rank | 1 | 4 | 8 | 6 | 5 | 2 | 3 | 7 | 10 | 9 | |

| F6 | Mean | 1.0933E − 21 | 1.5183E − 21 | 3.5111E − 21 | 1.5761E − 21 | 1.0039E − 19 | 3.3497E − 22 | 1.1515E − 19 | 5.4588E − 19 | 2.9277E − 22 | 3.0059E − 22 |

| Std | 4.7362E − 21 | 4.8629E − 21 | 1.6669E − 20 | 6.6035E − 21 | 4.6720E − 19 | 8.4095E − 22 | 5.7749E − 19 | 2.9868E − 18 | 6.2975E − 22 | 8.0293E − 22 | |

| Rank | 4 | 5 | 7 | 6 | 8 | 3 | 9 | 10 | 1 | 2 | |

| F7 | Mean | 3.7337E − 05 | 3.6381E − 05 | 4.4903E − 05 | 4.6794E − 05 | 5.0800E − 05 | 3.2531E − 05 | 2.9116E − 05 | 3.8652E − 05 | 4.0749E − 05 | 5.5205E − 05 |

| Std | 3.5815E − 05 | 4.1921E − 05 | 4.6964E − 05 | 4.2886E − 05 | 4.2928E − 05 | 2.7696E − 05 | 2.5914E − 05 | 2.6853E − 05 | 2.7816E − 05 | 7.1512E − 05 | |

| Rank | 4 | 3 | 7 | 8 | 9 | 2 | 1 | 5 | 6 | 10 | |

| F8 | Mean | −11772.32968 | −11761.48907 | −11787.84561 | −11812.18143 | −11742.52688 | −11853.70331 | −11784.61259 | −11803.85376 | −11739.794 | −11761.69685 |

| Std | 4.5979E + 02 | 3.2519E + 02 | 5.2271E + 02 | 3.9870E + 02 | 3.9325E + 02 | 3.0227E + 02 | 4.2833E + 02 | 4.8630E + 02 | 4.5192E + 02 | 3.1348E + 02 | |

| Rank | 6 | 8 | 4 | 2 | 9 | 1 | 5 | 3 | 10 | 7 | |

| F9 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F10 | Mean | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F11 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F12 | Mean | 1.6944E − 20 | 6.7606E − 22 | 4.8048E − 23 | 5.6620E − 22 | 4.1700E − 22 | 2.4595E − 23 | 1.7602E − 21 | 4.8386E − 22 | 3.7241E − 23 | 3.8594E − 22 |

| Std | 9.2709E − 20 | 2.7795E − 21 | 1.2321E − 22 | 2.3222E − 21 | 1.9451E − 21 | 1.0630E − 22 | 9.0052E − 21 | 2.0371E − 21 | 1.4032E − 22 | 1.8951E − 21 | |

| Rank | 10 | 8 | 3 | 7 | 5 | 1 | 9 | 6 | 2 | 4 | |

| F13 | Mean | 1.9120E − 21 | 4.8487E − 21 | 2.0410E − 17 | 2.5589E − 20 | 9.3884E − 22 | 2.3875E − 21 | 1.0154E − 21 | 1.9242E − 21 | 2.1038E − 20 | 1.0790E − 14 |

| Std | 8.5397E − 21 | 2.4178E − 20 | 1.1632E − 20 | 7.2840E − 20 | 2.4112E − 21 | 1.1178E − 16 | 3.2054E − 21 | 6.4821E − 21 | 8.2858E − 20 | 5.9102E − 14 | |

| Rank | 3 | 6 | 9 | 8 | 1 | 5 | 2 | 4 | 7 | 10 | |

| F14 | Mean | 1.3917E + 00 | 9.9800E − 01 | 1.3871E + 00 | 1.3917E + 00 | 1.5885E + 00 | 1.3917E + 00 | 1.1948E + 00 | 1.3917E + 00 | 9.9800E − 01 | 1.1948E + 00 |

| Std | 1.4982E + 00 | 0 | 2.1311E + 00 | 1.4982E + 00 | 1.8019E + 00 | 1.4982E + 00 | 1.0782E + 00 | 1.4982E + 00 | 0 | 1.0782E + 00 | |

| Rank | 9 | 1 | 5 | 8 | 10 | 5 | 3 | 5 | 1 | 3 | |

| F15 | Mean | 3.1278E − 04 | 3.1264E − 04 | 3.1472E − 04 | 3.1509E − 04 | 3.2881E − 04 | 3.0982E − 04 | 3.1116E − 04 | 3.1855E − 04 | 3.1267E − 04 | 3.8859E − 04 |

| Std | 1.5063E − 05 | 9.8728E − 06 | 1.2612E − 05 | 1.5755E − 05 | 9.7831E − 05 | 6.4028E − 06 | 8.5334E − 06 | 2.4872E − 05 | 1.4389E − 05 | 2.3911E − 04 | |

| Rank | 5 | 3 | 6 | 7 | 9 | 1 | 2 | 8 | 4 | 10 | |

| F16 | Mean | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 |

| Std | 1.1005E − 10 | 5.7139E − 11 | 2.5934E − 11 | 4.2697E − 11 | 3.2594E − 11 | 4.5518E − 11 | 8.4454E − 11 | 5.5814E − 11 | 6.9643E − 11 | 7.5303E − 11 | |

| Rank | 10 | 6 | 1 | 3 | 2 | 4 | 9 | 5 | 7 | 8 | |

| F17 | Mean | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 |

| Std | 1.5403E − 05 | 3.7291E − 06 | 8.0940E − 06 | 9.6520E − 06 | 8.8392E − 06 | 4.8362E − 06 | 2.1947E − 06 | 2.4870E − 05 | 7.9734E − 06 | 7.0392E − 06 | |

| Rank | 9 | 2 | 6 | 8 | 7 | 3 | 1 | 10 | 5 | 4 | |

| F18 | Mean | 3.0000 | 3.9000 | 3.0000 | 3.9000 | 3.0000 | 3.0000 | 3.0000 | 3.9000 | 3.0000 | 3.0000 |

| Std | 9.2199E − 16 | 4.9295E + 00 | 9.9643E − 16 | 4.9295E + 00 | 1.0464E − 15 | 1.1337E − 16 | 1.1095E − 15 | 4.9295E + 00 | 1.0784E − 15 | 1.2506E − 15 | |

| Rank | 2 | 8 | 3 | 8 | 4 | 1 | 6 | 8 | 5 | 7 | |

| F19 | Mean | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 |

| Std | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F20 | Mean | −3.3101 | −3.3061 | −3.2982 | −3.3101 | −3.3022 | −3.3220 | −3.3141 | −3.3180 | −3.3022 | −3.3220 |

| Std | 3.6278E − 02 | 4.1107E − 02 | 4.8370E − 02 | 3.6278E − 02 | 4.5066E − 02 | 7.5540E − 13 | 3.0164E − 02 | 2.1707E − 02 | 4.5066E − 02 | 2.5036E − 11 | |

| Rank | 5 | 7 | 10 | 6 | 9 | 1 | 4 | 3 | 8 | 2 | |

| F21 | Mean | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −10.1532 | −10.1532 |

| Std | 7.1207E − 15 | 7.2269E − 15 | 7.1740E − 15 | 7.2269E − 15 | 7.1740E − 15 | 7.1207E − 15 | 7.1740E − 15 | 7.2269E − 15 | 7.0670E − 15 | 7.2269E − 15 | |

| Rank | 2 | 7 | 4 | 7 | 4 | 2 | 4 | 7 | 1 | 7 | |

| F22 | Mean | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −10.4029 | −10.4029 |

| Std | 1.8915E − 10 | 9.3299E − 16 | 1.0940E − 15 | 1.1893E − 15 | 1.1893E − 15 | 1.0431E − 15 | 9.8958E − 16 | 9.3299E − 16 | 1.0940E − 15 | 2.4629E − 11 | |

| Rank | 10 | 1 | 5 | 7 | 7 | 4 | 3 | 1 | 5 | 9 | |

| F23 | Mean | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.5364 |

| Std | 3.9729E − 09 | 1.8067E − 15 | 1.9792E − 15 | 1.8949E − 15 | 1.8949E − 15 | 1.8067E − 15 | 1.8949E − 15 | 1.9515E − 15 | 1.8067E − 15 | 1.8949E − 15 | |

| Rank | 10 | 1 | 9 | 4 | 4 | 1 | 4 | 8 | 1 | 4 | |

- Note: The best results obtained are highlighted in bold.

5.2.2. Qualitative Analysis

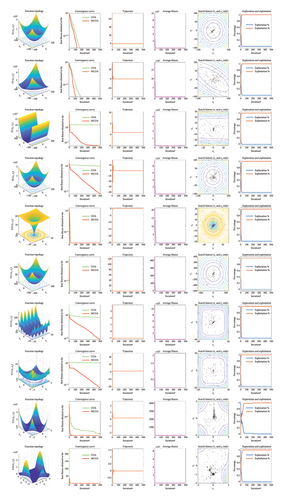

This subsection presents a qualitative analysis to visualize the optimization dynamics of the proposed algorithm throughout the iterative process. Four unimodal, four multimodal, and two fixed-dimension multimodal benchmark functions are selected for testing. As shown in Figure 8, the first column depicts the topological structure of each function in two-dimensional space. The second to sixth columns, respectively, display: (1) convergence curves, (2) search trajectories in the first dimension, (3) average fitness values, (4) search history of the first two agents, and (5) trends in exploration and exploitation percentages.

The convergence curves show how each algorithm progresses toward the optimal solution. Compared to COA, MCOA converges faster and reaches the desired convergence accuracy with fewer iterations in most test cases. On F1 and F3, both COA and MCOA converge to the global optimum (0), but the latter requires fewer iterations. On F5 and F6, MCOA demonstrates stronger exploitation capability and achieves much higher convergence accuracy, thanks to its enhanced search mechanism guided by the Lévy flight strategy. For multimodal and fixed-dimension multimodal functions, both algorithms exhibit similar convergence accuracy and speed on F10, F11, and F18. However, on F12, F13, and F15, MCOA is clearly better at avoiding local optima, and its curve decreases gradually toward the global optimal solution.

The iteration trajectory can be observed in the third column of Figure 8. In the early iterations, the curves show large oscillations, indicating that the algorithm favors exploration at this stage. In the middle and late iterations, the curves gradually flatten out, which implies that exploitation takes over to ensure that MCOA converges to a higher level of accuracy.

The average fitness value represents the mean objective value of all search agents in each iteration and is used to characterize the evolutionary tendency of the whole population. All the curves exhibit a significant decrease over iterations, suggesting that MCOA can quickly steer the population closer to the global optimum.

The search history illustrates the locations where the search agent has explored in its quest to find the global optimum during the optimization process. The red dot marks the best solution found within the given iteration limit. Many search agents are centered around the optimal solution, suggesting that MCOA is more inclined to exploit promising solutions. Additionally, the dispersion and traversal of search agents across the search space validate the excellent search breadth of MCOA.

Finally, the last column shows how MCOA balances exploration and exploitation based on a dimension-wise diversity model [56]. In the initial iteration stage, MCOA exhibits a high level of exploration to thoroughly explore the search space and locate promising regions. As iterations progress, the percentage of exploitation gradually increases, ensuring accuracy of the final solution. Throughout this procedure, MCOA achieves a smooth transition from exploration to exploitation. This figure also captures the switching time points between these two key components for MCOA.
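
The exploration and exploitation percentages in the last column can be illustrated with a short sketch. It assumes that the dimension-wise diversity model in [56] follows the commonly used median-based formulation, in which the diversity of each dimension is the mean absolute deviation of the agents from the dimension's median, and the percentages are taken relative to the largest diversity observed over all iterations. The function names `population_diversity` and `exploration_exploitation` are hypothetical.

```python
from statistics import median


def population_diversity(population):
    """Mean over dimensions of the mean absolute deviation from the median.

    `population` is a list of agents; each agent is a list of coordinates.
    """
    n_agents = len(population)
    n_dims = len(population[0])
    total = 0.0
    for j in range(n_dims):
        column = [agent[j] for agent in population]
        med = median(column)
        total += sum(abs(med - value) for value in column) / n_agents
    return total / n_dims


def exploration_exploitation(diversity_history):
    """Convert a per-iteration diversity history into (XPL%, XPT%) pairs."""
    div_max = max(diversity_history)
    if div_max == 0:
        # Degenerate case: the population never had any spread.
        return [(0.0, 0.0) for _ in diversity_history]
    return [
        (100.0 * div / div_max, 100.0 * abs(div - div_max) / div_max)
        for div in diversity_history
    ]


# Toy example: a population that contracts over three iterations.
history = [2.0, 1.0, 0.5]
print(exploration_exploitation(history))
# prints: [(100.0, 0.0), (50.0, 50.0), (25.0, 75.0)]
```

Under this formulation the two percentages sum to 100 at every iteration, so the point where the curves cross marks the switch from exploration-dominated to exploitation-dominated search.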

5.2.3. Ablation Analysis of Multiple Improvement Strategies

In this subsection, an ablation analysis experiment is designed to verify the effectiveness of each improvement strategy. Table 3 outlines the 10 derivative variants of MCOA, where 1 indicates activation of the corresponding strategy, while 0 indicates its absence. COA, MCOA, and other different MCOA derivatives are tested simultaneously on 23 benchmark problems, and the results are shown in Table 4.

| Strategy | MCOA-1 | MCOA-2 | MCOA-3 | MCOA-4 | MCOA-5 | MCOA-6 | MCOA-7 | MCOA-8 | MCOA-9 | MCOA-10 | MCOA |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Specular reflection learning | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 1 |

| Expanded exploration | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 1 |

| Lévy flight | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 1 |

| Vertical crossover operator | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 1 | 1 |
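
The layout of Table 3 corresponds to all single-strategy and pairwise combinations of the four strategies, followed by the full MCOA. The sketch below regenerates that layout; the strategy keys and the `build_variants` helper are illustrative names and are not taken from the original code.

```python
from itertools import combinations

# Strategy order follows the rows of Table 3.
STRATEGIES = (
    "specular_reflection_learning",
    "expanded_exploration",
    "levy_flight",
    "vertical_crossover",
)


def build_variants(strategies):
    """Enumerate single and pairwise strategy combinations, then the full set."""
    variants = {}
    index = 1
    for size in (1, 2):
        for combo in combinations(strategies, size):
            variants[f"MCOA-{index}"] = combo
            index += 1
    variants["MCOA"] = tuple(strategies)
    return variants


VARIANTS = build_variants(STRATEGIES)

# Print a 0/1 activation row per variant, mirroring the columns of Table 3.
for name, active in VARIANTS.items():
    flags = [int(s in active) for s in STRATEGIES]
    print(f"{name:8s} {flags}")
```

With this ordering, MCOA-1 to MCOA-4 each enable one strategy and MCOA-5 to MCOA-10 each enable two, which matches the activation pattern in Table 3.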

| Function | Metric | COA | MCOA-1 | MCOA-2 | MCOA-3 | MCOA-4 | MCOA-5 | MCOA-6 | MCOA-7 | MCOA-8 | MCOA-9 | MCOA-10 | MCOA |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F2 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F3 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F4 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F5 | Mean | 2.8339E + 00 | 2.8229E + 00 | 9.4824E − 01 | 2.6837E + 00 | 2.5733E + 00 | 1.6028E − 03 | 2.6606E + 00 | 2.8015E + 00 | 1.8943E + 00 | 1.2088E − 03 | 2.6158E + 00 | 6.5468E − 10 |

| Std | 8.6474E + 00 | 8.6137E + 00 | 5.1048E + 00 | 8.1899E + 00 | 7.8531E + 00 | 8.0295E − 03 | 8.1201E + 00 | 8.5493E + 00 | 7.1088E + 00 | 5.4444E − 03 | 7.9825E + 00 | 3.3974E − 09 | |

| Rank | 12 | 11 | 4 | 9 | 6 | 3 | 8 | 10 | 5 | 2 | 7 | 1 | |

| F6 | Mean | 4.9581E − 01 | 3.6394E − 01 | 9.2552E − 04 | 4.6614E − 01 | 4.4874E − 08 | 2.7375E − 05 | 3.9998E − 01 | 2.9657E − 18 | 5.8338E − 04 | 3.0936E − 08 | 3.6069E − 08 | 1.1042E − 21 |

| Std | 3.5948E − 01 | 2.7476E − 01 | 1.8400E − 03 | 2.8322E − 01 | 5.9490E − 08 | 2.2632E − 05 | 2.7702E − 01 | 1.5185E − 17 | 9.9238E − 04 | 4.9425E − 08 | 2.8818E − 08 | 5.3596E − 21 | |

| Rank | 12 | 9 | 8 | 11 | 5 | 6 | 10 | 2 | 7 | 3 | 4 | 1 | |

| F7 | Mean | 8.1905E − 05 | 1.1367E − 04 | 6.3096E − 05 | 6.8164E − 05 | 4.1200E − 05 | 5.3659E − 05 | 7.8830E − 05 | 3.6702E − 05 | 7.9099E − 05 | 4.4214E − 05 | 3.6104E − 05 | 3.5231E − 05 |

| Std | 8.4466E − 05 | 1.0435E − 04 | 5.8071E − 05 | 6.8581E − 05 | 4.3879E − 05 | 4.6726E − 05 | 7.1452E − 05 | 3.2258E − 05 | 1.1355E − 04 | 3.5477E − 05 | 3.4912E − 05 | 3.0745E − 05 | |

| Rank | 11 | 12 | 7 | 8 | 4 | 6 | 9 | 3 | 10 | 5 | 2 | 1 | |

| F8 | Mean | −5242.7189 | −8686.5444 | −8329.8712 | −8106.5679 | −11587.9782 | −8213.3889 | −8658.6230 | −11691.5067 | −5573.7303 | −11508.0893 | −11714.0076 | −11829.1962 |

| Std | 6.8306E + 02 | 6.7034E + 02 | 1.3453E + 03 | 1.3844E + 03 | 4.8453E + 02 | 2.8154E + 03 | 6.9393E + 02 | 3.9648E + 02 | 1.5700E + 03 | 5.0753E + 02 | 4.0317E + 02 | 3.8003E + 02 | |

| Rank | 12 | 6 | 8 | 10 | 4 | 9 | 7 | 3 | 11 | 5 | 2 | 1 | |

| F9 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F10 | Mean | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 | 8.8818E − 16 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F11 | Mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Std | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| Rank | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | |

| F12 | Mean | 1.4950E − 02 | 1.3070E − 02 | 4.9624E − 05 | 1.4443E − 02 | 1.9757E − 09 | 2.1801E − 06 | 1.2297E − 02 | 8.1146E − 21 | 5.8363E − 05 | 1.3261E − 09 | 1.7528E − 09 | 4.4560E − 22 |

| Std | 1.3620E − 02 | 7.5554E − 03 | 1.2902E − 04 | 1.1037E − 02 | 2.1721E − 09 | 2.3345E − 06 | 9.2124E − 03 | 3.1105E − 20 | 8.9406E − 05 | 2.3415E − 09 | 2.0703E − 09 | 1.5576E − 21 | |

| Rank | 12 | 10 | 7 | 11 | 5 | 6 | 9 | 2 | 8 | 3 | 4 | 1 | |

| F13 | Mean | 2.5461E + 00 | 2.6629E + 00 | 1.1824E − 03 | 2.2444E + 00 | 9.8869E − 02 | 1.8785E + 00 | 2.1944E + 00 | 2.8123E − 07 | 3.0870E − 03 | 8.0494E − 09 | 3.7913E − 05 | 1.4575E − 21 |

| Std | 6.9273E − 01 | 6.5553E − 01 | 2.0458E − 03 | 2.8428E − 01 | 5.4153E − 01 | 1.4538E + 00 | 2.4985E − 01 | 4.0820E − 07 | 1.4676E − 02 | 1.5178E − 08 | 3.4548E − 05 | 6.1017E − 21 | |

| Rank | 11 | 12 | 5 | 10 | 7 | 8 | 9 | 3 | 6 | 2 | 4 | 1 | |

| F14 | Mean | 5.4081E + 00 | 2.6649E + 00 | 2.8500E + 00 | 3.4750E + 00 | 9.9800E − 01 | 2.7697E + 00 | 2.8928E + 00 | 1.1948E + 00 | 3.6212E + 00 | 2.1653E + 00 | 1.6159E + 00 | 9.9800E − 01 |

| Std | 4.9117E + 00 | 3.2382E + 00 | 3.4574E + 00 | 4.1251E + 00 | 1.7001E − 16 | 2.7369E + 00 | 3.4534E + 00 | 1.0782E + 00 | 4.0354E + 00 | 3.5616E + 00 | 2.4347E + 00 | 0 | |

| Rank | 12 | 6 | 8 | 10 | 2 | 7 | 9 | 3 | 11 | 5 | 4 | 1 | |

| F15 | Mean | 3.2644E − 03 | 4.4858E − 04 | 3.9398E − 04 | 1.2984E − 03 | 4.7506E − 04 | 3.9651E − 04 | 4.2208E − 04 | 3.3332E − 04 | 4.3627E − 04 | 3.1380E − 04 | 4.7124E − 04 | 3.1322E − 04 |

| Std | 6.8257E − 03 | 1.9829E − 04 | 1.9152E − 04 | 3.6113E − 03 | 2.4168E − 04 | 2.5464E − 04 | 1.3001E − 04 | 5.3566E − 05 | 2.9654E − 04 | 1.7453E − 05 | 2.0537E − 04 | 8.6955E − 06 | |

| Rank | 12 | 8 | 4 | 11 | 10 | 5 | 6 | 3 | 7 | 2 | 9 | 1 | |

| F16 | Mean | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0316 |

| Std | 1.3592E − 10 | 9.7968E − 12 | 8.7392E − 12 | 7.4590E − 15 | 7.8949E − 12 | 2.4479E − 11 | 1.6049E − 15 | 9.4566E − 16 | 1.6421E − 15 | 2.1587E − 15 | 7.6919E − 16 | 6.2532E − 16 | |

| Rank | 12 | 10 | 9 | 7 | 8 | 11 | 4 | 3 | 5 | 6 | 2 | 1 | |

| F17 | Mean | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 | 0.3979 |

| Std | 1.2830E − 05 | 8.3105E − 06 | 3.1170E − 06 | 4.1011E − 05 | 9.7162E − 09 | 6.6459E − 09 | 5.3952E − 08 | 7.1487E − 08 | 1.1628E − 05 | 1.1803E − 09 | 3.1557E − 09 | 1.4768E − 09 | |

| Rank | 11 | 9 | 8 | 12 | 5 | 4 | 6 | 7 | 10 | 1 | 3 | 2 | |

| F18 | Mean | 3.9000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 | 3.0000 |

| Std | 4.9295E + 00 | 5.0484E − 06 | 1.9001E − 05 | 5.6397E − 11 | 2.4269E − 11 | 1.2397E − 15 | 1.2259E − 15 | 2.9733E − 16 | 4.9479E − 16 | 9.3201E − 12 | 1.0464E − 11 | 5.0835E − 16 | |

| Rank | 12 | 10 | 11 | 9 | 8 | 5 | 4 | 1 | 2 | 6 | 7 | 3 | |

| F19 | Mean | −3.8625 | −3.8628 | −3.8628 | −3.8370 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 |

| Std | 1.4390E − 03 | 1.1418E − 12 | 5.0657E − 09 | 1.4113E − 01 | 6.0842E − 13 | 2.7101E − 15 | 2.7101E − 15 | 2.7101E − 15 | 9.4576E − 09 | 3.8424E − 09 | 2.7101E − 15 | 2.7101E − 15 | |

| Rank | 11 | 7 | 9 | 12 | 6 | 1 | 1 | 1 | 10 | 8 | 1 | 1 | |

| F20 | Mean | −3.2565 | −3.2903 | −3.2900 | −3.2785 | −3.2774 | −3.2863 | −3.2943 | −3.3101 | −3.3180 | −3.2982 | −3.3220 | −3.3220 |

| Std | 7.7179E − 02 | 5.3481E − 02 | 5.3297E − 02 | 6.5862E − 02 | 5.7641E − 02 | 5.5415E − 02 | 5.1147E − 02 | 3.6278E − 02 | 2.1707E − 02 | 4.8370E − 02 | 4.1485E − 09 | 4.4621E − 09 | |

| Rank | 12 | 7 | 8 | 10 | 11 | 9 | 6 | 4 | 3 | 5 | 1 | 2 | |

| F21 | Mean | −7.2776 | −9.4735 | −8.7936 | −7.4343 | −9.1336 | −8.6237 | −9.9833 | −10.1532 | −10.1532 | −9.4735 | −9.8133 | −10.1532 |

| Std | 2.7974E + 00 | 1.7626E + 00 | 2.2929E + 00 | 2.5868E + 00 | 2.0741E + 00 | 2.3761E + 00 | 9.3076E − 01 | 6.8855E − 12 | 7.2269E − 15 | 1.7626E + 00 | 1.2934E + 00 | 7.1207E − 15 | |

| Rank | 12 | 7 | 9 | 11 | 8 | 10 | 4 | 3 | 2 | 6 | 5 | 1 | |

| F22 | Mean | −7.1337 | −9.4176 | −10.0485 | −7.4907 | −10.2258 | −9.8714 | −9.6826 | −10.4029 | −10.4029 | −10.4029 | −10.0500 | −10.4029 |

| Std | 3.2066E + 00 | 2.0430E + 00 | 1.3485E + 00 | 2.8020E + 00 | 9.7043E − 01 | 1.6218E + 00 | 1.8342E + 00 | 4.4101E − 09 | 7.4927E − 05 | 9.3299E − 16 | 1.3433E + 00 | 3.2986E − 16 | |

| Rank | 12 | 10 | 7 | 11 | 5 | 8 | 9 | 3 | 4 | 2 | 6 | 1 | |

| F23 | Mean | −7.5558 | −10.3561 | −10.1758 | −7.7540 | −10.5364 | −10.5364 | −10.5364 | −10.5364 | −10.3561 | −10.5364 | −10.5364 | −10.5364 |

| Std | 3.1046E + 00 | 9.8735E − 01 | 1.3720E + 00 | 3.1145E + 00 | 1.6546E − 09 | 2.0600E − 15 | 1.5055E − 08 | 8.8723E − 10 | 9.8734E − 01 | 5.9927E − 08 | 3.4279E − 09 | 1.8949E − 15 | |

| Rank | 12 | 8 | 10 | 11 | 4 | 2 | 6 | 3 | 9 | 7 | 5 | 1 | |

| Mean rank | 8.4783 | 6.4783 | 5.6087 | 7.3913 | 4.5652 | 4.6522 | 4.9565 | 2.6522 | 5.0870 | 3.2609 | 3.1739 | 1.1739 | |

| Final ranking | 12 | 10 | 9 | 11 | 5 | 6 | 7 | 2 | 8 | 4 | 3 | 1 | |

- Note: The best results obtained are highlighted in bold.

As shown in Table 4, MCOA achieves the best overall performance with a Friedman mean ranking of 1.1739, significantly outperforming all variant algorithms and the original COA. When examining individual strategies, their effectiveness can be quantitatively ranked from highest to lowest impact: vertical crossover operator (MCOA-4, rank 4.5652), expanded exploration strategy (MCOA-2, rank 5.6087), specular reflection learning (MCOA-1, rank 6.4783), and Lévy flight (MCOA-3, rank 7.3913).

The vertical crossover operator demonstrates exceptional local exploitation capability, evidenced by the strong performance of MCOA-9 (expanded exploration combined with the vertical crossover operator) on unimodal functions F5, F6, and F7, where precise local search is critical. For multimodal functions, the expanded exploration strategy (MCOA-2) yields the best results among single-strategy variants, showing significantly lower mean values compared to COA, particularly on functions F8 (−8329.8712 vs. −5242.7189) and F13 (1.1824E − 03 vs. 2.5461E + 00). This confirms its effectiveness in overcoming COA’s exploration deficiencies while preserving exploitation strength.

Furthermore, the synergistic integration of these strategies in MCOA produces remarkably consistent performance, achieving optimal results on 18 of 23 benchmark functions with minimal standard deviations, particularly on complex multimodal functions. For instance, on F8, the mean value of MCOA is −11829.1962, while the mean value of COA is −5242.7189, representing a 125.6% improvement. The combination of specular reflection learning and vertical crossover operator (MCOA-7) proves particularly effective for navigating local optima traps, as evidenced by its second-place overall ranking (2.6522), demonstrating how complementary strategies enhance both exploration and exploitation phases. Specular reflection learning and expanded exploration strategy enhance exploration, whereas Lévy flight and vertical crossover operator focus on strengthening exploitation, synergistically leading MCOA toward the optimal solution.
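
The figures quoted in this discussion can be checked against Table 4 with a few lines of arithmetic. The sketch below assumes that the mean rank is the plain average of the per-function ranks and that the improvement on F8 is measured relative to the magnitude of the COA mean; the helper names are illustrative.

```python
# Per-function ranks (F1..F23) transcribed from the "Rank" rows of Table 4.
RANKS_MCOA = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 3, 1, 2, 1, 1, 1]
RANKS_MCOA4 = [1, 1, 1, 1, 6, 5, 4, 4, 1, 1, 1, 5, 7, 2, 10, 8, 5, 8, 6, 11, 8, 5, 4]


def mean_rank(ranks):
    """Plain average of per-function ranks (assumed aggregation)."""
    return sum(ranks) / len(ranks)


def relative_improvement(baseline, improved):
    """Percentage improvement for a minimization problem, relative to |baseline|."""
    return 100.0 * (baseline - improved) / abs(baseline)


print(f"{mean_rank(RANKS_MCOA):.4f}")    # prints: 1.1739
print(f"{mean_rank(RANKS_MCOA4):.4f}")   # prints: 4.5652

# Mean values on F8 from Table 4: COA vs. MCOA.
f8_gain = relative_improvement(-5242.7189, -11829.1962)
print(f"{f8_gain:.1f}%")                 # prints: 125.6%
```

Both mean ranks agree with the "Mean rank" row of Table 4, and the F8 gain corresponds to (11829.1962 − 5242.7189) / 5242.7189 ≈ 1.256.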

5.2.4. Quantitative Analysis

In this subsection, we quantitatively evaluate the numerical optimization performance of MCOA against twelve established MH algorithms: AO, DO, SO, AOA, ARO, TSA, SMA, COA, WHO, LCA, RIME, and SCSO. The statistical results are presented in Table 5.
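
The p value rows of Table 5 contain a few recurring values (1.2118E − 12, 3.0199E − 11) as well as NaN entries. The sketch below shows how such values can arise, under the assumption that the p values come from a two-sided Wilcoxon rank-sum test on 30 runs per algorithm, computed with a normal approximation that includes tie and continuity corrections. This is an illustrative reimplementation with hypothetical names, not the routine used to produce the table.

```python
import math


def ranksum_pvalue(x, y):
    """Two-sided rank-sum p value via a normal approximation (assumed variant)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])

    rank_sum_x = 0.0  # sum of (average) ranks of the first sample
    tie_term = 0.0    # sum of t^3 - t over groups of tied values
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # average of ranks i+1 .. j
        rank_sum_x += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        t = j - i
        tie_term += t ** 3 - t
        i = j

    mean = n1 * (n + 1) / 2.0
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0:
        # All observations are identical, so the statistic is undefined.
        return float("nan")

    diff = rank_sum_x - mean
    z = 0.0 if diff == 0 else (diff - math.copysign(0.5, diff)) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2.0))


zeros = [0.0] * 30
positives = [10.0 ** (-k) for k in range(1, 31)]  # 30 distinct positive values
negatives = [-float(k) for k in range(1, 31)]     # 30 distinct negative values

print(ranksum_pvalue(zeros, positives))      # about 1.21e-12 (one sample all tied)
print(ranksum_pvalue(negatives, positives))  # about 3.02e-11 (fully separated, no ties)
print(ranksum_pvalue(zeros, zeros))          # nan (both samples identical)
```

Under these assumptions, a NaN entry indicates that MCOA and the competitor returned identical results in every run, so no difference between the two can be tested, while the two recurring small values correspond to samples that do not overlap at all.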

| Function | Metric | MCOA | AO | DO | SO | AOA | ARO | TSA | SMA | COA | WHO | LCA | RIME | SCSO |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Mean | 0 | 1.2014E − 101 | 1.1698E − 05 | 1.4548E − 94 | 3.9390E − 35 | 3.8009E − 65 | 6.6645E − 195 | 0 | 0 | 2.2857E − 44 | 2.0725E − 01 | 1.9599E + 00 | 2.1307E − 110 |

| Std | 0 | 6.5804E − 101 | 6.4523E − 06 | 3.4672E − 94 | 2.1575E − 34 | 2.0495E − 64 | 0 | 0 | 0 | 6.4927E − 44 | 2.4941E − 01 | 8.2185E − 01 | 1.1671E − 109 | |

| p value | — | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | NaN | NaN | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | |

| Rank | 1 | 6 | 11 | 7 | 10 | 8 | 4 | 1 | 1 | 9 | 12 | 13 | 5 | |

| F2 | Mean | 0 | 1.2580E − 52 | 1.5150E − 03 | 5.1539E − 43 | 0 | 5.5376E − 36 | 4.3975E − 100 | 0 | 0 | 9.4366E − 24 | 1.7163E − 01 | 1.7947E + 00 | 4.9236E − 61 |

| Std | 0 | 6.8904E − 52 | 7.7126E − 04 | 1.2954E − 42 | 0 | 1.2643E − 35 | 1.9856E − 99 | 0 | 0 | 5.0227E − 23 | 1.9407E − 01 | 1.4158E + 00 | 9.4981E − 61 | |

| p value | — | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | NaN | 1.2118E − 12 | 1.2118E − 12 | NaN | NaN | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | |

| Rank | 1 | 7 | 11 | 8 | 1 | 9 | 5 | 1 | 1 | 10 | 12 | 13 | 6 | |

| F3 | Mean | 0 | 2.7322E − 99 | 2.4004E + 01 | 2.7757E − 56 | 1.0008E − 02 | 5.9800E − 49 | 2.9285E − 182 | 0 | 0 | 1.9650E − 21 | 3.8052E + 01 | 1.3895E + 03 | 5.6172E − 111 |

| Std | 0 | 1.4965E − 98 | 1.8300E + 01 | 1.5105E − 55 | 1.5955E − 02 | 3.2090E − 48 | 0 | 0 | 0 | 1.0762E − 20 | 3.8905E + 01 | 4.0077E + 02 | 2.6073E − 110 | |

| p value | — | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | NaN | NaN | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | |

| Rank | 1 | 6 | 11 | 7 | 10 | 8 | 4 | 1 | 1 | 9 | 12 | 13 | 5 | |

| F4 | Mean | 0 | 1.0076E − 53 | 9.2019E − 01 | 9.8256E − 41 | 2.6076E − 02 | 3.6544E − 27 | 1.1759E − 91 | 0 | 0 | 2.6781E − 16 | 8.3346E − 02 | 7.1134E + 00 | 6.0476E − 59 |

| Std | 0 | 5.5085E − 53 | 6.8201E − 01 | 1.6184E − 40 | 2.0631E − 02 | 1.6360E − 26 | 3.7854E − 91 | 0 | 0 | 1.3583E − 15 | 5.5805E − 02 | 2.5478E + 00 | 2.1111E − 58 | |

| p value | — | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | NaN | NaN | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | |

| Rank | 1 | 6 | 12 | 7 | 10 | 8 | 4 | 1 | 1 | 9 | 11 | 13 | 5 | |

| F5 | Mean | 1.5982E − 07 | 8.0245E − 04 | 1.2713E + 01 | 8.1014E + 00 | 9.5104E + 00 | 1.1935E + 00 | 9.5724E + 00 | 3.7068E + 00 | 8.9767E + 00 | 1.2470E + 01 | 5.5234E − 01 | 1.1965E + 02 | 9.6259E + 00 |

| Std | 5.4520E − 07 | 1.7527E − 03 | 2.0941E + 01 | 1.2851E + 01 | 1.3642E + 01 | 4.9565E + 00 | 1.3770E + 01 | 9.2134E + 00 | 1.2927E + 01 | 2.1930E + 01 | 1.7195E + 00 | 3.1563E + 02 | 1.3846E + 01 | |

| p value | — | 1.7072E − 04 | 1.2710E − 11 | 1.7072E − 04 | 1.6025E − 09 | 1.7072E − 04 | 1.7072E − 04 | 3.8303E − 03 | 3.8303E − 03 | 1.7072E − 04 | 1.2710E − 11 | 1.2710E − 11 | 1.7072E − 04 | |

| Rank | 1 | 2 | 12 | 6 | 8 | 4 | 9 | 5 | 7 | 11 | 3 | 13 | 10 | |

| F6 | Mean | 1.1124E − 22 | 1.1238E − 04 | 1.7637E − 05 | 1.0880E + 00 | 3.1760E + 00 | 4.3183E − 03 | 6.1029E + 00 | 3.3753E − 03 | 3.4253E − 01 | 1.4773E − 02 | 1.9287E − 01 | 2.4266E + 00 | 2.5319E + 00 |

| Std | 2.1677E − 22 | 3.3118E − 04 | 7.9050E − 06 | 8.8946E − 01 | 2.3486E − 01 | 2.0796E − 03 | 9.6084E − 01 | 1.8976E − 03 | 2.6772E − 01 | 5.0520E − 02 | 3.4373E − 01 | 1.1534E + 00 | 4.7022E − 01 | |

| p value | — | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | |

| Rank | 1 | 3 | 2 | 9 | 12 | 5 | 13 | 4 | 8 | 6 | 7 | 10 | 11 | |

| F7 | Mean | 4.6440E − 05 | 9.5864E − 05 | 2.1025E − 02 | 2.6626E − 04 | 8.0932E − 05 | 6.6734E − 04 | 6.4010E − 05 | 1.2063E − 04 | 8.1337E − 05 | 1.2373E − 03 | 7.3769E − 04 | 4.0334E − 02 | 1.5063E − 04 |

| Std | 4.5346E − 05 | 1.1056E − 04 | 8.5499E − 03 | 1.6645E − 04 | 6.2971E − 05 | 4.2719E − 04 | 4.6300E − 05 | 1.0443E − 04 | 8.1403E − 05 | 1.1608E − 03 | 4.9856E − 04 | 1.3855E − 02 | 1.6752E − 04 | |

| p value | — | 6.1452E − 02 | 3.0199E − 11 | 1.4110E − 09 | 7.2884E − 03 | 2.3715E − 10 | 7.7272E − 02 | 8.1200E − 04 | 4.5146E − 02 | 3.0199E − 11 | 4.5043E − 11 | 3.0199E − 11 | 3.5638E − 04 | |

| Rank | 1 | 5 | 12 | 8 | 3 | 9 | 2 | 6 | 4 | 11 | 10 | 13 | 7 | |

| F8 | Mean | −11853.5931 | −7551.7382 | −7639.7174 | −12494.4857 | −5302.6190 | −10042.2968 | −3368.2420 | −12569.1358 | −8360.2620 | −8819.3101 | −8649.1994 | −9970.0736 | −4599.6274 |

| Std | 3.2266E + 02 | 3.7125E + 03 | 7.6321E + 02 | 1.1531E + 02 | 4.8120E + 02 | 5.0598E + 02 | 4.2519E + 02 | 2.4943E − 01 | 4.7041E + 02 | 5.3122E + 02 | 4.5318E + 03 | 4.8474E + 02 | 1.0661E + 03 | |

| p value | — | 2.0681E − 02 | 3.0199E − 11 | 7.3803E − 10 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 8.7663E − 01 | 3.0199E − 11 | 3.0199E − 11 | |

| Rank | 3 | 10 | 9 | 2 | 11 | 4 | 13 | 1 | 8 | 6 | 7 | 5 | 12 | |

| F9 | Mean | 0 | 0 | 2.4217E + 01 | 2.8386E + 00 | 0 | 0 | 2.0511E + 01 | 0 | 0 | 9.6122E − 12 | 1.7250E + 01 | 6.7690E + 01 | 0 |

| Std | 0 | 0 | 2.1331E + 01 | 8.0596E + 00 | 0 | 0 | 4.7975E + 01 | 0 | 0 | 3.6709E − 11 | 6.5699E + 01 | 1.4776E + 01 | 0 | |

| p value | — | NaN | 1.2118E − 12 | 1.7016E − 08 | NaN | NaN | 8.8658E − 07 | NaN | NaN | 1.6080E − 01 | 1.2118E − 12 | 1.2118E − 12 | NaN | |

| Rank | 1 | 1 | 12 | 9 | 1 | 1 | 11 | 1 | 1 | 8 | 10 | 13 | 1 | |

| F10 | Mean | 8.8818E − 16 | 8.8818E − 16 | 6.3064E − 04 | 2.1238E − 01 | 8.8818E − 16 | 8.8818E − 16 | 4.5593E − 15 | 8.8818E − 16 | 8.8818E − 16 | 1.9540E − 15 | 1.2623E − 01 | 1.9719E + 00 | 8.8818E − 16 |

| Std | 0 | 0 | 2.2418E − 04 | 6.5405E − 01 | 0 | 0 | 6.4863E − 16 | 0 | 0 | 1.6559E − 15 | 1.5477E − 01 | 5.5281E − 01 | 0 | |

| p value | — | NaN | 1.2118E − 12 | 1.2003E − 13 | NaN | NaN | 2.7085E − 14 | NaN | NaN | 1.3055E − 03 | 1.2118E − 12 | 1.2118E − 12 | NaN | |

| Rank | 1 | 1 | 10 | 12 | 1 | 1 | 9 | 1 | 1 | 8 | 11 | 13 | 1 | |

| F11 | Mean | 0 | 0 | 1.7566E − 02 | 9.7614E − 02 | 2.0686E − 01 | 0 | 2.1866E − 03 | 0 | 0 | 0 | 2.5235E − 01 | 9.7313E − 01 | 0 |

| Std | 0 | 0 | 1.5164E − 02 | 2.1233E − 01 | 1.7253E − 01 | 0 | 4.6871E − 03 | 0 | 0 | 0 | 2.9673E − 01 | 5.8124E − 02 | 0 | |

| p value | — | NaN | 1.2118E − 12 | 2.7880E − 03 | 1.2118E − 12 | NaN | 1.1035E − 02 | NaN | NaN | NaN | 1.2118E − 12 | 1.2118E − 12 | NaN | |

| Rank | 1 | 1 | 9 | 10 | 11 | 1 | 8 | 1 | 1 | 1 | 12 | 13 | 1 | |

| F12 | Mean | 5.5182E − 22 | 5.3309E − 06 | 4.1188E − 02 | 6.6459E − 02 | 5.0828E − 01 | 5.3152E − 04 | 1.0151E + 00 | 2.4929E − 03 | 1.3537E − 02 | 1.3919E − 02 | 2.4905E − 03 | 3.1475E + 00 | 2.1259E − 01 |

| Std | 2.4183E − 21 | 1.1174E − 05 | 1.5607E − 01 | 1.4895E − 01 | 5.5470E − 02 | 1.1914E − 03 | 2.9093E − 01 | 3.0834E − 03 | 1.0987E − 02 | 3.5814E − 02 | 5.3829E − 03 | 1.5219E + 00 | 9.1213E − 02 | |

| p value | — | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | |

| Rank | 1 | 2 | 8 | 9 | 11 | 3 | 12 | 5 | 6 | 7 | 4 | 13 | 10 | |

| F13 | Mean | 7.2674E − 19 | 3.7313E − 05 | 1.4965E − 03 | 5.6600E − 01 | 2.8558E + 00 | 6.1432E − 03 | 2.6254E + 00 | 5.1881E − 03 | 2.3028E + 00 | 8.2866E − 02 | 1.3434E − 02 | 2.8794E − 01 | 2.8650E + 00 |
| | Std | 3.9609E − 18 | 7.7594E − 05 | 3.7935E − 03 | 9.2016E − 01 | 1.0236E − 01 | 1.6917E − 02 | 2.2479E − 01 | 6.3860E − 03 | 3.1519E − 01 | 1.6488E − 01 | 1.5913E − 02 | 1.9597E − 01 | 6.5642E − 02 |
| | p value | — | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 | 3.0199E − 11 |
| | Rank | 1 | 2 | 3 | 9 | 12 | 5 | 11 | 4 | 10 | 7 | 6 | 8 | 13 |
| F14 | Mean | 9.9800E − 01 | 2.6972E + 00 | 9.9800E − 01 | 9.9800E − 01 | 1.0065E + 01 | 9.9800E − 01 | 1.0742E + 01 | 9.9800E − 01 | 5.0974E + 00 | 1.6252E + 00 | 1.1330E + 01 | 9.9800E − 01 | 3.8790E + 00 |
| | Std | 0 | 3.2838E + 00 | 1.4277E − 15 | 1.9755E − 06 | 3.7068E + 00 | 4.1233E − 17 | 5.2001E + 00 | 1.4114E − 12 | 4.9969E + 00 | 1.2032E + 00 | 2.9788E + 01 | 2.9483E − 12 | 3.9294E + 00 |
| | p value | — | 1.2118E − 12 | 1.1575E − 12 | 1.2177E − 07 | 1.2118E − 12 | 3.3371E − 01 | 1.2118E − 12 | 1.2118E − 12 | 1.2098E − 12 | 3.1296E − 04 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 |
| | Rank | 1 | 8 | 3 | 6 | 11 | 2 | 12 | 4 | 10 | 7 | 13 | 5 | 9 |
| F15 | Mean | 3.1838E − 04 | 4.9982E − 04 | 1.8442E − 03 | 5.7538E − 04 | 1.4003E − 02 | 3.2466E − 04 | 4.6376E − 03 | 5.7419E − 04 | 2.5654E − 03 | 1.3941E − 03 | 1.2771E − 02 | 4.4301E − 03 | 7.7314E − 04 |
| | Std | 1.8035E − 05 | 1.2567E − 04 | 5.0410E − 03 | 2.9943E − 04 | 2.5025E − 02 | 3.6114E − 05 | 8.1702E − 03 | 2.8266E − 04 | 6.0362E − 03 | 3.6145E − 03 | 3.0340E − 02 | 7.5764E − 03 | 4.7210E − 04 |
| | p value | — | 1.2057E − 10 | 5.6073E − 05 | 1.2023E − 08 | 6.0658E − 11 | 6.6273E − 04 | 1.0702E − 09 | 8.3520E − 08 | 2.3768E − 07 | 3.1565E − 05 | 3.0199E − 11 | 7.3891E − 11 | 2.1544E − 10 |
| | Rank | 1 | 3 | 8 | 5 | 13 | 2 | 11 | 4 | 9 | 7 | 12 | 10 | 6 |
| F16 | Mean | −1.0316 | −1.0313 | −1.0316 | −1.0316 | −1.0316 | −1.0316 | −1.0263 | −1.0316 | −1.0316 | −1.0316 | −0.8394 | −1.0316 | −1.0307 |
| | Std | 4.9651E − 16 | 3.9153E − 04 | 1.0345E − 12 | 5.4546E − 16 | 1.2736E − 07 | 9.7461E − 11 | 1.1985E − 02 | 7.3076E − 10 | 1.3065E − 15 | 5.5319E − 16 | 1.6454E − 01 | 1.5929E − 07 | 2.4211E − 03 |
| | p value | — | 1.7895E − 11 | 3.5769E − 01 | 6.0025E − 01 | 1.7895E − 11 | 5.0829E − 01 | 1.7895E − 11 | 1.3120E − 06 | 3.7211E − 01 | 7.8859E − 05 | 1.7895E − 11 | 6.6730E − 11 | 1.7895E − 11 |
| | Rank | 1 | 10 | 5 | 2 | 8 | 6 | 12 | 7 | 4 | 3 | 13 | 9 | 11 |
| F17 | Mean | 0.3979 | 0.3981 | 0.3979 | 0.3979 | 0.4077 | 0.3979 | 0.3993 | 0.3979 | 0.3979 | 0.3979 | 0.9020 | 0.3979 | 0.4130 |
| | Std | 2.6258E − 15 | 3.0193E − 04 | 5.8965E − 11 | 1.3093E − 06 | 6.6929E − 03 | 4.4884E − 06 | 1.3987E − 03 | 1.3815E − 07 | 2.0909E − 09 | 0 | 1.1421E + 00 | 3.5878E − 07 | 2.0886E − 02 |
| | p value | — | 4.5043E − 11 | 3.0199E − 11 | 4.1040E − 11 | 3.0199E − 11 | 3.1602E − 12 | 3.3384E − 11 | 9.0632E − 08 | 3.0199E − 11 | 1.2118E − 12 | 3.0199E − 11 | 1.2477E − 04 | 3.0199E − 11 |
| | Rank | 2 | 9 | 3 | 7 | 11 | 8 | 10 | 5 | 4 | 1 | 13 | 6 | 12 |
| F18 | Mean | 3.0000 | 3.0315 | 3.0000 | 7.5000 | 7.5005 | 3.0000 | 7.5007 | 3.0000 | 3.0000 | 3.0000 | 19.5239 | 3.0000 | 3.0010 |
| | Std | 1.1125E − 15 | 3.3959E − 02 | 1.6494E − 08 | 1.0234E + 01 | 1.0234E + 01 | 1.3576E − 15 | 1.0236E + 01 | 9.6637E − 12 | 9.2370E − 12 | 1.6306E − 15 | 1.0812E + 01 | 1.3147E − 06 | 2.3255E − 03 |
| | p value | — | 1.7546E − 11 | 1.7546E − 11 | 5.7130E − 08 | 1.7546E − 11 | 3.7853E − 02 | 1.7546E − 11 | 1.7546E − 11 | 1.7546E − 11 | 7.6974E − 04 | 1.7546E − 11 | 1.7546E − 11 | 1.7546E − 11 |
| | Rank | 1 | 9 | 6 | 10 | 11 | 2 | 12 | 5 | 4 | 3 | 13 | 7 | 8 |
| F19 | Mean | −3.8628 | −3.8565 | −3.8628 | −3.8628 | −3.8521 | −3.8628 | −3.8586 | −3.8628 | −3.8628 | −3.8628 | −3.2444 | −3.8628 | −3.8498 |
| | Std | 2.4101E − 15 | 4.6038E − 03 | 3.1119E − 07 | 2.5391E − 15 | 4.9238E − 03 | 2.4338E − 15 | 3.8757E − 03 | 6.6070E − 07 | 1.4640E − 12 | 2.6962E − 15 | 3.8246E − 01 | 5.8676E − 07 | 8.1185E − 03 |
| | p value | — | 1.2118E − 12 | 1.2118E − 12 | 1.2819E − 04 | 1.2118E − 12 | 1.7918E − 07 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 3.3371E − 01 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 |
| | Rank | 1 | 10 | 6 | 3 | 11 | 2 | 9 | 8 | 5 | 4 | 13 | 7 | 12 |
| F20 | Mean | −3.3180 | −3.1614 | −3.2744 | −3.3101 | −3.0548 | −3.3022 | −3.1557 | −3.2543 | −3.2744 | −3.2863 | −1.7718 | −3.2705 | −2.5672 |
| | Std | 2.1707E − 02 | 1.0313E − 01 | 5.9242E − 02 | 3.6278E − 02 | 8.7518E − 02 | 4.5066E − 02 | 1.7686E − 01 | 6.0203E − 02 | 6.6992E − 02 | 5.5415E − 02 | 5.7144E − 01 | 5.9930E − 02 | 4.6047E − 01 |
| | p value | — | 3.4939E − 10 | 2.7875E − 09 | 5.5310E − 03 | 1.2676E − 11 | 2.1547E − 06 | 8.2776E − 10 | 6.2217E − 10 | 8.2703E − 09 | 4.0485E − 01 | 1.2676E − 11 | 1.9262E − 09 | 1.2676E − 11 |
| | Rank | 1 | 9 | 5 | 2 | 11 | 3 | 10 | 8 | 6 | 4 | 13 | 7 | 12 |
| F21 | Mean | −10.1532 | −10.1458 | −6.8968 | −10.0293 | −3.5438 | −10.1505 | −6.6362 | −10.1528 | −6.7781 | −7.9737 | −4.8880 | −8.3036 | −3.2110 |
| | Std | 7.2269E − 15 | 1.0302E − 02 | 3.4191E + 00 | 2.5029E − 01 | 1.1557E + 00 | 1.2640E − 02 | 1.4262E + 00 | 2.1748E − 04 | 2.9086E + 00 | 2.9988E + 00 | 1.2337E + 00 | 2.7217E + 00 | 2.1989E + 00 |
| | p value | — | 1.2118E − 12 | 1.2118E − 12 | 1.1680E − 12 | 1.2118E − 12 | 1.2009E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 5.0090E − 09 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 |
| | Rank | 1 | 4 | 8 | 5 | 12 | 3 | 10 | 2 | 9 | 7 | 11 | 6 | 13 |
| F22 | Mean | −10.4029 | −10.3955 | −6.5803 | −10.3717 | −3.4513 | −9.9577 | −5.6700 | −10.4027 | −7.6337 | −8.4904 | −5.0128 | −9.3472 | −3.7030 |
| | Std | 9.8958E − 16 | 1.0012E − 02 | 3.7393E + 00 | 1.2710E − 01 | 1.1586E + 00 | 1.6944E + 00 | 2.4093E + 00 | 2.4446E − 04 | 3.0825E + 00 | 3.0370E + 00 | 9.3867E − 01 | 2.1455E + 00 | 1.9874E + 00 |
| | p value | — | 1.0149E − 11 | 1.0149E − 11 | 3.8609E − 08 | 1.0149E − 11 | 1.0045E − 11 | 1.0149E − 11 | 1.0149E − 11 | 1.0149E − 11 | 1.3184E − 03 | 1.0149E − 11 | 1.0149E − 11 | 1.0149E − 11 |
| | Rank | 1 | 3 | 9 | 4 | 13 | 5 | 10 | 2 | 8 | 7 | 11 | 6 | 12 |
| F23 | Mean | −10.5364 | −10.5225 | −5.9477 | −10.4759 | −3.9802 | −10.1326 | −6.0682 | −10.5361 | −8.0181 | −8.3159 | −4.5777 | −9.7278 | −4.1211 |
| | Std | 1.8067E − 15 | 2.2253E − 02 | 3.9028E + 00 | 1.9642E − 01 | 1.6526E + 00 | 1.5454E + 00 | 2.6659E + 00 | 2.2169E − 04 | 2.9789E + 00 | 3.4865E + 00 | 1.4196E + 00 | 2.1065E + 00 | 1.4776E + 00 |
| | p value | — | 1.2118E − 12 | 1.2118E − 12 | 3.9038E − 12 | 1.2118E − 12 | 1.2068E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 | 1.5405E − 04 | 1.2118E − 12 | 1.2118E − 12 | 1.2118E − 12 |
| | Rank | 1 | 3 | 10 | 4 | 13 | 5 | 9 | 2 | 8 | 7 | 11 | 6 | 12 |
| +/−/= | | — | 19/1/3 | 22/1/0 | 22/1/0 | 20/0/3 | 18/2/3 | 22/1/0 | 16/0/7 | 15/1/7 | 19/1/3 | 22/1/0 | 23/0/0 | 20/0/3 |
| Mean rank | | 1.1304 | 5.2174 | 8.0435 | 6.5652 | 9.3478 | 4.5217 | 9.1304 | 3.4348 | 5.0870 | 6.6087 | 10.4348 | 9.6522 | 8.4348 |
| Final ranking | | 1 | 5 | 8 | 6 | 11 | 3 | 10 | 2 | 4 | 7 | 13 | 12 | 9 |

- Note: The best results obtained are highlighted in bold.

For unimodal functions, SMA, COA, and the proposed MCOA converge to the global optimum (0) on F1∼F4 and share the top rank among all methods. AOA also reaches the global optimum on F2. Because these functions are relatively easy to solve, they do not clearly separate MCOA from its strongest competitors. On F5∼F7, however, MCOA significantly outperforms the other comparison algorithms. On F5 and F6, MCOA achieves solutions that are orders of magnitude better than those of COA and substantially closer to the theoretical optimum. Since unimodal functions contain only one global optimum, these results highlight MCOA's strong exploitation capability. The improvement stems from the Lévy flight strategy in equation (14), which strengthens local search, and from the vertical crossover operator, which refines convergence precision.
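To make the role of the Lévy flight step more concrete, the following is a minimal sketch of how heavy-tailed step lengths are commonly generated with Mantegna's algorithm. It is not a reproduction of equation (14): the stability index `beta = 1.5` and the function names `levy_sigma` and `levy_step` are assumptions chosen for illustration.

```python
import math
import random
from typing import List, Optional


def levy_sigma(beta: float = 1.5) -> float:
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    numerator = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    denominator = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (numerator / denominator) ** (1 / beta)


def levy_step(dim: int, beta: float = 1.5,
              rng: Optional[random.Random] = None) -> List[float]:
    """Draw one Levy-distributed step per dimension (assumed beta, not from the paper)."""
    rng = rng or random.Random()
    sigma = levy_sigma(beta)
    step = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma)
        v = rng.gauss(0.0, 1.0)
        while v == 0.0:  # guard against a division by zero
            v = rng.gauss(0.0, 1.0)
        step.append(u / abs(v) ** (1 / beta))
    return step


sigma_15 = levy_sigma(1.5)
sample_step = levy_step(5, rng=random.Random(0))
print(round(sigma_15, 4), sample_step)
```

Most steps drawn this way are small, which favors fine local refinement, while occasional long jumps help an agent leave a stagnating region.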

For multimodal functions, MCOA obtains the best average fitness value and standard deviation on F9∼F13. On F12 and F13 in particular, the convergence accuracy of MCOA is significantly higher than that of AO and COA, owing to the expanded exploration mechanism adopted from AO. On F9 and F10, MCOA, AO, AOA, ARO, SMA, COA, and SCSO show comparable search performance, all providing desirable solutions. On F11, MCOA performs the same as AO, ARO, SMA, COA, WHO, and SCSO, and all of them tie for first place. On F8, MCOA is slightly inferior to SMA and SO and ranks third among all methods. Even so, its convergence accuracy is still significantly better than that of COA. Taken together, these results show that MCOA has strong exploration and local-optimum avoidance abilities on multimodal functions. The expanded exploration mechanism of AO compensates for the limited exploration capability of COA, and the specular reflection learning strategy lets the search agents search in both directions during the iterations, which increases the probability of locating the global optimum.

For fixed-dimension multimodal functions (F14∼F23), MCOA ranks first on 9 out of 10 functions. On F17, MCOA reaches the same mean value as WHO (0.3979), but its standard deviation is slightly larger, so it ranks second. The underperformance of COA on F21∼F23, where its solutions remain far from the theoretical optimal values, indicates an insufficient balance between exploration and exploitation in the original algorithm. MCOA, in contrast, establishes a robust balance between the two and therefore handles fixed-dimension multimodal functions better.
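The F17 ranking can be reproduced from the Mean and Std rows of Table 5 with a short sketch. It assumes that algorithms are ordered by mean fitness first and that ties in the printed mean are broken by the smaller standard deviation; this rule is inferred from the table rather than stated explicitly, and the helper name `rank_by_mean_then_std` is hypothetical.

```python
ALGORITHMS = ["MCOA", "AO", "DO", "SO", "AOA", "ARO", "TSA",
              "SMA", "COA", "WHO", "LCA", "RIME", "SCSO"]

# F17 values copied from Table 5 (minimization problem).
F17_MEAN = [0.3979, 0.3981, 0.3979, 0.3979, 0.4077, 0.3979, 0.3993,
            0.3979, 0.3979, 0.3979, 0.9020, 0.3979, 0.4130]
F17_STD = [2.6258e-15, 3.0193e-04, 5.8965e-11, 1.3093e-06, 6.6929e-03,
           4.4884e-06, 1.3987e-03, 1.3815e-07, 2.0909e-09, 0.0,
           1.1421e+00, 3.5878e-07, 2.0886e-02]


def rank_by_mean_then_std(means, stds):
    """Return 1-based ranks; lower mean wins, lower std breaks ties (assumed rule)."""
    order = sorted(range(len(means)), key=lambda i: (means[i], stds[i]))
    ranks = [0] * len(means)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


f17_ranks = rank_by_mean_then_std(F17_MEAN, F17_STD)
print(dict(zip(ALGORITHMS, f17_ranks)))
```

Under this rule WHO, with a standard deviation of 0, is placed ahead of MCOA, which matches the Rank row reported for F17.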

The p value rows in Table 5 report the results of the Wilcoxon rank-sum test, which the third-to-last row summarizes as +/−/= counts. According to these counts, MCOA outperforms AO on 19 problems, DO on 22, SO on 22, AOA on 20, ARO on 18, TSA on 22, SMA on 16, COA on 15, WHO on 19, LCA on 22, RIME on all 23, and SCSO on 20 problems. Furthermore, the last two rows in Table 5 present the Friedman mean ranking and the final ranking of the algorithms on the 23 benchmark functions. MCOA attains the best mean ranking value of 1.1304. These quantitative results show that the proposed technique has good potential for numerical optimization on the CEC2005 test set.
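As a rough illustration of how the last two rows of Table 5 relate to each other, the sketch below averages per-function ranks and then orders the algorithms by that average. The mean-rank values are copied from Table 5; the MCOA rank list (21 first places, one second place on F17, one third place on F8) is reconstructed from the discussion above, and the helper names are hypothetical.

```python
ALGORITHMS = ["MCOA", "AO", "DO", "SO", "AOA", "ARO", "TSA",
              "SMA", "COA", "WHO", "LCA", "RIME", "SCSO"]

# "Mean rank" row of Table 5.
MEAN_RANK = [1.1304, 5.2174, 8.0435, 6.5652, 9.3478, 4.5217, 9.1304,
             3.4348, 5.0870, 6.6087, 10.4348, 9.6522, 8.4348]


def mean_rank(ranks):
    """Average rank of one algorithm over all benchmark functions."""
    return sum(ranks) / len(ranks)


def final_ranking(mean_ranks):
    """1-based position of each algorithm when sorted by ascending mean rank."""
    order = sorted(range(len(mean_ranks)), key=lambda i: mean_ranks[i])
    positions = [0] * len(mean_ranks)
    for position, index in enumerate(order, start=1):
        positions[index] = position
    return positions


# Reconstructed MCOA ranks: first on 21 functions, second on F17, third on F8.
mcoa_ranks = [1] * 21 + [2, 3]
mcoa_mean_rank = mean_rank(mcoa_ranks)
ranking = final_ranking(MEAN_RANK)

print(round(mcoa_mean_rank, 4))
print(dict(zip(ALGORITHMS, ranking)))
```

The reconstructed average, 26/23, rounds to the reported 1.1304, and sorting the mean ranks reproduces the Final ranking row.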

5.2.5. Convergence Analysis

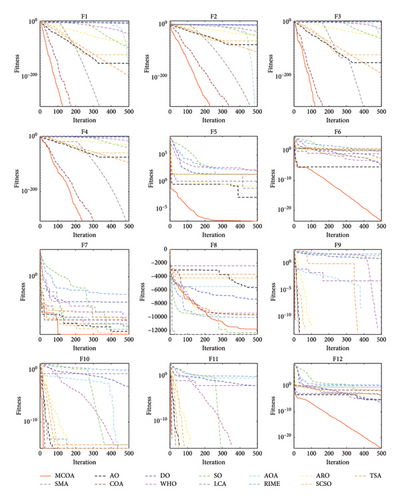

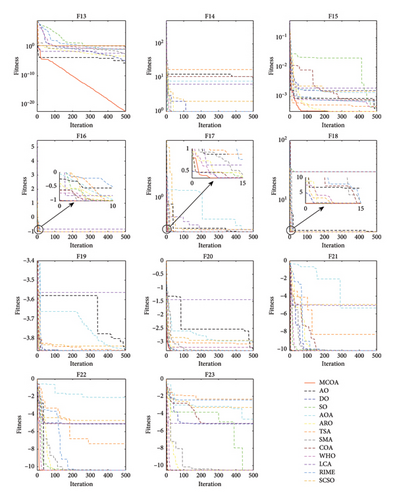

Figure 9 presents the convergence curves of MCOA and the other algorithms on all 23 benchmark functions. In most test cases, MCOA converges steadily toward the optimal solution within 500 iterations. For unimodal functions, MCOA, COA, and SMA all reach the optimal value (0) on F1∼F4, but MCOA requires notably fewer iterations, indicating superior convergence speed. This acceleration can be attributed primarily to the Lévy flight operator, which enhances the exploitation phase and guides the algorithm more efficiently toward the global optimum. For the more challenging F5 and F6, the convergence curves of MCOA decay steadily with continuously improving solution accuracy, whereas the other comparison algorithms stagnate earlier. On F7, MCOA is slightly inferior in the initial iterations but eventually obtains better convergence results than the other optimizers.

For multimodal functions, MCOA rapidly identifies promising regions during the early iterations and then exploits these neighborhoods intensively to improve convergence accuracy. On F9∼F11, MCOA provides high-quality solutions within very few iterations. MCOA inherits the strong exploration capability of AO because it adopts the position update mode of expanded exploration. On F12 and F13, the early exploration performance of MCOA and AO is nearly identical, but MCOA avoids local optima more effectively and therefore reaches better convergence accuracy. On F8, MCOA performs slightly worse than SO and SMA, ranking third in the test.

For fixed-dimension multimodal functions, MCOA also maintains good convergence speed and accuracy. It transitions rapidly from exploration to exploitation in the early iterations, effectively circumventing local optima. Particularly on F14, F15, F22, and F23, COA visibly falls into local optima because of the imbalance between exploration and exploitation, a problem that MCOA successfully overcomes.

These results indicate that the four improvement strategies adopted in this paper strengthen both the exploitation and the exploration of the algorithm, allowing it to converge more rapidly to higher accuracy and to avoid stagnation during the optimization process. In summary, MCOA achieves highly competitive convergence performance compared with other state-of-the-art MH algorithms.
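A convergence curve of the kind discussed here is usually the best-so-far fitness recorded at each iteration. The following minimal sketch builds such a curve and counts how many iterations are needed to reach a target value; the fitness trace is made-up illustrative data, and the helper names and the tolerance `tol` are assumptions.

```python
from itertools import accumulate


def convergence_curve(best_per_iteration):
    """Running minimum of the per-iteration best fitness (minimization)."""
    return list(accumulate(best_per_iteration, min))


def iterations_to_reach(curve, target, tol=1e-8):
    """First 1-based iteration whose best-so-far value is within tol of target."""
    for iteration, value in enumerate(curve, start=1):
        if abs(value - target) <= tol:
            return iteration
    return None  # target never reached within the recorded iterations


# Hypothetical per-iteration best fitness values, not taken from the paper.
raw_trace = [5.0, 3.0, 4.0, 1.0, 2.0, 0.0, 0.5]
curve = convergence_curve(raw_trace)
hit = iterations_to_reach(curve, target=0.0)
print(curve, hit)
```

Comparing the returned iteration count across algorithms gives a simple numerical counterpart to the visual statement that one curve reaches the optimum in fewer iterations than another.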

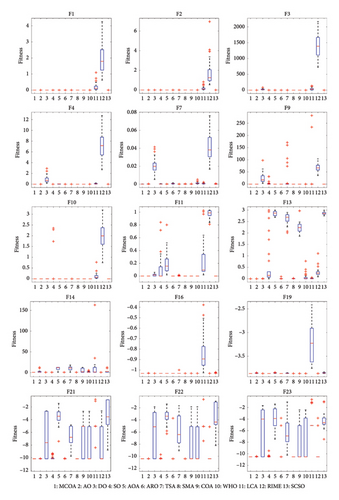

5.2.6. Boxplot Behavior Analysis

Figure 10 presents the boxplots of MCOA and the comparison algorithms on selected benchmark functions. In these boxplots, the center line represents the median value, the upper and lower whisker edges indicate the maximum and minimum values, respectively, and outliers are denoted by plus signs (“+”). MCOA shows good data consistency on the unimodal functions F1, F2, F3, F4, and F7. Notably, on F7, both AO and COA generate outliers, while MCOA does not. Because multimodal and fixed-dimension multimodal functions contain local minima, they serve as a stability check for the optimizer. MCOA produces a few outliers on F13, but the other algorithms perform worse: SO shows obvious outliers, and the medians of TSA and COA are far from the theoretical optimum (0). In the other test cases, the boxplots of MCOA are more compact than those of the comparison algorithms in terms of maximum, minimum, and median values. These boxplot analyses confirm that integrating multiple search strategies significantly improves the robustness of COA.
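The quantities drawn in such a boxplot can be computed directly from the final fitness values of the independent runs. The sketch below assumes the common 1.5 × IQR rule for flagging outliers, which is not stated in the text, and uses made-up sample values; `box_stats` is a hypothetical helper.

```python
import statistics


def box_stats(samples, whisker=1.5):
    """Median, quartiles, whisker ends, and outliers under the 1.5*IQR rule (assumed)."""
    data = sorted(samples)
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence = q1 - whisker * iqr
    high_fence = q3 + whisker * iqr
    inliers = [x for x in data if low_fence <= x <= high_fence]
    outliers = [x for x in data if x < low_fence or x > high_fence]
    return {
        "median": median,
        "q1": q1,
        "q3": q3,
        "whisker_low": inliers[0],    # smallest value that is not an outlier
        "whisker_high": inliers[-1],  # largest value that is not an outlier
        "outliers": outliers,         # drawn as "+" markers in the figure
    }


# Hypothetical final fitness values from nine runs, not taken from the paper.
stats = box_stats([1, 2, 3, 4, 5, 6, 7, 8, 100])
print(stats)
```

A narrow box with no outliers corresponds to the consistent behavior reported for MCOA, whereas a value such as 100 in this toy sample would appear as an isolated “+” marker.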

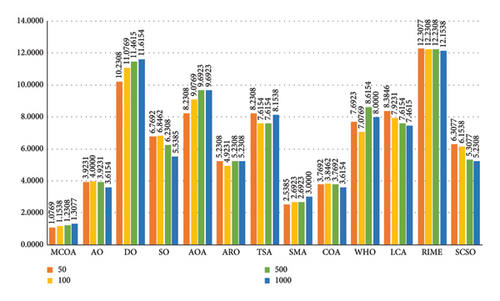

5.2.7. Scalability Analysis

The problem dimension is the number of independent decision variables in the optimization problem. As the dimension increases, many algorithms suffer from the curse of dimensionality and experience significant degradation in convergence accuracy. To assess dimensional robustness, we extend the dimension (D) of the 13 benchmark functions (F1∼F13) from 30 to 50, 100, 500, and 1000, with all other parameter settings unchanged. The detailed experimental results are presented in Tables 6, 7, 8, and 9.
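The scalability protocol amounts to rerunning the same optimizer with the same budget while only D changes. The sketch below illustrates that loop under several assumptions: F1 is taken to be the sphere function on [−100, 100], a plain random search stands in for the optimizer (it is not MCOA), and the evaluation budget and seed are arbitrary.

```python
import random


def sphere(x):
    """Sphere function, assumed here to correspond to F1; optimum is 0 at the origin."""
    return sum(v * v for v in x)


def random_search(objective, dim, lower, upper, evaluations, seed):
    """Placeholder optimizer: best objective value among uniform random samples."""
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(evaluations):
        candidate = [rng.uniform(lower, upper) for _ in range(dim)]
        best = min(best, objective(candidate))
    return best


# Dimensions used in the scalability study; budget and seed are illustrative.
DIMENSIONS = [30, 50, 100, 500, 1000]
results = {
    d: random_search(sphere, d, -100.0, 100.0, evaluations=200, seed=0)
    for d in DIMENSIONS
}

for d, best in results.items():
    print(f"D = {d:4d}: best fitness = {best:.4E}")
```

With a fixed budget, the best value found by this naive baseline grows quickly with D, which is the degradation that a dimensionally robust algorithm is expected to resist.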

| Function | Metric | MCOA | AO | DO | SO | AOA | ARO | TSA | SMA | COA | WHO | LCA | RIME | SCSO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| F1 | Mean | 0 | 7.7349E − 104 | 2.2841E − 03 | 3.7615E − 88 | 2.5430E − 04 | 2.3024E − 64 | 1.8368E − 185 | 0 | 0 | 9.0793E − 40 | 1.8894E − 01 | 1.9083E + 01 | 6.7692E − 117 |
| | Std | 0 | 4.2358E − 103 | 1.0956E − 03 | 1.4491E − 87 | 8.9585E − 04 | 9.3060E − 64 | 0 | 0 | 0 | 2.8325E − 39 | 2.4430E − 01 | 5.0240E + 00 | 3.3274E − 116 |
| F2 | Mean | 0 | 4.2901E − 50 | 2.1083E − 02 | 3.4499E − 39 | 2.4315E − 186 | 3.7916E − 35 | 1.1161E − 95 | 0 | 0 | 1.1617E − 22 | 2.5103E − 01 | 9.6854E + 00 | 2.3729E − 59 |
| | Std | 0 | 1.7196E − 49 | 5.1066E − 03 | 5.2408E − 39 | 0 | 1.1433E − 34 | 2.5455E − 95 | 0 | 0 | 2.2054E − 22 | 1.6048E − 01 | 3.5615E + 00 | 1.1698E − 58 |
| F3 | Mean | 0 | 1.5405E − 104 | 1.1544E + 03 | 2.0839E − 52 | 7.7499E − 02 | 5.5181E − 46 | 1.8650E − 174 | 0 | 0 | 3.8369E − 20 | 1.4141E + 02 | 1.4235E + 04 | 1.5770E − 110 |
| | Std | 0 | 8.4251E − 104 | 6.3811E + 02 | 9.0553E − 52 | 6.4032E − 02 | 2.4178E − 45 | 0 | 0 | 0 | 1.1419E − 19 | 1.6365E + 02 | 2.6278E + 03 | 7.3573E − 110 |

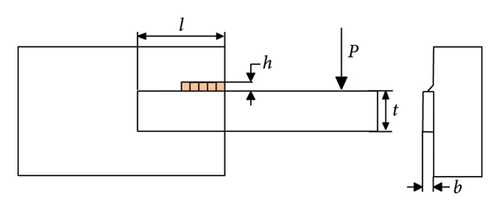

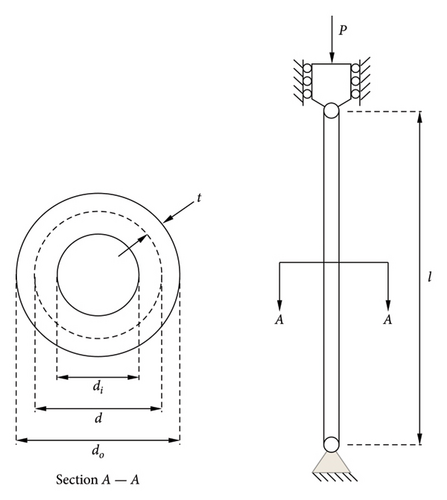

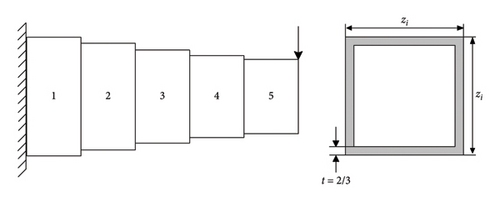

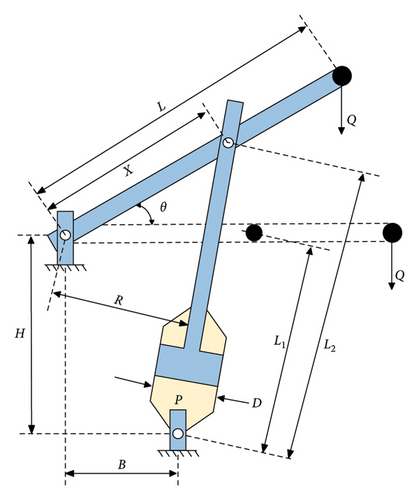

| F4 | Mean | 0 | 2.2620E − 56 | 1.5925E + 01 | 2.2015E − 38 | 5.5102E − 02 | 5.5789E − 25 | 1.7409E − 87 | 0 | 0 | 5.3149E − 15 | 9.0639E − 02 | 3.2034E + 01 | 1.2047E − 57 |