IntFedSV: A Novel Participants’ Contribution Evaluation Mechanism for Federated Learning

Abstract

Federated learning (FL), which is a distributed privacy computing technology, has demonstrated strong capabilities in addressing potential privacy leakage for multisource data fusion and has been widely applied in various industries. Existing contribution evaluation mechanisms based on Shapley values uniquely allocate the total utility of a federation based on the marginal contributions of participants. However, in practical engineering applications, participants from different data sources typically exhibit significant differences and uncertainties in terms of their contributions to a federation, thus rendering it difficult to represent their contributions precisely. To evaluate the contribution of each participant to FL more effectively, we propose a novel interval federated Shapley value (IntFedSV) contribution evaluation mechanism. Second, to improve computational efficiency, we utilize a matrix semitensor product-based method to compute the IntFedSV. Finally, extensive experiments on four public datasets (MNIST, CIFAR10, AG_NEWS, and IMDB) demonstrate its potential in engineering applications. Our proposed mechanism can effectively evaluate the contribution levels of participants. Compared with the case of three advanced baseline methods, the minimum and maximum improvement rates of standard deviation for our proposed mechanism are 11.83% and 99.00%, respectively, thus demonstrating its greater stability and fault tolerance. This study contributes positively to promoting engineering applications of FL.

1. Introduction

In recent years, owing to the continuous development of technologies such as 5G, the Internet of Things, and artificial intelligence, the significance of big data has been constantly highlighted [1]. However, owing to the requirements of trade secrets and privacy protection laws [2, 3], significant amounts of data are typically stored and distributed on the edge devices of various enterprises and individuals. Ensuring the privacy and security of data from all parties while achieving data fusion as well as mining the actual value of big data has become an urgent issue [4]. Federated learning (FL), which is a novel distributed machine-learning technology, is a viable option for solving these issues [5]. Unlike common centralized machine-learning schemes [6], FL only allows participants to train local models using private data locally and then transfer model parameters or gradient information under the protection of encryption mechanisms, thus ultimately yielding a convergent global model. FL is widely applied in various industries, such as healthcare [7], financial risk control [8], and intelligent manufacturing [9].

As a distributed privacy computing technology, FL cannot be applied commercially without an unbiased and reasonable incentive mechanism to encourage more participants to join a federation [10]. A key prerequisite for designing incentive mechanisms is the accurate evaluation of participants’ contribution level [11]. However, in practical engineering applications, the heterogeneity of participants from different data sources in terms of data, computing power, and communication capabilities results in different contribution levels to a federation [12, 13]. Additionally, the order in which participants join the federation and the probability properties of FL result in significant uncertainty in the contribution level of participants [14, 15]. These differences and uncertainties render it difficult to evaluate the participants’ contributions to precise values. Difficulties in effectively evaluating the contribution level of participants will result in adverse problems such as “free riding” and “malicious attacks,” which will degrade the overall utility of a federation [16]. Over time, these problems affect the enthusiasm of participants to join the federation and ultimately affect the sustainable development of the federation ecosystem [17].

From the perspective of game theory, participants of FL exhibit a cooperative game relationship [18]. Therefore, many scholars have considered using the Shapley value (SV) method in cooperative games to evaluate the contribution of FL, based on which positive progress has been realized [11, 19, 20]. Existing SV-based methods uniquely allocate the total utility of a federation based on the marginal contribution of the participants. However, in practical FL scenarios, the total utility generated by a federation typically exhibits significant uncertainty and cannot effectively represent precise values [21]. Hence, we propose an interval federated Shapley value (IntFedSV) contribution evaluation mechanism. This mechanism extends the classic single-point SV to a finite interval, thus enabling the contribution level of participants to be present in the interval. Unlike its counterparts, the IntFedSV mechanism introduces an interval cooperative game SV into the design of a contribution evaluation mechanism for FL, thus effectively addressing the challenges posed by the differences and uncertainties in participant contribution levels in mechanism design. However, the calculation of IntFedSV is exponential. To reduce the time required for calculating the IntFedSV, we introduce the matrix semitensor product theory.

- 1.

Propose an innovative contribution evaluation mechanism: To address the challenges posed by differences and uncertainties in participant contribution levels in practical engineering applications, this paper proposes the IntFedSV contribution evaluation mechanism based on the interval Shapley value (ISV) in FL.

- 2.

Improve computational efficiency: To further improve computational efficiency, this study introduces a matrix semitensor product method to calculate the IntFedSV and provides detailed calculation steps and a verification process.

- 3.

Validate the mechanism performance: The proposed IntFedSV mechanism is validated for its performance in evaluating participant contribution levels through extensive experiments conducted on four public datasets: MNIST, CIFAR10, AG_NEWS, and IMDB. The experimental results show that compared with the case of three advanced baseline methods, the minimum and maximum improvement rates of the standard deviation of the proposed mechanism are 11.83% and 99.00%, respectively, thus demonstrating its greater advantage in terms of stability and fault tolerance. This study contributes positively to promoting the engineering application of FL.

The remainder of this paper is organized as follows: in Section 2, we briefly introduce the existing studies related to the design of the contribution evaluation mechanism for FL; in Section 3, we present the relevant preliminary information and definitions; in Section 4, we detail the proposed mechanism; the experimental design and result analysis are presented in Section 5; finally, we provide the conclusions and future outlook.

2. Related Work

In this section, we first briefly introduce the existing contribution evaluation mechanisms for FL, in particular contribution evaluation methods based on the SV. Second, we introduce the current state of research and applications pertaining to interval cooperative games and matrix semitensor products.

2.1. Contribution Evaluation Mechanism for FL

Currently, the contribution evaluation mechanisms for FL are primarily categorized into four types: self-report [22], marginal contribution [19, 20, 23], similarity [24, 25], and combination optimization [26, 27]. Owing to space limitations, we focus on the marginal contribution evaluation method based on the SV. It was proposed by Professor Lloyd Shapley in 1953, is a classic concept in cooperative game theory [28] and is typically used to measure the contribution of each participant to a value created via cooperation. Song, Tong, and Wei [19] proposed a contribution index (CI) based on the SV and used it to evaluate the contribution levels of participants in horizontal federated learning (HFL). However, directly calculating the CI requires exponential model retraining. They proposed two gradient-based approximation methods (one-round and multiround updating) that significantly improved the evaluation efficiency. To further accelerate the computational efficiency of SV-based contribution evaluation methods, researchers primarily focused on two aspects: (1) improving the speed of single-round evaluation and (2) reducing the number of evaluations for submodels. To improve the speed of single-round evaluations, we can utilize the gradient update approximation reconstruction model trained locally via FL instead of retraining the required submodels [19, 29]. To reduce the number of evaluations of submodels, Monte Carlo sampling or group testing methods are typically used [20, 30]. However, both approximate reconstruction models and Monte Carlo sampling methods generate SV-based contribution levels with large stochastic uncertainties. This is because, in each FL, a client selection mechanism is typically used to select some of the participants for model training. Second, the computing power and communication abilities of the participants during each FL process differ significantly. Therefore, the SV obtained solely from a single gradient update cannot represent the actual contribution of the participants. Hence, we propose an FL contribution evaluation mechanism based on the ISV, which extends the classic single-point SV to a finite interval. The contribution level of the participants is presented within an unbiased and reasonable range.

Previous studies focused primarily on the design of contribution evaluation mechanisms in HFL scenarios [31]. Wang, Dang, and Zhou [11] first considered the contribution evaluation mechanism in vertical federated learning (VFL) and used the SV to calculate the importance of grouped features. Subsequently, Han et al. [32] proposed a new data-valuation metric, named Shapley-CMI (CMI = conditional mutual information), based on information theory and game theory and claimed that it can effectively evaluate the value of participants in VFL tasks without relying on any specific model. However, calculating the conditional mutual information requires accessing the labels of each client, which may be impractical under VFL. Hence, Fan et al. [33] proposed the vertical federated Shapley value (VerFedSV) method and applied it to synchronous and asynchronous VFL algorithms. Numerous experimental results validated the fairness, effectiveness, and adaptability of the VerFedSV method. For more details regarding the contribution evaluation mechanism of VFL, please refer to the most recent review by Cui et al. [34]. In this study, we first apply our proposed IntFedSV contribution evaluation method in HFL.

2.2. Interval Cooperative Games and Matrix Semitensor Product

Owing to the complexity and uncertainty of the environment, the marginal profits obtained by participants in cooperative games are not exact numerical values. Therefore, using the interval to represent uncertainty may be more reasonable. Interval cooperative games are an extension of classical cooperative games, which have been extensively investigated [35]. Based on the SV in cooperative games, Alparslan Gök, Branzei, and Tijs [36] formally defined the ISV. They introduced the ISV into uncertain transportation conditions, investigated a transportation interval game based on partial subtraction operations, and provided interval kernel conclusions for the transportation interval game. Han, Sun, and Xu [37] proposed the relevant operations and properties of real-number intervals based on the Moore subtraction operator and extended them to interval cooperative game models, thus providing solutions for interval kernels and ISV. Palancı et al. [38] proved that the ISV satisfies additivity, effectiveness, symmetry, and virtual participant properties.

Investigations into interval cooperative games are difficult to conduct owing to the interval linear operations involved. Alparslan Gök, Branzei, and Tijs [36] defined an interval subtraction, [aL, aR] − [bL, bR] = [aL − bL, aR − bR]; however, it can only be performed when the condition aR − aL ≥ bR − bL is satisfied, thus posing significant limitations. Fei, Li, and Ye [39] transformed interval profits into a cooperative game with a parameter α. The advantage of this method is that it uses only the maximum and minimum profits of each alliance without requiring the calculation of interval subtraction, thus effectively avoiding the problems of irreversibility and expanded uncertainty for interval operations. Additionally, Fei, Li, and Ye [39] proposed a discounted Shapley value (DSV) profit distribution scheme and proved that the DSV satisfy effectiveness, symmetry, additivity, and δ-discounted player property. When the discount factor δ = 1, δ-discounted players are virtual players; when δ = 0, δ-discounted players are invalid players. The introduction of the discount factor renders alliance profits more realistic, as it not only considers the contribution of each participant to the total profits but also avoids the problem of reduced cooperation enthusiasm among participants due to the average distribution of total profits, thereby compensating for the adverse effects of uncertainty factors.

In recent years, the matrix semitensor product method has become an effective tool for analyzing finite cooperative games [40, 41]. The potential games [42] and partially symmetric games [43] have been extensively investigated under the promotion of matrix semitensor product theory. For more information, readers can refer to the most recent review by Zhao, Li, and Hou [44] regarding matrix semitensor products. In this study, we used the matrix semitensor product method to calculate the IntFedSV of an interval cooperative game with a discount factor in HFL scenarios.

3. Preliminaries

3.1. HFL

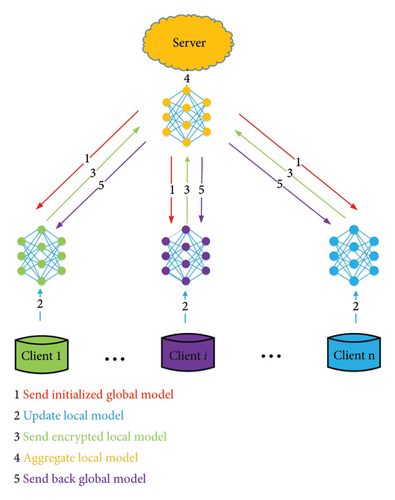

-

Step 1: The server sends an initialized global model M0 to each participant i.

-

Step 2: Participant i trains local model using dataset Di.

-

Step 3: Participant i encrypts model using privacy protection mechanisms such as homomorphic encryption and differential privacy and then sends it to the server.

-

Step 4: The server decrypts the encrypted model from the participants and aggregates them to obtain a new global model Mt+1.

-

Step 5: The server resends the aggregated global model Mt+1 to all participants i.

We adopted the necessary privacy protection mechanisms in HFL to transfer local/global models, thus ensuring the security of the entire training process.

3.2. ISV

The ISV is an improved concept based on the classical SV [36] and is primarily used to manage data with uncertainty or interval values. In the classical SV, the contributions of participants are determined by calculating their marginal contributions in different collaborative situations. Within the ISV framework, the contribution of each participant is extended into an interval [ai, bi], where ai and bi are the minimum and maximum contributions of the participants in different scenarios, respectively. For example, consider three participants, A, B, and C, cooperating to complete a task. The resulting benefit is v(s), where S represents a subset of the participants. In the classical SV, we calculate the marginal contribution of each participant and their average. Meanwhile, in ISV, we calculate the marginal contribution interval of each participant in different contexts and provide an interval for the participant’s contribution. The ISV introduces intervals to address uncertainty, thus rendering it more flexible and adaptable to complex situations in practical applications. It is particularly suitable for problems involving uncertain data or risk assessments and provides more information and robustness to the calculations contributed by participants as compared with the classical SV.

3.3. Matrix Semitensor Product

Matrix semitensor products are the main research tools for state-space methods in logical systems and have been widely applied for solving nonlinear problems. Next, we briefly introduce the definitions, properties, and other aspects of a matrix semitensor product.

Definition 1 (Matrix semitensor product) [46]. If matrix and matrix are provided, then the semitensor product of matrices and is defined as follows:

The matrix semitensor product is an extension of matrix multiplication that extends ordinary matrix multiplication to the multiplication of any two matrices. When n = p, the matrix semitensor product is a regular matrix multiplication. Unless otherwise specified, matrix multiplication mentioned herein refers to a semitensor product, and ⋉ is omitted.

Definition 2 (Permutation matrix). If permutation matrix , then the following is satisfied:

Because is a sparse matrix, it is typically expressed in concise form, W[m, n] = δmn[1 1 + m ⋯ 1 + (n − 1)m ⋯ mm + m ⋯ m + (n − 1)m]. The semitensor product of the matrices satisfies pseudocommutativity [47]. According to Definition 2, vector multiplication can be exchanged with the semitensor product, i.e., .

In the study of finite-interval games, the utility functions of participants can be regarded as pseudo-Boolean (logic) functions. The following proposition provides an algebraic expression for the pseudological function.

Proposition 1 [48]. Let be a pseudological function, and if a unique 1 × 2n-matrix exists that satisfies , then is known as the unique structural matrix of the pseudological function f.

Lemma 1. Let be a pseudological interval function. If a unique interval matrix exists that satisfies , then is known as the unique structural matrix of the pseudological interval function f.

4. Methodology

To address the challenges posed by the differences and uncertainties in the contribution levels of participants from different data sources for mechanism design, we propose the IntFedSV contribution evaluation mechanism for the first time in HFL scenarios. The IntFedSV is a variant of the ISV for FL scenarios. Unlike previous methods [19, 20], the core idea of the IntFedSV is to extend the classical single-point SV to a finite interval using the different utility values of the subfederation in different iteration rounds and introducing the discount factor to adjust the final allocation effect of the IntFedSV. This method is advantageous as it fully utilizes the fault tolerance of the ISV to offset the adverse problems caused by the differences and uncertainties of the participants. Ultimately, the participants’ contribution levels are within a more stable range. Additionally, to improve the computational efficiency, we utilized a matrix semitensor product-based method to compute the IntFedSV.

4.1. System Workflow

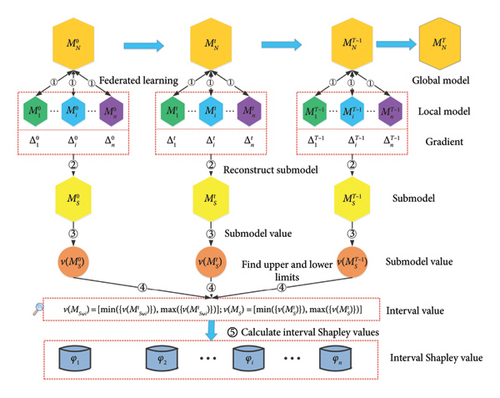

-

Step 1: FL. In each iteration round t ∈ {0, 1, ⋯, T − 1}, all n participants i ∈ N = {1, 2, ⋯, n} perform the FL task based on the steps outlined in Section 3.1 to obtain a local model .

-

Step 2: The subfederation model is approximately reconstructed [19]. The server utilizes the gradient information obtained from the FL task to approximately reconstruct the model for subfederation S. Here, .

-

Step 3: Evaluate the utility of the submodel. The server maps the subfederation model based on a preset utility function to obtain the utility value of the submodels. The utility function maps the data of each participant to the performance metrics (accuracy [20] or loss [49]) of the model trained on the data.

-

Step 4: Determine the upper and lower limits of the utility interval. The server traverses the utility value of all subfederated models in each iteration round t and identifies the utility interval , where S ∈ 2N.

-

Step 5: Calculate the IntFedSV (which will be defined later.). The server uses the matrix semitensor product method to calculate the IntFedSV Φ(v) of the participants.

4.2. Definition of IntFedSV

Without loss of generality, we used a binary structure (N, v) to denote interval federated cooperative games, where the set of finite elements N = {1, 2, ⋯, n} denotes the set of participants for FL, and the feature function satisfies v(∅) = [0, 0], where represents the real number. All subsets of N are denoted as 2N = {S|S⊆N}. For ∀S ∈ 2N, v(MS) is the utility function of the subfederated model MS, and the function value is an interval denoted as , where S ∈ 2N, t ∈ {0, 1, ⋯, T − 1}. When , the interval federated cooperative game degenerates into the classical federated cooperative game.

However, in many FL scenarios, the total utility is typically affected by various uncertain factors beyond the performance of the federated model. To measure the contribution level of the participants more fairly, we formally define the IntFedSV based on the ISV with a discount factor by Fei, Li, and Ye [39].

Definition 3 (IntFedSV). Let (N, v) denote the interval federated cooperative game, N = {1, 2, ⋯, n} denote the set of participants for FL, and denote the interval value of the utility for subfederated model MS. Therefore, the IntFedSV for participant i can be expressed as

4.3. IntFedSV Calculation Method Based on Matrix Semitensor Product

If we calculate the IntFedSV directly using (7) in Definition 3, then the time complexity of the calculation is , where n is the number of participants and T is the maximum iteration round. This method is time-consuming.

To reduce the time required for calculating the IntFedSV, we introduce the matrix semitensor product theory. We first present the calculation method and then provide the relevant proof. Theorem 1 provides a convenient method for calculating the IntFedSV. Please refer to Appendix A for the detailed proof.

Theorem 1. For an interval federated cooperative game (N, v), where N = {1, 2, ⋯, n} is the set of participants and is the structural matrix of the interval utility function v(MS), the SV of the interval federated cooperative games can be calculated as follows:

According to Theorem 1, the time complexity of calculating the IntFedSV based on the matrix semitensor in (8) is reduced to . Additionally, we introduce truncation techniques to accelerate the computational efficiency of the IntFedSV (which will be discussed later).

4.4. IntFedSV Algorithm

Algorithm 1 presents the pseudocode of the proposed method. In general, the algorithm comprises two components: the server and client. In the first line, the server randomly initializes the global model and submodels. For each iteration round t, in Lines 4–9, the server aggregates the global model. In Lines 11–15, the server uses the participant’s gradient values during FL training to reconstruct the subfederation model, which effectively avoids the resource waste caused by retraining the subfederation. Additionally, we set a round-truncated threshold τ > 0 during the training process to improve the speed of evaluating the utility of the submodels. In Lines 18–23, the server first uses the evaluation function to obtain the utility interval of the subfederated model and then uses the IntFedSV formula (Definition 3) to calculate the contribution values of each participant. In Lines 24–30, the client uses the classic minibatch stochastic gradient descent algorithm to train the local model on a local dataset [19].

-

Algorithm 1: IntFedSV.

-

Input: Participants set N, local data Di, training rounds T, local minibatch size B, local epochs E, learning rate η, evaluation function v, round-truncated threshold τ, discount factor λ, and Shapley matrices K and F.

-

Output: IntFedSV Φ(v).

-

Server executes:

-

1. Initialize global model M0 and submodel

-

2. for each round t = 0, 1, …, T − 1do

-

3. # Aggregate global model

-

4. Send global model Mt to all participants

-

5. for each participant i in parallel do

-

6.

-

7.

-

8. end for

-

9.

-

10. # Approximate reconstruction submodel

-

11. if|v(Mt+1) − v(Mt)| > τthen

-

12. for each subset S⊆Ndo

-

13. +

-

14. end for

-

15. end if

-

16. end for

-

17. # Calculate interval federated Shapley Value (IntFedSV)

-

18. Initialize the structural matrix

-

19. for each S⊆Ndo

-

20.

-

21. end for

-

22.

-

23. returnMT and Φ(v)

-

ClientUpdate(i, Mt):

-

24. Initialize local model to global model Mt

-

25. for each local epoch e = 0, 1, …, E − 1do

-

26. for batch B ∈ Dido

-

27.

-

28. end for

-

29. end for

-

30. return

4.5. Time Complexity Analysis

As presented in Section 4.2, the time complexity of calculating the IntFedSV Φ(v) based on the matrix semitensor product is . After introducing the truncated threshold τ (in Section 4.4), the time complexity of calculating the IntFedSV is between and . Specifically, a smaller truncated threshold τ implies fewer truncated rounds and a time complexity closer to . In the opposite case, the time complexity is closer to . However, the actual time complexity is closely related to the distribution and size of the datasets.

5. Experiments

To verify the performance of the proposed IntFedSV contribution evaluation method in practical engineering applications, we conducted FL simulation experiments using an open-source distributed learning simulator [20] on the MNIST [50], CIFAR10 [49], AG_NEWS [51], and IMDB [52] datasets. The MNIST dataset comprises 70,000 black and white images containing 0–9 handwritten digits, each measuring 28 × 28 pixels. We used 60,000 and 10,000 images for training and testing, respectively. The CIFAR10 dataset contains 60,000 color images measuring 32 × 32 pixels from 10 categories, where 50,000 and 10,000 images were specified for training and testing, respectively. The MNIST and CIFAR10 datasets are public datasets commonly used for image classification. The AG-NEWS dataset contains 120,000 training samples and 7600 testing samples from four major categories. Each category features 30,000 and 1900 samples in the training and testing sets, respectively. The IMDB dataset contains 50,000 movie reviews, of which 25,000 were used for training and 25,000 for testing. Both the training and testing sets contained 50% positive and 50% negative comments. AG-NEWS and IMDB are public datasets commonly used for text classification in NLP. The experiment was conducted on a Linux system equipped with 32G main memory and a 12G RTX 4070Ti graphics card. The source code and partial results are available at https://github.com/yunshuichanxin520/distributed_learning_simulator. We assumed that six participants participated in the FL model training.

5.1. Dataset Settings

- 1.

Same distribution and same size (iid): For each dataset, we randomly apportioned the dataset (training and testing sets) into six segments of the same size to ensure that each participant obtained the same data distribution.

- 2.

Same distribution and different sizes (iid): For each dataset, we randomly sampled from the entire training set based on a preset ratio while ensuring that each participant had the same amount of data for each class label. That is, the ratios of Participants 1 and 2 were 10%, those of Participants 3 and 4 were 50%, and those of Participants 5 and 6 were 90%.

- 3.

Different distributions and same size (non-iid): Each dataset was randomly apportioned into six segments of the same size. Subsequently, the labels were “flipped” based on the preset ratio to add noise. That is, the ratios of Participants 1 and 2 were 1%, those of Participants 3 and 4 were 10%, and those of Participants 5 and 6 were 20%. Here, “flip” refers to randomly shuffling the labels of each sample (image/comment) into incorrect values.

- 4.

Different distributions and different sizes (non-iid): We used the common Dirichlet distribution to apportion each dataset. The setting and range of concentration parameter α > 0 are crucial for controlling the data size and distribution. The larger the value of α, the more concentrated the generated distribution is. The smaller the value of α, the more dispersed the generated distribution is [53]. To generate datasets of different sizes and distributions, we set α to 0.5.

5.2. Comparison Algorithms

- 1.

TMR-Shapley [29]. This method is an extension of the MR method presented in [19]. It controls the weight of each participant’s SV by adding a decay factor and introduces a truncated threshold to reduce unnecessary submodel reconstruction.

- 2.

GTG-Shapley [20]. In this method, two truncated techniques guided by Monte Carlo sampling between and within rounds are proposed to further improve the computational efficiency of SV-based contribution indices.

- 3.

ComFedSV [47]. This method is an improvement of the FedSV [14]. To reduce computational costs, a certain number of participants are selected for model training in each round, which results in an incomplete utility matrix. Hence, the low-rank matrix is solved to complete the utility matrix and then the SV is calculated.

5.3. Tasks and Parameter Settings

In our setup, the contribution evaluation mechanism is independent of FL model training. Therefore, to ensure the optimal training effect of the FL model, we referred to the method presented in [20, 54] to set the optimal hyperparameters related to the training of the FL model (as listed in Table 1), during which the client selected the best result for uploading based on the validation set in five epochs. We used the test accuracy of the model as the value of the utility function. For text classification tasks, word embeddings were initialized using glove word embeddings [55]. For the ComFedSV, we set the rate of participants to 0.5, which implies that three clients participate in the FL model training and contribution evaluation in each round. We will conduct ablation experiments on the hyperparameters (round-truncated threshold τ and discount factor λ) related to the performance of the contribution evaluation mechanism in the future. In the comparative experiment section, for each dataset, we set τ to 0.005; additionally, we set λ to 1 (From Definition 3, one can infer that when λ = 1, the discounted SV degenerates into a classical SV. Since other baseline methods used for comparison are classical SVs, we set λ = 1).

| Dataset | Participants | Model | Optimizer | Batch size | Learning rate | Rounds | Epochs | Parameters |

|---|---|---|---|---|---|---|---|---|

| MNIST | 6 | LeNet5 | SGD | 64 | 0.01 | 20 | 5 | 60,000 |

| CIFAR10 | densenet40 | 0.1 | 100 | 0.17 million | ||||

| AG_NEWS | Classification model with two transformer encoder layers | 0.01 | 17 million | |||||

| IMDB |

5.4. Evaluation Metric

5.5. Analysis of Experimental Results

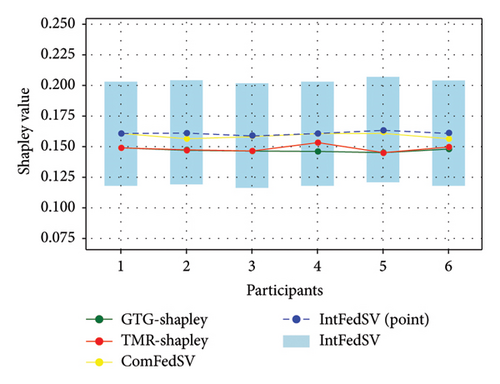

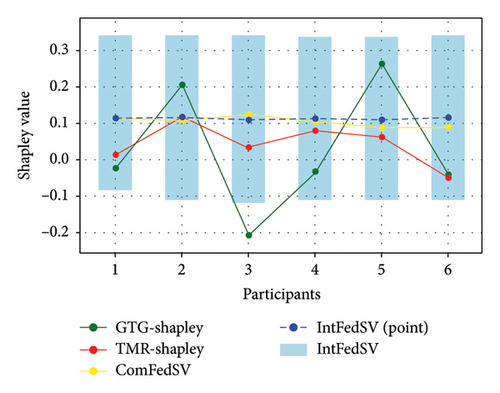

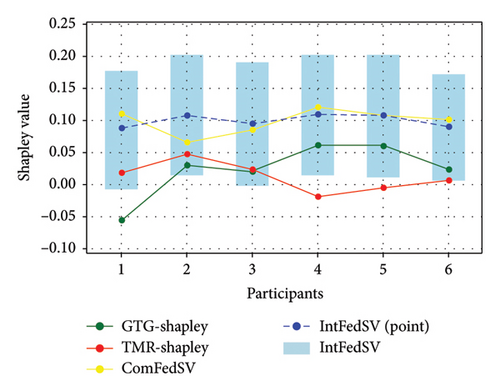

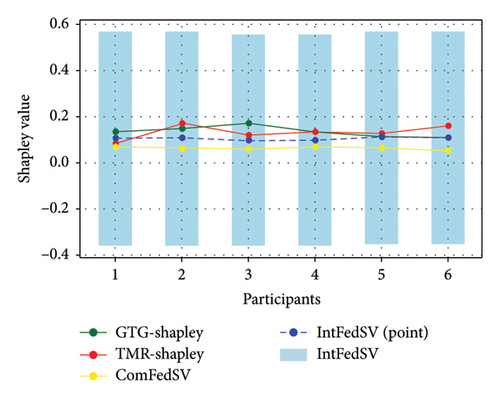

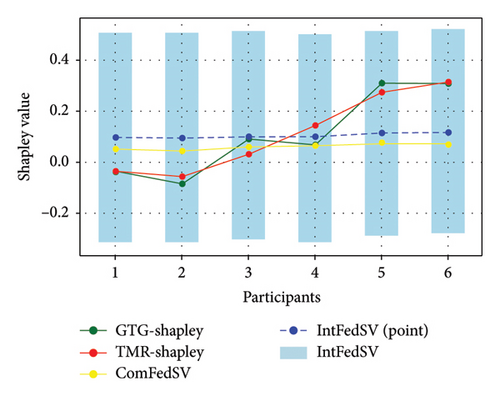

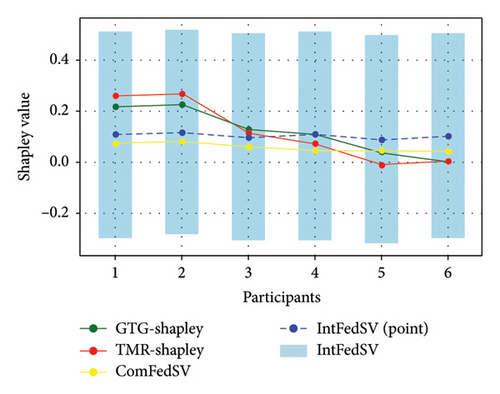

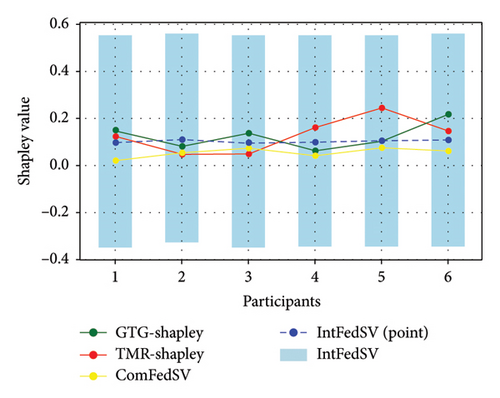

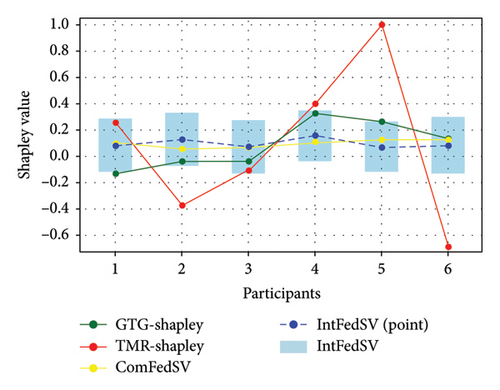

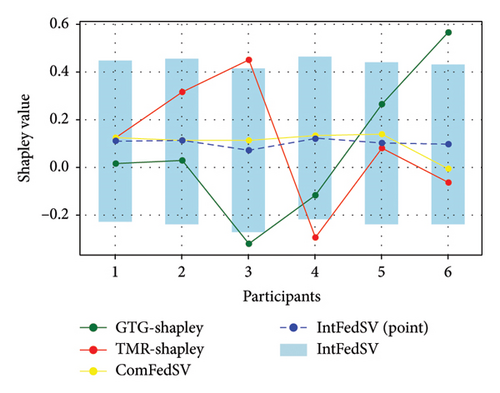

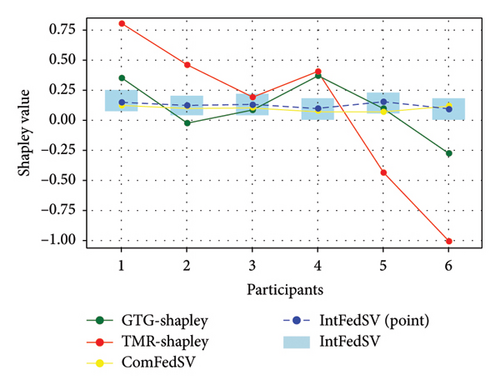

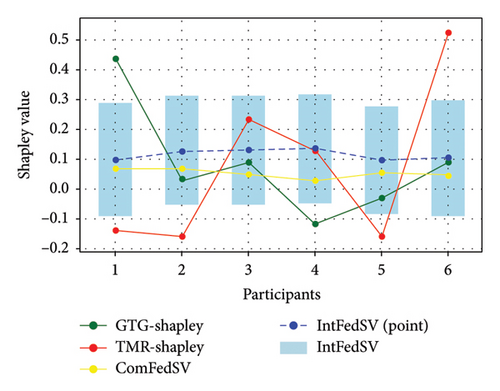

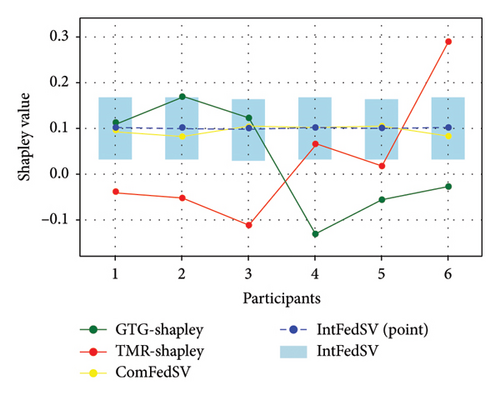

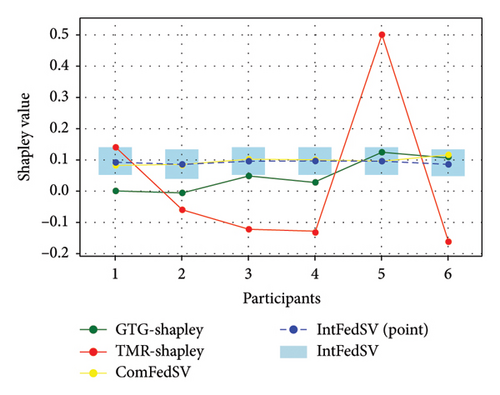

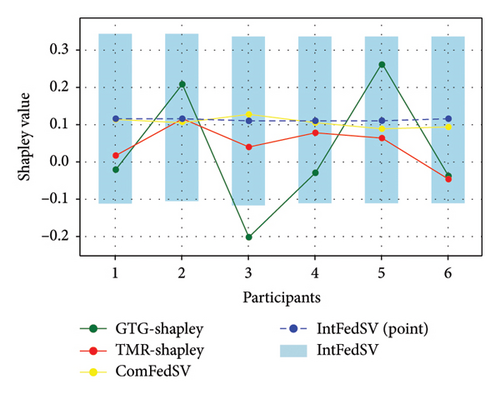

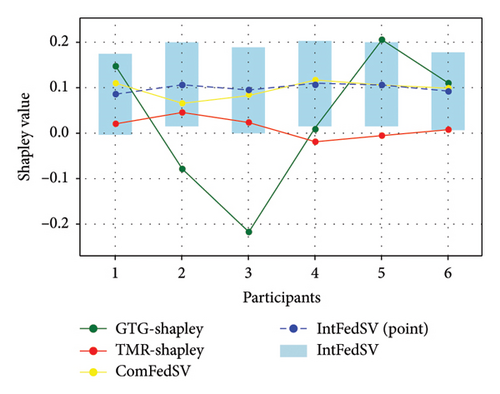

Figures 3, 4, 5, and 6 show the variation in the SV for each participant under different settings for the four datasets. As shown in the figures, the proposed IntFedSV contribution evaluation mechanism extends the classical single-point SV to a finite interval. However, the other baseline methods calculated a single-point SV. To facilitate comparison, we selected the median value of the IntFedSV interval as an example (dashed blue line). Intuitively, the SV calculated by the TMR-Shapley and GTG-Shapley mechanisms fluctuated significantly, whereas the IntFedSV and ComFedSV were relatively stable, particularly on the MNIST, AG-NEWS, and IMDB datasets.

To further quantify the performances of the different evaluation mechanisms, we calculated the standard deviation/improvement rate of the participants’ SVs for each mechanism under different settings in the datasets. As shown in Tables 2, 3, 4, and 5, the standard deviation of the proposed IntFedSV evaluation mechanism was the minimum under the different settings of the four datasets. Additionally, its minimum and maximum improvement rates were 11.83% and 99.00%, respectively. This demonstrates the superiority of the proposed mechanism in terms of stability.

| CIFAR10 settings | IntFedSV | GTG-Shapley | TMR-Shapley | ComFedSV |

|---|---|---|---|---|

| Same distribution and same size | 0.0011 | 0.0014/21.43% | 0.0027/59.26% | 0.0020/45.00% |

| Same distribution and different size | 0.0417 | 0.1147/63.64% | 0.1981/78.95% | 0.1334/68.74% |

| Different distribution and same size | 0.0024 | 0.1593/98.49% | 0.0519/95.38% | 0.0117/79.49% |

| Different distribution and different size | 0.0087 | 0.0393/77.86% | 0.0205/57.56% | 0.0179/51.40% |

| CIFAR10 settings | IntFedSV | GTG-Shapley | TMR-Shapley | ComFedSV |

|---|---|---|---|---|

| Same distribution and same size | 0.0059 | 0.0218/72.94% | 0.0272/78.31% | 0.0069/14.49% |

| Same distribution and different size | 0.0100 | 0.1538/93.50% | 0.1441/93.06% | 0.0150/33.33% |

| Different distribution and same size | 0.0085 | 0.0833/89.80% | 0.1107/92.32% | 0.0160/46.88% |

| Different distribution and different size | 0.0058 | 0.0516/88.76% | 0.0681/91.48% | 0.0169/65.68% |

| AG_NEWS settings | IntFedSV | GTG-Shapley | TMR-Shapley | ComFedSV |

|---|---|---|---|---|

| Same distribution and same size | 0.0221 | 0.1682/86.86% | 0.5445/95.94% | 0.0336/34.23% |

| Same distribution and different size | 0.0168 | 0.2800/94.00% | 0.2414/93.04% | 0.0485/65.36% |

| Different distribution and same size | 0.0231 | 0.2245/89.71% | 0.6141/96.24% | 0.0262/11.83% |

| Different distribution and different size | 0.0153 | 0.1732/91.17% | 0.2536/93.97% | 0.0173/12.27% |

| IMDB settings | IntFedSV | GTG-Shapley | TMR-Shapley | ComFedSV |

|---|---|---|---|---|

| Same distribution and same size | 0.0013 | 0.1090/98.81% | 0.1294/99.00% | 0.0092/85.87% |

| Same distribution and different size | 0.0037 | 0.0485/92.37% | 0.2318/98.40% | 0.0124/70.16% |

| Different distribution and same size | 0.0024 | 0.1593/98.49% | 0.0519/95.38% | 0.0117/79.49% |

| Different distribution and different size | 0.0088 | 0.1462/93.98% | 0.0206/57.28% | 0.0180/51.11% |

In general, the IntFedSV ensured that the contribution levels of the participants remained stable. Compared with the unique allocation of a single-point SV, the utility interval demonstrated greater fault tolerance and incentive, which encouraged participants to join the FL alliance more effectively. In particular, under uncertain environments, the advantage of the IntFedSV was more significant.

5.6. Ablation Experiments

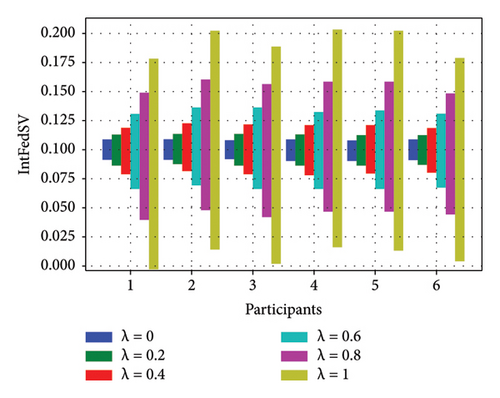

5.6.1. Ablation Experiment of Discount Factor

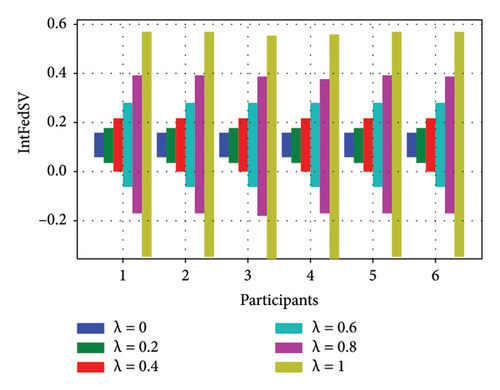

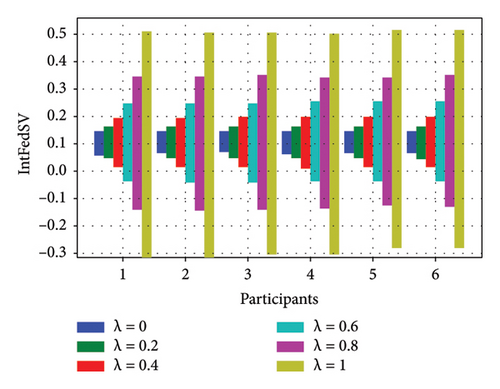

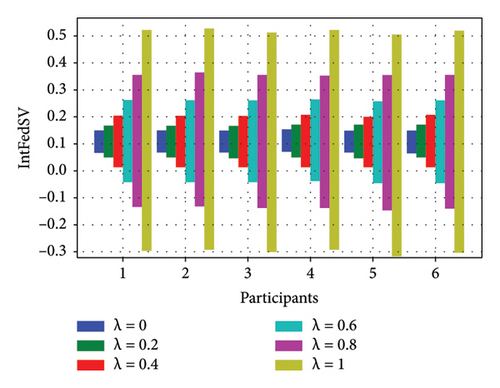

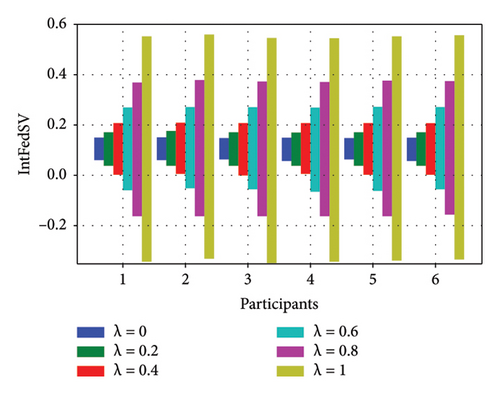

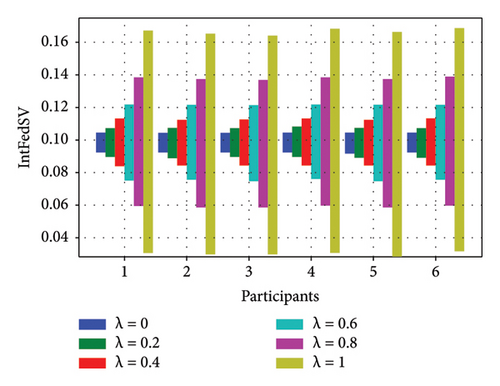

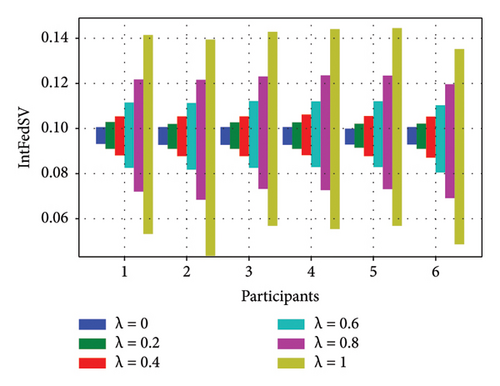

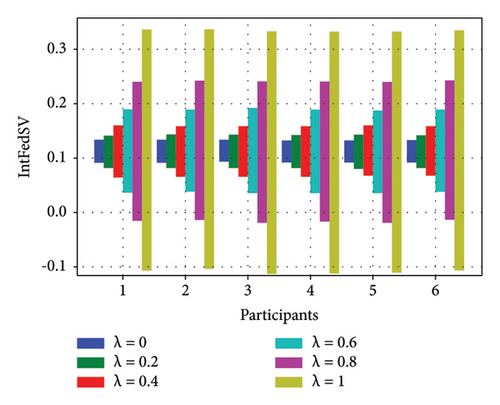

To further investigate the effect of the discount factor on the contribution level of the participants, we used the CIFAR10 and IMDB datasets to examine the IntFedSV corresponding to different discount factors. Specifically, we set the values of λ to 0, 0.2, 0.4, 0.6, 0.8, and 1. Similarly, we considered four scenarios for the datasets. The experimental results are shown in Figures 7 and 8.

Based on Figures 7 and 8, under the four settings of the four datasets, the IntFedSV interval length of the participants increased significantly with the discount factor, although the increase in magnitude varied. When λ = 0, the same IntFedSV results were obtained for each participant, which is consistent with the findings presented in the theoretical section. Therefore, we can control the utility interval and fault tolerance of the participants by setting the appropriate discount factors.

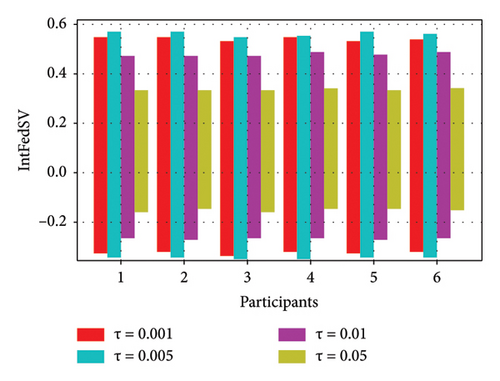

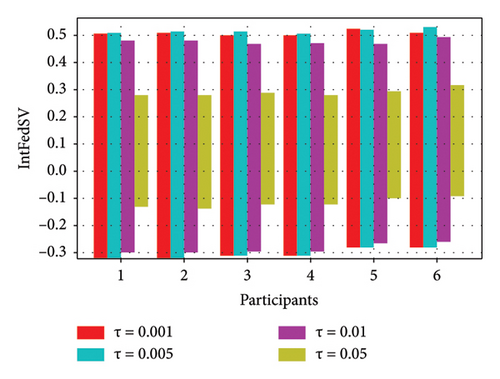

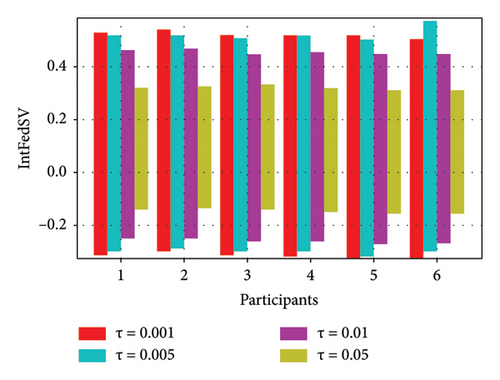

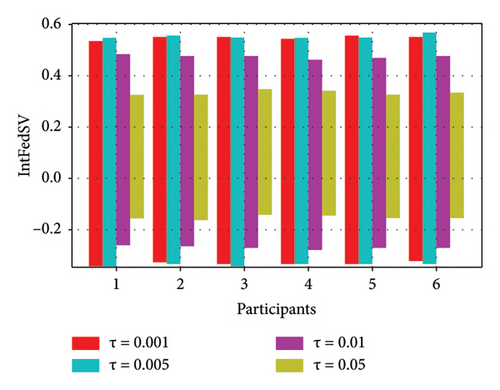

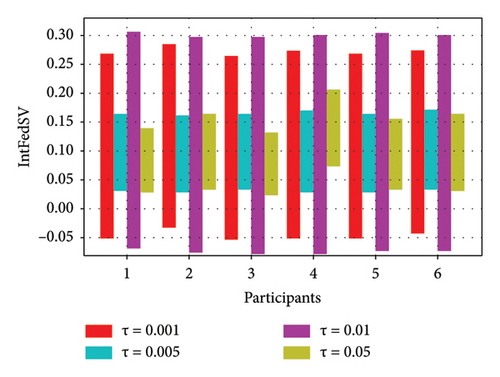

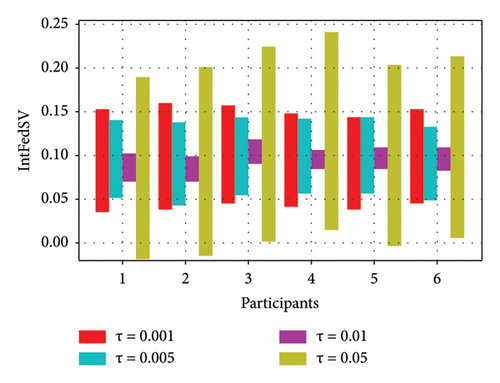

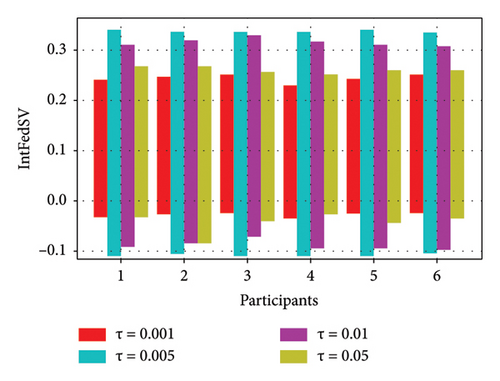

5.6.2. Ablation Experiment of Round-Truncated Threshold

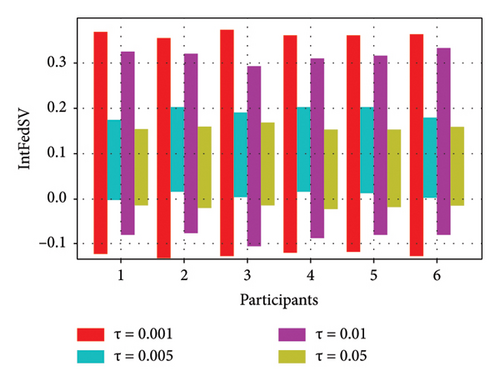

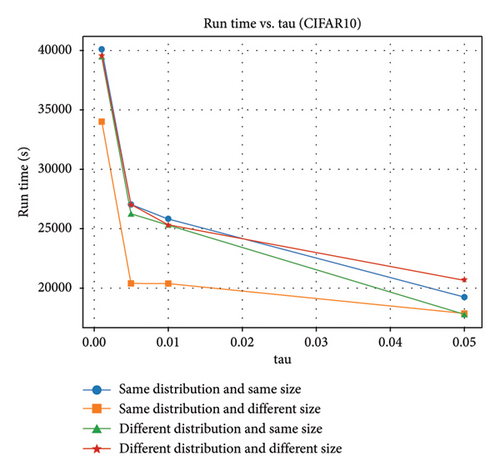

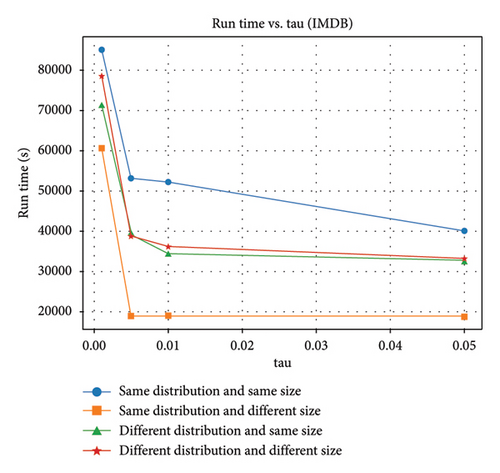

To further investigate the effect of the round-truncated threshold on the contribution evaluation mechanisms, we used the CIFAR10 and IMDB datasets to examine the IntFedSV corresponding to different round-truncated thresholds. Specifically, we set the values of τ to 0.001, 0.005, 0.01, and 0.05. Similarly, we considered four scenarios for the datasets. The experimental results are shown in Figures 9 and 10.

As shown in Figures 9 and 10, the round-truncated threshold significantly affected the IntFedSV of the participants in all four scenarios. However, the effect varied depending on the dataset settings. As presented in Section 4.5, the larger the round-truncated threshold, the shorter the algorithm runtime is. To further investigate the effect of the round-truncated threshold on the algorithm runtime, we analyzed the variation of Algorithm 1’s runtime with a round-truncated threshold under different settings of the dataset. As shown in Figures 11 and 12, the larger the round-truncated threshold, the shorter the algorithm runtime is. Therefore, in actual FL experimental scenarios, we should comprehensively consider the IntFedSV and runtime to select an appropriate round-truncated threshold.

In summary, the superiority of the proposed IntFedSV contribution evaluation mechanism was demonstrated through comparative experimental results with the result of baseline methods on public datasets. In uncertain environments, the advantage of the IntFedSV was more significant. In further ablation experiments, we investigated the effects of the discount factor and round-truncated threshold on the experimental results. The results showed that the IntFedSV interval length of the participants increased significantly with the discount factor, although the increase in magnitude varied. Therefore, we can control the utility interval and fault tolerance of the participants by setting the appropriate discount factors. The larger the round truncation threshold, the shorter the algorithm runtime is. Therefore, in practical FL applications, we should comprehensively consider the IntFedSV and runtime to select the appropriate round truncation threshold. This study fills the research gap pertaining to the application of interval SVs in the design of FL contribution evaluation mechanisms and contributes positively to the promotion of FL engineering applications.

6. Conclusion

To address the challenges posed by the differences and uncertainties in the contribution levels of participants from different data sources, we proposed an IntFedSV mechanism for HFL. This mechanism is advantageous as it fully utilizes the fault tolerance of the ISV to offset the adverse problems caused by the differences and uncertainties of the participants. Ultimately, the participants’ contribution levels were within a more stable range. Second, to further accelerate computational efficiency, we introduced a matrix semitensor product method to calculate the IntFedSV. Finally, extensive experiments on public datasets demonstrated that the proposed mechanism can effectively evaluate the contribution level of the participants. Compared with the classic single-point SV, the IntFedSV offers greater fault tolerance and incentive, which allows it to promote FL engineering applications more effectively. Under uncertain environments, the advantage of the IntFedSV is more significant.

However, our study presents some limitations. First, the IntFedSV must traverse all 2n subsets in each round of utility evaluation, and the computational complexity of the algorithm is relatively high. Although we adopted the round-truncated technique and the matrix semitensor method to improve the computational efficiency of the IntFedSV, the risk of an exponential explosion remains. Second, the real-time requirements of FL systems are gradually increasing. However, we did not combine the IntFedSV allocation results with a new round of resource allocation to form a dynamic feedback mechanism. In the future, we will address the issues of utilizing IntFedSV to allocate a new round of data, computing power, and communication resources, as well as develop a real-time online IntFedSV contribution evaluation and resource allocation system.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Tianxu Cui: investigation, conceptualization, methodology, data curation, formal analysis, writing – original draft, and writing – review and editing. Ying Shi: conceptualization, methodology, supervision, and writing – review and editing. Wenge Li: methodology, formal analysis, writing – original draft, and visualization. Rijia Ding: methodology, writing – review and editing, funding acquisition, and supervision. Qing Wang: data curation, formal analysis, writing – original draft, and visualization.

Funding

This work was supported by the National Key R&D Program (No. 2022YFF0607404) and the Fundamental Research Funds for the Central Universities (Ph.D. Top Innovative Talents Fund of CUMTB) (No. BBJ2024077).

Acknowledgments

This work was supported by the National Key R&D Program (No. 2022YFF0607404) and the Fundamental Research Funds for the Central Universities (Ph.D. Top Innovative Talents Fund of CUMTB) (No. BBJ2024077). We used Wiley Editing Services to revise the English language issues in this paper.

Appendix A: Proof of Theorem 1

Proof 1. Considering interval federated cooperative games (N, v), for ∀ S ∈ 2N, v(MS) is the utility function of the subfederated model MS. Therefore, we construct , where

According to Lemma 1, the utility function can be expressed as

First, we consider the marginal contribution v(MS∪i) − λv(MS) in Equation (7). If , then from the permutation matrix (Definition 2), we can obtain

Second, using the method of Cheng et al. [46], we construct a vector sequence , i.e.,

Lemma 2 [46]. Let S ⊂ N = {x1, x2, ⋯, xn}, if , then (A.7)

Where denotes the jth component of an.

According to Lemma 2, let ; subsequently, we construct a vector sequence , where

For convenience, we segment vector γ into k-block vectors sequentially, with j = 1, 2, 22, ⋯, 2n−1, as follows:

Therefore, based on Equations (A.5) and (A.7), one can infer from Equation (7) that

Notably,

Therefore, for ∀i = 1, 2, ⋯, n, we construct matrix Λi as follows:

Based on the pseudocommutative property of the matrix semitensor product, the following can be obtained:

Then,

Similarly,

Then, for ∀i = 1, 2, ⋯, n, we construct matrix Γi as follows:

Thus, we can obtain

Thus, Theorem 1 is proven.

Nomenclature.

To facilitate understanding, we have summarized the main abbreviations and mathematical symbols used in this section in Table A1.

| Abbreviations/symbols | Meaning |

|---|---|

| FL | Federated learning |

| HFL | Horizontal federated learning |

| SV | Shapley value |

| ISV | Interval Shapley value |

| IntFedSV | Interval federated Shapley value |

| i ∈ N = {1, 2, ⋯, n} | Participants (clients) |

| t ∈ {0, 1, ⋯, T − 1} | Global iteration rounds |

| Di | Dataset owned by each participant |

| Local model | |

| Mt | Global model of all participant set N in the tth round |

| Gradient of local model | |

| ∆t | Gradient of global model |

| Global model of subfederation S in the tth round | |

| Utility value of subfederation S in the tth round | |

| MS | Subfederated model |

| Interval utility function of subfederated model MS | |

| (N, v) | Interval federated cooperative games |

| N = {1, 2, ⋯, n} | Set of participants for FL |

| Feature function | |

| Φi(v) | IntFedSV for participant i |

| λ ∈ [0, 1] | Discount factor |

| τ > 0 | Round-truncated threshold |

| Structural matrix of interval utility function v(MS) | |

| K and F | Shapley matrix |

Open Research

Data Availability Statement

The four datasets used in this paper are public datasets and are only used for scientific research.