BeGuard: An LSTM–Fused Defense Model Against Deepfakes in Competitive Activities–Related Social Networks

Abstract

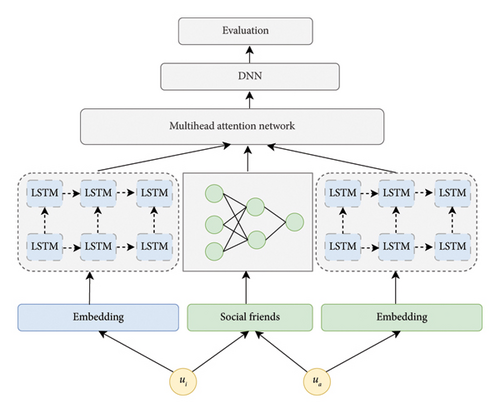

We propose BeGuard, a defense model that protects users from deepfakes by analyzing their behaviors in competitive activities and their social interactions. BeGuard dynamically embeds user behaviors based on participation in competitive activities and captures the temporal dynamics of these activities with long short–term memory (LSTM) networks, which allows it to identify patterns and changes in user behavior. BeGuard also considers users’ social relationships, embedding the behaviors of their friends to account for the influence of these connections on users’ actions; this yields a richer, more context-aware behavioral representation. To improve detection accuracy, the model applies an attention mechanism to evaluate abnormal values in user behaviors, particularly those indicating potential deepfake content. This attention-based evaluation strengthens the model’s ability to detect subtle anomalies, providing a more effective defense against deepfakes in competitive activities–related social networks.

1. Introduction

In the digital age, competitive activities are not only a source of entertainment but also a significant part of global culture and the economy. High-profile games and athlete interviews are broadcast worldwide, attracting massive audiences [1, 2]. However, the rise of deepfake technology poses a serious threat to the integrity of sports media. Malicious actors can manipulate videos to alter game outcomes, fabricate athlete statements, or create scandalous content, leading to misinformation, damaged reputations, and financial losses. The fast pace of competitive activities broadcasting demands robust mechanisms to detect and prevent the spread of such manipulated content [3].

Social networks have become the primary platforms for communication, content sharing, and information dissemination, enabling users to share multimedia content instantly across the globe [4]. Unfortunately, this rapid sharing also facilitates the spread of deepfakes: on social media, deepfake videos can go viral within hours, spreading misinformation, influencing public opinion, and potentially inciting social unrest. Because content on social networks is user generated, monitoring and controlling the dissemination of deepfakes is difficult, which highlights the need for detection and defense strategies tailored to these platforms [5, 6].

Defense mechanisms against deepfakes are crucial to maintain information integrity and protect individuals and organizations from harm [7, 8]. Advanced defense technologies often leverage adversarial learning techniques, such as generative adversarial networks (GANs) [9]. While GANs are commonly used to create deepfakes, they can also be employed to detect them by identifying artifacts and inconsistencies introduced during the generation process [1]. Adversarial training methods enhance the robustness of detection models by simulating attacks and improving their ability to recognize manipulated content [2]. In addition, long short–term memory (LSTM) networks are effective in handling sequential and temporal data, making them suitable for analyzing video content where temporal inconsistencies may indicate manipulation [10]. By integrating LSTM networks with GAN–based detection methods, defense models can more accurately identify deepfakes by examining both spatial and temporal features. This fusion of adversarial learning and sequential modeling allows for proactive defense strategies adaptable to the unique challenges presented by both sports media and social networks [11, 12].

While both competitive activities and social networks are vulnerable to deepfakes, the context and impact differ. In competitive activities, deepfakes can directly affect the integrity of events, athletes’ reputations, and associated commercial interests. The content is often high quality and professionally produced, requiring detection methods that can analyze subtle manipulations (as shown in Figure 1). In contrast, social networks handle a vast amount of user-generated content of varying quality, where deepfakes can rapidly influence public perception and spread misinformation. Defense technologies must therefore adapt to different data types and dissemination patterns [13, 14]. The proposed BeGuard model addresses these challenges by fusing LSTM networks with behavioral analysis to create a robust defense mechanism applicable to both domains, accounting for their specific characteristics and threats. The main threats that deepfakes pose to competitive activities are as follows.

- Misinformation and manipulation of competitive activities content: Deepfakes enable the alteration of game footage and the creation of fabricated videos involving athletes and events. This manipulation can lead to the spread of false information, such as falsified game highlights or fabricated interviews, which mislead fans, undermine the credibility of sports media, and can influence public perception and sponsorship decisions.

- Damage to athletes’ reputation and personal privacy: Athletes are public figures whose reputations are critical to their careers. Deepfakes can be used to produce malicious content that depicts athletes in compromising or unethical situations that never occurred. The rapid dissemination of such content on social media can cause irreparable harm to an athlete’s image, leading to personal distress and professional consequences.

- Impact on competitive activities betting and financial integrity: The sports betting industry relies on accurate and timely information. Deepfakes can distort this information ecosystem by presenting false data or events, leading to unfair betting practices and significant financial losses for stakeholders. This undermines trust in the betting system and can have legal and economic repercussions.

Detecting deepfakes in competitive activities is particularly challenging due to the high production quality of legitimate competitive content, which makes subtle manipulations harder to spot. Unlike general social network activities, where user interactions such as sharing, commenting, or liking are driven by organic social behaviors, deepfake behavior introduces maliciously crafted content intended to manipulate or mislead users. These behaviors often exhibit unique propagation patterns, such as rapid dissemination driven by sensationalism, and can involve coordinated sharing to amplify influence. In addition, the real-time nature of competitive activities broadcasting requires defense mechanisms that can operate swiftly to prevent the spread of deepfakes before they cause harm. The global reach of competitive activities content further complicates detection and mitigation efforts, as deepfakes can quickly cross international boundaries through social media platforms.

In light of the need for robust and adaptive defense mechanisms, we propose BeGuard, an LSTM–fused defense model designed specifically to address deepfake threats in competitive activities and social networks. BeGuard integrates multiple data sources, including item ratings, category information, and dynamic behavior records, to create a comprehensive profile of both content and user interaction patterns.

The core innovation of BeGuard lies in its fusion of adversarial learning techniques with LSTM networks. Within this fusion, the LSTM component analyzes temporal sequences to identify inconsistencies over time, such as unnatural movements or audio-visual desynchronization. This dual approach enhances detection accuracy by capturing both spatial and temporal anomalies. Moreover, BeGuard incorporates user behavior analysis from social networks. By monitoring how content is shared, liked, or commented on, the model can identify unusual activity patterns that often accompany the spread of deepfakes, such as sudden spikes in sharing from newly created or bot-controlled accounts. This behavioral analysis adds a further layer of defense that addresses the social propagation of deepfakes.

By combining these methodologies, BeGuard offers a robust solution capable of real-time detection and mitigation of deepfakes in both sports media and social networking environments. It addresses the specific challenges of high-quality content manipulation in competitive activities and the rapid dissemination mechanisms inherent in social networks.

2. Related Work

2.1. Generalized Behavior Recognition Approaches

Traditional skeleton-based behavior recognition methods typically rely on handcrafted features to model human actions, such as joint angles, distances between joints, and motion trajectories [15–17]. These handcrafted features require expert knowledge to extract and are often tailored to specific types of actions, so they generalize poorly to unknown actions or complex scenarios [18]. In the deepfake domain, PhaseForensics, a typical video detection method, uses a phase-based motion representation of facial temporal dynamics to address the limitations of existing methods [19]. In addition, handcrafted-feature methods usually overlook the spatial relationships between different parts of the human body, failing to fully utilize the topological structure of skeleton data. This limitation constrains the model’s ability to understand and represent actions effectively [20, 21].

With the development of deep learning, data-driven methods have become mainstream in action recognition. The most common approaches in deep learning are based on recurrent neural networks (RNNs) [22] and convolutional neural networks (CNNs) [23]. RNN–based methods concatenate the 3D coordinates of all joints in each skeleton frame into a vector sequence and input it into the RNN to learn temporal dependencies between joints. RNNs excel at processing sequential data and can capture dynamic changes in actions. However, this approach simplifies skeleton data into one-dimensional vector sequences, failing to fully exploit the spatial relationships between joints [24, 25].

CNN–based methods attempt to convert skeleton data into a two-dimensional pseudoimage format, mapping the 3D coordinates of joints onto pixel values or using them as channels of input features [26]. They then leverage the strong feature extraction capabilities of CNNs to model the spatiotemporal dependencies between joints [27, 28]. However, forcing irregular skeleton data into a two-dimensional grid may lead to the loss of spatial structural information, making it difficult to accurately reflect the topological structure of the skeleton.

In reality, skeleton data are naturally suitable to be represented in the form of graphs, where joints are the nodes and bones are the edges. Therefore, transforming skeleton data into vector sequences or two-dimensional grids (as in RNNs and CNNs) does not fully utilize its unique graph structural features, leading to limitations in the model’s expressive power and poor generalization ability [29, 30].

Compared to previous methods, current graph convolutional network (GCN)–based methods have substantially improved action recognition accuracy by directly modeling the irregular graph structure of skeletons [22]. GCNs can perform convolution operations on graph structures, fully leveraging the topological relationships and spatial structural information between joints. In addition, GCNs can be combined with temporal convolutions or recurrent networks to capture dynamic changes in actions over time [31]. This approach allows the model to understand and represent human actions more comprehensively, enhancing its ability to recognize complex actions and maintain good generalization performance when facing unseen action types.

In summary, GCN–based methods overcome the limitations of traditional RNN and CNN approaches by fully utilizing the graph structural features of skeleton data, achieving more accurate and robust recognition of human actions. This advancement brings new research directions and development opportunities to the field of action recognition.

2.2. GCN–Based Action Recognition Approaches

In recent years, owing to their powerful modeling capabilities for irregular data, GCNs have been successfully applied to skeleton-based action recognition tasks [32, 33]. Yan et al. [34] were the first to introduce GCNs into skeleton-based action recognition by proposing the spatial–temporal GCN (ST–GCN). This model uses a general graph-based formulation to model dynamic skeletons and constructs a skeleton graph, which is a predefined graph with physical connections between joints. However, for different actions, such a static skeleton graph may not be the optimal choice. The limitations of this fixed topology mean that dependencies between nonphysically connected joints are not fully exploited [35, 36].

To address this issue, numerous works have emerged focusing on learning dynamic skeleton topologies. Shi et al. [37] designed the two-stream adaptive GCNs (2s–AGCNs), which introduce learnable and flexible skeleton topologies that can adapt to different GCN layers and skeleton samples, capturing dependencies between arbitrary joints. Moreover, the 2s–AGCN framework integrates joint streams and bone streams, further improving the accuracy of action recognition.

Subsequently, based on ST–GCN or 2s–AGCN, various skeleton-based action recognition methods have been proposed [38]. Ye et al. [39] proposed dynamic GCNs (Dynamic GCN), which consider the contextual information of each joint from a global perspective and automatically learn the skeleton topology. The authors in references [33, 40, 41] further explored spatial–temporal attention mechanisms to capture dynamic spatial–temporal dependencies.

Despite the significant progress made by GCN–based methods in skeleton-based action recognition, several challenges remain to be addressed.

First, the separate handling of spatial and temporal dimensions limits the model’s ability to capture complex spatiotemporal patterns inherent in human actions. Actions are dynamic processes where spatial configurations evolve over time, and modeling them independently may result in the loss of critical interaction information [42, 43]. To overcome this limitation, researchers have begun to develop models that jointly consider spatial and temporal dependencies. For instance, Li et al. [28] introduced the actional-structural GCN (AS–GCN), which simultaneously models the actional dynamics and structural relationships in a unified framework, enhancing the representation of spatiotemporal features.

Second, the reliance on predefined or static graph topologies may not adequately capture the variability and complexity of different actions [44]. Human body joints can exhibit varying degrees of correlation depending on the action being performed. To address this, more adaptive and flexible graph learning methods have been proposed. For example, Zhang et al. [22] presented the semantics-guided neural networks (SGNs), which learn both the node features and the graph structure adaptively based on the input data, allowing the model to adjust the joint connections dynamically according to the action context.

Third, many existing methods do not fully exploit higher-order relationships and global context within skeleton data: considering only immediate joint connections may overlook important patterns that involve distant joints or the global body posture. To tackle this issue, some approaches incorporate attention mechanisms and global context modeling. For example, Chen et al. [40] proposed the multiscale ST–GCNs (MS-G3D), which capture multiscale dependencies by modeling joint-, part-, and body-level features, providing a more comprehensive understanding of action dynamics.

Moreover, the challenge of real-time processing and computational efficiency is crucial for practical applications such as surveillance and human–computer interaction [45, 46]. Efficient model architectures and optimization techniques are being explored to reduce computational complexity without sacrificing accuracy.

In addition, robustness to noise, occlusions, and variations in viewpoint remains an open problem. Data augmentation strategies, robust feature extraction, and normalization techniques are employed to enhance model generalization and performance in real-world scenarios [47, 48].

While GCN–based methods have significantly advanced skeleton-based action recognition by effectively modeling the spatial configurations of human joints, ongoing research continues to focus on fully integrating spatial and temporal dimensions, learning adaptive and dynamic graph structures, capturing higher-order dependencies, improving computational efficiency, and enhancing robustness. These efforts aim to develop more accurate and generalizable models that can handle the complexities of human actions in diverse and unconstrained environments.

Compared to traditional methods that rely on handcrafted features such as joint distances or motion trajectories, BeGuard introduces a data-driven approach by employing embedding techniques to represent dynamic temporal behaviors. This allows it to capture temporal nuances and sequential patterns, overcoming the rigidity and limited generalization of handcrafted features. Unlike RNN–based methods, which simplify data into sequential vectors and lose spatial relationships, BeGuard combines LSTM networks with multihead attention mechanisms to simultaneously model temporal dependencies and distinguish between behavioral and social relationship features. This dual-focus approach offers deeper insights into user actions compared to CNN–based methods, which often distort the structural integrity of data by forcing it into 2D grids. Furthermore, BeGuard goes beyond existing GCN–based methods by integrating an outlier detection mechanism [39], enabling it to identify anomalies in user behavior, a critical but often overlooked aspect in behavior analysis. Its use of temporal embeddings across multiple periods dynamically adapts to evolving behaviors, ensuring robustness and generalization in complex, real-world scenarios.

To tackle these challenges, BeGuard consists of four components. First, initial feature extraction gathers and prepares the raw data, identifying the most relevant and informative features in the complex datasets associated with deepfake detection in competitive activities. Second, the dynamic behavior feature representation captures the temporal, changing aspects of user behavior, modeling how behavior evolves over time and identifying patterns or trends. Third, attention feature extraction lets the model focus selectively on the most important aspects of the data: the attention mechanism assigns different weights to different features, emphasizing those most relevant to the task at hand, such as anomaly detection. Finally, outlier evaluation identifies data points that deviate significantly from normal patterns, flagging unusual or abnormal behaviors or events. These four components work together to address the specific challenges BeGuard is designed for.
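The dynamic behavior component relies on LSTM networks. The Python sketch below shows, under stated assumptions, a single-layer LSTM forward pass over a time-ordered sequence of behavior vectors using the standard input, forget, and output gates and a candidate cell state. The class name `TinyLSTM`, the random initialization, and the input encoding in the usage lines are hypothetical illustrations rather than BeGuard’s actual implementation, and training is omitted.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTM:
    """Single-layer LSTM forward pass (untrained, randomly initialized; hypothetical)."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        # Each gate has a weight matrix over the concatenation [x_t, h_{t-1}] and a bias.
        self.W = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(input_dim + hidden_dim)]
                   for _ in range(hidden_dim)]
            for gate in self.GATES
        }
        self.b = {gate: [0.0] * hidden_dim for gate in self.GATES}

    def _affine(self, gate, z):
        """Compute W_gate z + b_gate."""
        return [sum(w * v for w, v in zip(row, z)) + bias
                for row, bias in zip(self.W[gate], self.b[gate])]

    def forward(self, sequence):
        """Process time-ordered input vectors and return the final hidden state."""
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        for x in sequence:
            z = list(x) + h  # concatenate current input and previous hidden state
            i = [sigmoid(v) for v in self._affine("input", z)]
            f = [sigmoid(v) for v in self._affine("forget", z)]
            o = [sigmoid(v) for v in self._affine("output", z)]
            g = [math.tanh(v) for v in self._affine("candidate", z)]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]  # cell update
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]  # new hidden state
        return h


# Usage: each behavior encoded as [normalized rating, one-hot category] (assumed encoding).
lstm = TinyLSTM(input_dim=3, hidden_dim=4)
state = lstm.forward([[1.0, 1.0, 0.0], [0.8, 0.0, 1.0]])
```

Because the hidden state is an output gate value times a tanh, every component of `state` lies strictly between -1 and 1; in a trained model this final state would serve as the summary of a user’s behavior sequence.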

3. BeGuard Model

3.1. Embedding Features

- $U = \{u_1, u_2, \dots, u_m\}$: a set of users, such as sports enthusiasts.

- $S = \{S_1, S_2, \dots, S_n\}$: a set of competitive activities, such as football matches and basketball games.

- $\{s_{i,1,t}, s_{i,2,t}, \dots, s_{i,k,t}\}$: the set of competitive activities in which user $u_i$ participated. Here, $s_{i,j,t}$ is the $j$-th competitive activity that user $u_i$ participated in or watched at time $t$.

- $\{r_{i,1,t}, r_{i,2,t}, \dots, r_{i,k,t}\}$: the set of ratings given by user $u_i$. Here, $r_{i,j,t}$ is the rating that user $u_i$ gave to the $j$-th competitive activity at time $t$.

- $\{c_{i,1,t}, c_{i,2,t}, \dots, c_{i,k,t}\}$: the set of categories of the competitive activities in which user $u_i$ participated. Here, $c_{i,j,t}$ denotes the category or type of competitive activity $s_{i,j,t}$.
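To make this notation concrete, the following Python sketch groups raw interaction records by user and orders them by time, producing for each user $u_i$ the aligned activity, rating, and category sequences defined above. The record schema `BehaviorRecord` and the helper `build_sequences` are hypothetical and assume each record carries a user, an activity, a rating, a category, and an integer timestamp.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class BehaviorRecord:
    """One interaction: a user engaged with an activity at a time (hypothetical schema)."""
    user: str
    activity: str
    rating: float
    category: str
    timestamp: int


def build_sequences(records):
    """Group records per user and order them chronologically.

    Returns {user: (activities, ratings, categories)}; the j-th entry of each list
    plays the role of s_{i,j,t}, r_{i,j,t}, and c_{i,j,t} respectively.
    """
    by_user = defaultdict(list)
    for rec in records:
        by_user[rec.user].append(rec)
    sequences = {}
    for user, recs in by_user.items():
        recs.sort(key=lambda r: r.timestamp)  # chronological order
        sequences[user] = (
            [r.activity for r in recs],
            [r.rating for r in recs],
            [r.category for r in recs],
        )
    return sequences


records = [
    BehaviorRecord("u1", "match_b", 4.0, "basketball", 20),
    BehaviorRecord("u1", "match_a", 5.0, "football", 10),
    BehaviorRecord("u2", "match_a", 3.0, "football", 15),
]
seqs = build_sequences(records)
```

Keeping the three sequences index-aligned means that position $j$ always refers to the same interaction, which is what a sequence model such as an LSTM expects as input.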

3.2. Dynamic Behavior Features

3.3. Attention Features Extraction

By inputting the four features into a multihead attention network, we can effectively model the intricate relationships and dependencies between different users’ features over time. This approach leverages the strengths of both DNN and LSTM representations and utilizes the powerful attention mechanism to enhance the model’s capability in tasks such as anomaly detection or deepfake identification.
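The Python sketch below illustrates multihead scaled dot-product self-attention over a small set of feature vectors. It assumes the four features are equal-length vectors stacked as a sequence of tokens and, for brevity, uses identity query, key, and value projections (each head attends over its own slice of the feature dimension) instead of the learned projection matrices of a full multihead attention layer. The function names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V, computed row by row."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


def multihead_self_attention(tokens, num_heads):
    """Self-attention with num_heads heads; identity projections (simplification)."""
    dim = len(tokens[0])
    if dim % num_heads != 0:
        raise ValueError("feature dimension must be divisible by num_heads")
    head_dim = dim // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Each head sees only its own slice of every token's features.
        part = [t[h * head_dim:(h + 1) * head_dim] for t in tokens]
        head_outputs.append(scaled_dot_product_attention(part, part, part))
    # Concatenate the per-head outputs for each token.
    return [[x for h in range(num_heads) for x in head_outputs[h][i]]
            for i in range(len(tokens))]


# Usage: four 8-dimensional feature vectors (values are illustrative), 4 heads.
features = [
    [0.1, 0.3, 0.5, 0.7, 0.2, 0.4, 0.6, 0.8],
    [0.9, 0.1, 0.2, 0.3, 0.5, 0.5, 0.1, 0.0],
    [0.4, 0.4, 0.4, 0.4, 0.3, 0.2, 0.1, 0.0],
    [0.0, 0.2, 0.9, 0.1, 0.8, 0.6, 0.3, 0.2],
]
attended = multihead_self_attention(features, num_heads=4)
```

Each output entry is a softmax-weighted average of the corresponding input column, so different heads can emphasize different features when weighting the four inputs against each other.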

3.4. Evaluation

The DNN consists of multiple layers, each applying a linear transformation followed by a nonlinear activation function. It captures complex patterns and relationships in the data while reducing the dimensionality from $d$ to $d'$: each layer $l$ reduces the dimensionality from $d_{l-1}$ to $d_l$.
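A minimal Python sketch of this layer-wise reduction applies $\mathbf{h}^{(l)} = \mathrm{ReLU}(\mathbf{W}^{(l)}\mathbf{h}^{(l-1)} + \mathbf{b}^{(l)})$ with $\mathbf{W}^{(l)} \in \mathbb{R}^{d_l \times d_{l-1}}$, so an input of dimension $d = d_0$ becomes an output of dimension $d' = d_L$. ReLU activations, randomly initialized (untrained) weights, the layer sizes, and the function names `init_layers` and `dnn_forward` are all assumptions for illustration.

```python
import random


def relu(x):
    return max(0.0, x)


def init_layers(dims, seed=0):
    """Create (W, b) for each layer l mapping d_{l-1} -> d_l; W has shape d_l x d_{l-1}."""
    rng = random.Random(seed)
    layers = []
    for d_in, d_out in zip(dims[:-1], dims[1:]):
        W = [[rng.uniform(-1.0, 1.0) for _ in range(d_in)] for _ in range(d_out)]
        b = [0.0] * d_out
        layers.append((W, b))
    return layers


def dnn_forward(x, layers):
    """Apply h_l = ReLU(W_l h_{l-1} + b_l) layer by layer."""
    h = x
    for W, b in layers:
        h = [relu(sum(w * hi for w, hi in zip(row, h)) + bj) for row, bj in zip(W, b)]
    return h


dims = [8, 6, 4]  # d = 8 reduced to d' = 4 through one hidden layer (illustrative sizes)
layers = init_layers(dims)
out = dnn_forward([0.5] * 8, layers)
```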

To optimize the model during training, we adopt the cross-entropy loss function, which is widely used in machine learning for classification tasks. Cross-entropy measures the discrepancy between the predicted and actual results, and minimizing it guides the model parameters toward better values. Over successive training iterations, the model learns the underlying regularities and patterns in the data, its predictive ability improves, and training converges to the best result attainable under the current conditions.
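Assuming a binary setting in which label 1 marks deepfake-related (abnormal) behavior and the model outputs a probability $p_n$, the cross-entropy loss reduces to binary cross-entropy, $\mathcal{L} = -\frac{1}{N}\sum_{n=1}^{N}\left[y_n \log p_n + (1 - y_n)\log(1 - p_n)\right]$. The Python sketch below computes it; the clipping constant `eps` is an implementation choice that avoids $\log 0$, not a value from the paper.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean of -[y log p + (1 - y) log(1 - p)] over all samples."""
    if len(y_true) != len(p_pred):
        raise ValueError("y_true and p_pred must have the same length")
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Three samples: two positives (deepfake-related) and one negative.
loss = binary_cross_entropy([1, 0, 1], [0.9, 0.2, 0.6])
```

Confident correct predictions contribute little loss, while the less confident positive (0.6) dominates, which is the signal that drives the parameter updates described above.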

BeGuard is also designed to handle real-world complexities such as noisy data and previously unseen deepfakes. By using embedding techniques and LSTM networks, it captures intricate temporal patterns while limiting the influence of irrelevant or inconsistent information. The multihead attention mechanism focuses on critical behavioral and social relationship features, supporting reliable performance even with imperfect data. In addition, its outlier evaluation mechanism detects anomalies in user behavior, enabling the identification of deepfake-like patterns. With dynamic temporal embeddings that adapt to changing user behaviors, BeGuard demonstrates robust generalization and effectiveness, as validated through extensive experiments on diverse and noisy datasets.

Moreover, the behavioral features in BeGuard, such as temporal sequences, user ratings, and social relationships, differ fundamentally from skeleton-based behavior features, which rely on the spatial and temporal dynamics of human skeletal joints. BeGuard focuses on capturing dynamic patterns in user behavior data, rather than the physical movement or spatial configurations of a human skeleton. However, both approaches share a similar goal of identifying and modeling dynamic patterns over time.

While skeleton-based methods are typically applied in areas such as action recognition or video analysis, BeGuard is designed for user behavior analysis in domains such as recommendation systems or social trust prediction. This distinction highlights the complementary nature of these methods, with BeGuard offering robust capabilities for analyzing abstract user interactions and skeleton-based methods excelling in understanding physical movement dynamics.

3.5. Discussion

1. BeGuard prioritizes real-time detection of potential deepfake content by leveraging its embedding techniques and LSTM networks to capture temporal patterns in user behavior as early as possible. By focusing on rapid analysis of initial interactions, such as uploads or immediate user engagement, the system can flag suspicious content before it gains significant traction.

2. While real-time detection is critical, monitoring how content spreads (e.g., through shares, likes, and comments) provides valuable insights into its propagation dynamics and the influence of social relationships. BeGuard achieves this by asynchronously analyzing interaction patterns using its multihead attention mechanism, which can focus on key features without delaying immediate defense responses.

3. To balance real-time defense with comprehensive monitoring, BeGuard adopts a two-stage approach grounded in a DNN framework and evaluation strategies. In the initial real-time alert phase, the DNN model processes input data rapidly to identify and mitigate potentially harmful content, leveraging its ability to capture temporal and semantic patterns in user behavior. In the secondary analysis phase, advanced evaluation strategies are employed to analyze the spread and interaction patterns of flagged content, refining the DNN’s predictive capabilities and enhancing its robustness over time. This dual-phase design enables BeGuard to address immediate risks efficiently while continuously improving its defense mechanisms by incorporating insights from user interactions.

BeGuard effectively balances the need for real-time defense with the requirement to monitor social interactions, ensuring both immediate prevention of harm and long-term improvement in the system’s robustness.
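The two-phase design can be illustrated with a hypothetical Python sketch: phase 1 acts immediately on a fast model’s deepfake score, and phase 2 later reviews the queued items using propagation statistics. The class `TwoStageDefense`, the alert threshold, and the share-spike rule used in phase 2 are assumptions made for illustration, and the feedback step that refines the DNN is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class TwoStageDefense:
    """Hypothetical sketch: fast alert (phase 1), then deferred review (phase 2)."""
    alert_threshold: float = 0.8
    pending_review: list = field(default_factory=list)

    def realtime_alert(self, item_id, score):
        """Phase 1: act immediately on a deepfake score in [0, 1]."""
        if score >= self.alert_threshold:
            self.pending_review.append(item_id)  # queue for phase 2
            return "flagged"
        return "allowed"

    def secondary_analysis(self, propagation_stats, spike_ratio=3.0):
        """Phase 2: review queued items using propagation statistics.

        propagation_stats maps item_id -> (recent_shares, baseline_shares); an item
        whose recent shares exceed spike_ratio times its baseline is confirmed.
        """
        confirmed = []
        for item_id in self.pending_review:
            recent, baseline = propagation_stats.get(item_id, (0, 0))
            if recent > spike_ratio * max(baseline, 1):
                confirmed.append(item_id)
        self.pending_review.clear()  # queue has been processed
        return confirmed


defense = TwoStageDefense()
status_a = defense.realtime_alert("video_a", 0.93)
status_b = defense.realtime_alert("video_b", 0.40)
confirmed = defense.secondary_analysis({"video_a": (120, 10)})
```

Phase 1 never waits on propagation data, so its latency depends only on the scoring model; the slower propagation analysis runs separately over whatever phase 1 has queued.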

4. Experiments

In this section, we perform a series of experiments to show the effectiveness of the method.

4.1. Experimental Settings

4.1.1. Dataset Selection

We select two popular datasets, Epinions and Ciao. The Epinions dataset consists of 7400 users, 6149 services, and 300,548 social relationships, with 209,700 records in total. It includes user IDs, service IDs, user ratings, and timestamps for services. The Ciao dataset contains 2240 users, 16,852 items, and 56,544 social relationships, with 36,065 records in total. All services in the dataset are rated on a uniform scale. Both datasets include behavioral data and social relationship statistics.

The Yelp dataset is a widely used dataset released by Yelp as part of its dataset challenge. It contains rich information about services, users, and their interactions, making it a valuable resource for research areas such as social network analysis and anomaly detection [51, 52]. The dataset includes detailed user reviews, ratings, and timestamps, service attributes (e.g., categories), and user profiles (e.g., user IDs and friend networks). Its diversity and scale enable researchers to study user behaviors and social interactions in real-world scenarios. In our experiments, the Yelp dataset contains 20,627 users, 16,763 services, 150,094 records, and 90,004 social relationships between users. Here, services are regarded as sports projects.

For evaluation, each dataset is divided into a training set (80%) and a validation set (20%). The training set is used for model training, and the validation set is used to tune the model’s hyperparameters and evaluate its performance during training.
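A reproducible random split can be sketched in Python as follows. Whether the original experiments split records randomly or chronologically is not stated, so the shuffling, the seed, and the function name `train_validation_split` are assumptions; the `train_ratio` parameter also covers the 50% to 90% training scales used in Section 4.5.

```python
import random


def train_validation_split(records, train_ratio=0.8, seed=42):
    """Shuffle records reproducibly and split them into training/validation lists."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be in (0, 1)")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seeded RNG, so the split is repeatable
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


train, val = train_validation_split(range(1000))
```

For strongly time-dependent behavior data, a chronological split (training on earlier records and validating on later ones) is an alternative that avoids leaking future information into training.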

4.2. Model Parameters

- Learning rate: 0.00001. The learning rate determines the step size of parameter updates during training. An appropriate learning rate lets the model converge quickly to a good solution; a rate that is too large can make training oscillate or diverge, whereas one that is too small slows convergence.

- Batch size: 64. The batch size affects the efficiency and stability of training. A larger batch size improves computational efficiency but requires more memory; a smaller batch size makes training noisier, which in some cases can help the model learn the data distribution better.

- Number of neurons in the hidden layer: 3. This value should be tuned to the characteristics of the dataset and the complexity of the model: too many neurons may lead to overfitting, while too few may not capture the patterns in the data.

- Number of attention heads: 4. The number of attention heads determines how many different aspects of the data the model can attend to; an appropriate number helps the model capture multiple features and relationships in the data.

4.3. Baseline Approaches

- Time-aware WS [53]: This approach primarily uses exponential functions to diminish the impact of past behaviors on current behavior.

- MF [54]: This method applies matrix factorization, decomposing the rating matrix of users across multiple time periods into two latent feature matrices.

- LINE [55]: This technique embeds the nodes of large social networks to obtain comprehensive node representations.

- MEWRGNN [26]: This method leverages graph neural networks to capture contextual relationships in user behaviors over time for robust and interpretable deepfake detection in complex social networks.
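The exponential time decay used by time-aware WS can be sketched as weighting each past behavior at time $t$ by $e^{-\lambda(t_{\text{now}} - t)}$. The Python example below shows the general idea with a decay-weighted average of ratings; the decay rate $\lambda$ (`decay_rate`) and the function names are illustrative and do not reproduce the exact formulation of [53].

```python
import math


def time_decay_weights(timestamps, now, decay_rate):
    """Weight of each behavior: exp(-decay_rate * (now - t)); older means smaller."""
    return [math.exp(-decay_rate * (now - t)) for t in timestamps]


def time_weighted_average(values, timestamps, now, decay_rate=0.1):
    """Decay-weighted average of past values such as ratings."""
    weights = time_decay_weights(timestamps, now, decay_rate)
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# An old rating of 5.0 (t=0) and a recent rating of 1.0 (t=10), evaluated at t=10.
score = time_weighted_average([5.0, 1.0], [0, 10], now=10)
```

The recent rating receives weight 1 and the old one weight $e^{-1} \approx 0.37$, so the average (about 2.08) sits much closer to the recent value than the unweighted mean of 3.0.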

4.4. Evaluation Metrics

- Accuracy: $\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, where TP represents true positives (the number of samples that are actually positive and predicted as positive by the model), TN represents true negatives (the number of samples that are actually negative and predicted as negative), FP represents false positives (the number of samples that are actually negative but predicted as positive), and FN represents false negatives (the number of samples that are actually positive but predicted as negative). Accuracy measures the proportion of correctly predicted samples among all samples and is a commonly used overall performance metric.

- F1-score: The F1-score is the harmonic mean of precision and recall, $F1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$, where $\text{precision} = \frac{TP}{TP + FP}$ is the proportion of true positives among the samples predicted as positive and $\text{recall} = \frac{TP}{TP + FN}$ is the proportion of actual positives that the model identifies. By combining precision and recall, the F1-score balances the two and is useful for evaluating the overall performance of the model.
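Both metrics can be computed from binary labels as in the Python sketch below, assuming label 1 denotes the positive (deepfake-related) class. Precision, recall, and F1 fall back to 0 when their denominators are zero, which is one common convention; the function names are illustrative.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy_and_f1(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


# TP=2, TN=1, FP=1, FN=1 for this toy example.
acc, f1 = accuracy_and_f1([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
```

Here accuracy is 3/5 = 0.6, while precision and recall are both 2/3, so the F1-score is also 2/3.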

4.5. Experimental Analysis

In this section, we compare BeGuard with four baseline approaches in terms of accuracy and F1-score. The goal is to assess the effectiveness of BeGuard relative to existing methods on the relevant data and tasks, and to determine whether it outperforms or offers distinct advantages over them.

4.5.1. The Effectiveness of the Proposed Approach

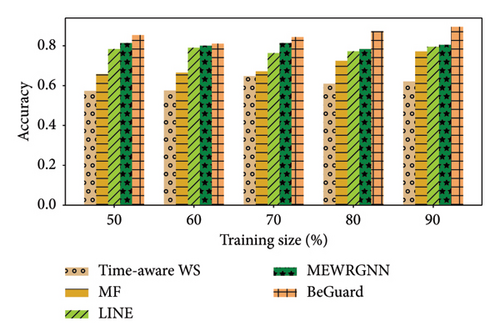

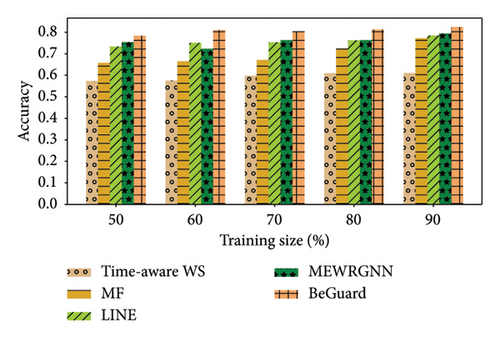

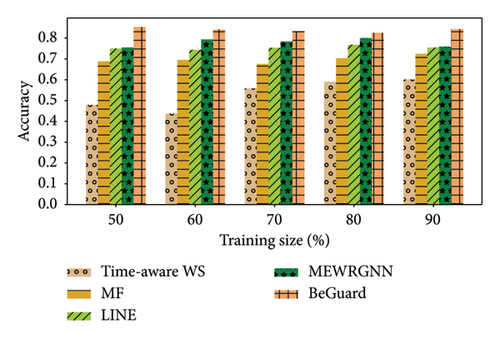

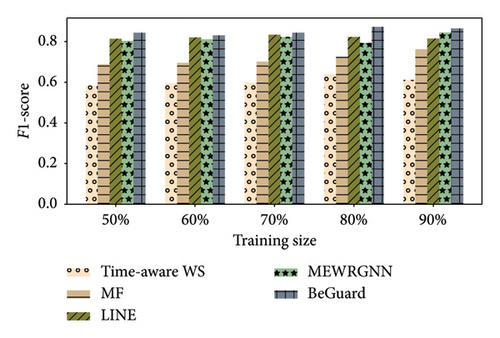

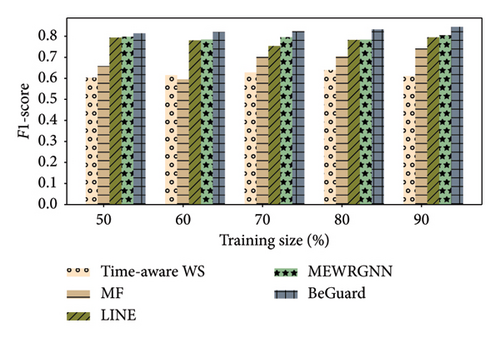

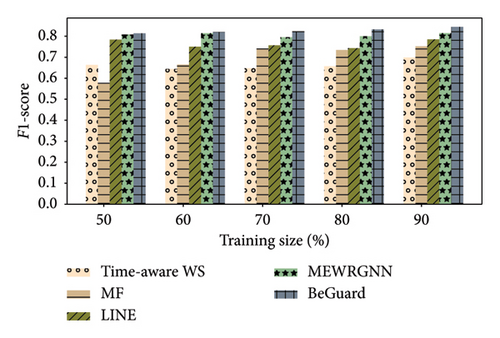

In this part, we report a series of experiments. Figures 3, 4, 5, 6, 7, and 8 show the accuracy and F1-score of five methods, time-aware WS, MF, LINE, MEWRGNN, and BeGuard, across training scales of 50%, 60%, 70%, 80%, and 90%. Among the traditional baselines, time-aware WS performs best in most scenarios, particularly at higher training scales, suggesting that it effectively exploits the temporal dynamics in the data. MF shows moderate performance, generally better than LINE but worse than time-aware WS, BeGuard, and MEWRGNN. LINE generally has the lowest performance, indicating that its approach may be less suited to analyzing dynamic behavior sequences. BeGuard surpasses time-aware WS, especially at higher training scales, which may indicate robustness when more training data are available. MEWRGNN uses a GNN that effectively captures users’ higher-order social relationships, so it performs relatively well. In general, both metrics improve as the training scale increases, indicating that more training data enhance the models’ ability to generalize.

Similar trends appear on the Epinions dataset, although the gains from additional training data are more consistent across all methods. On the Ciao dataset, the improvement from the 50% to the 90% training scale is more pronounced for BeGuard in terms of accuracy; F1-score improvements are noticeable but less dramatic. On the Yelp dataset, all methods perform better than on Epinions and Ciao. MEWRGNN consistently outperforms the other three baselines, while BeGuard still achieves the best overall performance.

4.5.2. The Ablation Experiments

We performed ablation experiments to validate the contribution of each component of the BeGuard model. In Tables 1 and 2, BeGuard-E uses only the embedding technique, and BeGuard-LS combines embedding with LSTM networks. On both the Epinions and Ciao datasets, all versions of BeGuard generally improve as the training scale increases. Specifically, on the Ciao dataset, the basic BeGuard-E variant rises from 42.03% at the 60% training scale to 54.21% at 90%, a relative growth of approximately 29%. On the Epinions dataset, BeGuard-E grows from 39.18% to 72.38% over the same range, a relative improvement of about 85%. This trend across both datasets indicates that more training data facilitates better learning and generalization.

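Table 1. Ablation results on the Epinions dataset at different training scales.

| Approach | 60% | 70% | 80% | 90% |
|---|---|---|---|---|
| BeGuard-E | 39.18% | 65.11% | 71.02% | 72.38% |
| BeGuard-LS | 73.33% | 78.29% | 79.62% | 78.15% |
| BeGuard | 83.44% | 85.78% | 86.65% | 87.36% |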

Table 2. Ablation results on the Ciao dataset at different training scales.

| Approach | 60% | 70% | 80% | 90% |
|---|---|---|---|---|
| BeGuard-E | 42.03% | 52.46% | 53.74% | 54.21% |
| BeGuard-LS | 75.07% | 76.29% | 78.02% | 78.68% |
| BeGuard | 80.20% | 81.50% | 81.24% | 82.76% |

Across the datasets, the full BeGuard model consistently outperforms its variants, achieving the highest scores at all training scales. For instance, on the Ciao dataset, BeGuard rises from 80.20% at the 60% training scale to 82.76% at 90%, a relative growth of about 3.19%. Although this increase is far smaller than that of BeGuard-E, the full model's much higher starting point highlights its effectiveness. The BeGuard-LS variant shows moderate relative growth of 4.81% on the Ciao dataset and 6.57% on the Epinions dataset, reflecting a balance between model complexity and performance gains.

As shown in Table 3, BeGuard achieves the best performance at every training size, rising from 82.24% to 84.55% as the training size grows (with a slight dip at 80%), which demonstrates its robustness and effectiveness. BeGuard-LS improves steadily and outperforms BeGuard-E throughout. BeGuard-E has the lowest performance, improving only modestly from 51.22% to 55.33%. These results highlight the value of larger training data and support the design of the full BeGuard model.

Table 3. Ablation results at different training sizes.

| Approach | 60% | 70% | 80% | 90% |
|---|---|---|---|---|
| BeGuard-E | 51.22% | 53.42% | 53.67% | 55.33% |
| BeGuard-LS | 76.07% | 78.19% | 78.34% | 79.68% |
| BeGuard | 82.24% | 83.65% | 82.89% | 84.55% |

The relative improvements, especially for BeGuard-E, show that this variant benefits proportionally more from additional training data, even though its scores remain lower without the components present in the other versions. For example, on the Epinions dataset, BeGuard-E shows the largest relative improvement, rising by about 85% as the training data increase. This suggests that simpler models can make substantial gains with sufficient training data, although they may still fall short of more complex models in absolute terms.
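The growth figures quoted in this subsection are relative changes between the 60% and 90% columns of each table. The short Python sketch below recomputes them from the values transcribed from Tables 1, 2, and 3, reporting both the absolute gain in percentage points and the relative gain. The dataset labels for Tables 1 and 2 follow the text, and the helper name `gains` is our own.

```python
# Ablation scores (%) transcribed from Tables 1, 2, and 3.
# Columns correspond to the 60%, 70%, 80%, and 90% training scales.
TABLES = {
    "Table 1 (Epinions)": {
        "BeGuard-E": [39.18, 65.11, 71.02, 72.38],
        "BeGuard-LS": [73.33, 78.29, 79.62, 78.15],
        "BeGuard": [83.44, 85.78, 86.65, 87.36],
    },
    "Table 2 (Ciao)": {
        "BeGuard-E": [42.03, 52.46, 53.74, 54.21],
        "BeGuard-LS": [75.07, 76.29, 78.02, 78.68],
        "BeGuard": [80.20, 81.50, 81.24, 82.76],
    },
    "Table 3": {
        "BeGuard-E": [51.22, 53.42, 53.67, 55.33],
        "BeGuard-LS": [76.07, 78.19, 78.34, 79.68],
        "BeGuard": [82.24, 83.65, 82.89, 84.55],
    },
}


def gains(scores: list[float]) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in %) from first to last column."""
    first, last = scores[0], scores[-1]
    return last - first, (last - first) / first * 100.0


for table, rows in TABLES.items():
    for variant, scores in rows.items():
        abs_gain, rel_gain = gains(scores)
        print(f"{table:20s} {variant:10s} {abs_gain:+6.2f} pts  {rel_gain:+6.2f}%")
```

Running it reproduces the roughly 85% and 29% growth of BeGuard-E on Epinions and Ciao, the 3.19% growth of BeGuard on Ciao, and the 4.81% and 6.57% growth of BeGuard-LS on Ciao and Epinions. Separating percentage points from relative change matters here: a variant with a low starting score, such as BeGuard-E, can show a large relative gain while still trailing the full model by a wide margin.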

4.5.3. The Effectiveness of Parameters

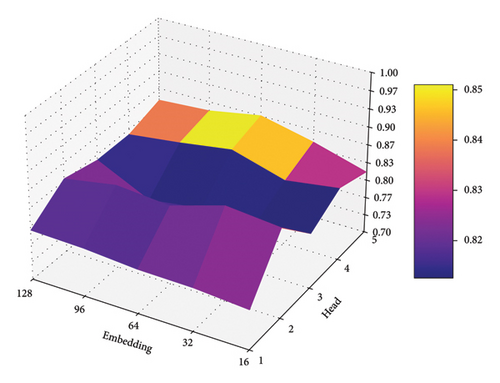

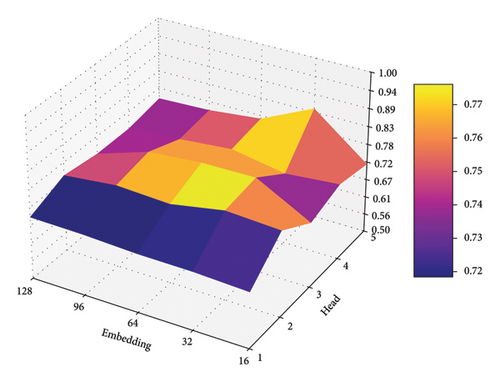

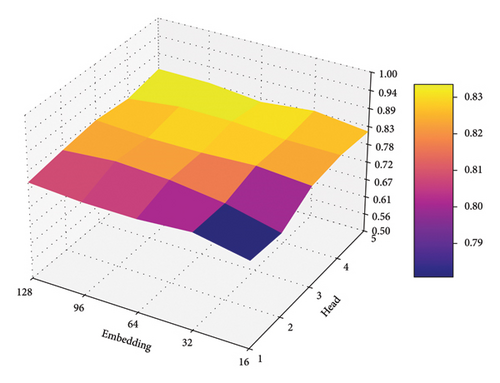

Figures 9, 10, and 11 illustrate how the embedding dimension and the number of attention heads affect BeGuard's F1-score, with the color scale ranging from 0.5 to 1.0.

Across the three datasets, the model's performance varies smoothly over the tested parameter settings. Regarding embedding size, there is a clear trend that larger embeddings generally yield higher performance, likely because larger embedding dimensions provide more capacity to capture and represent complex patterns in the data.

Regarding the number of heads, all figures show that adding heads to the multihead attention mechanism generally improves performance up to a point, after which performance plateaus or slightly declines. The optimal number of heads in these cases appears to be 4. This suggests that beyond a certain number of heads, the additional complexity contributes little to performance and might even hinder it due to overparameterization or increased training difficulty.

These results indicate that the embedding size and the number of attention heads must be balanced to maximize performance. Larger embeddings generally improve the F1-score, whereas the number of heads should be chosen carefully, with 4 heads appearing to be the most effective for this task.
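The head-count trade-off can be made concrete with a small calculation. The sketch below assumes that BeGuard's attention layer follows the standard multihead formulation, with query, key, value, and output projections of size d × d (as in PyTorch's `nn.MultiheadAttention`). The text does not state this, and the helper `mha_shape` and the sweep values are hypothetical. Under that assumption, the parameter count grows quadratically with the embedding size d but does not depend on the number of heads h, while each head's width shrinks to d/h.

```python
def mha_shape(embed_dim: int, num_heads: int, bias: bool = True) -> dict:
    """Per-head width and parameter count of a standard multi-head attention layer.

    Assumption: Q, K, V, and output projections are each embed_dim x embed_dim,
    and the embedding is split evenly so each head attends over
    embed_dim // num_heads dimensions (the standard formulation).
    """
    if embed_dim % num_heads != 0:
        raise ValueError(f"embed_dim={embed_dim} is not divisible by num_heads={num_heads}")
    weights = 4 * embed_dim * embed_dim    # W_Q, W_K, W_V, W_O
    biases = 4 * embed_dim if bias else 0  # one bias vector per projection
    return {"head_dim": embed_dim // num_heads, "params": weights + biases}


# Hypothetical sweep grid for illustration; not the grid used in Figures 9, 10, and 11.
for d in (32, 64, 128):
    for h in (1, 2, 4, 8):
        s = mha_shape(d, h)
        print(f"d={d:4d} h={h}: head_dim={s['head_dim']:3d} params={s['params']}")
```

If BeGuard does use this formulation, a decline beyond 4 heads would more plausibly reflect narrower per-head subspaces or harder optimization than extra parameters; a variant that gives each head its own full-width projections would scale differently.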

5. Conclusion

In this research, we have introduced the BeGuard method, which shows significant potential for analyzing and understanding user behavior. Embedding techniques allowed us to represent users' dynamic behavior records over multiple time periods, capturing the temporal nuances and sequential patterns in their actions. The LSTM network proved crucial for extracting users' behavior features across time intervals, as it handles temporal dependencies and captures evolving patterns in user actions. The multihead attention network then allowed us to weight behavior features and social relationship features differently. In addition, evaluating outliers in users' ratings of competitive activities added another layer of analysis, helping to identify unusual patterns or anomalies in their preferences. A series of comprehensive experiments shows that BeGuard outperforms the compared methods in accuracy and F1-score, indicating its effectiveness and viability for the complex task of user behavior analysis.

In the future, we will focus on improving the interpretability of the BeGuard model by developing comprehensive methods to provide insights into how the model makes decisions and why it assigns specific weights to different features. For instance, this could involve employing techniques such as attention weight visualization or feature importance analysis to better understand the underlying mechanisms of the model. Enhancing interpretability will not only build trust among users and stakeholders but also provide actionable insights for refining the model’s architecture and improving its performance in various scenarios. Beyond interpretability, future research directions will explore the incorporation of multimodal data, such as text, audio, and video, to capture more comprehensive and nuanced aspects of user behavior. For example, integrating textual data such as user reviews or social media posts could offer deeper insights into user intentions, while audio and video data might help analyze behavioral patterns in contexts such as virtual meetings or interactive sessions. These extensions would significantly enhance the model’s ability to represent and analyze user actions across diverse domains.

Another critical avenue for future work is addressing the scalability of the BeGuard method to ensure its applicability to large-scale networks and real-world datasets. This will involve optimizing the model’s computational efficiency and designing distributed or parallel processing techniques to handle the increasing complexity of data in massive networks. By scaling BeGuard to accommodate large-scale systems, we can ensure its effectiveness in environments with millions of users and interactions, making it more robust for deployment in industrial and enterprise applications. With these advancements, the BeGuard method will be further empowered to handle increasingly complex tasks in user behavior analysis while maintaining its superior performance and reliability.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This work was supported by the Shandong Provincial Natural Science Foundation (Grant no. ZR2023QC116), the Doctoral Research Foundation of Weifang University (Grant no. 2024BS39), and the Natural Science Foundation of Shandong Province (Grant no. ZR2021MF085).

Acknowledgments

This work was supported by the Shandong Provincial Natural Science Foundation (ZR2023QC116), the Doctoral Research Foundation of Weifang University (2024BS39), and the Natural Science Foundation of Shandong Province (ZR2021MF085).


Open Research

Data Availability Statement

Research data are not shared.