Improving Patient Understanding of Glomerular Disease Terms With ChatGPT

Abstract

Background: Glomerular disease is complex and difficult for patients to understand because it spans pathophysiology, immunology, and pharmacology.

Objective: This study explored whether ChatGPT can maintain accuracy while simplifying glomerular disease terms to enhance patient comprehension.

Methods: A total of 67 terms related to glomerular disease were analyzed using GPT-4 through two distinct queries: one aimed at a general explanation and another tailored for patients with an education level of 8th grade or lower. GPT-4’s accuracy was scored from 1 (incorrect) to 5 (correct and comprehensive). Readability was assessed using the Consensus Reading Grade (CRG) Level, which incorporates seven readability indices including the Flesch–Kincaid Grade (FKG) and SMOG indices. The Flesch Reading Ease (FRE) score, which ranges from 0 to 100 with higher scores indicating easier-to-read text, was also used to evaluate readability. A paired t-test was conducted to assess differences in accuracy and readability levels between the two queries.

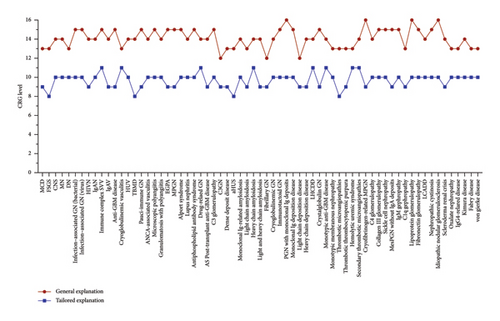

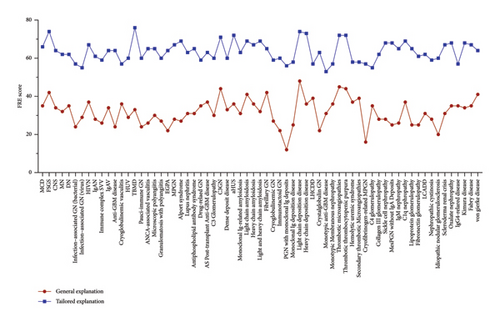

Results: GPT-4’s general explanations of glomerular disease terms averaged a college readability level, indicated by a CRG score of 14.1 and an FKG score of 13.9. The SMOG index also indicated the topic’s complexity, with a score of 11.8. When tailored for patients at or below an 8th-grade reading level, readability improved, averaging 9.7 by the CRG score, 8.7 by the FKG score, and 7.3 by the SMOG score. The FRE score likewise improved, from 31.6 for general explanations to 63.5 for tailored explanations. However, the accuracy of GPT-4’s tailored explanations was significantly lower than that of the general explanations (4.2 ± 0.4 versus 4.7 ± 0.3, p < 0.0001).

Conclusion: While GPT-4 effectively simplified information about glomerular diseases, it compromised its accuracy in the process. To implement these findings, we suggest pilot studies in clinical settings to assess patient understanding, using feedback from diverse groups to customize content, expanding research to enhance AI accuracy and reduce biases, setting strict ethical guidelines for AI in healthcare, and integrating with health informatics systems to provide tailored educational content to patients. This approach will promote effective and ethical use of AI tools like ChatGPT in patient education, empowering patients to make informed health decisions.

1. Introduction

Glomerular disease is a prevalent cause of chronic kidney disease. Patients diagnosed with glomerular disease frequently encounter difficulties in grasping the full scope of their condition due to its multifaceted nature [1]. The literacy level of patients also has a profound effect on healthcare delivery [2, 3]. In the U.S., approximately 14% of people possess health literacy below the basic level [4], and the average American reads at the 7th- to 8th-grade level [5].

The medical terms and complicated concepts associated with explaining the glomerular disease process, diagnostic methods, and treatment options can be challenging and overwhelming for patients to understand. This complexity may hinder clear and/or effective communication between healthcare providers and patients, potentially creating a literacy gap that could impact patient comprehension, adherence to treatment plans, and overall disease management, especially in scenarios where there is a shortage of qualified medical professionals. Therefore, it becomes essential to bridge this literacy gap with personalized information technology solutions that adapt to the varying comprehension levels of patients. Such solutions are vital in aiding patients to grasp their medical choices and make informed decisions.

Advanced artificial intelligence (AI) language models have been widely used for education, including in the field of medicine [6–8]. Such approaches offer a promising way to bridge this literacy gap by transforming complex medical jargon into more accessible language that patients can understand. For instance, our group has demonstrated that ChatGPT, a sophisticated AI language model, can significantly lower the reading grade level of complex medical information about living kidney donation [9]. In other areas such as surgery, hyperlipidemia, cancer, and ophthalmology, ChatGPT has also demonstrated the potential to enhance the readability of patient-facing content and educational materials, thereby improving information accessibility [10–16]. However, it is essential to assess the accuracy of the information provided by these AI models to ensure that patients receive reliable and medically sound explanations.

In this study, we investigated whether ChatGPT can maintain accuracy while simplifying complex medical terms related to glomerular diseases, with the aim of improving patients’ comprehension in this area.

2. Methods

2.1. Study Design

This study focuses on glomerular diseases that are relatively common in clinical practice. We initially conducted a thorough review of the current literature, including authoritative clinical guidelines—The KDIGO 2021 Clinical Practice Guideline for the Management of Glomerular Diseases [17], and books—Handbook of Glomerulonephritis [18] and Comprehensive Clinical Nephrology [19]. We aimed to comprehensively cover the spectrum of glomerular diseases while minimizing the inclusion of obscure or very rarely encountered conditions. Consequently, we selected 67 terms that represent a range of etiological categories (Table 1). These categories include glomerular diseases associated with nephrotic syndrome, glomerular diseases associated with nephritis, glomerular diseases associated with complement disorders, paraprotein-mediated glomerular diseases, hereditary glomerular disorders, and other miscellaneous conditions. This selection ensures that our analysis remains focused yet diverse enough to encapsulate the pertinent aspects of glomerular disease.

| No. | Glomerular disease terms |
|---|---|
| 1 | Minimal change disease |
| 2 | Focal segmental glomerulosclerosis |
| 3 | Congenital nephrotic syndrome |
| 4 | Membranous nephropathy |
| 5 | Diabetic nephropathy |
| 6 | Bacterial infection–associated GN |
| 7 | Peri-infectious GN associated with viral infections |
| 8 | HIV-associated nephropathy |
| 9 | IgA nephropathy |
| 10 | Immune complex small vessel vasculitis |
| 11 | IgA vasculitis or Henoch–Schönlein purpura |
| 12 | Anti-GBM disease |
| 13 | Cryoglobulinemic vasculitis |
| 14 | Hypocomplementemic urticarial vasculitis |
| 15 | Thin basement membrane disease |
| 16 | Pauci-immune GN |
| 17 | ANCA-associated vasculitis |
| 18 | Microscopic polyangiitis |
| 19 | Granulomatosis with polyangiitis |
| 20 | Eosinophilic granulomatosis with polyangiitis |
| 21 | Membranoproliferative glomerulonephritis |
| 22 | Alport syndrome |
| 23 | Lupus nephritis |
| 24 | Antiphospholipid antibody syndrome |
| 25 | Drug-related GN |
| 26 | Alport syndrome post-transplant anti-GBM disease |
| 27 | C3 glomerulopathy |
| 28 | C3 GN |
| 29 | Dense deposit disease |
| 30 | Atypical hemolytic uremic syndrome |
| 31 | Monoclonal immunoglobulin-related amyloidosis |
| 32 | Light chain amyloidosis |
| 33 | Heavy chain amyloidosis |
| 34 | Light and heavy chain amyloidosis |
| 35 | Fibrillary GN |
| 36 | Cryoglobulinemic GN (type I and type II) |
| 37 | Immunotactoid GN |
| 38 | Proliferative glomerulonephritis with monoclonal Ig deposits |
| 39 | Monoclonal Ig deposition disease |
| 40 | Light chain deposition disease |
| 41 | Heavy chain deposition disease |
| 42 | Light and heavy chain deposition disease |
| 43 | Crystalglobulin GN |
| 44 | Monotypic anti-GBM disease |
| 45 | Monotypic membranous nephropathy |
| 46 | Thrombotic microangiopathies |
| 47 | Thrombotic thrombocytopenic purpura |
| 48 | Hemolytic uremic syndrome |
| 49 | Secondary thrombotic microangiopathies |
| 50 | Cryofibrinogen-related MPGN |
| 51 | C4 glomerulopathy |
| 52 | Collagen III glomerulopathy |
| 53 | Sickle cell nephropathy |
| 54 | Mesangial proliferative GN without IgA deposits |
| 55 | IgM nephropathy |
| 56 | C1q nephropathy |
| 57 | Lipoprotein glomerulopathy |
| 58 | Fibronectin glomerulopathy |
| 59 | Lecithin-cholesterol acyltransferase deficiency disease |
| 60 | Nephropathic cystinosis |
| 61 | Idiopathic nodular glomerulosclerosis |
| 62 | Scleroderma renal crisis |
| 63 | Oxalate nephropathy |
| 64 | Immunoglobulin G4-related disease |
| 65 | Kimura disease |
| 66 | Fabry disease |
| 67 | von Gierke disease |

- Abbreviations: GBM, glomerular basement membrane; GN, glomerulonephritis; MPGN, membranoproliferative glomerulonephritis.

Two separate queries were employed to evaluate the performance of the advanced ChatGPT model, GPT-4, in interpreting these terms. The first query, which aimed at a general explanation, used the prompt: “Please provide a brief explanation of the kidney condition—[specific glomerular disease name, e.g., minimal change disease] for patients, and keep the description under 200 words.” The second query, which is tailored for patients at or below an 8th-grade reading level, used the prompt: “Please provide a brief explanation of the kidney condition—[specific glomerular disease name, e.g., minimal change disease] for patients at or below an 8th-grade level, and keep the description under 200 words.” To ensure the AI’s responses remained unbiased and independent of previous interactions, a new chat session was initiated for each glomerular disease case. While previous interactions may slightly influence the responses from ChatGPT by personalizing them according to learned context—such as projects, preferences, and tasks—the impact of this personalization on the results is negligible within the context of the study.
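The two queries can be expressed as templates that are filled in once per term. The sketch below is illustrative only: the template wording is copied from the prompts quoted above, while `build_prompts` and `within_word_limit` are hypothetical helper names, and no particular ChatGPT client or API is assumed. Each prompt pair would be submitted in a fresh chat session, as described in the study design.

```python
# Prompt wording copied from the two study queries; {term} marks the
# bracketed placeholder for the glomerular disease name.
GENERAL_TEMPLATE = (
    "Please provide a brief explanation of the kidney condition—{term} "
    "for patients, and keep the description under 200 words."
)
TAILORED_TEMPLATE = (
    "Please provide a brief explanation of the kidney condition—{term} "
    "for patients at or below an 8th-grade level, "
    "and keep the description under 200 words."
)


def build_prompts(term: str) -> dict[str, str]:
    """Return the general and tailored prompts for one term (hypothetical helper)."""
    return {
        "general": GENERAL_TEMPLATE.format(term=term),
        "tailored": TAILORED_TEMPLATE.format(term=term),
    }


def within_word_limit(response: str, limit: int = 200) -> bool:
    """Check the 'under 200 words' constraint using whitespace-separated tokens."""
    return len(response.split()) < limit


if __name__ == "__main__":
    for kind, prompt in build_prompts("minimal change disease").items():
        print(f"{kind}: {prompt}")
```

Whitespace tokenization is an assumption made here for simplicity; the study does not state how response length was verified.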

2.2. Accuracy Assessment

The accuracy of the responses generated by GPT-4 was evaluated by two independent investigators (YHA and JM) using a scale from 1 to 5 (1 = completely incorrect, 2 = mostly incorrect, 3 = partly correct and partly incorrect, 4 = correct but not comprehensive, and 5 = correct and comprehensive). The average score from the two investigators was used for each response. Inter-rater reliability was assessed using Cohen’s kappa coefficient [20].
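As an illustration of the scoring procedure, the following sketch averages two raters' scores per response and computes Cohen's kappa. It assumes the unweighted form of kappa, since the text does not state whether a weighted variant was used, and the ratings shown are made-up values rather than study data. The function names are hypothetical.

```python
from collections import Counter


def mean_scores(rater_a: list[int], rater_b: list[int]) -> list[float]:
    """Average the two investigators' scores for each response."""
    return [(a + b) / 2 for a, b in zip(rater_a, rater_b)]


def cohen_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items given the identical score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal score frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Both raters used one identical category throughout.
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical ratings on the 1-5 accuracy scale (not study data).
    a = [5, 5, 4, 4, 5, 4, 5, 3]
    b = [5, 4, 4, 4, 5, 5, 5, 3]
    print(mean_scores(a, b))
    print(cohen_kappa(a, b))
```

Because the scale is ordinal, a weighted kappa would credit near-agreement (for example, 4 versus 5) more than the unweighted form does; which variant is appropriate depends on the analysis plan.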

2.3. Readability Assessment

The plain text of each GPT-4 response (Supporting Table) was assessed using a publicly available readability calculator [11, 21, 22]. This assessment included eight readability metrics: the Automated Readability Index, Flesch Reading Ease (FRE) score, Gunning Fog Index, Flesch–Kincaid Grade (FKG) Level, Coleman–Liau Index, Simple Measure of Gobbledygook (SMOG) Index, Linsear Write Formula, and the FORCAST Readability Formula.

The FRE score ranges from 0 to 100, with higher scores indicating text that is easier to read. Typically, a score between 70 and 80 corresponds to a seventh-grade reading level, while a score between 60 and 70 corresponds to an eighth- or ninth-grade reading level. Apart from the FRE score, the remaining seven indices were averaged to determine a Consensus Reading Grade (CRG) Level, ranging from grade 1 to grade 16.
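To make the metrics concrete, the sketch below implements the standard published Flesch Reading Ease and Flesch–Kincaid Grade formulas, plus a CRG computed as the mean of the seven grade-level indices as described above. It takes word, sentence, and syllable counts as inputs because the tokenization and syllable-counting rules of the online calculator used in the study are not specified; the function names and index abbreviations as dictionary keys are assumptions for illustration.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Standard FRE formula; higher scores mean easier-to-read text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Standard FKG formula; the result approximates a U.S. school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# The seven grade-level indices averaged into the CRG (FRE is excluded).
CRG_INDICES = {"ARI", "GFI", "FKG", "CLI", "SMOG", "LWF", "FORCAST"}


def consensus_reading_grade(grade_indices: dict[str, float]) -> float:
    """Mean of the seven grade-level indices for one text."""
    if set(grade_indices) != CRG_INDICES:
        raise ValueError(f"Expected exactly these indices: {sorted(CRG_INDICES)}")
    return sum(grade_indices.values()) / len(grade_indices)


if __name__ == "__main__":
    # Hypothetical counts for a short passage (not study data).
    print(flesch_reading_ease(words=100, sentences=5, syllables=150))
    print(flesch_kincaid_grade(words=100, sentences=5, syllables=150))
```

Both Flesch formulas depend only on average sentence length and average syllables per word, which is why shortening sentences and replacing polysyllabic medical terms moves FRE up and FKG down at the same time.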

2.4. Statistical Analysis

Accuracy and readability levels are presented as mean ± standard deviation. Comparisons of the accuracy and readability levels between the general and tailored explanations were conducted using a paired two-sided t-test. A p value of less than 0.05 was considered statistically significant. Effect sizes were also calculated to highlight the differences in readability and accuracy between the general and tailored explanation groups. Following Cohen’s d, the effect size was calculated as the difference in means between the two groups (the mean of the general explanation group minus the mean of the tailored explanation group) divided by the standard deviation of the tailored explanation group, and was categorized as small (d = 0.2), medium (d = 0.5), or large (d ≥ 0.8) [23]. Effect sizes can be greater than 1 when the difference between the means of two groups exceeds the standard deviation, which generally indicates a very large difference between the groups relative to their variability. Additionally, Pearson’s correlation coefficient was used to assess the relationship between accuracy and readability scores.
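The statistics described above can be sketched with the Python standard library. The effect size follows the definition given in the text (difference in means divided by the standard deviation of the tailored group), which differs from the pooled-SD form of Cohen's d. The function names are hypothetical, and the per-term lists in the demo are made-up values, not study data.

```python
import math
from statistics import mean, stdev


def effect_size_tailored_sd(mean_general: float, mean_tailored: float,
                            sd_tailored: float) -> float:
    """Effect size as defined in the text: mean difference / SD of tailored group."""
    return (mean_general - mean_tailored) / sd_tailored


def paired_t_statistic(general: list[float], tailored: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom from per-term score pairs."""
    if len(general) != len(tailored) or len(general) < 2:
        raise ValueError("Need at least two matched pairs.")
    diffs = [g - t for g, t in zip(general, tailored)]
    n = len(diffs)
    # stdev() is the sample standard deviation (n - 1 in the denominator).
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's correlation coefficient between two equal-length samples."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Summary values for accuracy: 4.74 (general), 4.23 and SD 0.35 (tailored).
    print(round(effect_size_tailored_sd(4.74, 4.23, 0.35), 2))
    # Hypothetical per-term scores for the paired test.
    print(paired_t_statistic([5, 5, 4, 5], [4, 4, 4, 4]))
```

Converting the t statistic to a two-sided p value requires the t distribution, which the standard library does not provide; `scipy.stats.ttest_rel` is one option that returns both the statistic and the p value for paired samples.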

3. Results

The general explanations received an average accuracy score of 4.74 ± 0.31, indicating that the responses were nearly all correct and comprehensive. In contrast, the tailored explanations for patients with a reading level at or below 8th grade received a lower accuracy score of 4.23 ± 0.35 (Table 2). The difference in accuracy between the two types of explanations was statistically significant (p < 0.0001). The inter-rater reliability for accuracy assessment was substantial, with a Cohen’s kappa coefficient of 0.645.

| | General explanation | Tailored explanation<sup>a</sup> | Effect size<sup>b</sup> | p value<sup>c</sup> |
|---|---|---|---|---|
| Accuracy<sup>d</sup> | 4.74 ± 0.31 | 4.23 ± 0.35 | 1.46 | < 0.0001 |
| Readability | | | | |
| CRG level<sup>e</sup> | 14.09 ± 0.98 | 9.69 ± 0.76 | 5.79 | < 0.0001 |
| FKG | 13.85 ± 1.19 | 8.72 ± 0.84 | 6.11 | < 0.0001 |
| SMOG | 11.76 ± 0.93 | 7.25 ± 0.84 | 5.37 | < 0.0001 |
| ARI | 15.24 ± 1.29 | 10.47 ± 0.87 | 5.48 | < 0.0001 |
| GFI | 15.97 ± 1.27 | 10.38 ± 0.94 | 5.94 | < 0.0001 |
| CLI | 15.14 ± 1.17 | 10.68 ± 0.94 | 4.74 | < 0.0001 |
| LWF | 14.24 ± 1.37 | 9.79 ± 1.41 | 3.16 | < 0.0001 |
| FORCAST | 12.52 ± 0.47 | 10.33 ± 0.53 | 4.13 | < 0.0001 |
| FRE score<sup>f</sup> | 31.63 ± 6.97 | 63.52 ± 5.32 | 4.58 | < 0.0001 |

- <sup>a</sup>Tailored for patients at or below an 8th-grade level.

- <sup>b</sup>The effect size is assessed by Cohen’s d value, calculated as the difference in means between the two groups (the mean of the general explanation group minus the mean of the tailored explanation group) divided by the standard deviation of the tailored explanation group. A value ≥ 0.8 suggests a large effect.

- <sup>c</sup>A paired two-sided t-test.

- <sup>d</sup>Accuracy is scaled from 1 to 5: 1 = completely incorrect, 2 = mostly incorrect, 3 = partly correct and partly incorrect, 4 = correct but not comprehensive, and 5 = correct and comprehensive.

- <sup>e</sup>Consensus Reading Grade (CRG) Level is calculated based on seven readability metrics: the Automated Readability Index (ARI), Gunning Fog Index (GFI), Flesch–Kincaid Grade (FKG) Level, Coleman–Liau Index (CLI), Simple Measure of Gobbledygook (SMOG) Index, Linsear Write Formula (LWF), and the FORCAST Readability Formula.

- <sup>f</sup>Flesch Reading Ease (FRE) scores range from 0 to 100, with higher scores indicating easier-to-read text.

The CRG level for the general explanations averaged 14.09 ± 0.98, whereas the CRG level for the tailored explanations averaged significantly lower at 9.69 ± 0.76 (p < 0.0001) (Table 2, Figure 1). Specifically, the FKG level for the general explanations averaged 13.85 ± 1.19, while the tailored explanations averaged around an 8th-grade level, with a score of 8.72 ± 0.84 (Table 2). The difference in the FKG levels was statistically significant (p < 0.0001). The SMOG index for the general explanations was 11.76 ± 0.93, while the tailored explanations had a significantly lower SMOG index of 7.25 ± 0.84 (p < 0.0001) (Table 2). Similarly, the FRE score for the general explanations averaged 31.63 ± 6.97, indicating that the text is difficult to read. In contrast, the tailored explanations had a significantly higher FRE score of 63.52 ± 5.32 (p < 0.0001) (Table 2, Figure 2), corresponding to text that is much easier to read and understand.

All Cohen’s d effect sizes were greater than 0.8, highlighting the substantial differences in readability and accuracy between the general and tailored explanation groups (Table 2). Additionally, a moderate negative correlation was observed between accuracy and readability scores (r = −0.417, p < 0.001), suggesting that as readability improved, accuracy decreased.

4. Discussion

This study underscores the potential of AI tools, such as GPT-4, to adjust patient-focused medical information to an average readability level.

Communicating medical information between healthcare providers and patients presents a significant challenge due to varying levels of health literacy [24]. Simplifying medical information, especially for complex conditions like glomerular disease, to an average (e.g., 8th-grade in the U.S.) reading level can greatly benefit individuals with limited literacy skills. This strategy helps reduce health disparities and promotes greater equity in healthcare, ensuring that all patients can understand and actively engage with their health information.

In this study, GPT-4 successfully simplified the explanations of glomerular disease as measured by multiple readability formulas. The increase in the FRE score from 31.6 to 63.5 indicates that text readability was greatly enhanced. Moreover, the CRG level, which incorporates seven formulas, decreased from 14.1 for the general explanations to 9.7 for the tailored explanations. Among these formulas, the FKG test is used extensively in the field of education. The SMOG index is also widely used, particularly for checking health messages [25]. The reduction of the FKG level from 13.9 to 8.7 and the SMOG index from 11.8 to 7.3 indicates that ChatGPT can effectively simplify medical information. These significant differences demonstrate its potential as a valuable tool for enhancing accessibility in the medical field. However, it is important to note that the tailored explanations achieved a lower accuracy score of 4.23 compared to 4.74 for the general explanations. We also identified a negative correlation between simplification and accuracy of the explanations, indicating that as simplification increases, accuracy tends to decrease. However, this reduction in precision does not necessarily affect the conveyance of critical information essential for effective patient communication. Notably, the average accuracy rating for tailored explanations remains above 4, suggesting that while these explanations are generally accurate, they lack comprehensiveness. For example, for minimal change disease, focal segmental glomerulosclerosis, and congenital nephrotic syndrome, the tailored explanations omitted details about pathogenetic mechanisms or treatment strategies that the general explanations included. This pattern is evident where essential terms are translated into layman’s terms, thereby preserving clinical relevance despite a decrease in specificity.
According to our accuracy assessment scale, we propose that the minimum acceptable score for a tailored explanation be set at 4 (correct but not comprehensive). Scores below this threshold may lead to misinterpretations of medical conditions or treatment protocols. This consideration is crucial for developing patient education materials that must balance understandability with factual accuracy. To ensure the reliability of the information provided to patients, we recommend that all simplified content created by ChatGPT be reviewed by a medical professional before being distributed to patients. Additionally, the study’s limited sample size and focus on a specific area may affect its generalizability. Further investigation is necessary to evaluate how effectively ChatGPT addresses readability challenges across different contexts.
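The proposed threshold could be applied as a simple triage step after reviewers have scored each tailored explanation. The sketch below is one possible reading of that proposal, not a procedure used in the study: `triage` is a hypothetical helper, the scores are made-up values, and an "acceptable" label here does not replace the professional review recommended for all simplified content.

```python
from statistics import mean

# Minimum acceptable mean accuracy proposed in the text
# (4 = correct but not comprehensive).
MIN_ACCEPTABLE_SCORE = 4.0


def triage(scores_by_term: dict[str, list[float]]) -> dict[str, list[str]]:
    """Split terms by whether their mean reviewer score meets the threshold."""
    result: dict[str, list[str]] = {"acceptable": [], "needs_revision": []}
    for term, scores in scores_by_term.items():
        key = "acceptable" if mean(scores) >= MIN_ACCEPTABLE_SCORE else "needs_revision"
        result[key].append(term)
    return result


if __name__ == "__main__":
    # Hypothetical reviewer scores on the 1-5 scale (not study data).
    print(triage({"term A": [5, 4], "term B": [4, 3], "term C": [4, 4]}))
```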

When developing patient education materials, healthcare providers should strive to balance preserving essential accuracy with ensuring the information is accessible and easily understood by the general public. To elevate the accuracy and readability of AI-generated responses in healthcare, several strategies can be implemented. Firstly, this may involve exploring innovative approaches such as employing dual-layer explanations, where simplified summaries are supplemented with links or expandable sections that delve into more complex details for those who seek deeper understanding. Secondly, advancements in user experience design may offer proven methods for delivering complex information with precision. For instance, we could tailor user profiles to enhance response accuracy specifically for nephrological queries. Additionally, we can refine ChatGPT’s performance by implementing feedback loops that allow for ongoing enhancement of its responses. Optimizing the prompts used, such as employing detailed inputs, varied prompt strategies like chain-of-thought, and incorporating clinical guidelines via retrieval-augmented generation, are also viable strategies. Thirdly, expert oversight should be incorporated by involving healthcare professionals not only during the initial training phase but also in continuous reviews of AI outputs, particularly for critical medical information. This would involve protocols for regular expert validation before clinical application. Additionally, expanding the diversity of training datasets is essential. This includes the integration of peer-reviewed medical literature, clinical guidelines from various global healthcare organizations, and patient case studies across different demographics and regions to reduce bias and increase the comprehensiveness of the AI. Furthermore, regular tests with simulated clinical scenarios can identify and mend gaps in the AI’s knowledge and its capability to communicate complex medical details effectively. 
Finally, conducting ethical and bias audits regularly, carried out by independent groups that include ethicists and diverse representatives, can ensure the AI’s integrity and fairness. These enhancements will provide a more thorough exploration of how to maintain high levels of precision while enhancing readability.

Importantly, a primary concern is the potential for biases in AI-generated content, which could stem from the training data or the algorithms themselves. These biases might inadvertently affect the way medical information is presented, possibly leading to disparities in patient understanding and care outcomes. For instance, if training data predominantly includes literature from specific geographic regions or demographic groups, the AI’s outputs may not generalize well to other populations, potentially leading to biased or less accurate information. Unfortunately, the training methodologies and datasets used for models including ChatGPT are often not transparent, which raises significant concerns about accountability and ethical implications. Addressing these biases is crucial to ensure that AI tools contribute positively to patient education and promote equitable healthcare practices. Therefore, it is essential to emphasize the need for continuous monitoring and updating of AI systems, facilitated by collaborations between AI researchers and healthcare professionals, to effectively mitigate these ethical risks. This is not only a technical challenge but also a societal imperative to ensure that AI technologies serve the broadest public interest.

We acknowledge the limitations inherent in this study. While these readability metrics are widely used, they may not completely capture the nuances of readability [26]. The complexities of patient understanding, particularly for individuals who may need additional support beyond simplified language, may be overlooked. A broader evaluation that considers these nuances in future studies would enhance relevance to diverse patient populations. The current study also lacks a human control population to evaluate the comprehensibility of the responses for the target group. Future studies could benefit from incorporating a diverse human control group, enabling a direct and more authentic assessment of how well the target audience comprehends the responses, thereby enhancing the external validity and applicability of the findings. Moreover, literacy skills vary widely across populations, countries, and regions. It is therefore important to question the suitability of an eighth-grade readability level as a universal standard for content complexity. Future research should explore personalized approaches for patient education that consider the specific literacy levels and learning preferences of individuals. This could include developing adaptive content that adjusts its complexity based on the user’s literacy profile or incorporating interactive features to engage learners with diverse cognitive abilities.

5. Conclusion

This study demonstrated that ChatGPT can effectively enhance the readability of educational materials for patients with glomerular diseases, though it also highlights the complex trade-off between simplifying content and maintaining medical accuracy. To integrate these findings into clinical practice and patient education, we recommend conducting pilot studies in clinical settings to evaluate patient comprehension, incorporating direct feedback from diverse patient groups to tailor content, expanding research to larger and more diverse datasets to improve AI performance and reduce biases, establishing strict ethical guidelines to oversee AI use in healthcare, and exploring integration with existing health informatics systems to deliver personalized educational content directly to patients. These steps will ensure the effective and ethical application of AI tools like ChatGPT in enhancing patient education and empowering patients to make informed health decisions.

Ethics Statement

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

The authors received no specific funding for this work.

Acknowledgments

The authors have nothing to report.

Supporting Information

Supporting Table. Explanations of glomerular disease terms from ChatGPT.

Open Research

Data Availability Statement

All data presented in the study are included in the article/supporting information. Further inquiries can be directed to the corresponding author.