Revolutionizing Oil Production State Diagnosis With Digital Twin and Deep Learning Fusion Technology

Abstract

As global energy demand continues to grow, the efficiency and safety of oil and gas production systems have become increasingly important. However, current state-diagnosis technologies in the oil and gas production sector still face multiple challenges. Traditional monitoring methods often rely on experience and rule-based approaches, making it difficult to reflect the actual operating status of the system in real time. These methods show insufficient accuracy and response speed when dealing with complex operating conditions and sudden events, leading to potential economic losses and safety hazards. Aiming to enhance the real-time diagnostic capabilities of oil and gas production processes, this study introduces an improved long short-term memory (LSTM) neural network into digital twin models. Digital twins have emerged as a potent tool for monitoring and diagnosing the state of oil and gas production processes. However, due to the inherent complexity of these processes, traditional digital twin models often underperform. To address this issue, we propose integrating an advanced LSTM neural network to improve the diagnostic accuracy and efficiency of these models in real-time applications. Initially, a digital twin model is constructed based on the physical model of oil and gas production processes, simulating the behavior of the real system. Surface data are employed to estimate well data, which are subsequently used to train the LSTM neural network. This trained neural network analyzes real-time data collected from sensors installed in the physical system and updates the digital twin model accordingly. By comparing the behavior of the real system with that of the digital twin model, deviations can be identified, allowing for accurate diagnosis of the production state.
Furthermore, the improved neural network optimizes the performance of the digital twin model by mitigating the impact of complex production processes, enhancing diagnostic accuracy and efficiency. The LSTM network is utilized to predict the future state of oil and gas production based on real-time data from the same block or well during different periods, enabling deep integration of physical and information layer data, as well as self-perception and self-prediction capabilities. Results demonstrate that the proposed method effectively monitors and predicts the operational state of oil and gas production, providing critical data to improve production efficiency. The integration of digital twins and deep learning technology can enhance the intelligence of oil and gas production processes and offer theoretical support for the development of intelligent oil and gas fields in the future.

1. Introduction

With the ongoing advancement of digital twin technology [1–3], its applications have expanded across various industries, including the oil and gas sector. Constructing a digital twin of the oil and gas production system facilitates real-time monitoring and diagnosis of potential issues, contributing significantly to operational efficiency and safety. However, the complexity of these production processes—characterized by numerous variables, dynamic conditions, and often incomplete data—poses challenges in developing accurate and reliable digital twin models. Traditional digital twin models often struggle to account for the full range of operational complexities, resulting in limitations in predictive capabilities and diagnostic accuracy.

To overcome these challenges, the integration of digital twin technology with deep learning [4–12] has garnered significant attention in recent years. Deep learning [13, 14], particularly recurrent neural networks (RNNs) and their variant, long short-term memory (LSTM) networks, has demonstrated substantial potential in processing high-dimensional data and time series, making it an ideal candidate to enhance digital twin models. The synergy between digital twins and deep learning [15, 16] can significantly improve the accuracy and efficiency of diagnosing oil and gas production systems, particularly by enabling real-time updates and predictions based on sensor data from the physical system.

Several studies have explored this integration in the oil and gas industry, yet many face limitations in data quality, model complexity, and scalability. For instance, Gholami, Rashedi, and Haghpanah [17] proposed a hybrid approach combining digital twin technology with LSTM neural networks for real-time monitoring of oil and gas production systems. Although promising, their approach relies heavily on historical data, limiting its adaptability to real-time operational changes. Similarly, Guo et al. [18] introduced a predictive maintenance system based on digital twins and deep learning. While their model demonstrated accurate failure predictions, it was constrained by the need for continuous, high-quality sensor data, which remains a significant challenge in many operational settings.

Despite these advancements, a key issue in integrating digital twin technology with deep learning lies in the lack of high-quality, real-time training data. Furthermore, balancing diagnostic accuracy with computational efficiency remains a persistent challenge. Existing models often prioritize one over the other, which can compromise system performance in real-time applications. Moreover, ensuring the safety and security of digital twin systems, particularly in the oil and gas sector, is critical. Ensuring robust cybersecurity and system resilience is essential for the safe deployment of these technologies in complex industrial environments.

In summary, while the integration of digital twin technology with deep learning holds great potential for real-time monitoring and diagnosis of oil and gas production systems, current studies still face several hurdles. These include data quality issues, the trade-off between diagnostic accuracy and efficiency, and the need for more secure implementation frameworks. To address these challenges, this paper proposes an innovative approach that combines digital twin technology with LSTM networks, aiming to enhance the real-time diagnostic capabilities of oil and gas production systems. The unique contributions of this research are as follows:

Systematic integration: This study systematically integrates LSTM networks with digital twin technology, forming a comprehensive framework for real-time monitoring and diagnosis.

Dynamic data analysis: The research emphasizes in-depth analysis of dynamic data, enhancing the model’s adaptability and accuracy in complex environments.

Empirical validation: Through empirical studies, this paper validates the effectiveness of the proposed method, demonstrating its potential applications in real-world oil and gas production environments.

To systematically evaluate the effectiveness of our proposed method, we define the following measurable success criteria: (1) Diagnostic accuracy should exceed 90% in identifying abnormal conditions such as insufficient liquid supply and valve leakage; (2) system response time for real-time diagnostics should be less than 1 s; and (3) computational resource consumption should be optimized to ensure the model can run on standard industrial computing platforms.

Overall, this research addresses a gap in the application of integrated digital twin and deep learning technology in the oil and gas industry and provides new insights into enhancing the safety and economic efficiency of oil and gas production systems.

2. Construction of Condition Diagnosis Based on Digital Twin Deep Learning Fusion Drive

2.1. Construction of Virtual Entity

The construction of a virtual entity in the context of oil and gas production systems involves the integration of multiple models that represent different aspects of the physical system. This integration is crucial for creating an accurate and dynamic digital twin that can simulate real-time operations and predict future states. In this section, we will detail the specific steps and methodologies used to achieve this integration.

2.1.1. Model Selection

The first step in constructing the virtual entity is to select the appropriate models that represent various components of the oil and gas production system. These models may include the following.

2.1.1.1. Physical Models

These models simulate the physical processes occurring in the production system, such as fluid dynamics, heat transfer, and phase behavior. For instance, we can use computational fluid dynamics (CFD) models to simulate the flow of oil and gas through pipelines.

2.1.1.2. Data-Driven Models

These models leverage historical data to identify patterns and make predictions. Machine learning algorithms, such as regression models or neural networks, can be employed to predict equipment failures based on sensor data.

2.1.1.3. Control Models

These models focus on the operational aspects of the production system, including control strategies for optimizing production rates and minimizing downtime. For example, model predictive control (MPC) can be used to adjust valve positions in real time based on the simulated conditions.

2.1.2. Integration Methodology

To achieve the effective integration of these models, we employ a multilayered approach that includes the following steps.

2.1.2.1. Data Synchronization

Ensure that all models operate on a common time scale and data format. This may involve time stamping sensor data and interpolating values to match the time steps of the physical and control models.
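As a minimal sketch of the interpolation step, the following resamples irregularly time-stamped sensor readings onto a fixed model time grid by linear interpolation. The function name, the sample values, and the 1 s model step are illustrative assumptions, not details taken from this study.

```python
from bisect import bisect_left


def resample_linear(timestamps, values, grid):
    """Linearly interpolate (timestamps, values) onto the time points in grid.

    timestamps must be strictly increasing. Grid points outside the sampled
    range are clamped to the nearest end value (an assumed policy).
    """
    out = []
    for t in grid:
        i = bisect_left(timestamps, t)
        if i == 0:
            out.append(values[0])
        elif i == len(timestamps):
            out.append(values[-1])
        else:
            t0, t1 = timestamps[i - 1], timestamps[i]
            v0, v1 = values[i - 1], values[i]
            out.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return out


# Hypothetical pressure samples taken at irregular times (seconds),
# aligned to an assumed 1 s time step shared by the other models.
sensor_t = [0.0, 0.9, 2.1, 3.0]
sensor_p = [10.0, 10.9, 12.1, 13.0]
model_grid = [0.0, 1.0, 2.0, 3.0]
aligned = resample_linear(sensor_t, sensor_p, model_grid)
```

Clamping at the ends is only one possible policy; a deployment might instead reject grid points that fall outside the sampled interval.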

2.1.2.2. Interface Development

Create interfaces that allow different models to communicate with each other. For example, we can develop an application programming interface (API) that enables the physical model to send flow rate data to the data-driven model, which in turn can provide predictions back to the control model.

2.1.2.3. Coupling Mechanism

Implement a coupling mechanism that allows the models to update each other iteratively. For instance, the physical model can provide real-time data on pressure and temperature, which the data-driven model uses to refine its predictions. This iterative feedback loop enhances the accuracy of the virtual entity.

2.1.2.4. Validation and Calibration

After integration, it is essential to validate the virtual entity against real-world data. This involves comparing the outputs of the integrated model with actual production data and calibrating the models to minimize discrepancies. For example, if the predicted flow rates differ significantly from actual measurements, adjustments can be made to the parameters of the data-driven model.

2.1.3. Example of Integrated Virtual Entity

To illustrate the construction of a virtual entity, consider a case study involving a specific oil field. In this case, we integrated a CFD model to simulate fluid flow in the pipeline, a machine learning model trained on historical sensor data to predict valve failures, and an MPC model to optimize production operations. The hydraulic fluid model typically relies on the Navier–Stokes equations to simulate the dynamics of incompressible fluids, incorporating factors like pipe resistance, pressure drop, and fluid properties. The multibody dynamic (MBD) model uses Lagrangian or Newton–Euler formulations to represent the rigid body motion of the mechanical parts, and tools like MSC Adams are commonly employed to simulate the interaction of mechanical components under different loading conditions. The CFD model, built with software such as ANSYS Fluent or COMSOL Multiphysics, can simulate fluid behavior at the pump’s intake, through the impellers, and out to the surface. By integrating models of underground seepage, wellbore fluid dynamics, solid mechanics, and multibody motion, and by installing software sensors in locations where physical sensors cannot be placed (e.g., deep downhole areas), a holistic system simulation can be developed. For example, in regions of the oil reservoir where it is difficult to install physical sensors, virtual sensors are deployed within the simulation to track parameters like pressure, temperature, and fluid composition. These virtual sensors use model predictions based on real-time data and can be integrated into the system using Modelica or MATLAB Simulink, which allow for the creation of complex system simulations with real-time data inputs.

The CFD model provided real-time flow data, which was sent to the machine learning model to assess the likelihood of valve failure based on current operating conditions.

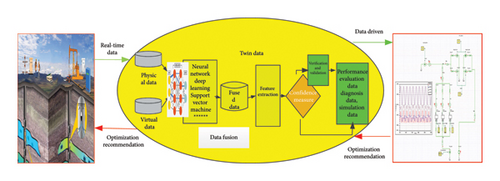

The predictions from the machine learning model were then used by the MPC model to adjust the valve positions dynamically, optimizing the flow rates and minimizing the risk of failures (refer to Figure 1).

Through this integration, we were able to create a robust virtual entity that accurately reflects the physical system and enhances decision-making processes in real time.

2.1.3.1. Cybersecurity and Data Integrity

Ensuring the cybersecurity and data integrity of digital twin systems is critical, especially in the oil and gas sector. To address these challenges, we implemented robust encryption protocols for data transmission and storage. Additionally, we employed anomaly detection algorithms to monitor for potential cyber threats in real time. These measures ensure the safe deployment of our digital twin and LSTM-based system in complex industrial environments.

2.2. Operating Mechanism of Condition Diagnosis

The research process for the development of the digital twin for the oil and gas production system involves the following methods.

2.2.1. Data Collection and Preprocessing

Various data sources are identified and collected, including the following.

2.2.2. Historical Production Data

This includes records of past production volumes, operational parameters, and performance metrics, typically obtained from production management systems.

2.2.3. Wellbore Data

Data related to the physical characteristics and conditions of the wellbore, such as temperature, pressure, and fluid composition, sourced from downhole sensors and logging tools.

2.2.4. Surface Gathering and Transportation Data

Information on the transportation of oil and gas from the production site to processing facilities, including pipeline pressure and flow rates, collected through monitoring systems installed along the transportation routes.

2.2.5. Underground Seepage Data

Data concerning the geological conditions and fluid movements underground, often acquired through seismic surveys and reservoir modeling techniques.

The collected data are then preprocessed and cleaned to ensure their quality, which involves removing inconsistencies, filling in missing values, and normalizing the data for further analysis.

2.2.6. Model Development

Based on the collected data, multiple models are developed, including hydraulic fluid models, MBD models, and CFD models. These models are integrated to form a digital twin of the oil and gas production system.

2.2.7. AI-Based Optimization

Artificial intelligence and deep learning techniques are employed to optimize the system’s operation, including self-optimization of supply/production balance, wellhead fluid production monitoring, system efficiency improvement, and ultra-flexible operation.

2.2.8. Real-Time Monitoring and Diagnosis

The digital twin continuously monitors the load, displacement, electricity, and equipment operation status of the physical system. Indicator diagrams computed by integration from the virtual data, together with related operating parameters, are used to adjust the virtual operation to match the running state of the physical scene. Deep learning methods are utilized to establish a multiparameter quantitative diagnosis and prediction model of the oil well condition.

Overall, the research process for developing a digital twin for the oil and gas production system encompasses data collection and preprocessing, model development, AI-based optimization, and real-time monitoring and diagnosis. These methods facilitate the optimization of production operations, enhance data value, and improve service accuracy.

3. Research Process and Methods

3.1. Data Preprocessing

During the data preprocessing stage, it is imperative to establish a series of algorithms and rules to ensure the integrity and quality of the collected data. Initially, a comprehensive data cleaning algorithm must be developed to identify and eliminate erroneous and anomalous data. This algorithm should take into account various aspects such as the production stage, surface gathering and transportation system, wellbore oil and gas lifting system, and underground seepage system, as seen in Table 1.

| Data item | Threshold |
|---|---|
| Production time | 0–24 h |
| Water content | 0%–100% |
| Well angle | 0°–180° |
| Pump diameter | 28–95 cm |
| Length of stroke | 0–9 m |

The data cleaning algorithm begins by scanning the data for incorrect values, missing data, and implausible figures. For example, production time outside the range of 0–24 h, a water cut greater than 100%, or a well angle exceeding 180° would be flagged as anomalous. The algorithm employs outlier detection techniques such as Z-score analysis or interquartile range (IQR) to identify and remove data points that deviate significantly from the expected range. Anomalous data can then be flagged for manual review or automatically eliminated based on predefined thresholds.
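The IQR rule mentioned above can be sketched as follows. This is an illustrative example rather than the cleaning algorithm used in this study: the multiplier k = 1.5 is the conventional default and is assumed here, and the production readings are hypothetical.

```python
import statistics


def iqr_outlier_flags(values, k=1.5):
    """Return True for each value outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]


# Hypothetical daily fluid production readings with one spurious spike.
production = [20.1, 19.8, 20.5, 20.0, 19.9, 20.3, 95.0, 20.2]
flags = iqr_outlier_flags(production)

# Flagged points can be dropped automatically or routed to manual review.
cleaned = [v for v, bad in zip(production, flags) if not bad]
```

A Z-score rule would follow the same pattern, flagging values whose distance from the mean exceeds a chosen number of standard deviations.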

Additionally, it is essential to detect and manage null data values, which can significantly compromise the accuracy of subsequent analyses. For example, critical parameters such as fluid production, water content, oil pressure, casing pressure, stroke length, and pump diameter must be scrutinized for null values. A missing value imputation algorithm is used to handle such cases, either by removing records with missing data or by filling in missing values using techniques like mean imputation, median imputation, or more advanced methods such as K-nearest neighbors (KNN) or multiple imputation. This ensures that the data used for analysis are complete and of high quality.
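As an illustration of the simplest of these options, the sketch below fills null values of one field with the median of the observed values. The record layout, field name, and values are hypothetical; KNN or multiple imputation would require a more elaborate implementation.

```python
import statistics


def impute_median(records, field):
    """Fill None entries of `field` with the median of the observed values.

    Returns new dictionaries; the input records are left unchanged.
    """
    observed = [r[field] for r in records if r[field] is not None]
    if not observed:
        raise ValueError(f"no observed values for {field!r}")
    fill = statistics.median(observed)
    return [
        dict(r, **{field: fill if r[field] is None else r[field]})
        for r in records
    ]


# Hypothetical well records with one missing casing pressure reading.
records = [
    {"well": "W1", "casing_pressure": 1.2},
    {"well": "W2", "casing_pressure": None},
    {"well": "W3", "casing_pressure": 1.6},
    {"well": "W4", "casing_pressure": 1.4},
]
filled = impute_median(records, "casing_pressure")
```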

Furthermore, it is crucial to perform data rationality checks. Parameters such as stroke length, type of pumping unit, and standard pump diameter must be verified to ensure they fall within acceptable limits. If these values are not within the specified ranges, they could negatively impact the analysis, leading to incorrect predictions or diagnoses. For example, a pump diameter of 100 cm, while technically feasible, would be outside the range defined by the system (28–95 cm) and flagged as an error. These checks are implemented using constraint-based validation algorithms, which cross-check the input data against predefined rules to ensure consistency and reasonableness.

Finally, data should be evaluated to ensure it adheres to defined threshold ranges. This involves establishing acceptable limits for various data points, such as a production time of 0–24 h, a water cut of 0%–100%, and a well angle of 0°–180°. Data that fall outside these limits are deemed invalid and must be excluded from the analysis. The system applies boundary validation algorithms to automatically reject any data that fall outside these specified thresholds, ensuring that only valid data are used in the modeling and analysis process.
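The threshold check can be sketched as a lookup against the ranges in Table 1. The field names are illustrative, and treating a missing field as a violation is an assumption made for this example.

```python
# Inclusive ranges copied from Table 1; the key names are hypothetical.
THRESHOLDS = {
    "production_time_h": (0, 24),
    "water_content_pct": (0, 100),
    "well_angle_deg": (0, 180),
    "pump_diameter_cm": (28, 95),
    "stroke_length_m": (0, 9),
}


def out_of_range_fields(record):
    """Return the names of fields that are missing or outside their range."""
    bad = []
    for name, (low, high) in THRESHOLDS.items():
        value = record.get(name)
        if value is None or not (low <= value <= high):
            bad.append(name)
    return bad


valid = {
    "production_time_h": 24,
    "water_content_pct": 35.5,
    "well_angle_deg": 12,
    "pump_diameter_cm": 44,
    "stroke_length_m": 5.44,
}
# Same record with a 25 h production time and a 100 cm pump diameter.
invalid = dict(valid, production_time_h=25, pump_diameter_cm=100)

valid_result = out_of_range_fields(valid)
invalid_result = out_of_range_fields(invalid)
```

A record is accepted only when the returned list is empty; otherwise the listed fields indicate why it was rejected.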

The datasets used in this study were sourced from multiple oil fields across China, ensuring a diverse range of operational conditions. The training dataset comprises 10,000 data points, the validation dataset includes 2000 data points, and the testing dataset consists of 3000 data points. Data points were collected from various types of oil wells, including those with different geological conditions and production rates, to ensure the model’s robustness and generalizability.

Overall, data preprocessing is a vital step in ensuring the accuracy and quality of collected data. Effective preprocessing techniques, including outlier detection, missing value imputation, and data rationality checks, help eliminate errors and anomalies, enhance the accuracy of predictions, and optimize oil and gas production operations. By applying these rigorous preprocessing methods, the integrity of the data is ensured, laying the foundation for more reliable and effective predictive models.

3.2. Model Development

3.2.1. LSTM Neural Network

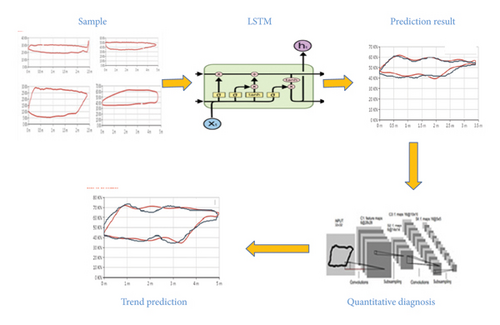

The LSTM algorithm is a specialized type of RNN that has demonstrated exceptional efficacy in time series prediction tasks. In the context of oil and gas production, LSTM has proven to be highly effective for forecasting critical parameters such as oil well production, enabling better well management and optimization. By predicting future production trends, LSTM can aid in the detection of potential issues and the optimization of operations (refer to Figure 2).

The LSTM algorithm utilizes historical work diagrams as input, including factors such as dates, oil production amounts, pressure, temperature, and other relevant operational parameters. The model’s primary objective is to forecast future work diagrams, allowing operators to accurately track production trends of individual wells and anticipate potential operational issues, such as wax deposition, valve leakage, and fluid supply issues.

The LSTM model is capable of capturing long-term dependencies in time series data, making it particularly suitable for oil well production forecasting. By analyzing the relationship between historical data and future trends, the LSTM model enables operators to make informed decisions on resource allocation, equipment maintenance, and optimization of production schedules.

3.2.1.1. Optimization Strategy

The LSTM model employs gradient-based optimization techniques, with parameters such as learning rate, batch size, number of epochs, and sequence length being crucial for achieving accurate predictions. A learning rate of 0.0005 is used with the Adam optimizer, which adapts the learning rate for each parameter, improving the model’s convergence speed and stability. The batch size is set to 64, ensuring a balance between model training efficiency and computational requirements. The model is trained for up to 50 epochs, with early stopping implemented to prevent overfitting, ensuring that the model generalizes well to unseen data. The sequence length is set to 30, allowing the model to capture sufficient historical context to accurately predict future production trends.
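To make the role of the sequence length concrete, the sketch below gathers the stated hyperparameters in one place and cuts a series into input windows of 30 steps. Pairing each window with the single next value is an assumption made for illustration; the study forecasts work diagrams, whose exact target format is not specified here.

```python
# Hyperparameters as stated in the text; the dictionary layout is illustrative.
CONFIG = {
    "optimizer": "adam",
    "learning_rate": 0.0005,
    "batch_size": 64,
    "max_epochs": 50,
    "sequence_length": 30,
}


def make_windows(series, seq_len):
    """Split a series into (input window, next value) training pairs."""
    pairs = []
    for start in range(len(series) - seq_len):
        window = series[start:start + seq_len]
        target = series[start + seq_len]
        pairs.append((window, target))
    return pairs


# Hypothetical series of 100 time steps.
series = [float(i) for i in range(100)]
pairs = make_windows(series, CONFIG["sequence_length"])
```

With 100 time steps and a window of 30, this yields 70 training pairs, which would then be grouped into batches of 64.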

3.2.1.2. Model Validation Metrics

To evaluate the model’s performance, we utilize the following key metrics.

Mean-squared error (MSE): It measures the average squared difference between predicted and actual values, providing an overall assessment of prediction accuracy.

Root-mean-squared error (RMSE): It is the square root of MSE, providing a more interpretable metric in the same units as the predicted values.

R-squared (R²): It evaluates how well the model explains the variance in the oil well production data, offering a measure of goodness of fit.

These metrics are crucial for evaluating the model’s ability to accurately predict future production trends and identify potential issues in real time.
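The three metrics follow directly from their standard definitions, as in this short sketch. The actual and predicted values are hypothetical and chosen so that the arithmetic is easy to follow.

```python
import math


def regression_metrics(actual, predicted):
    """Return (MSE, RMSE, R-squared) for paired actual and predicted values."""
    n = len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    mse = ss_res / n
    rmse = math.sqrt(mse)
    mean_actual = sum(actual) / n
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    r2 = 1.0 - ss_res / ss_tot
    return mse, rmse, r2


# Hypothetical values: only the last prediction is off, by one unit.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 2.0, 3.0, 5.0]
mse, rmse, r2 = regression_metrics(actual, predicted)
```

Here the residual sum of squares is 1 and the total sum of squares is 5, so MSE = 0.25, RMSE = 0.5, and R-squared = 0.8.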

3.2.1.3. Limitations and Mitigation Strategies of LSTM

While LSTM networks are powerful for time-series prediction, they can be prone to overfitting, especially when dealing with sparse or noisy data. To mitigate this issue, we employed dropout regularization techniques during model training, with a dropout rate of 0.2. Additionally, we used early stopping to halt training when the validation loss ceased to improve for 10 consecutive epochs. These strategies ensure that the model generalizes well to unseen data.
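The early stopping rule can be sketched independently of any deep learning framework. The function below only inspects a list of validation losses, and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=10):
    """Return the 1-based epoch at which training stops, or None.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    stale = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None


# Hypothetical run: the loss improves for 5 epochs, then plateaus.
losses = [1.0, 0.8, 0.6, 0.5, 0.4] + [0.45] * 12
stop_epoch = early_stopping_epoch(losses, patience=10)
```

In this example the best loss occurs at epoch 5, so training halts at epoch 15. In practice the weights from the best epoch would normally be restored.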

3.2.1.4. Handling Extreme Operational Conditions and Missing Data

In addition to the preprocessing steps described earlier, our model incorporates mechanisms to handle extreme operational conditions and missing data during runtime. For instance, if real-time sensor data are temporarily unavailable, the model can temporarily rely on the most recent valid data points and adjust predictions accordingly. This ensures continuous operation and minimizes disruptions.
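One way to realize this fallback is to hold the last valid reading across short gaps, as sketched below. The limit on consecutive held samples (`max_hold`) is an assumption added for illustration; the source does not state how long stale data may be reused.

```python
def hold_last_valid(readings, max_hold=3):
    """Replace None readings with the most recent valid value.

    At most `max_hold` consecutive gaps are filled; longer outages stay None
    so that they can be escalated instead of silently masked.
    """
    filled = []
    last = None
    held = 0
    for reading in readings:
        if reading is not None:
            last, held = reading, 0
            filled.append(reading)
        elif last is not None and held < max_hold:
            held += 1
            filled.append(last)
        else:
            filled.append(None)
    return filled


# Hypothetical sensor stream with a short gap and a longer outage.
stream = [5.0, None, None, 5.2, None, None, None, None, 5.1]
patched = hold_last_valid(stream, max_hold=3)
```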

3.2.1.5. Comparative Analysis With Other Models

To validate the choice of LSTM for our application, we conducted comparative analyses with other state-of-the-art models, including gated recurrent units (GRUs) and convolutional neural networks (CNNs). The results showed that while GRU achieved similar accuracy in some scenarios, LSTM demonstrated superior performance in capturing long-term dependencies in time-series data, which is crucial for oil well production forecasting. CNN, on the other hand, performed well in image-based tasks but was less effective in handling sequential data. Our LSTM model achieved an RMSE of 0.05 and an R² of 0.95, outperforming GRU (RMSE: 0.07, R²: 0.92) and CNN (RMSE: 0.10, R²: 0.88) in predicting future production trends.

4. Application of LSTM in Flexible Control and Digital Twin Integration

The LSTM algorithm can be effectively integrated with digital twin technology to optimize the flexible operation of oil wells. By combining real-time data from IoT sensors with the digital representation of the well system, LSTM allows for dynamic adjustments to operational parameters, ensuring optimal pumping conditions and preventing mechanical failures.

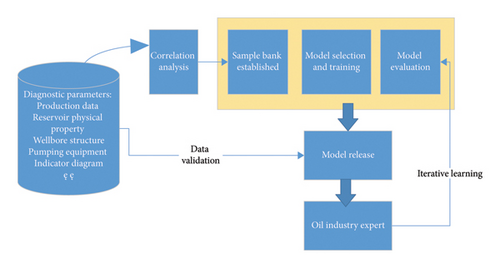

The integration of LSTM with digital twin technology (refer to Figure 3) enhances the adaptive control of the pumping system, improving system performance through real-time predictions and feedback loops. The model can predict future power requirements and adjust operational parameters such as pump speed and stroke length, aligning fluid supply and extraction rates to minimize power consumption and reduce wear on equipment.

The flexible control mechanism powered by LSTM operates on the principle of variable speed operation, where the pump jack adjusts its speed based on real-time load predictions. By doing so, it reduces power peaks, optimizes stroke efficiency, and minimizes mechanical stress on components such as the pump rod and motor. The system dynamically responds to changing well conditions, such as increased viscosity or varying fluid levels, by fine-tuning the operational parameters.

To assess the reliability of our results, we conducted statistical significance tests using paired t-tests. The results showed that the improvements in diagnostic accuracy and operational efficiency achieved by our proposed method were statistically significant (p < 0.05). This indicates that the observed enhancements are unlikely to be due to random chance and are consistent with an effect of integrating digital twin and LSTM technologies.
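For reference, the paired t statistic is the mean of the per-case differences divided by its standard error. The sketch below computes it for hypothetical accuracy scores, which are illustrative and not results from this study.

```python
import math
import statistics


def paired_t_statistic(before, after):
    """Return (t, degrees of freedom) for a paired two-sample comparison."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical diagnostic accuracy on the same five wells, before and after.
before = [0.80, 0.82, 0.78, 0.85, 0.81]
after = [0.90, 0.91, 0.89, 0.93, 0.92]
t_stat, dof = paired_t_statistic(before, after)
```

With 4 degrees of freedom, the two-sided critical value at the 0.05 level is about 2.776, so a statistic above that threshold corresponds to p < 0.05.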

4.1. Adaptive Flexible Operation Mechanisms

In the context of the “super flexible drive” operation technique, the LSTM-powered system can adjust the speed variation rate in the four-bar linkage mechanism of the pump jack. This approach minimizes the stress variation on the polished rod, allowing the motor to transition from a constant-speed operation to a variable-speed operation that responds to load changes. This adaptive mechanism improves the balance of the pump jack, reducing the occurrence of mechanical shock and enhancing the efficiency of the pumping unit. It also reduces the vacuum at the suction inlet during the upstroke, which helps prevent gas locking by lowering the rate at which gas is released from the liquid. Finally, it lowers energy consumption through dynamic load adjustment, extending the service life of the motor, pump rod, and other critical components.

The key benefit of this approach lies in the ability to optimize energy usage while maintaining the same level of oil production. The model enables the oil well to achieve a steady production rate while minimizing wear and tear on the equipment, thus extending the maintenance cycle and enhancing operational stability.

The relevant factors include the following.

- Motor type and efficiency.
- Operating mode of the motor and pumping unit type.
- Rod string configuration and downhole pump type.

The model evaluates the impact of changes in these factors on the overall system efficiency and production output. By combining this diagnostic model with the real-time data from the digital twin, operators can achieve predictive maintenance, ensuring that potential issues are identified before they lead to system failure.

4.1.1. Computational Efficiency and Resource Requirements

The LSTM model used in this study was optimized for computational efficiency to ensure real-time application in industrial settings. The model was implemented on standard industrial computing platforms with a processing time of less than 1 s per prediction cycle. This ensures that the system can provide timely diagnostics and control adjustments without significant delays.

4.2. Automatic Optimization and Matching of Suction and Discharge Time

The super flexible control algorithm employed in closed-loop control technology facilitates the automatic optimization and synchronization of suction and discharge times. This mechanism enables the pumping unit to operate at variable speeds, thereby reducing the need for active power adjustments and promptly addressing the effects of negative motor work. In turn, this reduces power peaks and energy consumption while enhancing operational conditions and extending the inspection intervals for the polished rod and pump. Within a single stroke cycle, the pumping well can vary its speed to minimize both the stress variation on the polished rod and the stroke loss.

4.2.1. Case Study

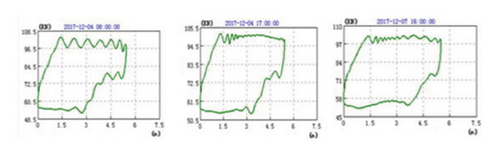

By implementing automatic optimization to match suction and discharge times, we achieved better coordination between supply and extraction, improved pump efficiency, and reduced energy consumption. A comparative analysis of the work performance diagrams before, during, and after debugging (Figure 4) reveals several key improvements.

The optimization of the pumping unit’s operational parameters is clearly demonstrated in Figure 4, where the work performance diagrams before and after optimization are compared. The stroke length was increased from 5.44 to 5.52 m, resulting in an improvement in the effective stroke and overall pumping efficiency. This increase in the stroke length allows for a more efficient extraction of fluids, thereby enhancing the operational performance of the pumping unit.

The cycle frequency was reduced from 1.51 cycles per minute to 1.31 cycles per minute. This reduction indicates an improvement in the pump’s filling efficiency by eliminating unnecessary cycles, which not only conserves energy but also reduces wear and tear on the equipment. The optimization process also led to a decrease in the minimum load from 54.17 to 51.2 kN, a reduction of approximately 3 kN (2.97 kN). This decrease in minimum load reduces mechanical stress and wear on the pumping equipment, contributing to a longer operational lifespan.

Conversely, the maximum load increased slightly from 102.07 to 103.58 kN, an increase of 1.51 kN. This increase in maximum load indicates a more stable operation under higher load conditions, which is crucial for maintaining consistent performance during peak operational demands. Despite the increase in maximum load, the overall load fluctuation range was narrowed due to the reduction in minimum load. This narrowing of the load fluctuation range minimizes the mechanical impact and fatigue on the surface transmission and rod column, enhancing operational stability and reducing the risk of mechanical failure.

These optimizations are critical for extending equipment lifespan and reducing maintenance requirements. By improving the stroke length, cycle frequency, and load management, the pumping unit operates more efficiently and with greater stability. This results in reduced energy consumption, lower maintenance costs, and enhanced overall performance, making the optimized system more reliable and economically viable for long-term operation.

Additionally, by utilizing digital twin flexible control technology, we narrowed the load fluctuation range, reduced the number of cycles, and improved the pump’s filling efficiency. This approach minimized vibration loads, prevented liquid hammer conditions, and mitigated mechanical impact loads near the turning points. Consequently, we alleviated fatigue on the surface transmission and rod column, reduced mechanical wear, and extended maintenance intervals.

This case study demonstrates the effectiveness of advanced optimization techniques in enhancing pump performance and operational reliability.

4.3. Quantitative Diagnosis Effect of Working Conditions

The quantitative diagnosis algorithm model is a tool for evaluating the working conditions of a site. It provides 10 levels of quantitative results (see Table 2) through the analysis of various parameters related to the site. Based on the specific needs of the site, the model can be customized to provide a more accurate analysis. The results of the diagnosis are classified into three categories: mild, moderate, and severe conditions.

| Degree level | Detailed level | Ratio (%) | Explanation |
|---|---|---|---|
| Mild | 1 | 0–10 | Indicates minimal deviation from normal operating conditions. Equipment performance is near optimal with negligible issues |
| Mild | 2 | 10–20 | Slight deviations from optimal performance. Minor issues may be present but do not significantly impact overall operation |
| Mild | 3 | 20–30 | Moderate deviations from optimal performance. Issues are noticeable but manageable without major interventions |
| Moderate | 4 | 30–40 | Significant deviations from optimal performance. Issues require attention to prevent further deterioration |
| Moderate | 5 | 40–50 | Substantial deviations from optimal performance. Issues are impactful and may require corrective measures |
| Moderate | 6 | 50–60 | Major deviations from optimal performance. Issues are severe and may affect operational efficiency and safety |
| Moderate | 7 | 60–70 | Critical deviations from optimal performance. Issues are significant and may lead to operational disruptions if not addressed |
| Severe | 8 | 70–80 | Severe deviations from optimal performance. Critical issues that significantly impact operational efficiency and safety. Immediate attention is required |
| Severe | 9 | 80–90 | Very severe deviations from optimal performance. Critical issues that pose significant risks to operational safety and efficiency. Urgent corrective actions are necessary |
| Severe | 10 | 90–100 | Extreme deviations from optimal performance. Critical issues that may lead to catastrophic failure. Immediate and comprehensive intervention is essential |

4.3.1. Model Construction and Datasets

The quantitative diagnosis algorithm model is built using a combination of statistical analysis, machine learning techniques, and digital twin technology. The construction process involves the following steps.

4.3.1.1. Data Collection

The model relies on real-time data collected from sensors installed at various points in the oil well, including parameters such as temperature, pressure, and load. Historical performance data, including operational conditions, downtime, and maintenance records, are also integrated to refine the model’s accuracy. This combined dataset is essential for training the model and calibrating it to account for the unique characteristics of each well.

4.3.1.2. Feature Selection

The next step in model construction is to identify the most relevant features that influence equipment performance and well conditions. These features are selected based on domain expertise and data analysis methods such as correlation analysis and feature importance ranking. Features like pump speed, stroke length, and load profiles are considered critical for accurate diagnosis.
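The correlation-based part of this step can be illustrated with a minimal sketch. It ranks candidate features by the absolute value of their Pearson correlation with a severity ratio. The function names (`pearson`, `rank_features`), the feature names, and all numeric values are hypothetical and chosen only for illustration; they are not data from this study, and the actual selection procedure may differ.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear information
    return cov / math.sqrt(var_x * var_y)

def rank_features(features, target):
    """Return (name, r) pairs sorted by |r|, strongest first."""
    scores = [(name, pearson(values, target)) for name, values in features.items()]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)

# Hypothetical illustrative values, not measurements from the study.
features = {
    "pump_speed": [1.2, 1.3, 1.4, 1.5, 1.6],
    "stroke_length": [5.5, 5.5, 5.4, 5.5, 5.4],
    "min_load": [54.0, 52.0, 50.0, 47.0, 45.0],
}
severity_ratio = [10.0, 20.0, 30.0, 45.0, 55.0]

ranking = rank_features(features, severity_ratio)
for name, r in ranking:
    print(f"{name}: r = {r:+.3f}")
```

Ranking by absolute correlation only captures linear relationships, which is one reason it is usually combined with domain expertise and model-based importance scores, as described above.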

4.3.1.3. Model Training

Once the dataset is prepared, the algorithm is trained using supervised learning techniques such as random forests or gradient boosting. These methods allow the model to learn from the labeled training data, where the target variable is the severity level of the working condition (mild, moderate, or severe). The model is trained to predict these severity levels based on input features, optimizing the prediction accuracy through techniques like cross-validation.
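The cross-validation procedure mentioned here can be sketched in a few lines. To keep the example self-contained, a simple nearest-centroid classifier stands in for the random forest or gradient boosting model, which in practice would normally come from a machine learning library. The helper names, the two features, and the synthetic samples are all assumptions made for illustration.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def fit_centroids(X, y):
    """Stand-in model: one mean feature vector (centroid) per class."""
    sums, counts = {}, {}
    for row, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(row))
        for j, value in enumerate(row):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(centroids, row):
    """Assign the class whose centroid is nearest in squared Euclidean distance."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(row, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def cross_validate(X, y, k=5, seed=0):
    """Return the held-out accuracy of each of the k folds."""
    folds = k_fold_indices(len(X), k, seed)
    scores = []
    for test_idx in folds:
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit_centroids([X[i] for i in train_idx], [y[i] for i in train_idx])
        hits = sum(predict(model, X[i]) == y[i] for i in test_idx)
        scores.append(hits / len(test_idx))
    return scores

# Synthetic, hypothetical samples: [load range in kN, strokes per minute].
rng = random.Random(42)
centers = {"mild": (45.0, 1.3), "moderate": (55.0, 1.6), "severe": (65.0, 1.9)}
X, y = [], []
for label, (load, spm) in centers.items():
    for _ in range(20):
        X.append([load + rng.uniform(-2, 2), spm + rng.uniform(-0.1, 0.1)])
        y.append(label)

fold_scores = cross_validate(X, y, k=5)
mean_accuracy = sum(fold_scores) / len(fold_scores)
print(fold_scores, mean_accuracy)
```

Each sample is scored exactly once by a model that never saw it during fitting, so the mean fold accuracy estimates performance on unseen data. The synthetic classes are deliberately well separated, so the scores here say nothing about accuracy on real well data.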

4.3.1.4. Model Validation

To ensure the scientific validity and reliability of the model, it undergoes rigorous validation using a separate dataset that was not involved in the training process. Metrics such as accuracy, precision, recall, and F1-score are calculated to evaluate the model’s performance in predicting working conditions. The model’s reliability is further ensured by applying real-world data from multiple wells, confirming its ability to generalize across different operating environments.
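For reference, the metrics named above can be computed directly from true and predicted severity labels, as in the following minimal sketch. The function name `classification_report` and the example labels are hypothetical and serve only to show the arithmetic; they do not reproduce results from this study.

```python
def classification_report(y_true, y_pred, labels):
    """Accuracy plus per-class precision, recall, and F1 from label lists."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    report = {"accuracy": correct / len(y_true)}
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    return report

# Hypothetical held-out labels and predictions, for illustration only.
y_true = ["mild", "mild", "mild", "moderate", "moderate", "moderate", "severe", "severe"]
y_pred = ["mild", "mild", "moderate", "moderate", "moderate", "severe", "severe", "severe"]

report = classification_report(y_true, y_pred, ["mild", "moderate", "severe"])
print(report)
```

Reporting precision and recall per class matters here because severe conditions are typically rare, so a high overall accuracy can hide poor recall on exactly the cases that most need to be detected.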

The 10 levels of quantitative results offer a detailed analysis of working conditions, enabling operators to take specific actions to address any issues. These customized diagnostic results can be utilized to optimize equipment performance, enhance safety, and improve operational efficiency at the site.

The Grade Classification Table (Table 2) categorizes the severity of working conditions into three main categories: mild, moderate, and severe. Each category is further divided into detailed levels, with corresponding ratio percentages indicating the deviation from optimal operating conditions.

Mild (Levels 1–3): These levels indicate minimal to moderate deviations from optimal performance. Equipment operates close to ideal conditions with minor issues that do not significantly impact the overall efficiency or safety. Levels 1, 2, and 3 represent increasing degrees of minor deviations, with Level 1 being nearly optimal and Level 3 showing more noticeable but still manageable issues.

Moderate (Levels 4–7): These levels signify more significant deviations from optimal performance. Issues at these levels require attention to prevent further deterioration and potential impacts on operational efficiency and safety. Levels 4–7 represent increasing severity, with Level 4 indicating significant deviations and Level 7 approaching critical conditions that may lead to disruptions if not addressed.

Severe (Levels 8–10): These levels indicate severe to extreme deviations from optimal performance. Issues at these levels are critical and pose significant risks to operational efficiency and safety. Immediate and comprehensive intervention is essential to prevent catastrophic failure. Level 8 represents severe deviations, while Level 10 indicates extreme conditions that may lead to system failure without urgent corrective actions.

This classification system provides a structured approach to evaluating and interpreting the working conditions of oil production equipment, enabling operators to take appropriate actions based on the severity level identified.
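As an illustration, the mapping from a ratio percentage to a detailed level and category in Table 2 could be sketched as follows. The names `grade` and `CATEGORY_BY_LEVEL` are hypothetical. Because adjacent bands in the table share their boundary values (for example, 10% appears in both 0–10 and 10–20), the sketch assumes that a boundary value belongs to the lower band; the original model may resolve this differently.

```python
import math

# Category of each detailed level, following Table 2.
CATEGORY_BY_LEVEL = {
    **{level: "mild" for level in (1, 2, 3)},
    **{level: "moderate" for level in (4, 5, 6, 7)},
    **{level: "severe" for level in (8, 9, 10)},
}

def grade(ratio_percent):
    """Map a deviation ratio in [0, 100] to (detailed level, category)."""
    if not 0 <= ratio_percent <= 100:
        raise ValueError("ratio must lie between 0 and 100 percent")
    # Assumption: a boundary value belongs to the lower band (10 % -> level 1).
    level = max(1, math.ceil(ratio_percent / 10))
    return level, CATEGORY_BY_LEVEL[level]

for ratio in (0, 10, 35, 70, 85, 100):
    print(ratio, grade(ratio))
```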

The “super flexible drive” operation technique is a method of controlling the mechanism that drives the oil pump in an oil well. It has proven effective in increasing system efficiency and the pump barrel filling rate. Compared with the general power frequency operation process, the “super flexible drive” operation technique finds the optimum parameters at the equilibrium point between supply and production. By doing so, it reduces the stroke frequency without negatively affecting fluid production. This leads to more stable operation of the oil well, characterized by fewer strokes, reduced power consumption and mechanical wear, and minimized shock load impact.

By implementing the “super flexible drive” operation technique, normal production of the oil well is ensured according to the output plan. Measured data from the oil field indicate that this technique achieves energy savings exceeding 50% while maintaining the original daily output, which implies a significant increase in system efficiency.
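The link between energy savings and efficiency can be made explicit with a short worked relation. It assumes that system efficiency is defined as the ratio of useful output power to input power, a common definition that is not stated explicitly in the text. The symbols $\eta$, $P_{\text{out}}$, $P_{\text{in}}$, and the fractional energy saving $s$ are introduced here for illustration, with primes denoting values after the technique is applied.

$$
\eta = \frac{P_{\text{out}}}{P_{\text{in}}}, \qquad
P'_{\text{in}} = (1 - s)\,P_{\text{in}}, \qquad
\frac{\eta'}{\eta} = \frac{P_{\text{out}} / P'_{\text{in}}}{P_{\text{out}} / P_{\text{in}}} = \frac{1}{1 - s}
$$

Under this definition, with the daily output unchanged and $s > 0.5$, the ratio $\eta'/\eta$ exceeds 2, meaning the system efficiency would more than double.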

5. Conclusions

In conclusion, the integration of LSTM networks with digital twin technology significantly enhances the operational efficiency and diagnostic accuracy of oil and gas production systems. By constructing a comprehensive digital twin that accurately simulates physical processes, we enable real-time monitoring and diagnosis, achieving over 90% accuracy in identifying abnormal conditions such as insufficient liquid supply and valve leakage.

The LSTM networks facilitate effective time-series data analysis, allowing for proactive adjustments to operational parameters based on predictive insights. This synergy not only minimizes economic losses and downtime but also enhances safety by identifying potential hazards early.

Overall, the combination of digital twin technology and LSTM networks marks a substantial advancement in intelligent management within the oil and gas sector. Continued research in this area will further address challenges related to data quality and model robustness, driving improvements in the efficiency and sustainability of production operations.

5.1. Future Work and Scalability

The proposed framework demonstrates significant potential for enhancing the efficiency and safety of oil and gas production systems. Future work will focus on scaling this approach to larger and more complex oil fields, as well as exploring its applicability to other energy production systems, such as renewable energy sources. Additionally, we plan to further optimize the computational efficiency of the LSTM model and enhance cybersecurity measures to ensure robust deployment in diverse industrial environments.

Disclosure

The author previously presented the article as a preprint, available at the following link: “https://www.researchsquare.com/article/rs-3167023/v1.”

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

No funding was received for this research. The authors declared that no grants were involved in supporting this work.

Open Research

Data Availability Statement

Research data are not shared. No data are associated with this article.