Designing a New Control Method to Improve the LFC Performance of the Multi-Area Power System Considering the Effect of Offshore Wind Farms on Frequency Control

Abstract

The presence of offshore wind farms (OWFs) reduces the inertia of the power system and jeopardizes its frequency stability. Applying virtual inertia control (VIC) to these farms improves the inertia and frequency stability of the power system. In this paper, a robust H∞ controller based on deep reinforcement learning (DRL) is designed to improve frequency stability in the load–frequency control (LFC) of the power system, considering the effect of OWFs on frequency control. The proposed method is robust to disturbances (load and wind fluctuations) and to uncertainties in system parameters, and it can adapt to these uncertainties. The robust H∞ controller is designed based on linear matrix inequalities (LMIs), and DRL then optimizes this controller to improve the overall performance of the system. To examine the performance of the proposed method (H∞–DRL), several scenarios are considered and the results are compared with DMPC and PID control methods. The results show the superiority of the proposed method, which mitigates the effects of load and wind fluctuations and reduces both the frequency deviations and the tie-line power deviations between the areas of the multi-area power system.

1. Introduction

Owing to their access to strong and steady winds, offshore wind farms (OWFs) are regarded as one of the most significant sources of clean, renewable energy [1–3]. OWFs offer several benefits, including better utilization of marine resources, greater and steadier energy production, and less noise pollution, because they are located far from land [4–6]. However, the presence of OWFs in the power system also raises challenges [7]. One of the most important is that these farms use induction generators instead of synchronous generators, so they cannot provide natural inertia [8]. This lack of inertia degrades the performance of load–frequency control (LFC) in the power system and increases both frequency fluctuations and tie-line power fluctuations [9]. By emulating the behavior of mechanical inertia, virtual inertia control (VIC) designed for these farms enhances the frequency stability of the power system [10].

In [11, 12], the concept of VIC for OWFs was proposed to improve the frequency stability of the power system alongside LFC. In [13], the power signal is combined with the maximum efficiency tracking signal so that the WTs actively take part in system frequency regulation. In [14–16], VIC for OWFs based on a proportional–derivative scheme (VIC and VDC) is proposed. The larger the VIC and VDC gains of the OWFs, the more kinetic energy is released from the rotor, which improves frequency stability [17]. However, suitable values of these gains depend on the rotor speed, and excessively large gains may drive the rotor speed out of its operating range and destabilize the system [18]. Therefore, VIC and VDC for OWFs must be considered together with the LFC of the power system. Several control methods have been proposed to improve LFC in power systems with OWFs; their advantages and disadvantages are summarized in Table 1.

| Methodologies employed | Main findings | Deficiency of the method used |
|---|---|---|
| Cascaded FOPD–FOPID controller based on DOSA [19] | Improves power system frequency stability under disturbances and system uncertainties | OWFs do not participate in power system frequency control; not robust to severe disturbances |
| Robust fuzzy controller [20] | Reduces frequency deviations and is robust to minor disturbances and moderate parameter uncertainties | Inadequate robustness to large disturbances and large uncertainties in power system parameters; OWFs do not participate in power system frequency control |
| FOPID–TID controller [21] | Improves power system frequency stability under disturbances and system uncertainties | OWFs do not participate in power system frequency control; not robust to severe disturbances |
| FMPC controller [22] | Reduces frequency deviations, improves power system stability, and withstands small uncertainties in power system parameters | Insufficient robustness to large disturbances and large uncertainties in system parameters; OWFs do not participate in power system frequency control |
| Fuzzy PID controller [23] | Reduces frequency deviations and is robust to minor disturbances and moderate parameter uncertainties | Inadequate robustness to large disturbances and large uncertainties in power system parameters; OWFs do not participate in power system frequency control |
| PIλ (1+PDF) controller [24] | Improves power system frequency stability under disturbances and system uncertainties | OWFs do not participate in power system frequency control; not robust to severe disturbances |
| 3DOF–PID controller [25] | Improves power system frequency stability under disturbances and system uncertainties | OWFs do not participate in power system frequency control; not robust to severe disturbances |
| SMC controller [26, 27] | Reduces frequency deviations, improves power system stability, and is robust to mild disturbances and mild uncertainties in system parameters | Chattering (high-frequency oscillations) under severe disturbances |
| MPC controller [28, 29] | Reduces frequency deviations, improves power system stability, and withstands small uncertainties in power system parameters | Insufficient robustness to large disturbances and large uncertainties in system parameters |
| DMPC controller [30] | Reduces frequency deviations, improves power system stability, and withstands small uncertainties in power system parameters | Insufficient robustness to large disturbances and large uncertainties in system parameters |

- DRL is a data-driven, adaptive method that reduces the H∞ robust controller's dependence on access to a complete system model and adapts itself to changing power system conditions.
- An FNN is used within the DRL structure; it is trained to satisfy the conditions of the H∞ robust controller while producing an optimal controller for LFC of the power system.
- DRL continuously improves the performance of the robust controller in the LFC of the power system.
- The H∞ controller is combined with DRL to improve LFC of the power system while considering the effect of OWFs on frequency control: instead of the numerical solution methods usually used to design robust controllers, DRL is used for the design.
- The H∞ design conditions are formulated as LMIs, which are then used to compute the reward in DRL.
- The robust controller is learned online in the presence of disturbances and uncertainty in power system parameters.
- A neural network is used to predict the H∞ controller.
- Multiobjective optimization: DRL allows the agent to optimize several objectives simultaneously (satisfying the conditions of the H∞ robust controller, optimizing H∞ performance, etc.).

The remainder of the paper is organized as follows. Section 2 presents the dynamic model of the power system considering the effect of OWFs on frequency control. Section 3 designs the proposed controller. Section 4 presents the simulation scenarios and their results, and Section 5 concludes the paper.

2. The Dynamic Model of Power System Considering the Effect of OWFs in Frequency Control

2.1. The Model of OWFs in Frequency Control

OWFs consist of many WTs that convert wind energy into mechanical and then electrical energy. Their frequency-control model includes the following stages.

2.1.1. Conversion of Wind Energy Into Mechanical Power

In Equation (1), Pm (mechanical power of WTG): the mechanical power extracted from the wind by the turbine blades; Cp (power coefficient): represents the efficiency of the wind energy conversion process; λ (tip-speed ratio): the ratio of the blade tip speed to the wind speed, which affects power efficiency; β: the angle of the turbine blades, which controls power extraction; ρ: the density of air; r: radius of the wind turbine blades; v: wind speed.
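
Equation (1) itself is not reproduced in this version of the text. As a minimal sketch, assuming it takes the standard aerodynamic form Pm = ½ρπr²v³Cp(λ, β) with λ = ωr/v, the relation can be evaluated as below. Cp is held constant at the Table A1 value (0.44) instead of being computed from λ and β, and the air density of 1.225 kg/m³, the 60 m blade radius and the 10 m/s wind speed are illustrative assumptions, not values from the paper.

```python
import math

RHO = 1.225  # assumed sea-level air density in kg/m^3 (not given in the paper)
CP = 0.44    # power coefficient from Table A1, treated as constant here


def mechanical_power(v, r, cp=CP, rho=RHO):
    """Assumed form of Eq. (1): Pm = 0.5 * rho * pi * r^2 * v^3 * Cp (watts for SI inputs)."""
    return 0.5 * rho * math.pi * r**2 * v**3 * cp


def tip_speed_ratio(omega_rad_s, r, v):
    """Tip-speed ratio: blade tip speed divided by wind speed, lambda = omega * r / v."""
    return omega_rad_s * r / v


# Illustrative values: a hypothetical 60 m blade in a 10 m/s wind.
print(f"Pm = {mechanical_power(10.0, 60.0) / 1e6:.2f} MW")
print(f"lambda = {tip_speed_ratio(1.35, 60.0, 10.0):.1f}")
```

These inputs give roughly 3.05 MW and λ = 8.1. Because Pm scales with v³, doubling the wind speed multiplies the extracted power by eight, which is why wind fluctuations translate directly into power fluctuations.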

2.1.2. Transferring Mechanical Power to the Generator

In Equation (2), ΔT = (Pm − Pe)/ω, F: friction coefficient of the transmission system, Pe: output of the generator of WTG, ω: rotor speed of WTG, and Hw: inertia coefficient of transmission system of WTG.
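
Equation (2) is also not reproduced here. The sketch below assumes the common single-mass drive-train form 2Hw·dω/dt = ΔT − Fω, with ΔT = (Pm − Pe)/ω taken as the torque difference described in the Nomenclature, and integrates it with forward Euler using Hw and F from Table A1. It illustrates the mechanism VIC relies on: when the generator output exceeds the mechanical input, the deficit is drawn from rotor kinetic energy and ω falls. The operating point in the usage line is hypothetical.

```python
HW, F = 5.19, 0.01  # WTG drive-train inertia and friction coefficients (Table A1)


def rotor_derivative(omega, pm, pe):
    """Assumed form of Eq. (2): 2*Hw*domega/dt = dT - F*omega, with dT = (Pm - Pe)/omega."""
    delta_t = (pm - pe) / omega
    return (delta_t - F * omega) / (2.0 * HW)


def simulate_rotor(omega0, pm, pe, t_end=10.0, dt=0.01):
    """Forward-Euler integration of the rotor speed for constant Pm and Pe (per unit)."""
    omega = omega0
    for _ in range(int(round(t_end / dt))):
        omega += dt * rotor_derivative(omega, pm, pe)
    return omega


# Hypothetical case: the generator delivers 0.1 pu more than the wind supplies for 10 s.
print(simulate_rotor(1.2, 0.8, 0.9))
```

Comparing this with the balanced case (Pe = Pm) shows a faster speed decline, which is the kinetic energy that VIC and VDC release and also the reason their gains must keep ω within its operating range.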

2.1.3. MPPT

In Equation (3), Kc: maximum power tracking coefficient of WTG, ωn: rated rotor speed, and Pω: the optimal power in the WTs.
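
As a minimal sketch, assuming Equation (3) is the common cubic MPPT law Pω = Kc·ω³ (per unit), the reference power follows directly from the rotor speed. The role of ωn in Equation (3) cannot be recovered from the text, so it is not modeled here; Kc is the Table A1 value.

```python
KC = 0.5787  # maximum power tracking coefficient from Table A1


def mppt_power(omega_pu):
    """Assumed MPPT reference P_omega = Kc * omega^3, all quantities in per unit."""
    return KC * omega_pu**3


for w in (0.8, 1.0, 1.2):
    print(f"omega = {w:.1f} pu -> P_omega = {mppt_power(w):.3f} pu")
```

With this Kc, the law gives about 1.0 pu at ω = 1.2 pu, and halving the rotor speed divides the reference power by eight.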

2.1.4. Dynamic Modeling of VIC and VDC in WTs

In Equation (4), ΔPf = −(KD·s + KP)Δf, where KD is the VIC gain, KP is the virtual damping gain, Te is the time constant of the WTG generator, and Pe is the electromagnetic power output of the OWFs.
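
The auxiliary command ΔPf = −(KD·s + KP)Δf can be sketched in discrete time as below, using a backward difference for the derivative and the KD0, KP0 and Te values of Table A1. How ΔPf enters the generator loop is an assumption in this sketch: it is added to the MPPT reference Pω, and the sum passes through a first-order lag Te·dPe/dt = (Pω + ΔPf) − Pe. The constant Pω = 0.75 pu and the frequency ramp are illustrative.

```python
KD, KP = 46.6, 0.02  # virtual inertia and virtual damping gains (KD0, KP0 in Table A1)
TE = 0.02            # WTG generator time constant Te (Table A1)


def simulate_vic(delta_f, dt=0.001, p_omega=0.75):
    """Return Pe samples for a sequence of frequency deviations delta_f (pu).

    Assumed structure: dPf = -(KD*s + KP)*df with a backward-difference derivative,
    followed by Te*dPe/dt = (P_omega + dPf) - Pe integrated with forward Euler.
    """
    pe, prev = p_omega, delta_f[0]
    out = []
    for f in delta_f:
        d_pf = -KD * (f - prev) / dt - KP * f   # virtual inertia + virtual damping terms
        pe += dt * ((p_omega + d_pf) - pe) / TE
        prev = f
        out.append(pe)
    return out


# A frequency decline of 0.001 pu/s over 1 s raises the WTG output above P_omega.
ramp = [-0.001 * k * 0.001 for k in range(1000)]
print(f"Pe after 1 s: {simulate_vic(ramp)[-1]:.4f} pu")
```

The derivative (VIC) term responds to the rate of change of frequency and dominates during a ramp, while the proportional (VDC) term alone sets the output for a constant deviation, as in simulate_vic([-0.01] * 1000).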

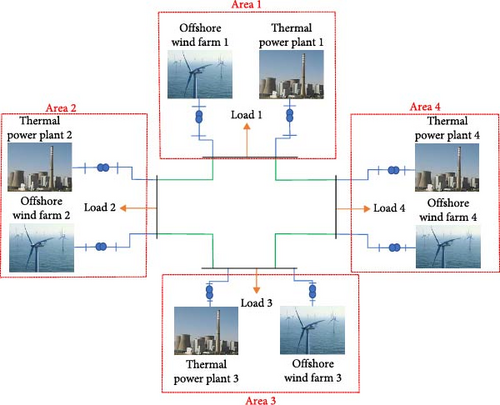

2.2. The TPP Modeling

TPPs, which supply electricity and regulate frequency in this system, are among the most crucial parts of the power system [29, 30]. The TPP components modeled for frequency stability are the governor, boiler, and turbine models and the frequency regulation model. In this paper, the power system consists of four areas, as shown in Figure 1, each including TPPs and OWFs [29, 30]. Figure 2 shows the dynamic model of one area, including TPPs and OWFs with VIC and VDC applied to these farms [28–30]. Each component is represented by a first-order (reduced-order) model, which is suitable for frequency stability analysis. The power system parameters are given in Table A1 [28–30].
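
To illustrate how the four areas interact through the tie-lines 1–2, 2–3, 3–4 and 4–1, the sketch below simulates a deliberately simplified version of the model: each area keeps only the swing equation, a first-order governor with droop R and a first-order turbine, with parameters from Table A1, while the boiler stage, the OWFs and the controller signal ui are omitted. The tie-line flows use the common form dΔPtie,ij/dt = 2πTij(Δfi − Δfj), and the 0.01 pu load step in Area 1 is illustrative; this structure is an assumption, not the paper's state-space model.

```python
import math

M, D, R, TG, TT = 25.0, 0.5, 0.02, 0.2, 0.03  # Table A1, assumed identical in all areas
# Ring of tie-lines (0-based area indices) with coefficients T12, T23, T34, T41
TIES = {(0, 1): 0.2, (1, 2): 0.15, (2, 3): 0.25, (3, 0): 0.21}


def simulate_areas(load_step=0.01, area=0, t_end=30.0, dt=1e-3):
    """Forward-Euler simulation of four areas with primary (droop) control only."""
    n = 4
    f = [0.0] * n                   # frequency deviations
    pg = [0.0] * n                  # governor outputs
    pm = [0.0] * n                  # turbine outputs
    ptie = {k: 0.0 for k in TIES}   # tie-line flow from area i to area j
    pl = [0.0] * n
    pl[area] = load_step
    for _ in range(int(round(t_end / dt))):
        export = [0.0] * n          # net tie-line export of each area
        for (i, j), p in ptie.items():
            export[i] += p
            export[j] -= p
        df = [(pm[i] - pl[i] - export[i] - D * f[i]) / M for i in range(n)]
        dpg = [(-f[i] / R - pg[i]) / TG for i in range(n)]
        dpm = [(pg[i] - pm[i]) / TT for i in range(n)]
        dptie = {(i, j): 2 * math.pi * t * (f[i] - f[j]) for (i, j), t in TIES.items()}
        f = [f[i] + dt * df[i] for i in range(n)]
        pg = [pg[i] + dt * dpg[i] for i in range(n)]
        pm = [pm[i] + dt * dpm[i] for i in range(n)]
        ptie = {k: ptie[k] + dt * dptie[k] for k in ptie}
    return f, ptie


f, ptie = simulate_areas()
print("frequency deviations (pu):", [f"{x:.2e}" for x in f])
print("tie-line flows (pu):", {f"{i + 1}-{j + 1}": round(p, 5) for (i, j), p in ptie.items()})
```

Because only droop control is present, the average frequency settles at a nonzero deviation of −ΔPL/(4(1/R + D)) ≈ −4.95 × 10⁻⁵ pu instead of returning to zero; removing this residual error is the task of the LFC controller ui.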

2.3. The State–Space Model of the Power System and OWFs for Each Area

3. Design of the Proposed Controller

3.1. H∞ Controller

The scope of parameter uncertainty is defined as follows:

Uncertainty in system inertia (Mi): the system inertia can change with the system load or with the number of generators connected to the system. In this paper, the uncertainty in system inertia is taken as ±20% of the nominal value.

Uncertainty in damping coefficient (Di): the damping coefficient can also change with the system load or with environmental conditions. In this paper, the uncertainty in the damping coefficient is taken as ±20% of the nominal value.
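
A quick way to see what the ±20% ranges on Mi and Di mean for one area is to check the four corners of the uncertainty box. The sketch below assumes a simplified single-area loop (swing equation plus first-order governor and turbine with droop R, Table A1 values, no boiler stage, no wind and no secondary control), whose characteristic polynomial is (Ms + D)(1 + sTg)(1 + sTt) + 1/R, and applies the Routh–Hurwitz test for a cubic. It is only an illustrative screen: checking the corners of a parameter box does not in general prove stability over the whole box, and it is not the LMI-based robustness test used in this paper. The corner M = D = −20% corresponds to the parameter change used in Scenario (3).

```python
from itertools import product

R, TG, TT = 0.02, 0.2, 0.03  # droop, governor and turbine time constants (Table A1)
M0, D0 = 25.0, 0.5           # nominal inertia and damping (Table A1)


def char_poly(M, D):
    """Coefficients [a3, a2, a1, a0] of (M*s + D)(1 + s*TG)(1 + s*TT) + 1/R."""
    a, b = TG + TT, TG * TT
    return [M * b, M * a + D * b, M + D * a, D + 1.0 / R]


def is_hurwitz_cubic(c):
    """Routh-Hurwitz for a3*s^3 + a2*s^2 + a1*s + a0: all a_k > 0 and a2*a1 > a3*a0."""
    a3, a2, a1, a0 = c
    return all(x > 0 for x in c) and a2 * a1 > a3 * a0


def corner_check(spread=0.2):
    """Stability verdict at the four corners of the +/-spread box around (M0, D0)."""
    verdicts = {}
    for sm, sd in product((-spread, spread), repeat=2):
        M, D = M0 * (1 + sm), D0 * (1 + sd)
        verdicts[(round(M, 6), round(D, 6))] = is_hurwitz_cubic(char_poly(M, D))
    return verdicts


print(corner_check(0.2))
```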

Solving the LMI associated with the H∞ controller (Equation (13)) can be complex and time-consuming, and the resulting fixed robust H∞ controller cannot adapt to changes in the environment. Therefore, in this paper DRL is used to learn the H∞ control policy adaptively and online. This approach also captures the changes caused by uncertainties and disturbances, and the DRL reward accounts for H∞ performance and robust stability together.
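
The quantity that the H∞ design bounds is the peak gain γ from the disturbance to the performance output. Instead of solving the LMI of Equation (13), which needs a semidefinite-programming solver, the sketch below computes that peak gain directly for a given closed loop using a different, standard technique: bisection on γ with the Hamiltonian-matrix test (for a stable, strictly proper system, γ exceeds the H∞ norm exactly when the Hamiltonian has no eigenvalues on the imaginary axis). It could be used to check the γ achieved by a candidate gain K by passing A + BK as the state matrix. The systems in the usage lines are textbook examples, not the paper's model, and NumPy is assumed to be available.

```python
import numpy as np


def hinf_norm(A, B, C, rel_tol=1e-6, imag_tol=1e-8):
    """H-infinity norm of C (sI - A)^{-1} B for Hurwitz A (strictly proper, D = 0).

    Bisection on gamma: gamma exceeds the norm exactly when the Hamiltonian
    [[A, B B^T / gamma^2], [-C^T C, -A^T]] has no purely imaginary eigenvalues.
    """
    A, B, C = (np.atleast_2d(np.asarray(X, dtype=float)) for X in (A, B, C))
    if np.any(np.linalg.eigvals(A).real >= 0):
        raise ValueError("A must be Hurwitz (all eigenvalues in the open left half-plane)")

    def has_imaginary_eig(gamma):
        H = np.block([[A, (B @ B.T) / gamma**2], [-C.T @ C, -A.T]])
        ev = np.linalg.eigvals(H)
        scale = max(1.0, float(np.max(np.abs(ev))))
        return bool(np.any(np.abs(ev.real) < imag_tol * scale))

    lo, hi = 0.0, 1.0
    while has_imaginary_eig(hi):   # grow the upper bound until it exceeds the norm
        hi *= 2.0
    while hi - lo > rel_tol * hi:  # shrink [lo, hi] around the norm
        mid = 0.5 * (lo + hi)
        if has_imaginary_eig(mid):
            lo = mid
        else:
            hi = mid
    return hi


# G(s) = 1/(s + 1), whose H-infinity norm is 1
print(hinf_norm([[-1.0]], [[1.0]], [[1.0]]))
# G(s) = 1/(s^2 + 0.2 s + 1), whose norm is 1/(2 * 0.1 * sqrt(0.99)), about 5.025
print(hinf_norm([[0.0, 1.0], [-1.0, -0.2]], [[0.0], [1.0]], [[1.0, 0.0]]))
```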

3.2. RL

RL is an important branch of machine learning that aims to train an agent to make optimal decisions in an environment [34]. In this method, the agent learns an optimal policy to maximize long-term reward through interaction with the environment and specifically through trial and error. The following describes the principles of reinforcement learning and its details, including the Q-learning method used in this article.

- Agent: the decision-maker that takes actions in the environment.
- Environment: the system in which the agent operates; it responds to the agent's actions with state changes and rewards.
- States (s): the states the environment can be in. They are described by state variables and are discretized for simplicity.
- Actions (a): the set of inputs or controls the agent can apply. In this paper, the inputs are discrete values selected from a continuous range.
- Reward (r): a signal the environment gives the agent after each action to evaluate the quality of that action.
- Policy (π(a|s)): the rule or strategy the agent uses to choose an action in each state.

In Equation (14), π(a|s) is the probability of choosing action a in state s.

In Equation (17), r is the reward that reflects the quality of action a in state s, and Λ is the discount factor, with a value between zero and one.
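
The Q-learning update is commonly written Q(s, a) ← Q(s, a) + α[r + Λ·max over a′ of Q(s′, a′) − Q(s, a)], and we assume Equation (17) expresses the same idea. The tabular sketch below applies it with ε-greedy exploration to a toy, hypothetical five-state chain (the states can be thought of as discretized deviation levels and the last state as the target band). The environment, the step size α = 0.1, ε = 0.1 and Λ = 0.9 are illustrative choices, not the paper's LFC setup.

```python
import random

N_STATES, GOAL = 5, 4
MOVES = (-1, +1)  # action 0 moves left, action 1 moves right


def step(s, a):
    """Toy environment: reward 1 only when the goal state is reached."""
    s_next = min(max(s + MOVES[a], 0), GOAL)
    reward = 1.0 if s_next == GOAL else 0.0
    return s_next, reward, s_next == GOAL


def q_learning(episodes=500, alpha=0.1, discount=0.9, eps=0.1, seed=0, max_steps=100):
    """Tabular Q-learning with epsilon-greedy exploration and random tie-breaking."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            if rng.random() < eps or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)                 # explore, or break a tie
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1    # exploit the current estimate
            s_next, r, done = step(s, a)
            target = r if done else r + discount * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])   # temporal-difference update
            s = s_next
            if done:
                break
    return Q


Q = q_learning()
for s in range(GOAL):
    print(s, [round(q, 3) for q in Q[s]])
```

After training, the greedy policy moves toward the goal from every state, and Q(s, right) approaches Λ raised to the number of remaining steps minus one, which is how the discount factor trades immediate against delayed reward.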

3.3. DRL

DRL extends RL by using a neural network to extract more complex features from the training data, which helps the learned policy perform better in new environments. In this paper, the reward is based on the robust stability criterion and on reducing the LMI error, so DRL can model this complex reward and learn policies that optimize the H∞ criterion of the robust controller.

In Equation (18), θ are the weights of the neural network.

In Equation (19), J(πθ) is the objective function defined as a cumulative reward and needs to be optimized.

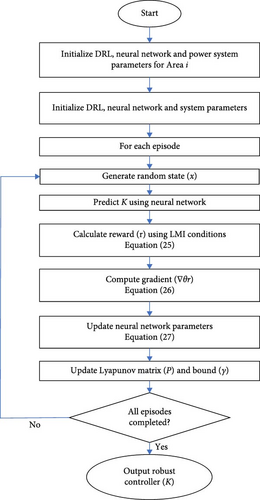

3.4. Design of H∞ Robust Controller Based on DRL

1. In this learning stage, a random state x is generated from the state space of the power system of each area according to Equation (23).
2. The neural network πθ receives the state x of the power system and predicts the matrix of the robust controller (K) according to Equation (24).
3. After K is obtained, the robust stability condition and the H∞ performance are evaluated with the reward function defined in Equation (25). This reward function aims to improve the LMI condition and minimize trace(P) and γ². In Equation (25), a decrease in r indicates an improvement of the robust controller.
4. To update the FNN parameters, the gradient of the reward function with respect to the FNN parameters θ is calculated according to Equation (26).
5. Using this gradient and the learning rate α, the neural network parameters are updated according to Equation (27).

Updating the neural network parameters according to Equation (27) improves the policy πθ and yields a better controller. Figure 3 shows the flowchart of the proposed method, Table 2 lists its initial settings, and Table 3 gives its pseudocode.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| n (number of system states) | 7 | Θ | I₇ (initial Lyapunov matrix) |
| m (number of control inputs) | 1 | γ | 10 |
| q (number of system outputs) | 1 | Input layer (neural network) | 7 (number of system states) |
| p (number of external disturbances) | 1 | Hidden layers (neural network) | 1 (64 neurons) |
| H (uncertainty matrix) | 7 × 7 | Output layer (neural network) | 7 (dimension of the control matrix K) |
| E (uncertainty matrix) | 7 × 7 | Learning rate α (reinforcement learning) | 0.01 |
| P | I₇ (initial Lyapunov matrix) | Number of episodes (reinforcement learning) | 500 |
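
The five training steps can be sketched with NumPy as below, using the Table 2 sizes (7 states, one control input, one hidden layer of 64 neurons, α = 0.01, 500 episodes). Several parts are simplifying assumptions, not the paper's implementation: the plant matrices are random placeholders instead of the area model; the cost is only the largest eigenvalue of the bounded-real-lemma matrix evaluated at the Table 2 initial values P = I₇ and γ = 10 (the trace(P) and γ² terms of Equation (25) are left out because P and γ are not optimized here); and, since a lower cost is better, step 5 is a gradient-descent step with the gradient back-propagated by hand.

```python
import numpy as np

# Sizes and hyperparameters from Table 2; everything else is a hypothetical stand-in.
n, m, hidden = 7, 1, 64
alpha, episodes, gamma = 0.01, 500, 10.0
P = np.eye(n)  # initial Lyapunov matrix, held fixed in this sketch

rng = np.random.default_rng(0)
A = -np.eye(n) + 0.3 * rng.standard_normal((n, n))  # placeholder state matrix
B = rng.standard_normal((n, m))                     # placeholder control input matrix
Bw = rng.standard_normal((n, 1))                    # placeholder disturbance input matrix
Cz = rng.standard_normal((1, n))                    # placeholder performance output matrix

# One-hidden-layer feed-forward policy mapping a state x to a gain K (m x n)
W1, b1 = 0.1 * rng.standard_normal((hidden, n)), np.zeros(hidden)
W2, b2 = 0.01 * rng.standard_normal((m * n, hidden)), np.zeros(m * n)


def policy(x):
    h = np.tanh(W1 @ x + b1)
    return (W2 @ h + b2).reshape(m, n), h


def lmi_matrix(K):
    """Bounded-real-lemma matrix; negative definite iff the LMI holds for this P and gamma."""
    Acl = A + B @ K
    return np.block([[Acl.T @ P + P @ Acl + Cz.T @ Cz, P @ Bw],
                     [Bw.T @ P, -gamma**2 * np.eye(1)]])


def cost_and_grad(K):
    """Cost = largest eigenvalue of the LMI matrix (lower is better) and its gradient in K."""
    w, V = np.linalg.eigh(lmi_matrix(K))
    v1 = V[:n, -1]                           # state block of the top eigenvector
    grad = 2.0 * np.outer(B.T @ P @ v1, v1)  # valid when the top eigenvalue is simple
    return w[-1], grad


history = []
for _ in range(episodes):
    x = rng.standard_normal(n)               # step 1: random state
    K, h = policy(x)                         # step 2: the network predicts K
    cost, grad_K = cost_and_grad(K)          # step 3: LMI-based cost
    g = grad_K.reshape(-1)                   # step 4: back-propagate to the weights
    g_h = (W2.T @ g) * (1.0 - h**2)
    W2 -= alpha * np.outer(g, h)             # step 5: gradient-descent update
    b2 -= alpha * g
    W1 -= alpha * np.outer(g_h, x)
    b1 -= alpha * g_h
    history.append(cost)

print(f"cost: first episode {history[0]:.3f}, last episode {history[-1]:.3f}")
```

A negative cost means the LMI holds for the current K at the fixed P and γ; a full implementation along the lines of Equation (25) would also adjust P and γ.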


4. Simulation

The parameters of the multi-area power system are listed in Table A1. To evaluate the proposed method (H∞–DRL) in the LFC structure of the power system, considering the effect of OWFs on frequency control, three scenarios are considered and the results are compared with DMPC and PID control methods. Scenario (1) examines the impact of minor disturbances on the multi-area power system under the different control methods. Scenario (2) examines moderate disturbances together with mild uncertainty in the parameters of the multi-area power system, and Scenario (3) examines severe disturbances together with severe uncertainty in these parameters.

4.1. Scenario (1)

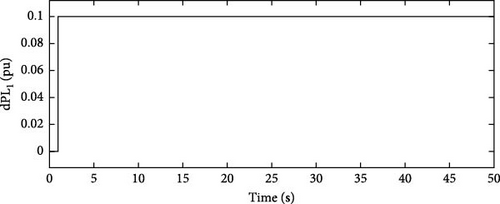

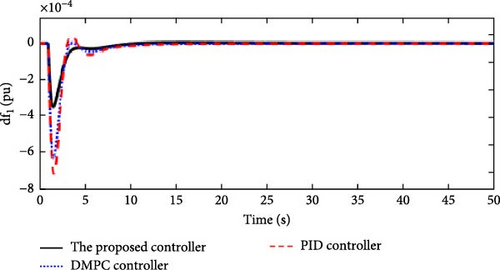

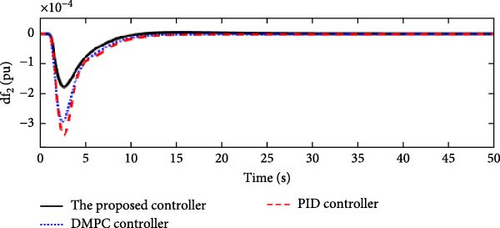

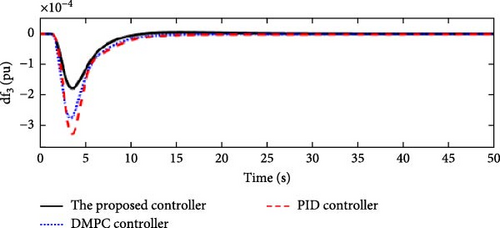

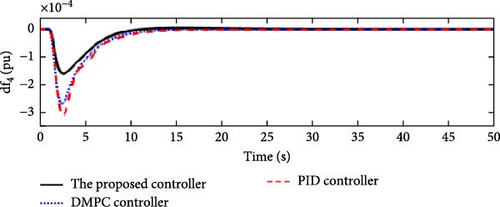

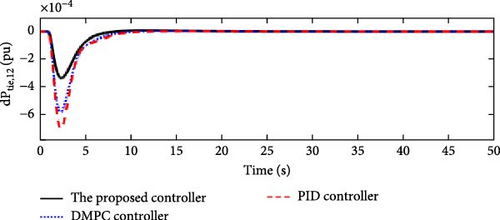

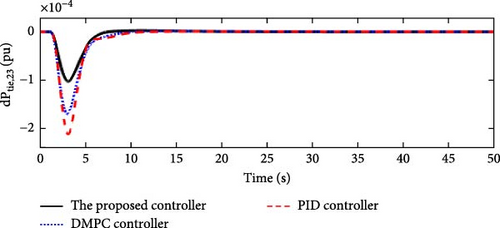

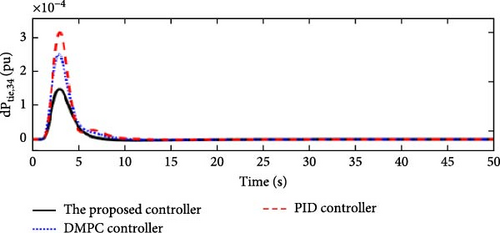

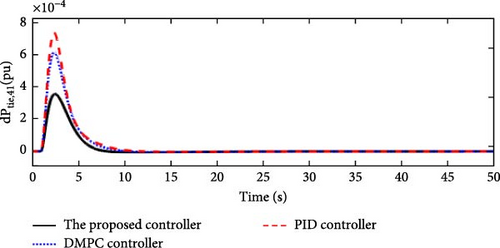

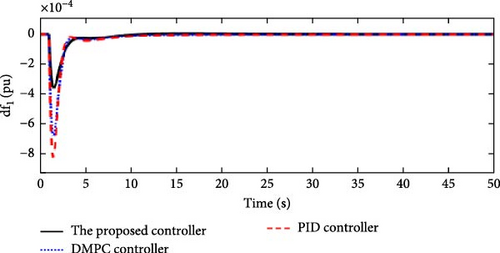

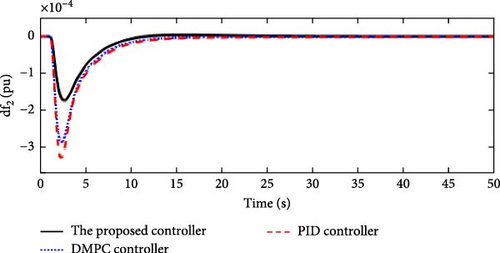

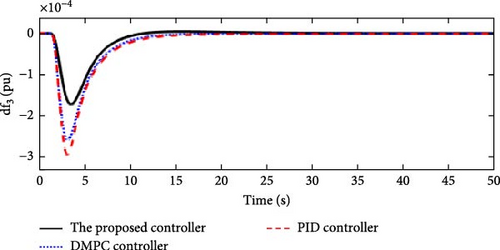

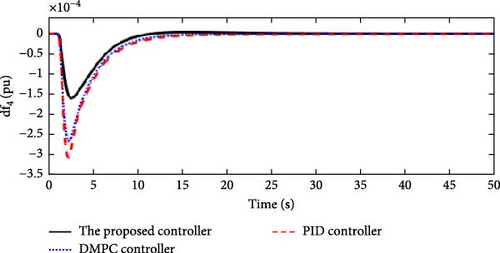

In this scenario, a load disturbance is applied to Area 1 of the multi-area power system, as shown in Figure 4. Figures 5, 6, 7, and 8 show the FDs of Areas 1, 2, 3, and 4, respectively, under the different control methods, and Figures 9, 10, 11, and 12 show the tie-line PDs between Areas 1–2, 2–3, 3–4, and 4–1, respectively. As Figures 5–12 show, the proposed control method (H∞–DRL) reduces both the FDs and the tie-line PDs, performs effectively under mild disturbances, and damps the oscillations in a shorter time. The results of Scenario (1) are summarized in Tables 4 and 5.

| Controller | Δf1 MO (pu) | Δf1 MU (pu) | Δf1 ST (s) | Δf2 MO (pu) | Δf2 MU (pu) | Δf2 ST (s) | Δf3 MO (pu) | Δf3 MU (pu) | Δf3 ST (s) | Δf4 MO (pu) | Δf4 MU (pu) | Δf4 ST (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| The proposed controller | 0 | 3 × 10⁻⁴ | 9 | 0 | 1.7 × 10⁻⁴ | 9 | 0 | 1.5 × 10⁻⁴ | 10 | 0 | 1.5 × 10⁻⁴ | 10 |
| DMPC controller | 0 | 6 × 10⁻⁴ | 11 | 0 | 3 × 10⁻⁴ | 11 | 0 | 2.8 × 10⁻⁴ | 11 | 0 | 2.8 × 10⁻⁴ | 11 |
| PID controller | 1 × 10⁻⁴ | 7 × 10⁻⁴ | 13 | 0 | 3.5 × 10⁻⁴ | 13 | 0 | 3.3 × 10⁻⁴ | 14 | 0 | 3.3 × 10⁻⁴ | 14 |

| Controller | ΔPtie,12 MO (pu) | ΔPtie,12 MU (pu) | ΔPtie,12 ST (s) | ΔPtie,23 MO (pu) | ΔPtie,23 MU (pu) | ΔPtie,23 ST (s) | ΔPtie,34 MO (pu) | ΔPtie,34 MU (pu) | ΔPtie,34 ST (s) | ΔPtie,41 MO (pu) | ΔPtie,41 MU (pu) | ΔPtie,41 ST (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| The proposed controller | 0 | 3 × 10⁻⁴ | 9 | 0 | 1.1 × 10⁻⁴ | 7 | 1.5 × 10⁻⁴ | 0 | 8 | 3.8 × 10⁻⁴ | 0 | 8 |
| DMPC controller | 0 | 5.8 × 10⁻⁴ | 11 | 0 | 1.8 × 10⁻⁴ | 10 | 2.5 × 10⁻⁴ | 0 | 10 | 6 × 10⁻⁴ | 0 | 10 |
| PID controller | 1 × 10⁻⁴ | 6.5 × 10⁻⁴ | 13 | 0 | 2.2 × 10⁻⁴ | 12 | 3.3 × 10⁻⁴ | 0 | 12 | 7.2 × 10⁻⁴ | 0 | 13 |

4.2. Scenario (2)

In this scenario, a load disturbance is applied to Area 1 of the multi-area power system, as shown in Figure 4. In addition, slight uncertainties in the parameters D and M (M = D = −10%) are considered in each area of the power system. Figures 13, 14, 15, and 16 show the FDs of Areas 1, 2, 3, and 4, respectively, under the different control methods, and Figures 17, 18, 19, and 20 show the tie-line PDs between Areas 1–2, 2–3, 3–4, and 4–1, respectively. As Figures 13–20 show, the proposed control method (H∞–DRL) reduces both the FDs and the tie-line PDs, performs effectively under mild disturbances and mild uncertainties, and damps the oscillations in a shorter time. The results of Scenario (2) are summarized in Tables 6 and 7.

| Controller | Δf1 MO (pu) | Δf1 MU (pu) | Δf1 ST (s) | Δf2 MO (pu) | Δf2 MU (pu) | Δf2 ST (s) | Δf3 MO (pu) | Δf3 MU (pu) | Δf3 ST (s) | Δf4 MO (pu) | Δf4 MU (pu) | Δf4 ST (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| The proposed controller | 0 | 3.3 × 10⁻⁴ | 9.4 | 0 | 1.67 × 10⁻⁴ | 10 | 0 | 1.6 × 10⁻⁴ | 11 | 0 | 1.5 × 10⁻⁴ | 10 |
| DMPC controller | 0 | 7 × 10⁻⁴ | 12 | 0 | 2.9 × 10⁻⁴ | 12 | 0 | 2.7 × 10⁻⁴ | 13 | 0 | 2.7 × 10⁻⁴ | 13 |
| PID controller | 1 × 10⁻⁴ | 8 × 10⁻⁴ | 14 | 0 | 3.3 × 10⁻⁴ | 14 | 0 | 3 × 10⁻⁴ | 15 | 0 | 3.1 × 10⁻⁴ | 15 |

| Controller | ΔPtie,12 MO (pu) | ΔPtie,12 MU (pu) | ΔPtie,12 ST (s) | ΔPtie,23 MO (pu) | ΔPtie,23 MU (pu) | ΔPtie,23 ST (s) | ΔPtie,34 MO (pu) | ΔPtie,34 MU (pu) | ΔPtie,34 ST (s) | ΔPtie,41 MO (pu) | ΔPtie,41 MU (pu) | ΔPtie,41 ST (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| The proposed controller | 0 | 3.1 × 10⁻⁴ | 9.3 | 0 | 1 × 10⁻⁴ | 7 | 1.4 × 10⁻⁴ | 0 | 9 | 3.5 × 10⁻⁴ | 0 | 8 |
| DMPC controller | 0 | 5.84 × 10⁻⁴ | 11 | 0 | 1.7 × 10⁻⁴ | 9 | 2.4 × 10⁻⁴ | 0 | 10 | 6 × 10⁻⁴ | 0 | 10 |
| PID controller | 1 × 10⁻⁴ | 6.7 × 10⁻⁴ | 13 | 0 | 1.8 × 10⁻⁴ | 10 | 2.9 × 10⁻⁴ | 0 | 12 | 7.1 × 10⁻⁴ | 0 | 13 |

4.3. Scenario (3)

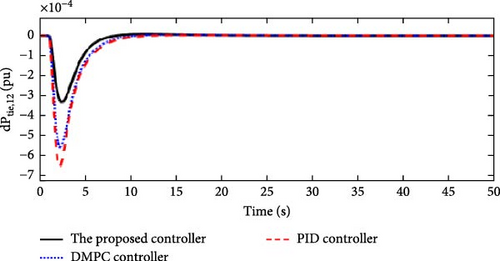

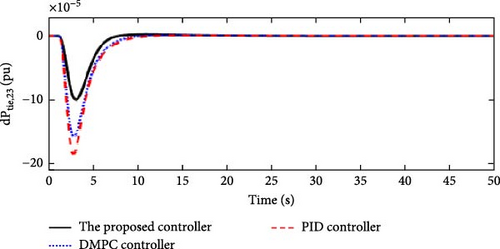

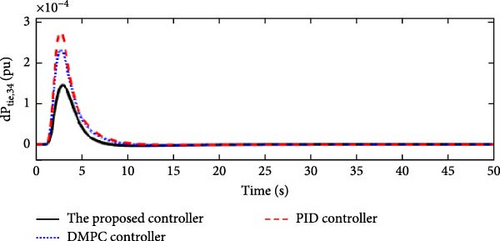

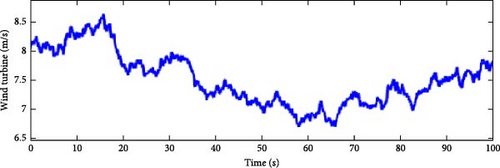

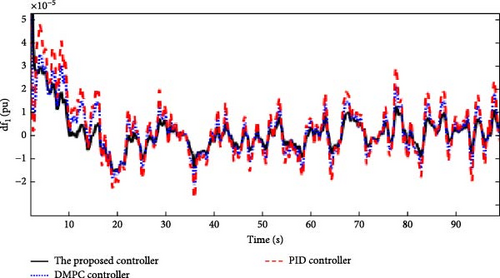

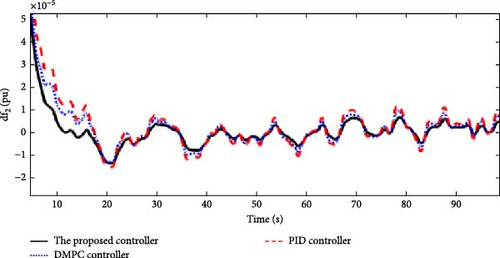

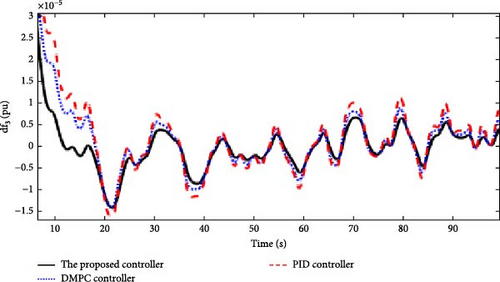

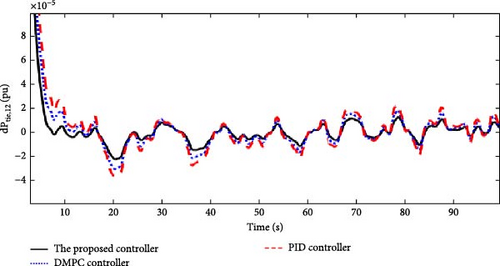

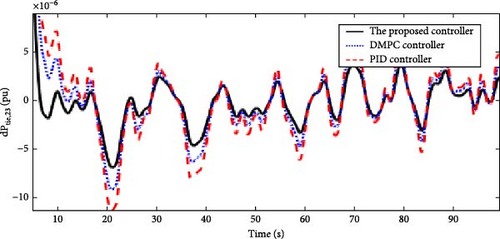

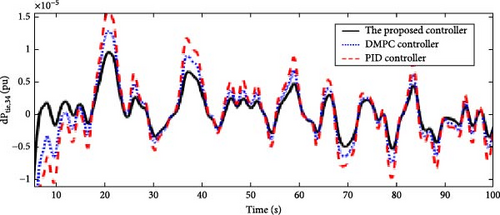

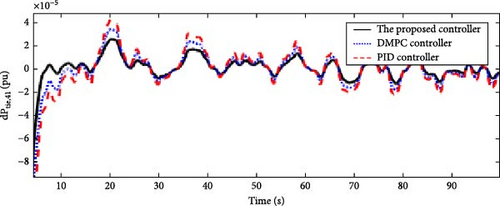

In this scenario, a time-varying wind speed is applied to Area 1 of the multi-area power system, as shown in Figure 21. In addition, severe uncertainties in the parameters D and M (M = D = −20%) are considered in each area of the power system. Figures 22, 23, 24, and 25 show the FDs of Areas 1, 2, 3, and 4, respectively, under the different control methods, and Figures 26, 27, 28, and 29 show the tie-line PDs between Areas 1–2, 2–3, 3–4, and 4–1, respectively. As Figures 22–29 show, the proposed control method (H∞–DRL) reduces both the FDs and the tie-line PDs and performs effectively under severe disturbances and severe uncertainties. The results of Scenario (3) are summarized in Tables 8 and 9.

| Controller | Δf1 MFD (pu) | Δf2 MFD (pu) | Δf3 MFD (pu) | Δf4 MFD (pu) |
|---|---|---|---|---|
| The proposed controller | 5 × 10⁻⁵ | 5 × 10⁻⁵ | 4.5 × 10⁻⁵ | 4.4 × 10⁻⁵ |
| DMPC controller | 7 × 10⁻⁵ | 5 × 10⁻⁵ | 5.5 × 10⁻⁵ | 5.6 × 10⁻⁵ |
| PID controller | 8 × 10⁻⁵ | 6 × 10⁻⁵ | 6 × 10⁻⁵ | 6.4 × 10⁻⁵ |

| Controller | ΔPtie,12 MD (pu) | ΔPtie,23 MD (pu) | ΔPtie,34 MD (pu) | ΔPtie,41 MD (pu) |
|---|---|---|---|---|
| The proposed controller | 10 × 10⁻⁵ | 13 × 10⁻⁵ | 7 × 10⁻⁵ | 7.1 × 10⁻⁵ |
| DMPC controller | 11.5 × 10⁻⁵ | 17 × 10⁻⁵ | 8.5 × 10⁻⁵ | 11 × 10⁻⁵ |
| PID controller | 13 × 10⁻⁵ | 21 × 10⁻⁵ | 9.8 × 10⁻⁵ | 12 × 10⁻⁵ |

5. Conclusion

- Frequency deviations caused by disturbances and uncertainties in the power system are reduced by 50%.
- Tie-line power deviations caused by disturbances and uncertainties are reduced by 46%.
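
Percentages of this kind are relative reductions with respect to a baseline controller. As an illustration of how such figures are computed from the reported tables, the snippet below takes the maximum undershoot (MU) of Δf in Scenario (1) from Table 4 and computes the percentage reduction achieved by the proposed controller relative to DMPC and to PID.

```python
# Maximum undershoot (MU) of the frequency deviations in Scenario (1), Table 4, in pu
mu = {
    "proposed": [3e-4, 1.7e-4, 1.5e-4, 1.5e-4],  # Areas 1-4
    "DMPC": [6e-4, 3e-4, 2.8e-4, 2.8e-4],
    "PID": [7e-4, 3.5e-4, 3.3e-4, 3.3e-4],
}


def reduction_pct(new, baseline):
    """Relative reduction 100 * (baseline - new) / baseline for each area."""
    return [100.0 * (b - x) / b for x, b in zip(new, baseline)]


vs_dmpc = reduction_pct(mu["proposed"], mu["DMPC"])
vs_pid = reduction_pct(mu["proposed"], mu["PID"])
print("vs DMPC:", [f"{v:.1f}%" for v in vs_dmpc])
print("vs PID: ", [f"{v:.1f}%" for v in vs_pid])
```

For Area 1 this gives 50% relative to DMPC and about 57% relative to PID; for Areas 2 to 4, the reductions relative to DMPC are about 43% to 46%.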

Nomenclature

- α: Coefficient of frequency deviation
- Tij: Equivalent coefficient of tie-line i–j
- Di: Equivalent damping coefficient of the i-th area
- Mi: Equivalent inertia coefficient of the i-th area
- Tr,i, Tt,i, Tg,i: Time constants of the boiler, turbine, and governor in the i-th area
- Kr,i: Gain of the boiler model in the i-th area
- CP: Wind energy utilization coefficient
- r: Radius of the wind turbine blades
- λ: Tip-speed ratio
- Pm: Mechanical power of WTG
- Pe: Output of the generator of WTG
- v: Wind speed
- Pf: Auxiliary frequency control command of WTG
- ΔT: Torque difference between the mechanical power and the electromagnetic power
- ω: Rotor speed of WTG
- Te: Time constant of generator of WTG
- F: Friction coefficient of the transmission system of WTG
- Kc: Maximum power tracking coefficient of WTG
- ρ: Density of air
- ωn: Rated rotor speed
- ω0: Initial rotor speed
- Δfi: Frequency deviation of the i-th area
- ΔPg,i: Output change of governor of thermal power plant in the i-th area
- ΔPtie,i: Tie-line power deviation of the i-th area
- ΔPL,i: Load demand disturbance in the i-th area
- β: Pitch angle
- ui: Active power control signal of thermal power plant in the i-th area
- ΔXg,i: Output change of boiler of thermal power plant in the i-th area
- ΔPr,i: Output change of turbine of thermal power plant in the i-th area
- ACEi: Area control error in the i-th area
- Hw: Inertia coefficient of transmission system of WTG

Abbreviations

- 3DOF-PID: Three degrees of freedom proportional–integral–derivative
- DMPC: Distributed model predictive control
- DOSA: Developed owl search algorithm
- FDs: Frequency deviations
- FMPC: Fuzzy model predictive control
- FNN: Feed-forward neural network
- FOPID: Fractional-order proportional–integral–derivative
- MO: Maximum overshoot
- MPC: Model predictive control
- MPPT: Maximum power point tracking
- MU: Maximum undershoot
- PDs: Power deviations
- PIλ (1+PDF): Proportional–fractional integrator plus proportional–derivative with filter
- RL: Reinforcement learning
- SMC: Sliding mode controller
- ST: Settling time
- TID: Tilt-integral–derivative
- TPP: Thermal power plants
- VDC: Virtual damping control
- WTs: Wind turbines

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This research was done without any financial support or funding.

Appendix

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Mi | 25 | Tt,i | 0.03 |
| Di | 0.5 | Tg,i | 0.2 |
| T12 | 0.2 | Kr,i | 0.3 |
| T23 | 0.15 | R | 0.02 |
| T34 | 0.25 | ωn | 1.091 |
| T41 | 0.21 | λn | 8.1 |
| Tr | 7 | Hw | 5.19 |
| F | 0.01 | Te | 0.02 |
| CP | 0.44 | Kc | 0.5787 |
| KP0 | 0.02 | KD0 | 46.6 |

Open Research

Data Availability Statement

The data are contained within the article.