Deep Learning Algorithms for Assessment of Post-Thyroidectomy Scar Subtype

Abstract

The rising incidence of thyroid cancer globally is increasing the number of thyroidectomies, causing visible scars that can greatly affect the quality of life due to cosmetic, psychological, and social impacts. In this study, we explored the application of deep learning algorithms to objectively assess post-thyroidectomy scar morphology using computer-aided diagnosis. This study was approved by the Institutional Review Board of Yonsei University College of Medicine (approval no. 3-2021-051). A dataset comprising 7524 clinical photographs from 3565 patients with post-thyroidectomy scars was utilized. We developed a deep learning model using a convolutional neural network (CNN), specifically the ResNet 50 model and introduced a multiple clinical photography learning (MCPL) method. The MCPL method aimed to enhance the model’s understanding by considering characteristics from multiple images of the same lesion per patient. The primary outcome, measured by the area under the receiver operating characteristic curve (AUROC), demonstrated the superior performance of the MCPL model in classifying scar subtypes compared to a baseline model. Confidence variation analysis showed reduced discrepancies in the MCPL model, emphasizing its robustness. Furthermore, we conducted a decision study involving five physicians to evaluate the MCPL model’s impact on diagnostic accuracy and agreement. Results of the decision study indicated enhanced accuracy and reliability in scar subtype determination when the confidence scores of the MCPL model were integrated into decision-making. Our findings suggest that deep learning, particularly the MCPL method, is an effective and reliable tool for objectively classifying post-thyroidectomy scar subtypes. This approach holds promise for assisting professionals in improving diagnostic precision, aiding therapeutic planning, and ultimately enhancing patient outcomes in the management of post-thyroidectomy scars.

1. Introduction

The incidence of thyroid cancer is increasing rapidly worldwide. Traditional thyroidectomy involves making transverse incisions in the neck, leaving visible scars in exposed areas. Therefore, post-thyroidectomy scars can lead to considerable cosmetic problems and are associated with poor quality of life [1, 2]. Furthermore, as scars remain visible in exposed anatomical regions, they can result in negative psychological and social consequences, including low self-esteem, anxiety, depression, and stigmatization [3, 4]. In addition to esthetic issues, symptoms associated with post-thyroidectomy scars, such as pruritus, tightening, pain, and restricted mobility at the scar site, can significantly impair the quality of life of patients [1, 4].

Various modalities have been used to treat post-thyroidectomy scars, including surgical revision, intralesional steroid injections, topical therapies, antimitotic agents, and laser treatment. However, scar management remains a challenge for dermatologists, as many cases require long-term, repeated, and combined treatment, tailored to the unique characteristics of each scar [5–8]. In addition, individual patients may want specific features of their scars treated, such as the size, shape, protrusion, recession, adhesion, and difference of color compared to that of the surrounding normal skin [9]. Therefore, as these features vary by scar subtype, appropriately assessing the morphological subtypes of scars to establish a suitable and personalized therapeutic strategy for individual patients is necessary.

Various scar evaluation tools for assessing both diagnostic and cosmetic outcomes are widely used and are known to reflect the therapeutic needs of patients; however, a standardized tool is still lacking, which remains a significant limitation in the field [10–12]. Recently, many studies using deep learning have been conducted in the field of dermatology [13–17]. In a 2017 study, a deep learning model demonstrated skin cancer diagnosis accuracy comparable to that of a dermatologist [18]. By 2021, deep learning had become mainstream, with 85% of skin disease diagnosis algorithms being developed using deep learning methods [19]. Additionally, deep learning–based computer-aided diagnosis systems have proven beneficial to physicians beyond simple skin diagnosis (Table S1) [20–22].

Although multiple images of the same skin lesion can be obtained, the predictions of a deep learning model may not remain consistent across them [23]. The post-thyroidectomy scar dataset we collected also contains multiple images of the same scar (Figure S1). To overcome this problem, a new learning method was developed in a recent study [24]. This method utilizes the characteristics of skin data obtained from multiple clinical photographs of a single lesion. Therefore, we hypothesized that deep learning models could classify scar subtypes after thyroidectomy and that utilizing multiple images of the same scar could help develop more robust deep learning models.

Considering the limitations of current scar evaluation methods, particularly in subjective assessments, we propose a deep learning model to classify post-thyroidectomy scar types by analyzing their morphological characteristics. Using deep learning, we aimed to minimize the variability in scar assessments while improving diagnostic accuracy. We developed a deep learning model that employs multiple clinical photography learning (MCPL) from multiple clinical photographs. To validate its effectiveness, we compared a conventional deep learning model with a model employing MCPL. In addition, we conducted a decision study using the MCPL method to evaluate the improvements in diagnostic accuracy and physician agreement.

2. Materials and Methods

2.1. Data Source and Study Approval

This study was conducted on patients with post-thyroidectomy scars who visited the Department of Dermatology at Yonsei University Gangnam Severance Hospital between 2009 and 2019. Clinical data and photographs of their post-thyroidectomy scars were obtained. This study was conducted in accordance with the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of Yonsei University College of Medicine (approval no. 3-2021-051). The requirement for written informed consent was waived owing to the retrospective nature of the study, in which deidentified data were used. The analysis only included photographs captured during the initial visits. Three board-certified dermatologists (M.R., J.K., and S.L.) independently assessed each photograph to determine the diagnostic criteria (gold standard) for scar type. In the case of discrepancies between the reviewers, a final decision was reached by majority rule.

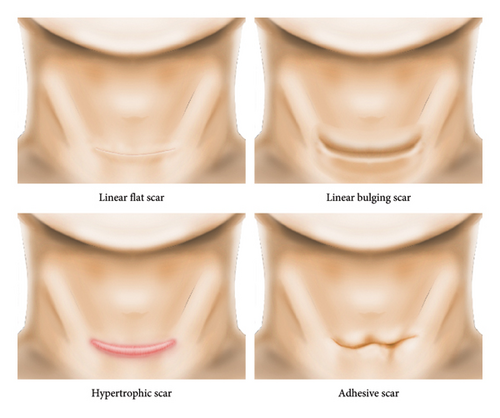

3. Data Preparation

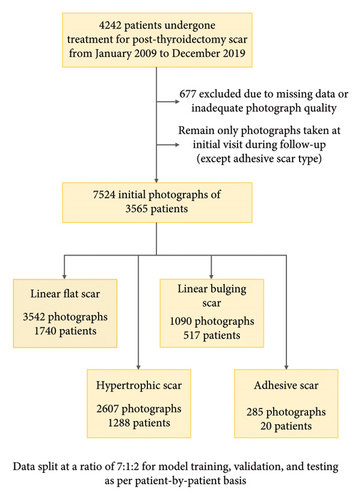

We developed and validated deep learning models to classify four scar types (Figure 1(a)). We excluded missing and inadequate-quality photographs (e.g., images of scars that were not from horizontal incisions or were not in frontal view). Among the follow-up data for each patient, only first-visit data were used. However, all the photographs of adhesive scars were used because of insufficient data. A total of 7524 photographs from 3565 patients were included in the study. The number of images of the same lesion per patient ranged from one to seven. The most common scar type was linear flat (n = 1740; 48.81%), followed by hypertrophic (n = 1288; 36.13%), linear bulging (n = 517; 14.50%), and adhesive (n = 20; 0.56%). Most patients were women (3283 [92.09%]), and the mean age was 38.55 ± 9.41 years. A history of keloids and of cesarean section (c-sec) hypertrophic scars was found in 38 (1.07%) and 48 (1.35%) patients, respectively. We split the data into training, validation, and test datasets for each subtype at a ratio of 7:1:2 on a patient-by-patient basis. This process is summarized in Figure 1(b), and information regarding the dataset is presented in Table 1.

| Scar type (no. of patients, %) | Linear flat scar (1740, 48.81%) | Linear bulging scar (517, 14.50%) | Hypertrophic scar (1288, 36.13%) | Adhesive scar (20, 0.56%) | Total (3565, 100.00%) |
|---|---|---|---|---|---|
| Sex (female), no. of patients (%) | 1635 (93.97%) | 481 (93.04%) | 1148 (89.13%) | 19 (95.00%) | 3283 (92.09%) |
| Age, mean (SD) | 38.37 (8.90) | 43.68 (10.37) | 36.57 (8.57) | 49.05 (11.98) | 38.55 (9.41) |
| Height, mean (SD) | 162.14 (5.94) | 161.15 (6.18) | 162.71 (7.25) | 160.01 (4.1) | 162.19 (6.49) |
| Weight, mean (SD) | 58.43 (10.07) | 60.59 (10.71) | 62.4 (12.54) | 57.9 (5.51) | 60.18 (11.24) |
| BMI, mean (SD) | 22.18 (3.25) | 23.31 (3.73) | 23.5 (3.89) | 22.65 (2.38) | 22.58 (3.62) |
| History of keloid, no. of patients (%) | 14 (0.80%) | 5 (0.97%) | 19 (1.48%) | 0 (0.00%) | 38 (1.07%) |
| History of c-sec hypertrophy, no. of patients (%) | 28 (1.61%) | 4 (0.77%) | 16 (1.24%) | 0 (0.00%) | 48 (1.35%) |
| No. of images in train/valid/test (no. of patients in train/valid/test) | 2480/354/708 (1218/172/350) | 755/106/229 (361/51/105) | 1836/257/514 (901/127/260) | 185/0/100 (12/0/8) | 5256/717/1551 (2492/350/723) |

- Note: History of keloid: Previous occurrence of keloids. History of c-sec hypertrophy: Previous occurrence of hypertrophic scarring from a cesarean section. No. of images in train/valid/test: The images were split into training, validation, and test datasets in a 7:1:2 ratio on a patient-by-patient basis.

- Abbreviations: BMI, body mass index; SD, standard deviation.
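
The patient-by-patient 7:1:2 split within each subtype can be illustrated with a minimal sketch. The function name `split_by_patient`, the rounding rule, and the toy cohort are assumptions for illustration and are not taken from the study code. The key property is that patients, not images, are assigned to a partition, so all photographs of one patient stay together.

```python
import random
from collections import defaultdict

def split_by_patient(patient_labels, ratios=(0.7, 0.1, 0.2), seed=0):
    """Assign each patient to train/valid/test within their scar subtype.

    patient_labels: dict mapping a patient ID to a scar subtype.
    Returns a dict mapping each patient ID to "train", "valid", or "test".
    """
    rng = random.Random(seed)
    by_type = defaultdict(list)
    for pid, scar_type in patient_labels.items():
        by_type[scar_type].append(pid)

    assignment = {}
    for pids in by_type.values():
        pids = sorted(pids)          # fixed order before shuffling, for reproducibility
        rng.shuffle(pids)
        n_train = round(len(pids) * ratios[0])
        n_valid = round(len(pids) * ratios[1])
        for i, pid in enumerate(pids):
            if i < n_train:
                assignment[pid] = "train"
            elif i < n_train + n_valid:
                assignment[pid] = "valid"
            else:
                assignment[pid] = "test"  # remainder goes to the test set
    return assignment

# Hypothetical toy cohort: 20 patients in each of two subtypes.
toy = {f"p{i}": ("linear flat" if i < 20 else "hypertrophic") for i in range(40)}
split = split_by_patient(toy)
```

Every image then inherits the partition of its patient, which prevents photographs of the same scar from appearing in both the training and test sets.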

3.1. Convolutional Neural Network (CNN)

CNNs are actively used in computer vision owing to recent hardware developments and the availability of big data. CNNs differ from traditional machine-learning methods in that they employ an integrated structure [25]. This allows them to extract and classify relevant features simultaneously during the learning process. Figure S2 illustrates an example of the CNN inference process.

The ResNet 50 CNN model was used, with the final layer set to four output nodes, one per scar type [26]. All images were resized to 224 × 224 pixels, and the ImageNet-pretrained CNN was fine-tuned. The Adam optimizer was used for all tasks (learning rate, 1 × 10⁻⁵). To prevent the CNN from overfitting the training data, the validation loss was monitored, and early stopping was applied if it did not decrease for 10 epochs. Considering the limitations of the GPU memory, the batch size was set to 64.
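
The early-stopping rule can be sketched independently of any framework. The following is a minimal illustration, assuming that "did not decrease" means no new minimum of the validation loss within 10 consecutive epochs; the function name `early_stopping_epoch` and the loss curve are hypothetical.

```python
def early_stopping_epoch(validation_losses, patience=10):
    """Return (stop_epoch, best_epoch) for a list of per-epoch validation losses.

    Training stops once the loss has failed to reach a new minimum
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # The loss list ended before the patience ran out.
    return len(validation_losses) - 1, best_epoch

# Hypothetical loss curve: improves for 5 epochs (0-4), then plateaus.
losses = [1.0, 0.8, 0.7, 0.65, 0.6] + [0.61] * 15
stop_epoch, best_epoch = early_stopping_epoch(losses)
```

In practice, the weights saved at `best_epoch` would typically be the ones kept for evaluation.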

For the deep learning method, the PyTorch framework Version 2.0.1 (https://pytorch.org/) with torchvision 0.15.2/CUDA 11.8 was used. The hardware system consisted of an Intel i9-13900K (CPU mark: 59107), 64 GB of DDR4 RAM, a 1-TB solid-state drive, and one NVIDIA GeForce RTX 4090TI 24-GB GPU.

3.2. Training Mechanism

Figure S1 (supporting information) shows examples of multiple images of each scar subtype after thyroidectomy captured per patient in the scar dataset. We developed an MCPL method that utilizes instance-based similarity loss. This method learns the dermatological data characteristics on a per-patient basis from multiple images of a single lesion per patient. As shown in Figure S3 (supporting information), the developed loss function is divided into three parts. First, the same patient instance loss (L_SPI) assumes that features extracted from multiple images of the same lesion in the same patient are very similar. Next, the positive similarity loss (L_PS) assumes that images of the same scar type have similar features. Finally, the negative similarity loss (L_NS) ensures that feature maps extracted from images of different scar types are distinct. As shown on the left in Figure S3, feature maps are extracted using the deep learning model (in this example, with a batch size of 10). Among the 10 feature maps, numbers 1–3, 4–7, and 8–10 (from the left) belong to patients 1 (blue), 2 (yellow), and 3 (red), respectively. All three losses are calculated based on cosine similarity. Finally, learning is performed by adding a weighted cross-entropy loss function that accounts for class imbalance.
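
The three similarity terms can be illustrated with a small, framework-free sketch. The text specifies only that each term is based on cosine similarity; the exact forms used here (1 − cosine for the two attracting terms, the positive part of the cosine for the repelling term, and averaging over pairs) are assumptions, as are the names `similarity_losses` and the toy feature vectors. The weighted cross-entropy term and the relative weights of the terms are omitted.

```python
import math
from itertools import combinations

def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def mean(values):
    values = list(values)
    return sum(values) / len(values) if values else 0.0

def similarity_losses(features, patient_ids, scar_types):
    """Return (L_SPI, L_PS, L_NS) averaged over all image pairs in a batch."""
    pairs = list(combinations(range(len(features)), 2))
    sims = {(i, j): cosine(features[i], features[j]) for i, j in pairs}
    # Same patient: pull features together (0 when the directions coincide).
    l_spi = mean(1 - sims[p] for p in pairs
                 if patient_ids[p[0]] == patient_ids[p[1]])
    # Same scar type: pull features together as well.
    l_ps = mean(1 - sims[p] for p in pairs
                if scar_types[p[0]] == scar_types[p[1]])
    # Different scar types: penalize any remaining positive similarity.
    l_ns = mean(max(0.0, sims[p]) for p in pairs
                if scar_types[p[0]] != scar_types[p[1]])
    return l_spi, l_ps, l_ns

# Hypothetical batch: two images of patient p1 and one image of patient p2.
ids = ["p1", "p1", "p2"]
types = ["hypertrophic", "hypertrophic", "linear flat"]
well_separated = similarity_losses([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]], ids, types)
poorly_separated = similarity_losses([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]], ids, types)
```

In the first batch, the two images of the same patient share a feature direction and differ from the other scar type, so all three terms vanish; in the second, each term is positive and would push the features toward the first configuration.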

4. Primary Outcome and Measurement

The area under the receiver operating characteristic curve (AUROC) was the primary outcome for measuring the performance of the models. Sensitivity, specificity, precision, F1-score, and accuracy were also evaluated. To assess the variation in model confidence, we analyzed the confidence scores across multiple images (multi-instance images) of the same lesion per patient. All statistics were reported using point estimates and 95% confidence intervals (CIs). Data were analyzed and visualized using Python 3.7.0 (Python Software Foundation).
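
As an illustration of the primary outcome, the AUROC for one scar type against the rest can be computed as the probability that a randomly chosen positive image receives a higher confidence score than a randomly chosen negative one. The sketch below is a generic implementation with hypothetical toy scores, not the study code. It also checks that the unweighted mean of the four per-subtype AUROCs reported in the Results reproduces the reported mean AUROC.

```python
def auroc(scores, labels):
    """AUROC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical one-vs-rest scores for a single scar type.
toy_auc = auroc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])  # 3 of 4 pairs ranked correctly

def unweighted_mean(values):
    return sum(values) / len(values)

# Per-subtype AUROCs from the Results
# (hypertrophic, linear flat, linear bulging, adhesive).
mcpl_mean = round(unweighted_mean([0.915, 0.880, 0.879, 0.869]), 3)      # 0.886
baseline_mean = round(unweighted_mean([0.912, 0.841, 0.884, 0.787]), 3)  # 0.856
```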

4.1. Explanatory Deep Learning Model

We employed a class activation map (CAM) to explain the predictions of our deep learning model [27]. A CAM provides an intuitive visualization of the image regions that a deep learning model considers important for a particular class. It thereby explains the decision-making process of the model and helps to increase its reliability. Using the CAM, we analyzed the confidence difference between multiple images of one lesion in the MCPL model.
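
A minimal sketch of how such a map can be computed is given below, assuming the original CAM formulation, in which the map for a class is the sum of the final convolutional feature maps weighted by that class's weights in the last fully connected layer. The function name and the 2 × 2 toy maps are hypothetical; the variant used in the study may differ.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps (each H x W) for one class.

    feature_maps: list of K maps, each a list of H rows with W values.
    class_weights: list of K weights linking each map to the class score.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam

# Hypothetical example: two 2 x 2 feature maps and their class weights.
maps = [
    [[1.0, 0.0], [0.0, 0.0]],  # responds to the top-left region
    [[0.0, 0.0], [0.0, 1.0]],  # responds to the bottom-right region
]
cam = class_activation_map(maps, [2.0, -1.0])
```

Here the top-left region supports the class and the bottom-right region counts against it; after upsampling to the image size, high values would be rendered in red and low values in blue.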

4.2. Decision Study

We conducted a decision study to determine the effectiveness of the MCPL model in helping physicians determine morphological scar subtypes. Figure S4 shows the decision-making process for the decision study. Five physicians were recruited, and 100 images (human set) were extracted from the test dataset for the decision study. The physicians first determined the scar type by viewing the image alone (Phase 1); 2 weeks later, they repeated the assessment with the confidence score of the MCPL model available (Phase 2). Figure S5 shows an example of the decision study Google Survey distributed to physicians.

5. Results

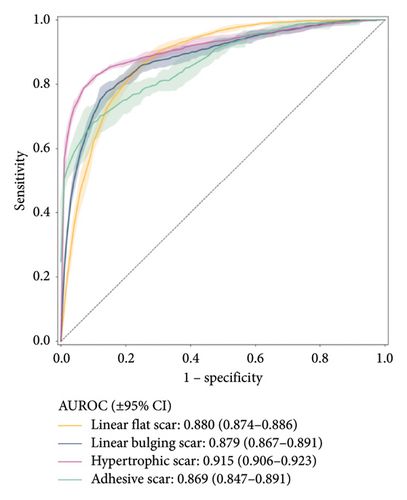

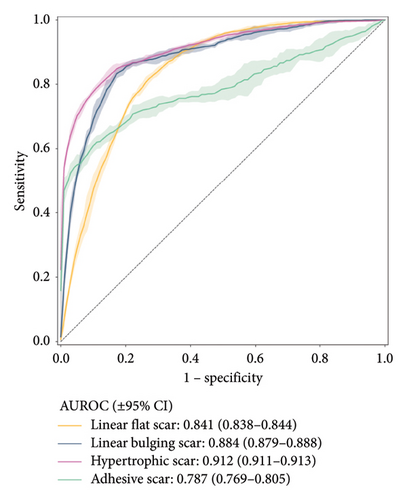

Figure 2 shows the receiver operating characteristic curve for each scar type. Among the four subtypes, the MCPL and baseline models exhibit the highest performance in classifying hypertrophic scars (AUROC: 0.915 vs. 0.912; 95% CI: 0.906–0.923 vs. 0.911–0.913), followed by linear flat scars (AUROC: 0.880 vs. 0.841; 95% CI: 0.874–0.886 vs. 0.838–0.844), linear bulging scars (AUROC: 0.879 vs. 0.884; 95% CI: 0.867–0.891 vs. 0.879–0.888), and adhesive scars (AUROC: 0.869 vs. 0.787; 95% CI: 0.847–0.891 vs. 0.769–0.805). In terms of mean AUROC and accuracy, the MCPL model (mean AUROC: 0.886 and accuracy: 76.312) outperforms the baseline model (mean AUROC: 0.856 and accuracy: 72.624). Details of the other metrics, such as precision, recall, specificity, and F1-score, are listed in Table S2. In addition, we used the Inception V3 model [28], and the results demonstrated that it performed similarly to the ResNet 50 model. This is shown in Figure S6 (supporting information).

6. Confidence Variation of Multi-Instance Images

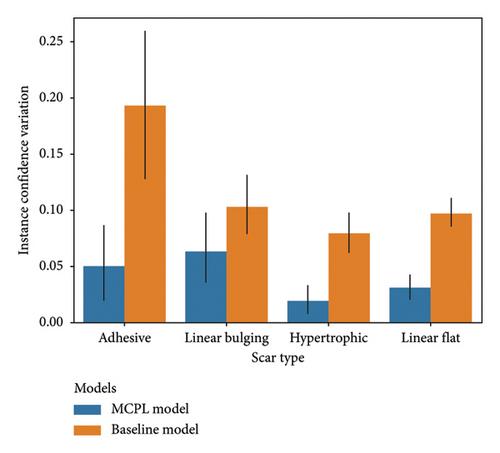

Figure 3(a) shows that both the MCPL and baseline models exhibit variations in instance confidence. For adhesive scars, these variations were 0.049 (95% CI: 0.015–0.084) in the MCPL model and 0.193 (95% CI: 0.124–0.261) in the baseline model. For the linear bulging scars, the variations were 0.063 (95% CI: 0.033–0.093) in the MCPL model and 0.103 (95% CI: 0.077–0.129) in the baseline model. For the hypertrophic scars, the variations were 0.019 (95% CI: 0.008–0.030) in the MCPL model and 0.079 (95% CI: 0.063–0.095) in the baseline model. For the linear flat scars, the variations were 0.031 (95% CI: 0.021–0.041) in the MCPL model and 0.097 (95% CI: 0.086–0.108) in the baseline model. Using the MCPL model, all the variations were lower than those obtained using the baseline model.

7. Performance Based on Decision Methods

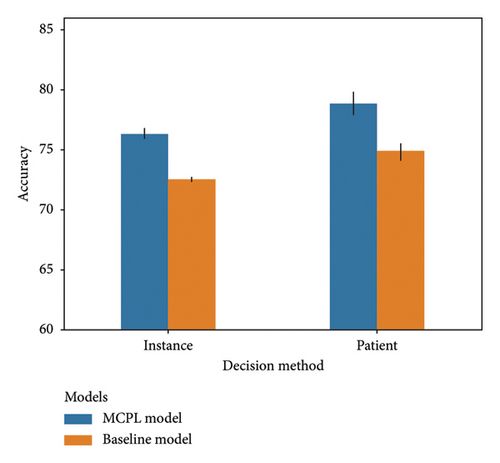

The bar chart in Figure 3(b) compares the accuracy of two prediction methods using data from patients with two or more images. The instance-based prediction method measures the accuracy on individual images, whereas the patient-based prediction method determines the prediction from the arithmetic mean of the confidence scores of each patient's images. Moving from instance-based to patient-based prediction, the accuracy of the baseline model improved from 72.519 (95% CI: 72.380–72.657) to 74.882 (95% CI: 73.933–75.831), and that of the MCPL model increased from 76.334 (95% CI: 75.848–76.820) to 78.817 (95% CI: 77.427–80.206).
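
The patient-based prediction method can be sketched as follows: the per-image confidence vectors of a patient are averaged, and the class with the highest mean confidence is taken as the prediction. The function name and the confidence values are hypothetical.

```python
from collections import defaultdict

def patient_based_predictions(confidences, patient_ids):
    """Average each patient's per-image confidence vectors and take the argmax."""
    grouped = defaultdict(list)
    for conf, pid in zip(confidences, patient_ids):
        grouped[pid].append(conf)
    predictions = {}
    for pid, confs in grouped.items():
        # Column-wise arithmetic mean over the patient's images.
        mean_conf = [sum(col) / len(confs) for col in zip(*confs)]
        predictions[pid] = max(range(len(mean_conf)), key=mean_conf.__getitem__)
    return predictions

# Hypothetical confidence scores over four scar types for two patients.
confidences = [
    [0.6, 0.4, 0.0, 0.0],  # patient A, image 1 -> class 0
    [0.4, 0.6, 0.0, 0.0],  # patient A, image 2 -> class 1 (disagrees)
    [0.7, 0.3, 0.0, 0.0],  # patient A, image 3 -> class 0
    [0.1, 0.1, 0.7, 0.1],  # patient B, image 1 -> class 2
    [0.2, 0.1, 0.6, 0.1],  # patient B, image 2 -> class 2
]
patients = ["A", "A", "A", "B", "B"]
predictions = patient_based_predictions(confidences, patients)
```

For patient A, the instance-based method yields one deviating prediction, whereas averaging the confidence scores gives a single prediction of class 0 for the patient.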

7.1. Explanatory Deep Learning Model

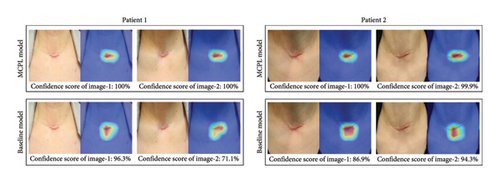

Figure 3(c) shows the CAM results obtained using both the MCPL and baseline models. The color CAMs use red to highlight areas important in the decision-making process of the model and blue to highlight the areas considered less significant.

7.2. Augmented Decision Making

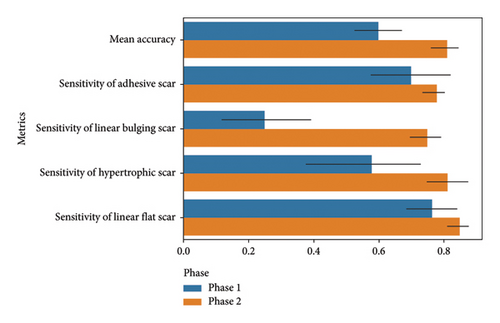

Figure 4 summarizes the results of the physicians in each phase. In Phase 1, the mean accuracies of the physicians were 0.600 (95% CI: 0.485–0.715), 0.250 (95% CI: 0.031–0.469), 0.580 (95% CI: 0.309–0.851), and 0.765 (95% CI: 0.646–0.884) for adhesive, linear bulging, hypertrophic, and linear flat scars, respectively. In Phase 2, with the inclusion of the confidence score of the MCPL model, the mean accuracies improved to 0.812 (95% CI: 0.751–0.873), 0.750 (95% CI: 0.674–0.826), 0.813 (95% CI: 0.718–0.909), and 0.850 (95% CI: 0.796–0.904) for adhesive, linear bulging, hypertrophic, and linear flat scars, respectively, demonstrating a significant improvement in accuracy. Fleiss kappa and an agreement heatmap (Figure S7) were utilized to assess the agreement reliability among the five physicians, yielding kappa values of 0.349 (95% CI: 0.207–0.492) for Phase 1 and 0.778 (95% CI: 0.674–0.881) for Phase 2.
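
For reference, Fleiss' kappa for a fixed number of raters can be computed from a table that counts, for each image, how many raters chose each category. The sketch below implements the standard formula; the rating tables are hypothetical and do not reproduce the study data.

```python
def fleiss_kappa(ratings):
    """Fleiss' kappa; ratings[i][j] = number of raters assigning item i to category j.

    Every item must be rated by the same number of raters.
    """
    n_items = len(ratings)
    n_raters = sum(ratings[0])
    n_categories = len(ratings[0])
    # Share of all assignments that fell into each category.
    p_j = [sum(row[j] for row in ratings) / (n_items * n_raters)
           for j in range(n_categories)]
    # Per-item agreement: fraction of rater pairs that agree on the item.
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
           for row in ratings]
    p_bar = sum(p_i) / n_items        # observed agreement
    p_e = sum(p * p for p in p_j)     # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e)

# Hypothetical tables: five raters, four scar types, two images each.
perfect = fleiss_kappa([[5, 0, 0, 0], [0, 5, 0, 0]])
split_vote = fleiss_kappa([[3, 2, 0, 0], [2, 3, 0, 0]])
```

A value of 1 indicates complete agreement, and values at or below 0 indicate agreement no better than chance, as in the second table where the raters are split on every image.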

8. Discussion

This study showed that the models performed reasonably well in classifying the subtypes of post-thyroidectomy scars compared with previous dermatologic applications [29]. Multiple medical images of patients with the same pathology, such as retinal fundus images or mammograms, often show similar features [24]. These similarities are observed even when images are taken from different perspectives of the same lesion. Consequently, the features extracted from multiple images using a deep learning model may be comparable. Specifically, we hypothesized that the MCPL method, which utilizes multiple images of the same lesion, could reduce confidence variations in the assessment of post-thyroidectomy scars using a deep learning model. Although the AUROC (0.879 vs. 0.884; 95% CI: 0.867–0.891 vs. 0.879–0.888) for linear bulging scars showed a slight decrease, the performance on the other three scar subtypes improved, particularly for adhesive scars (AUROC: 0.869 vs. 0.787; 95% CI: 0.847–0.891 vs. 0.769–0.805). The MCPL model demonstrated superior performance in predicting hypertrophic scars (AUROC: 0.915; 95% CI: 0.906–0.923) compared with the other types, probably owing to the distinct red-to-pinkish coloration of these scars. The predictive performance for linear flat scars (AUROC: 0.880; 95% CI: 0.874–0.886) and linear bulging scars (AUROC: 0.879; 95% CI: 0.867–0.891) was moderate, with discrepancies observed in cases of mild hypertrophic changes or bulging. These discrepancies were also noted among the human experts, potentially contributing to the variance. In the decision study involving five physicians, diagnostic accuracy improved significantly in Phase 2, in which predictions were made with reference to the MCPL model (mean accuracy: 0.812; 95% CI: 0.751–0.873), compared with Phase 1, in which predictions were based only on images (mean accuracy: 0.600; 95% CI: 0.485–0.715; p value < 0.05). The MCPL method significantly reduced the confidence variation for all scar types across multiple images of the same scar for each patient. The CAM results confirmed that the MCPL model consistently identified the same lesion areas across multiple images. Moreover, the patient-based prediction method showed enhanced accuracy compared with the instance-based prediction when determining the scar subtype of a patient (MCPL: 76.334 [95% CI: 75.848–76.820] to 78.817 [95% CI: 77.427–80.206]).

Classifying the subtypes of post-thyroidectomy scars in dermatology is crucial because each patient has different treatment needs based on their scar subtype. For example, patients with hypertrophic scars, for which this model performed best, tended to be treated for color in addition to protrusion. This is because this scar subtype shows a distinct erythematous color compared with other subtypes. Intralesional corticosteroid injection, particularly with triamcinolone acetonide (TCA), is a common treatment for hypertrophic scars. TCA is effective because of its antimitotic effects on keratinocytes and fibroblasts, which contribute to scar protrusion [30]. Additionally, it induces a vasoconstrictive effect on scars, limiting the delivery of oxygen and nutrients, which are known to promote scar proliferation [31]. Therefore, TCA injection is considered the main treatment for hypertrophic scars, and it has shown a good effect when used in combination with other therapeutic options such as copper bromide laser and ablative carbon dioxide laser [32, 33]. However, TCA injection within scars can induce side effects such as atrophy, pigmentary changes, and telangiectasia, which can accentuate other features that patients want to treat. Therefore, such patients must undergo other therapeutic options such as laser treatment [34]. For scars characterized by contraction and retraction, fractional ablative carbon dioxide laser or subcision treatment is preferred [35–37]. These therapeutic needs of patients are well represented in the Vancouver Scar Scale (VSS), the most widely used tool to assess the quality of a scar. The VSS consists of four scar characteristics (height, pliability, pigmentation, and vascularity) and yields a semiquantitative score ranging from 0 to 13 points. In a previous study, although the specific scar characteristics that scored differently for each thyroid scar subtype were not identified, the VSS scores differed significantly among the thyroid scar subtypes, suggesting that each subtype has different characteristics that require treatment [1].

Recently, artificial intelligence has been increasingly integrated into dermatology across various medical modalities, demonstrating capabilities comparable to or surpassing those of dermatologists for diagnosis and classification [38–41]. A recent study showed that a CNN model classifying postoperative scars by severity achieved expert-level performance [42]. As dermatologists encounter many patients with post-thyroidectomy scars in real-world clinics and the classification of scar subtypes is often ambiguous, they need a more objective assessment of scars beyond visual and physical examination. This deep learning model reliably assessed the morphological characteristics of post-thyroidectomy scars, and physicians demonstrated improved accuracy and sensitivity in classifying scar subtypes after augmented decision-making. We believe that these findings can significantly contribute to medical practice, from diagnosis to therapeutic planning, for post-thyroidectomy scars.

This study had some limitations. The clinical photographs were obtained at a single tertiary institution, and the models were not validated using external data. Additionally, the skin type of most of the study population was either III or IV on the Fitzpatrick scale. Hence, the performance may be suboptimal for patients with other skin types.

9. Conclusion

In this study, we developed a deep learning model to distinguish between post-thyroidectomy morphological scar subtypes. A total of 7524 scar images from 3565 patients were used, and the datasets were organized for each of the four scar subtypes. In particular, we used the MCPL method, considering that multiple images of the same lesion are often available. The MCPL method not only improved the performance of the baseline model but also significantly reduced the confidence variation when assessing multiple images of the same patient. In addition, incorporating the deep learning model into decision-making markedly improved the diagnostic accuracy of physicians and the reliability of agreement between their assessments. We expect that these deep learning models will be instrumental in aiding the evaluation and treatment planning of post-thyroidectomy scars, thereby enhancing both diagnostic precision and therapeutic outcomes.

Disclosure

This manuscript was presented at the 18th Congress of the Asian Association of Endocrine Surgeons—AsAES 2023.

Conflicts of Interest

The authors declare no conflicts of interest.

Author Contributions

Yuseong Chu: formal analysis, methodology, software, visualization, writing–original draft.

Seung-Won Jung: data curation, investigation, methodology, writing–original draft.

Solam Lee: conceptualization, data curation, writing–original draft.

Sang Gyun Lee: data curation, resources.

Yeon-Woo Heo: investigation, resources.

Sang-Hoon Lee: investigation, resources.

Hang-Seok Chang: data curation, resources.

Yong Sang Lee: data curation, resources.

Seok-Mo Kim: data curation, resources.

Sang Eun Lee: data curation, resources.

Byungho Oh: methodology, writing–review and editing.

Mi Ryung Roh: conceptualization, funding acquisition, project administration, writing–review and editing.

Sejung Yang: conceptualization, funding acquisition, supervision, writing–review and editing.

Yuseong Chu and Seung-Won Jung contributed equally to this work.

Funding

This study was funded by the National Research Foundation of Korea (NRF) through grants from the Ministry of Science and ICT (NRF-2022R1A2C2091160, NRF-2021R1A2C1094638) and supported by the “Regional Innovation Strategy (RIS)” program, funded by the Ministry of Education (MOE) and managed through the NRF (2022RIS-005).

Supporting Information

Figure S1. Post-thyroidectomy scar subtypes: multiple images per patient.

Figure S2. Example of convolutional neural network analysis process to classify scar subtypes of post-thyroidectomy scar images. Initially, low-level features are extracted from the feature extractor, and as it becomes deeper, high-level features are extracted. Based on the extracted features, the fully connected layer multiplies the weight of each node to create the prediction (output).

Figure S3. Multiple clinical photography learning (MCPL) process.

Figure S4. Schematic flow of decision study. The study was divided into two phases to assess the diagnostic performance of physicians. In Phase 1, the physician determined the morphological type by looking at the scar image alone; in Phase 2, the physician made a decision by referring to the scar image and AI results. An example of the Google survey used in the decision study can be found in Figure S5.

Figure S5. Example of a decision study distributed to physicians. (a) Example of Phase 1. (b) Example of Phase 2.

Figure S6. Receiver operating characteristics of post-thyroidectomy scar prediction using Inception V3 model: (a) MCPL model. (b) Baseline model.

Figure S7. Agreement heatmap between physicians at each phase. (a) Agreement heatmap of Phase 1. (b) Agreement heatmap of Phase 2.

Table S1. Comparison of diagnostic sensitivity and specificity of physicians with and without AI assistance in skin cancer detection.

Table S2. Comparison of the performance between the baseline and MCPL model.

Open Research

Data Availability Statement

The data reported in this study are available from the corresponding author upon reasonable request. Also, the source code for this work is available at https://github.com/wormschu/multiple-clinical-photography-learning-/.