Complexity Engineering: How Subjective Issues Become Objective

Abstract

This study details the substantial technological progress of the last few decades, its impact on engineering, and how machine learning and data science can contribute to solving human problems. The objective is to establish the principles of “complexity engineering,” based on the works of Edgar Morin, and to demonstrate how these principles enable engineering to deal with complex systems and the wicked problems linked to them. To this end, a history of the events, discoveries, and disruptive inventions that marked engineering in recent centuries is first presented, followed by conceptual considerations and practical applications of complexity engineering in different areas of knowledge. The idea is to provide a historical perspective on engineering development, which started before the advent of scientific methodology and was based on accurate observation of natural behaviors. Since Galileo’s and Newton’s objective vision, scientific progress has strongly influenced engineering progress, leading to the creation of unthinkable wonders, making space travel possible, and, above all, providing a more comfortable daily life. However, two important new issues have emerged: how to reconcile this progress with life on Earth, and how to use the available big data to improve engineering methodology. In this study, these questions are discussed, and we show that objectively obtained big data can be used to address subjective human problems, creating a new discipline called complexity engineering. Applications of complexity engineering to real problems that require massive use of data are presented using examples of research developed by the authors in urban planning, mental health, and landscape ecology.

1. Introduction

Engineering as a human endeavor has a long history marked by disruptive events, discoveries, and inventions that changed the ways of understanding the world and the universe and acting on them. The virtuous cycle between advances in engineering and science was gradually consolidated, being significantly influenced by paradigms that shaped the worldviews of engineers and scientists at each time.

Since prehistoric times, engineering has been a part of human life and has led to the development of weapons for hunting and tools to convert hunted animals into food.1 Shelters were created to protect against bad weather, and fire was mastered, allowing food to be cooked and providing the heat needed to guarantee living conditions [2, 3].2 The First Agricultural Revolution, which marks the change from the hunter-gatherer way of life to that of the sedentary farmer, required our ancestors to develop new tools and new ways of occupying geographic space suited to this transition in the history of humanity [5].

In the great ancient civilizations, engineering played a fundamental role in the consolidation and expansion of their empires. In Egyptian civilization, the building industry developed through remarkable constructions and the invention of cement, combining polishing materials with gypsum and water. The Greeks improved building techniques by combining aesthetics with construction skills and used precision cutting techniques to build columns and large structures that have survived from the 7th century B.C. to the present [6, 7].

All such works were carried out according to the best engineering practices of their time, and monuments were built without knowledge of the laws of physics, relying only on accurate observation of nature and meticulous techniques [6]. Following this heritage, the Romans invented concrete and developed advanced building technologies for large structures. In addition, urban concepts began to appear, with water distribution and roads playing an important role in improving human life [6, 8].

The XVI century can be viewed as the period of transition from empirical engineering to a scientific way of thinking about problems, using formal mathematical ideas and early formulations of natural laws. Simple machines, such as pulleys, gears, and levers, were used for power transmission and force multiplication [8]. Inspired by the vision of the Scientific Revolution then under way, engineering adopted the mechanistic-deterministic paradigm as its guide and the mastery of nature as its objective.

Such a paradigm, focused on the isolated understanding of each part of a system and the conception that the whole is simply the sum of these parts [9], has often caused engineers and scientists to interpret challenges as if they were “tame” or “benign” and not as “wicked” problems [10].

However, the problems that humanity currently faces are increasingly complex and interconnected, requiring a new approach to finding solutions. A new way of thinking, called the “complexity paradigm,” has proven to be a suitable worldview for this purpose. Complexity ideas are pervading science in the XXI century, as evidenced by the 2021 Nobel Prize in Physics [11], which recognized a multidisciplinary way of pursuing science that must be extended to technological issues, legitimizing the concept of “complexity engineering” [12, 13].

The objective of this study is to establish the principles of “complexity engineering” based on the works of Edgar Morin [14, 15]. As pointed out by Piqueira & Mascarenhas [16], complexity engineering is “a type of engineering, of multi and transdisciplinary origin, considering the nonlinear and chaotic dynamics of physical and biological systems, combined with the immense computational capacity currently available”. Complexity engineering is thus a way for engineers to think about and act on complex systems, and the wicked problems linked to them, from a systemic perspective. Accordingly, a history of the events, discoveries, and disruptive inventions that marked engineering in recent centuries is first presented (Section 2), and the following sections present conceptual considerations and practical applications of complexity engineering in different areas of knowledge.

In Section 3, these principles are described in connection with a case study of design and building processes, considering that they are composed of open systems [17], have nonlinearities and noise [18], and lead to the emergence of unexpected and self-organized behavior [19]. In turn, Section 4 focuses on one of the pillars of artificial intelligence (AI), highlighting how the theoretical-conceptual bases and advances in computer engineering are applied to machine learning (ML).

Next, Section 5 describes ways to measure complexity using concepts derived from Claude E. Shannon’s information theory [20], followed by Section 6, which explains a method to formulate an engineering problem in a complex manner and presents two examples, one from behavioral science and the other from environmental science, showing the diversity of possible applications of complexity engineering tools to convert human issues into objective variables.

2. Engineering and Its Fruitful History in Partnership With Sciences and Mathematics

As previously pointed out, the XVI century marks a change from empirical engineering to one strongly based on science and mathematics.

In the XVII and XVIII centuries, hegemonic nations created military academies where building techniques were taught and developed, allowing skilled armies to build ships, roads, bridges, and weapons essential for dominating new, unknown territories [8]. At that time, engineering knowledge was used to build on and modify nature, bringing comfort and quality of life to human beings from a naturalist perspective. Rational interpretations guided engineering, and Galileo’s scientific method began to be used to systematize engineering knowledge as a discipline based on mathematics and the emerging physics [21]. French scientists such as Poisson, Navier, Coriolis, Poncelet, and Monge defined a technological approach based on scientific knowledge, closely associated with the École Polytechnique, founded in Paris in 1794, where research and engineering activities were conducted with theoretical systematization [22].

In addition, in 1794 in Paris, the École des Mines started to teach and develop technological disciplines for exploring mineral resources scientifically. Combined with the rise of positivism, this turned engineering into a respected profession, and many new schools were created worldwide: Prague (1806), Vienna (1815), Karlsruhe (1825), Munich (1827), MIT (1865), Carnegie Mellon (1905), and Caltech (1919) [21].

As a consequence of the strong industrial development based on thermal machines, such a technological environment improved the automation of production processes and transformed the means of mass transportation. In addition, the automotive industry was born, and individual mobility began to change urban life all over the world [22].

Simultaneously, electromagnetic induction, discovered by Michael Faraday (1791–1867), made it possible to produce electric currents by varying magnetic flux. Owing to the work of Nikola Tesla (1856–1943), electromagnetism was used to build electrical machines, allowing large-scale generation and transmission of energy over geographically distributed regions [23]. Combined with the automotive industry, electrical power distribution facilities modified the means of production and transformed daily life in urban and rural areas [24]. In 1899, Guglielmo Marconi (1874–1937) achieved the first wireless communication across the English Channel [25]. This historic achievement built on the ideas of Faraday and Tesla and the experiments of Heinrich Hertz (1857–1894), and involved the remarkably general Maxwell equations (James Clerk Maxwell, 1831–1879).

As a consequence of the new facilities for energy distribution and communication, the 20th century experienced remarkable development in materials science, which was the building block for large infrastructure projects, industrial manufacturing, all modes of transportation, more productive crops, and health care, ensuring a more comfortable daily life [26]. Halfway through the same century, the Manhattan Project, with its scientific work led by Julius Robert Oppenheimer, showed how engineering and science, combined with the development of computing technology [22, 25], can come together to design and execute a large project, and demonstrated that neither is separate from society and that both need to be based on ethical principles [27]. Thus, although this project fulfilled the “art of directing the great sources of power in nature for the use and convenience of man” (the objective of civil engineering stated in the 1828 Royal Charter of the Institution of Civil Engineers [28]), its consequences for humanity were strongly contested, including by Oppenheimer himself.

This new scientific and technological environment, together with the dilemmas it raised, fed back into the development of ideas, so that the production of knowledge as a whole can be modeled as a general feedback system [7, 29, 30]. A new scientific paradigm, nowadays called “complexity,” emerged from the work of many thinkers around the world and, combined with data science, has been producing an important methodological revolution [31].

3. Complexity Engineering Principles

The conventional approach to engineering problems is based on the disjunction principle: the problem is viewed as a system composed of interacting parts that are studied independently, with the interactions defined by the technical specifications of each part [32].

Adapting the advances in systems theory proposed by Morin [14], the engineering design process can be improved by considering the internal interactions of the system more accurately, including the inherent nonlinearities and stochastic behavior of its parts [31]. Such thinking allows the prediction of bifurcation cascades caused by long-term parameter variations, helping to avoid spurious oscillations and chaotic behaviors [19], and the establishment of precise models for perturbations and noise [33].
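A bifurcation cascade can be seen in a standard textbook toy, the logistic map x(n+1) = r·x(n)·(1 - x(n)). It is not a model used by the authors. Slowly increasing the single parameter r changes the long-term behavior from a stable equilibrium to period-2 and period-4 oscillations and eventually to chaos. The sketch below estimates the period of the attractor for a given r. The function names, initial condition, transient length, and tolerance are illustrative assumptions.

```python
def logistic_orbit(r, x0=0.2, transient=2000, keep=64):
    """Iterate x -> r*x*(1-x), discard the transient, return the next `keep` values."""
    x = x0
    for _ in range(transient):
        x = r * x * (1.0 - x)
    orbit = []
    for _ in range(keep):
        x = r * x * (1.0 - x)
        orbit.append(x)
    return orbit


def attractor_period(r, tol=1e-9, max_period=32):
    """Smallest p such that the post-transient orbit repeats every p steps.

    Returns None when no period up to `max_period` is found, which is the
    expected outcome in the chaotic regime.
    """
    orbit = logistic_orbit(r)
    for p in range(1, max_period + 1):
        if all(abs(orbit[i + p] - orbit[i]) < tol for i in range(len(orbit) - p)):
            return p
    return None


if __name__ == "__main__":
    for r in (2.8, 3.2, 3.5, 3.9):
        print(r, attractor_period(r))
```

With these settings, the detected periods for r = 2.8, 3.2 and 3.5 should be 1, 2 and 4. For r = 3.9, which lies in the chaotic regime, no short period should be found. This mirrors the doubling sequence that precedes chaos.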

Extending this approach to the interactions between a system and its environment permits modeling of the possible mutual effects of physical, chemical, biological, economic, and social phenomena [11–13, 34], bringing engineering problems closer to the concept of a wicked problem, which emerged to characterize problems related to social policy [10].

As mentioned earlier, complexity theory can be applied to engineering problems and accounts for the possible emergence of unexpected behaviors caused by internal nonlinearities and randomness [35]. In addition, the system must be conceived as open, with all external constraints playing a role in the design [36]. Scaling laws are another fingerprint of complex systems and can be detected by multiscale approaches [37].

It must be noted that complexity, in this context, has a connotative meaning that differs from the usual ideas of structural complication and models with many equations; it is related to a way of seeing an object as open, nonlinear, stochastic, and self-organized [38].

3.1. Elevated Road as an Archetype of Complexity Engineering

An elevated road, a highly complex engineering construction, can be used to illustrate these concepts. The design process begins with the need to connect two places separated by a geographic factor that prevents or hinders the movement of vehicles and people. In the initial phase, economic, social, environmental, and financial constraints determine the location and the maximum allowed budget, which define the first specifications for the design process.

Possible natural forces, permissible traffic loads, and geometric factors need to be considered in the calculations. Internal forces and possible atmospheric variations determine the types of materials, beams, pillars, paving, and structural supports. This phase is followed by the executive project, in which material and labor costs are detailed to translate ideas from paper to the real world. Construction is demanding, and constant follow-up is needed to solve unexpected and unavoidable problems during implementation.

After inauguration, elevated roads must be maintained, with constant measurements from position and load sensors. Such monitoring allows faults to be prevented and corrected. Hence, conceiving, designing, building, and maintaining a bridge is clearly a complex (complicated) engineering problem.

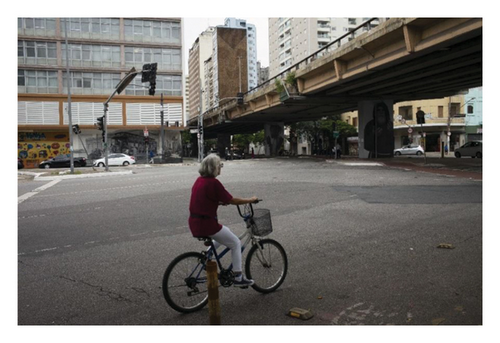

An emblematic example of this type of work is shown in Figure 1: a lateral view of the “Elevado João Goulart,” built in downtown São Paulo at the end of the 1960s, which is still well maintained and operational.

From this point of view, which focuses on the good operational behavior of the elevated road as a robust structure to resolve traffic issues, it was designed and built as a closed system, without considering several environmental and human issues.

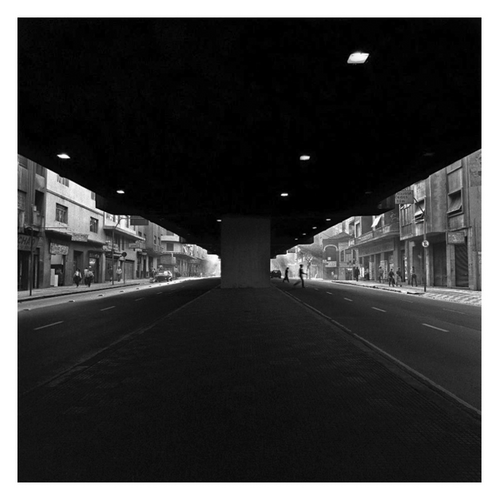

However, as shown in Figure 2, the elevated road was built in a populated region, where it caused noise, traffic jams, and pollution that degraded life in the neighborhood. This gave rise to the first ideas of considering the “Elevado João Goulart” as an open system. New variables related to the interactions between the “Elevado” and its environment became important to the population’s health: air-quality degradation and acoustic noise can increase the prevalence of cardiorespiratory diseases and hearing loss.

As a new engineering facility is intended to improve life, these variables must be considered when a project is conceived. At that time, however, it was very difficult to consider these factors because databases were insufficient, access to computers was very restricted, and only subjective ideas could be drawn. Currently, many computational and communication algorithms and databases allow epidemiological and environmental data to be converted into objective boundary conditions for conceiving and implementing a new engineering work [39].

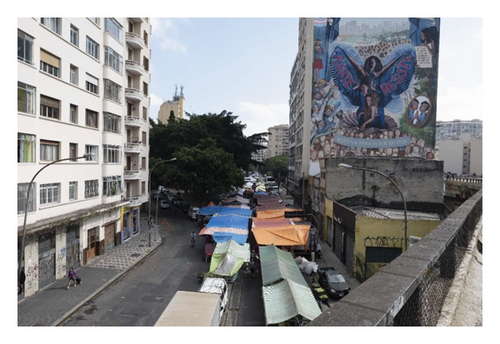

In addition, in the case of the “Elevado,” economic and social effects appeared because of hidden stochastic and nonlinear variables [40], leading to radical changes in the area under the “Elevado,” creating pockets of poverty and degrading local commerce (Figure 3).

However, self-organization [33], an important natural and human phenomenon, also operated positively. People living around the “Elevado” pressured the local administration to close the road to traffic on weekends, turning it into a recreational space (Figure 4). This led to a decrease in pollution and improved the inhabitants’ quality of life.

Another self-organized initiative was the creation of a low-cost open market (“feira livre”) (Figure 5), which provided food suited to the purchasing power of the neighborhood. Transformations in the “Elevado João Goulart” are part of a project to requalify the central region of São Paulo, which includes the “Belvedere Roosevelt” lookout.

The lookout is a remnant area of the structure designed to connect the “Elevado” with other streets, and it will have rain gardens. The use of this nature-based solution demonstrates a shift from engineering solutions based exclusively on gray infrastructure to the inclusion of so-called blue and green infrastructure.

As presented in the following sections through two case studies, methods and measures for evaluating complex systems, sometimes supported by AI, can be used to evaluate changes in quality of life and environmental quality over time and space around the elevated road.

In a nutshell, by observing the “Elevado João Goulart” and its neighborhood from its conception up to now, it is possible to understand an engineering action from a complexity perspective: open, nonlinear, stochastic, and self-organizing systems. The issue of an excess of subjective variables can be addressed using new AI procedures.

4. Machine Learning

In the past, anonymous engineering designers and workers learned techniques based on empirical data and the sensing of reality to build the Egyptian pyramids, Greek monuments, and Roman urban facilities. Likewise, in modern times, ML techniques have allowed the exploration of important aspects of phenomena that current science does not yet master.

The seeds of the ML revolution can be found in Alan Turing’s seminal papers [41–45], published in the middle of the 20th century, whose ideas were later empowered by extreme electronic miniaturization and solid-state memories. Consequently, the main obstacles faced in Turing’s time, namely low computational speed and limited magnetic memories, have been overcome by very fast processors and high-capacity memory.

During World War II, on December 8th, 1943, the first electronic digital computer (Colossus) began operating. It was built by Thomas Flowers’ engineering team at the Post Office Research Station in Dollis Hill, London [41]. As Jack Copeland [41] pointed out, Colossus contained 1600 electronic valves, while von Neumann’s IAS computer, built at the Institute for Advanced Study in Princeton in 1952, had 2600.

However, Colossus lacked two important features: it could not store programs internally, and it was not a general-purpose machine, as it was built for cryptanalytic tasks. In this context, on October 1st, 1945, Turing started to work at the Mathematics Division of the National Physical Laboratory (NPL) at Teddington, London [41], seeking to build a general-purpose computing machine as a concrete form of the Turing machine (TM) [46].

Turing’s approach to building the machine differed from that of von Neumann [47]: he sought to minimize additional hardware and to favor software, that is, to achieve complex behavior through programming rather than through complex hardware. In 1947, Sir Charles Galton Darwin, the Director of the NPL, worried about the slow progress in building the machine proposed by Turing, authorized him to spend a sabbatical at Cambridge to improve the theoretical basis of the whole work [42].

Despite facing technical and religious prejudices, Turing presented the results of the sabbatical in 1948 in a report titled “Intelligent Machinery” [42], which was complemented by the seminal paper “Computing Machinery and Intelligence” [43], published in the journal “Mind” in 1950.

The Turing test (imitation game) was described by combining software principles with the concept of computable numbers [48]. Mathematical and technological criticisms were then answered with the proposal of a robust architecture, even considering Gödel’s “Incompleteness theorem” [44, 49]. These ideas were developed with the decisive participation of Alonzo Church and led to the “Church-Turing thesis” [44, 45].

Turing’s ideas about natural and artificial intelligence, mainly reported in References [41, 45], have been converted into real computer systems and devices. These have been supported by the development of cheap memory devices and fast processors, owing to strong electronic miniaturization at the end of the 20th and the beginning of the 21st century.

Expert systems are ML programs that can provide advice in specific fields of knowledge [50], such as medical diagnosis or corporate planning [42]. These systems consist of a database complemented by inference rules and a search engine [51]. The inference rules are engineered either from information obtained from human experts, building supervised learning (SL) systems, or from collected actual data, constituting unsupervised learning (UL) systems [39].
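The inference part of such a system can be sketched as forward chaining over if-then rules: rules fire repeatedly until no new fact can be derived. This is a minimal illustration. The rule format, the names `Rule` and `forward_chain`, and the small knowledge base about monitoring an elevated road are all hypothetical. They are not taken from the cited systems and are not engineering guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """IF all `conditions` hold THEN `conclusion` holds (hypothetical rule format)."""
    conditions: frozenset
    conclusion: str


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new fact can be derived (forward chaining)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical knowledge base: facts and rules invented for illustration only.
RULES = [
    Rule(frozenset({"load_above_limit", "strain_rising"}), "overload_suspected"),
    Rule(frozenset({"overload_suspected", "crack_detected"}), "structural_alert"),
    Rule(frozenset({"structural_alert"}), "schedule_inspection"),
]

if __name__ == "__main__":
    observed = {"load_above_limit", "strain_rising", "crack_detected"}
    print(sorted(forward_chain(observed, RULES) - observed))
```

In a real expert system, the rules would come from specialists or be induced from data, as the text describes. The chaining loop itself would stay essentially the same.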

Connectionism, another ML method, considers unorganized artificial networks as the simplest model of the central nervous system. This approach, described in McCulloch and Pitts’s work [52], suggests a learning process in which training adjusts the weights of the connections between neurons. The McCulloch-Pitts neuron, combined with the concept of a “B-type unorganized machine” [42], inspired the modeling of cognitive systems as artificial neural networks (ANNs) [53], that is, devices with self-organized learning.
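Weight adjustment in a threshold unit can be sketched as follows. The original McCulloch-Pitts neuron had fixed weights. The update rule used below is Rosenblatt's perceptron rule, a later development, and it stands in here to show how training can adjust connection weights. The function names and the learning rate are illustrative assumptions.

```python
def step(z):
    """Threshold activation of a McCulloch-Pitts-style unit."""
    return 1 if z >= 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights w and bias b with Rosenblatt's perceptron rule.

    samples: list of (inputs, target) pairs with binary inputs and targets.
    """
    n = len(samples[0][0])
    w = [0.0] * n
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            y = step(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = target - y
            if err != 0:
                # Strengthen or weaken each connection in proportion to its input.
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                errors += 1
        if errors == 0:  # every training sample is now classified correctly
            break
    return w, b


def predict(w, b, x):
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)


if __name__ == "__main__":
    AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    w, b = train_perceptron(AND)
    print([predict(w, b, x) for x, _ in AND])
```

A single threshold unit can learn only linearly separable functions such as AND or OR. Multilayer networks trained by back-propagation, mentioned later in the text, remove this limitation.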

Experiments with systems trained to learn language rules, such as correctly forming the past tense of irregular verbs [54], conducted in the mid-1980s, contributed to the popularization of connectionism strategies in ML development.

Currently, the two main approaches to ML and their combinations have resulted in many methods and strategies that can be applied to a wide range of problems. The process of designing an ML model begins with the type of task to be performed: “classification,” “regression,” or “regression-classification” [39].

Classification tasks are used to define the boundaries between classes, for instance, determining whether a tumor shown in an image is malignant [51]. In such cases, learning can be implemented either by a specialist physician who observes the data and adjusts the inference strategies of an expert system or by adjusting the weights of a neural network.

This study assumes that there is a reasonable set of available training data and that the ML process is engineered based on an SL mechanism. When training data are not available in advance, the criteria must be defined, and part of the working data is used to establish inference strategies or weights [50].

Regression tasks involve obtaining mathematical relations between relevant variables in a process that can be modeled by relating either objective, measurable variables or subjective human feelings, such as fashion preferences, happiness, mood states, or any other expressible sensation. Depending on the problem to be modeled, adequate scales must be established to show how subjective variables can be represented by objective measures [55, 56].

Regression and classification must be used together for certain types of problems in which the mathematical relationships among the problem variables define the class boundaries. Regression sets the classification ranges, with learning mechanisms that function similarly to the development of biological and social life [57, 58].
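A combined regression-classification task can be sketched in a few lines. An ordinary least-squares line relates an objective measurement x to a subjective score y. The point where the fitted line crosses a chosen score threshold then becomes the class boundary. The data, the threshold, the class labels, and the function names are hypothetical. The authors' own models (for example, neural networks) are not reproduced here.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def class_boundary(a, b, threshold):
    """x value at which the fitted line crosses the score threshold (assumes b != 0)."""
    return (threshold - a) / b


def classify(x, a, b, threshold):
    """Regression-classification: the regression output decides the class."""
    return "high" if a + b * x >= threshold else "low"


if __name__ == "__main__":
    # Hypothetical pairs: objective measurement vs. subjective score (0-10 scale).
    a, b = fit_line([50, 60, 70, 80], [2, 4, 6, 8])
    print(a, b, class_boundary(a, b, 5.0), classify(55, a, b, 5.0))
```

For these exactly linear example data, the fit is y = -8 + 0.2x, and the boundary for a score threshold of 5 lies at x = 65.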

In this context, ML algorithms such as the transformer deep-learning architecture [59] have enabled sophisticated implementations of regression, classification, and regression-classification tasks. Notable examples of this progress are the advances in natural language processing (NLP) showcased by the GPT-3.5 and GPT-4 models used to power ChatGPT [60].

5. Complexity Measures

The science of complexity involves several traditional disciplines: computation, ecology, economics, management, politics, and social sciences. Although life is governed by the laws of physics, physics cannot predict life [61].

All engineering activities involve interventions in nature aimed at improving life on the planet. A good project requires a nondisjunction complexity approach, considering complexity concepts, that is, circular causality, sensitivity to initial conditions, emergence, and unpredictability owing to an open system view [61].

Such reasoning requires consideration of variables and parameters that are essential to operate a project, even though it is difficult to associate them with quantitative figures. The classical statistical method can be used to treat time series, complemented by measures that discuss the unpredictability and self-organization of the entire system [62–64].

Several complexity measures originated in computer science. Such methods attempt to quantify the degree of complication of a program as well as the memory space needed to execute computational tasks [65].

Kolmogorov’s ideas about complexity measures are related to algorithms and conformal mapping [66, 67], represented by programs executed by a universal TM (UTM), which is the basis of “Algorithmic information theory (AIT)” [46]. Its main elements are as follows:

- The complexity K(x) of x is the length of the shortest program q(x) that generates x.
- q(x) comprises a finite set of binary instructions with a length of |q(x)| bits.
- q(x) can be implemented using a TM.

Then, K(x) = min|q(x)| is called the complexity of x and can be referred to as algorithmic information content, algorithmic complexity, algorithmic entropy, or Kolmogorov complexity [46].
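K(x) is not computable. In practice, the output length of a lossless compressor is often used as a crude upper-bound proxy, valid up to the constant size of the decompression program. The sketch below uses Python's standard `zlib` module for this purpose. This is a common heuristic, not part of the authors' method, and the function name is illustrative.

```python
import os
import zlib


def compressed_bits(data: bytes) -> int:
    """Length in bits of the zlib-compressed data: a computable upper-bound proxy for K(x).

    K(x) itself is uncomputable; a real compressor only gives an upper bound
    (up to the constant size of the decompression program).
    """
    return 8 * len(zlib.compress(data, 9))


if __name__ == "__main__":
    regular = b"01" * 5000       # highly regular: a short program can generate it
    noise = os.urandom(10000)    # incompressible with overwhelming probability
    print(compressed_bits(regular), compressed_bits(noise))
```

The regular string shrinks to a tiny fraction of its 80,000 bits. The random bytes stay close to their original length, which is consistent with their high algorithmic complexity.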

The definition in Equation (1) assumes finite discrete sources, and due care is required to define S for continuous sources and infinite domains, as shown in Reference [68].
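For reference, the Shannon entropy S of a finite discrete source with N possible states of probabilities p_i is conventionally written as shown below. Terms with p_i = 0 contribute zero. The base-2 logarithm, which measures S in bits, is a convention. The original Equation (1) may use a different base or notation.

```latex
S = -\sum_{i=1}^{N} p_i \log_2 p_i, \qquad \sum_{i=1}^{N} p_i = 1, \qquad 0 \le S \le \log_2 N
```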

Since Shannon proposed the definition of entropy in the context of Shannon information theory (SIT), there has been considerable discussion on the relation between this idea and the definition of entropy in thermodynamics. This aspect is beyond the scope of this work; however, clarifying discussions can be found in References [69, 70].

The algorithmic and information-theoretic perspectives are approximately equivalent for sources with a large number of events; that is, the mean value of K(x), with x drawn from source X, is approximately equal to S calculated over the same source [71].

Given this approximate equivalence between K(x) and S, only S is used in this study to support the several proposed definitions of complexity.

The disorder measure (∆), expressed by Equation (2), was the first general measure of complexity proposed and is analogous to the way complexity is expressed when computational tasks are evaluated [62, 73].
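Equation (2) is not reproduced in this text. Assuming the normalization commonly used in this literature, disorder is the entropy divided by its maximum value for N states, and order (written Ω here, an assumed symbol) is its complement. The ratio does not depend on the logarithm base. Disorder is 0 when a single state is certain and 1 when all N states are equally likely. The worked case below uses four states, two of them equally likely.

```latex
\Delta = \frac{S}{S_{\max}}, \qquad S_{\max} = \log_2 N, \qquad \Omega = 1 - \Delta

N = 4,\; p = \left(\tfrac{1}{2}, \tfrac{1}{2}, 0, 0\right):\quad
S = -2 \cdot \tfrac{1}{2} \log_2 \tfrac{1}{2} = 1\ \text{bit},\quad
S_{\max} = \log_2 4 = 2\ \text{bits},\quad
\Delta = 0.5,\quad \Omega = 0.5
```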

However, when this method of defining complexity is applied to natural and self-organized systems, for instance, in biological or ecological contexts, an important criticism emerges because, in such cases, the real complex behavior is a combination of disorder and emergent order [62, 73].

Equations (3) and (5) vanish at both extremes of the order-disorder range and reach a maximum in between, where order and disorder are balanced. Completely ordered and completely disordered systems are therefore zero-complexity situations, which is compatible with intuitive reasoning regarding nature [62, 65, 73].

Furthermore, the expressions of CSDL (Shiner-Davison-Landsberg) and CLMC (López-Ruiz-Mancini-Calbet) can be generalized to continuous systems [70] and quantum information contexts [76, 77]. It should be noted that CSDL and CLMC do not measure deterministic complexity but structural complexity [37, 78, 79].
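Equations (3) and (5) are not reproduced in this text. The minimal sketch below assumes two widely used forms. The first is the Shiner-Davison-Landsberg measure in its simplest form, disorder times order. The second is an LMC-style measure: disorder times the disequilibrium D, the sum over states of (p_i - 1/N)². The authors' exact normalizations and exponents may differ, and the function names are illustrative.

```python
import math


def _disorder(probs):
    """Normalized Shannon entropy S / log2(N) (assumed form of the disorder measure)."""
    n = len(probs)
    s = -sum(p * math.log2(p) for p in probs if p > 0)
    return s / math.log2(n)


def c_sdl(probs):
    """SDL complexity in its simplest form: disorder * order = D(1 - D)."""
    d = _disorder(probs)
    return d * (1.0 - d)


def c_lmc(probs):
    """LMC-style complexity: normalized disorder times disequilibrium sum (p_i - 1/N)^2."""
    n = len(probs)
    disequilibrium = sum((p - 1.0 / n) ** 2 for p in probs)
    return _disorder(probs) * disequilibrium


if __name__ == "__main__":
    for dist in ([1, 0, 0, 0], [0.7, 0.1, 0.1, 0.1], [0.25] * 4):
        print(dist, round(c_sdl(dist), 4), round(c_lmc(dist), 4))
```

Both measures are zero for the perfectly ordered distribution [1, 0, 0, 0] and for the uniform distribution. They are positive for the intermediate case, which is the behavior described in the text.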

6. Complex Engineering Approach: Examples

Many advancements in engineering have been derived from the classic method of breaking engineering projects down into many pieces. However, as shown in the example of the “Elevado João Goulart,” this approach ignores the processes of emergence and self-organization that occur in complex systems and the wicked problems that engineering deals with. The construction of sustainable cities with structures resilient to climate change is another example of objectives that cannot be achieved with a “Lego” engineering approach. For such problems, the following framework of procedures is proposed:

- Identify processes that are inherently difficult to measure.
- Classify possible subjective impressions by attributing grades to them.
- Collect a larger and more diverse set of real situations, classified as above.
- Design a computational program to process the large datasets obtained.
- Design an algorithm to calculate the order, disorder, and complexity parameters.
- Discuss and draw conclusions regarding the meaning of the calculated parameters.
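The second to fifth steps of this framework can be sketched as a small pipeline. The sketch assumes that subjective impressions have already been graded on a hypothetical discrete scale 0..n_levels-1 and grouped by observation period, for example by day. The function names and the choice of disorder times order as the complexity parameter are illustrative assumptions, not the authors' implementation.

```python
import math
from collections import Counter


def grade_distribution(grades, n_levels):
    """Relative frequency of each grade 0..n_levels-1 in one period (processing step)."""
    counts = Counter(grades)
    total = len(grades)
    return [counts[level] / total for level in range(n_levels)]


def order_disorder_complexity(probs):
    """Disorder (normalized entropy), order = 1 - disorder, and their product."""
    s = -sum(p * math.log2(p) for p in probs if p > 0)
    disorder = s / math.log2(len(probs))
    order = 1.0 - disorder
    return disorder, order, disorder * order


def period_parameters(graded_periods, n_levels):
    """Compute (disorder, order, complexity) for each period of graded observations."""
    return [order_disorder_complexity(grade_distribution(p, n_levels)) for p in graded_periods]


if __name__ == "__main__":
    # Hypothetical grades for three periods on a 4-level scale.
    days = [[2, 2, 2, 2], [0, 1, 2, 3], [0, 1, 1, 1]]
    for d, o, c in period_parameters(days, n_levels=4):
        print(round(d, 3), round(o, 3), round(c, 3))
```

Tracking these three numbers period by period turns a set of graded impressions into objective time series, which can then be discussed in the final step.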

Unlike “tame problems,” which are tractable within conventional engineering paradigms, “wicked problems” have no definitive solution and demand a contextualized approach for each case, guided by the systematic framework of procedures presented above. Hence, the discipline of complexity engineering is responsible for addressing the nuances of each situation and converting the subjective into the objective.

This section presents two examples in which this methodology for the objective and quantitative analysis of complex situations is contextualized and applied, one involving human behavior and the other environmental science. Although the exposition is limited to these particular cases, the framework of procedures for solving a “wicked problem” is applied systematically, so there is no loss of generality.

6.1. Methodologies Applied in Case Studies

Several methods can be adopted to conduct research into the complex systems that complexity engineering deals with. Some of them are shown here based on two case studies of applying complexity engineering to real problems.

In the first case, data collected in a psychiatric ward over a given period using psychiatric scales were converted into quantitative measures, providing ways to model the dynamical behavior of the whole set of inpatients and supporting decisions and preventive actions.

For the second case, some of the measures described in Section 5 were applied to evaluate the complexity of landscapes in RapidEye satellite images. The idea is to extract information from remote sensing images so that complexity can be measured from the degree of spatial heterogeneity they show. The number of pixels associated with each digital state and its relative frequency were chosen as the input variables for Equations (1)–(5). Considering that the greatest spatial complexity of landscapes lies in an intermediate zone between order and disorder, the entropy-based measures CLMC and CSDL were used. The disorder measure (∆) was used to locate each evaluated area along the order-disorder gradient.
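The image-based procedure can be sketched as follows. The sketch assumes that each pixel of an image patch has already been reduced to one of `n_states` integer digital states. Real RapidEye band values would first need to be binned, and that step is omitted here. It also assumes the disorder, SDL-style, and LMC-style forms commonly used in the literature. The patches and the function names are hypothetical, and this is not the authors' processing code.

```python
import math
from collections import Counter


def state_frequencies(image, n_states):
    """Relative frequency of each digital state in a 2D grid of integer pixel values."""
    counts = Counter(value for row in image for value in row)
    total = sum(counts.values())
    return [counts[s] / total for s in range(n_states)]


def landscape_measures(image, n_states):
    """Disorder plus SDL-style and LMC-style complexities for one image patch."""
    p = state_frequencies(image, n_states)
    disorder = -sum(q * math.log2(q) for q in p if q > 0) / math.log2(n_states)
    disequilibrium = sum((q - 1.0 / n_states) ** 2 for q in p)
    return {
        "disorder": disorder,                      # position on the order-disorder gradient
        "c_sdl": disorder * (1.0 - disorder),
        "c_lmc": disorder * disequilibrium,
    }


if __name__ == "__main__":
    homogeneous = [[3, 3], [3, 3]]                 # e.g., a uniform land-cover patch
    mixed = [[0, 1, 1], [1, 2, 1], [1, 1, 3]]      # a more heterogeneous patch
    print(landscape_measures(homogeneous, 4))
    print(landscape_measures(mixed, 4))
```

Applying the same function to a grid of moving windows would yield maps of these measures, so that different areas can be compared along the order-disorder gradient.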

6.2. Behavior in a Psychiatry Ward

As the first example to illustrate the reasoning behind complexity engineering, a problem related to a former psychiatric ward at the end of the 1990s in the Clinics Hospital of São Paulo University is extended, based on Reference [56]. It shows the way subjective inpatient states can be converted into objective, observable variables processed by a back-propagation neural network [39], allowing possible actions to improve the quality of human life.

This can be considered pioneering work. Although it is not a traditional engineering problem, it follows the new way of doing science, which does not respect rigid boundaries between fields of knowledge. Today, fruitful studies are being developed in the same context, with productive dialogue between areas [80].

Such wards were later discontinued under Brazilian mental health policies, and the data are used here only to show how an apparently subjective issue can be analyzed by treating it as objective.

The first step, identifying the processes to be measured, was taken in Reference [57], published in 1999. That study described a psychiatric ward, surveyed the behavior of 68 inpatients over 62 days, and assigned each individual a daily score for sociability and restlessness.

Data were collected from acute patients consecutively admitted to a female psychiatric unit with a capacity of up to 30 inpatients. The mean length of stay was 42.7 days, and the shortest stay was 2 days.

The main criteria for admission to the inpatient unit were aggressiveness, suicide attempts, suicidal ideation, and severe disruptive behavior requiring medical support. During their stay, the patients participated in group activities such as occupational therapy, art therapy, relaxation sessions, and sports in a small garden area outside the building, but with restricted access.

The diagnostic profile of the sample was based on Reference [81] and is shown in Table 1.

Table 1: Diagnostic profile of the sample (n = 68).

| Number | Percentage (%) | Diagnosis |
|---|---|---|
| 18 | 26.5 | Affective bipolar disorder |
| 12 | 17.6 | Depressive disorder |
| 10 | 14.7 | Schizophrenia |
| 8 | 11.8 | Alcohol and drug dependence |
| 4 | 5.9 | Organic mental disorder |
| 2 | 2.9 | Personality disorders |
| 14 | 20.6 | Others |

The scales used to convert the subjective variables, that is, the psychomotor activity (X) and social interaction (Y), are based on Reference [82] and are shown in Tables 2 and 3.

Table 2: Psychomotor activity (X) scale.

| Grade | Description of the behavior |
|---|---|
| 1 | Calm, adequate |
| 2 | Mild restlessness, noticed only when asked |
| 3 | Clearly uneasy, frequently walking around the ward |
| 4 | Severe agitation, disturbing other patients |
| 5 | Extreme excitation, needing sedation and/or physical restriction |

Table 3: Social interaction (Y) scale.

| Grade | Description of the behavior |
|---|---|
| 1 | Active social contact, interacting with the other patients and staff |
| 2 | Mild tendency to socially withdraw, noticed only when asked |
| 3 | Clearly isolated, keeping some social contact with a few people |
| 4 | Severe social inhibition, keeping contact only when stimulated |
| 5 | Absence of verbal and nonverbal communication, catatonia |

Besides matrix A, Reference [50] also provided a second matrix, ΔA, whose entry Δa_{i,j} stores the total number of absolute grade changes with i − 1 = |ΔY| = |Y_1 − Y_2| and j − 1 = |ΔX| = |X_1 − X_2|.

From these data, a back-propagation ANN [39] can be used to forecast and classify a pattern of dangerous days in a hypothetical scenario, described below.

Understanding the ward state in this way is important for preparing the ward staff's action plan. In this example, the grades of interest are those with X = 5, whose occurrence requires active intervention by the ward staff, such as drug administration or physical restraint of the patient.

In Equations (6) and (7), matrix Q represents the relative frequency of each state, and ΔQ represents the relative frequency of each grade change.
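Matrices A, ΔA, Q, and ΔQ can be built directly from the daily scores. The sketch below is hypothetical: it assumes that each patient's record is a chronological list of daily (Y, X) grades, that the rows of A index Y and its columns index X (mirroring the indices of ΔA), and that the relative frequencies of Equations (6) and (7) are obtained by dividing each matrix by the sum of its entries. Indices are 0-based, so entry [i][j] in code corresponds to a_{i+1,j+1}.

```python
def grade_matrices(records):
    """Build A, dA, Q and dQ from daily grades.

    records: dict mapping a patient id to a chronological list of (Y, X) grades in 1..5.
    Returns four 5x5 nested lists. A[y-1][x-1] counts patient-days in state (Y, X);
    dA[|dY|][|dX|] counts changes between consecutive days of the same patient.
    """
    A = [[0] * 5 for _ in range(5)]
    dA = [[0] * 5 for _ in range(5)]
    for days in records.values():
        for y, x in days:
            A[y - 1][x - 1] += 1                      # occurrence of state (Y, X)
        for (y1, x1), (y2, x2) in zip(days, days[1:]):
            dA[abs(y1 - y2)][abs(x1 - x2)] += 1       # absolute grade change

    def relative(m):
        """Divide every entry by the matrix total (relative frequency)."""
        total = sum(sum(row) for row in m)
        return [[v / total if total else 0.0 for v in row] for row in m]

    return A, dA, relative(A), relative(dA)


# Hypothetical two-patient record, for illustration only (not ward data)
records = {"p1": [(1, 1), (1, 2), (2, 5)], "p2": [(3, 3), (3, 3)]}
A, dA, Q, dQ = grade_matrices(records)
```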

Let E_k, k = 1, …, 25, denote the possible states of a patient, ordered according to the columns of grades of matrix A. A transition from state E_k1 to state E_k2 then occurs with a probability equal to the joint probability of two independent events: choosing state E_k2 and observing the grade differences between the two states.

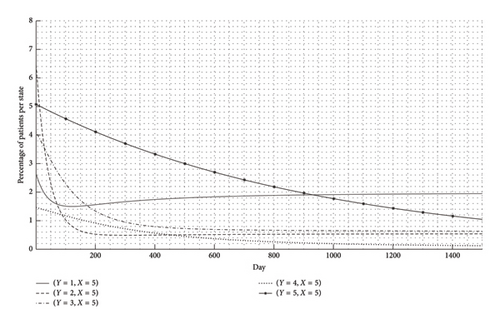

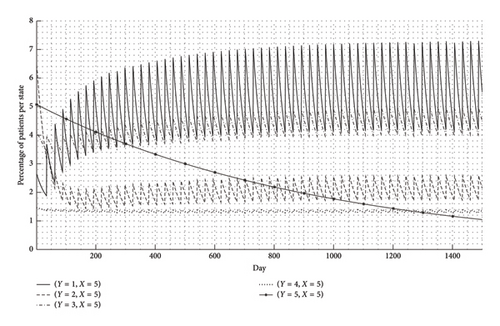

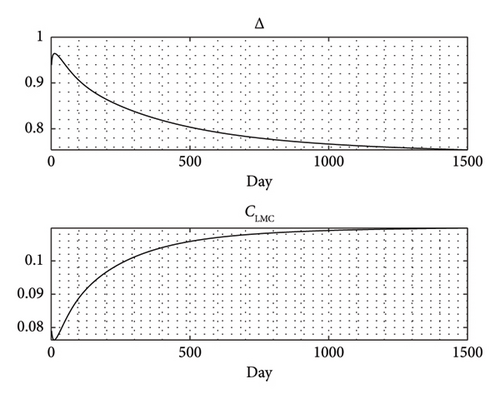

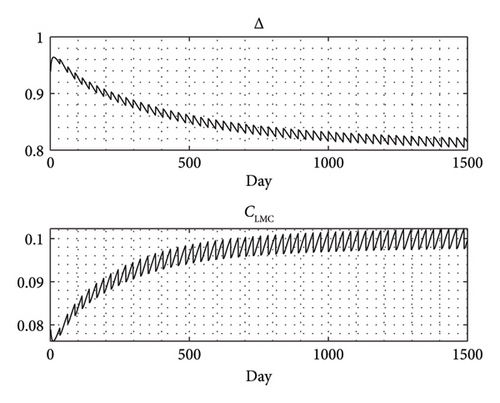
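The transition rule can be sketched as follows. Because the transition equations appear only as images here, the sketch rests on assumptions: the states E_k are indexed column by column over matrix A (k = 5(X − 1) + Y, written 0-based in code), the unnormalized weight of E_k1 → E_k2 is Q(E_k2)·ΔQ(|ΔY|, |ΔX|), and each row is then normalized to sum to 1 so that the matrix is row-stochastic. The function names are hypothetical.

```python
def state_grades(k):
    """0-based state index k (0..24) -> (Y, X), assuming column-major order over A."""
    return k % 5 + 1, k // 5 + 1


def transition_matrix(Q, dQ):
    """25x25 row-stochastic matrix with P[k1][k2] proportional to
    Q(E_k2) * dQ(|Y1 - Y2|, |X1 - X2|): the joint probability of choosing the
    target state and observing that grade change, treated as independent events.
    Q and dQ are 5x5 nested lists (rows Y or |dY|, columns X or |dX|)."""
    P = []
    for k1 in range(25):
        y1, x1 = state_grades(k1)
        row = []
        for k2 in range(25):
            y2, x2 = state_grades(k2)
            row.append(Q[y2 - 1][x2 - 1] * dQ[abs(y1 - y2)][abs(x1 - x2)])
        total = sum(row)
        if total > 0:
            P.append([w / total for w in row])       # row normalisation (assumed)
        else:
            # no observed transitions from this state: keep the patient in place
            P.append([1.0 if k2 == k1 else 0.0 for k2 in range(25)])
    return P
```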

To illustrate these hypothetical state transition dynamics, a simulation using the state space presented in Equation (12) was conducted for a random initial state S(0). The system evolution was simulated for 1500 days, and the results for the grades of interest with X = 5 are shown in Figure 6.
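A minimal sketch of such a simulation, assuming that the state-space model is the linear Markov update S(t + 1) = S(t)P, where S(t) is the row vector of patient shares per state and P is a row-stochastic transition matrix. The three-state matrix in the last lines is a toy example, not ward data; with it, the shares settle at a fixed distribution, which is the kind of asymptotic, non-periodic behavior that leaves nothing to forecast. The function name `simulate` is hypothetical.

```python
import random


def simulate(P, days=1500, seed=0):
    """Iterate S(t+1) = S(t) P from a random normalised S(0).

    P is a row-stochastic matrix given as nested lists; returns [S(0), ..., S(days)].
    """
    rng = random.Random(seed)
    n = len(P)
    s = [rng.random() for _ in range(n)]
    total = sum(s)
    s = [v / total for v in s]                       # shares sum to 1
    history = [s]
    for _ in range(days):
        s = [sum(s[i] * P[i][j] for i in range(n)) for j in range(n)]
        history.append(s)
    return history


# Toy 3-state matrix (not ward data); its stationary distribution is (0.4, 0.4, 0.2)
toy = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.8]]
history = simulate(toy)
```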

As expected, in this particular case the percentage of patients in each state tends asymptotically to the relative occurrence values; the dynamics are therefore self-organized and stable [56]. From a certain time onward, all days receive the same classification, regardless of the classification criterion. Consequently, there is no periodic dynamic pattern for an AI algorithm to forecast, which defeats the purpose of this example. To make the scenario non-trivial, the following premises were adopted:

- Every day on which the state (Y, X) = (1, 1) holds the largest share of patients, that is, when it is the grade with the highest percentage of patients, one-third of those patients are released.
- Subsequently, the same number of patients is admitted, all in states with X = 5.
- The admissions are distributed among the states according to each state's probability of occurrence, represented in matrix Q.
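These premises can be expressed as one daily update of the share vector. In this hypothetical sketch, the shares are indexed column by column over matrix A (an assumption: index 0 is (Y, X) = (1, 1) and indices 20 to 24 are the states with X = 5), "one-third of those patients" becomes one-third of the share in (1, 1), and the admitted share is split among the X = 5 states in proportion to their entries in Q, renormalized over that column. Applying this rule after each Markov step of the simulation is also an assumption.

```python
def release_and_admit(s, Q, fraction=1 / 3):
    """Apply the release/admission premises to a 25-state share vector s.

    Assumes column-major indexing (index 0 is (Y, X) = (1, 1), indices 20..24 have
    X = 5) and Q given as a 5x5 relative-frequency matrix with rows Y, columns X.
    """
    s = list(s)
    most_populated = max(range(25), key=lambda k: s[k])   # first index on ties
    if most_populated != 0:
        return s                                          # no release today
    released = fraction * s[0]
    s[0] -= released                                      # one-third of (1, 1) leaves
    weights = [Q[y][4] for y in range(5)]                 # column X = 5 of Q
    total = sum(weights)
    for y in range(5):
        share = weights[y] / total if total > 0 else 1 / 5
        s[20 + y] += released * share                     # admissions, all with X = 5
    return s
```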

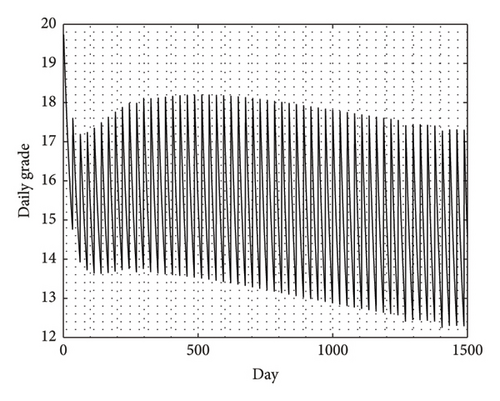

Under these premises, Figure 7 shows the temporal evolution of each daily grade, and, within the same simulation framework, the results for X = 5 are presented in the same format as Figure 6. Cyclic tendencies are clearly observed for most of the grades, which makes predictive modeling with an ANN possible.

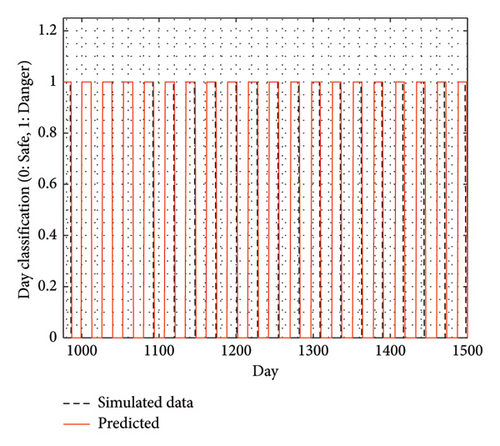

To define a dangerous day objectively, each day was assigned a general grade, defined as the sum of the percentages of states with X = 5, considering the examples presented in Figures 7 and 8.

States with X = 5 were chosen to define dangerous days because, as previously stated, their occurrence dictates the active actions of the ward staff.

Defining a dangerous day as one on which the general grade (the total percentage of patients with X = 5) exceeds 15%, a back-propagation ANN adapted from the source code in [84] was used to predict and classify the danger pattern.
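The danger criterion reduces to a threshold on the general grade. A minimal sketch, assuming a 25-state share vector ordered column by column over matrix A, so that the X = 5 states occupy the last five positions; the function names are hypothetical.

```python
def general_grade(s):
    """Percentage of patients in states with X = 5 (indices 20..24, column-major)."""
    return 100 * sum(s[20:25])


def dangerous_days(history, threshold=15.0):
    """Label each day 1 (dangerous) if its general grade exceeds threshold %, else 0."""
    return [1 if general_grade(s) > threshold else 0 for s in history]
```

Days labeled in this way supply the targets that the classifier is trained to predict.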

The network had one hidden layer with eight neurons. For training, 65% of the generated time series was labeled and used over 20,000 epochs. The result in Figure 9 is a 450-day forecast with 4.57% false positives and 0% false negatives.
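The classifier can be sketched as a small back-propagation network with one hidden layer of eight neurons. This is a hypothetical pure-Python illustration, not the MATLAB code adapted from [84]: the input window (the previous five general grades), the sigmoid activations, the learning rate, the squared-error loss, and the definition of the false-positive and false-negative rates as fractions of forecast days are all assumptions. The `demo` function uses a synthetic sinusoidal general grade and far fewer than the 20,000 epochs reported, to keep the run short.

```python
import math
import random


def sigmoid(z):
    if z < -60:                       # avoid math.exp overflow; the value is ~0 anyway
        return 0.0
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """One hidden layer of sigmoid neurons and one sigmoid output, trained by
    back-propagation of the squared error with per-sample (online) updates."""

    def __init__(self, n_in, n_hidden=8, lr=0.5, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0
        self.lr = lr

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
             for ws, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train(self, X, T, epochs):
        for _ in range(epochs):
            for x, t in zip(X, T):
                h, y = self.forward(x)
                d_out = (y - t) * y * (1 - y)               # output-layer delta
                d_hid = [d_out * w * hj * (1 - hj) for w, hj in zip(self.w2, h)]
                for j, hj in enumerate(h):                  # output-layer update
                    self.w2[j] -= self.lr * d_out * hj
                self.b2 -= self.lr * d_out
                for j, dj in enumerate(d_hid):              # hidden-layer update
                    for i, xi in enumerate(x):
                        self.w1[j][i] -= self.lr * dj * xi
                    self.b1[j] -= self.lr * dj

    def predict(self, x):
        return 1 if self.forward(x)[1] >= 0.5 else 0


def make_windows(grades, labels, width=5):
    """Input: the previous `width` general grades (%, scaled to 0..1); target: the day's label."""
    X = [[g / 100 for g in grades[t - width:t]] for t in range(width, len(grades))]
    return X, labels[width:]


def error_rates(pred, true):
    """False-positive and false-negative rates as fractions of forecast days (assumed)."""
    n = len(true)
    fp = sum(1 for p, t in zip(pred, true) if p == 1 and t == 0) / n
    fn = sum(1 for p, t in zip(pred, true) if p == 0 and t == 1) / n
    return fp, fn


def demo(days=300, epochs=100):
    """Synthetic periodic general grade (not ward data); train on the first 65%."""
    grades = [15 + 10 * math.sin(2 * math.pi * t / 30) for t in range(days)]
    labels = [1 if g > 15 else 0 for g in grades]
    X, T = make_windows(grades, labels)
    cut = int(0.65 * len(X))
    net = TinyMLP(n_in=len(X[0]))
    net.train(X[:cut], T[:cut], epochs)
    return error_rates([net.predict(x) for x in X[cut:]], T[cut:])
```

`demo()` trains on the first 65% of the windows and returns the false-positive and false-negative rates on the remaining days; no particular values are implied for the ward data.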

False positives are preferred to false negatives in intervention systems because they help ensure staff preparedness for potential interventions, which is critical for optimizing the overall ward performance.

This example demonstrates the effectiveness of using a quantitative approach in conjunction with ML to address a subjective problem objectively.

Prior knowledge of the dynamic behavior or operational modes of the ward is not required. Instead, by utilizing the available data and relevant quantitative parameters, the approach provides strong support for decision-making by the ward staff.

The results of the complexity measurements applied to the daily evolution of the ward’s grade are shown in Figures 10 and 11, corresponding to the scenarios without and with release and readmission, respectively. These results indicate that the inclusion of release and readmission procedures introduces an oscillatory pattern in the LMC complexity measure.
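One simple way to check whether a daily complexity series oscillates is to compute C_LMC for each day's distribution of patients over states and then look for the lag with the highest autocorrelation. The sketch below is hypothetical: it assumes C_LMC = Δ·D with Δ = S/log2 N and D = Σ(p_i − 1/N)² over the N occupied states, and it uses a biased sample autocorrelation as a crude periodicity test rather than any procedure described in the source.

```python
import math


def lmc(p):
    """C_LMC = Delta * D for a probability vector p (assumed definitions, N = occupied states)."""
    q = [v for v in p if v > 0]
    n = len(q)
    if n <= 1:
        return 0.0
    delta = -sum(v * math.log2(v) for v in q) / math.log2(n)
    return delta * sum((v - 1 / n) ** 2 for v in q)


def autocorrelation(x, lag):
    """Biased sample autocorrelation of the series x at the given lag."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x)
    if var == 0:
        return 0.0
    return sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag)) / var


def dominant_period(x, max_lag):
    """Lag in 2..max_lag with the highest autocorrelation (a crude periodicity check)."""
    return max(range(2, max_lag + 1), key=lambda k: autocorrelation(x, k))
```

Applied to the series `[lmc(s) for s in history]`, a pronounced autocorrelation peak would indicate the kind of oscillation reported with release and readmission, whereas a series that settles at a constant value has no such peak.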

This periodic fluctuation in system complexity indicates a recurring variation in the amount of information in the system. This suggests that the ANN performs well because the periodic variation in information allows it to be trained on relevant information.

Conversely, without the release and admission procedure, the training data show no periodic variation, so the ANN lacks the relevant information needed for accurate predictions beyond trivial ones.

The code for predicting behavior in the psychiatric ward was implemented entirely in MATLAB R2019b and executed on a desktop computer with an AMD Ryzen 5 2600X 3.80 GHz CPU, an Nvidia GTX 1660 dedicated GPU, and 16 GB of RAM. ANN training and prediction took 5.48 s and reached a peak memory usage of 4916.00 kB.

6.3. Landscape Complexity

For this study, the chosen theoretical assumption is that landscape patterns result from internal dynamical processes [85]. Consequently, evaluating the complexities of a landscape and its components can indicate integrity and resilience [86].

The automation of information calculations from remote sensing images began with the CompPlexus program [87], which depended on the ENVI software to obtain the data associated with the chosen image regions.

Subsequently, the QGIS toolboxes CompPlex HeROI, CompPlex Janus, and CompPlex Chronos were developed to evaluate regions of interest (ROIs) and obtain their complex signatures from a single image or a time series of images [8]. The version used here was written in Python, requires 416 MB of memory, and can process 100 images of 1024 × 1024 pixels for the four types of measures in 600 s with a 5 × 5 window.
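The 5 × 5 window suggests a moving-window evaluation. The following pure-Python sketch is hypothetical (its names are illustrative, and it is not the pyCompPlex code, whose interface is not described here): it computes C_SDL for every window position that fits inside a single-band image, assuming Δ = S/log2 N with N the number of distinct values in the window. It makes no attempt at the performance reported above and serves only to show the computation.

```python
import math
from collections import Counter


def window_sdl(values):
    """C_SDL = Delta (1 - Delta) of one window; Delta = S / log2 N with N the number
    of distinct values present (assumed definitions). Constant windows give 0."""
    counts = Counter(values)
    n = len(counts)
    if n <= 1:
        return 0.0
    total = len(values)
    s = -sum(c / total * math.log2(c / total) for c in counts.values())
    delta = min(s / math.log2(n), 1.0)      # guard against rounding above 1
    return delta * (1 - delta)


def sdl_map(image, size=5):
    """C_SDL of every size x size window fully inside `image` (a list of equal-length
    rows of integer digital values); no padding, so the map is smaller than the image."""
    rows, cols = len(image), len(image[0])
    result = []
    for r in range(rows - size + 1):
        row = []
        for c in range(cols - size + 1):
            window = [image[r + i][c + j] for i in range(size) for j in range(size)]
            row.append(window_sdl(window))
        result.append(row)
    return result
```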

Using these programs and toolboxes (available at https://github.com/lascaufscar/pyCompPlex) on a Lenovo IdeaPad 1 15IAU7 notebook running Windows 10, the important environmental problem of preserving river basins was addressed for the Ivinhema River, located in the state of Mato Grosso do Sul in the central region of Brazil.

The aim is to delimit the remaining native vegetation areas that protect the river basin by measuring their complexity patterns, using image data available from the Brazilian Ministry of the Environment (https://geocatalogo.mma.gov.br).

CompPlex HeROI was used to evaluate the measures defined by Equations (1)–(5) for regions selected from the RapidEye satellite images. The results are shown in Table 4.

Table 4: Complexity measures of the regions of interest for each RapidEye band and band composition.

| Band | Region of interest | S | S_max | Δ | C_SDL | C_LMC |
|---|---|---|---|---|---|---|
| 1 | Azulão#01 | 6.792464 | 7.179909 | 0.946038 | 0.051050 | 0.003665 |
|  | Azulão#02 | 6.799217 | 7.159871 | 0.949628 | 0.047834 | 0.003303 |
|  | Azulão#03 | 6.775995 | 7.179909 | 0.943744 | 0.053091 | 0.003647 |
|  | MS157#01 | 6.957021 | 7.330917 | 0.948997 | 0.048401 | 0.003051 |
|  | MS157#02 | 7.031646 | 7.459432 | 0.942652 | 0.054059 | 0.003340 |
|  | MS157#03 | 7.075787 | 7.426265 | 0.952806 | 0.044967 | 0.002775 |
|  | Paradouro#01 | 6.819339 | 7.199672 | 0.947173 | 0.050036 | 0.003425 |
|  | Paradouro#02 | 6.933362 | 7.348728 | 0.943478 | 0.053327 | 0.003532 |
|  | Paradouro#03 | 6.891678 | 7.294621 | 0.944762 | 0.052187 | 0.003417 |
|  | São Marcos#01 | 6.958585 | 7.357552 | 0.945775 | 0.051285 | 0.003278 |
|  | São Marcos#02 | 7.382743 | 7.754888 | 0.952012 | 0.045686 | 0.002463 |
|  | São Marcos#03 | 7.260766 | 7.651052 | 0.948989 | 0.048409 | 0.002761 |
| 2 | Azulão#01 | 7.457993 | 7.794416 | 0.956838 | 0.041299 | 0.002172 |
|  | Azulão#02 | 7.471047 | 7.768184 | 0.961749 | 0.036787 | 0.001903 |
|  | Azulão#03 | 7.436417 | 7.781360 | 0.955671 | 0.042364 | 0.002168 |
|  | MS157#01 | 7.422917 | 7.741467 | 0.958852 | 0.039455 | 0.002037 |
|  | MS157#02 | 7.352594 | 7.665336 | 0.959201 | 0.039135 | 0.002098 |
|  | MS157#03 | 7.399540 | 7.693487 | 0.961793 | 0.036748 | 0.001923 |
|  | Paradouro#01 | 7.350534 | 7.679480 | 0.957166 | 0.041000 | 0.002226 |
|  | Paradouro#02 | 7.444855 | 7.787903 | 0.955951 | 0.042108 | 0.002197 |
|  | Paradouro#03 | 7.565056 | 7.888743 | 0.958968 | 0.039348 | 0.001935 |
|  | São Marcos#01 | 7.446766 | 7.768184 | 0.958624 | 0.039664 | 0.002066 |
|  | São Marcos#02 | 7.628992 | 7.936638 | 0.961237 | 0.037260 | 0.001810 |
|  | São Marcos#03 | 7.452275 | 7.787903 | 0.956904 | 0.041239 | 0.002140 |
| 3 | Azulão#01 | 6.905711 | 7.321928 | 0.943155 | 0.053614 | 0.003536 |
|  | Azulão#02 | 6.735231 | 7.149747 | 0.942024 | 0.054615 | 0.003950 |
|  | Azulão#03 | 6.648753 | 7.055282 | 0.942379 | 0.054300 | 0.004091 |
|  | MS157#01 | 6.479446 | 6.930737 | 0.934886 | 0.060875 | 0.004809 |
|  | MS157#02 | 6.415515 | 6.906891 | 0.928857 | 0.066082 | 0.005213 |
|  | MS157#03 | 6.586924 | 7.066089 | 0.932188 | 0.063214 | 0.004573 |
|  | Paradouro#01 | 6.546897 | 7.011227 | 0.933773 | 0.061841 | 0.004860 |
|  | Paradouro#02 | 6.731903 | 7.159871 | 0.940227 | 0.056200 | 0.004004 |
|  | Paradouro#03 | 6.778799 | 7.199672 | 0.941543 | 0.055040 | 0.003815 |
|  | São Marcos#01 | 6.867165 | 7.247928 | 0.947466 | 0.049774 | 0.003441 |
|  | São Marcos#02 | 6.887667 | 7.247928 | 0.950295 | 0.047235 | 0.003290 |
|  | São Marcos#03 | 6.786834 | 7.139551 | 0.950597 | 0.046963 | 0.003348 |
| 4 | Azulão#01 | 8.284628 | 8.531381 | 0.971077 | 0.028086 | 0.001019 |
|  | Azulão#02 | 8.234723 | 8.467606 | 0.972497 | 0.026746 | 0.000980 |
|  | Azulão#03 | 8.251655 | 8.495855 | 0.971257 | 0.027917 | 0.001017 |
|  | MS157#01 | 7.878033 | 8.149747 | 0.966660 | 0.032229 | 0.001377 |
|  | MS157#02 | 7.747349 | 8.055282 | 0.961772 | 0.036766 | 0.001667 |
|  | MS157#03 | 7.803447 | 8.103288 | 0.962998 | 0.035633 | 0.001640 |
|  | Paradouro#01 | 8.269737 | 8.491853 | 0.973844 | 0.025472 | 0.000942 |
|  | Paradouro#02 | 8.306431 | 8.543032 | 0.972305 | 0.026928 | 0.000975 |
|  | Paradouro#03 | 8.470220 | 8.724514 | 0.970853 | 0.028297 | 0.000965 |
|  | São Marcos#01 | 8.203993 | 8.455327 | 0.970275 | 0.028841 | 0.001053 |
|  | São Marcos#02 | 8.384526 | 8.614710 | 0.973280 | 0.026006 | 0.000896 |
|  | São Marcos#03 | 8.205244 | 8.479780 | 0.967625 | 0.031327 | 0.001175 |
| 5 | Azulão#01 | 9.159228 | 9.273796 | 0.987646 | 0.012201 | 0.000317 |
|  | Azulão#02 | 9.158603 | 9.269127 | 0.988076 | 0.011782 | 0.000302 |
|  | Azulão#03 | 9.137857 | 9.252665 | 0.987592 | 0.012254 | 0.000316 |
|  | MS157#01 | 9.035174 | 9.172428 | 0.985036 | 0.014740 | 0.000399 |
|  | MS157#02 | 8.969456 | 9.124121 | 0.983049 | 0.016664 | 0.000465 |
|  | MS157#03 | 8.834457 | 9.000000 | 0.981606 | 0.018055 | 0.000517 |
|  | Paradouro#01 | 9.153739 | 9.271463 | 0.987302 | 0.012536 | 0.000324 |
|  | Paradouro#02 | 9.170683 | 9.278449 | 0.988385 | 0.011480 | 0.000290 |
|  | Paradouro#03 | 9.293519 | 9.375039 | 0.991305 | 0.008620 | 0.000210 |
|  | São Marcos#01 | 9.112922 | 9.233620 | 0.986928 | 0.012901 | 0.000332 |
|  | São Marcos#02 | 9.203221 | 9.310613 | 0.988466 | 0.011401 | 0.000293 |
|  | São Marcos#03 | 9.147723 | 9.259743 | 0.987902 | 0.011951 | 0.000304 |
| B5 − B4 | Azulão#01 | 9.036264 | 9.172428 | 0.985155 | 0.014624 | 0.000392 |
|  | Azulão#02 | 9.045538 | 9.184875 | 0.984830 | 0.014940 | 0.000407 |
|  | Azulão#03 | 9.077883 | 9.204571 | 0.986236 | 0.013574 | 0.000357 |
|  | MS157#01 | 8.884691 | 9.044394 | 0.982342 | 0.017346 | 0.000482 |
|  | MS157#02 | 8.896821 | 9.057992 | 0.982207 | 0.017477 | 0.000500 |
|  | MS157#03 | 8.758025 | 8.948367 | 0.978729 | 0.020819 | 0.000627 |
|  | Paradouro#01 | 9.022756 | 9.167418 | 0.984220 | 0.015531 | 0.000428 |
|  | Paradouro#02 | 9.105607 | 9.228819 | 0.986649 | 0.013173 | 0.000343 |
|  | Paradouro#03 | 9.160561 | 9.278449 | 0.987294 | 0.012544 | 0.000341 |
|  | São Marcos#01 | 9.037050 | 9.184875 | 0.983906 | 0.015835 | 0.000442 |
|  | São Marcos#02 | 9.150485 | 9.266787 | 0.987450 | 0.012393 | 0.000325 |
|  | São Marcos#03 | 9.072654 | 9.199672 | 0.986193 | 0.013616 | 0.000360 |
| B5 + B4 | Azulão#01 | 9.248514 | 9.342075 | 0.989985 | 0.009915 | 0.000246 |
|  | Azulão#02 | 9.195568 | 9.301496 | 0.988612 | 0.011259 | 0.000286 |
|  | Azulão#03 | 9.241198 | 9.33539 | 0.98991 | 0.009988 | 0.0002489 |
|  | MS157#01 | 9.023044 | 9.159871 | 0.985062 | 0.014715 | 0.000397 |
|  | MS157#02 | 9.104019 | 9.224002 | 0.986992 | 0.012839 | 0.000331 |
|  | MS157#03 | 9.018152 | 9.157347 | 0.9848 | 0.014969 | 0.000409 |
|  | Paradouro#01 | 9.208998 | 9.312883 | 0.988845 | 0.011031 | 0.000282 |
|  | Paradouro#02 | 9.27725 | 9.368506 | 0.990259 | 0.009646 | 0.000245 |
|  | Paradouro#03 | 9.326057 | 9.398744 | 0.992266 | 0.007674 | 0.000183 |
|  | São Marcos#01 | 9.211837 | 9.308339 | 0.989633 | 0.01026 | 0.000252 |
|  | São Marcos#02 | 9.282064 | 9.366322 | 0.991004 | 0.008915 | 0.00022 |
|  | São Marcos#03 | 9.222876 | 9.326429 | 0.988897 | 0.01098 | 0.000284 |
| NDRE | Azulão#01 | 9.608645 | 9.611025 | 0.999752 | 0.000248 | 0.000006 |
|  | Azulão#02 | 9.601955 | 9.607330 | 0.999440 | 0.000559 | 0.000016 |
|  | Azulão#03 | 9.612159 | 9.612868 | 0.999926 | 0.000074 | 0.000002 |
|  | MS157#01 | 9.600992 | 9.605480 | 0.999533 | 0.000467 | 0.000011 |
|  | MS157#02 | 9.609608 | 9.611025 | 0.999853 | 0.000147 | 0.000003 |
|  | MS157#03 | 9.599404 | 9.603626 | 0.999560 | 0.000439 | 0.000010 |
|  | Paradouro#01 | 9.599404 | 9.605480 | 0.999367 | 0.000632 | 0.000018 |
|  | Paradouro#02 | 9.607057 | 9.609179 | 0.999779 | 0.000221 | 0.000005 |
|  | Paradouro#03 | 9.609608 | 9.611025 | 0.999853 | 0.000147 | 0.000003 |
|  | São Marcos#01 | 9.604506 | 9.607330 | 0.999706 | 0.000294 | 0.000006 |
|  | São Marcos#02 | 9.609608 | 9.611025 | 0.999853 | 0.000147 | 0.000003 |
|  | São Marcos#03 | 9.609608 | 9.611025 | 0.999853 | 0.000147 | 0.000003 |

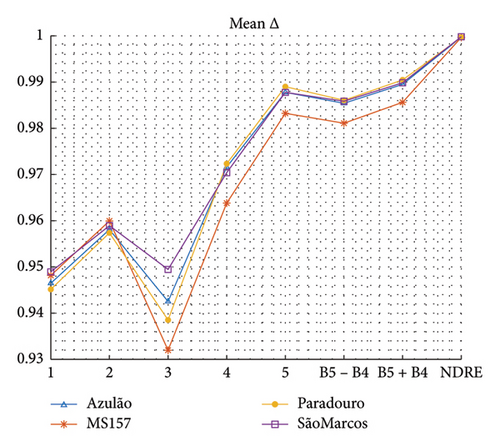

It can be observed that the woodland region “MS157” presents, for most bands and compositions, the lowest values of the disorder measures (S, S_max, and Δ) and the highest values of the complexity measures (C_LMC and C_SDL), which combine order and disorder.
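The comparison can be reproduced from Table 4. The sketch below copies the Δ column for Bands 1 to 3 and returns the region of interest with the lowest disorder in each band; the names are hypothetical. Because C_SDL = Δ(1 − Δ) decreases as Δ grows beyond 0.5, and every Δ in Table 4 exceeds 0.9, the lowest Δ and the highest C_SDL single out the same region of interest. In Bands 1 and 3 that region is MS157#02, whereas in Band 2 it is Azulão#03, in line with MS157 standing out in most, but not all, bands.

```python
ROIS = ["Azulão#01", "Azulão#02", "Azulão#03", "MS157#01", "MS157#02", "MS157#03",
        "Paradouro#01", "Paradouro#02", "Paradouro#03",
        "São Marcos#01", "São Marcos#02", "São Marcos#03"]

# Delta values copied from Table 4, in the same order as ROIS
DELTA = {
    "Band 1": [0.946038, 0.949628, 0.943744, 0.948997, 0.942652, 0.952806,
               0.947173, 0.943478, 0.944762, 0.945775, 0.952012, 0.948989],
    "Band 2": [0.956838, 0.961749, 0.955671, 0.958852, 0.959201, 0.961793,
               0.957166, 0.955951, 0.958968, 0.958624, 0.961237, 0.956904],
    "Band 3": [0.943155, 0.942024, 0.942379, 0.934886, 0.928857, 0.932188,
               0.933773, 0.940227, 0.941543, 0.947466, 0.950295, 0.950597],
}


def lowest_disorder(deltas, rois=ROIS):
    """ROI with the smallest Delta, that Delta, and C_SDL = Delta * (1 - Delta)."""
    best = min(range(len(rois)), key=lambda i: deltas[i])
    d = deltas[best]
    return rois[best], d, d * (1 - d)


for band, deltas in DELTA.items():
    print(band, lowest_disorder(deltas))
```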

This is an important qualitative result of the objective measures, and it suggests a way of preserving the entire system when human actions decrease diversity while increasing complexity.

Another interesting result, shown in Figures 12 and 13, provides evidence that “Band 3” is the most effective frequency band to characterize a forest landscape.

However, as Haken [33] points out, although this approach yields useful results and opens an interesting line of research, it does not considerably advance the explanation of biological processes, because fluxes of energy and matter are not considered in the analysis; only the overall macroscopic effects are studied.

Elucidating biological phenomena requires considering the chemical energy sources that feed the system and are processed at the microscopic level, producing macroscopic outcomes such as morphogenesis, movement coordination, and visual pattern recognition [33].

7. Discussions and Criticisms

7.1. About Engineering Progress

As shown throughout this text, complexity engineering represents a new, more systemic and integrative way of thinking about and doing engineering. Its applications range from new interpretations of classic engineering problems, as illustrated by the example of Elevado João Goulart, to problems in more recent areas of engineering, such as biomedical and environmental issues. The first case shows that an engineering project cannot disregard the context in which it is inserted and, therefore, cannot escape the nonlinearities and uncertainties involved in that context. The last two examples show that the same concept (information entropy) and the measures derived from it can be applied to very different problems that nonetheless belong to the same category and share the same interpretation: “wicked problems” evaluated through complexity engineering.

Several other theories and methodologies associated with complexity engineering can be applied in studies similar to those presented here, as well as to other real wicked problems involving complex systems. This will make it possible to evaluate the potential and limitations of each of them and their suitability for complexity engineering.

For this reason, it is difficult to give a definitive list of subjects when defining complexity engineering. The field can be understood as a combination of nonlinear dynamics [88], systems theory [29, 30], pattern formation [89], evolutionary computation [90], networks [91], collective behavior [92], and stochastic processes [83], each with many books and papers on applications and case studies.

This study serves as a manifesto for a forward-thinking approach to engineering, illustrated by several information-based applications. One of these examples integrates ML with complexity measures, combining Turing’s concept of AI with SIT. The diverse range of applications discussed, spanning behavioral and environmental challenges, highlights the pressing need for a multidisciplinary perspective in contemporary technological development.

Moreover, the study addresses several project-related dilemmas, often referred to as wicked problems, which introduce nonlinear and multiplicative dynamics into the system under investigation. Such complexities render traditional linear engineering methodologies inadequate. In response, this work argues that the future of technological innovation lies in the paradigm of complexity engineering, as defined here.

Key contributions of this study include the formalization of complexity engineering, the demonstration of the inherently multidisciplinary nature of modern challenges, and the proposal of systematic approaches to project design. The main goal is to inspire the development of an engineering methodology that transcends conventional problem-solving, prioritizing human advancement while safeguarding the planet and its biodiversity.

7.2. About Human Phenomenon

At present, the most heated discussion concerns the development of AI and ML algorithms and how they could change our lives, potentially replacing human jobs through automation and affecting scientific methodology.

It is reasonable to think that computational tools, like all scientific advances, have the potential to improve life. This is the central scientific discussion, and it requires breaking down barriers between traditional disciplines and creating truly collaborative research groups.

Concerning the example presented in the subsection “Behavior in a Psychiatry Ward,” the in silico/in vivo dichotomy involves four main factors [93]. The first is consciousness, essential to human beings and responsible for language, love, and humor [93].

The second is intentionality, related to intentions, creeds, desires, willingness, fears, love, anger, mourning, disgust, shame, pride, and irritation. The third is the subjectivity of mental states: there are facts that sadden us but do not sadden other people. Finally, there is mental causality: our thoughts and feelings change the way we act, producing effects in the physical world [93].

7.3. Hints About AI and ML

AI and ML have developed explosively. Today they pervade all areas of human knowledge and are changing daily life, accompanied by a profusion of popular publications and short courses.

However, it is important to acquire real knowledge of how these popular tools and connection procedures work, to avoid mistakes and misunderstandings about scientific results and the news spread about them [94].

Flach’s book [39] offers a comprehensive view of how to understand, compare, and implement ML algorithms, presenting several types of models: geometric, probabilistic, logical, grouping, and grading. Given the broad scope of ML applications, it also compares different strategies for treating data: classification, trees, rules, and distance-based algorithms. In addition, linear algebra is the most basic knowledge needed to understand and implement AI and ML strategies [95].

Regarding ML applications, many areas of knowledge besides engineering are covered, for instance, medical diagnosis [96], business [97], and urban planning [98].

7.4. Road Map

Talking about complexity implies long digressions and an extensive bibliography. In reality, it is mainly a road to be traveled with curiosity and without fear of corrections and, sometimes, strong criticism.

This work presents the results of a collaborative effort that tried to avoid preconceptions about how to build a new way of thinking in engineering (References [10–13, 16, 32, 88–92]) while exploring the world of complexity (References [9, 14, 19, 22, 36–38, 40, 46, 58, 61–65, 68, 74, 77–79]).

Our curiosity originates in the interpretation of biological phenomena (References [29, 30, 56–59, 66, 67, 69–71, 73, 80–82, 85–87, 93]), combined with Turing’s works (References [43–45, 47, 48, 52, 53, 55, 57]), and is helped by new developments in data science (References [34, 35, 39, 51, 60, 84, 94–99]).

Finally, it is important to cite Haken’s seminal work [100], which connects information measures to self-organizing phenomena.

7.5. Multiplying and Feedback Effects of Complexity Engineering

The historical review of advances in engineering and the theoretical-methodological discussions of complexity engineering and its applications presented here demonstrate the multiplying and feedback effects of complexity engineering. On the one hand, concepts and theories originating from engineering, such as those linked to information theory, can be applied in areas as diverse as health, ecology, economics, and microscopy, enabling new insights and advances in these areas, to which our group has contributed. On the other hand, the principles and methods that guide the study of complex systems in different areas of knowledge feed the development and consolidation of complexity engineering as a new way of studying and acting on complex systems of interest.

In this way, complexity engineering, by associating engineering with the complexity paradigm, reveals a perspective that seeks to find common theoretical-methodological foundations that can be used to understand the organization and dynamics of physical, biological, and social systems. It is worth highlighting again that Haken [100], in his proposal that synergetics be an approach to finding the unifying principles of complex systems, emphasizes that self-organization is responsible for the structure of the system, and information is a way to understand this process.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

José Roberto C. Piqueira was supported by the Brazilian Research Council (CNPq) (grant number 304707/2023–6) and São Paulo Research Support Foundation (grant number 2022/00770-0).

Sérgio Henrique Vannucchi Leme de Mattos was supported by the Brazilian Research Council (CNPq) (grant number 443175/2014–4).

Roberto Costa Ceccato was supported by the São Paulo Research Support Foundation (FAPESP) (grant number 2021/13997-0).

Acknowledgments

The authors thank Thales Trigo and Cristiano Mascaro for the photographs; Andrés Eugui for the references; Luiz Eduardo Vicente, Daniel Gomes dos Santos, and Wendriner Loebmann for the landscape images; Claudio Bielenki Júnior for CompPlex HeROI processing; and Antonio Carlos Bastos de Godói for critical reading.

Endnotes

1. This fact is brilliantly illustrated in the classic scene from Stanley Kubrick’s film “2001: A Space Odyssey” [1], based on the book of the same name by Arthur C. Clarke and set to the music of “Also Sprach Zarathustra” by Richard Strauss (in turn inspired by Friedrich Nietzsche’s book of the same title). The scene begins with an ancestral hominid discovering that an animal bone can be used as a weapon for hunting and ends with a transition in which the bone, thrown upward, is “replaced” by a spaceship, summarizing in a few minutes how the transformation of elements and processes of nature into tools is a striking feature of human history.

2. Another classic film shows the importance of our ancestors’ mastery of fire: “Quest for Fire,” by Annaud [4].

Open Research

Data Availability Statement

Data sharing is not applicable to this article, as no new data were created or analyzed in this study.