Fusion of Deep Features of Wavelet Transform for Wildfire Detection

Abstract

Forests provide vital resources, most notably oxygen production and air purification through carbon dioxide uptake. Wildfire is a major cause of deforestation, and large forest areas are lost every year because fires are not identified and predicted in time. Early wildfire detection is therefore crucial for alerting operational and firefighting teams before fires spread. This study analyzes images taken by unmanned aerial vehicles (UAVs) for wildfire detection. First, a two-dimensional discrete wavelet transform is applied to the images. Next, owing to its strong feature-learning ability, a convolutional neural network is used to extract deep features from the wavelet sub-bands. The features of all sub-bands are then merged into a final feature vector, and multidimensional scaling is applied to reduce its dimensionality and discard redundant features. Finally, suitable classifiers determine whether the images contain wildfire. On the FLAME dataset, the proposed method reaches an accuracy of 0.9684 and an F1 score of 0.9672, indicating that it detects wildfire effectively. The method can thus support prompt firefighting operations and help prevent extensive forest damage.

1. Introduction

1.1. Motivations

Forests play unique roles in human life and provide various vital resources. They purify the air by absorbing CO2 and releasing O2, which is why forests are called the “lungs of the planet”. They are also a habitat for most terrestrial animal species and help keep drinking water clean by filtering out many pollutants [1, 2]. Unfortunately, large forest areas have been burned and destroyed in recent years, and human activity is regarded as the foremost cause of wildfires. Greater awareness could therefore prevent much of this damage and forest loss. Furthermore, early wildfire detection is crucial for reducing the associated risks and casualties, and it allows firefighters to suppress fires in their early phases. The time between detecting a wildfire and alerting the relevant authorities is critical, and shortening it can minimize the risks and consequences. Thus, early detection is vital for bringing a fire under control quickly [3].

Several techniques have been recently proposed to detect wildfires early and allocate appropriate resources to extinguish the fire. These techniques are often based on aerial and ground-based technologies, such as watchtowers with multiple sensors and satellites. However, these technologies have some constraints that lessen overall wildfire detection performance. For example, watchtowers have a limited field of view and impose high construction costs. Likewise, although satellites deliver a pervasive field of view, they come with huge costs and suffer from flexibility issues and low spatial/temporal image resolution, all of which prevent detecting the fire spot at the right time [4, 5].

1.2. Related Works

Computer vision allows machines (e.g., unmanned aerial vehicles [UAVs]) to visually perceive their surroundings and respond according to their predefined mission. Computer vision techniques for wildfire detection fall into two classes. The first class comprises conventional machine learning (ML) methods that rely on handcrafted features, such as changes in color [6, 7]. Selecting these features is time-consuming and requires experts to choose features suitable for building efficient algorithms. These techniques perform poorly on complicated problems, such as detecting wildfire in images with a cluttered background in dense forests. Techniques in the second class use deep learning (DL) algorithms to extract relevant and robust features automatically. DL algorithms allow machines to perform difficult, multifaceted tasks such as time-series analysis [8, 9], vehicle [10] and face recognition [11], self-driving [12], and plant disease diagnosis [13].

Recently, UAVs have been widely used in forest-related applications such as forest exploration, search and rescue operations, forest resource surveys, and wildfire suppression. Furthermore, recent technological breakthroughs have enabled drones to process visual data automatically. DL-based fire and smoke detection in remote, hard-to-access areas and forests has attracted interest in recent years. Flames and smoke are both visual cues for early and precise wildfire detection. Some studies have explored fire detection using flames [14, 15], while others have used smoke as a signal for early fire detection [16, 17]. However, flames can be hidden in the early phases of a wildfire, particularly in forests [3]. To overcome this limitation, recent research has targeted simultaneous flame and smoke detection.

Image classification-based methods classify input images according to whether or not they contain fire patterns. In [18], handcrafted pixel-based features were extracted and classified by a support vector machine (SVM). Recently, several studies have used convolutional neural networks (CNNs) to classify drone-captured wildfire images. For example, the authors of [19] propose using AlexNet, a basic CNN architecture, to detect wildfire; five CNN architectures, namely AlexNet, GoogLeNet, VGG, and modified versions of these networks, were considered in [19] to classify UAV images into fire and non-fire events. The authors of [20] proposed a CNN-based method for early wildfire detection using a hexacopter with an optical camera, in which preprocessing techniques such as histogram equalization and nonlinear filters enhance data quality and reduce noise. Similarly, [16] proposes a method to classify wildfires and determine their precise location. Other CNN-based methods were proposed in [21, 22]. Transfer learning with the InceptionV3, DenseNet121, ResNet50V2, VGG19, and NASNetMobile CNNs was considered in [23] for forest wildfire detection, and Reduce-VGGnet was employed in [24] for wildfire image classification.

In contrast, object detection algorithms draw a bounding box around the extent of each object [25]; here, the objects are flames or smoke. Several object detection algorithms have achieved acceptable performance in recent years. In region-based algorithms, candidate regions (which may contain fire events) are first generated, for example by selective search, and then classified according to whether the desired object is present. The region-based CNN (R-CNN) family is a well-established and efficient group of region-based algorithms. Conversely, single-step detectors (STDs) skip the region-candidate generation step and process the input image in a single pass, offering faster detection while retaining high accuracy. YOLO and RetinaNet are among the most efficient STDs [26]. A YOLOv5-based method was proposed in [27] for domain-free fire detection. In [28], YOLOv5 and YOLOv8 were used to identify forest fires. A combination of physical and DL schemes was used in [29].

DL-based computer vision algorithms are not limited to image classification and object detection; they can also perform semantic segmentation, which is among the most effective DL approaches to wildfire detection. Semantic segmentation classifies each pixel in the image according to the object class it belongs to (e.g., flame, smoke, or forest). However, semantic segmentation algorithms are highly complex, require more computational resources, and their training images take longer to annotate. In recent years, several semantic segmentation techniques have been proposed to detect wildfires in images and videos captured by UAV platforms. Examples include DeepLab [30], U-Net [31], SegNet [32], and CTNet [33].

1.3. Contributions

Given the need for early wildfire detection to launch firefighting operations and avoid massive losses, this study proposes an effective method for detecting wildfires in UAV images of forests. The proposed method is based on time–frequency analysis and on extracting deep features from the resulting representation. First, the images are subjected to a one-level two-dimensional discrete wavelet transform (2D DWT). Wildfires exhibit distinct patterns at different scales; previous works did not consider these patterns, and the 2D DWT can extract them. The 2D DWT also highlights the image regions where changes occur. Next, the obtained sub-bands are passed through CNNs to extract deep features. Because CNNs use local receptive fields to capture spatial patterns, they can analyze the 2D DWT sub-bands locally and identify relevant features. The deep features of the different sub-bands are then merged and subjected to feature reduction. Finally, suitable classifiers make the final decision.

The main contributions of this paper are as follows:

- Proposing a new method for wildfire detection in forests based on deep time–frequency features of UAV images.
- Extracting time–frequency features with the 2D DWT and deep features with CNNs.
- Reducing the number of deep features with multidimensional scaling (MDS).
- Performing extensive simulations to evaluate the performance of the proposed method.

The rest of this paper is organized as follows. Section 2 describes the dataset and the preliminaries used in this paper. Section 3 explains the proposed method in detail. Section 4 presents the simulation results, and Section 5 concludes the paper.

2. Dataset and Preliminaries

2.1. Dataset

As mentioned, this paper presents a wildfire detection algorithm for forests because of their unique roles in human life. UAV-based wildfire detection in forests has attracted attention because of the challenges and limitations of traditional methods. The FLAME (Fire Luminosity Airborne-based Machine learning Evaluation) dataset [34] is an open-access dataset of wildfire imagery. Its videos were recorded by DJI Phantom 3 Professional and DJI Matrice 200 drones equipped with Zenmuse X4S, FLIR Vue Pro thermal, and DJI Phantom 3 cameras. The first and second videos are both raw videos (16 min long) recorded by a Zenmuse X4S camera at 29 frames per second (FPS); the second video shows the behavior of one pile from the start of burning. Both have a resolution of 1280 × 720. The third, fourth, and fifth videos are thermal videos in the WhiteHot, GreenHot, and Heatmap palettes; they are 89 s, 5 min, and 25 min long, respectively, and were all recorded by a FLIR camera at 30 FPS with a resolution of 640 × 512. The sixth video is a 17-min HD RGB video recorded by a DJI Phantom 3 camera at 30 FPS with a resolution of 3840 × 2160. For image classification, the seventh and eighth repositories contain 39,375 and 8617 frames, respectively, with a resolution of 254 × 254. For the fire segmentation problem, the ninth and tenth repositories contain more than 2000 high-resolution fire frames and their ground-truth masks. The primary purpose of this dataset is to classify images as “wildfire” or “non-wildfire” and to perform fire segmentation. It can also be used to detect wildfires in both RGB and thermal drone imagery. Table 1 summarizes the parts of the dataset used in this study, and Figures 1 and 2 show example images of “wildfire” and “non-wildfire” events.

| Class | Training set | Validation set | Testing set | Total |
|---|---|---|---|---|
| Wildfire images | 540 | 180 | 180 | 900 |
| Non-wildfire images | 600 | 200 | 200 | 1000 |
| Total | 1140 | 380 | 380 | 1900 |

2.2. Preliminaries

2.2.1. 2D DWT

The DWT performs a time–frequency analysis of a signal. It is a multi-resolution technique that analyzes different frequency bands at different resolutions. The 2D DWT provides multi-scale analysis by decomposing an image into frequency components (low-frequency approximations and high-frequency details) at various scales. Wildfires exhibit distinct patterns at different scales (e.g., large flames vs. smoke plumes), so analyzing these scales enhances the detection of fire-related features. The 2D DWT also localizes features spatially: when a wildfire occurs, it highlights the image regions where changes occur (localized energy), which helps pinpoint fire-affected areas accurately. Finally, 2D DWT coefficients represent different frequency bands and can serve as inputs to ML models such as CNNs.

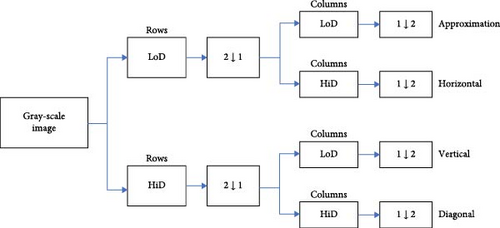

The wavelet representation of a discrete signal x[n] of N samples is obtained by passing it through a lowpass and a highpass filter and downsampling each output by a factor of two, so that each frequency band contains N/2 samples. This decomposes the original signal into two sub-bands, and the operation is reversible with a proper choice of filters [35]. The transform extends to multiple dimensions using separable filters; that is, depending on the dimensions of the input signal, the DWT can be implemented in one-dimensional (1D), two-dimensional (2D), and three-dimensional (3D) forms.
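To make the filter-and-downsample step concrete, the following is a minimal NumPy sketch of a single-level 1D DWT. It assumes the Haar wavelet, chosen only because its two-tap filters keep the example short (the paper itself uses db4), and the function names `dwt1d_haar` and `idwt1d_haar` are hypothetical. It shows that each band holds N/2 samples and that the signal is recovered exactly.

```python
import numpy as np

# Orthonormal Haar analysis filters (assumption: Haar instead of the paper's db4).
LO = np.array([1.0, 1.0]) / np.sqrt(2.0)   # lowpass -> approximation
HI = np.array([1.0, -1.0]) / np.sqrt(2.0)  # highpass -> detail


def dwt1d_haar(x):
    """Single-level Haar DWT of an even-length signal x[n].

    With two-tap filters, filtering followed by downsampling by two reduces to
    combining each non-overlapping pair (x[2k], x[2k+1]); the sign convention
    of the highpass output varies between texts.
    """
    x = np.asarray(x, dtype=float)
    if x.size % 2:
        raise ValueError("signal length N must be even")
    pairs = x.reshape(-1, 2)      # shape (N/2, 2)
    approx = pairs @ LO           # N/2 lowpass coefficients
    detail = pairs @ HI           # N/2 highpass coefficients
    return approx, detail


def idwt1d_haar(approx, detail):
    """Inverse of dwt1d_haar: upsample and apply the synthesis filters."""
    x = np.empty(2 * approx.size)
    x[0::2] = (approx + detail) / np.sqrt(2.0)
    x[1::2] = (approx - detail) / np.sqrt(2.0)
    return x


x = np.array([4.0, 6.0, 10.0, 12.0, 8.0, 8.0, 0.0, 2.0])
a, d = dwt1d_haar(x)                  # each band has N/2 = 4 samples
x_rec = idwt1d_haar(a, d)             # perfect reconstruction
```

Because the Haar filters are orthonormal, the transform also preserves signal energy, which is one reason the inverse is exact.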

The 2D DWT applies highpass and lowpass filters to the image pixels. The highpass filter extracts detail information from the image, while the lowpass filter produces an approximation of the input image at each level. The filter outputs are downsampled by a factor of two. Because the 2D DWT is separable, it can be obtained from two 1D DWTs: a 1D DWT is first performed on each row (horizontal filtering) and then on each column (vertical filtering).
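The row-then-column procedure can be sketched the same way. This NumPy example again assumes the Haar wavelet rather than db4, and its function names are hypothetical. It applies a 1D Haar step along the rows and then along the columns, yielding four sub-bands, each half the height and width of the input.

```python
import numpy as np

S = np.sqrt(2.0)


def haar_rows(img):
    """1D Haar DWT along each row (horizontal filtering + downsampling)."""
    low = (img[:, 0::2] + img[:, 1::2]) / S
    high = (img[:, 0::2] - img[:, 1::2]) / S
    return low, high


def dwt2_haar(img):
    """Single-level separable 2D Haar DWT of an image with even height/width.

    Rows are filtered first, then columns. Returns LL, LH, HL, HH, where the
    first letter is the row filter and the second the column filter; which
    detail band is called 'horizontal' or 'vertical' depends on convention.
    """
    img = np.asarray(img, dtype=float)
    if img.shape[0] % 2 or img.shape[1] % 2:
        raise ValueError("image height and width must be even")
    low, high = haar_rows(img)                       # each (H, W/2)
    # Column filtering = row filtering applied to the transposed arrays.
    ll, lh = (band.T for band in haar_rows(low.T))   # each (H/2, W/2)
    hl, hh = (band.T for band in haar_rows(high.T))
    return ll, lh, hl, hh


img = np.arange(64, dtype=float).reshape(8, 8)       # a linear intensity ramp
ll, lh, hl, hh = dwt2_haar(img)
print([band.shape for band in (ll, lh, hl, hh)])     # [(4, 4), (4, 4), (4, 4), (4, 4)]
```

On this smooth ramp the diagonal band HH is identically zero, while each of the other two detail bands is constant, reflecting the constant gradient along rows and columns.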

2.2.2. CNN

The 2D DWT decomposes an image into frequency sub-bands (approximation and detail coefficients) at various scales. CNNs are designed to learn hierarchical features and can therefore effectively extract relevant features from these sub-bands. CNNs use local receptive fields (small windows) to capture spatial patterns, and applying them to sub-bands that each represent a different frequency component helps identify relevant textures, edges, and structural features. CNNs are also largely translation invariant, meaning they can recognize features regardless of their position in the image. Combining the DWT with CNNs thus creates deep hierarchies for feature extraction. This combination of DWT sub-bands and CNNs provides a robust framework for extracting relevant image features that leverages both spatial and frequency information, improving wildfire detection performance.

A CNN consists of multiple types of layers: convolutional, pooling, and fully connected layers. A typical architecture repeats a stack of convolutional and pooling layers, followed by one or more fully connected layers. The convolutional layer, the central component of the CNN architecture, performs feature extraction: at each location of the input tensor, the elementwise product between the kernel and the corresponding input patch is computed and summed to obtain the output value at that position, and the resulting output is called a feature map. The pooling layer performs downsampling, which introduces invariance to small shifts and distortions and reduces the number of learnable parameters in subsequent layers. Notably, pooling layers have no learnable parameters; as in convolution, the filter size, stride, and padding are hyperparameters. The deep features extracted by the convolutional and pooling layers are mapped by one or more fully connected layers to the final outputs, such as the probability of each class in a classification task. The final fully connected layer typically has as many output nodes as there are classes [36, 37].
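The convolution and pooling operations described above can be illustrated with a small NumPy sketch. As in most DL frameworks, the "convolution" here is a cross-correlation without kernel flipping. The helper names are hypothetical, and real CNN layers add channels, biases, padding, and learned kernels; the example uses a hand-picked vertical-edge kernel on an image containing a single vertical edge.

```python
import numpy as np


def conv2d_valid(x, k):
    """'Valid' 2D convolution as used in CNNs (cross-correlation): at each
    location, multiply the kernel elementwise with the input patch and sum
    the products to obtain one feature-map value."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out


def max_pool2d(x, size=2, stride=2):
    """Max pooling: no learnable parameters; size and stride are hyperparameters."""
    oh = (x.shape[0] - size) // stride + 1
    ow = (x.shape[1] - size) // stride + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            r, c = i * stride, j * stride
            out[i, j] = x[r:r + size, c:c + size].max()
    return out


x = np.zeros((6, 6))
x[:, 3:] = 1.0                                   # a vertical edge between columns 2 and 3
k = np.array([[-1.0, 0.0, 1.0]] * 3)             # hand-picked vertical-edge kernel
fmap = conv2d_valid(x, k)                        # 4 x 4; each row is [0, 3, 3, 0]
pooled = max_pool2d(fmap)                        # 2 x 2; every entry is 3
```

The feature map responds only near the edge, and pooling keeps that response while halving each spatial dimension.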

2.2.3. Multidimensional Scaling (MDS)

Multidimensional scaling (MDS) is a feature dimensionality reduction (FDR) technique used to visualize dissimilarities and widely applied in multidimensional data analysis [38]. MDS seeks a projection of multidimensional data into a low-dimensional space that preserves the similarities or dissimilarities between data points; that is, the proximity between each pair of objects should be mapped as faithfully as possible to the distance between the corresponding points. By compressing data with many variables into a space of fewer dimensions, MDS yields an intuitive spatial map. MDS translates the distances between each pair of objects into a configuration of points in an abstract Cartesian space, simplifying the representation while retaining essential information. Because MDS can operate on arbitrary, including non-Euclidean, dissimilarities (and has non-metric variants), it can capture nonlinear structure in the data that linear methods such as principal component analysis (PCA) cannot; with Euclidean distances, however, classical MDS yields the same embedding as PCA up to sign.

The steps of the classical MDS analysis algorithm are given below:

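Step 1: Given the distance matrix $\mathbf{D} = [d_{ij}] \in \mathbb{R}^{n \times n}$, construct the matrix of squared distances $\mathbf{D}^{(2)} = [d_{ij}^2]$.

Step 2: Calculate the inner product matrix by double centering, $\mathbf{B} = -\frac{1}{2}\mathbf{J}\mathbf{D}^{(2)}\mathbf{J}$, where $\mathbf{J} = \mathbf{I}_n - \frac{1}{n}\mathbf{1}\mathbf{1}^\top$ is the centering matrix.

Step 3: Perform the eigendecomposition $\mathbf{B} = \mathbf{E}\mathbf{\Lambda}\mathbf{E}^\top$, with the eigenvalues sorted in descending order.

Step 4: Once the target dimension k is determined, the low-dimensional coordinates are $\mathbf{Z} = \mathbf{E}_k \mathbf{\Lambda}_k^{1/2} \in \mathbb{R}^{n \times k}$, where $\mathbf{E}_k$ is the matrix of the first k eigenvectors of $\mathbf{B}$ and $\mathbf{\Lambda}_k$ is the diagonal matrix of the corresponding k largest eigenvalues. As can be seen, classical MDS and PCA are essentially similar; the difference is that the former works on the n × n matrix of inner products between samples, while the latter works on the covariance matrix of the variables.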
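The classical MDS steps listed above can be sketched in NumPy as follows. The sketch assumes Euclidean distances between feature vectors (the paper does not state which distance it uses) and embeds a fixed set of n samples only; the source does not describe how unseen test images are embedded, so no out-of-sample extension is included. `classical_mds` and `pairwise_dist` are hypothetical names.

```python
import numpy as np


def classical_mds(D, k):
    """Classical (Torgerson) MDS.

    D: (n, n) symmetric matrix of pairwise distances.
    k: target dimension. Returns the (n, k) embedding Z = E_k Lambda_k^{1/2}.
    """
    D = np.asarray(D, dtype=float)
    n = D.shape[0]
    D2 = D ** 2                                   # Step 1: squared distances
    J = np.eye(n) - np.ones((n, n)) / n           # centering matrix
    B = -0.5 * J @ D2 @ J                         # Step 2: inner-product matrix
    eigvals, eigvecs = np.linalg.eigh(B)          # Step 3: B is symmetric
    order = np.argsort(eigvals)[::-1][:k]         # indices of the k largest eigenvalues
    lam = np.clip(eigvals[order], 0.0, None)      # guard against tiny negative values
    return eigvecs[:, order] * np.sqrt(lam)       # Step 4: scale eigenvectors


def pairwise_dist(X):
    """Euclidean distance matrix between the rows of X."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


rng = np.random.default_rng(0)
X = rng.normal(size=(10, 2))
Z = classical_mds(pairwise_dist(X), k=2)
# Z reproduces X up to translation, rotation, and reflection,
# so all pairwise distances are preserved.
```

In the paper's setting, the rows of X would be the fused 4nd-dimensional feature vectors and k the reduced dimension; a 2D toy example is used here only so that the distance preservation can be checked exactly.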

3. Proposed Wildfire Detection

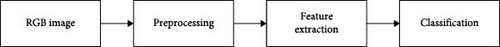

Given the need for early wildfire detection and timely warning of the relevant authorities, this section describes the proposed method for wildfire detection in forests. As shown in Figure 3, the method comprises preprocessing, feature extraction, and classification, which are explained below.

3.1. Preprocessing

3.2. Feature Extraction

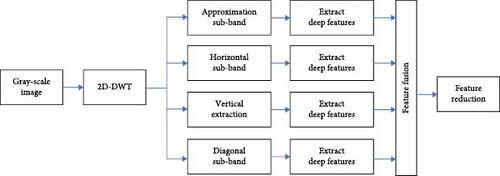

Figure 4 shows the proposed feature extraction method for wildfire detection. Images of fire events carry considerable edge information that should be extracted, although previous studies have not made good use of it. The first step is therefore to extract the image edges, which are concentrated in the high-frequency components of the image. In this study, the 2D DWT is employed for this purpose.

In this study, a single-level 2D DWT with Daubechies 4 (db4) filters was applied. A diagram of the single-level 2D DWT is shown in Figure 5. The result is four sub-bands: the approximation band (LL), the horizontal detail band (LH), the vertical detail band (HL), and the diagonal detail band (HH), denoted xa, xh, xv, and xd, respectively.

To extract deep features, each sub-band obtained in the previous step is passed separately through the selected CNN, and the output of the flatten layer is taken as the deep feature vector of that sub-band. Notably, each sub-band matrix is resized to the dimensions of the CNN's input layer before being fed to the CNN. This yields the feature vectors ya, yh, yv, and yd, corresponding to the sub-bands xa, xh, xv, and xd, respectively, each of size nd × 1. These feature vectors are stacked into the final feature vector yf = [ya; yh; yv; yd] of size 4nd × 1.
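The resize, extract, and concatenate flow can be sketched as below. So that the example runs without pretrained weights, the CNN up to its flatten layer is replaced by a hypothetical stand-in, `toy_extractor` (a fixed random linear map followed by ReLU); `INPUT_SIZE`, `N_D`, and the nearest-neighbour resize are illustrative assumptions, not the paper's settings.

```python
import numpy as np

INPUT_SIZE = (32, 32)   # hypothetical CNN input size (rows, cols)
N_D = 64                # hypothetical per-sub-band feature length n_d


def resize_nearest(band, size):
    """Nearest-neighbour resize of a 2D array to `size` (rows, cols)."""
    rows = np.arange(size[0]) * band.shape[0] // size[0]
    cols = np.arange(size[1]) * band.shape[1] // size[1]
    return band[np.ix_(rows, cols)]


# Hypothetical stand-in for "CNN up to the flatten layer": fixed random weights.
_rng = np.random.default_rng(0)
_W = _rng.normal(size=(N_D, INPUT_SIZE[0] * INPUT_SIZE[1]))


def toy_extractor(band):
    """Resize a sub-band to the input size and map it to an (n_d,) feature vector."""
    x = resize_nearest(band, INPUT_SIZE).ravel()
    return np.maximum(_W @ x, 0.0)


def fused_features(subbands):
    """Stack per-sub-band features (y_a, y_h, y_v, y_d) into y_f of length 4 * n_d."""
    return np.concatenate([toy_extractor(b) for b in subbands])


rng = np.random.default_rng(1)
bands = [rng.random((150, 150)) for _ in range(4)]   # stand-ins for x_a, x_h, x_v, x_d
y_f = fused_features(bands)                           # shape (4 * N_D,) = (256,)
```

Keeping the sub-band order fixed matters: the first n_d entries of y_f always come from the approximation band, so every image yields a comparable feature layout for the later reduction and classification steps.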

The final feature vector contains many features, often with redundant information such as correlated or duplicate features. Feature dimensionality reduction (FDR) mitigates the effect of redundant or irrelevant information by mapping the useful information in the original features onto fewer features. In this study, MDS is used for this purpose.

3.3. Classification

In the last step, the proposed method classifies the reduced features obtained from MDS to determine whether the image contains fire events. To this end, this study evaluates several classifiers and compares their performance.

Support vector machine (SVM): SVM is a robust classifier that is widely used for binary classification because of its relatively low computational complexity and ease of use. SVM finds the optimal hyperplane, i.e., the one that maximizes the margin between the classes. A linear SVM is used in this paper.
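The margin-maximization idea behind the linear SVM can be illustrated with a short NumPy sketch that minimizes the regularized hinge loss by full-batch subgradient descent. It illustrates the objective only: the solver, the regularization weight `lam`, the step size, and the function names are assumptions, and this is not the MATLAB routine used in the paper.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=500):
    """Soft-margin linear SVM via subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b))), with y in {-1, +1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                      # samples inside or beyond the margin
        ya = y[active][:, None]
        grad_w = lam * w - (ya * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w = w - lr * grad_w
        b = b - lr * grad_b
    return w, b


def predict_svm(X, w, b):
    """Sign of the decision function: +1 (e.g., wildfire) or -1 (non-wildfire)."""
    return np.where(X @ w + b >= 0, 1, -1)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(2.0, 0.5, size=(50, 2)),    # positive class
               rng.normal(-2.0, 0.5, size=(50, 2))])  # negative class
y = np.concatenate([np.ones(50), -np.ones(50)])
w, b = train_linear_svm(X, y)
acc = np.mean(predict_svm(X, w, b) == y)
```

Only samples with margin below 1 contribute to the gradient, which is the sense in which the SVM solution depends on the points nearest the boundary.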

k-nearest neighbors (kNN): kNN is a well-established ML classification algorithm and a simple classifier that has been used for wildfire detection. A test sample is assigned the majority class among its k nearest samples in the training data. Thus, the value of k strongly affects the performance of kNN.
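A minimal pure-Python sketch of the kNN rule follows, assuming Euclidean distance and plain majority voting (the paper specifies neither the distance metric nor k). `knn_predict` is a hypothetical name.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Assign x the majority label among its k nearest training samples.

    Neighbours are sorted by Euclidean distance; on a vote tie, Counter keeps
    first-encountered order, so the label of the nearer neighbour wins.
    """
    neighbours = sorted(
        (math.dist(xi, x), yi) for xi, yi in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]


train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["non-wildfire"] * 3 + ["wildfire"] * 3
p1 = knn_predict(train_X, train_y, (0.5, 0.5), k=3)
p2 = knn_predict(train_X, train_y, (5.5, 5.2), k=3)
```

kNN has no training phase beyond storing the samples, so all the cost is at prediction time, which grows with the size of the training set.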

Decision tree: This supervised ML classifier recursively splits a dataset into subsets based on specific features. It uses a tree-like structure consisting of a root node, internal decision nodes, and leaf (end) nodes. The root node represents the whole dataset, which is split into branches; the internal nodes are called decision nodes, and each leaf node represents a predicted class.
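The core operation of a decision tree, choosing the split at a node, can be sketched as below. It assumes the Gini impurity criterion, which the paper does not specify, and `best_split` is a hypothetical helper that exhaustively tries every observed threshold on each feature. A full tree applies it recursively from the root until the leaves are pure or a stopping rule is met.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_c p_c^2 of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Return (feature, threshold, impurity) for the split x[f] <= t that
    minimises the size-weighted Gini impurity of the two child nodes.
    If no split improves on the parent, feature and threshold are None."""
    best = (None, None, gini(y))          # parent impurity (no split)
    n = len(y)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (f, t, score)
    return best


X = [[0.1, 5], [0.2, 3], [0.8, 4], [0.9, 6]]
y = ["non-wildfire", "non-wildfire", "wildfire", "wildfire"]
split = best_split(X, y)    # feature 0 at threshold 0.2 separates the classes
```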

4. Results

This section evaluates the efficacy of the proposed method in detecting wildfires. We first describe the simulation setup and then present the results.

4.1. Simulation Setup

The simulations were carried out in MATLAB R2023a on a computer with the following specifications: CPU: Intel Core i7-13700H; RAM: 32 GB; GPU: NVIDIA GeForce RTX 3050; HDD: 1 TB. We use 10-fold cross-validation to partition the dataset into training and test data. This scheme randomly divides the dataset into 10 equally sized parts and repeats the training and test procedure 10 times: in each iteration, one part is used as test data and the remaining nine parts are used to train the proposed method. Every part thus serves as test data once, and the results are averaged over the 10 iterations.
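A minimal sketch of the 10-fold partitioning, assuming a simple shuffled, non-stratified split (the paper does not say whether folds were stratified by class). `kfold_indices` is a hypothetical helper; with the 1900 images of Table 1, each fold holds 190 test images.

```python
import random


def kfold_indices(n_samples, n_folds=10, seed=0):
    """Shuffle sample indices, split them into n_folds nearly equal parts, and
    yield (train_idx, test_idx) for each of the n_folds iterations."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::n_folds] for i in range(n_folds)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


splits = list(kfold_indices(1900, n_folds=10))
```

Each sample appears in exactly one test fold, so averaging the 10 fold results uses every image for testing once.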

| Parameter | Value |
|---|---|
| Optimizer | Stochastic gradient descent with momentum (SGDM) |
| Loss function | Cross-entropy |
| Number of epochs | 50 |
| Batch size | 16 |
| Learning rate | 0.0001 |
| Momentum | 0.85 |

The following data augmentation techniques were applied:

1. Adding zero-mean Gaussian noise with variances of 0.005 and 0.01 to images.
2. Applying gamma correction with gamma values ranging from 0.7 to 1.3.
3. Rotating images from −45° to 45° in steps of 5°.
4. Mosaic: combining four randomly cropped images to create a new image.
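The augmentations above can be sketched in NumPy as follows. The sketch assumes intensities scaled to [0, 1] (so the noise variances 0.005 and 0.01 are on that scale), samples gamma uniformly from [0.7, 1.3], and implements mosaic as a 2 × 2 tiling of random crops; the paper does not specify these details, and all function names are hypothetical. Rotation is only enumerated here; an image-library routine such as `scipy.ndimage.rotate` would perform it.

```python
import numpy as np


def add_gaussian_noise(img, var, rng):
    """Add zero-mean Gaussian noise with the given variance; clip to [0, 1]."""
    return np.clip(img + rng.normal(0.0, np.sqrt(var), img.shape), 0.0, 1.0)


def gamma_correct(img, gamma):
    """Gamma correction out = in ** gamma for intensities in [0, 1]."""
    return np.clip(img, 0.0, 1.0) ** gamma


def mosaic(imgs, out_size, rng):
    """Tile random crops of four images into a 2 x 2 grid of size out_size."""
    h, w = out_size[0] // 2, out_size[1] // 2
    tiles = []
    for img in imgs:
        r = rng.integers(0, img.shape[0] - h + 1)
        c = rng.integers(0, img.shape[1] - w + 1)
        tiles.append(img[r:r + h, c:c + w])
    top = np.concatenate(tiles[:2], axis=1)
    bottom = np.concatenate(tiles[2:], axis=1)
    return np.concatenate([top, bottom], axis=0)


ANGLES = np.arange(-45, 46, 5)        # rotation angles: -45 to 45 degrees in 5-degree steps

rng = np.random.default_rng(0)
imgs = [rng.random((64, 64, 3)) for _ in range(4)]
noisy = add_gaussian_noise(imgs[0], 0.01, rng)
bright = gamma_correct(imgs[0], rng.uniform(0.7, 1.3))
mixed = mosaic(imgs, (64, 64), rng)
```

Gamma values below 1 brighten an image and values above 1 darken it, so the [0.7, 1.3] range simulates moderate lighting changes around the original exposure.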

4.2. Classification Accuracy

Table 3 shows the classification accuracy for various combinations of mother wavelet, CNN, and classifier. The mother wavelets are Daubechies 4 (db4), Symlet 8 (sym8), biorthogonal 1.5 (bior1.5), and reverse biorthogonal 3.5 (rbior3.5). AlexNet, MobileNet, and InceptionV3 were used to extract deep features, and SVM, kNN, and decision tree classifiers were used to classify the reduced deep features. The results show that: (1) the db4 wavelet consistently yields better classification results than sym8, bior1.5, and rbior3.5, likely because it balances edge characterization and noise suppression effectively; (2) InceptionV3 outperforms AlexNet and MobileNet in every wavelet–classifier pairing, indicating that its deeper multi-scale filters are beneficial for discriminating wildfire from non-wildfire images; and (3) SVM achieves higher accuracy than kNN and the decision tree on the same feature sets, likely thanks to its margin-maximizing property. Overall, the db4 + InceptionV3 + SVM combination achieves the highest accuracy, about 96.8%.

| Mother wavelet | CNN | SVM | kNN | Decision tree |
|---|---|---|---|---|
| db4 | AlexNet | 0.925 | 0.887 | 0.872 |
| db4 | MobileNet | 0.940 | 0.900 | 0.880 |
| db4 | InceptionV3 | **0.968** | 0.932 | 0.916 |
| sym8 | AlexNet | 0.914 | 0.871 | 0.860 |
| sym8 | MobileNet | 0.928 | 0.885 | 0.871 |
| sym8 | InceptionV3 | 0.955 | 0.919 | 0.904 |
| bior1.5 | AlexNet | 0.890 | 0.852 | 0.836 |
| bior1.5 | MobileNet | 0.905 | 0.868 | 0.852 |
| bior1.5 | InceptionV3 | 0.915 | 0.890 | 0.877 |
| rbior3.5 | AlexNet | 0.905 | 0.864 | 0.850 |
| rbior3.5 | MobileNet | 0.924 | 0.882 | 0.870 |
| rbior3.5 | InceptionV3 | 0.939 | 0.905 | 0.891 |

Note: The bold value is the maximum classification accuracy.

4.3. Confusion Matrix

Table 4 gives the confusion matrix of the proposed method. The confusion matrix is an nc × nc square matrix, where nc is the number of classes (two in this study). It summarizes the predictions in matrix form, showing how many predictions are correct and incorrect for each class, and thus reveals which classes the model confuses with one another. As shown, of the 180 images with wildfire events, only three are wrongly classified as non-wildfire. Likewise, of the 200 images without wildfire events, nine are wrongly classified as containing wildfire. The confusion matrix also yields the sensitivity of each class, i.e., the fraction of that class's images that are correctly classified. For the wildfire class, the sensitivity and precision are 0.9833 and 0.9516, respectively. Given the importance of early wildfire detection and fast action by firefighters, these results confirm the efficiency of the proposed method. Also, the F1 score of 0.9672, the harmonic mean of precision and sensitivity, indicates that the model detects positive cases reliably while keeping false alarms low.

| Actual class | Predicted positive (wildfire) | Predicted negative (non-wildfire) | Sens. | Prec. | F1 score |
|---|---|---|---|---|---|
| Positive (wildfire) | 177 | 3 | 0.9833 | 0.9516 | 0.9672 |
| Negative (non-wildfire) | 9 | 191 | | | |
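The metrics reported in this section follow directly from the four counts in Table 4. The short Python sketch below recomputes them; `binary_metrics` is a hypothetical helper that treats wildfire as the positive class.

```python
def binary_metrics(tp, fn, fp, tn):
    """Metrics for the positive (wildfire) class from a 2 x 2 confusion matrix."""
    total = tp + fn + fp + tn
    sensitivity = tp / (tp + fn)             # recall of the wildfire class
    specificity = tn / (tn + fp)             # recall of the non-wildfire class
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / total
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "precision": precision, "f1": f1}


m = binary_metrics(tp=177, fn=3, fp=9, tn=191)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
# accuracy: 0.9684
# sensitivity: 0.9833
# specificity: 0.9550
# precision: 0.9516
# f1: 0.9672
```

The accuracy, 368/380 ≈ 0.9684, matches the value reported in the abstract and in Table 5.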

4.4. Ablation Study

Table 5 shows the effect of the feature reduction algorithm on the accuracy of the proposed method. As discussed earlier, CNNs extract many deep features from the 2D DWT sub-bands; these features are highly correlated and thus prolong classification, increase complexity, and reduce efficiency, which makes feature reduction crucial. Table 5 compares the accuracy obtained with MDS, PCA, and linear discriminant analysis (LDA), as well as the accuracy obtained without any feature reduction. As shown, every feature reduction method improves classification accuracy. Furthermore, supervised LDA outperforms unsupervised PCA, while MDS achieves the highest accuracy.

| Method | MDS | PCA | LDA | Without feature reduction |
|---|---|---|---|---|
| Accuracy | 0.9684 | 0.9211 | 0.9368 | 0.8895 |

Table 6 compares the efficacy of different optimization algorithms for training the CNNs. According to the results, stochastic gradient descent with momentum (SGDM) achieves higher accuracy than adaptive moment estimation (ADAM) and root mean square propagation (RMSProp).

| Optimizer | ADAM | RMSProp | SGDM |
|---|---|---|---|
| Accuracy | 0.8868 | 0.8737 | 0.9684 |

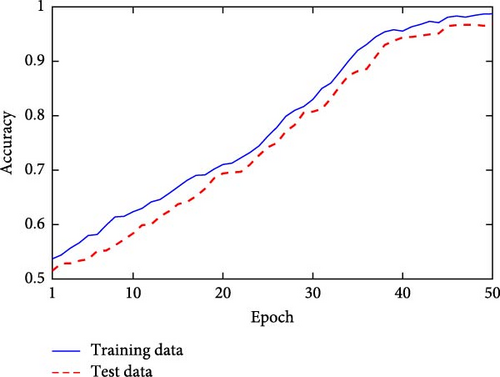

4.5. Convergence Speed

Figure 6 presents the convergence behavior of the proposed method for wildfire detection. As observed, the proposed method converges quickly. Also, the small gap between training and test accuracy indicates that the model has converged without overfitting or underfitting.

4.6. Computational Time Analysis

In this section, we present a temporal-cost table that quantifies the average processing time of each major step of our approach: (1) wavelet decomposition, (2) CNN-based deep feature extraction, (3) dimensionality reduction (MDS), and (4) final classification. Table 7 summarizes the overall computational cost of the proposed method. These results confirm that the CNN forward pass is the primary driver of runtime. However, on modern GPUs or on-board accelerators (such as the NVIDIA Jetson family), the entire pipeline remains practical for near real-time applications, particularly if further optimizations (such as model pruning or quantization) are applied.

| Stage | Operation | Average time |
|---|---|---|
| 1. Wavelet transform | One-level 2D DWT (Daubechies-4) | 3–5 ms |
| 2. CNN feature extraction | Forward pass (InceptionV3, batch size = 16) | 110–130 ms |
| 3. Dimensionality reduction | MDS (classical) on concatenated features | 8–12 ms |
| 4. Classification | SVM inference | 1–2 ms |
| Total per image | All steps combined | 122–149 ms |
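Per-stage timings like those in Table 7 can be collected with a simple harness. The sketch below uses Python's `time.perf_counter`; `time_stages` is a hypothetical helper, and the stage functions in the usage example are trivial placeholders, not the actual pipeline. For reference, the totals in Table 7 (122–149 ms per image) correspond to roughly 6.7 to 8.2 images per second.

```python
import time
from statistics import mean


def time_stages(stages, x, repeats=20):
    """Run (name, fn) stages in sequence on input x, feeding each output to the
    next stage, and return the mean wall-clock time per stage in milliseconds
    plus their total."""
    timings = {name: [] for name, _ in stages}
    for _ in range(repeats):
        y = x
        for name, fn in stages:
            t0 = time.perf_counter()
            y = fn(y)
            timings[name].append((time.perf_counter() - t0) * 1e3)
    report = {name: mean(ts) for name, ts in timings.items()}
    report["total"] = sum(report.values())
    return report


# Placeholder stages standing in for DWT, CNN, MDS, and SVM.
stages = [
    ("wavelet", lambda x: [v * 2 for v in x]),
    ("features", lambda x: [sum(x)]),
    ("reduce", lambda x: x[:1]),
    ("classify", lambda x: x[0] > 0),
]
report = time_stages(stages, list(range(1000)), repeats=5)
```

In a GPU setting, the device must be synchronized before each timestamp is read; otherwise asynchronous kernel launches make the CNN stage appear faster than it is.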

5. Conclusion

Given the unique role of forests and their extensive destruction by frequent wildfires, early wildfire detection is crucial to protect these natural resources. Hence, this study proposed a new method for detecting forest wildfires in UAV images. The proposed method extracts deep features from the four sub-bands of a one-level 2D DWT and merges them into the final feature vector. MDS is then used to remove redundant features and reduce the computational complexity. Finally, a classifier determines whether an image contains wildfire events. The FLAME dataset was used to train and evaluate the proposed method, and the performance of several CNNs (AlexNet, MobileNet, and InceptionV3) and classifiers (SVM, kNN, and decision tree) was evaluated. The InceptionV3–SVM combination achieved the highest accuracy, 0.9684. The per-class accuracies for wildfire and non-wildfire images were 0.9833 and 0.955, respectively, indicating the high efficiency of the proposed method. It was also shown that MDS outperforms conventional methods such as PCA and LDA for wildfire detection.

This research classified images into wildfire and non-wildfire categories, and the fire-affected regions were not determined. In the future, we can use semantic segmentation models to segment wildfire-affected regions precisely, aiding firefighting efforts. Also, we can consider hybrid DL models that combine CNNs with attention mechanisms to capture local and global features.

Conflicts of Interest

The authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest, or non-financial interest in the subject matter or materials discussed in this manuscript.

Funding

The authors received no specific funding for this work.

Open Research

Data Availability Statement

Data is available online at: https://ieee-dataport.org/open-access/flame-dataset-aerial-imagery-pile-burn-detection-using-drones-uavs.