Fractional Integration via Picard Method for Solving Fractional Differential-Algebraic Systems

Abstract

In this paper, we apply an efficient iterative method, the fractional Picard method, to find approximate solutions of systems of fractional algebraic differential equations (SFADEs). Comparison with the exact solutions shows that the method is highly efficient. In addition, a genetic algorithm (GA) was used to select the best values of the fractional-derivative orders, which sped up the search for accurate approximate solutions and increased the efficiency of Picard’s fractional integral method. MATLAB was used to compute the approximate solutions.

1. Introduction

The French mathematician Charles Emile Picard (1856–1941), whose name is closely associated with the technique, made early and substantial contributions to the systematic development of iterative methods for initial and boundary value problems in both ordinary and partial differential equations. The theory and application of iterative techniques for differential equations attracted a great deal of attention throughout the 20th century and beyond, and both have grown significantly [1]. Many researchers have used the Picard method, either alone or combined with other methods; we mention some of them here. The study [2] compares Picard’s iteration method with the Adomian decomposition method (ADM) for solving nonlinear differential equations. In [3], the homotopy perturbation and Picard techniques were employed to solve time-fractional Schrödinger equations. In [4], approximate solutions of fractional differential equations were obtained by applying Picard’s iterative technique. In [5], Picard’s iterated approximation was generalized to solve fractional differential equations with an initial condition. The objective of [6] is to extend the applications of the Boundary Value Problems Picard Method (BVPP) to ODEs with nonlinear and Robin boundary conditions, while [7] studied a class of new Picard–Mann iteration processes. The successive approximations method (Picard–Lindelöf method) has also been applied to multipantograph delay equations and neutral functional-differential equations [8]. An inexact Picard iteration method, named Picard-SHSS, was proposed for solving the absolute value equation [9]. A predator–prey model was solved numerically with the ADM and the Picard iteration method (PIM) [10].
The paper [11] proposed an iterative scheme for second-order fuzzy differential equations with fuzzy initial conditions based on the fuzzy Picard method, and discussed its convergence under generalized H-differentiability. A Picard iterative approach was used to solve a ψ-Hilfer fractional differential problem in [12]. Picard’s iteration method has been proposed as a technique for solving initial value problems for first- and second-order linear differential equations [13]. The Picard method, combined with self-canceling noise terms, was used to solve nonlinear quadratic Volterra integral equations in [14]. Differential equations arising in fractal heat transfer with a local fractional derivative were solved using the Picard successive approximation method [15]. The PIM was applied to fractional quadratic Riccati differential equations [16].

Genetic algorithms (GAs) are optimization algorithms inspired by natural selection. A GA maintains a population of candidate solutions to a problem, and this population evolves iteratively toward better solutions. The algorithm selects the fittest individuals in the population and uses them to create new individuals through recombination (crossover) and mutation, so that over successive generations the population moves toward better solutions [17]. Many scientific fields have used GAs to find and improve solutions to problems that require optimization [18–20].

Fractional differential-algebraic systems arise in many fields, including quantum mechanics, biomathematics, circuit systems, control systems, and electric motors, and they serve many other sciences [21–24]. This diversity challenges researchers to find reliable methods for solving the systems used in real applications. Accordingly, one of the main aims of this work was a simple, accessible method: a classical iterative procedure, adapted to produce solutions close to the exact solution while preserving the properties of the system, in particular the order of the fractional derivative, which plays an essential role in the behavior of these systems. A second challenge was to find the values of the fractional-derivative orders that bring the approximate solutions closest to the exact solution. These two challenges motivated the use of the fractional Picard method to solve fractional differential-algebraic systems, combined with a GA to find the optimal orders.

The remainder of this paper is organized as follows. Section 2 reviews Picard’s method together with the definition and some properties of fractional integration. Section 3 presents the main concepts and steps of the GA used to obtain optimal values for the approximate solution. Section 4 explains the basic steps of the solution procedure, Section 5 presents the applications and results, and Section 6 concludes the paper.

2. Picard Method and Fractional Integration Definition

We now review the proposed solution method, which combines Picard’s method with fractional integration to solve systems containing fractional derivatives. The order of the fractional derivative is retained throughout the iteration, so the fractional properties of these systems are preserved.

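Definition 1. The Riemann–Liouville fractional integral of order α > 0 of a function f is defined as

$$I^{\alpha} f(t) = \frac{1}{\Gamma(\alpha)} \int_{0}^{t} (t-\tau)^{\alpha-1} f(\tau)\, d\tau, \qquad t > 0,$$

where Γ is the gamma function, and $I^{0} f = f$.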

The Riemann–Liouville integral has the following properties:
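Two standard consequences of Definition 1, which make term-by-term Picard iteration possible, are the power rule $I^{\alpha} t^{\gamma} = \frac{\Gamma(\gamma+1)}{\Gamma(\gamma+\alpha+1)}\, t^{\gamma+\alpha}$ for $\gamma > -1$, and the semigroup property $I^{\alpha} I^{\beta} f = I^{\alpha+\beta} f$ for $\alpha, \beta > 0$. The Python sketch below evaluates the power rule and checks it against a direct numerical quadrature of Definition 1. It is an illustration only: the paper’s computations were done in MATLAB, and the function names here are hypothetical.

```python
import math
from typing import Callable


def rl_integral_power(alpha: float, gamma: float) -> tuple[float, float]:
    """Power rule: I^alpha t^gamma = c * t^(gamma + alpha); returns (c, gamma + alpha).

    Valid for alpha > 0 and gamma > -1.
    """
    c = math.gamma(gamma + 1) / math.gamma(gamma + alpha + 1)
    return c, gamma + alpha


def rl_integral_numeric(f: Callable[[float], float], alpha: float, t: float,
                        n: int = 20000) -> float:
    """Midpoint-rule approximation of Definition 1,
    I^alpha f(t) = (1/Gamma(alpha)) * int_0^t (t - tau)^(alpha - 1) f(tau) dtau.

    The substitution s = (t - tau)^alpha / alpha (so ds = -(t - tau)^(alpha - 1) dtau)
    removes the weak singularity at tau = t: the integral becomes
    int_0^{t^alpha / alpha} f(t - (alpha * s)^(1 / alpha)) ds.
    """
    upper = t ** alpha / alpha
    h = upper / n
    total = 0.0
    for k in range(n):
        s = (k + 0.5) * h  # midpoint of the k-th subinterval in s
        total += f(t - (alpha * s) ** (1.0 / alpha))
    return total * h / math.gamma(alpha)


# Compare the closed form with quadrature for I^0.6 t at t = 0.8.
c, p = rl_integral_power(0.6, 1.0)
print(c * 0.8 ** p, rl_integral_numeric(lambda tau: tau, 0.6, 0.8))
```

For α = 1 the power rule reduces to the ordinary integral, e.g. $I^{1} t = t^{2}/2$, which is a quick sanity check on any implementation.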

3. GA

1. Start with a population of candidate solutions.
2. Assess the fitness of each chromosome using a fitness function.
3. Produce a new population through the following operations:
   - Selection: choose two chromosomes from the population according to their fitness.
   - Crossover: combine the genetic material of the two chromosomes to produce new offspring.
   - Mutation: alter the offspring to maintain genetic diversity and explore new potential solutions.
4. Once the best candidate in the current population has been identified, repeat the procedure from step 1 with the new population to achieve further optimization.
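The four steps above can be sketched as a minimal binary GA in Python. This is an illustration under stated assumptions, not the MATLAB implementation used in the paper: tournament selection, single-point crossover, bit-flip mutation, elitism, the population size, and the toy fitness function (closeness of the decoded value to 0.7) are all our choices.

```python
import random

N_BITS = 8  # chromosome length, matching the 8-bit encoding used in Table 1


def decode(bits: str) -> float:
    """Read a bit string b1...bn as the binary fraction 0.b1...bn in [0, 1)."""
    return int(bits, 2) / 2 ** len(bits)


def fitness(bits: str) -> float:
    """Hypothetical toy objective (higher is better): closeness of the decoded value to 0.7."""
    return -(decode(bits) - 0.7) ** 2


def select(pop: list[str], rng: random.Random) -> str:
    """Step 3, selection: tournament of two; the fitter chromosome is chosen."""
    a, b = rng.sample(pop, 2)
    return a if fitness(a) >= fitness(b) else b


def crossover(p1: str, p2: str, rng: random.Random) -> tuple[str, str]:
    """Step 3, crossover: single cut point; the parents exchange their tails."""
    cut = rng.randint(1, N_BITS - 1)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]


def mutate(bits: str, rng: random.Random, rate: float = 0.02) -> str:
    """Step 3, mutation: each bit flips independently with probability `rate`."""
    return "".join(("1" if b == "0" else "0") if rng.random() < rate else b for b in bits)


def run_ga(pop_size: int = 20, generations: int = 40, seed: int = 0) -> str:
    rng = random.Random(seed)
    # Step 1: random initial population.
    pop = ["".join(rng.choice("01") for _ in range(N_BITS)) for _ in range(pop_size)]
    for _ in range(generations):
        # Step 2: evaluate fitness and carry the best chromosome over unchanged (elitism).
        new_pop = [max(pop, key=fitness)]
        # Step 3: fill the rest of the next generation by selection, crossover, mutation.
        while len(new_pop) < pop_size:
            c1, c2 = crossover(select(pop, rng), select(pop, rng), rng)
            new_pop += [mutate(c1, rng), mutate(c2, rng)]
        # Step 4: repeat with the new population.
        pop = new_pop[:pop_size]
    return max(pop, key=fitness)


best = run_ga()
print(best, decode(best))
```

Elitism guarantees that the best fitness never decreases from one generation to the next; without it, mutation could occasionally destroy the best chromosome found so far.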

An important feature of the GA, and one that encourages researchers to use it, is that it improves candidate solutions over successive stages, or generations. Each individual in the initial population represents a potential solution, and the algorithm refines these solutions over several generations to find the best one. Swarm algorithms, by contrast, rely mainly on the behavior of individuals, the nature of their interactions, and how each individual responds to the others; these interactions improve the performance of the swarm and hence the quality of the solutions. In short, the GA improves the solution over successive generations, whereas swarm algorithms rely on the behavior of the individuals in the swarm.

4. The Basic Steps for the Solution

Second step: the definition and properties of fractional integration given in Section 2 are applied.

Third step: the iteration is repeated n times to produce the sequence of functions $x_0(t), x_1(t), x_2(t), \dots$, stopping when the approximate solution is close to the exact solution. MATLAB was used for these computations.
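To make the third step concrete, here is a hedged Python sketch of Picard iteration combined with the power rule for $I^{\alpha}$. It assumes a hypothetical linear test system $D^{\alpha_i} x_i = \sum_j A_{ij} x_j$ with Caputo-type derivatives, so that each iterate is $x_i^{(k+1)}(t) = x_i(0) + I^{\alpha_i}\big[\sum_j A_{ij} x_j^{(k)}\big](t)$. The paper’s own systems and MATLAB code are not reproduced here, and all function names are ours. Iterates are stored as finite sums $\sum c\, t^{p}$, so every fractional integral is computed exactly.

```python
import math
from collections import defaultdict


def rl_integrate(series: dict[float, float], alpha: float) -> dict[float, float]:
    """Apply I^alpha term by term via I^alpha t^p = Gamma(p+1)/Gamma(p+alpha+1) * t^(p+alpha)."""
    return {p + alpha: c * math.gamma(p + 1) / math.gamma(p + alpha + 1)
            for p, c in series.items()}


def picard_linear_system(A, x0, alphas, n_iter):
    """Fractional Picard iteration for the hypothetical linear test system
    D^{alpha_i} x_i = sum_j A[i][j] x_j,  x_i(0) = x0[i]  (Caputo-type derivative assumed).

    Each iterate is a dict {exponent: coefficient} representing sum_k c_k * t**p_k.
    """
    m = len(x0)
    xs = [{0.0: float(x0[i])} for i in range(m)]  # x^0(t) = x(0), a constant
    for _ in range(n_iter):
        new_xs = []
        for i in range(m):
            rhs = defaultdict(float)  # right-hand side evaluated at the previous iterate
            for j in range(m):
                for p, c in xs[j].items():
                    rhs[p] += A[i][j] * c
            term = rl_integrate(rhs, alphas[i])
            term[0.0] = term.get(0.0, 0.0) + x0[i]  # add the initial value
            new_xs.append(term)
        xs = new_xs  # all components are updated from the previous iterate
    return xs


def evaluate(series: dict[float, float], t: float) -> float:
    """Evaluate sum_k c_k * t**p_k at a point t >= 0."""
    return sum(c * t ** p for p, c in series.items())


# alpha1 = alpha2 = 1: D x = y, D y = -x, x(0) = 0, y(0) = 1 has solution (sin t, cos t).
xs = picard_linear_system([[0.0, 1.0], [-1.0, 0.0]], [0.0, 1.0], [1.0, 1.0], n_iter=20)
print(evaluate(xs[0], 0.8), math.sin(0.8))
print(evaluate(xs[1], 0.8), math.cos(0.8))
```

For a scalar problem $D^{\alpha} x = -x$, $x(0) = 1$, the same iteration reproduces the partial sums $\sum_k (-1)^k t^{k\alpha}/\Gamma(k\alpha+1)$ of the Mittag-Leffler function, which shows how the fractional order is carried through every iterate rather than being discarded.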

Fourth step: to improve the solution and obtain the best values of $\alpha_i$, we apply the GA. Starting values of the fractional-derivative order between 0.1 and 0.9 are converted to binary form (strings of zeros and ones), as shown in Table 1.

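Table 1. Binary representation of the initial values of the fractional-derivative order (first eight binary digits, truncated).

| Decimal number | Binary number |
|---|---|
| 0.1 | 0.00011001 |
| 0.2 | 0.00110011 |
| 0.3 | 0.01001100 |
| 0.4 | 0.01100110 |
| 0.5 | 0.10000000 |
| 0.6 | 0.10011001 |
| 0.7 | 0.10110011 |
| 0.8 | 0.11001100 |
| 0.9 | 0.11100110 |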
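Each entry of Table 1 keeps the first eight binary digits after the point, truncating rather than rounding; for example, $0.1 \approx 0.00011001_2 = 25/256 = 0.09765625$. A Python sketch of this encoding and its inverse follows. The function names and the fixed 8-bit length (read off Table 1) are our assumptions.

```python
def to_binary_fraction(value: float, n_bits: int = 8) -> str:
    """Return the first n_bits binary digits of value in [0, 1), truncating (no rounding)."""
    bits = []
    for _ in range(n_bits):
        value *= 2
        bit = int(value)  # the integer part is the next binary digit
        bits.append(str(bit))
        value -= bit
    return "0." + "".join(bits)


def from_binary_fraction(s: str) -> float:
    """Decode '0.b1b2...bn' back to sum_k b_k * 2**(-k)."""
    digits = s.split(".")[1]
    return sum(int(b) * 2.0 ** -(k + 1) for k, b in enumerate(digits))


for alpha in (0.1, 0.5, 0.9):
    code = to_binary_fraction(alpha)
    print(alpha, code, from_binary_fraction(code))
```

Because doubling and subtracting the integer part are exact in binary floating point, the loop reproduces the leading bits of each value exactly, and decoding shows the small truncation error introduced by the 8-bit representation.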

This encoding generates the initial population, to which the selection, crossover, and mutation steps described in Section 3 are then applied. Crossover between the $\alpha_i$ chromosomes, followed by mutation, led to the fractional-derivative orders that give results closest to the exact solution.
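To illustrate crossover and mutation on the chromosomes of Table 1, the Python sketch below crosses the 8-bit encodings of 0.1 and 0.9 at a cut point after the fourth bit and then flips the last bit of one child. The cut point and the mutated bit are arbitrary choices for this example, not values taken from the paper.

```python
def crossover(parent1: str, parent2: str, cut: int) -> tuple[str, str]:
    """Single-point crossover: exchange the bits after position `cut`."""
    return parent1[:cut] + parent2[cut:], parent2[:cut] + parent1[cut:]


def mutate(bits: str, position: int) -> str:
    """Flip the bit at `position` (0-based)."""
    flipped = "1" if bits[position] == "0" else "0"
    return bits[:position] + flipped + bits[position + 1:]


def decode(bits: str) -> float:
    """Read an 8-bit string as the binary fraction 0.b1...b8."""
    return int(bits, 2) / 2 ** len(bits)


a1 = "00011001"  # 0.1 from Table 1
a2 = "11100110"  # 0.9 from Table 1
c1, c2 = crossover(a1, a2, cut=4)
print(c1, decode(c1))  # 00010110 0.0859375
print(c2, decode(c2))  # 11101001 0.91015625
m = mutate(c2, position=7)
print(m, decode(m))    # 11101000 0.90625
```

Crossover mixes the high-order bits of one parent with the low-order bits of the other, so children inherit the coarse magnitude of one α and a fine adjustment from the other; mutation of a low-order bit makes only a small change to α.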

5. Application to Selected Problems

The effectiveness of the method is examined on the following SFADEs. All results were computed in MATLAB.

Example 1. Consider the following SFADEs [29].

The exact solutions are

Equation (17a) can be written in the form

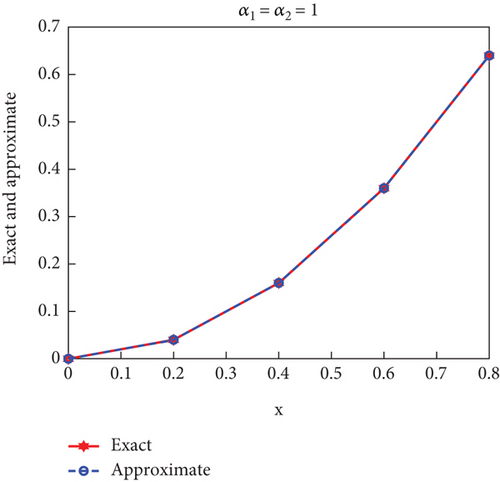

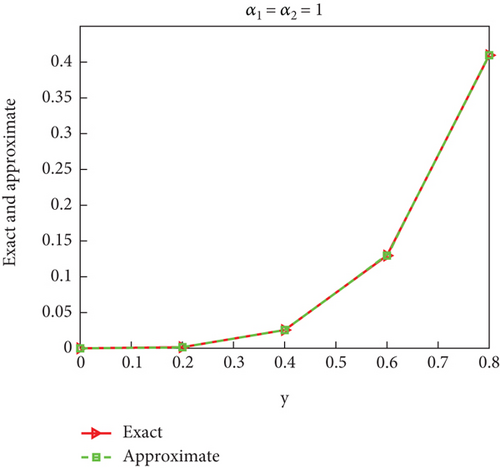

Case 1. Assume that α1 = α2 = 1.

Figure 1 shows the solution for α1 = α2 = 1; the approximate and exact solutions coincide.

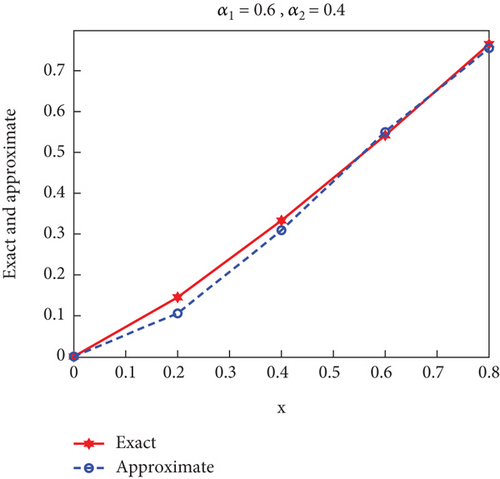

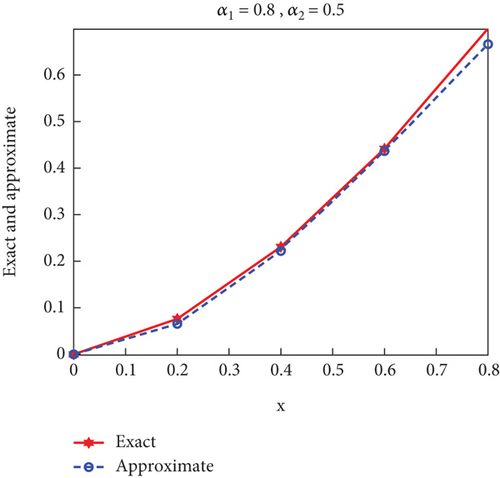

Case 2. In this case, we study how different values of α1 and α2 affect the approximate solution and its agreement with the exact solution. Taking α1 = 0.6, α2 = 0.4 and α1 = 0.8, α2 = 0.5 gives the results shown in Figure 2.

We now examine how α1 and α2 influence each other, and how the results behave when these values are not optimal. Varying α1 and α2 revealed an inverse relationship between their values, as shown in Figure 2 for x.

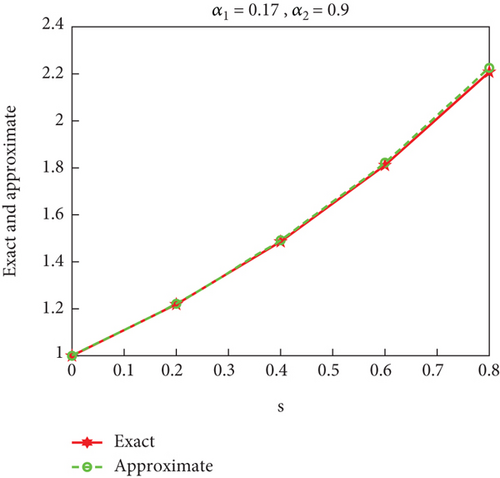

To bring the approximate solutions closer to the exact solution without removing the effect of the fractional-derivative order, a GA was used, following the procedure described in Section 4, to find the best values of α; the resulting solutions are shown in Figure 3.

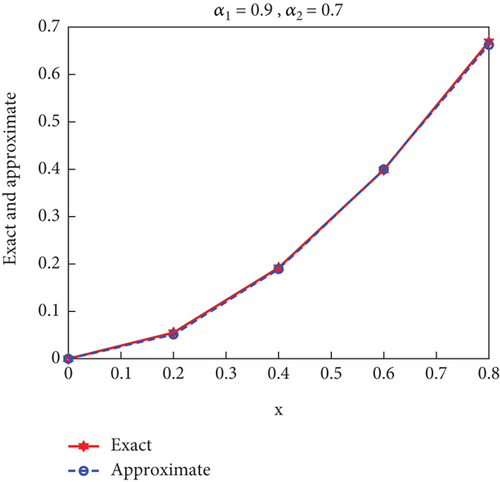

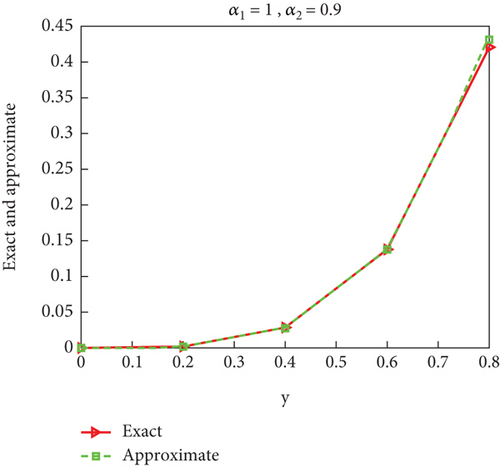

Tables 2 and 3 show how the optimal values of α1 and α2 bring the approximate values of x and y, respectively, close to the exact solution while preserving the fractional behavior of the system. The best values were α1 = 0.9, α2 = 0.7 for x and α1 = 1, α2 = 0.9 for y.

Example 2. Consider another fractional differential-algebraic system [30]. Following the same steps as in Example 1, we solve it as follows:

For α1 = α2 = 1, the exact solutions are $s(t) = e^{t}$, $q(t) = e^{2t}$, $p(t) = e^{-t}$.

Applying Picard’s method, we obtain

The expression for the first equation in the system (36a) is as follows:

By substituting the initial values and applying the Riemann–Liouville fractional integral, we obtain

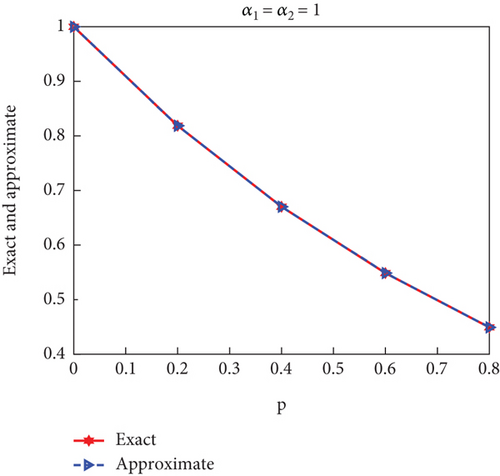

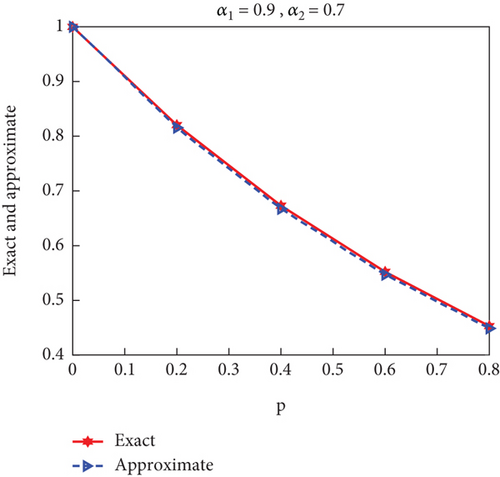

Case 3. Assume that α1 = α2 = 1.

Figure 4 shows that, for α1 = α2 = 1, the approximate and exact solutions coincide.

Table 2. Exact and approximate values of x for α1 = 0.9, α2 = 0.7 (Example 1).

| t | α1 | α2 | x (exact) | x (approximate) | Absolute error |
|---|---|---|---|---|---|
| 0 | 0.9 | 0.7 | 0 | 0 | 0 |
| 0.2 | 0.9 | 0.7 | 0.046984757723521 | 0.045317124246040 | 0.001667633477481 |
| 0.4 | 0.9 | 0.7 | 0.175353316221635 | 0.173969894157095 | 0.001383422064540 |
| 0.6 | 0.9 | 0.7 | 0.378867520493613 | 0.378203909305038 | 0.000663611188575 |
| 0.8 | 0.9 | 0.7 | 0.654441716840687 | 0.646620796327241 | 0.007820920513446 |

Table 3. Exact and approximate values of y for α1 = 1, α2 = 0.9 (Example 1).

| t | α1 | α2 | y (exact) | y (approximate) | Absolute error |
|---|---|---|---|---|---|
| 0 | 1 | 0.9 | 0 | 0 | 0 |
| 0.2 | 1 | 0.9 | 0.001940869713876 | 0.001756583063930 | 0.000184286649946 |
| 0.4 | 1 | 0.9 | 0.028575429474892 | 0.027526929715363 | 0.001048499759528 |
| 0.6 | 1 | 0.9 | 0.137792903508991 | 0.137670240922619 | 0.000122662586373 |
| 0.8 | 1 | 0.9 | 0.420716117025556 | 0.431366939095611 | 0.010650822070055 |
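The absolute-error columns of Tables 2 and 3 can be recomputed from the tabulated exact and approximate values. The Python check below does this and summarizes each table by its maximum and mean absolute error. Such a scalar is one plausible fitness for the GA of Section 3, but the paper does not state which fitness function it used, so this choice is an assumption for illustration.

```python
# Values copied from Table 2 (x) and Table 3 (y) at t = 0.2, 0.4, 0.6, 0.8.
x_exact = [0.046984757723521, 0.175353316221635, 0.378867520493613, 0.654441716840687]
x_approx = [0.045317124246040, 0.173969894157095, 0.378203909305038, 0.646620796327241]
x_err_tab = [0.001667633477481, 0.001383422064540, 0.000663611188575, 0.007820920513446]

y_exact = [0.001940869713876, 0.028575429474892, 0.137792903508991, 0.420716117025556]
y_approx = [0.001756583063930, 0.027526929715363, 0.137670240922619, 0.431366939095611]
y_err_tab = [0.000184286649946, 0.001048499759528, 0.000122662586373, 0.010650822070055]


def abs_errors(exact, approx):
    """Pointwise |exact - approximate|, as in the last column of the tables."""
    return [abs(e - a) for e, a in zip(exact, approx)]


for name, ex, ap, tab in [("x", x_exact, x_approx, x_err_tab),
                          ("y", y_exact, y_approx, y_err_tab)]:
    errs = abs_errors(ex, ap)
    # Recomputed errors agree with the tabulated column up to rounding in the last digit.
    assert all(abs(e - t) < 1e-12 for e, t in zip(errs, tab))
    print(name, "max abs error:", max(errs), "mean abs error:", sum(errs) / len(errs))
```

In both tables the largest error occurs at t = 0.8, the end of the interval, which is typical for truncated Picard iterates expanded around t = 0.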

As in the previous example, the GA was applied to obtain the best α values; the results are shown in Figure 5.

Tables 4 and 5 list the results for p and s, respectively, obtained with the optimal values of the fractional orders α1 and α2.

Table 4. Exact and approximate values of p for α1 = 0.9, α2 = 0.7 (Example 2).

| t | α1 | α2 | p (exact) | p (approximate) | Absolute error |
|---|---|---|---|---|---|
| 0 | 0.9 | 0.7 | 1.000000000000000 | 1.000000000000000 | 0 |
| 0.2 | 0.9 | 0.7 | 0.820369853137831 | 0.815557500672764 | 0.004812352465067 |
| 0.4 | 0.9 | 0.7 | 0.673006695937386 | 0.667312040880429 | 0.005694655056958 |
| 0.6 | 0.9 | 0.7 | 0.552114404306931 | 0.546855264192774 | 0.005259140114157 |
| 0.8 | 0.9 | 0.7 | 0.452938012776558 | 0.448688378047819 | 0.004249634728738 |

Table 5. Exact and approximate values of s for α1 = 1, α2 = 0.9 (Example 2).

| t | α1 | α2 | s (exact) | s (approximate) | Absolute error |
|---|---|---|---|---|---|
| 0 | 1 | 0.9 | 1.000000000000000 | 1.000000000000000 | 0 |
| 0.2 | 1 | 0.9 | 1.218962393821643 | 1.221402947361165 | 0.002440553539522 |
| 0.4 | 1 | 0.9 | 1.485869317551390 | 1.491826785628538 | 0.005957468077148 |
| 0.6 | 1 | 0.9 | 1.811218820228573 | 1.822119710254121 | 0.010900890025548 |
| 0.8 | 1 | 0.9 | 2.207807628840633 | 2.225541787371441 | 0.017734158530808 |

6. Conclusion

- Combining fractional integration with Picard’s method produced approximate solutions in close agreement with the exact solutions for the systems of equations considered here.
- The values of α1 and α2 affected the approximate solution obtained by the proposed method, with an inverse relationship between them. They also influence each other through the governing equations: in the first example, the value of α1 affected the values of α2, whereas in the second example the effect was not clear.
- The GA played a major role in accelerating the search for approximate solutions close to the exact solution by finding the best values of α1 and α2, which saved effort and time and increased the efficiency of the method.

Finally, the research achieved its goal: solving a system of fractional equations by combining fractional integration and its properties with the Picard method, and improving the results with intelligent optimization techniques.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This research was funded by the authors themselves; they received no financial support from any other party.

Open Research

Data Availability Statement

The data supporting the findings of this study are available from the authors upon request.