FLDATN: Black-Box Attack for Face Liveness Detection Based on Adversarial Transformation Network

Abstract

To address two shortcomings of current attack methods on face liveness detection, namely the slow generation of adversarial examples and the reliance on white-box access, a novel black-box attack method named FLDATN is proposed based on the adversarial transformation network (ATN). In FLDATN, a convolutional block attention module (CBAM) is used to improve the generalization ability of adversarial examples, and a misclassification loss function based on feature similarity is defined. Experiments and analysis on the Oulu-NPU dataset show that the adversarial examples generated by FLDATN achieve a good black-box attack effect on the face liveness detection task and generalize better than traditional methods. In addition, since FLDATN does not need to perform multiple gradient calculations for each image, it significantly improves the generation speed of adversarial examples.

1. Introduction

Face recognition technology has been widely used in mobile payment, access control, and smartphone unlocking. However, with the development of face recognition technology, spoofing attacks against face recognition systems are also increasing. Common face spoofing attacks include photo presentation attacks, video replay attacks, and 3D mask attacks. To ensure the security of the face recognition system, face liveness detection (face antispoofing) has received extensive attention from academia and industry, and it has been developed rapidly in recent years [1, 2].

Recently, adversarial attacks in the physical domain have posed serious challenges to existing face liveness detection models [3–5]. Since the input image of such an attack is mostly a living face, with only a small area decorated with adversarial accessories such as earrings and glasses, it can not only bypass the face liveness detection model but also directly launch an adversarial attack on the face recognition system. Unlike adversarial attacks that must remain imperceptible to the human eye and therefore demand high image quality, such an attack only needs its adversarial examples to pass face detection, face liveness detection, and face recognition. However, since adversarial examples for face liveness detection generated in the digital domain may pick up discriminative traces again during physical-domain acquisition, a successful attack is hard to achieve. Zhang, Tondi, and Barni [6] proposed an adversarial attack method for face liveness detection. It first generates adversarial examples from face images in replayed videos. The adversarial examples are then reshot and input into the liveness detection model to deceive it. However, it requires averaging gradients over 2000 augmented images, which results in slow generation. Furthermore, it is a white-box attack, and the generalization of its adversarial examples needs further exploration.

The main contributions of this paper are summarized as follows:

- An ATN for black-box attacks on face liveness detection is designed. After training, the designed ATN does not need to perform multiple gradient calculations for each image, which effectively improves the generation speed of adversarial examples.
- Feature-level adversarial loss functions based on cosine similarity (CS) and mean square error (MSE) are proposed. With these loss functions, the face liveness detection model can be successfully attacked without knowing the optimizer and loss function used to train the attacked model.
- The experimental results and analysis show that the adversarial examples generated by FLDATN achieve a good black-box attack effect on the binary classification task of face liveness detection and generalize better than existing attack methods.

The remainder of this paper is organized as follows. Related work is summarized in Section 2. Some preliminaries are presented in Section 3. The proposed FLDATN is detailed in Section 4. Experiments and analysis are presented in Section 5. Some discussion is given in Section 6. Finally, conclusions are drawn in Section 7.

2. Related Work

Since the main goal of this paper is to generate adversarial examples that attack the face liveness detection module, face liveness detection and adversarial attacks are reviewed in turn.

2.1. Face Liveness Detection

In the early stage, handcrafted descriptors were designed for face liveness detection. Typical descriptors include the local binary pattern (LBP) [7, 8], the histogram of oriented gradients (HOG) [9], and the scale-invariant feature transform (SIFT) [10]. In addition, some early face liveness detection methods relied on auxiliary information and additional hardware. Typical examples include human heart rate [11], rPPG [12], 3D scanners [13], and light field cameras [14]. These further improve detection performance but increase the cost.

In practice, handcrafted features cannot distinguish the living face from the prosthetic face well, while the features extracted by a convolutional neural network (CNN) are more discriminative. Thus, CNN features are gradually replacing handcrafted ones. Xu, Li, and Deng [15] developed an end-to-end network for face liveness detection. It fuses a CNN and long short-term memory (LSTM) into a CNN-LSTM architecture, and the experiments demonstrate the effectiveness of image background information. Atoum et al. [16] used the face depth map as the feature that differentiates the living face from the prosthetic face. The underlying reason is that printed photos and screen replays are 2D images that lack depth information, while the living face is 3D and has rich depth information. Liu, Jourabloo, and Liu [17] pointed out that many previous approaches treat face liveness detection as a simple binary classification problem. They introduced auxiliary supervision, using the depth map as spatial supervision and the rPPG signal as temporal supervision, and combined both to distinguish live faces from prosthetic ones. The above methods use various kinds of auxiliary information to improve the performance of face liveness detection, but the accuracy is still limited by the imperfect CNN backbone. Yu et al. [1] used central difference convolution to build central difference convolutional networks (CDCNs). The network outputs a depth map, and the difference between the output depth map and the depth map in the dataset serves as the evaluation metric. The central difference convolution operation greatly improves detection accuracy, and CDCN is one of the best available face liveness detection models. Wang et al. designed PatchNet to mine local information and proposed a classification loss and a self-supervised similarity loss based on asymmetric margins to regularize the embedding space [18]. Deep learning networks can extract more discriminative features and exhibit excellent performance in various visual tasks, which significantly improves the accuracy of face liveness detection models. In [19], universal liveness features were extracted from data of different modalities, and a flexible modal transformer network was constructed based on the transformer structure. After training on multimodal data, the model can perform liveness detection with only one modality at test time. However, it requires additional multispectral imaging equipment and larger-scale deep learning models. Recently, Liu et al. proposed the Class Free Prompt Learning paradigm for domain-generalization-based face antispoofing [20]. Two lightweight transformers are used to learn different semantic prompts conditioned on content and style features, respectively, through a set of learnable query vectors. It achieves good performance on several cross-domain datasets.

2.2. Adversarial Attacks

2.2.1. Gradient-Based Adversarial Attack Methods

The most common adversarial attacks are gradient-based. The gradient of the loss function is back-propagated to the input layer, where an adversarial perturbation is added according to the input gradient, and the magnitude of this perturbation is bounded. Goodfellow, Shlens, and Szegedy [21] proposed FGSM and found that the linear nature of neural networks in high-dimensional spaces makes them vulnerable to adversarial attacks. FGSM performs only one update along the sign of the gradient for each pixel of the image. It is fast, but its white-box attack success rate is low. Subsequently, based on FGSM, BIM was proposed by Kurakin et al. [22]. It performs multiple gradient back-propagations while limiting the change of each pixel. Compared with FGSM, it achieves smaller distortion and a higher attack success rate at the cost of lower speed. After that, PGD was put forward by Madry et al. [23]. It adds random noise before performing multiple gradient back-propagations, and the generated adversarial examples transfer well. The C&W attack was designed against the defensive distillation defense [24]. It uses the L0, L2, and L∞ distance metrics to constrain the perturbation, so the generated adversarial examples can attack successfully with minimal image perturbation. However, due to its large number of iterations, it is slower than the methods in [21–23]. Recently, Wan, Huang, and Zhao designed an average gradient-based adversarial attack [25]. It optimizes the added perturbations through a dynamic set of adversarial examples, and the size of the dynamic set increases with the number of iterations. It is highly extensible and can be integrated into most existing gradient-based attacks.
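To make the difference between a single-step and an iterative gradient attack concrete, the following minimal sketch applies FGSM-style and BIM-style updates to a toy logistic classifier whose input gradient has a closed form. The model, its weights, and the step sizes are hypothetical and chosen only for illustration; they are unrelated to the face liveness detectors studied in this paper.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a toy logistic model (hypothetical stand-in)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def input_gradient(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input x."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def fgsm(w, b, x, y, eps):
    """One step of size eps along the sign of the input gradient."""
    g = input_gradient(w, b, x, y)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]

def bim(w, b, x, y, eps, alpha, steps):
    """Several steps of size alpha, each clipped back into the eps-ball around x."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_gradient(w, b, x_adv, y)
        x_adv = [
            clip(clip(xa + alpha * sign(gi), xi - eps, xi + eps), 0.0, 1.0)
            for xa, xi, gi in zip(x_adv, x, g)
        ]
    return x_adv

# Hypothetical weights and input with values in [0, 1]; true label y = 1.
w, b = [2.0, -3.0, 1.5], 0.1
x, y = [0.8, 0.2, 0.6], 1
x_fgsm = fgsm(w, b, x, y, eps=0.1)
x_bim = bim(w, b, x, y, eps=0.1, alpha=0.02, steps=10)
print(predict(w, b, x), predict(w, b, x_fgsm), predict(w, b, x_bim))
```

For this linear toy model both attacks end at the same corner of the perturbation ball, so the example only shows the mechanics; the cost difference comes from BIM needing one gradient evaluation per step.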

2.2.2. General Adversarial Attacks

Gradient-based adversarial attacks require back-propagating gradients for each image, so they are slow when a large number of adversarial examples need to be generated. Moosavi-Dezfooli et al. proposed an adversarial attack whose generated perturbations can be added to many different kinds of images [26]. Adversarial examples carrying the same perturbation can mislead the classifier, and they transfer well to other neural networks with similar structures. The ATN was first proposed by Baluja and Fischer [27]. A trained ATN can quickly generate adversarial examples from input images. However, the generated adversarial examples generalize poorly. After that, several GAN-based methods emerged to generate adversarial examples. AdvGAN [28] is a typical one. It consists of a generator, a discriminator, and a classifier. Different from ATN, AdvGAN adds a discriminator module to determine whether the input data are generated by the generator. Based on [28], Jandial et al. improved the model's input layer [29]. Their method first extracts features from the input image and then adds random noise to the extracted features to form the input of the generator. The improved generator can generate more diverse adversarial examples. Liu and Hsieh proposed using PGD-generated adversarial examples for adversarial training to obtain better generators [30]. In addition, the performance of the discriminator is also improved. In summary, generator-based adversarial attack methods can achieve relatively good generalization on some specific tasks. Yang et al. proposed an adversarial example generation method with the AdaBelief optimizer and crop invariance [31]. By introducing an adaptive learning rate into the iterative attack, it improves the convergence process and yields more transferable adversarial examples.

2.2.3. Adversarial Attacks against Facial Recognition Systems

The initial attacks on face recognition systems were attacks in the digital domain. Bose and Aarabi suggested adding tiny perturbations to the input image through a generator [32], which leads the Faster R-CNN [33] to a wrong judgment. AdvFaces [34] inherits the framework of AdvGAN. In this network, the identity information of the face is used to train the generator to produce a mask of the adversarial perturbation, which is added to the source image to obtain the adversarial example. However, since it is difficult for ordinary people to directly access the inside of a face recognition system in reality, attacks in the digital domain have less impact. In contrast, attacks in the physical domain cause more serious consequences. Sharif et al. [3] attached the generated adversarial examples to glasses to launch an attack. In this way, the person wearing the glasses is identified as another person. The method reduces the geometric distortion of adversarial examples in the physical domain and improves their generalization, and it was the first to validate the effectiveness of adversarial examples in the real world. Wenger et al. built a poisoning attack for the physical domain [4]. It first puts a small number of poisoned samples into the training data and then adds triggers such as tattoos, handkerchiefs, and glasses during testing, which makes the face identity discriminator misjudge the target's identity. Yin et al. simulated adversarial examples in the digital domain by applying makeup to human faces [5]. To obtain a more natural generated image, a mixed-policy makeup is used to ensure style consistency between the generated image and the original image. In addition, fine-grained meta-learning is exploited to improve the transferability of adversarial examples to black-box models. These physical domain adversarial examples can bypass the face liveness detection module and directly attack the face authentication module. Zhang, Tondi, and Barni proposed an adversarial attack on face liveness detection [6]. It uses expectation over transformation (EOT) to generate adversarial examples that remain robust under various transformations. Thus, the generated adversarial examples are not easily affected by reshooting. However, the generation of adversarial examples is slow, and the method lacks black-box attack capability. Recently, Patnaik et al. proposed an automated generative adversarial network that simulates print and replay attacks and generates adversarial images that can fool face presentation attack detection in the physical domain [35]. Nevertheless, its robustness is still limited.

Gradient-based attack methods are fast when generating a small number of adversarial examples, but they are relatively slow when generating a large number of them. Generator-based methods often require a special generator to be designed for each specific task. At present, most physical domain attacks on face recognition systems try to bypass the face liveness detection submodule and then directly attack the face recognition submodule. Adversarial attacks against the face liveness detection submodule itself are far from mature. Therefore, to quickly generate a large number of adversarial examples with good generalization, a network model named FLDATN is proposed in this paper.

3. Preliminaries

3.1. ATN Network Definition

3.1.1. Training

3.1.2. Loss Function
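For orientation, the ATN formulation introduced in [27] can be sketched as follows. The notation is taken from that formulation and is an assumption about the exact symbols used in this paper's equations: $g_{f,\theta}$ denotes the generator with parameters $\theta$ trained against a classifier $f$, $L_{\chi}$ is a loss in input space that penalizes visible distortion, $L_{y}$ is a loss in output space that drives misclassification, and $\beta$ balances the two terms.

$$
g_{f,\theta}(x) : x \in \mathcal{X} \rightarrow x'
$$

$$
\arg\min_{\theta} \sum_{x_i \in \mathcal{X}} \beta \, L_{\chi}\big(g_{f,\theta}(x_i),\, x_i\big) + L_{y}\big(f(g_{f,\theta}(x_i)),\, f(x_i)\big)
$$

Once $\theta$ has been trained, generating an adversarial example requires only one forward pass through $g_{f,\theta}$, which is why no per-image gradient computation is needed at attack time.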

3.2. Threat Model

This paper aims to attack an end-to-end face recognition system that contains three submodules. The first is the face detection module, which marks the position of the face in the image. The second is the face liveness detection module, which determines whether the input face image is a living face. The last is the face identity authentication module, which checks whether the face identity matches an identity in the database.

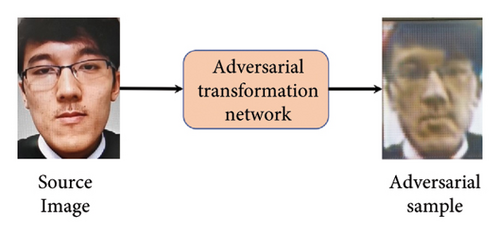

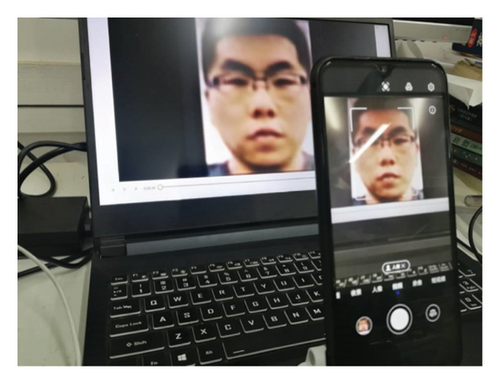

Here, it is assumed that the attacker understands the three submodules of the face recognition system well but has no access to the inside of the system. That is, the attacker has the same permissions as an ordinary user, and the only accessible system interface is the camera. Therefore, the adversarial examples generated in this paper need to meet the following requirements: (1) the adversarial examples on the screen must be captured by the face detection module, (2) the captured face images must be misjudged as living faces by the face liveness detection module, and (3) during face identity authentication, the identity of the adversarial example must be consistent with that of the source image. A successful attack process is shown in Figure 1. Step (a) generates adversarial examples, and step (b) passes them through each module of the face recognition system one by one. The most critical step is to deceive the face liveness detection module. For the face detection module and the face identity authentication module, it is only required that the distortion of the adversarial example does not affect the normal judgment of the module. Therefore, the pass rates of these two modules can also be used to judge the image quality of the adversarial example.

4. The Proposed FLDATN

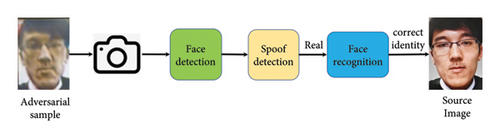

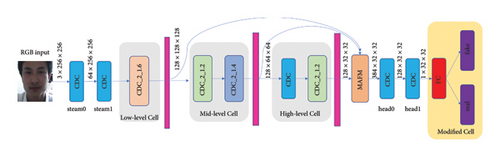

Based on the architecture in [27], an ATN is designed for face liveness detection in this paper, and the training architecture for generating adversarial examples is shown in Figure 2. Lχ and Ly correspond to the terms of equation (2). The network consists of a generator and a classifier, where the generator is FLDATN and the classifier is CDCN++∗; the two CDCN++∗ instances have exactly the same parameter settings. After the image x is input into the FLDATN network, it is converted into an adversarial example x′. The Lχ loss function then measures the distortion between x and x′, and both x and x′ are input into CDCN++∗ to obtain their feature vectors. The Ly loss function measures the similarity between the two feature vectors. When the input image x is a living face, the feature vectors of x and x′ should be as close as possible. When the input image x is a prosthetic face, the feature vectors of x and x′ should be as far apart as possible.

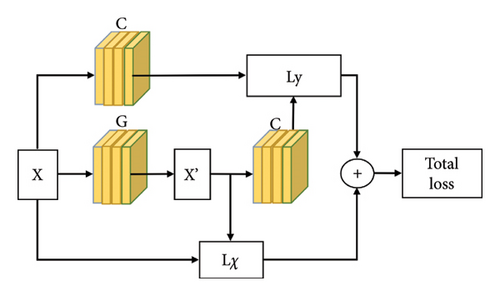

4.1. The Network Structure of FLDATN

The structure of the proposed FLDATN, a U-shaped network, is shown in Figure 3. The first half of FLDATN is an encoder that downsamples the image, and each downsampling reduces the image area to one quarter. The second half of FLDATN is a decoder that upsamples the image. The encoder consists of convolutional layers and max-pooling layers, and the decoder consists of a combination of convolutional layers, deconvolutional layers, and nearest neighbor upsampling. In addition, the CBAM module [37] is used to improve generalization, and it is applied in both downsampling stages. Of the two upsampling stages, the first uses a deconvolution layer and the second uses nearest neighbor upsampling. After the two upsampling stages, the adversarial example has the same size as the original image. Because inference is much faster than training, adversarial examples are generated only after the FLDATN network has been trained.
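The resampling path described above can be traced with a short sketch. It assumes that each max-pooling halves the side length (quartering the area) and that each upsampling doubles it, and it uses the 224 × 224 input size from the experimental setting; kernel sizes, channel widths, and CBAM are not modeled, and the function names are hypothetical.

```python
def trace_spatial_sizes(size=224, num_down=2, num_up=2):
    """Return the feature-map side length after each resampling stage."""
    sizes = [size]
    for _ in range(num_down):
        size //= 2          # max-pooling: half the side, one quarter of the area
        sizes.append(size)
    for _ in range(num_up):
        size *= 2           # deconvolution or nearest neighbor upsampling
        sizes.append(size)
    return sizes

def nearest_neighbor_upsample(grid, factor=2):
    """Upsample a 2D list by repeating each value `factor` times along both axes."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out

print(trace_spatial_sizes())
# [224, 112, 56, 112, 224]
print(nearest_neighbor_upsample([[1, 2], [3, 4]]))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

The trace confirms that two downsampling stages followed by two upsampling stages return an output of the same size as the input.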

4.2. Loss Function

The total loss function to be optimized is defined in equation (2), where the Lχ loss function uses SSIM or MSE, and the Ly loss function uses CS or MSE, adjusted to suit the training of the FLDATN network.

4.2.1. MSE (L2 Norm)

4.2.2. CS
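The label-dependent behaviour of the feature-level loss (pull the features of x and x′ together for a living face, push them apart for a prosthetic face) can be illustrated with the sketch below. This is an assumed form written for illustration, not the exact adjusted MSE and CS losses of this paper; the sign convention, the use of cosine distance, and the weight `beta` are all hypothetical choices.

```python
import math

def mse(u, v):
    """Mean squared error between two equally long feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)

def cosine_similarity(u, v):
    """Cosine of the angle between u and v (0.0 if either vector is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def misclassification_loss(feat_x, feat_adv, is_live, kind="mse"):
    """Assumed label-dependent feature loss.

    Living input: minimizing the loss pulls the two feature vectors together.
    Prosthetic input: minimizing the loss pushes them apart.
    """
    if kind == "mse":
        distance = mse(feat_x, feat_adv)
    elif kind == "cs":
        distance = 1.0 - cosine_similarity(feat_x, feat_adv)
    else:
        raise ValueError(f"unknown loss kind: {kind}")
    return distance if is_live else -distance

def total_loss(visual_loss, feature_loss, beta=1.0):
    """Weighted sum of the visual term and the misclassification term."""
    return beta * visual_loss + feature_loss

# Hypothetical 2D feature vectors.
print(misclassification_loss([1.0, 0.0], [0.0, 1.0], is_live=False, kind="cs"))
print(misclassification_loss([1.0, 2.0], [1.0, 4.0], is_live=True, kind="mse"))
```

Because both variants depend only on feature vectors, neither needs the optimizer or the training loss of the attacked model.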

5. Experimental Results and Analysis

5.1. Experimental Setting

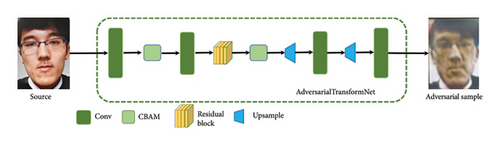

In the experiment, known methods are used for face detection and face identity authentication. Considering efficiency, robustness, accuracy, and ease of deployment, MTCNN [38] is used for face detection and FaceNet [39] is chosen for face authentication. The attacked face liveness detection model is CDCN++ [1]. It should be noted that this paper does not use the original CDCN++ model during training: because the original CDCN++ outputs a depth map, the final output layer is changed to a Softmax function for the classification task. The modified CDCN++ model is shown in Figure 4, and it acts as the white-box model for generating adversarial examples. The camera used is that of an Honor Play4T Pro (48 megapixels). The display is 15.6 inches with a resolution of 1920 × 1080 and a refresh rate of 144 Hz. The attacked model is shown in Figure 2. This model was chosen because it won the multimodal track and was the runner-up in the single-modal track of the 2020 ChaLearn Face Anti-spoofing Attack Detection Challenge. The dataset used in the experiment is the Oulu-NPU dataset [40], which consists of 4950 living and prosthetic face videos recorded with the cameras of six different mobile devices (Samsung Galaxy S6 edge, HTC Desire EYE, MEIZU X5, ASUS Zenfone Selfie, Sony XPERIA C5 Ultra Dual, and OPPO N3). The attack types in the Oulu-NPU dataset are print and video replay, and the original presentation attack videos of Oulu-NPU are not used in this experiment. Because different shooting equipment would affect the experiment, our own equipment is used to reshoot the faces of the Oulu-NPU dataset. To keep the prosthetic faces in the training dataset consistent with the way the adversarial examples are collected, the shooting equipment and the experimental environment are kept the same. The process of shooting adversarial examples with a mobile phone is shown in Figure 5. It can be seen that the face detection of the mobile phone successfully detects the adversarial examples.

The input and output images of the FLDATN network are both 224 × 224, the batch size is set to 16, the number of epochs is set to 10, and the learning rate is set to 0.0002. For the yi label in equations (9) and (13), the value predicted by the face liveness detection model is used instead of the label in the original dataset. The training input of the face liveness detection module is 224 × 224, the batch size is set to 32, the number of epochs is set to 10, and the learning rate is set to 0.0002. To test the attack success rate, 400 adversarial examples of 20 different faces are generated. To build the dataset, face images are extracted every 5 frames from the videos in Oulu-NPU and from our own reshot videos. The training dataset contains 8000 prosthetic face images and 7974 living face images, and the test dataset contains 1965 prosthetic face images and 1862 living face images, where the faces in the test dataset and those in the training dataset belong to different identities. In addition, since the width-to-height ratio of the faces in the Oulu-NPU dataset is not consistent, each image is scaled according to its original ratio when it is reduced to 224 × 224. The image transformations in [6] are used for image augmentation in the experiments.
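The two preprocessing steps above, sampling every fifth frame and shrinking a face crop to 224 × 224 without changing its aspect ratio, can be sketched as follows. Starting the sampling at frame 0 and centering the scaled image with padding are assumptions made for illustration; the text states only the sampling interval and that the original ratio is kept. The function names are hypothetical.

```python
def sample_frame_indices(num_frames, step=5):
    """Indices of the frames kept when one frame is taken every `step` frames."""
    return list(range(0, num_frames, step))

def fit_with_padding(width, height, target=224):
    """Scale (width, height) to fit inside target x target, keeping the ratio.

    Returns (new_width, new_height, pad_left, pad_top), where the padding
    centers the scaled image on the square canvas.
    """
    scale = target / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    pad_left = (target - new_w) // 2
    pad_top = (target - new_h) // 2
    return new_w, new_h, pad_left, pad_top

print(sample_frame_indices(12))
# [0, 5, 10]
print(fit_with_padding(300, 400))
# (168, 224, 28, 0)
```

A 300 × 400 crop is scaled by 0.56 so that its longer side becomes 224, and the 56 leftover columns are split evenly on the left and right.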

5.2. Evaluation Metrics

The attack methods used for comparison are FGSM [21], BIM [22], PGD [23], and C&W [24], and their parameter settings are listed in Table 1. Furthermore, several pretrained neural network models are chosen for black-box testing of liveness detection: ResNet [41], MobileNet [42], DenseNet [43], VGG [44], EfficientNet [45], and VisionTransformer [46]. These pretrained models are available from [47]. They are first pretrained on ImageNet and then trained on the training set of the Oulu-NPU dataset for the face liveness detection task.

5.3. Experiments and Performance Analysis

5.3.1. Black-Box Testing

Current digital domain adversarial attack methods already achieve a high attack success rate. Thus, they are not discussed here, and the focus is on attacks in the physical domain. Unlike in [6], the cropped single image does not need to be stitched back onto the original image during the attack; the adversarial example is shot directly. As seen from Figure 5, it can be recognized by face detection even if it is not stitched to the source image. In the following, each variant of the proposed attack is written as the visual loss function plus the misclassification loss function, for example, “SSIM + MSE,” where SSIM is the visual loss function and MSE is the misclassification loss function. The experimental results in the physical domain for adversarial examples generated on the CDCN++∗ model are listed in Table 2.

| Method | CDCN++∗ | DenseNet-161 | DenseNet-121 | MobileNetv3-large-100 | Vgg-19 | TF-EfficientNet-b0 | ResNet-152 | Vit-Base-ResNet-50 |
|---|---|---|---|---|---|---|---|---|
| FGSM [21] | 15.22 | 2.30 | 1.88 | 11.92 | 0.46 | 1.92 | 3.88 | 0.75 |
| BIM [22] | 78.71 | 0.67 | 2.16 | 5.80 | 0.00 | 0.04 | 2.84 | 1.00 |
| PGD [23] | 75.74 | 1.05 | 1.77 | 6.73 | 0.00 | 0.13 | 0.80 | 0.92 |
| C&W [24] | 70.08 | 0.00 | 0.00 | 0.00 | 0.00 | 0.04 | 1.29 | 0.25 |
| SSIM + MSE | 41.84 | 49.76 | 3.27 | 6.59 | 6.11 | 38.40 | 7.66 | 10.42 |
| SSIM + cosine | 43.44 | 27.27 | 0.21 | 2.53 | 0.51 | 31.10 | 0.97 | 4.05 |
| SSIM + CE | 25.78 | 54.83 | 0.67 | 6.44 | 1.96 | 31.42 | 11.98 | 4.55 |
| MSE + MSE | 53.30 | 72.93 | 0.62 | 1.36 | 3.60 | 50.97 | 3.78 | 8.63 |
| MSE + cosine | 48.06 | 61.30 | 0.58 | 0.54 | 2.24 | 42.20 | 0.49 | 1.79 |
| MSE + CE | 19.18 | 7.68 | 0.00 | 0.33 | 0.12 | 13.16 | 2.04 | 7.78 |

- Note: The bold values represent the best results.

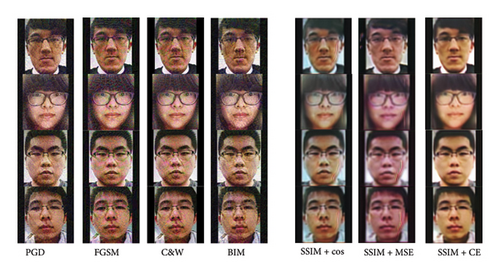

It can be found that FGSM achieves the best result on MobileNetv3-large-100 (11.92%) and BIM achieves the best result on CDCN++∗ (78.71%), while one of the loss combinations of the proposed scheme achieves the best result on each of the remaining models. On DenseNet-161, TF-EfficientNet-b0, and Vit-Base-ResNet-50, the attack success rates of the proposed scheme are clearly higher than those of the compared gradient-based methods. At the same time, it can be seen that using the CS of the feature vectors instead of their Euclidean distance as the classification loss function is equally effective. Comparing SSIM with MSE as the visual loss, the attack effect of the “SSIM + CE” combination is clearly better than that of the “MSE + CE” combination. The generated adversarial examples are shown in Figure 6. It can be found that the adversarial perturbation generated by the proposed attack is relatively concentrated. The adversarial perturbation generated with CS and MSE is located on the right cheek, while the adversarial perturbation of the gradient-based methods is scattered over the whole image. The results show that the effects of equations (9) and (13) are indeed similar.

The training results of each face liveness detection model are listed in Table 3. As Table 3 shows, the CDCN++∗ model performs worse than the other models once it is changed to a binary classification task. Therefore, DenseNet-161 is also used as the model on which adversarial examples are generated, and the attack success rates of these adversarial examples are listed in Table 4.

| Metric | CDCN++∗ | DenseNet-161 | DenseNet-121 | MobileNetv3-large-100 | Vgg-19 | TF-EfficientNet-b0 | ResNet-152 | Vit-Base-ResNet-50 |
|---|---|---|---|---|---|---|---|---|
| APCER | 6.02 | 0.16 | 0.16 | 0.11 | 0.16 | 0.00 | 0.16 | 0.16 |
| BPCER | 3.92 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.05 | 0.00 |
| ACER | 4.97 | 0.08 | 0.08 | 0.06 | 0.08 | 0.00 | 0.11 | 0.08 |
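The three rows of Table 3 are the usual presentation attack detection error rates: APCER is the share of attack presentations accepted as living faces, BPCER is the share of living faces rejected as attacks, and ACER is their mean. The sketch below is a simplified version that assumes a single pooled attack type and reports percentages; the function names are hypothetical.

```python
def apcer(attacks_accepted_as_live, total_attacks):
    """Attack presentation classification error rate, in percent."""
    return 100.0 * attacks_accepted_as_live / total_attacks

def bpcer(live_rejected_as_attack, total_live):
    """Bona fide presentation classification error rate, in percent."""
    return 100.0 * live_rejected_as_attack / total_live

def acer(apcer_value, bpcer_value):
    """Average classification error rate: the mean of APCER and BPCER."""
    return (apcer_value + bpcer_value) / 2.0

# CDCN++* column of Table 3: APCER 6.02 and BPCER 3.92.
print(round(acer(6.02, 3.92), 2))
# 4.97
```

The computed value matches the ACER reported for CDCN++∗ in Table 3.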

| Method | CDCN++∗ | DenseNet-161 | DenseNet-121 | MobileNetv3-large-100 | Vgg-19 | TF-EfficientNet-b0 | ResNet-152 | Vit-Base-ResNet-50 |
|---|---|---|---|---|---|---|---|---|
| FGSM [21] | 17.32 | 46.43 | 39.63 | 34.42 | 0.78 | 6.44 | 14.97 | 5.70 |
| BIM [22] | 16.54 | 98.02 | 84.01 | 26.79 | 0.04 | 16.51 | 18.27 | 6.32 |
| PGD [23] | 15.63 | 97.51 | 82.71 | 37.10 | 0.13 | 19.20 | 15.54 | 12.40 |
| C&W [24] | 18.65 | 76.37 | 17.35 | 15.71 | 0.13 | 4.93 | 5.97 | 2.25 |
| SSIM + MSE (high) | 22.00 | 100.0 | 0.17 | 4.21 | 11.61 | 22.68 | 22.26 | 26.88 |
| SSIM + MSE (low) | 21.75 | 76.18 | 0.04 | 4.43 | 0.53 | 15.03 | 11.99 | 7.18 |
| SSIM + CE | 26.89 | 93.54 | 0.67 | 1.15 | 3.21 | 10.46 | 11.30 | 7.21 |

- Note: The bold values represent the best results.

From the results, the attack success rate of all methods has improved. Across the different face liveness detection models, the proposed attack and the gradient-based methods each have their own advantages. It is worth noting that the training of the SSIM + MSE combination fluctuates considerably, and the results of the best case and the worst case are quite different. A possible reason is that the image augmentation adds randomness and stochastic gradient descent gets stuck in a local optimum. Together with the results in Table 2, the attack success rate on the CDCN++∗ model is lower than that on DenseNet-161, which indicates that CDCN++∗ is the more robust of the two.

5.3.2. Image Quality Analysis

The actual attack success rate also depends on the pass rates of the face detection module and the face authentication module. The final attack success rate of the adversarial examples generated on the CDCN++∗ model is listed in Table 5, and that of the adversarial examples generated on DenseNet-161 is listed in Table 6, where Face Det denotes the accuracy of the face detection module and Face Rec denotes the accuracy of the face recognition module. It can be seen from the tables that the success rate of face detection is consistently above 90%. However, on the face authentication module, the proposed method has a lower pass rate, especially when MSE is used as the image quality loss function, which results in greater image distortion. According to the experimental results, across different attack methods, a lower PSNR does not necessarily mean a lower pass rate for face detection and face identity authentication. Comparing Tables 5 and 6, it can be found that the adversarial examples generated on DenseNet-161 have significantly lower pass rates on Face Det and Face Rec even though the PSNR is not much different. This means that the image quality of the adversarial examples generated on DenseNet-161 is worse. The general trend is that the better the image quality is, the higher the total ASR is. Nevertheless, the generation of adversarial examples inevitably introduces perturbation. Thus, a balance must be struck between image quality and attack performance.

| Method | PSNR | Face Det | Spoof Det | Face Rec | Total ASR |
|---|---|---|---|---|---|
| FGSM [21] | 20.82 | 99.87 | 15.22 | 95.86 | 14.85 |
| BIM [22] | 20.55 | 99.46 | 78.71 | 94.24 | 73.77 |
| PGD [23] | 19.88 | 99.16 | 75.74 | 94.40 | 70.89 |
| C&W [24] | 26.75 | 99.95 | 70.08 | 96.56 | 67.63 |
| SSIM + MSE | 21.09 | 97.15 | 41.84 | 87.91 | 35.73 |
| SSIM + cosine | 20.72 | 98.54 | 43.44 | 90.29 | 38.64 |
| SSIM + CE | 20.72 | 99.66 | 25.78 | 89.19 | 22.91 |
| MSE + MSE | 19.45 | 94.24 | 53.30 | 79.52 | 39.94 |
| MSE + cosine | 18.98 | 93.32 | 48.06 | 77.12 | 34.58 |
| MSE + CE | 18.88 | 99.54 | 19.96 | 82.64 | 16.41 |

- Note: The bold values represent the best results.

| Method | PSNR | Face Det | Spoof Det | Face Rec | Total ASR |
|---|---|---|---|---|---|
| FGSM [21] | 20.84 | 95.55 | 46.43 | 88.32 | 39.18 |
| BIM [22] | 21.19 | 95.03 | 98.02 | 82.11 | 76.48 |
| PGD [23] | 20.70 | 97.51 | 95.49 | 85.81 | 79.89 |
| C&W [24] | 23.66 | 96.25 | 76.37 | 89.60 | 65.86 |
| SSIM + MSE (high) | 19.88 | 99.87 | 100.0 | 78.86 | 78.75 |
| SSIM + MSE (low) | 19.58 | 98.70 | 76.18 | 81.97 | 61.63 |
| SSIM + CE | 21.24 | 99.58 | 93.54 | 89.67 | 83.52 |

- Note: The bold values represent the best results.
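To make the relationship between the per-module columns and the Total ASR column concrete, the following is a minimal Python sketch. It assumes that an adversarial example counts as a successful attack only when it passes face detection, is judged live by the spoof detector, and passes face recognition. The `SampleResult` record and the `pass_rates` function are hypothetical names introduced for illustration, and the exact evaluation protocol behind Tables 5 and 6 may differ.

```python
from dataclasses import dataclass


@dataclass
class SampleResult:
    """Per-sample outcome of the three pipeline stages (hypothetical record)."""
    face_detected: bool     # passed the face detection module
    judged_live: bool       # spoof detector classified the sample as live
    identity_matched: bool  # passed the face recognition module


def pass_rates(results):
    """Return per-stage pass rates and the total ASR, all in percent."""
    n = len(results)
    if n == 0:
        raise ValueError("results must not be empty")
    det = sum(r.face_detected for r in results)
    live = sum(r.judged_live for r in results)
    rec = sum(r.identity_matched for r in results)
    # Assumption: an attack succeeds only if every stage is passed.
    total = sum(
        r.face_detected and r.judged_live and r.identity_matched
        for r in results
    )
    return {
        "face_det": 100.0 * det / n,
        "spoof_det": 100.0 * live / n,
        "face_rec": 100.0 * rec / n,
        "total_asr": 100.0 * total / n,
    }
```

Under this assumption, the total ASR can never exceed the smallest of the three per-stage pass rates, which matches the pattern in the tables, where a method with a high Spoof Det rate can still lose total ASR through a lower Face Rec rate.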

5.3.3. Adversarial Sample Generation Speed

To explore the adversarial sample generation speed of the various methods, each attack generates 400 adversarial examples, and the average generation speed over these 400 examples is taken as the final result. The experimental results are listed in Table 7. From the results, the proposed adversarial attack has the fastest generation speed. Additionally, FGSM is faster than the other gradient-based methods because it performs only one gradient backpropagation. As the proposed attack does not need gradients and obtains adversarial examples directly through a forward pass of the FLDATN network, its generation speed is much faster than that of FGSM.
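The averaging procedure described above can be sketched as follows. This is a minimal illustration, not the measurement code used in the experiments: `mean_generation_time` is a hypothetical helper, and `generate` stands for any callable that maps one input image to one adversarial example (for instance, a forward pass of a trained generator or one run of a gradient-based attack).

```python
import time


def mean_generation_time(generate, inputs):
    """Average wall-clock seconds needed to generate one adversarial example.

    `generate` is any callable taking a single input; `inputs` is a sequence
    of inputs (400 images in the setting described in the text).
    """
    if len(inputs) == 0:
        raise ValueError("inputs must not be empty")
    start = time.perf_counter()
    for x in inputs:
        generate(x)
    elapsed = time.perf_counter() - start
    return elapsed / len(inputs)
```

When the attack runs on a GPU, kernel launches are typically asynchronous, so the device would need to be synchronized before each clock reading for the measured time to be meaningful.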

5.3.4. Ablation Experiment

To explore the effectiveness of the CBAM module in generating adversarial examples, ablation experiments are conducted, and the results are listed in Table 8. Even when the training parameters are unchanged, the distortion of the adversarial examples generated in the digital domain differs between training runs, and the attack success rate fluctuates slightly when the same adversarial sample is reshot. Both training with stochastic gradient descent and the reshooting of adversarial examples can therefore affect the quality of the adversarial examples. The results of two experiments without CBAM both show that the attack success rate against TF-EfficientNet-b0 and Vit-Base-ResNet-50 decreases, while the attack success rate against CDCN++∗ increases. This indicates that the CBAM module improves generalization but reduces the success rate of the white-box attack. In the two experiments without the CBAM module, the adversarial examples with poorer image quality achieve better generalization.

| CBAM | PSNR | Face Det | CDCN++∗ | DenseNet-161 | DenseNet-121 | MobileNetv3-large-100 | Vgg-19 | TF-EfficientNet-b0 | ResNet-152 | Vit-Base-ResNet-50 |
|---|---|---|---|---|---|---|---|---|---|---|
| × | 20.34 | 96.72 | 60.07 | 46.86 | 0.39 | 2.62 | 2.62 | 14.63 | 2.32 | 0.90 |
| √ | 22.10 | 99.70 | 43.19 | 48.65 | 0.67 | 3.96 | 3.96 | 35.90 | 9.53 | 7.83 |

- Note: The bold values represent the best results.

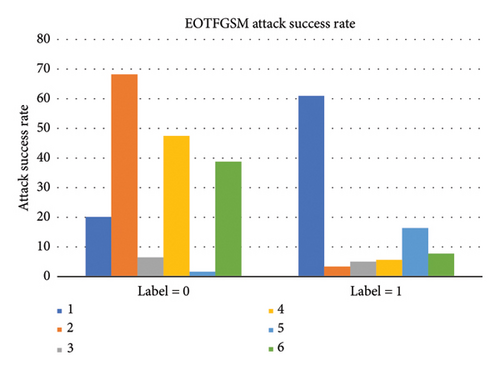

6. Discussion

FLDATN is suitable when a large amount of training data is available. However, when only a single image is available, FLDATN cannot be trained, and a gradient-based method is more efficient. To examine how the attack success rate of adversarial examples varies across faces with different identities while also taking the generation speed into account, a method combining EOT and FGSM is used to generate adversarial examples for a single face image. In the experiment, the image transformation distribution follows the settings in [6], and the number of transformed images is also 2000. Experiments are carried out with 12 different identities in the Oulu-NPU dataset (6 living faces and 6 prosthetic faces), and the attacked model is CDCN++∗. The results are shown in Figure 7. The attack difficulty differs across identities. Combined with Table 2, the results show that the extreme values of the FGSM attack success rate lie far from its average: the adversarial examples of some faces achieve a high attack success rate, while those of other faces achieve a very low one. In an actual attack, often only one face image is selected, so the attack success rate of the resulting adversarial examples may be either high or low.
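The combination of EOT (expectation over transformation) and FGSM used here can be illustrated with a small self-contained sketch. It is not the experimental code: the logistic "liveness" model with weights `w` and bias `b`, the brightness-gain and additive-noise transformations, and the function names are all assumptions made for illustration, whereas the experiment attacks CDCN++∗ with the transformation distribution of [6]. The sketch averages the loss gradient over randomly transformed copies of a single input and then takes one signed step toward the "live" class.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def live_probability(w, b, x):
    """Toy stand-in for a liveness detector: probability that x is live."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def eot_fgsm(x, w, b, eps=0.03, n_transforms=2000, seed=0):
    """One targeted FGSM step using a gradient averaged over transformations."""
    rng = random.Random(seed)
    grad = [0.0] * len(x)
    for _ in range(n_transforms):
        # Assumed transformation: random brightness gain plus Gaussian noise.
        gain = rng.uniform(0.8, 1.2)
        z = [gain * xi + rng.gauss(0.0, 0.02) for xi in x]
        p = live_probability(w, b, z)
        # Loss is -log(p). Its gradient w.r.t. z is -(1 - p) * w, and the
        # chain rule through z = gain * x + noise contributes the factor gain.
        for i, wi in enumerate(w):
            grad[i] += -(1.0 - p) * wi * gain / n_transforms
    # Step against the averaged gradient and clip to the valid pixel range.
    return [min(1.0, max(0.0, xi - eps * sign(g))) for xi, g in zip(x, grad)]
```

For example, with `w = [2.0, -1.0, 0.5, -3.0]`, `b = -1.0`, and `x = [0.2, 0.8, 0.5, 0.6]`, the returned example stays within `eps` of `x` in every coordinate while its live probability under the toy model increases. Because every single image requires its own pass over all 2000 transformed copies, this approach is much slower per example than a forward pass of a trained generator.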

7. Conclusion

A black-box attack for face liveness detection based on ATNs is proposed in this paper. FLDATN can quickly generate adversarial examples after training on a large amount of data, and the CBAM module effectively improves the generalization performance of the adversarial examples and better localizes the adversarial perturbations. Experiments show that a reasonable application of feature vector similarity can achieve a good attack success rate and good generalization in the physical domain without knowing the loss function and optimizer of the threat model. Compared with MSE, SSIM can better control the image distortion. Compared with other adversarial example generation methods, the proposed method achieves better generalization and a faster generation speed. However, the quality of the generated adversarial examples is still far from satisfactory, and the attack success rate still has room for improvement. Furthermore, many face liveness detection models use a depth map as the model output, which is not a simple binary classification task. Our future work will concentrate on attacking such models.

Conflicts of Interest

The authors declare no conflicts of interest.

Funding

This research was supported by the National Natural Science Foundation of China (10.13039/501100001809), Grant No. 62072055.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (Grant No. 62072055). The authors would like to thank the authors of the previous works for sharing their code.

Open Research

Data Availability Statement

The data that support the findings of this study are from the Oulu-NPU dataset, which is available from https://sites.google.com/site/oulunpudatabase.