HTTPSmell: A Deep Learning Approach on Malicious HTTP Traffic Detection via Data Augmentation and Label Refactoring

Abstract

Anomaly detection is essential to ensuring system security and reliability. Malicious traffic classification, one of the basic techniques in cyberattack defense, faces diverse challenges such as insufficient samples, poor denoising ability, and weak generalization of the classification model. In this paper, we propose a novel method for detecting malicious HTTP traffic based on a framework, HTTPSmell (the name refers to sniffing out network attacks launched by exploiting the HTTP protocol), that automatically trains a deep learning model with high generalization ability even from a small training dataset. With HTTPSmell, we achieve positive results from a semisupervised model that leverages unsupervised data augmentation (UDA) and a keywords library avoidance (KLA)-based data augmentation method, which together offer higher learning generalization, a higher scenario coverage rate, and better detection efficiency with fewer training samples. Finally, we demonstrate through comprehensive experiments in a realistic enterprise environment that HTTPSmell achieves an accuracy of 96.77% in identifying complex and advanced cyberattacks while maintaining a constant memory footprint of 60 MB and sustaining a throughput of up to 27 k/s.

1. Introduction

In modern cyberattacks, web services are the primary target of data theft and vulnerability exploitation. Analyzing web traffic and detecting malicious HTTP traffic in time through anomaly detection methods therefore greatly enhances network security [1]. However, as one of the most numerous network services in cyberspace, web services suffer from various forms of security threats, and many traditional malicious traffic detection methods have become ineffective because of emerging vulnerabilities and the ingenious exploits of attackers.

Conventional anomaly detection strategies define rules based on the traffic of normal behaviors, and any traffic that breaks the rules is identified as an attack. However, this sort of detection strategy resembles a whitelist mechanism in that all rules are set within a specific range in advance [2]. Once a user produces unexpected but still normal operational traffic, the detection strategy misjudges it as malicious. The research in [3] focused on statistical-based and behavior-based classification, most of which is carried out with machine learning. Specifically, the required traffic features are first extracted. Then, the dataset is fed into a machine learning model for training. Finally, the resulting model is used for traffic detection. From the work of other researchers [4–10], we found that the generalization ability of malicious HTTP traffic detection is normally weak in real-world scenarios and that obfuscated malicious web traffic can often evade the detection model. Although the accuracy of some malicious network traffic detection methods is very high on certain datasets, it can drop significantly on other datasets [7]. Web attackers use various attack methods, which diversifies traffic behavior, so most current anomaly detection methods require a large number of traffic samples for training. However, it is challenging to collect malicious traffic samples, especially malicious traffic payloads. In addition, traffic data collected in real-world scenarios may lack trusted labels. Label errors then become attached to the extracted features, resulting in overfitting and weak generalization ability. To solve this problem, data augmentation is introduced as a promising and effective method that generates more training samples from a small training dataset and supports better feature extraction.

More precisely, data augmentation allows neural networks to extract text features such as keywords, word order, and semantic associations from less data. Although this technology helps a machine learning model extract semantic associations from text data, HTTP traffic data resemble text but contain keywords (e.g., “script,” “select,” and “for”) that cannot be replaced with synonyms, so synonym substitution is unsuitable for augmenting them. After the training data are expanded through data augmentation, they are sent to the server for label refactoring using a cooperative semisupervised algorithm. In this way, the influence of mislabeled data on the model can be eliminated and the final classification accuracy improved.

Finally, one of the main challenges we considered is the trade-off among detection accuracy, efficiency, and overhead. Most existing detection models are heavy and resource-intensive and have low detection efficiency, which hinders model iteration and maintenance. We implemented our design as HTTPSmell, a lightweight and efficient detection model that sacrifices only a small amount of precision. The memory usage of HTTPSmell remains constant (60 MB) over time, while the model achieves an accuracy of 96.77% and sustains a throughput of 27 k/s. This frees up valuable computing resources and significantly reduces inference time, enabling the future integration of more tasks.

The main contributions of this paper are summarized as follows:

- (i) A novel framework is proposed to systematically generate a more extensive training dataset. First, the labeled HTTP traffic is automatically segmented according to special characters, and the keywords are extracted to establish the keywords library. Then, based on the novel keywords library avoidance (KLA) method, the nonkeywords of the original training samples are randomly replaced. Finally, the augmented samples are combined with the original training data to generate a larger training dataset. The optimal parameters of data augmentation are obtained through the experimental evaluations in Section 3.

- (ii) An improved semisupervised training method is proposed to deal effectively with poor model performance in complex real-world scenarios. The training dataset statements are first transformed into vector statements by Word2vec. Then, label refactoring is carried out using a cooperative semisupervised algorithm. Finally, a model with strong generalization ability is obtained.

- (iii) The proposed methods for model optimization are evaluated individually through a range of experiments. We separately analyze how different data noise ratios in KLA-based data augmentation and different data preprocessing methods influence detection performance, and we demonstrate that our proposed data augmentation method and preprocessing approaches enhance the robustness and generalization ability of the detection model. Finally, performance overhead experiments that compare the detection efficiency and performance overhead of our model with those of WebSmell [11] show that HTTPSmell is lightweight, has practical application value, and achieves higher detection efficiency.

The remainder of this paper is organized as follows. Section 2 introduces our proposed HTTPSmell framework. Section 3 shows the performance evaluation results from various experimental analyses. Section 4 describes the related works on malicious HTTP traffic detection and data augmentation for machine learning. Section 5 gives a further discussion of the results of our case studies. Finally, Section 6 draws final conclusions.

2. Methodology

This section introduces the overall architecture of HTTPSmell, our proposed malicious HTTP traffic detection model, which uses deep learning with data augmentation and label refactoring. We then discuss its two main parts in detail: the data augmentation method and the classification models.

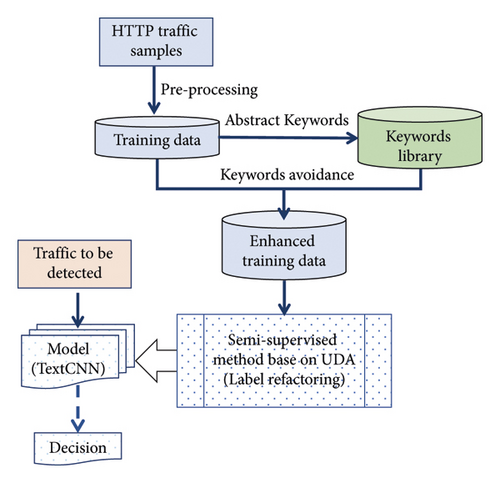

2.1. HTTPSmell Framework

- (i) The training data are obtained by mixing the request data of normal traffic and malicious traffic. All the data in the training set are segmented based on special symbols and strings in the keyword library, which consists of high-frequency words extracted from the malicious dataset.

- (ii) Based on the keywords library avoidance (KLA) strategy, the training samples after data enhancement processing are merged into the training data to get the final augmented training set. Word2vec is used to convert all the traffic data in the training dataset into vector statements.

- (iii) An augmented training dataset will be sent to the server for label refactoring using the semisupervised algorithm based on UDA (unsupervised data augmentation) [12].

- (iv) The vector statements are inputted into our learning model based on TextCNN to obtain the detection model.

When using HTTPSmell to identify HTTP traffic, the input needs to be preprocessed as text, split into word lists by our proposed segmentation method, and converted into vector statements using Word2vec. Then, the UDA-based semisupervised training method is used for label refactoring. Finally, the detection task is performed by utilizing the deep learning model.

2.2. Data Augmentation

Poor data augmentation algorithms for unlabeled samples weaken the performance of UDA models. When the diversity and semantic information of the samples are not preserved after data enhancement, the model becomes prone to overfitting, especially a deep learning model with many parameters and strong learning ability. Traditional data augmentation algorithms such as back translation, RandAugment, and TF-IDF word replacement used in [12] destroy the semantics of traffic samples, because replacing keywords weakens the characteristics of malicious traffic until it resembles benign traffic. By contrast, applying an appropriate noise-adding method to training samples enhances their diversity and helps machine learning models counteract the impact of noise, thus lowering the risk of overfitting.

Therefore, this paper adopts the idea of feature reservation. On the one hand, the critical feature text is retained. On the other hand, the nonkey feature text is randomly replaced to achieve data augmentation while preserving the critical information of web traffic.

2.2.1. Traffic Parsing and Keyword Extraction

This paper divides malicious HTTP traffic into three categories: SQL injection, cross-site scripting (XSS), and directory traversal. SQL injection inserts SQL fragments into the query string of a request for a domain or page to trick the server into executing malicious SQL commands. XSS embeds a malicious script into a page. When normal users visit the page, the embedded script is executed, which achieves the purpose of attacking those users. Directory traversal allows attackers to access restricted directories and execute commands outside the root directory of the web server. Since the attack payload of most web attacks resides in the request path or form data of HTTP traffic, these fields first need to be parsed to form samples. To parse the data in the samples correctly, the following preprocessing steps are carried out.

(1) Data Extraction. Extract the request path from GET requests and the form data from POST requests. This paper mainly considers POST and GET because they are the most common request methods.

(2) Data Cleaning. URL-decode the extracted data, convert it to lower case, and remove spaces and other invalid characters to obtain the final samples.
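The two preprocessing steps can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names `extract_payload` and `clean` are hypothetical, repeated decoding (`max_rounds`) is an added assumption for multiply encoded payloads, and "removing spaces" is interpreted here as collapsing and trimming whitespace, since deleting every space would merge adjacent words before segmentation.

```python
import re
from urllib.parse import unquote, urlsplit


def extract_payload(method: str, url: str, body: str = "") -> str:
    """Step (1): return the part of a request that may carry a payload."""
    if method.upper() == "GET":
        parts = urlsplit(url)
        # Keep the request path and query string; drop scheme and host.
        return parts.path + ("?" + parts.query if parts.query else "")
    if method.upper() == "POST":
        return body  # form data
    return ""


def clean(raw: str, max_rounds: int = 3) -> str:
    """Step (2): URL-decode, lower-case, and strip invalid characters."""
    # Assumption: decode a few times, since payloads may be encoded twice.
    for _ in range(max_rounds):
        decoded = unquote(raw)
        if decoded == raw:
            break
        raw = decoded
    raw = raw.lower()
    # Assumption: whitespace runs (tabs, newlines) become a single space.
    raw = re.sub(r"\s+", " ", raw)
    # Drop remaining non-printable characters.
    raw = "".join(ch for ch in raw if ch.isprintable())
    return raw.strip()
```

For example, `clean("/Index.php?id=1%27%09OR%091=1")` decodes `%27` and `%09`, lower-cases the string, and turns the decoded tab characters into single spaces.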

2.2.2. Keyword Library Generation

In order to process the text data effectively, we first divide the text into a large number of independent units. Text data are commonly divided into single characters. However, because complex requests contain a lot of data, single characters ultimately reflect only the distribution and frequency of individual characters, which leads to overfitting in the final model prediction. Therefore, it is essential to segment the samples at the word level to establish the semantic relationships between words.

For example, SQL injection attack payload exists in Data 1 of Table 1.

| Data | Data content | Type |
|---|---|---|
| Data 1 | https://www.httpsmell.com?id=1′ or 1 = 1 union select 1, group_concat (user, password) from user# | SQL injection |
| Data 2 | https://www.httpsmell.com?id=1’%09or%091=1%09/∗!%55NiOn∗/%09/∗!%53eLEct∗/%091, group_concat (user, password) %09from%09users# | SQL injection |
| Data 3 | /httpsmell.cfm?<script>xss_javascript.js</script> | XSS |
| Data 4 | httpsmell cfm script xss javascript js script | XSS |
| Data 5 | 1’%09or%091 = 1%09/∗!%55NiOn∗/%09/∗!%53eLEct∗/%091, group_concat (user, password) %09from%09users# | SQL injection |
| Data 6 | 8’%09or%091 = 1%09/∗!%55NiOn∗/%09/∗!%53eLEct∗/%097, group_concat (pig,dog) %09from%09tigers# | SQL injection |
| Data 7 | 8’%09or%095 = 3%09/∗!%55NiOn∗/%09/∗!%53eLEct∗/%097, happy_test (pig,dog) %09car%09tigers# | SQL injection |
| Data 8 | /httpsmell.cfm?<script>xss_javascript.js</script> | XSS |
| Data 9 | /3a02t8er.Qt?<script>er3_bsttesmrel.ca</script> | XSS |

In order to recognize SQL injection correctly, we should focus on its characteristics. For example, when a SQL query appears in the requested data, the request is most likely malicious. Accordingly, common SQL query statements can be recorded as keywords (similarly, common statements in XSS and directory traversal attacks can also be recorded as keywords). However, recording web traffic keywords manually has the following problems. First, the number of web traffic keywords is huge, so the cost of manual recording is high and the recall rate is low. Second, as attack techniques and attack payloads constantly evolve, some keywords are obfuscated and escape manual recording. For instance, a space is replaced with “%09,” “union” is replaced with “/ ∗!%55NiOn ∗/,” and “select” is replaced with “/ ∗!%53eLEct ∗/,” as shown in Data 2, which is converted from Data 1.

Algorithm 1: Keyword library generation algorithm.

Input:

- (1) word filter threshold θ
- (2) list of malicious URLs with SQL injection, XSS, and directory traversal, List_mal_urls
- (3) list of benign URLs, List_ben_urls

Output: set of keywords (the keywords library), Set_keywords

- (1) Set_keywords ⟵ ∅
- (2) for each m_url ∈ List_mal_urls do
    - (3) List_words ⟵ SplitURL (m_url)
    - (4) Set_keywords ⟵ Set_keywords ∪ RemoveSingleChars (List_words)
- (5) end for
- (6) // initialize the map that stores words and counts
- (7) Map_word_count ⟵ ∅
- (8) for each b_url ∈ List_ben_urls do
    - (9) List_words ⟵ SplitURL (b_url)
    - (10) for each word ∈ RemoveSingleChars (List_words) do
        - (11) Map_word_count ⟵ Map_word_count ∪ (word, count (word))
    - (12) end for
- (13) end for
- (14) // sort map by counts
- (15) Map_word_count ⟵ Sorted (Map_word_count.values ())
- (16) // get total word count
- (17) total_count ⟵ Sum (Map_word_count.values ())
- (18) for each (word, count) ∈ Map_word_count do
    - (19) if θ < count/total_count then
        - (20) Set_keywords.remove (word)
    - (21) end if
- (22) end for

We propose to analyze the lexical patterns of network traffic based on training samples, automatically extract web traffic keywords, and construct a keyword library. Many studies have used N-grams to mine keywords from text [5], which effectively captures byte frequency distribution and sequence information. Although N-grams analyze the content and sequence of high-frequency words, they easily produce a large number of meaningless words, and the computational cost of mining long keywords is very high (e.g., if “union” is a keyword, then substrings such as “Ni,” “NiO,” and “iOn” are also extracted as keywords). Our insight is that web traffic statements can be divided into meaningful words. We therefore consider the various special symbols in web traffic and extract the substring between two special symbols as one word, which simplifies the segmentation process. Moreover, words extracted in this way often have actual semantics in web traffic. Accordingly, the 28 notable characters in web traffic (including .,’”<>+- ∗={}()[]∼/#:; ?!-&@) are used as the basis for segmentation.

Taking the valid request path Data 3, for example, the new data after segmentation will become Data 4. As can be seen from the example, keywords strongly related to XSS semantics such as script and javascript will be segmented.
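The segmentation rule and Algorithm 1 can be sketched in Python as follows. This is an illustrative reading, not the authors' code: the separator set in `SEPARATORS` is an assumption (the symbols listed above plus whitespace and the underscore, which is needed to reproduce Data 4 from Data 3), and the function names simply mirror the pseudocode.

```python
import re
from collections import Counter

# Assumed separator set: symbols named in the text, plus "_" and whitespace.
SEPARATORS = re.compile(r"[\s.,'\"<>+\-*={}()\[\]~/#:;?!&@_’”]+")


def split_url(url: str) -> list[str]:
    """Extract the substrings between special symbols as words."""
    return [word for word in SEPARATORS.split(url) if word]


def remove_single_chars(words: list[str]) -> list[str]:
    """Drop one-character words, which carry little semantic information."""
    return [word for word in words if len(word) > 1]


def build_keyword_library(mal_urls, ben_urls, theta: float) -> set[str]:
    """Collect words from malicious URLs, then remove words whose relative
    frequency in benign URLs exceeds the threshold theta."""
    keywords = set()
    for url in mal_urls:
        keywords.update(remove_single_chars(split_url(url)))

    counts = Counter()
    for url in ben_urls:
        counts.update(remove_single_chars(split_url(url)))

    total = sum(counts.values())
    for word, count in counts.items():
        if total and count / total > theta:
            keywords.discard(word)
    return keywords
```

With this separator set, `split_url("/httpsmell.cfm?<script>xss_javascript.js</script>")` yields the word list shown as Data 4. Words that are common in benign traffic, such as a file extension or a parameter name, are filtered out of the library even when they also occur in malicious samples.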

2.2.3. KLA-Based Data Augmentation

After the keywords are extracted and the keyword library is built, the data augmentation method is applied to the training samples. The key insight of this method is that malicious requests inevitably contain special keywords, so we retain keywords and randomly replace nonkeywords in samples to expand them. The details of KLA-based data augmentation are shown in Algorithm 2.

Algorithm 2: KLA-based data augmentation algorithm.

Input:

- (1) added noise ratio α
- (2) list of URLs to be enhanced, List_URLs
- (3) list of keywords, List_keywords
- (4) list of special symbols, List_symbols

Output: list of enhanced URLs, List_enhanced_URLs

- (1) List_enhanced_URLs ⟵ ∅
- (2) for each URL ∈ List_URLs do
    - (3) List_words ⟵ SplitURL (URL)
    - (4) enhanced_URL ⟵ ∅
    - (5) // get the length of the split URL
    - (6) URL_len ⟵ Length (List_words)
    - (7) // count the number of replaced words
    - (8) replaced_num ⟵ 0
    - (9) for each word ∈ List_words do
        - (10) if word ∉ List_keywords and word ∉ List_symbols then
            - (11) enhanced_URL ⟵ Concatenate (enhanced_URL, Replace (word))
        - (12) else
            - (13) enhanced_URL ⟵ Concatenate (enhanced_URL, word)
        - (14) end if
        - (15) replaced_num ++
        - (16) if replaced_num/URL_len ≥ α then
            - (17) break
        - (18) end if
    - (19) end for
    - (20) // add enhanced URL to return list
    - (21) List_enhanced_URLs ⟵ List_enhanced_URLs ∪ enhanced_URL
- (22) end for

The request is first segmented to extract its words (line 3). According to the experimental data, words such as “union” and “from” contained in the keywords library can be detected in the new attack payload in Data 2. The central part of the extracted data is shown in Data 5, in which “1,” “user,” “password,” and “users” are all regular variables in the SQL query. Hence, variable substitution can be performed (lines 10-11), which yields Data 6.

Since the words “1 = 1,” “group concat,” and “from” still exist in both Data 5 and Data 6, the word vector matrix formed by them does not change. When character substitution is performed without avoiding keywords, Data 7 is produced instead. The SQL syntax of Data 7 no longer conforms to the specification, which lowers the weight of its word matrix. As a result, it is difficult for the model to identify it effectively during training.

In most cases, the training samples are collected in the same network and target-range environment, and the simulated attackers often adopt an automatic attack mode. For instance, words such as “password,” “username,” and “passwd” appear in a large number of training samples and, at the same time, appear as variables in normal requests. If these samples are not adequately varied by adding noise, statements containing “password” and similar variables in normal request samples will eventually be recognized as malicious.

Changing the characters other than the keywords helps to improve the generalization ability of the model. We therefore add noise to the data, following the rule of random replacement until the replacement ratio α is reached (lines 15-16). Under these rules, the noise sample Data 9 is generated from the valid XSS attack sample Data 8. The keywords of the malicious traffic are preserved in the generated noise sample, while the other characters are changed.

The data enhancement method we propose is versatile enough to be applied to other kinds of network attacks. In this article, we only evaluated it on three types of network attacks: SQL injection, XSS, and path traversal.
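A minimal Python sketch of the keyword-avoiding replacement is given below. It illustrates the idea rather than reproducing Algorithm 2 line by line: the name `kla_augment` is hypothetical, the noise ratio α is taken here over the replaceable (nonkeyword, nonsymbol) words, positions are sampled at random instead of being visited in order, and each replacement is a random lowercase alphanumeric string of the same length. All of these are assumptions made for illustration.

```python
import random
import re
import string

# A token is either a run of ASCII letters/digits (a word) or one symbol.
TOKEN_RE = re.compile(r"[A-Za-z0-9]+|[^A-Za-z0-9]")
WORD_RE = re.compile(r"[A-Za-z0-9]+")


def random_word(length: int, rng: random.Random) -> str:
    """Return a random lowercase alphanumeric string of the given length."""
    alphabet = string.ascii_lowercase + string.digits
    return "".join(rng.choice(alphabet) for _ in range(length))


def kla_augment(url: str, keywords: set, alpha: float, rng=None) -> str:
    """Replace a fraction alpha of the nonkeyword words with random noise,
    leaving keywords and special symbols untouched."""
    rng = rng or random.Random()
    tokens = TOKEN_RE.findall(url)
    replaceable = [
        i for i, tok in enumerate(tokens)
        if WORD_RE.fullmatch(tok) and tok.lower() not in keywords
    ]
    n_replace = min(len(replaceable), round(alpha * len(replaceable)))
    for i in rng.sample(replaceable, n_replace):
        tokens[i] = random_word(len(tokens[i]), rng)
    return "".join(tokens)
```

Calling `kla_augment("/httpsmell.cfm?<script>xss_javascript.js</script>", {"script"}, 1.0)` produces a sample in the spirit of Data 9: both `script` tags and all symbols survive, while every other word is replaced.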

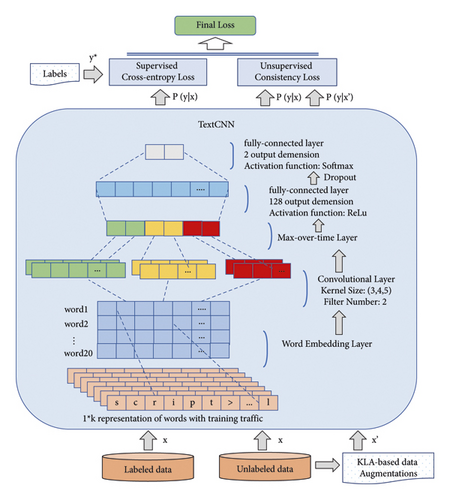

2.3. Deep Learning Models

One of the primary purposes of HTTPSmell is to verify the effectiveness of the data augmentation method in the DL-based detection algorithms. For the demonstration, two DL algorithms are chosen. One is TextCNN, based on convolutional neural networks [13] using supervised learning for classification, and the other is the UDA mechanism [12] leveraging semisupervised learning for label reconstruction.

2.3.1. TextCNN Model

Compared with classic machine learning methods such as naive Bayes (NB) and SVM, convolutional neural networks (CNNs) perform better on large datasets and do not require manual feature extraction. In text classification, CNNs usually include the following layers: an embedding layer, a convolutional layer, a pooling layer, and a fully connected layer.

The embedding layer in TextCNN maps words to vectors. Each word in the dataset is represented as a vector, and together these vectors constitute an embedding matrix M in which each row is a word vector. The convolutional layer in TextCNN extracts the local features of the text and concatenates the features obtained by convolution kernels of different sizes to form a high-dimensional feature vector. The pooling layer extracts the main features and produces the final feature vector from the output of the convolutional layer. The input of the fully connected layer is the one-dimensional vector formed after the pooling operation, which is passed through the activation function to produce the output. Furthermore, a dropout layer is added to prevent overfitting.

Capturing local features is the core idea of convolutional neural networks. For text, local features are sliding windows composed of several words, similar to N-grams. Combining and filtering these N-gram features makes it possible to obtain semantic information at different levels of abstraction. In practice, TextCNN, which is based on the convolutional neural network, performs well on text classification.
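To make the sliding-window idea concrete, the following dependency-free sketch applies one convolution kernel per window size to a sentence of word vectors and keeps the strongest response of each kernel (max-over-time pooling). It only illustrates the feature extraction step; the function names are hypothetical, and a real TextCNN would learn many kernels per size and implement this with a deep learning framework.

```python
def conv_max_pool(embeddings, kernel, bias=0.0):
    """Slide a k x d kernel over an n x d sentence matrix, apply ReLU,
    and return the maximum activation over all window positions."""
    k = len(kernel)
    activations = []
    for start in range(len(embeddings) - k + 1):
        window = embeddings[start:start + k]
        # Element-wise product of the kernel with the k-word window.
        score = bias + sum(
            w * x
            for kernel_row, word_vec in zip(kernel, window)
            for w, x in zip(kernel_row, word_vec)
        )
        activations.append(max(0.0, score))  # ReLU
    # A sentence shorter than the kernel yields no window at all.
    return max(activations) if activations else 0.0


def textcnn_features(embeddings, kernels):
    """Concatenate the pooled response of every kernel into one vector."""
    return [conv_max_pool(embeddings, kernel) for kernel in kernels]
```

A kernel with three rows reacts to three-word windows, much like a trigram detector; concatenating the outputs of kernels of sizes 3, 4, and 5 gives the feature vector that is passed to the fully connected layer.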

2.3.2. UDA Model

Another model employed in HTTPSmell is UDA, an unsupervised data enhancement technique and a semisupervised learning approach introduced by the Google team in 2020. By applying a data augmentation algorithm to a small amount of labeled data and a large amount of unlabeled data, it trains a deep learning model that outperforms the ones trained with abundant labeled data. HTTPSmell combines UDA with the TextCNN model to detect malicious HTTP attacks.

Without enhanced training data and unsupervised consistency training, the semisupervised training procedure results in poor label refactoring, and the model is prone to overfitting or underfitting [12]. Therefore, equations (2) and (3) are combined in the model training phase. Based on the improved consistency training method applied in UDA, the KL divergence between the predictions for the original unlabeled data and for the augmented data is minimized to enhance the smoothness and improve the generalization ability of the model (as shown in equation (2)), where $q(\hat{x} \mid x)$ is a data augmentation transformation and $\tilde{\theta}$ is a fixed copy of the current parameters $\theta$, indicating that the gradient is not propagated through $\tilde{\theta}$. Equation (2) aims to achieve predictive consistency between each unlabeled sample and its augmented sample by minimizing the KL divergence between them. With the help of data enhancement methods, the distribution of unlabeled samples moves closer to the realistic data distribution, and the number and diversity of unlabeled samples increase. As a result, the training data are expanded and the accuracy and credibility of the model prediction are improved.
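For reference, the UDA training objective in [12] is commonly written in the following form, which combines a supervised cross-entropy term with the consistency term described above. This is a sketch in the notation of this section and may differ in detail from the equations numbered in the text; the symbols $L$ (labeled set), $U$ (unlabeled set), $y^{*}$ (ground-truth label), and $\lambda$ (weight of the unsupervised term) are introduced here for illustration.

```latex
\min_{\theta}\; \mathcal{J}(\theta) =
  \mathbb{E}_{(x,\, y^{*}) \in L}\bigl[-\log p_{\theta}(y^{*} \mid x)\bigr]
  + \lambda\, \mathbb{E}_{x \in U}\, \mathbb{E}_{\hat{x} \sim q(\hat{x} \mid x)}
    \Bigl[ D_{\mathrm{KL}}\bigl( p_{\tilde{\theta}}(y \mid x) \,\big\|\, p_{\theta}(y \mid \hat{x}) \bigr) \Bigr]
```

Because $\tilde{\theta}$ is a fixed copy of $\theta$, the prediction on the original sample $x$ acts as a constant target, and only the prediction on the augmented sample $\hat{x}$ receives gradients.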

Figure 2 shows the UDA-based semisupervised training procedure used for label refactoring, which considers the consistency between labeled and unlabeled data. TextCNN is then used for the final classification.

First, we need to extract the potential word order and semantic associations of keywords in malicious traffic (e.g., in a malicious statement, a second “script” appears after the first “script” to form a closed tag pair). Then, Word2vec is used to generate a word vector file from the original (pre-augmentation) traffic training set and the noised traffic training set. The labeled traffic samples and the unlabeled training set are used as input when training the model, and the word vectors are fed into the embedding layer to construct semantic associations. Finally, the detection model is obtained.

3. Experimental Evaluations

This paper designs and implements a prototype system for monitoring HTTP malicious traffic and conducts experiments and evaluations using the open network dataset to verify the proposed method. The performance of the HTTPSmell can be depicted by the following six representative metrics, and their definitions are listed in Table 2.

| Index | Expression |
|---|---|
| True-positive rate (TPR or recall) | TP/(TP + FN) |
| False-positive rate (FPR) | FP/(FP + TN) |
| False-negative rate (FNR) | FN/(FN + TP) |
| Precision | TP/(TP + FP) |
| F-measure | 2 × precision × recall/(precision + recall) |
| Accuracy | (TP + TN)/(TP + TN + FP + FN) |

The true-positive rate (TPR or recall) represents the probability that positive examples are correctly classified. In this paper, recall is the probability that malicious traffic is correctly recognized. The false-positive rate (FPR) and false-negative rate (FNR) measure the probabilities of misclassifying negative and positive samples, respectively. Precision denotes the proportion of correctly identified malicious traffic among all samples predicted as malicious, and the F-measure is the harmonic mean of precision and recall. Accuracy is the ratio of all correctly classified samples to all instances.
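The six metrics follow directly from the confusion-matrix counts, as the short sketch below shows. The function name `detection_metrics` and the convention of returning 0.0 for an empty denominator are choices made here for illustration.

```python
def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute the metrics of Table 2 from confusion-matrix counts,
    where malicious traffic is the positive class."""

    def ratio(numerator, denominator):
        # Assumption: report 0.0 when the denominator is zero.
        return numerator / denominator if denominator else 0.0

    recall = ratio(tp, tp + fn)
    precision = ratio(tp, tp + fp)
    return {
        "tpr": recall,
        "fpr": ratio(fp, fp + tn),
        "fnr": ratio(fn, fn + tp),
        "precision": precision,
        "f_measure": ratio(2 * precision * recall, precision + recall),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
    }
```

Note that TPR and FNR share the same denominator (all truly malicious samples), so they always sum to 1.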

3.1. Dataset Introduction

We collect a set of open-source WAF (web application firewall) request datasets based on the HTTP protocol from Fsecurify as the training set and test set. A total of 45,000 malicious requests with malicious behavior characteristics and 45,000 normal requests were selected randomly and divided into a training set and a test set at a ratio of 7 : 2 for the supervised learning model (TextCNN only), which serves as the control group. The training set for the semisupervised learning model is composed of 700 labeled samples and 70,000 unlabeled samples, and its test set is the same as that of the supervised model. The ratio of positive to negative samples among the 700 labeled samples is 1 : 1, and the ratio of path traversal, SQL injection, and XSS data among the positive samples is 3 : 4 : 3.

3.1.1. Wild Dataset

In addition, 6,465 wild malicious traffic samples were collected by a honeypot server (deployed in March 2019) as a dataset for testing generalization performance. The honeypot was online for three days, during which the model inspected all the traffic alongside an IDS and a WAF. Since the IDS and WAF are relatively mature and highly reliable, their detection results are regarded as the ground truth, while the model's inference is used as the reference result. Among these malicious traffic samples, SQL injection, XSS, and directory traversal samples account for 80%∼90%, and backdoor control, privacy theft, and other traffic samples account for about 10%.

3.1.2. ECML/PKDD 2007 Dataset

The ECML/PKDD 2007 [14] is a dataset containing benign and various attack HTTP requests for machine learning methods released by the Brazilian Conference on Intelligent Systems (BRACIS). We extract the traffic data related to SQL injection, XSS, and directory traversal according to the classification labels. As described by the publisher, the attack traffic is randomly generated, and 10% of the requests are taken out of context. Some preprocessing, such as URL simplification, is then performed on the test sets to make them closer to real attack scenarios [15].

3.1.3. Kaggle/GitHub Dataset

At the same time, we collected some personally published datasets on GitHub and Kaggle to evaluate the generalization ability of HTTPSmell. The SQL injection dataset was acquired from Kaggle (https://www.kaggle.com/datasets/syedsaqlainhussain/sqlinjectiondataset), and the XSS dataset was obtained from GitHub (https://github.com/fmereani/CrossSiteScriptingXSS), which is used for evaluation in [16]. The Path traversal dataset was also obtained from GitHub (https://github.com/omurugur/Path_Travelsal_Payload_List/blob/master/Payload/Dp.txt). The specific distribution of these datasets is shown in Table 3.

| Model | Wild: Mal. (S-I) | Wild: Mal. (XSS) | Wild: Mal. (D-T) | Wild: Ben. | Kaggle: Mal. (S-I) | Kaggle: Mal. (XSS) | Kaggle: Mal. (D-T) | Kaggle: Ben. | ECML/PKDD: Mal. (S-I) | ECML/PKDD: Mal. (XSS) | ECML/PKDD: Mal. (D-T) | ECML/PKDD: Ben. | Enterprise: Mal. | Enterprise: Ben. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| TextCNN, HTTPSmell, WebSmell (Bert), WebSmell (Albert) | 614 | 4,093 | 1,758 | 6,465 | 11,341 | 15,141 | 1,092 | 27,574 | 2,274 | 1,825 | 2,295 | 6,394 | 521 | 52,100 |

- Mal.: malicious; Ben.: benign; S-I: SQL injection; D-T: directory traversal. All models are evaluated on the same test sets.

3.1.4. Enterprise Dataset

The last dataset is derived from a database of targeted cyberattacks that an ISP company we collaborate with encountered in the real world. This dataset encompasses various attack techniques, ranging from SQL injection, XSS, and path traversal to more sophisticated attack vectors such as code injection, CVE exploitation, and covert channel, which can provide additional insights to assess the detection performance and generalization ability of our model in the real-world enterprise environment. We employ the “scapy” package to parse the pcap files, extract all the HTTP-related request data, and finally filter out a total of 521 malicious samples as the test set.

The ratio of malicious to normal traffic samples in the training set under supervised learning is 1 : 1, with 35,000 samples of each. The ratio in the test set is also 1 : 1, with 10,000 samples of each. The training set for the semisupervised model consists of 700 labeled samples and 70,000 unlabeled samples, and its test set is the same as that of the supervised learning model. The other three datasets used to verify the generalization ability of the models (the Wild dataset, the Kaggle/GitHub dataset, and the ECML/PKDD 2007 dataset) contain 6,465, 27,574, and 6,394 malicious samples, respectively, with a corresponding number of benign samples. The specific data distribution is shown in Tables 3 and 4.

| Model | Training: malicious (labeled) | Training: malicious (unlabeled) | Training: benign (labeled) | Training: benign (unlabeled) | Test: malicious | Test: benign |
|---|---|---|---|---|---|---|
| TextCNN | 35,000 | 0 | 35,000 | 0 | 10,000 | 10,000 |
| HTTPSmell | 350 | 35,000 | 350 | 35,000 | 10,000 | 10,000 |
| WebSmell (Bert) | | | | | | |
| WebSmell (Albert) | | | | | | |

3.2. Experiment Setup

3.2.1. Model Settings

We heuristically set some hyperparameters in the model, such as “uda softmax temp,” “uda confidence thresh,” and “batch size,” and the values of these parameters will have a significant impact on the learning effect of the UDA model. After repeated training and verification, the final configuration of hyperparameters in TextCNN and UDA is shown in Table 5. Model implementation was carried out using Python 3.10.4, scikit-learn 1.1.2, and torch 2.0.0.

| Model | Hyperparameter | Value |
|---|---|---|
| TextCNN | Convolutional layer number | 3 |
| TextCNN | Convolution kernel size | [3, 4, 5] |
| TextCNN | Filter number | 2 |
| TextCNN | Pooling method | Maximum |
| TextCNN | Pool size | 1 |
| TextCNN | Learning rate | 2e-5 |
| TextCNN | Activation function | ReLU |
| TextCNN | Optimizer | Adam |
| TextCNN | Dropout | 0.25 |
| TextCNN | Dense layer | 2 |
| TextCNN | Max sequence size | 20 |
| TextCNN | Embedding size | 100 |
| UDA | Warmup | 0.1 |
| UDA | tsa | Linear schedule |
| UDA | uda softmax temp | 0.9 |
| UDA | uda confidence thresh | 0.45 |
| UDA | unsup coeff | 1 |
| UDA | sup batch size | 8 |
| UDA | unsup batch size | 24 |
| UDA | eval batch size | 32 |
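To make the role of three of the UDA hyperparameters more concrete, the following sketch shows how a softmax temperature, a confidence threshold, and a linear training-signal-annealing (tsa) schedule are commonly defined in UDA-style training. It is a pure-Python illustration under the assumption that HTTPSmell follows the standard UDA formulation; the function names are hypothetical and the code is not taken from the paper's implementation.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a temperature below 1 sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def keep_unlabeled(logits: List[float], confidence_thresh: float = 0.45) -> bool:
    """Confidence masking: use an unlabeled sample only if the top probability is high enough."""
    return max(softmax(logits)) > confidence_thresh


def tsa_threshold(step: int, total_steps: int, num_classes: int = 2) -> float:
    """Linear tsa schedule: the threshold rises from 1/K at step 0 to 1 at the last step."""
    progress = step / total_steps
    return progress * (1.0 - 1.0 / num_classes) + 1.0 / num_classes


# Example with the values from Table 5 (logits are made up for illustration).
sharpened = softmax([2.0, 1.0], temperature=0.9)
plain = softmax([2.0, 1.0])
```

In this formulation, labeled samples whose correct-class probability already exceeds `tsa_threshold` are left out of the supervised loss, which limits overfitting to the small labeled set early in training.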

3.2.2. Experiments

We conduct four types of comparative experiments to emphasize the comprehensive advantages of HTTPSmell. (1) The first experiment verifies the enhanced learning effect of the KLA-based data augmentation method (see Algorithm 2) on the semisupervised model and compares it with the fully supervised learning model (TextCNN) to highlight the improvement in generalization ability obtained with only a small portion of labeled samples. We then explore the impact of different noise ratios in the KLA-based data augmentation algorithm on detection performance and find that the model performs best when the noise ratio is set to 100%. (2) The second experiment compares different data preprocessing techniques to demonstrate that our automatic keyword extraction algorithm (see Algorithm 1), combined with segmentation on particular words, significantly improves detection performance and is more efficient than manual keyword extraction with conventional character-based segmentation. (3) The third experiment verifies the lightweight characteristics and practical application value of HTTPSmell by comparing its detection efficiency and performance overhead with those of the system WebSmell [11]. (4) The last experiment assesses the ability of HTTPSmell to detect various kinds of cyberattack traffic in a real-world enterprise environment, which demonstrates HTTPSmell’s strong detection capabilities for advanced and unknown attacks.

3.3. Effectiveness of Data Augmentation

In this experiment, we use the same convolutional neural network structure of TextCNN (see Figure 2) to evaluate two models: the fully supervised learning model, which requires a large number of labeled samples for training, and the semisupervised learning model (UDA combined with TextCNN), which needs only a small portion of labeled samples. To demonstrate the effectiveness of the KLA-based data augmentation algorithm, we also run experiments with different noise ratios in the data augmentation of the unlabeled samples in the UDA model.

From the experimental results in Table 6, it can be seen that the supervised model trained on a large number of labeled samples performs well on the test set, with 96.96% accuracy and 96.35% precision. However, the semisupervised model narrows the gap after training on the noise-added dataset and achieves performance comparable to that of the supervised model, with 96.85% accuracy and 96.80% precision. Table 7 shows that the semisupervised model adapts well to the other open-source traffic datasets and maintains better performance than the fully supervised model trained without added noise, which indicates that the noise-adding method effectively strengthens the generalization ability of the model. We conclude that, with only 700 labeled samples, HTTPSmell outperforms the supervised model trained with 70,000 labeled samples in comprehensive performance, which reflects the superiority of our KLA-based data augmentation algorithm. Although 70,000 unlabeled samples are also used in the training phase of HTTPSmell, unlabeled samples are easier to obtain and, most importantly, they reduce the workload of manual labeling.

| Model | Noise ratio | TPR (%) | FPR (%) | FNR (%) | Precision (%) | F-measure (%) | Accuracy (%) |
|---|---|---|---|---|---|---|---|
| TextCNN (full supervised) | N/A | 97.61 | 3.70 | 2.39 | 96.35 | 96.98 | 96.96 |
| HTTPSmell (semisupervised) | 20% | 94.44 | 3.62 | 5.56 | 96.31 | 95.37 | 95.41 |
| HTTPSmell (semisupervised) | 40% | 95.12 | 3.91 | 4.88 | 96.05 | 95.58 | 95.61 |
| HTTPSmell (semisupervised) | 60% | 95.37 | 4.08 | 4.63 | 95.90 | 95.63 | 95.65 |
| HTTPSmell (semisupervised) | 70% | 95.47 | 4.03 | 4.53 | 95.95 | 95.71 | 95.72 |
| HTTPSmell (semisupervised) | 80% | 93.73 | 3.88 | 6.27 | 96.03 | 94.86 | 94.93 |
| HTTPSmell (semisupervised) | 90% | 93.15 | 3.32 | 6.85 | 96.56 | 94.82 | 94.92 |
| HTTPSmell (semisupervised) | 100% | **96.90** | **3.20** | **3.10** | **96.80** | **96.85** | **96.85** |

- The bold values in this table represent the optimal detection performance of the model for attack traffic in our noise comparison experiment, which is obtained when the noise level is set to 100%. This is characterized by a lower false alarm rate, a lower miss rate, and higher recall, precision, accuracy, and F-measure.
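The six reported metrics follow from the confusion matrix of the balanced test set. As a worked check, the sketch below recomputes the 100% noise row; the counts are not given in the paper and are inferred here from the reported TPR and FPR together with the 10,000 malicious and 10,000 benign test samples, so they should be read as an assumption.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Return TPR, FPR, FNR, precision, F-measure, and accuracy as percentages."""
    tpr = tp / (tp + fn)        # recall: malicious samples correctly flagged
    fpr = fp / (fp + tn)        # false alarm rate on benign samples
    fnr = fn / (tp + fn)        # miss rate on malicious samples
    precision = tp / (tp + fp)
    f_measure = 2 * precision * tpr / (precision + tpr)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    values = {
        "TPR": tpr, "FPR": fpr, "FNR": fnr,
        "Precision": precision, "F-measure": f_measure, "Accuracy": accuracy,
    }
    return {name: 100.0 * value for name, value in values.items()}


# Inferred counts for HTTPSmell at 100% noise: TPR 96.90% and FPR 3.20% on 10,000 + 10,000 samples.
metrics = detection_metrics(tp=9690, fp=320, tn=9680, fn=310)
```

With these counts the remaining values agree with the table after rounding to two decimals (precision 96.80, F-measure 96.85, accuracy 96.85).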

| Model | Noise ratio | Wild D-T¹ (%) | Wild S-I² (%) | Wild XSS (%) | Kaggle/GitHub D-T (%) | Kaggle/GitHub S-I (%) | Kaggle/GitHub XSS (%) | ECML/PKDD 2007 D-T (%) | ECML/PKDD 2007 S-I (%) | ECML/PKDD 2007 XSS (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| TextCNN (full supervised) | N/A | 90.64 | 96.99 | 97.87 | 97.49 | 97.46 | 97.69 | 96.84 | 97.78 | 97.52 |
| HTTPSmell (semisupervised) | 20% | 97.16 | 97.56 | 97.97 | 97.2 | 97.93 | 98.05 | 96.25 | 98.04 | 97.01 |
| HTTPSmell (semisupervised) | 40% | 97.24 | 97.31 | 97.9 | 97.61 | 97.79 | 97.98 | 96.79 | 97.77 | 96.75 |
| HTTPSmell (semisupervised) | 60% | 97.61 | 97.64 | 97.94 | 97.46 | 97.82 | 98.08 | 96.25 | 97.78 | 96.78 |
| HTTPSmell (semisupervised) | 70% | 97.21 | 97.07 | 97.94 | 97.39 | 97.48 | 97.69 | 97.25 | 97.7 | 96.47 |
| HTTPSmell (semisupervised) | 80% | 96.99 | 97.88 | 98.0 | 97.77 | 98.07 | 98.12 | 97.25 | 97.95 | 96.89 |
| HTTPSmell (semisupervised) | 90% | 97.3 | 97.56 | 98.2 | 97.64 | 98.04 | 98.19 | 96.7 | 98.01 | 97.54 |
| HTTPSmell (semisupervised) | 100% | 97.44 | 98.45 | 98.44 | 97.17 | 98.07 | 98.26 | 97.12 | 98.35 | 97.78 |

- (1) D-T: directory traversal; (2) S-I: SQL injection. The bold values indicate the optimal detection performance of the model for attack traffic from different datasets in our noise comparison experiment when the noise level is set to 100%. This is characterized by lower false alarm rate and miss rate, as well as higher recall, precision, accuracy, and F-measure.

Adding 100% noise (replacing all nonkeywords in each sample) to the training dataset enhances the detection accuracy, generalization ability, and overall performance of the model in distinguishing normal and malicious traffic. To verify that the model performs best with 100% noise, we conduct a finer-grained test at a ratio close to 100% (that is, adding 90% noise). The results show that when the noise ratio of the training set is 90%, the performance of the model is similar to that with 80% noise, and both are worse on the test dataset than the model trained with 100% noise.

As shown in Tables 6 and 7, the model’s detection capability peaks when the noise ratio increases to 100%. This implies that augmenting the samples by replacing all words that are not in the keyword library improves the diversity of the dataset and simultaneously boosts the robustness of the semisupervised model against noise. At slightly lower ratios (80%∼90%), the model tends to underfit. A possible cause is that a noise proportion outside a certain threshold range reduces the weight of keyword order and leads the model to misjudge samples. For example, strings in malicious traffic such as “echo” and “jsp2” are misjudged as benign traffic by the model.
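The idea of replacing nonkeywords at a given noise ratio can be sketched as follows. This is an illustrative simplification and not a reproduction of Algorithm 2: the function name `kla_augment` is hypothetical, and the choice to replace each selected token with a random lowercase string of the same length is an assumption made for the example.

```python
import random
import string
from typing import List, Optional, Set


def kla_augment(tokens: List[str], keywords: Set[str], noise_ratio: float,
                rng: Optional[random.Random] = None) -> List[str]:
    """Replace a fraction of the non-keyword tokens with noise; keywords are never touched."""
    rng = rng or random.Random()
    # Positions that are allowed to change: everything outside the keyword library.
    candidates = [i for i, tok in enumerate(tokens) if tok not in keywords]
    n_replace = round(noise_ratio * len(candidates))
    chosen = set(rng.sample(candidates, n_replace))
    augmented = []
    for i, tok in enumerate(tokens):
        if i in chosen:
            # Assumed noise model: a random lowercase string of the same length.
            noise = "".join(rng.choice(string.ascii_lowercase) for _ in range(len(tok)))
            augmented.append(noise)
        else:
            augmented.append(tok)
    return augmented


tokens = ["id", "=", "1", "union", "select", "name", "from", "users"]
keywords = {"=", "union", "select", "from"}
noisy = kla_augment(tokens, keywords, noise_ratio=1.0, rng=random.Random(0))
```

With `noise_ratio=1.0` every nonkeyword position is replaced, so only the keywords and their order remain stable between the original and the augmented sample.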

To sum up, the KLA-based data augmentation algorithm improves the generalization ability of the model, and the model achieves its optimal detection performance when the noise-adding ratio is set to 100% during the training phase.

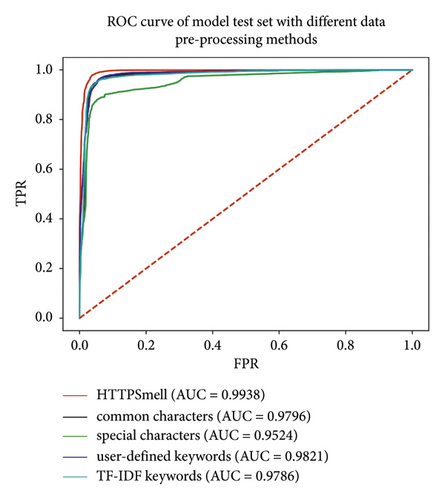

3.4. Preprocessing Influence

In the data preprocessing step, we use our preprocessing method, segmentation based on customized special tokens and automatically extracted keywords, and the methods of [3, 6, 17, 18] to obtain the corresponding training models. The performance of models trained by different data preprocessing methods is compared in six aspects (see Table 2). The same training set and test set are used in different data preprocessing methods, and the same neural network is used to train the model. In this experiment, we use the previously trained model HTTPSmell (100% noise-adding) to compare with other models to illustrate the superiority of our data preprocessing method. The comparison results are shown in Tables 8 and 9.

| Studies | TPR (%) | FPR (%) | FNR (%) | Precision (%) | F-measure (%) | Accuracy (%) |
|---|---|---|---|---|---|---|
| HTTPSmell | 96.90 | 3.20 | 3.10 | 96.80 | 96.85 | 96.85 |
| Segmentation based on common characters [6] | 93.89 | 3.97 | 6.11 | 95.94 | 94.91 | 94.96 |
| Segmentation based on special characters [17] | 87.84 | 5.21 | 12.16 | 94.40 | 91.00 | 91.32 |
| Segmentation based on user-defined keywords [3] | 93.44 | 4.44 | 6.56 | 95.46 | 94.44 | 94.50 |
| Segmentation based on TF-IDF [18] | 95.25 | 5.30 | 4.75 | 94.73 | 94.99 | 94.98 |

| Studies | Wild D-T¹ (%) | Wild S-I² (%) | Wild XSS (%) | ECML/PKDD 2007 D-T (%) | ECML/PKDD 2007 S-I (%) | ECML/PKDD 2007 XSS (%) | Kaggle D-T (%) | Kaggle S-I (%) | Kaggle XSS (%) |
|---|---|---|---|---|---|---|---|---|---|
| HTTPSmell | 97.44 | 98.45 | 98.44 | 97.17 | 98.07 | 98.26 | 97.12 | 98.35 | 97.78 |
| Segmentation based on common characters [6] | 95.08 | 98.21 | 97.57 | 97.24 | 97.71 | 97.07 | 95.7 | 97.74 | 93.44 |
| Segmentation based on special characters [17] | 74.29 | 84.45 | 87.27 | 80.87 | 79.23 | 79.32 | 62.23 | 87.17 | 87.29 |
| Segmentation based on user-defined keywords [3] | 91.84 | 96.99 | 97.73 | 89.67 | 89.8 | 90.82 | 94.83 | 96.19 | 94.34 |
| Segmentation based on TF-IDF [18] | 94.94 | 97.72 | 97.52 | 95.07 | 95.33 | 95.84 | 95.83 | 97.29 | 95.98 |

- (1) D-T: directory-traversal; (2) S-I: SQL injection.

Based on the data in Tables 8 and 9, the method proposed in this paper has higher accuracy and a lower false alarm rate, and its overall performance is the best among the compared methods when small training samples are used. The model of Park et al. [6], which is based on common-character segmentation, splits sentences with 68 common characters to obtain a character-level representation. This model has high generalization ability, but it ignores the significance of keyword features, which prevents it from differentiating malicious samples from normal traffic in complex cyberattack scenarios.

In contrast, the method based on special-character segmentation [17] splits sentences with several customized special characters to obtain a word-level representation. Training the model with a large dataset processed in this way results in underfitting in malicious traffic detection because malicious features are not taken into account. The model is likely to identify a large amount of malicious traffic as normal traffic, which lowers the overall accuracy and weakens the ability to distinguish malicious traffic. The user-defined keyword segmentation method [3] relies on special keywords summarized manually from 95 URL-valid characters, but it does not take into account the keyword distribution of benign traffic samples, which leads to a high false alarm rate. Although the model can detect conventional malicious traffic well, it is difficult to manually construct a keywords library that encompasses all malicious traffic keywords and potential attack payloads because of the diversity of HTTP attack statements. This ultimately leads to the weak generalization ability of the model.

In this paper, we split the samples according to keywords and special characters. The keywords library is established automatically through statistics to maximize the retention of keywords in malicious traffic, while the high-frequency words that appear in benign traffic samples are removed. Then, the training dataset is fed into the model after sample augmentation. Minimizing the loss between original samples and augmented samples makes it easier for the detection model to extract the keywords and the related word-order features. Moreover, the extensive training dataset also helps the detection model avoid the risk of overfitting during the training phase.
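A statistics-based keyword library of this kind could be built along the following lines. The sketch is an assumption-laden illustration, not Algorithm 1 itself: the tokenizer pattern, the function names, and the two thresholds (`min_malicious_freq`, `max_benign_ratio`) are hypothetical choices made for the example.

```python
import re
from collections import Counter
from typing import List, Set

# Words, digit runs, and single special characters become separate tokens.
TOKEN_PATTERN = re.compile(r"[A-Za-z_]+|\d+|[^\sA-Za-z_\d]")


def tokenize(request: str) -> List[str]:
    """Split a request string on special characters, keeping them as tokens."""
    return TOKEN_PATTERN.findall(request.lower())


def build_keyword_library(malicious: List[str], benign: List[str],
                          min_malicious_freq: int = 2,
                          max_benign_ratio: float = 0.5) -> Set[str]:
    """Keep tokens frequent in malicious samples and drop those common in benign ones."""
    # Document frequency: count each token once per sample.
    mal_counts = Counter(tok for s in malicious for tok in set(tokenize(s)))
    ben_counts = Counter(tok for s in benign for tok in set(tokenize(s)))
    n_benign = max(len(benign), 1)
    library = set()
    for tok, count in mal_counts.items():
        if len(tok) < 2:                      # drop single characters
            continue
        if count < min_malicious_freq:        # too rare in malicious traffic
            continue
        if ben_counts[tok] / n_benign > max_benign_ratio:  # high-frequency benign word
            continue
        library.add(tok)
    return library


malicious = [
    "/item?id=1 union select pass from users",
    "/q?x=1' union select 1,2",
    "/page?id=<script>alert(1)</script>",
]
benign = ["/item?id=7", "/page?id=3", "/q?x=hello"]
library = build_keyword_library(malicious, benign)
```

On this toy input the token "id" is frequent in malicious samples but is removed because it is also common in benign requests, which is the effect the paragraph above describes.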

After examining the malicious traffic dataset from a recently deployed honeypot and the attack traffic collected from an ISP company, we find that attack vectors in the wild tend to be more obfuscated and advanced. For example, the attack data is obfuscated by multiple encoding and grammatical substitution to bypass the keyword detection of a WAF. Because the method in this paper automatically builds a keywords library from a large amount of malicious traffic, it is difficult for an attacker to avoid all keywords even if some keywords are removed from the attack payload. Accordingly, the data preprocessing method in this paper still has a good detection effect. By contrast, with the user-defined keyword segmentation method, the preset malicious traffic keywords are easy to bypass through obfuscation and syntax substitution. For example, changing “select” into “se/**/lect” prevents the keyword select from being captured, which reduces the detection ability of the model. Because the segmentation method based on common characters produces large dimensions and ignores the semantic relationship between keywords, its detection ability decreases when the attacker highly obfuscates the attack payload. The method based on special-symbol segmentation can easily lose essential information when dealing with nonletter obfuscated attack data that contains a large number of special symbols; for example, “(~%8F%97%8F%96%91%99%90)();” means “phpinfo();”. Once the malicious payload is obfuscated in this way, the detection capability of the model is reduced.
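The two obfuscation examples in the paragraph above can be checked with a few lines of code. The sketch below is only an illustration of why fixed keyword matching fails on such payloads and is not part of HTTPSmell; the helper names are hypothetical. The second helper relies on the fact that the PHP `~` operator applied to a string inverts every byte.

```python
import re
from urllib.parse import unquote_to_bytes


def strip_inline_comments(payload: str) -> str:
    """Remove SQL-style /* ... */ comments that split a keyword."""
    return re.sub(r"/\*.*?\*/", "", payload, flags=re.DOTALL)


def invert_percent_encoded(encoded: str) -> str:
    """Percent-decode the bytes and flip every bit, mirroring PHP's ~ on a string."""
    raw = unquote_to_bytes(encoded)
    return bytes(b ^ 0xFF for b in raw).decode("ascii")


recovered_keyword = strip_inline_comments("se/**/lect")
recovered_function = invert_percent_encoded("%8F%97%8F%96%91%99%90")
```

Here `recovered_keyword` is "select" and `recovered_function` is "phpinfo", while neither string appears literally in the obfuscated request.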

In binary classification problems, the ROC (receiver operating characteristic) curve and the AUC (area under the curve) value are often used to evaluate a classifier. The ROC curve is a comprehensive indicator that relates sensitivity to specificity as continuous variables. Each point on the ROC curve corresponds to the sensitivity and false-positive rate obtained at one decision threshold. The AUC value is the area under the ROC curve. Its calculation considers the classifier’s ability on both positive and negative samples and therefore still gives a reasonable evaluation when the samples are unbalanced. From Figure 3, it can be seen that, on both the test set and the cross-dataset tests, the ROC curve of the model obtained with the data preprocessing method of HTTPSmell is farther away from the diagonal than those of the other data preprocessing methods, the curve is smoother, and its AUC value is also the highest.
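For reference, the ROC curve and AUC can be computed from classifier scores as sketched below. This is a generic pure-Python illustration with made-up scores; it assumes label 1 for malicious and 0 for benign and does not reproduce the curves in Figure 3.

```python
from typing import List, Sequence, Tuple


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points obtained by lowering the decision threshold step by step."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every sample tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


curve = roc_points(labels=[0, 0, 1, 1], scores=[0.1, 0.4, 0.35, 0.8])
area = auc(curve)  # 0.75 for this toy input
```

A curve that hugs the diagonal gives an area close to 0.5, whereas a curve that bends toward the top-left corner, as described for HTTPSmell, gives an area close to 1.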

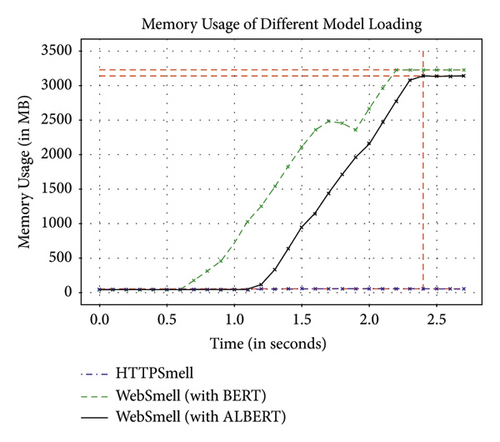

3.5. Overhead Analysis

This experiment explores the overhead of HTTPSmell (optimal parameters) in terms of computational latency and memory usage. Table 10 and Figure 4 show the experimental results.

| Model | Computing unit | Model loading time (s) | Training time (s) | Evaluation time (s) | Throughput (#/s) |
|---|---|---|---|---|---|
| WebSmell (Bert) | GPU¹ | 0.891 | 912.81 | 8.46 | 2364 |
| WebSmell (Albert) | GPU¹ | 0.723 | 448.88 | 4.81 | 4158 |
| WebSmell (Bert) | CPU² | 0.396 | >3600 | 569.87 | 35 |
| WebSmell (Albert) | CPU² | 0.023 | >3600 | 566.41 | 35 |
| HTTPSmell | CPU² | 0.006 | 90.65 | 0.73 | 27089 |

- (1) GPU: RTX 4090 in this experiment; (2) CPU: Intel (R) Core (TM) i5-11400 in this experiment.

To demonstrate the lightweight features and practical application value of HTTPSmell, we also compare its performance overhead with that of WebSmell [11] and its Albert [19] implementation. The original implementation of the WebSmell model is based on Bert [5], a multilayer bidirectional transformer encoder that performs self-supervised learning on a large-scale corpus in the pretraining stage. It is pretrained on two tasks, masked language modeling (MLM) and next sentence prediction (NSP), to learn text representations with rich semantic information. Bert can achieve excellent performance on different downstream tasks through fine-tuning. However, it has a very large number of parameters, which means that it consumes substantial computational resources during inference. We therefore additionally replace the Bert model in WebSmell with Albert, which reduces the number of model parameters through factorized embedding parameterization and cross-layer parameter sharing. Compared with the 110 M parameters of BERT-base, the Albert-base model has only 12 M parameters, roughly 9 times fewer, and the model training speed is 5 times faster. To counter the performance degradation caused by parameter reduction, Albert replaces the next sentence prediction (NSP) task with sentence order prediction (SOP) in the pretraining stage, which even gives Albert higher average scores than Bert on multiple NLP tasks. We use the Python packages “timeit” and “memory-profiler” as performance analysis tools to analyze these three models in terms of computing latency and memory overhead. Finally, we make a comprehensive comparison of their detection performance.
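The effect of factorized embedding parameterization can be illustrated with a short parameter count. The sizes used below (a vocabulary of 30,000 tokens, a hidden size of 768, and an embedding size of 128) are assumed values typical of base-sized models and are not taken from this paper; the function name is hypothetical.

```python
from typing import Optional


def embedding_params(vocab_size: int, hidden_size: int,
                     embedding_size: Optional[int] = None) -> int:
    """Count embedding parameters with or without a factorized projection."""
    if embedding_size is None:
        # Direct V x H embedding matrix.
        return vocab_size * hidden_size
    # Factorized: V x E lookup table followed by an E x H projection.
    return vocab_size * embedding_size + embedding_size * hidden_size


direct = embedding_params(30_000, 768)           # 23,040,000
factorized = embedding_params(30_000, 768, 128)  # 3,938,304
```

Under these assumptions the embedding block shrinks by a factor of almost six; the remaining reduction comes from cross-layer parameter sharing, which stores the weights of a single transformer layer instead of one set per layer.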

To compare the performance overhead of these three models, we use two types of computing units as the devices for model training: a GPU (RTX 4090) and a CPU (Intel (R) Core (TM) i5-11400). The throughput (number of samples processed per second) of WebSmell running on the GPU is nearly 66 times higher than that on the CPU. Training is also considerably faster: WebSmell (Bert) finishes training in 912.81 s on the GPU, whereas it needs more than 3600 s on the CPU. This is because the GPU has many more parallel computing units that can handle a large number of matrix operations simultaneously, whereas the CPU offers far less parallelism. The Bert model involves a large number of matrix operations during inference. Therefore, WebSmell runs more efficiently on GPU devices with higher parallelism. When WebSmell (Albert) runs on the GPU, its training time is about 8 minutes, nearly 2 times faster than WebSmell (Bert), and its throughput is also nearly twice as large, reaching 4158 samples per second. These results indicate that techniques such as parameter reduction and sentence-order prediction can reduce the computational resource overhead. In contrast to the two WebSmell models, HTTPSmell achieves significantly better performance on a CPU device in terms of loading time, training time, and throughput. It can process up to 27089 samples per second and complete training in only about 90 seconds. The throughput of HTTPSmell on a CPU is nearly an order of magnitude higher than that of WebSmell on a GPU. End-to-end detection efficiency is one of the most critical considerations when deploying a model to a production environment, where CPU resources are often more cost-effective and scalable. High throughput on a CPU can substantially reduce the overall task latency, accelerate data delivery, and provide more decision time for downstream tasks.
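A throughput figure of the kind reported in Table 10 can be measured with the timer from the “timeit” module, as in the sketch below. The measurement protocol (best of several repeats over one batch) and the stand-in `dummy_predict` function are assumptions for illustration; the paper does not describe its exact procedure.

```python
import timeit
from typing import Callable, List, Sequence


def measure_throughput(predict: Callable[[Sequence[str]], List[bool]],
                       samples: Sequence[str], repeats: int = 3) -> float:
    """Return samples processed per second, using the fastest of several runs."""
    best = float("inf")
    for _ in range(repeats):
        start = timeit.default_timer()
        predict(samples)
        best = min(best, timeit.default_timer() - start)
    # Guard against a zero reading on very coarse timers.
    return len(samples) / max(best, 1e-12)


def dummy_predict(batch: Sequence[str]) -> List[bool]:
    """Stand-in for a real model: flags requests that contain 'select'."""
    return ["select" in request.lower() for request in batch]


requests = ["/item?id=1 UNION SELECT 1", "/index.html"] * 5000
throughput = measure_throughput(dummy_predict, requests)
```

Using the values in Table 10, the CPU throughput of HTTPSmell corresponds to 27089 / 2364 ≈ 11.5 times that of WebSmell (Bert) on the GPU and 27089 / 4158 ≈ 6.5 times that of WebSmell (Albert) on the GPU.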

We also analyze the memory overhead required for loading the model parameters and external dependencies into the main memory. It can be seen from Figure 4 that during the process of loading the best parameters saved in the training stage into the main memory, the memory usage increases over time. Due to the numerous parameters of the model itself and the additional external packages for function implementation, WebSmell needs to occupy a significant amount of memory, averaging 3 GB once fully loaded. In contrast, HTTPSmell is more lightweight and only requires around 60 MB of memory.
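As a rough way to reproduce this kind of measurement, the sketch below records the peak memory allocated while a model is loaded. It swaps in the standard-library `tracemalloc` module instead of the “memory-profiler” package used in the paper, so it only tracks allocations made through Python's allocator and will under-report native tensor buffers; the function name and the stand-in loader are hypothetical.

```python
import tracemalloc
from typing import Any, Callable, Tuple


def peak_python_memory_mb(load_model: Callable[[], Any]) -> Tuple[Any, float]:
    """Run a loader and return its result with the peak traced memory in MB."""
    tracemalloc.start()
    try:
        model = load_model()
        _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
    finally:
        tracemalloc.stop()
    return model, peak / (1024 * 1024)


# Stand-in loader: a list of one million floats instead of real model weights.
weights, peak_mb = peak_python_memory_mb(lambda: [0.0] * 1_000_000)
```

For figures comparable to Figure 4, a process-level measurement such as resident set size sampled over time is the closer match.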

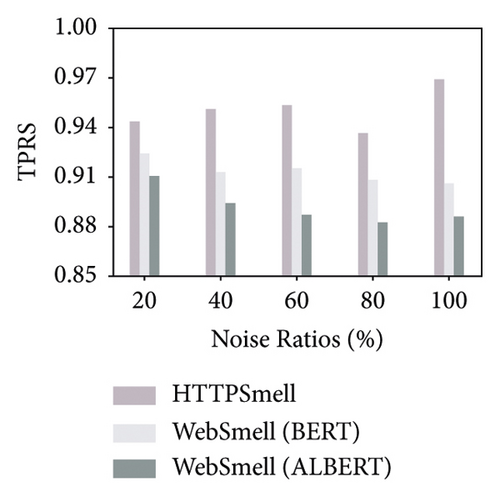

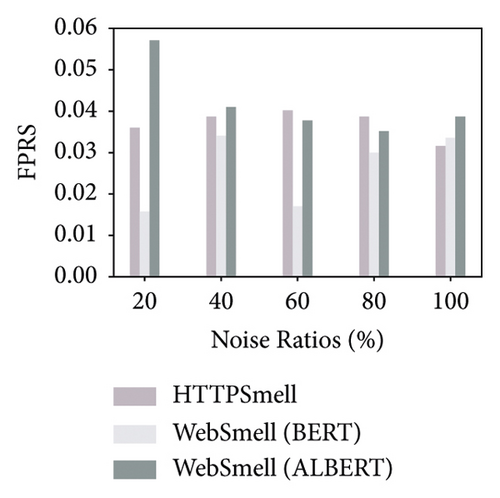

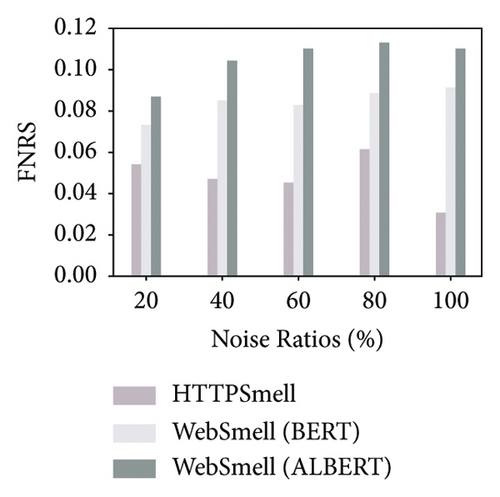

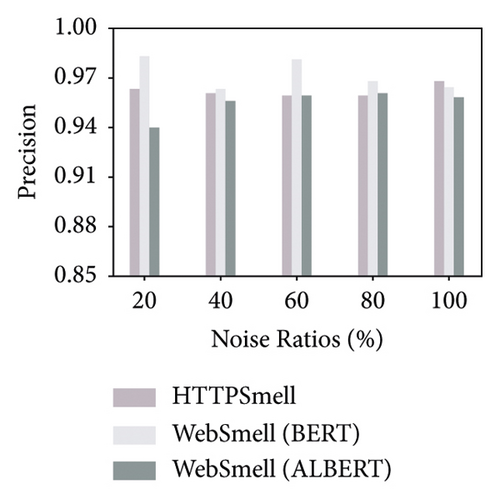

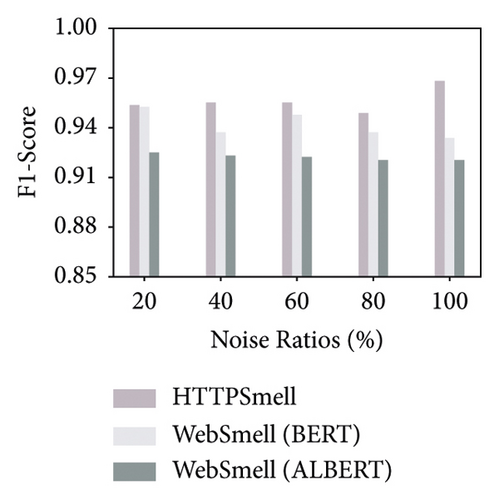

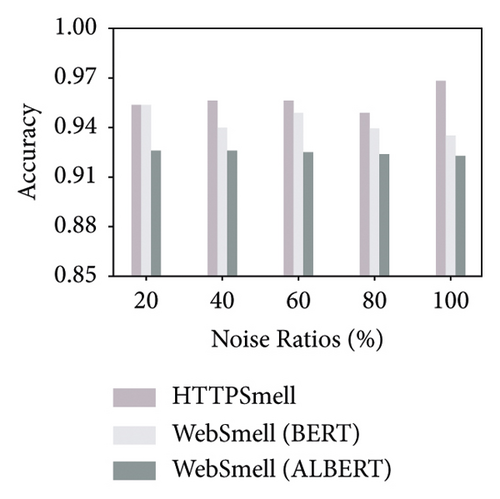

Besides the low-overhead characteristics, it is essential to ensure the excellent detection capabilities of the model as well. To this end, we further analyze the detection performance of these three models. Figure 5 shows the models’ performance on the test set, which contains 10,000 benign and 10,000 malicious samples, constituting a quarter of our data set; the remaining three quarters are used for model training. We compare and evaluate the detection performance of the three models on the six metrics in Table 2 under different noise ratios. The y-axis in Figure 5 represents the corresponding detection rate of each metric under investigation, and the x-axis represents the noise ratio added during the model training stage. To distinguish the performance difference among the compared models, we start the y-axis from 0.85 in four subgraphs (a, d, e, and f). The results show that the Albert implementation of WebSmell (100% noise ratio) has the worst performance on all metrics, with the lowest true-positive rate (88.64%), F1-score (92.08%), and accuracy (92.38%) and the highest false-positive rate (3.88%) and false-negative rate (11.36%). This indicates that even when noised samples are added during the training stage, the model cannot learn the high-level and low-level features in the word vector representation that are important for distinguishing malicious traffic from benign traffic. WebSmell’s Bert implementation is superior to the Albert implementation in all indicators, owing to its powerful learning ability with 110 M parameters. It also achieves the highest precision among all models (98.32%). However, it is still inferior to HTTPSmell in several other indicators. We can see from Figure 5 that HTTPSmell (100% noise ratio) outperforms WebSmell in almost all six indicators, with the highest accuracy (96.85%) and the lowest false detection rates (3.20% and 3.10%). Meanwhile, it delivers about 10 times the throughput of WebSmell and reduces memory usage by up to 50 times (from ∼3000 MB to about 60 MB) while preserving outstanding detection performance. Detection quality does not suffer a significant loss, with only a minor drop in precision (∼1%), which is acceptable considering the significant reduction in performance overhead.

Through the comparative analysis of the abovementioned experiments, we conclude that complex models represented by Bert and Albert have few advantages in classifying small samples, unbalanced categories, and short texts. In contrast, models with simple structures and fast training, represented by TextCNN, are a better solution. The experimental results also confirm that TextCNN has advantages in training and inference performance, which makes it a better choice for low-end devices and low-latency scenarios. HTTPSmell combines TextCNN with the UDA model and leverages a semisupervised learning method to solve the problem of labeled sample scarcity. It simultaneously achieves outstanding detection performance, reduces memory usage and runtime latency, and significantly improves throughput.

3.6. Detection Performance in Enterprise Environment

To explore the detection performance and generalization ability of HTTPSmell (optimal parameters) under real-world enterprise cyberattacks, we further conduct experiments on attack traffic that a real enterprise encountered. Table 11 presents the experimental results.

This dataset encompasses various attack techniques, ranging from SQL injection, XSS, and path traversal to other sophisticated attacks such as code injection, CVE exploitation, and covert channels. The diversity and complexity of the test samples make it possible to verify the ability of the model to detect unknown cyberattacks. The experimental result in Table 11 demonstrates that the model is robust enough to detect advanced attack traffic in the enterprise environment and achieves an accuracy as high as 96.77% on the unbalanced validation set obtained from the enterprise. This also implies that HTTPSmell can recognize the characteristics of these unknown attacks even though they are not present in the training dataset, which attests to the strong learning ability of our model and its generalization to unknown attack vectors. If higher quality datasets, such as attack samples from the enterprise environment, are used in the training phase, the detection ability of HTTPSmell can be improved further.

4. Related Work

In this section, we review notable efforts on malicious HTTP traffic detection and data augmentation and compare our approach with them in terms of data feature retention, dimension explosion, and the rationality of data augmentation to highlight the novelty of our approach.

4.1. Malicious HTTP Traffic Detection

Most malicious traffic is derived from some ordinary malicious traffic by encoding it and adding variants. A large amount of data is therefore reused among samples, and this reused data carries significant characteristics of malicious traffic. In anomaly detection, any network traffic inconsistent with the expected normal behavior in the network is considered abnormal. Most approaches use machine learning, data mining, or statistical methods to infer the normal traffic characteristics and detect malicious traffic.

In terms of extracting effective data from web traffic, Xu et al. [7] detected evasive network attacks by using the inconsistency of the content-type field in HTTP, which can effectively find unknown malware in traffic. Arzhakov et al. [8] used honeypot technology to collect statistical information on attackers’ behavior and used statistical classification methods to distinguish malicious traffic. Torrano-Gimenez et al. [9] proposed an anomaly-based intrusion detection method for network traffic that uses XML files to classify incoming requests into normal and abnormal requests. The N-gram model was used to extract the corresponding features from three fields of the HTTP log: web source, query attributes, and user agents. Then, machine learning algorithms are used to process the data. Vartouni et al. [20] analyzed HTTP traffic and constructed features from HTTP data based on a character-based n-gram model. To solve the dimensionality problem, a stacked auto-encoder is leveraged to extract relevant features from the data. Finally, an isolation forest is used as a one-class learner to detect anomalies. Torrano-Gimenez et al. [21] proposed a web attack detection method that applies generic feature selection to reduce redundant and irrelevant features and combines expert knowledge with the n-gram feature construction method for reliability and efficiency. Aiming at the root causes of WAF inefficiency, including target computation complexity and unnecessary recomputation, Chen et al. [22] proposed an efficient rule caching engine for two scenarios. The rule-based processing of a WAF is very time-consuming, and the ordering of the rule set and the maintenance of the white list require rich expert experience and knowledge. The HTTPSmell traffic detection scheme proposed in this paper, which is based on a natural language processing (NLP) model, achieves high throughput and accuracy (see Section 3.5) without the support of rich expert experience.

| Learning model | Accuracy of training dataset (%) | Accuracy of enterprise dataset (%) |
|---|---|---|
| HTTPSmell | 96.85 | 96.77 |

In terms of malicious traffic detection, many studies have used different models and data preprocessing methods to extract web traffic characteristics. Park et al. [6] proposed a method for anomaly detection of HTTP messages based on a character-level binary image transformation, named CAE (convolutional autoencoder), which uses a data preprocessing method based on character-level segmentation and is superior to traditional machine learning methods that select input features heuristically. Zolotukhin et al. [23] proposed an anomaly detection method for web attacks by analyzing HTTP logs. Yang et al. [3] designed a convolutional gated-recurrent-unit (CGRU) neural network for malicious URL detection that uses characters as text classification features. Yu et al. [17] proposed a deep neural network model that combines Bi-LSTM (bidirectional long short-term memory) with an attention mechanism and models HTTP traffic as a natural language sequence to detect malicious traffic. Malekghaini et al. [24] investigated the impact of data drift on two state-of-the-art models for classifying encrypted traffic. Their research shows that traffic shape-based models are more robust to data drift. For network traffic, data drift can lead to model performance degradation, especially when the model is trained on historical data and applied to new data in a production environment. As discussed in Section 2.2, the KLA-based algorithm can effectively enhance the model’s generalization ability for various attack traffic scenarios. The results in Section 3.6 further confirm the advantages of this system design. Lin et al. [25] designed a multilevel feature fusion model that combines timing, byte, and statistical features for traffic anomaly detection in an IoT environment. Zheng et al. [26] studied encrypted malicious traffic detection and proposed an efficient method based on a graph convolutional network (GCN), named GCN-ETA. Hou et al. [27] reviewed related research on intrusion detection systems, in which various deep learning techniques and models, such as LSTM, CNN, and GRU, as well as methods such as word embeddings and statistical features, are used to build intrusion detection systems.

In terms of hybrid models, Corona et al. [28] designed a multiclassifier system to detect web attacks by modeling normal requests. The system uses a set of predefined models, which are based on different fields in the HTTP request, and adopts two basic models: a statistical distribution model and a hidden Markov model. Choras and Kozik [29] proposed a machine learning method that models the normal behavior of web applications and detects network attacks at the same time. This model obtains information from HTTP requests and uses graph-based segmentation technology and dynamic programming to generate models. Al-Obeidat and El-Alfy [30] proposed a new supervised hybrid machine learning method based on a fuzzy decision tree and attribute selection for traffic analysis. Erfani et al. [31] presented a hybrid model in which an unsupervised deep belief network (DBN) is trained to extract generic underlying features, and a one-class support vector machine (SVM) is trained on the features learned by the DBN. LSTM is generally used for anomaly detection and diagnosis in system logs [32]. Zhang et al. [33] proposed to use multiple models to check HTTP request messages to identify attacks. Three learning models, a probability distribution model, a hidden Markov model, and a one-class support vector machine model, were used to inspect different fields of HTTP request messages. If any one model reported an anomaly, the overall detection result was abnormal. Dong et al. [34] proposed a network abnormal traffic detection model based on semisupervised deep reinforcement learning. The proposed model uses a double deep Q-network (DDQN), a representative deep reinforcement learning algorithm, and includes unsupervised techniques such as autoencoders and K-means clustering to reduce manual labeling and detect unknown attacks. They tested the model on the NSL-KDD and AWID datasets and achieved good results on various evaluation metrics.

Moreover, in detecting traffic packet content, malicious traffic was identified through the analysis and statistics of the content of the packets. Tekerek [35] proposed a web attack detection architecture based on CNN deep learning algorithm. The proposed method uses the bag-of-words model to preprocess data, generates an HTTP request image based on the URL and payload, and feeds the image as a CNN model input to obtain the classification result. Some malicious traffic may inject itself into legitimate flows, causing the header entropy of illegal traffic to be higher than that of legitimate traffic. Yadav et al. [36] presented an anomalous traffic detection method based on comparing the header field entropy of a plurality of flows with a predetermined amount. Shi et al. [37] used the LSTM for traffic anomaly detection. The input of the model is a history of recent traffic preprocessed by the big data processing framework of Kafka and Spark, and the output is the label of the recent data of a flow.

The above work uses a variety of models and extracts different features to model and classify the traffic, but most of it preprocesses the data with simple N-gram word segmentation or statistical features. Preprocessing the traffic with simple statistics fails to preserve the characteristics of the traffic data well, while the N-gram method causes a dimensional explosion. Moreover, most web traffic contains encoded characters and characters with no semantic association, so extracting keywords of different lengths with N-grams generates many encoded characters and invalid strings, while traditional malicious keywords are often not retained.
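The dimensional explosion of N-grams can be made concrete: for an alphabet of A characters there are up to A^n distinct character n-grams, so the feature space grows exponentially with n. The sketch below counts the distinct character n-grams actually observed in a set of payloads; the helper names are hypothetical and the code is an illustration rather than the preprocessing used in the cited work.

```python
from typing import Dict, Iterable, Set


def char_ngrams(text: str, n: int) -> Set[str]:
    """Distinct character n-grams of text (empty if text is shorter than n)."""
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def vocabulary_sizes(samples: Iterable[str], max_n: int) -> Dict[int, int]:
    """Number of distinct n-grams over all samples, for n = 1..max_n."""
    samples = list(samples)
    sizes = {}
    for n in range(1, max_n + 1):
        vocab: Set[str] = set()
        for sample in samples:
            vocab |= char_ngrams(sample, n)
        sizes[n] = len(vocab)
    return sizes
```

Running `vocabulary_sizes` on URL-encoded payloads also shows the second problem noted above: many of the resulting features are fragments such as "%2" or "27%" that carry no meaning on their own.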

This paper proposes to segment web traffic, retain traditional malicious keywords, merge the keyword sets of different types of malicious web traffic, remove single characters, and retain as many keywords representing malicious web traffic as possible. The retained malicious web traffic keywords also play a crucial role in the data augmentation method.
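The steps above (segmentation, merging the keyword sets of different attack types, and removing single characters) can be sketched as follows. The tokenization rule, the URL decoding step, and the function names are assumptions made for illustration; they are not the exact procedure of HTTPSmell.

```python
import re
from typing import Dict, Iterable, List, Set
from urllib.parse import unquote

# Assumed tokenization rule: identifier-like runs of letters, digits, and "_".
TOKEN_PATTERN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def segment(payload: str) -> List[str]:
    """URL-decode and lowercase a payload, then split it into word tokens."""
    decoded = unquote(payload).lower()
    return TOKEN_PATTERN.findall(decoded)


def build_keyword_library(samples_by_type: Dict[str, Iterable[str]]) -> Set[str]:
    """Merge the keywords of all attack types, dropping single characters."""
    library: Set[str] = set()
    for payloads in samples_by_type.values():
        for payload in payloads:
            library.update(tok for tok in segment(payload) if len(tok) > 1)
    return library
```

This sketch keeps every multi-character token, so tokens that also occur in benign traffic would still need to be filtered out separately if the library should contain only malicious keywords.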

4.2. Data Augmentation for Machine Learning

Most current data augmentation methods are designed for image or audio data, for example, shifting the image, changing the viewing angle, resizing, and applying Gaussian noise, all of which add noise with a low level of information distortion. However, these methods are not suitable for augmenting text data. For text data, researchers have proposed synonym substitution [23], EDA [38] (synonym replacement, random insertion, random swap, and random deletion), and the construction of weighted undirected graphs [8]. However, these methods are suited to texts such as articles and Q&A, and they mainly exploit semantic association characteristics. Most of the data in HTTP traffic is computer code, such as “script,” “for,” and “insert,” so its characters and words cannot be augmented by synonym substitution. In addition, malicious HTTP traffic often has a specific word-order structure and semantic associations: SQL injection typically contains the combination “select from,” and XSS attacks contain “<script></script>.” Furthermore, this kind of word-order structure generally does not exist in normal web traffic. It is not easy to extract features from the word-order structure of web traffic through simple regular expression matching.

The key to detecting malicious traffic in HTTP traffic is to classify the traffic with text classification methods. However, web attack statements are highly time-sensitive and may be encrypted, obfuscated, and replaced in various ways. For obvious reasons, publicly available datasets of real-world user browsing behavior are scarce. Therefore, it is necessary to augment the training data appropriately so that the neural network can better extract features of malicious traffic.

In the field of natural language processing, natural language consists of discrete abstract symbols, so a small change may lead to a large deviation in meaning. Conventional data augmentation can therefore be carried out through multiple rounds of translation [39], such as translating from Chinese to English and back to Chinese, or from English to French and Chinese and then back to English. In the data preprocessing stage, the existing methods [3, 6] augment the data by simple statement segmentation and character normalization, thereby enhancing the feature extraction of the model. Data augmentation aims at creating novel and realistic-looking training data by applying a transformation to an example without changing its label [40].

Zhang et al. [41] proposed to replace words or phrases with synonyms, and they used an English thesaurus to expand the text data. Existing studies have also proposed methods for constructing weighted undirected graphs. Chen et al. [42] proposed the HDA method to augment the original training data by randomly rotating, masking individual samples, and mixing sample pairs in arbitrary proportions. In audio processing, the background sound is different due to the different environments of audio recording. Although various denoising models can be applied, there may be differences in accent and intonation of audio. Therefore, data augmentation is often carried out by changing the tone, introducing multiple accents, multiaudio fusion, etc.

In text data augmentation, it is unreasonable to apply the signal transformations used for image or speech recognition, because the order of characters affects syntax and semantics [43]. Moreover, most of the characters in web traffic data are independent or meaningless encodings and nonsemantic words. Synonym replacement would affect the original feature extraction and destroy the malicious keywords in malicious traffic. This paper proposes a malicious HTTP traffic detection framework via data augmentation, which augments the web traffic data while retaining most keywords in the malicious traffic.
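The idea of augmenting traffic while retaining malicious keywords can be illustrated with a minimal sketch. It assumes that noise is added by replacing non-keyword tokens with random lowercase strings of the same length at a given ratio; the function name, the noise operation, and the `noise_ratio` parameter are hypothetical and do not reproduce the exact augmentation procedure of HTTPSmell.

```python
import random
import string
from typing import List, Set


def add_noise_avoiding_keywords(tokens: List[str],
                                keywords: Set[str],
                                noise_ratio: float,
                                rng: random.Random) -> List[str]:
    """Replace non-keyword tokens with random strings; never touch keywords.

    Each token outside the keyword library is replaced with probability
    noise_ratio by a random lowercase string of the same length.
    """
    augmented = []
    for token in tokens:
        if token.lower() in keywords or rng.random() >= noise_ratio:
            augmented.append(token)  # keyword, or token left unchanged
        else:
            noise = "".join(rng.choice(string.ascii_lowercase)
                            for _ in range(len(token)))
            augmented.append(noise)
    return augmented
```

Because the label of a sample depends mainly on its malicious keywords and their order, perturbing only the remaining tokens yields new samples that plausibly keep the original label, which is the requirement for data augmentation stated above [40].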

A preliminary version of this work was reported as WebSmell [11]. Compared with WebSmell, our system has the following advantages. (1) We propose an optimized framework to augment data with a high scenario coverage rate, which can improve the diversity and quality of the training data and reduce the data bias. (2) We propose a particular UDA-based method to effectively deal with the problem of poor generalization ability in complex real-world scenarios, which can enhance the model’s adaptability to unseen attacks. (3) We combine two learning models, one for label refactoring and data augmentation and the other for data training, which significantly improves the accuracy and robustness of the model. (4) We conducted extensive performance tests, which not only demonstrated the effectiveness of our keyword extraction and data augmentation method but also verified the lightweight nature and efficient detection capability of HTTPSmell in enterprise environments, both of which are superior to those of WebSmell.

5. Analysis and Discussions

We mainly study the influence of different data augmentation ratios on the model classification effect, the influence of different data preprocessing methods on the model classification effect, and the semisupervised learning model classification effect under the data preprocessing. Finally, we get the optimal parameters of this data augmentation method and prove that this data augmentation method is better than other data preprocessing methods for model classification. Through the combination of TextCNN and neural networks based on UDA, researchers can adopt different DL classification models according to the actual application needs and verify the generalization of the data augmentation method proposed in this paper.

UDA is a relatively new data augmentation framework proposed by Google. When our data augmentation method is applied within this framework, the experimental results are outstanding and verify HTTPSmell’s generalization ability.

The present study relies primarily on open-source datasets for model training. Future research could enhance the validity of the findings by incorporating more realistic attack data from enterprise environments and evaluating the performance of the model trained on enterprise-level datasets. Additionally, we will further optimize the data augmentation method and extend its applicability to novel network malicious traffic detection domains such as IoT and industrial Internet.

6. Conclusion

This paper presents HTTPSmell, a system that detects malicious HTTP traffic through a UDA-based neural network via data augmentation. Our contribution to the research community is the design, implementation, and evaluation of HTTPSmell, which autonomously abstracts web traffic keywords to build a keywords library, extends the data according to augmentation facilities, and detects malicious HTTP traffic with an enhanced learning model.

Previous studies suffer from low cross-dataset detection ability, high training data collection cost, low scenario coverage, and weak generalization of classification models. In contrast, our system improves the cross-dataset detection ability. The model is built with the data augmentation method based on keywords library avoidance, and the cost of collecting the training dataset is reduced by preprocessing with optimal noise-adding parameters. The method gives the deep learning model strong generalization and traffic anomaly detection ability with only a small training dataset.

Our data augmentation-based deep learning approach for malicious HTTP traffic detection, HTTPSmell, demonstrates outstanding detection performance in realistic enterprise environments. The experimental results on complex and advanced datasets derived from enterprise sources indicate that our system achieves a classification accuracy of 96.77%. It jointly meets the generalization, efficiency, and usability requirements of malicious HTTP traffic detection.

Disclosure

An earlier version of this paper was presented at a conference on Information Security and Cryptology; it is compared with this work in Section 4.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

This work was supported partly by the following grants: Zhejiang Key R&D Projects under Grant no. 2021C01117, National Natural Science Foundation of China under Grant nos. U22B2028 and U1936215, 2020 Industrial Internet Innovation and Development Project under Grant no. TC200H01V, Wenzhou Key Scientific and Technological Project under Grant no. ZG2020031, and Scientific Research Project of Zhejiang Provincial Department of Education under Grant no. Y202249647.

Open Research

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.